1. GRU Implementation from Scratch

```python
# 9.1.2 GRU implementation from scratch
import torch
from torch import nn
from d2l import torch as d2l

# First, read the Time Machine dataset used in Section 8.5
batch_size, num_steps = 32, 35
train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)
```
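To make `batch_size` and `num_steps` concrete, here is a minimal sketch on a toy corpus of random token ids. The corpus, the vocabulary size of 28 and the helper `toy_minibatch` are hypothetical, and this is not d2l's actual iterator (which also randomizes offsets). Each minibatch holds inputs `X` and targets `Y` of shape `(batch_size, num_steps)`, with `Y` being `X` shifted one token ahead. The last step one-hot encodes the transpose of `X`, which, as far as I understand d2l's `RNNModelScratch`, is how inputs reach `gru`: as a `(num_steps, batch_size, vocab_size)` tensor, so that `for X in inputs` walks over time steps.

```python
import torch
import torch.nn.functional as F

def toy_minibatch(corpus, batch_size, num_steps):
    """Hypothetical helper: cut `batch_size` consecutive windows out of a
    1-D tensor of token ids; targets are the inputs shifted one token ahead."""
    starts = range(0, batch_size * num_steps, num_steps)
    X = torch.stack([corpus[s:s + num_steps] for s in starts])
    Y = torch.stack([corpus[s + 1:s + 1 + num_steps] for s in starts])
    return X, Y

torch.manual_seed(0)
vocab_size = 28                                    # assumed vocabulary size
corpus = torch.randint(0, vocab_size, (2000,))     # toy token ids
X, Y = toy_minibatch(corpus, batch_size=32, num_steps=35)
print(X.shape, Y.shape)        # torch.Size([32, 35]) torch.Size([32, 35])

# Transpose to time-major, then one-hot: (num_steps, batch_size, vocab_size)
inputs = F.one_hot(X.T, vocab_size).float()
print(inputs.shape)            # torch.Size([35, 32, 28])
```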

```python
# Initialize the model parameters
def get_params(vocab_size, num_hiddens, device):
    num_inputs = num_outputs = vocab_size

    def normal(shape):
        return torch.randn(size=shape, device=device) * 0.01

    def three():
        return (normal((num_inputs, num_hiddens)),
                normal((num_hiddens, num_hiddens)),
                torch.zeros(num_hiddens, device=device))

    W_xz, W_hz, b_z = three()  # update gate parameters
    W_xr, W_hr, b_r = three()  # reset gate parameters
    W_xh, W_hh, b_h = three()  # candidate hidden state parameters
    # Output layer parameters
    W_hq = normal((num_hiddens, num_outputs))
    b_q = torch.zeros(num_outputs, device=device)
    # Attach gradients
    params = [W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params
```
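As a quick check on what `get_params` allocates, the sketch below lists the same shapes in the same order and counts the scalars, which works out to 3(dh + h² + h) + hq + q for input size d, hidden size h and output size q. The helper `gru_param_shapes` is hypothetical, and the vocabulary size of 28 is an assumption for illustration; substitute `len(vocab)`.

```python
import math

def gru_param_shapes(vocab_size, num_hiddens):
    """Hypothetical helper: parameter shapes in the order get_params returns them."""
    d = q = vocab_size          # one-hot inputs and outputs span the vocabulary
    h = num_hiddens
    gate = [(d, h), (h, h), (h,)]             # W_x*, W_h*, b_* for one gate
    return gate * 3 + [(h, q), (q,)]          # update, reset, candidate, then W_hq, b_q

shapes = gru_param_shapes(vocab_size=28, num_hiddens=256)   # 28 is an assumed vocab size
total = sum(math.prod(s) for s in shapes)
print(len(shapes), total)       # 11 226076
```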

```python
# Define the hidden-state initialization function init_gru_state
def init_gru_state(batch_size, num_hiddens, device):
    return (torch.zeros((batch_size, num_hiddens), device=device),)

# The gated recurrent unit model
def gru(inputs, state, params):
    W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h, W_hq, b_q = params
    H, = state
    outputs = []
    for X in inputs:  # one time step per iteration
        Z = torch.sigmoid((X @ W_xz) + (H @ W_hz) + b_z)           # update gate
        R = torch.sigmoid((X @ W_xr) + (H @ W_hr) + b_r)           # reset gate
        H_tilda = torch.tanh((X @ W_xh) + ((R * H) @ W_hh) + b_h)  # candidate hidden state
        H = Z * H + (1 - Z) * H_tilda                              # new hidden state
        Y = H @ W_hq + b_q                                         # output layer
        outputs.append(Y)
    return torch.cat(outputs, dim=0), (H,)
```
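Written out, each iteration of the loop in `gru` computes the following, where X_t is the one-hot input at time step t, σ is the sigmoid and ⊙ is the elementwise product. Rows are examples in the batch, so inputs multiply the weights from the left, and every symbol corresponds to a variable in the code:

$$
\begin{aligned}
\mathbf{Z}_t &= \sigma(\mathbf{X}_t \mathbf{W}_{xz} + \mathbf{H}_{t-1} \mathbf{W}_{hz} + \mathbf{b}_z),\\
\mathbf{R}_t &= \sigma(\mathbf{X}_t \mathbf{W}_{xr} + \mathbf{H}_{t-1} \mathbf{W}_{hr} + \mathbf{b}_r),\\
\tilde{\mathbf{H}}_t &= \tanh(\mathbf{X}_t \mathbf{W}_{xh} + (\mathbf{R}_t \odot \mathbf{H}_{t-1}) \mathbf{W}_{hh} + \mathbf{b}_h),\\
\mathbf{H}_t &= \mathbf{Z}_t \odot \mathbf{H}_{t-1} + (1 - \mathbf{Z}_t) \odot \tilde{\mathbf{H}}_t,\\
\mathbf{Y}_t &= \mathbf{H}_t \mathbf{W}_{hq} + \mathbf{b}_q.
\end{aligned}
$$

The update gate interpolates between keeping the previous state (Z_t near 1) and adopting the candidate (Z_t near 0); the reset gate decides how much of H_{t-1} enters the candidate.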

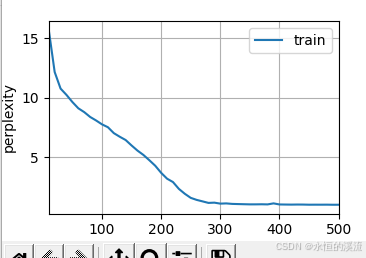
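The role of the update gate can be checked in isolation. This minimal sketch runs one step of the same update rule with random toy weights; the helpers `gru_step` and `rand_gate` and all sizes are hypothetical. Forcing the update gate to 1 with a large positive bias leaves the state unchanged, while forcing it to 0 replaces the state with the candidate.

```python
import torch

def gru_step(X, H, W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h):
    """Hypothetical helper: one time step of the update used inside `gru`."""
    Z = torch.sigmoid(X @ W_xz + H @ W_hz + b_z)             # update gate
    R = torch.sigmoid(X @ W_xr + H @ W_hr + b_r)             # reset gate
    H_tilda = torch.tanh(X @ W_xh + (R * H) @ W_hh + b_h)    # candidate state
    return Z * H + (1 - Z) * H_tilda, H_tilda

def rand_gate(d, h, bias):
    """Toy (W_x, W_h, b) triple: small random weights, constant bias."""
    return torch.randn(d, h) * 0.01, torch.randn(h, h) * 0.01, torch.full((h,), bias)

torch.manual_seed(0)
batch, d, h = 2, 5, 4                                    # toy sizes
X, H = torch.randn(batch, d), torch.randn(batch, h)

# Large positive update-gate bias: Z is about 1, so the old state is kept.
H_keep, _ = gru_step(X, H, *rand_gate(d, h, 50.0), *rand_gate(d, h, 0.0), *rand_gate(d, h, 0.0))
# Large negative update-gate bias: Z is about 0, so the candidate takes over.
H_new, H_tilda = gru_step(X, H, *rand_gate(d, h, -50.0), *rand_gate(d, h, 0.0), *rand_gate(d, h, 0.0))

print(torch.allclose(H_keep, H), torch.allclose(H_new, H_tilda))   # True True
```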

```python
# Training and prediction: train_ch8 prints the perplexity on the training set
# and the sequences predicted from the prefixes "time traveller" and "traveller".
vocab_size, num_hiddens, device = len(vocab), 256, d2l.try_gpu()
num_epochs, lr = 500, 1
model = d2l.RNNModelScratch(vocab_size, num_hiddens, device,
                            get_params, init_gru_state, gru)
d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)  # returns None, so no print()
d2l.plt.show()
```
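The perplexity that `train_ch8` reports is the exponential of the average per-token cross-entropy. A minimal sketch with toy logits (not d2l's code; sizes are assumed) gives a scale for reading the curve: a model with uniform predictions over the vocabulary has perplexity equal to the vocabulary size, and a perfect model has perplexity 1.

```python
import math
import torch
import torch.nn.functional as F

def perplexity(logits, targets):
    """exp of the mean cross-entropy over all tokens."""
    return math.exp(F.cross_entropy(logits, targets).item())

torch.manual_seed(0)
vocab_size, num_tokens = 28, 100                  # assumed sizes
targets = torch.randint(0, vocab_size, (num_tokens,))

uniform = torch.zeros(num_tokens, vocab_size)     # equal logits -> uniform probabilities
print(round(perplexity(uniform, targets), 4))     # 28.0

perfect = torch.full((num_tokens, vocab_size), -1e4)
perfect[torch.arange(num_tokens), targets] = 1e4  # all mass on the correct token
print(round(perplexity(perfect, targets), 4))     # 1.0
```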

2. Concise GRU Implementation

```python
# 9.1.3 Concise implementation
import torch
from torch import nn
from d2l import torch as d2l

# First, read the Time Machine dataset used in Section 8.5
batch_size, num_steps = 32, 35
train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)
vocab_size, num_hiddens, device = len(vocab), 256, d2l.try_gpu()
```

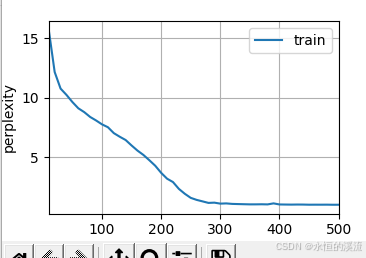

```python
num_epochs, lr = 500, 1
num_inputs = vocab_size
gru_layer = nn.GRU(num_inputs, num_hiddens)
```
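By default `nn.GRU` expects time-major input (`batch_first=False`), i.e. shape `(num_steps, batch_size, input_size)`, and returns the hidden state at every step plus the final state. The sketch below, with assumed toy sizes, checks these shapes and counts the layer's parameters. Two documented differences from the from-scratch `gru` are worth keeping in mind: PyTorch keeps two bias vectors per gate (`bias_ih_l0` and `bias_hh_l0`), and its candidate state applies the reset gate after the hidden-state product, `r * (W_hn h + b_hn)`, instead of multiplying `H` before it. The two models are therefore not numerically identical even with matching weights.

```python
import torch
from torch import nn

num_steps, batch_size, vocab_size, num_hiddens = 35, 32, 28, 256   # assumed sizes
gru_layer = nn.GRU(vocab_size, num_hiddens)

X = torch.randn(num_steps, batch_size, vocab_size)   # time-major input
Y, H_n = gru_layer(X)            # initial state defaults to zeros when omitted
print(Y.shape)                   # torch.Size([35, 32, 256]): hidden state at every step
print(H_n.shape)                 # torch.Size([1, 32, 256]): (num_layers, batch, hidden)

# Gate weights are stacked along dim 0 in the order reset, update, new
for name, p in gru_layer.named_parameters():
    print(name, tuple(p.shape))
# weight_ih_l0 (768, 28), weight_hh_l0 (768, 256), bias_ih_l0 (768,), bias_hh_l0 (768,)

total = sum(p.numel() for p in gru_layer.parameters())
print(total)                     # 219648 = 3 * (28*256 + 256*256 + 2*256)
```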

```python
model = d2l.RNNModel(gru_layer, len(vocab))
model = model.to(device)
```
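`d2l.RNNModel` wraps the recurrent layer with a one-hot input encoding and a linear layer from `num_hiddens` to the vocabulary size. The class below, `TinyRNNModel`, is a hypothetical stand-in written from my understanding of that structure, not d2l's source. It shows how the `(num_steps, batch_size, num_hiddens)` GRU output is flattened into `(num_steps * batch_size, vocab_size)` logits, the same time-major row layout the from-scratch `gru` produces with `torch.cat(outputs, dim=0)`.

```python
import torch
import torch.nn.functional as F
from torch import nn

class TinyRNNModel(nn.Module):
    """Hypothetical stand-in for d2l.RNNModel: one-hot -> RNN layer -> linear."""
    def __init__(self, rnn_layer, vocab_size):
        super().__init__()
        self.rnn = rnn_layer
        self.vocab_size = vocab_size
        self.linear = nn.Linear(rnn_layer.hidden_size, vocab_size)

    def forward(self, inputs, state=None):
        # inputs: (batch_size, num_steps) token ids -> time-major one-hot floats
        X = F.one_hot(inputs.T.long(), self.vocab_size).float()
        Y, state = self.rnn(X, state)
        # Flatten time and batch so each row holds the logits for one token
        return self.linear(Y.reshape(-1, Y.shape[-1])), state

torch.manual_seed(0)
vocab_size, num_hiddens = 28, 256                   # assumed sizes
model = TinyRNNModel(nn.GRU(vocab_size, num_hiddens), vocab_size)
tokens = torch.randint(0, vocab_size, (32, 35))     # (batch_size, num_steps)
logits, state = model(tokens)
print(logits.shape, state.shape)   # torch.Size([1120, 28]) torch.Size([1, 32, 256])
```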

```python
d2l.train_ch8(model, train_iter, vocab, lr, num_epochs, device)  # returns None, so no print()
d2l.plt.show()
```