Building SRS and ZLMediaKit on Ubuntu for video push/pull streaming

SRS handles RTMP and WebRTC streaming of the video.

ZLMediaKit is built into the MediaServer binary, which handles RTSP streaming.

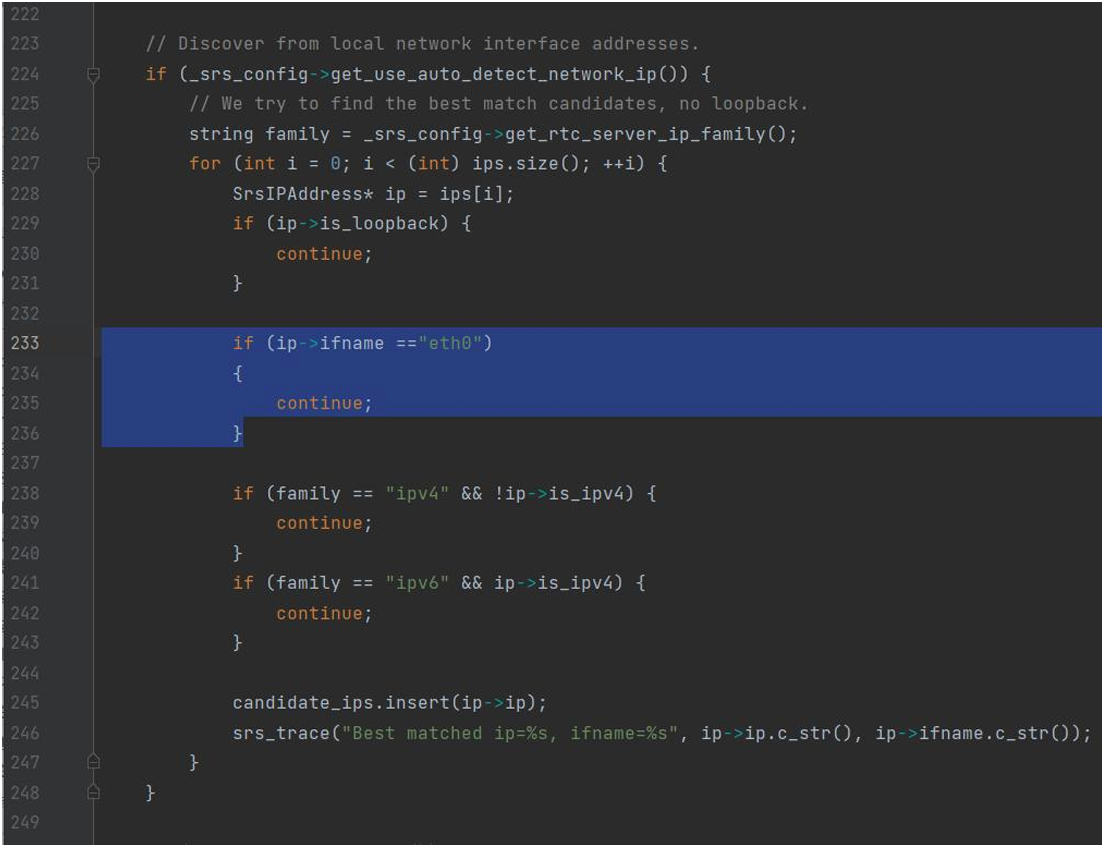

To bind SRS to one specific network interface, modify the source and rebuild.

Open SRS-4.0.0/trunk/src/app/srs_app_rtc_server.cpp and add the following after line 232:

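After the build, the ZLMediaKit binaries are placed in ZLMediakit/release/linux/Debug/. Put an auth.json file in the same directory as the executable. It stores the RTSP username (admin) and the MD5 hash of the password (admin). File contents: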

{"auth": [{"passwd": "21232F297A57A5A743894A0E4A801FC3","username": "admin"}]}

FFmpeg is used to capture the camera data, encode it, and push the stream.
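The `passwd` value in the auth.json shown above is the uppercase hexadecimal MD5 digest of the password `admin`. To use a different password, the digest has to be recomputed. The following is a minimal, dependency-free sketch of MD5 (RFC 1321) that produces such an entry. The function names `md5_hex_upper` and `make_auth_json` are hypothetical helpers introduced for illustration; they are not part of ZLMediaKit.

```cpp
#include <cmath>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Compact MD5 (RFC 1321); returns the digest as 32 uppercase hex characters.
std::string md5_hex_upper(const std::string& input) {
    static const uint32_t shifts[64] = {
        7, 12, 17, 22, 7, 12, 17, 22, 7, 12, 17, 22, 7, 12, 17, 22,
        5, 9,  14, 20, 5, 9,  14, 20, 5, 9,  14, 20, 5, 9,  14, 20,
        4, 11, 16, 23, 4, 11, 16, 23, 4, 11, 16, 23, 4, 11, 16, 23,
        6, 10, 15, 21, 6, 10, 15, 21, 6, 10, 15, 21, 6, 10, 15, 21};
    uint32_t state[4] = {0x67452301u, 0xefcdab89u, 0x98badcfeu, 0x10325476u};

    // Padding: 0x80, zeros up to 56 mod 64, then the bit length (64-bit little-endian).
    std::vector<uint8_t> msg(input.begin(), input.end());
    const uint64_t bit_len = static_cast<uint64_t>(input.size()) * 8;
    msg.push_back(0x80);
    while (msg.size() % 64 != 56) msg.push_back(0);
    for (int i = 0; i < 8; ++i) msg.push_back(static_cast<uint8_t>(bit_len >> (8 * i)));

    for (std::size_t off = 0; off < msg.size(); off += 64) {
        uint32_t m[16];
        for (int i = 0; i < 16; ++i) {
            const uint8_t* p = &msg[off + 4 * i];
            m[i] = p[0] | (p[1] << 8) | (p[2] << 16) | (static_cast<uint32_t>(p[3]) << 24);
        }
        uint32_t a = state[0], b = state[1], c = state[2], d = state[3];
        for (uint32_t i = 0; i < 64; ++i) {
            uint32_t f, g;
            if (i < 16)      { f = (b & c) | (~b & d); g = i; }
            else if (i < 32) { f = (d & b) | (~d & c); g = (5 * i + 1) % 16; }
            else if (i < 48) { f = b ^ c ^ d;          g = (3 * i + 5) % 16; }
            else             { f = c ^ (b | ~d);       g = (7 * i) % 16; }
            // K[i] = floor(2^32 * |sin(i + 1)|), as defined in RFC 1321.
            const uint32_t k =
                static_cast<uint32_t>(std::fabs(std::sin(i + 1.0)) * 4294967296.0);
            f += a + k + m[g];
            a = d; d = c; c = b;
            b += (f << shifts[i]) | (f >> (32 - shifts[i]));
        }
        state[0] += a; state[1] += b; state[2] += c; state[3] += d;
    }

    // The digest is the four state words written out in little-endian byte order.
    std::string hex;
    char buf[3];
    for (uint32_t word : state) {
        for (int i = 0; i < 4; ++i) {
            std::snprintf(buf, sizeof(buf), "%02X", (word >> (8 * i)) & 0xFFu);
            hex += buf;
        }
    }
    return hex;
}

// Builds a single-user auth.json body in the same layout as the file shown above.
std::string make_auth_json(const std::string& username, const std::string& password) {
    return "{\"auth\": [{\"passwd\": \"" + md5_hex_upper(password) +
           "\",\"username\": \"" + username + "\"}]}";
}
```

Calling `make_auth_json("admin", "admin")` should reproduce the file contents above. MD5 is used here only because the file format expects it; it is not a strong way to protect a password.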

Build FFmpeg with WebRTC support: sudo ./configure --enable-libx264 --enable-gpl --enable-nvmpi --enable-shared --prefix=/usr/local/ffmpeg_webrtc

The full program is as follows:

FFmpegEncode.h

#ifndef ffmpeg_encode_hpp
#define ffmpeg_encode_hpp

#include <iostream>
#include <opencv2/opencv.hpp>
#include <boost/thread.hpp>
#include <boost/smart_ptr.hpp>
#include <boost/bind.hpp>
#include <cstdint>
#include <map>
#include <memory> // std::shared_ptr
#include <stdio.h>
#include <string>
#include <thread>
#include <utility>
#include <vector>

extern "C" {
#include <stdlib.h>
#include <string.h>
#include <math.h>
#include <libavutil/avassert.h>
#include <libavutil/channel_layout.h>
#include <libavutil/imgutils.h>
#include <libavutil/mathematics.h>
#include <libavutil/opt.h>
#include <libavutil/samplefmt.h>
#include <libavutil/time.h>
#include <libavutil/timestamp.h>
#include <libavcodec/avcodec.h>
#include <libavdevice/avdevice.h>
#include <libavformat/avformat.h>
#include <libswresample/swresample.h>
#include <libswscale/swscale.h>
}

class FFmpegEncode
{
public:
    FFmpegEncode();
    ~FFmpegEncode();

    int open_output(std::string path, std::string type, bool isAudio);

    // Initialize the encoder
    int init_encoderCodec(std::string type, int32_t fps, uint32_t width, uint32_t height, int IFrame, int code_rate);

    std::string get_encode_extradata();

    std::shared_ptr<AVPacket> encode();

    int write_packet(std::shared_ptr<AVPacket> packet)
    {
        return av_interleaved_write_frame(outputContext, packet.get());
    }

    int mat_to_frame(cv::Mat &mat);

private:
    int initSwsFrame(AVFrame *pSwsFrame, int iWidth, int iHeight);

    AVFrame *pSwsVideoFrame = nullptr;
    AVFormatContext *outputContext = nullptr;
    AVCodecContext *encodeContext = nullptr;
    int64_t packetCount = 0;
    int video_fps = 25;
    uint8_t *pSwpBuffer = nullptr;
};

#endif /* ffmpeg_encode_hpp */
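The `packetCount` and `video_fps` members declared in the header drive the packet timestamps: the encoder counts packets in units of 1/fps seconds, and each count is converted into the output stream's time base before the packet is written (the .cpp file uses `av_rescale_q` for this). The arithmetic can be sketched without FFmpeg as follows. `Rational`, `rescale` and `packet_pts` are hypothetical stand-ins for illustration, and unlike `av_rescale_q` this sketch has no overflow protection and assumes non-negative values.

```cpp
#include <cstdint>

// Minimal stand-in for FFmpeg's AVRational so the sketch builds without FFmpeg.
struct Rational {
    int64_t num;
    int64_t den;
};

// Converts `value` from units of `from` into units of `to`, rounding to the
// nearest integer (halves round up). Assumes value >= 0 and positive rationals.
int64_t rescale(int64_t value, Rational from, Rational to) {
    const int64_t numerator = value * from.num * to.den;
    const int64_t denominator = from.den * to.num;
    return (numerator + denominator / 2) / denominator;
}

// Timestamp of the n-th encoded packet (n starts at 0) in the stream time base.
int64_t packet_pts(int64_t packet_index, int fps, Rational stream_time_base) {
    return rescale(packet_index, Rational{1, fps}, stream_time_base);
}
```

For example, with a frame rate of 20 and a millisecond time base of 1/1000, consecutive packets are 50 ticks apart; with a 1/90000 time base at 25 fps they are 3600 ticks apart.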

FFmpegEncode.cpp

// Written against the FFmpeg 4.x API: av_register_all, AVStream::codec,
// avcodec_copy_context and avcodec_encode_video2 were removed in FFmpeg 5.0.
#include "FFmpegEncode.h" // must match the header's file name (Linux is case-sensitive)

FFmpegEncode::FFmpegEncode()
{
    av_register_all();
    avcodec_register_all();
    avformat_network_init();
    avdevice_register_all();
    av_log_set_level(AV_LOG_ERROR); // log errors only
    pSwsVideoFrame = av_frame_alloc();
}

FFmpegEncode::~FFmpegEncode()
{
    if (encodeContext != nullptr)
        avcodec_free_context(&encodeContext);
    if (outputContext != nullptr)
    {
        av_write_trailer(outputContext);
        for (uint32_t i = 0; i < outputContext->nb_streams; i++)
        {
            avcodec_close(outputContext->streams[i]->codec);
        }
        // An output context is released with avio_closep + avformat_free_context;
        // avformat_close_input is only for input contexts.
        if (!(outputContext->oformat->flags & AVFMT_NOFILE))
            avio_closep(&outputContext->pb);
        avformat_free_context(outputContext);
        outputContext = nullptr;
    }
    av_frame_free(&pSwsVideoFrame);
    if (pSwpBuffer)
        av_free(pSwpBuffer);
}

int FFmpegEncode::open_output(std::string path, std::string type, bool isAudio)
{
    int ret = avformat_alloc_output_context2(&outputContext, NULL, type.c_str(), path.c_str());
    std::cout << "avformat_alloc_output_context2 ret : " << ret << std::endl;
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "open output context failed\n");
        return ret;
    }

    // Muxers flagged AVFMT_NOFILE (rtsp, for example) open their own connection,
    // so avio_open is only needed for the others (flv over rtmp, for example).
    if (!(outputContext->oformat->flags & AVFMT_NOFILE))
    {
        ret = avio_open(&outputContext->pb, path.c_str(), AVIO_FLAG_WRITE);
        std::cout << "avio_open ret : " << ret << std::endl;
        if (ret < 0)
        {
            av_log(NULL, AV_LOG_ERROR, "avio_open failed\n");
            return ret;
        }
    }

    if (isAudio)
    {
        // With both audio and video present, outputContext->nb_streams is 2
        for (uint32_t i = 0; i < outputContext->nb_streams; i++)
        {
            // Skip audio streams
            if (outputContext->streams[i]->codec->codec_type == AVMediaType::AVMEDIA_TYPE_AUDIO)
            {
                continue;
            }
            AVStream *stream = avformat_new_stream(outputContext, encodeContext->codec);
            ret = avcodec_copy_context(stream->codec, encodeContext);
            if (ret < 0)
            {
                av_log(NULL, AV_LOG_ERROR, "copy codec context failed\n");
                return ret;
            }
        }
    }
    else
    {
        AVStream *stream = avformat_new_stream(outputContext, encodeContext->codec);
        if (!stream)
        {
            std::cout << " failed! could not create the output stream" << std::endl;
            return -1;
        }
        ret = avcodec_copy_context(stream->codec, encodeContext);
        std::cout << "avcodec_copy_context ret : " << ret << std::endl;
        if (ret < 0)
        {
            char buf[1024];
            // Fetch the error message
            av_strerror(ret, buf, sizeof(buf));
            std::cout << " failed! " << buf << std::endl;
            return ret;
        }
    }

    av_dump_format(outputContext, 0, path.c_str(), 1);
    ret = avformat_write_header(outputContext, nullptr);
    if (ret < 0)
    {
        av_log(NULL, AV_LOG_ERROR, "format write header failed\n");
        return ret;
    }
    av_log(NULL, AV_LOG_FATAL, " Open output file success: %s\n", path.c_str());
    return ret;
}

int FFmpegEncode::init_encoderCodec(std::string type, int32_t fps, uint32_t width, uint32_t height, int IFrame, int code_rate)
{
    video_fps = fps;
    // Run in a terminal:
    // ffmpeg -hwaccels
    // ffmpeg -codecs | grep videotoolbox
    // ffprobe -codecs -hide_banner | grep h264
    std::string encoder_name = type + "_nvmpi";
    AVCodec *enCodec = avcodec_find_encoder_by_name(encoder_name.c_str());
    if (!enCodec)
        enCodec = avcodec_find_encoder_by_name("libx264"); // software fallback
    if (!enCodec)
    {
        std::cout << "no usable encoder found" << std::endl;
        return -1;
    }
    std::cout << "Using encoder: " << enCodec->name << std::endl;

    encodeContext = avcodec_alloc_context3(enCodec);
    if (!encodeContext)
        return -1;
    encodeContext->codec_id = enCodec->id;
    encodeContext->time_base = AVRational{1, video_fps};
    encodeContext->framerate = AVRational{video_fps, 1};
    encodeContext->gop_size = IFrame;            // one I-frame every IFrame frames (50 in main.cpp)
    encodeContext->max_b_frames = 0;             // max B-frames between two non-B frames; 0 disables B-frames (more B-frames give smaller output)
    encodeContext->pix_fmt = AV_PIX_FMT_YUV420P; // pixel format, i.e. the color space used to represent each pixel
    // Note: the 0 fallback reads outputContext, which is still null when this runs
    // before open_output (as in main.cpp), so pass explicit dimensions.
    encodeContext->width = (width == 0) ? outputContext->streams[0]->codecpar->width : width;
    encodeContext->height = (height == 0) ? outputContext->streams[0]->codecpar->height : height;
    encodeContext->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    encodeContext->bit_rate = code_rate * 1024;

    AVDictionary *param = NULL;
    av_dict_set(&param, "preset", "fast", 0);       // ultrafast, superfast, veryfast, faster, fast, medium, slow, slower, veryslow, placebo
    av_dict_set(&param, "tune", "fastdecode", 0);   // film, animation, grain, stillimage, psnr, ssim, fastdecode, zerolatency
    av_dict_set(&param, "profile", "baseline", 0);  // baseline, extended, main, high
    int ret = avcodec_open2(encodeContext, enCodec, &param);
    av_dict_free(&param); // a dictionary is released with av_dict_free, not av_free
    if (ret < 0)
    {
        std::cout << "open video codec failed" << std::endl;
        return ret;
    }
    initSwsFrame(pSwsVideoFrame, encodeContext->width, encodeContext->height);
    return 1;
}

std::string FFmpegEncode::get_encode_extradata()
{
    std::string output = "";
    if (encodeContext->extradata_size > 0)
    {
        output = "extradata_" + std::to_string(encodeContext->extradata_size);
        for (int i = 0; i < encodeContext->extradata_size; i++)
            output = output + "_" + std::to_string(int(encodeContext->extradata[i]));
    }
    return output;
}

std::shared_ptr<AVPacket> FFmpegEncode::encode()
{
    int gotOutput = 0;
    // av_packet_free unreferences the payload and frees the packet struct itself
    std::shared_ptr<AVPacket> pkt(static_cast<AVPacket *>(av_malloc(sizeof(AVPacket))),
                                  [](AVPacket *p) { av_packet_free(&p); });
    if (!pkt)
        return nullptr;
    av_init_packet(pkt.get());
    pkt->data = NULL;
    pkt->size = 0;
    int ret = avcodec_encode_video2(encodeContext, pkt.get(), pSwsVideoFrame, &gotOutput);
    if (ret >= 0 && gotOutput)
    {
        // Convert the packet index from 1/fps units into the stream time base
        int64_t pts_time = av_rescale_q(packetCount++, AVRational{1, video_fps}, outputContext->streams[0]->time_base);
        pkt->pts = pkt->dts = pts_time;
        return pkt;
    }
    return nullptr;
}

int FFmpegEncode::mat_to_frame(cv::Mat &mat)
{
    int width = mat.cols;
    int height = mat.rows;
    int cvLinesizes[1];
    cvLinesizes[0] = (int)mat.step1();
    // The destination frame is set up by init_encoderCodec via initSwsFrame
    if (pSwsVideoFrame == NULL || pSwsVideoFrame->data[0] == NULL)
        return -1;
    // Scale to the encoder's frame size so a camera resolution that differs
    // from the encoder resolution cannot overrun the frame buffer
    SwsContext *conversion = sws_getContext(width, height, AVPixelFormat::AV_PIX_FMT_BGR24,
                                            pSwsVideoFrame->width, pSwsVideoFrame->height,
                                            (AVPixelFormat)pSwsVideoFrame->format,
                                            SWS_FAST_BILINEAR, NULL, NULL, NULL);
    if (!conversion)
        return -1;
    sws_scale(conversion, &mat.data, cvLinesizes, 0, height, pSwsVideoFrame->data, pSwsVideoFrame->linesize);
    sws_freeContext(conversion);
    return 0;
}

int FFmpegEncode::initSwsFrame(AVFrame *pSwsFrame, int iWidth, int iHeight)
{
    // Compute the size of one frame
    int numBytes = av_image_get_buffer_size(encodeContext->pix_fmt, iWidth, iHeight, 1);
    if (pSwpBuffer)
        av_free(pSwpBuffer);
    pSwpBuffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));
    // Point the frame's data planes at the buffer
    av_image_fill_arrays(pSwsFrame->data, pSwsFrame->linesize, pSwpBuffer, encodeContext->pix_fmt, iWidth, iHeight, 1);
    pSwsFrame->width = iWidth;
    pSwsFrame->height = iHeight;
    pSwsFrame->format = encodeContext->pix_fmt;
    return 1;
}
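`initSwsFrame` asks FFmpeg for the byte size of one frame in the encoder's pixel format (YUV420P here, with alignment 1) and allocates `pSwpBuffer` accordingly. As a rough check of the number involved, the size of a tightly packed YUV420P frame can be worked out by hand: one full-resolution luma plane plus two chroma planes subsampled by two in each direction. The helper name `yuv420p_frame_bytes` below is hypothetical, and the sketch only covers this one pixel format.

```cpp
#include <cstddef>

// Bytes needed for one tightly packed (alignment 1) YUV420P frame:
// a width x height Y plane plus U and V planes of ceil(width/2) x ceil(height/2).
std::size_t yuv420p_frame_bytes(int width, int height) {
    if (width <= 0 || height <= 0) return 0;
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);
    const std::size_t chroma_w = (w + 1) / 2;  // round up for odd widths
    const std::size_t chroma_h = (h + 1) / 2;  // round up for odd heights
    return w * h + 2 * chroma_w * chroma_h;
}
```

For the 1280x720 frames used in main.cpp this gives 1382400 bytes, about 1.3 MiB per frame, which is half the size of the 24-bit BGR image OpenCV delivers.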

main.cpp

// ZLMediaKit serves RTSP playback; SRS/nginx serves RTMP/WebRTC playback

#include <cstring> // strcmp
#include <iostream>
#include <opencv2/opencv.hpp>
#include <boost/thread.hpp>
#include <boost/smart_ptr.hpp>
#include <boost/bind.hpp>
#include <boost/date_time/posix_time/posix_time.hpp>
#include <vector>
#include <map>
#include <stdio.h>
#include <string>
#include <utility>

#include "FFmpegEncode.h" // must match the header's file name (Linux is case-sensitive)

using namespace std;

uint32_t rgb_fps = 20; // frame rate of the visible-light video

int main(int argc, char* argv[])
{
    std::cout << "start" << std::endl;
    std::string pipeline = "rtsp://admin:123456@192.168.1.235/h264/ch1/main/av_stream";
    std::string rgb_rtmp_path = "rtmp://127.0.0.1:1935/hls/test_rgb";
    std::string rgb_rtsp_path = "rtsp://127.0.0.1:8556/hls/test_rgb";
    std::string rgb_webrtc_path = "webrtc://127.0.0.1:1985/hls/test_rgb";
    std::string rgb_path;
    std::string rgb_type;

    // Exactly one argument selects the output protocol
    if (argc != 2)
    {
        cout << "1-rtmp 2-rtsp 3-webrtc" << endl;
        return -2;
    }
    if (strcmp(argv[1], "1") == 0)
    {
        rgb_path = rgb_rtmp_path;
        rgb_type = "flv";
    }
    else if (strcmp(argv[1], "2") == 0)
    {
        rgb_path = rgb_rtsp_path;
        rgb_type = "rtsp";
    }
    else if (strcmp(argv[1], "3") == 0)
    {
        rgb_path = rgb_webrtc_path;
        rgb_type = "webrtc";
    }
    else
    {
        cout << "1-rtmp 2-rtsp 3-webrtc" << endl;
        return -2;
    }

    cv::VideoCapture capture(pipeline);
    if (!capture.isOpened())
    {
        std::cout << "Failed to open the RGB camera" << std::endl;
        return -1;
    }

    FFmpegEncode camera_io;
    // Initialize the encoder
    if (camera_io.init_encoderCodec("h264", rgb_fps, 1280, 720, 50, 4096) < 0)
        return -3;
    std::string rgb_extradata = camera_io.get_encode_extradata() + "_" + "h264";
    rgb_extradata = rgb_extradata + "&&" + std::to_string(rgb_fps) + "_1280_720";
    std::cout << "rgb_extradata = " << rgb_extradata << std::endl;
    cout << "rgb_path: " << rgb_path << " rgb_type: " << rgb_type << endl;
    if (camera_io.open_output(rgb_path, rgb_type, false) < 0)
        return -4;

    int64_t fps_start_time = av_gettime();
    uint64_t fps_count = 0;
    int64_t get_frame_time = 0;

    // Read the visible-light video
    while (capture.isOpened())
    {
        cv::Mat rgb_mat;
        capture >> rgb_mat;
        get_frame_time = av_gettime();
        if (rgb_mat.empty())
        {
            boost::this_thread::sleep(boost::posix_time::microseconds(5));
            continue;
        }

        // Print the frame rate of the visible-light video once per second
        if (get_frame_time - fps_start_time < 1 * 1000 * 1000)
        {
            fps_count++;
        }
        else
        {
            std::cout << "rgb fps: " << fps_count + 1 << std::endl;
            fps_count = 0;
            fps_start_time = get_frame_time;
        }

        // Convert the Mat to an AVFrame
        if (camera_io.mat_to_frame(rgb_mat) < 0)
            continue;

        // Encode and push
        auto packet_encode = camera_io.encode();
        if (packet_encode)
        {
            camera_io.write_packet(packet_encode);
            av_packet_unref(packet_encode.get());
        }
    }

    std::cout << "RGB camera encode/push program exiting" << std::endl;
    return 0;
}
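main.cpp maps its single command-line argument (1, 2 or 3) to an output URL and an FFmpeg muxer name. That mapping can be pulled into a small function so that a missing or unknown argument is rejected explicitly instead of leaving the path empty. The sketch below is one possible shape, not the program's actual structure: `OutputTarget` and `select_output` are hypothetical names, while the URLs and muxer names are the ones used in main.cpp.

```cpp
#include <string>

// Where to push the stream and which FFmpeg muxer to use for it.
struct OutputTarget {
    std::string url;
    std::string format;
    bool valid;  // false when the mode string is not recognized
};

// Maps the command-line mode ("1", "2" or "3") to the targets from main.cpp.
OutputTarget select_output(const std::string& mode) {
    if (mode == "1") return {"rtmp://127.0.0.1:1935/hls/test_rgb", "flv", true};       // SRS, RTMP
    if (mode == "2") return {"rtsp://127.0.0.1:8556/hls/test_rgb", "rtsp", true};      // ZLMediaKit, RTSP
    if (mode == "3") return {"webrtc://127.0.0.1:1985/hls/test_rgb", "webrtc", true};  // SRS, WebRTC
    return {"", "", false};
}
```

A caller would check `valid` before handing `url` and `format` to `open_output`, and print the `1-rtmp 2-rtsp 3-webrtc` usage line otherwise.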