k8s Monitoring in Practice (Part 1): Deploying Prometheus and Node Exporter

Contents

- k8s Monitoring in Practice (Part 1): Deploying Prometheus and Node Exporter

- I. Introduction to Prometheus

- II. Hands-on Deployment: Prometheus + Node Exporter

- 1. Create the Namespace (prometheus-namespace.yaml)

- 2. Create the Node Exporter DaemonSet (node-exporter.yaml)

- 3. Create the ServiceAccount (prometheus-serviceaccount.yaml)

- 4. Create the ClusterRoleBinding (prometheus-clusterrolebinding.yaml)

- 5. Create the ConfigMap (prometheus-config.yaml)

- 6. Create the Service (prometheus-svc.yaml)

- 7. Create the Deployment (prometheus-deploy.yaml)

- 8. Deploy All Resources

- III. Verifying the Deployment

- 1. Check the Pod Status

- 2. Access the Prometheus Web UI

- Summary

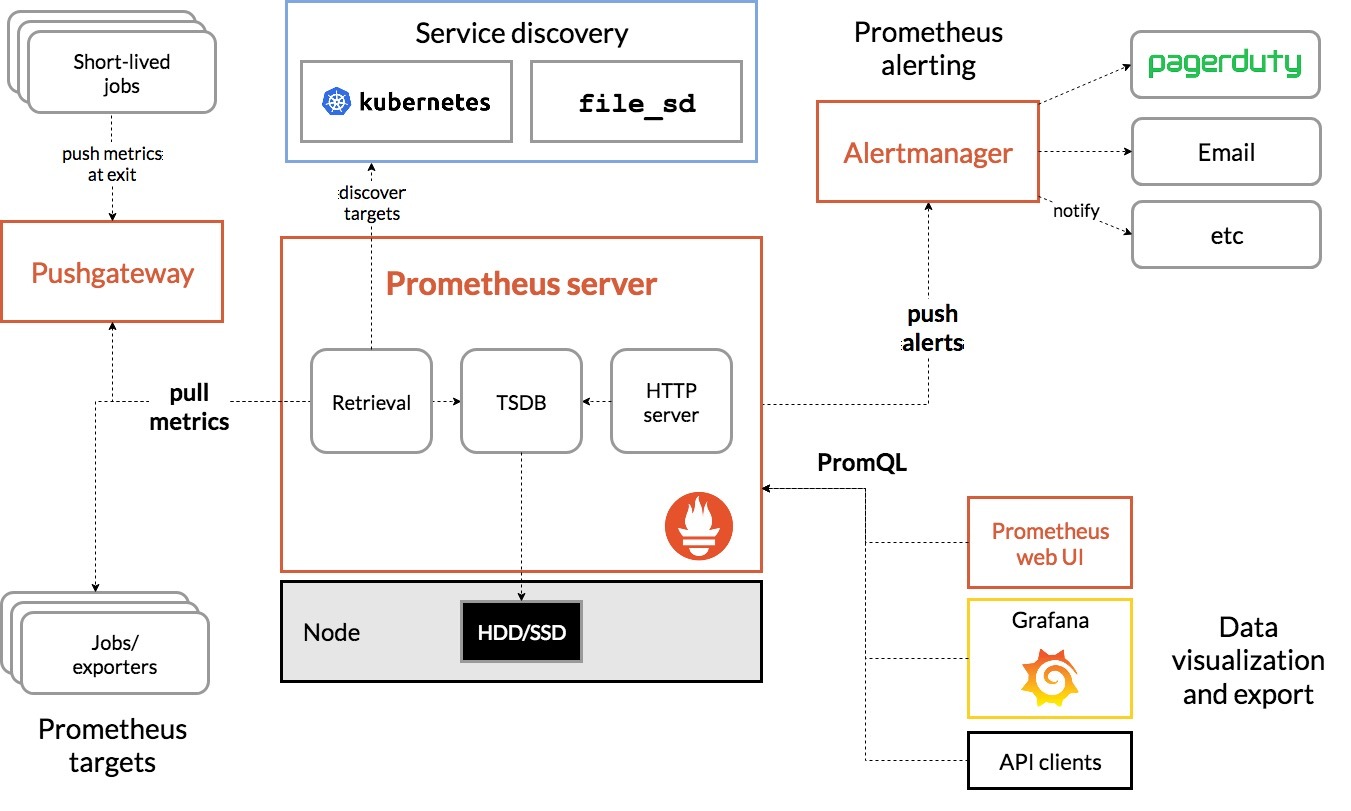

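As containers and microservice architectures have spread, systems have grown steadily more complex. Traditional monitoring tools struggle to provide full observability across environments with many services and many nodes. Prometheus is one of the core projects of the Cloud Native Computing Foundation (CNCF). Its flexible data model, its powerful query language (PromQL) and its native Kubernetes support have made it a mainstream monitoring solution for containerized environments.

This series covers deploying and using Prometheus in Kubernetes. This first article focuses on a basic Prometheus deployment. It also integrates Node Exporter to monitor cluster node resources (CPU, memory, disk, network and so on) in real time, which lays the groundwork for collecting service metrics and building alerting in later articles.

I. Introduction to Prometheus

Prometheus uses a pull-based model: it periodically scrapes the metrics that monitored targets expose. It stores all data as time series in a local time-series database with efficient compression and fast queries. Its core components are:

- Prometheus Server: the main component, responsible for service discovery, scraping, storage and PromQL query processing

- Exporters: agents that expose metrics, such as Node Exporter (host metrics), kube-state-metrics (Kubernetes object state) and Blackbox Exporter (probing)

- Alertmanager: receives and manages the alerts sent by Prometheus and delivers notifications by email, Slack, webhooks and other channels

- Pushgateway (optional): accepts metrics pushed by short-lived jobs, such as scheduled scripts

- Web UI and HTTP API: provide a basic query interface and an integration point for external systems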

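In Kubernetes, Prometheus can use service discovery to find Pods, Services, Nodes and other cluster resources dynamically. It then registers and scrapes those targets automatically, which makes it well suited to monitoring containerized and microservice systems.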
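
For illustration, a minimal scrape job using the `pod` discovery role might look like the sketch below. Several parts of it are assumptions chosen for the example:

- the job name `kubernetes-pods`;
- the target labels `namespace` and `pod`;
- the `prometheus.io/scrape` annotation, which is a common community convention rather than something Prometheus acts on by itself. Only the `keep` rule turns it into a filter.

This job is not part of the configuration deployed later in this article.

```yaml
# Hypothetical scrape job: discover every Pod through the Kubernetes API
# and keep only Pods annotated with prometheus.io/scrape: "true".
scrape_configs:
- job_name: 'kubernetes-pods'          # illustrative name
  kubernetes_sd_configs:
  - role: pod                          # other roles include node, service, endpoints, ingress
  relabel_configs:
  # Drop every Pod that did not opt in through the annotation.
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Copy discovery metadata into ordinary labels (default action: replace).
  - source_labels: [__meta_kubernetes_namespace]
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    target_label: pod
```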

II. Hands-on Deployment: Prometheus + Node Exporter

1. Create the Namespace (prometheus-namespace.yaml)

Create a namespace named prometheus to keep the monitoring resources isolated.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: prometheus

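2. Create the Node Exporter DaemonSet (node-exporter.yaml)

Run Node Exporter as a DaemonSet so that one instance runs on each node and collects basic node metrics such as CPU, memory, disk and network.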

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: prometheus
      labels:
        name: node-exporter
    spec:
      selector:
        matchLabels:
          name: node-exporter
      template:
        metadata:
          labels:
            name: node-exporter
        spec:
          hostPID: true
          hostIPC: true
          hostNetwork: true  # use the host network so Prometheus can scrape <node-ip>:9100 directly
          containers:
          - name: node-exporter
            image: harbor.local/k8s/node-exporter:v1.7.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9100
            securityContext:
              privileged: true
            args:
            - --path.procfs
            - /host/proc
            - --path.sysfs
            - /host/sys
            # read host filesystem usage through the host root filesystem mounted at /rootfs
            - --path.rootfs
            - /rootfs
            # mount-points-exclude supersedes the deprecated ignored-mount-points flag;
            # args are not passed through a shell, so the regex must not contain literal double quotes
            - --collector.filesystem.mount-points-exclude
            - '^/(sys|proc|dev|host|etc)($|/)'
            volumeMounts:
            - name: dev
              mountPath: /host/dev
            - name: proc
              mountPath: /host/proc
            - name: sys
              mountPath: /host/sys
            - name: rootfs
              mountPath: /rootfs
              readOnly: true
          tolerations:
          - key: "node-role.kubernetes.io/control-plane"
            operator: "Exists"
            effect: "NoSchedule"
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: dev
            hostPath:
              path: /dev
          - name: sys
            hostPath:
              path: /sys
          - name: rootfs
            hostPath:
              path: /  # the host root filesystem (not /root, the root user's home directory)
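
The DaemonSet above tolerates only the `node-role.kubernetes.io/control-plane:NoSchedule` taint. Older kubeadm clusters taint control-plane nodes with `node-role.kubernetes.io/master` instead, and any node with a different taint is skipped. If Node Exporter really must run on every node, one option is a catch-all toleration, sketched below as a replacement for the `tolerations:` list in the Pod spec. Whether tolerating every taint is acceptable depends on your cluster, because it also covers the NoExecute taints used during node maintenance.

```yaml
# Sketch: replaces the tolerations list in the DaemonSet's Pod spec.
# An entry with no key and operator Exists tolerates every taint,
# so node-exporter is scheduled onto every node regardless of its taints.
tolerations:
- operator: "Exists"
```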

3. Create the ServiceAccount (prometheus-serviceaccount.yaml)

Create a dedicated ServiceAccount for Prometheus; permissions are bound to it in the next step.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: prometheus

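4. Create the ClusterRoleBinding (prometheus-clusterrolebinding.yaml)

Bind the cluster-admin ClusterRole to the Prometheus ServiceAccount so that Prometheus can query the Kubernetes API to discover scrape targets. cluster-admin grants far more access than Prometheus needs. That is convenient in a test cluster, but the permissions are worth narrowing in production.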

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus-clusterrolebinding
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: prometheus
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io
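
If you prefer not to grant cluster-admin, you can bind a narrower ClusterRole instead, along the lines of the `rbac-setup.yml` example in the Prometheus repository. The sketch below is an assumption-based starting point rather than a verified minimum. The names `prometheus-reader` and `prometheus-reader-binding` are hypothetical. The rules aim to cover the discovery roles and the cAdvisor node-proxy path used in this article's ConfigMap. Apply it in place of the cluster-admin binding, not alongside it.

```yaml
# Sketch of a narrower alternative to binding cluster-admin.
# prometheus-reader / prometheus-reader-binding are hypothetical names.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-reader
rules:
# Read access for kubernetes_sd_configs; nodes/proxy is needed for the
# /api/v1/nodes/<node>/proxy/metrics/cadvisor scrape path.
- apiGroups: [""]
  resources: ["nodes", "nodes/proxy", "nodes/metrics", "services", "endpoints", "pods"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["discovery.k8s.io"]
  resources: ["endpointslices"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["networking.k8s.io"]
  resources: ["ingresses"]
  verbs: ["get", "list", "watch"]
# Allows scraping the API server's own /metrics endpoint.
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus-reader-binding
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: prometheus
roleRef:
  kind: ClusterRole
  name: prometheus-reader
  apiGroup: rbac.authorization.k8s.io
```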

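5. Create the ConfigMap (prometheus-config.yaml)

Define the main Prometheus configuration file, prometheus.yml. It sets the scrape settings, Kubernetes service discovery, static targets and blackbox probes.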

    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus-config
      namespace: prometheus
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 10s
          evaluation_interval: 1m
        alerting:
          alertmanagers:
          - static_configs:
            - targets: ['alertmanager.prometheus.svc.cluster.local:9093']
        rule_files:
        - "rules/*.yml"
        scrape_configs:
        # Node Exporter on every node: discovered nodes report the kubelet
        # address (port 10250), which is rewritten to port 9100
        - job_name: 'kubernetes-node'
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        # cAdvisor container metrics, fetched through the API server's node proxy
        - job_name: 'kubernetes-node-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        # The API server itself (the "kubernetes" Service in the default namespace)
        - job_name: 'kubernetes-apiserver'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        # Endpoints of any Service annotated with prometheus.io/scrape: "true"
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
        # etcd: only matches if a Service named etcd exists in kube-system
        - job_name: kubernetes-etcd
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - action: keep
            regex: kube-system;etcd
            source_labels:
            - __meta_kubernetes_namespace
            - __meta_kubernetes_service_name
        # Static targets: the node exporters listed by IP, with readable instance names
        - job_name: 'k8s-cluster'
          scrape_interval: 15s
          static_configs:
          - targets: ['192.168.100.101:9100']
            labels:
              instance: 'master-100.101'
          - targets: ['192.168.100.102:9100']
            labels:
              instance: 'node1-100.102'
          - targets: ['192.168.100.103:9100']
            labels:
              instance: 'node2-100.103'
        - job_name: 'alertmanager'
          scrape_interval: 15s
          static_configs:
          - targets: ['alertmanager.prometheus.svc.cluster.local:9093']
        # Blackbox probes, executed by a Blackbox Exporter at 192.168.100.104:9115
        - job_name: "blackbox_http"
          metrics_path: /probe
          params:
            module: [http_2xx]
          static_configs:
          - targets:
            - https://www.baidu.com
          relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: instance
          - target_label: __address__
            replacement: 192.168.100.104:9115
        - job_name: "blackbox_tcp"
          metrics_path: /probe
          params:
            module: [tcp_connect]
          static_configs:
          - targets:
            - 192.168.100.101:3306
          relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: instance
          - target_label: __address__
            replacement: 192.168.100.104:9115
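
The `kubernetes-service-endpoints` job keeps only the endpoints of Services that carry particular annotations. As a sketch, a Service like the one below would be picked up by that job. The name `my-app`, the namespace, the port and the path are all hypothetical. The `keep` rule matches `prometheus.io/scrape`, and the port and path annotations are rewritten into `__address__` and `__metrics_path__`.

```yaml
# Hypothetical Service that opts in to the 'kubernetes-service-endpoints' job.
apiVersion: v1
kind: Service
metadata:
  name: my-app                     # hypothetical application
  namespace: default
  annotations:
    prometheus.io/scrape: "true"   # matched by the keep rule; annotation values must be strings
    prometheus.io/port: "8080"     # __address__ becomes <endpoint-ip>:8080
    prometheus.io/path: "/metrics" # copied into __metrics_path__
spec:
  selector:
    app: my-app
  ports:
  - name: http
    port: 8080
    targetPort: 8080
```

With this Service, an endpoint address such as `10.0.0.5:8080` is joined with the port annotation into `10.0.0.5:8080;8080`. The regex `([^:]+)(?::\d+)?;(\d+)` then rewrites it to `10.0.0.5:8080`.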

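6. Create the Service (prometheus-svc.yaml)

Expose the Prometheus web UI port (9090) through a NodePort Service so that it can be reached from outside the cluster on port 30001 of any node.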

    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: prometheus
      labels:
        app: prometheus
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30001
        protocol: TCP
      selector:
        app: prometheus
        component: server

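7. Create the Deployment (prometheus-deploy.yaml)

Deploy the Prometheus server. The manifest specifies the configuration file and volume mounts and binds the ServiceAccount created earlier. Prometheus runs as a single replica pinned to node2, and its data lives in a hostPath directory on that node. Scaling out for high availability would therefore require giving each replica its own storage.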

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus-server
      namespace: prometheus
      labels:
        app: prometheus
    spec:
      replicas: 1  # single replica: extra replicas would share the same hostPath data directory
      selector:
        matchLabels:
          app: prometheus
          component: server
        #matchExpressions:
        #- {key: app, operator: In, values: [prometheus]}
        #- {key: component, operator: In, values: [server]}
      template:
        metadata:
          labels:
            app: prometheus
            component: server
          annotations:
            prometheus.io/scrape: 'false'
        spec:
          nodeName: node2  # pin to node2, where the hostPath directories live (optional)
          serviceAccountName: prometheus
          containers:
          - name: prometheus
            image: harbor.local/k8s/prometheus:v2.37.6
            imagePullPolicy: IfNotPresent
            command:
            - prometheus
            - --config.file=/etc/prometheus/prometheus.yml
            - --storage.tsdb.path=/prometheus    # local data directory
            - --storage.tsdb.retention.time=30d  # keep 30 days (replaces the deprecated --storage.tsdb.retention)
            - --web.enable-lifecycle             # allow hot config reloads via POST /-/reload
            - --web.enable-admin-api
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/prometheus/
              name: prometheus-config
            - mountPath: /prometheus/
              name: prometheus-storage-volume
            - mountPath: /etc/prometheus/rules
              name: prometheus-rules-volume
          volumes:
          - name: prometheus-config
            configMap:
              name: prometheus-config
          - name: prometheus-storage-volume
            hostPath:
              # Create both directories on node2 beforehand and grant access with chmod -R 777
              # (type: Directory requires them to exist; the Prometheus image runs as a non-root user)
              path: /kubernetes/prometheus/data/
              type: Directory
          - name: prometheus-rules-volume
            hostPath:
              path: /kubernetes/prometheus/rules
              type: Directory
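
The Deployment defines no health checks. As a sketch, you could add the probes below to the `prometheus` container, at the same level as `ports:`. They use the `/-/ready` and `/-/healthy` endpoints that Prometheus serves. The delay and period values are arbitrary assumptions to tune for your environment.

```yaml
# Sketch: probes for the prometheus container (same indentation level as ports:).
# The timing values are illustrative assumptions.
readinessProbe:
  httpGet:
    path: /-/ready      # returns 200 once Prometheus is ready to serve traffic
    port: 9090
  initialDelaySeconds: 10
  periodSeconds: 10
livenessProbe:
  httpGet:
    path: /-/healthy    # returns 200 while the process is healthy
    port: 9090
  initialDelaySeconds: 30
  periodSeconds: 15
```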

8. Deploy All Resources

    kubectl apply -f prometheus-namespace.yaml
    kubectl apply -f node-exporter.yaml
    kubectl apply -f prometheus-serviceaccount.yaml
    kubectl apply -f prometheus-clusterrolebinding.yaml
    kubectl apply -f prometheus-config.yaml
    kubectl apply -f prometheus-svc.yaml
    kubectl apply -f prometheus-deploy.yaml

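III. Verifying the Deployment

1. Check the Pod Status

    kubectl get pod -n prometheus

All Pods should reach the Running state: one node-exporter Pod per schedulable node plus a single prometheus-server Pod.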

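2. Access the Prometheus Web UI

Open `http://<node-ip>:30001` in a browser, where `<node-ip>` is the IP address of any cluster node, to reach the Prometheus web UI. The Status → Targets page shows whether each scrape job defined above is up.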

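Summary

🚀 This article showed how to deploy Prometheus in a Kubernetes cluster and pair it with Node Exporter for basic node-level monitoring. We configured a ServiceAccount, RBAC permissions and the Prometheus configuration file, and deployed Node Exporter as a DaemonSet. Together these form a basic monitoring setup that covers everything from data collection to visualization.

✅ The next article will build out the monitoring stack by integrating Alertmanager with Prometheus for automated, metric-based alerting. It will cover alert rule configuration, notification channels (such as email and webhooks) and how Alertmanager works together with Prometheus, giving operations teams real-time early warning.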