Linux Labs

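- Preparation
  - Configure the repository, mount the media, install basic packages
  - Disable the firewall and SELinux
  - Automatic mounting
  - Copy files to another host
- NFS
  - 1. NFS service
    - 1.1 Server
    - 1.2 Client
    - 1.3 Testing
  - 2. NFS with nginx
    - 2.1 Initial steps on both hosts
    - 2.2 NFS host
    - 2.3 nginx host
- DNS
  - 1. Resolving domain names from an internal server
    - 1.1 Install the bind package on the dns host
    - 1.2 Configure the dns host
    - 1.3 Change the DNS address on the client and re-activate the connection
    - 1.4 Verify DNS resolution on the client (install bind)
    - 1.5 Errors
  - 2. Setting up a primary server
    - 2.1 Web service
    - 2.2 Configure DNS
    - 2.3 Client test
  - 3. Setting up primary and secondary servers
- keepalived
  - 1. keepalived + nginx
    - 1.1 Environment preparation
    - 1.2 Install nginx
    - 1.3 Install keepalived
  - 2. keepalived + nginx + tomcat high availability
    - 2.1 Set up tomcat and change its home page
    - 2.2 nginx (master, backup)
    - 2.3 keepalived (master, backup)
      - Errors reported if the nginx service fails at this point
      - Configure nginx high availability
      - Test high availability
    - 2.4 Errors
  - 3. Three-master hot-standby architecture
    - 3.1 Install tomcat1, 2, 3
    - 3.2 Set up nginx and keepalived (master, backup1, backup2)
    - 3.3 Set up DNS round robin
  - 4.
- LVS
  - 1. NAT mode setup
    - 1.1 Configure the RS (NAT)
    - 1.2 Configure LVS (two NICs)
      - Configure the host-only NIC (carries the virtual IP that users access)
      - Install ipvsadm
      - Configure the NAT-mode NIC (acts as the gateway for the back-end real servers)
    - 1.3 Configure the client (host-only)
    - 1.4 Start the ipvsadm service (LVS)
    - 1.5 LVS rules
    - 1.6 Functional test
      - Client test
      - NAT-mode kernel parameters (LVS host)
  - 2. DR mode (single-subnet example)
    - 2.1 Configure routing
    - 2.2 Configure the RS real servers
    - 2.3 Configure LVS
    - 2.4 Add the VIP to the RS hosts
    - 2.5 Add kernel parameters on the RS (prevent IP conflicts)
      - DR-mode kernel parameters (RS host)
    - 2.6 Configure LVS rules
    - 2.7 Test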

Preparation

Configure the repository, mount the media, install basic packages

# Configure the repository

[root@client yum.repos.d]# vi /etc/yum.repos.d/dnf.repo

[root@client yum.repos.d]# cat dnf.repo

[BaseOS]

name=BaseOS

baseurl=/mnt/BaseOS

gpgcheck=0

[AppStream]

name=AppStream

baseurl=/mnt/AppStream

gpgcheck=0

# Mount the installation media

[root@client yum.repos.d]# mount /dev/sr0 /mnt

# Install basic packages; bash-completion provides Tab completion

[root@client yum.repos.d]# dnf install net-tools wget curl bash-completion vim -y
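The repository file above can also be generated from a script, which is convenient when several lab VMs need the same setup. The following is a minimal sketch; `write_local_repo` is a hypothetical helper name, and it writes `baseurl` as an explicit `file://` URL rather than the bare path used above.

```shell
# Sketch: generate a local dnf repo file for installation media mounted at a given path.
# write_local_repo is a hypothetical helper, not part of dnf.
write_local_repo() {
    mount_point="$1"   # where the ISO is mounted, e.g. /mnt
    repo_file="$2"     # file to write, e.g. /etc/yum.repos.d/dnf.repo
    {
        for repo in BaseOS AppStream; do
            printf '[%s]\nname=%s\nbaseurl=file://%s/%s\ngpgcheck=0\n\n' \
                "$repo" "$repo" "$mount_point" "$repo"
        done
    } > "$repo_file"
}

# Example: write to a scratch file first, inspect it, then copy it into place.
# write_local_repo /mnt /tmp/dnf.repo && cat /tmp/dnf.repo
```

Writing to a scratch path first keeps a broken file out of /etc/yum.repos.d/ while experimenting.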

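Disable the firewall and SELinux

# Disable the firewall

systemctl disable firewalld # permanent: do not start the service at boot

systemctl stop firewalld # temporary: stop the running service

# Disable SELinux enforcement

# Permanent: set the mode in the config file (takes effect after a reboot)

vim /etc/selinux/config

SELINUX=permissive

# Temporary: switch to permissive mode until the next reboot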

setenforce 0
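The permanent change can be made without opening an editor. This is a minimal sketch, assuming GNU sed; `set_selinux_mode` is a hypothetical helper name. Note that `permissive` only logs denials, while `disabled` turns SELinux off entirely at the next boot.

```shell
# Sketch: set the SELINUX= line in an SELinux config file.
# set_selinux_mode is a hypothetical helper; it only accepts the three documented modes.
set_selinux_mode() {
    mode="$1"
    config="$2"   # normally /etc/selinux/config
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "invalid mode: $mode" >&2; return 1 ;;
    esac
    # "^SELINUX=" does not match the SELINUXTYPE= line, so that line is left alone.
    sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$config"
}

# Example (as root): set_selinux_mode permissive /etc/selinux/config
```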

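Automatic mounting

Write the mount entries to the auto-mount file:

[root@Web1 ~]# vim /etc/sysctl.d/

[root@Web1 ~]# vim /etc/fstab

[root@Web1 ~]# vim /etc/rc.d/init.d/

# Reload systemd after editing /etc/fstab

systemctl daemon-reload

[root@Web1 ~]# mount -a # mount everything listed in /etc/fstab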
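The notes do not show what the /etc/fstab entry looks like. As a sketch under assumptions, an entry for the installation media used in these labs could be `/dev/sr0 /mnt iso9660 defaults 0 0`, and an NFS share could use the `nfs` type with the `_netdev` option. The helper below, with the hypothetical name `add_fstab_entry`, appends a line only if it is not already present, so running it twice does not duplicate entries.

```shell
# Sketch: append an entry to an fstab-style file only if the exact line is missing.
# add_fstab_entry is a hypothetical helper name.
add_fstab_entry() {
    fstab="$1"   # normally /etc/fstab
    entry="$2"   # one complete fstab line
    # -x: match the whole line, -F: treat the entry as a fixed string
    grep -qxF "$entry" "$fstab" 2>/dev/null || printf '%s\n' "$entry" >> "$fstab"
}

# Example (as root), followed by the reload and mount -a steps above:
# add_fstab_entry /etc/fstab '/dev/sr0 /mnt iso9660 defaults 0 0'
# add_fstab_entry /etc/fstab '192.168.98.140:/nfs/share /nfs/receive nfs defaults,_netdev 0 0'
```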

Copy files to another host

scp <file to copy> root@<target host IP>:<destination path>

e.g.

scp /etc/keepalived/* root@192.168.88.32:/etc/keepalived/

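NFS

Pay attention to the permission options when configuring /etc/exports.

vim /etc/exports

<shared directory> <client IP>(rw,no_root_squash)

or

<shared directory> <client IP 1>(rw,sync) <client IP 2>(rw,sync)

e.g.

/nfs/data 192.168.72.114(rw,sync) 192.168.72.115(rw,sync)

# Separate different client IPs with spaces; put no space between an IP and its option list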
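The spacing rule is easy to get wrong by hand: a space between the IP and the parenthesis changes the meaning of the line. A small sketch that assembles the line from its parts follows; `exports_line` is a hypothetical helper name.

```shell
# Sketch: build one /etc/exports line from a directory, an option list and client IPs.
# exports_line is a hypothetical helper name.
exports_line() {
    dir="$1"
    opts="$2"
    shift 2
    line="$dir"
    for client in "$@"; do
        # no space between the client and its option list
        line="$line $client($opts)"
    done
    printf '%s\n' "$line"
}

# Example:
# exports_line /nfs/data rw,sync 192.168.72.114 192.168.72.115
```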

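1. NFS service

Setting up an NFS share requires at least two Linux/Unix machines.

| Role | IP | Hostname | Software |
|---|---|---|---|
| Server | 192.168.98.140 | nfs-server | nfs-utils |
| Client | 192.168.98.141 | nfs-client | nfs-utils |

1.1 Server

- Configure the repository, mount the media, install nfs-utils

- Allow the service through the firewall, disable SELinux, start the service

- Create the directory to share: mkdir -p <shared directory>

- Configure /etc/exports with a line of the form <shared directory> <client IP>(rw). To avoid adjusting permissions later, the options can be written here directly, for example (rw,no_root_squash) or (rw,sync)

- Restart the service and list the exported directories: showmount -e <NFS server IP>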

# 1. Configure the repository, mount the media, install nfs-utils

[root@nfs-server ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@nfs-server ~]# dnf install nfs-utils -y

# 2. Allow NFS through the firewall, disable SELinux, start the service

firewall-cmd --permanent --add-service=nfs

firewall-cmd --reload # apply the permanent rule to the running firewall

setenforce 0

# 3. Create the directory to share

[root@nfs-server ~]# mkdir -p /nfs/share

# 4. Configure /etc/exports: <shared directory> <client IP>(rw,no_root_squash)

[root@nfs-server ~]# vim /etc/exports

[root@nfs-server ~]# cat /etc/exports

/nfs/share 192.168.98.141(rw,no_root_squash)

# 5. Start the service and list the exports: showmount -e <NFS server IP>

[root@nfs-server ~]# systemctl start nfs-server

[root@nfs-server ~]# showmount -e 192.168.98.140

Export list for 192.168.98.140:

/nfs/share 192.168.98.141

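1.2 Client

- Configure the repository, mount the media, install nfs-utils

- Allow the service through the firewall, disable SELinux, start the service

- Create the mount directory

- Mount the server's shared directory: mount -t nfs <NFS server IP>:<shared directory on the server> <mount directory>

# 1. Configure the repository, mount the media, install nfs-utils

[root@nfs-client ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@nfs-client ~]# dnf install nfs-utils -y

# 2. Allow NFS through the firewall, disable SELinux, start the service

firewall-cmd --permanent --add-service=nfs

setenforce 0

# 3. Create the mount directory

[root@nfs-client ~]# mkdir -p /nfs/receive

# 4. Mount the server's shared directory: mount -t nfs <NFS server IP>:<shared directory on the server> <mount directory>

[root@nfs-client ~]# mount -t nfs 192.168.98.140:/nfs/share /nfs/receive/

[root@nfs-client ~]# df -h /nfs/receive/ # check the mount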

Filesystem Size Used Avail Use% Mounted on

192.168.98.140:/nfs/share 45G 1.7G 43G 4% /nfs/receive

1.3 Testing

- Test reading from the client

- Create a file on the server

- View the file on the client

# Create a file on the server

[root@nfs-server ~]# cd /nfs/share/

[root@nfs-server share]# ls

[root@nfs-server share]# touch one.txt

[root@nfs-server share]# ls

one.txt

# View the file on the client

[root@nfs-client ~]# cd /nfs/receive/

[root@nfs-client receive]# ls

one.txt

# Write to the file on the server

[root@nfs-server share]# echo this is a file of nfs >> /nfs/share/one.txt

[root@nfs-server share]# cat one.txt

this is a file of nfs

# View the file contents on the client

[root@nfs-client receive]# cat one.txt

this is a file of nfs

# Modify the file from the client

[root@nfs-client receive]# echo add line >> /nfs/receive/one.txt

[root@nfs-client receive]# cat one.txt

this is a file of nfs

add line

- Test writing from the client

If the client reports a permission error when writing, permissions need to be set on the server's shared directory.

- Create a file on the client

- View it on the server

# Create a file on the client

[root@nfs-client receive]# touch two.txt

[root@nfs-client receive]# ls

one.txt two.txt

# View the file on the server

[root@nfs-server share]# ls

one.txt two.txt

# Write from the client

[root@nfs-client receive]# echo client write >> /nfs/receive/two.txt

[root@nfs-client receive]# cat two.txt

client write

# View on the server

[root@nfs-server share]# cat two.txt

client write

2. NFS with nginx

Configure the NFS server as the storage directory for the nginx service and create an index.html file in that directory, so that visiting http://<your IP address> displays the contents of index.html.

| Role | Software | IP | Hostname | OS |
|---|---|---|---|---|
| NFS server | nfs-utils | 192.168.88.40 | server | RHEL 9 |
| Web service | nfs-utils, nginx | 192.168.88.8 | web | openEuler |

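2.1 Initial steps on both hosts

- Mount the media, install nfs-utils

- Start the service

- Allow it through the firewall

- Disable SELinux

2.2 NFS host

- Create the shared directory

- Configure /etc/exports

- Restart the service

- showmount -e

2.3 nginx host

- Create the mount directory

- Mount the share: mount -t nfs <NFS host IP>:<shared directory on the NFS host> <mount directory on the nginx host>

- Install nginx

- Start nginx and configure the web service in /etc/nginx/conf.d/nfs.conf

- Validate the configuration file:

[root@web ~]# /usr/sbin/nginx -t

- Restart nginx and open port 80:

firewall-cmd --permanent --add-port=80/tcp

firewall-cmd --reload

firewall-cmd --list-all

- Browse: open a browser and go to http://<web host IP>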

# Configure the official nginx repository

[root@web ~]# vim /etc/yum.repos.d/nginx.repo

[root@web ~]# cat /etc/yum.repos.d/nginx.repo

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

[nginx-mainline]

name=nginx mainline repo

baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/

gpgcheck=1

enabled=0

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

[root@web ~]# dnf install nginx -y
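The steps mention /etc/nginx/conf.d/nfs.conf but do not show its contents. A minimal sketch of what such a file might contain is below. The function name `render_nfs_site` and the mount directory `/nfs/web` are assumptions for illustration; the directives themselves (`listen`, `server_name`, `root`, `index`) are standard nginx.

```shell
# Sketch: print an nginx server block that serves files from an NFS mount.
# render_nfs_site and the example paths are hypothetical.
render_nfs_site() {
    server_name="$1"   # the web host's IP or name
    web_root="$2"      # directory where the NFS share is mounted
    cat <<EOF
server {
    listen 80;
    server_name $server_name;
    root $web_root;
    index index.html;
}
EOF
}

# Example: inspect the output, then save it and validate with nginx -t.
# render_nfs_site 192.168.88.8 /nfs/web > /etc/nginx/conf.d/nfs.conf
```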

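DNS

- Files shipped with the bind package

  - BIND main program: /usr/sbin/named

  - Service script and unit name: /etc/rc.d/init.d/named, /usr/lib/systemd/system/named.service

  - Main configuration files: /etc/named.conf, /etc/named.rfc1912.zones, /etc/rndc.key

  - Management tool: /usr/sbin/rndc (remote name domain controller). By default it is installed on the same host as bind and can only connect to the named process through 127.0.0.1; it provides auxiliary management functions on 953/tcp

  - Zone data files: /var/named/ZONE_NAME.ZONE

Notes:

(1) One physical server can serve multiple zones at the same time

(2) A root zone file is required: named.ca

(3) There should be two zone files (more if IPv6 is included) that resolve localhost and the loopback address

- Main configuration file

  - Global settings: options {};

  - Logging subsystem settings: logging {};

  - Zone definitions: define every zone this host should answer for, zone "ZONE_NAME" IN {};

Notes:

(1) Any service that is meant to be reachable from other hosts over the network must listen on at least one IP address that can communicate with external hosts

(2) For a caching name server, listening on an external address is sufficient

(3) dnssec: it is suggested to turn dnssec off (set it to no)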

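1. Resolving domain names from an internal server

Goal: hosts on the internal network can use domain names to reach the network.

The client's DNS setting must point to the IP address of the DNS server (the dns host).

| Host | Software | IP | DNS | Role |
|---|---|---|---|---|
| client | none | 192.168.98.139 | 192.168.98.138 | Client |
| dns | bind | 192.168.98.138 | none specified | Name server |

If the dns host itself needs Internet access, configure a public resolver such as Alibaba Cloud DNS: 223.5.5.5

- Install bind on the dns host (repository → mount → install)

- Edit the main configuration file /etc/named.conf, write the zone data file under /var/named/, start the DNS service, and test name resolution

- On the client, change the DNS address and re-activate the connection

- Test from the client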

1.1 Install the bind package on the dns host

[root@dns ~]# mount /dev/sr0 /mnt/

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@dns ~]# dnf install bind -y

[root@dns ~]# nmcli device show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:F4:E3:E2

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/2

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.138/24

IP4.GATEWAY: 192.168.98.2

IP4.ROUTE[1]: dst = 0.0.0.0/0, nh = 192.168.98.2, mt = 100

IP4.ROUTE[2]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 100

IP4.DNS[1]: 192.168.98.2

IP4.DOMAIN[1]: localdomain

IP6.ADDRESS[1]: fe80::20c:29ff:fef4:e3e2/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

1.2 Configure the dns host

# Edit the main configuration file

[root@dns ~]# vim /etc/named.conf

[root@dns ~]# cat /etc/named.conf

options {
    listen-on port 53 { 192.168.98.138; }; # this host's IP (the DNS server's IP)
    directory "/var/named";
};

zone "kd.com" IN { # zone name
    type master;
    file "kd.com"; # relative to directory, i.e. /var/named/kd.com
};

# Write the zone data file

[root@dns ~]# vim /var/named/kd.com

[root@dns ~]# cat /var/named/kd.com

$TTL 1D
@   IN SOA ns.kd.com. admin.kd.com. (
        0    ; serial
        1D   ; refresh
        1H   ; retry
        1W   ; expire
        1D ) ; negative-cache TTL
    IN NS ns.kd.com.
ns  IN A  192.168.98.138
www IN A  192.168.98.138

# Start the DNS service and test name resolution

[root@dns ~]# systemctl start named

# NS record lookup

[root@dns ~]# dig -t NS kd.com @192.168.98.138

; <<>> DiG 9.16.23-RH <<>> -t NS kd.com @192.168.98.138

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 42629

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 1232

; COOKIE: 7fc9d4754b82ae210100000067ee7d81da7c20a3add364cb (good)

;; QUESTION SECTION:

;kd.com. IN NS

;; ANSWER SECTION:

kd.com. 86400 IN NS ns.kd.com.

;; ADDITIONAL SECTION:

ns.kd.com. 86400 IN A 192.168.98.138

;; Query time: 0 msec

;; SERVER: 192.168.98.138#53(192.168.98.138)

;; WHEN: Thu Apr 03 20:22:25 CST 2025

;; MSG SIZE rcvd: 96

# A record lookup

[root@dns ~]# dig -t A www.kd.com @192.168.98.138

; <<>> DiG 9.16.23-RH <<>> -t A www.kd.com @192.168.98.138

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 28340

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 1232

; COOKIE: 53b7fa9ca09adb640100000067ee7d930d3e23ff825546dc (good)

;; QUESTION SECTION:

;www.kd.com. IN A

;; ANSWER SECTION:

www.kd.com. 86400 IN A 192.168.98.138

;; Query time: 1 msec

;; SERVER: 192.168.98.138#53(192.168.98.138)

;; WHEN: Thu Apr 03 20:22:43 CST 2025

;; MSG SIZE rcvd: 83
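For labs that need several zones of the same shape, the zone file can be produced from a template. This is a minimal sketch that mirrors the kd.com file above; `render_zone` is a hypothetical helper, and the `ns` and `admin` names are carried over from that file rather than being required by BIND.

```shell
# Sketch: print a minimal zone file with one NS record and two A records.
# render_zone is a hypothetical helper; timer values are copied from the kd.com example.
render_zone() {
    domain="$1"          # e.g. kd.com
    ns_ip="$2"           # address of the name server
    www_ip="$3"          # address served for www
    serial="${4:-1}"     # zone serial, defaults to 1
    cat <<EOF
\$TTL 1D
@   IN SOA ns.$domain. admin.$domain. (
        $serial ; serial
        1D ; refresh
        1H ; retry
        1W ; expire
        1D ) ; negative-cache TTL
@   IN NS  ns.$domain.
ns  IN A   $ns_ip
www IN A   $www_ip
EOF
}

# Example: generate the file, then validate it before starting named.
# render_zone kd.com 192.168.98.138 192.168.98.138 0 > /var/named/kd.com
# named-checkzone kd.com /var/named/kd.com
```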

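1.3 Change the DNS address on the client and re-activate the connection

The client's DNS setting must point to the IP address of the DNS server (the dns host).

- Change the setting

# Change the DNS address

[root@client ~]# nmcli connection modify ens160 ipv4.dns 192.168.98.138

[root@client ~]# nmcli c up ens160 # re-activate the connection

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/3)

- View the current network configuration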

[root@client ~]# nmcli device show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:E1:58:30

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/3

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.139/24

IP4.GATEWAY: 192.168.98.2

IP4.ROUTE[1]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 100

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.98.2, mt = 100

IP4.DNS[1]: 192.168.98.138

IP4.DNS[2]: 192.168.98.2

IP4.DOMAIN[1]: localdomain

IP6.ADDRESS[1]: fe80::20c:29ff:fee1:5830/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

- Internet access works

[root@client ~]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.17) 56(84) bytes of data.

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=1 ttl=128 time=32.6 ms

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=2 ttl=128 time=32.7 ms

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=3 ttl=128 time=37.5 ms

^C

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2005ms

rtt min/avg/max/mdev = 32.594/34.288/37.529/2.292 ms

1.4 Verify DNS resolution on the client (install bind)

[root@client ~]# mount /dev/sr0 /mnt/

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@client ~]# dnf install bind -y

[root@client ~]# dig -t NS kd.com

; <<>> DiG 9.16.23-RH <<>> -t NS kd.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 51615

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 13

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 4096

; COOKIE: 2369aa9b6b20641cc1fc483f67ee7f987d5bf67b983c448d (good)

;; QUESTION SECTION:

;kd.com. IN NS

;; ANSWER SECTION:

kd.com. 5 IN NS bed.dnspod.net.

kd.com. 5 IN NS hamiltion.dnspod.net.

;; ADDITIONAL SECTION:

hamiltion.dnspod.net. 5 IN A 1.12.0.4

hamiltion.dnspod.net. 5 IN A 1.14.119.35

hamiltion.dnspod.net. 5 IN A 111.13.13.35

hamiltion.dnspod.net. 5 IN A 112.80.181.45

hamiltion.dnspod.net. 5 IN A 117.89.178.173

bed.dnspod.net. 5 IN A 36.155.149.176

bed.dnspod.net. 5 IN A 101.227.168.35

bed.dnspod.net. 5 IN A 111.206.98.235

bed.dnspod.net. 5 IN A 129.211.176.239

bed.dnspod.net. 5 IN A 1.12.0.1

hamiltion.dnspod.net. 5 IN AAAA 2402:4e00:1470:2::e

bed.dnspod.net. 5 IN AAAA 2402:4e00:111:fff::c

;; Query time: 41 msec

;; SERVER: 192.168.98.2#53(192.168.98.2)

;; WHEN: Thu Apr 03 20:31:20 CST 2025

;; MSG SIZE rcvd: 331

[root@client ~]# dig -t -A www.kd.com

;; Warning, ignoring invalid type -A

; <<>> DiG 9.16.23-RH <<>> -t -A www.kd.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 28030

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 2, ADDITIONAL: 12

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 4096

; COOKIE: 355ead1a13d08d367a2ff03c67ee7f7dd07b374d4b42b19b (good)

;; QUESTION SECTION:

;www.kd.com. IN A

;; ANSWER SECTION:

www.kd.com. 5 IN CNAME dn.com.

dn.com. 5 IN A 35.173.228.115

;; AUTHORITY SECTION:

dn.com. 5 IN NS hamiltion.dnspod.net.

dn.com. 5 IN NS bed.dnspod.net.

;; ADDITIONAL SECTION:

hamiltion.dnspod.net. 5 IN AAAA 2402:4e00:1470:2::e

hamiltion.dnspod.net. 5 IN A 117.89.178.173

hamiltion.dnspod.net. 5 IN A 1.12.0.4

hamiltion.dnspod.net. 5 IN A 1.14.119.35

hamiltion.dnspod.net. 5 IN A 111.13.13.35

hamiltion.dnspod.net. 5 IN A 112.80.181.45

bed.dnspod.net. 5 IN A 101.227.168.35

bed.dnspod.net. 5 IN A 111.206.98.235

bed.dnspod.net. 5 IN A 129.211.176.239

bed.dnspod.net. 5 IN A 1.12.0.1

bed.dnspod.net. 5 IN A 36.155.149.176

;; Query time: 75 msec

;; SERVER: 192.168.98.2#53(192.168.98.2)

;; WHEN: Thu Apr 03 20:30:53 CST 2025

;; MSG SIZE rcvd: 340
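In both client transcripts the `;; SERVER:` line reads 192.168.98.2, so the answers came from the gateway resolver and not from the lab DNS server at 192.168.98.138; this is why public records for kd.com are returned instead of the records defined in /var/named/kd.com. A small sketch for checking which server answered follows; `dig_server` is a hypothetical helper that only parses dig's text output.

```shell
# Sketch: read dig output on stdin and print the address of the server that answered.
# dig_server is a hypothetical helper; it relies on dig's ";; SERVER: ip#port(...)" line.
dig_server() {
    sed -n 's/^;; SERVER: \([^#]*\)#.*/\1/p'
}

# Example: query the lab server explicitly and confirm that it is the one answering.
# dig -t A www.kd.com @192.168.98.138 | dig_server
```

Passing `@192.168.98.138` on the dig command line, as in section 1.2, removes the dependency on resolver order.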

1.5 Errors

# The zone data file contains a mistake, for example in the $ of $TTL

zone kendra.com/IN: loading from master file kendra.com failed: unexpected end of input

...: zone kendra.com/IN: not loaded due to errors.

# The main configuration file is wrong: the name given in file "kd.com" must match the zone data file (here named after the domain)

zone kd.com/IN: loading from master file kd failed: file not found

Apr 03 20:06:56 dns bash[2208]: zone kd.com/IN: not loaded due to errors.

# Check the zone data file (vim /var/named/kd.com)

[root@dns ~]# named-checkzone kd.com /var/named/kd.com

zone kd.com/IN: loaded serial 0

OK

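2. Setting up a primary server

- Primary server

The authoritative server that maintains the master copy of the zone data is called the primary server. Typically it loads the zone contents from a local file edited by hand, or from a file generated mechanically from some other hand-edited local file. This file is called the zone file or master file. In some cases, however, the zone file is not edited by hand at all and is instead the result of dynamic update operations.

| Hostname | Software | IP | DNS | Role |
|---|---|---|---|---|
| web | nginx | 192.168.72.8 | | Web server |
| dns | bind | 192.168.72.18 | | DNS server |
| client | | 192.168.72.7 | 192.168.72.18 | Client |

Set the client's DNS to the IP address of the DNS server.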

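2.1 Web service

- Install nginx

- Write a welcome page

- Start nginx

- Allow the http service through the firewall

# 1. Install nginx

# 2. Write a welcome page

# 3. Start nginx

# 4. Allow the http service through the firewall

2.2 Configure DNS

- Install bind

- Edit the main configuration file:

vim /etc/named.conf

zone "example.com" IN {
    type master;
    file "example.com"; # relative to the directory option, /var/named in section 1.2
};

- Write the zone data file

- Start the named service

- Allow it through the firewall

- Test resolution

# 1. Install bind

# 2. Edit the main configuration file

# 3. Write the zone data file

# 4. Start the named service

# 5. Allow it through the firewall

# 6. Test resolution

2.3 Client test

Set the client's DNS to the IP address of the DNS server.

- Change the DNS setting:

nmcli d show | grep DNS

nmcli c modify ens160 ipv4.dns <DNS server IP>

nmcli c up ens160

nmcli d show | grep DNS

- Test:

curl www.<domain>

curl <web host IP>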

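3. Setting up primary and secondary servers

keepalived

Keepalived is a service used in cluster management to keep a cluster highly available and to prevent single points of failure.

Disable the firewall and SELinux:

systemctl stop firewalld

setenforce 0

1. keepalived + nginx

| IP address | Hostname | Software | Node |
|---|---|---|---|
| 192.168.88.30 | master | keepalived, nginx | Primary node |
| 192.168.88.32 | backup | keepalived, nginx | Secondary node |
| 192.168.88.100 | | | VIP address |

1.1 Environment preparation

Clone two virtual machines, then change each one's hostname and IP address and disable the firewall and SELinux.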

[root@localhost ~]# hostnamectl hostname master

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.88.30/24 ipv4.gateway 192.168.88.2 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

[root@master ~]# systemctl stop firewalld

[root@localhost ~]# hostnamectl hostname backup

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.88.32/24 ipv4.gateway 192.168.88.2 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

[root@backup ~]# systemctl stop firewalld

1.2 Install nginx

Install nginx on both hosts.

# master

[root@master ~]# dnf install nginx -y

# backup

[root@backup ~]# dnf install nginx -y

To tell the two apart, give each nginx home page different content.

# master

[root@master ~]# echo "master 192.168.88.30" > /usr/share/nginx/html/index.html

# backup

[root@backup ~]# echo "backup 192.168.88.32" > /usr/share/nginx/html/index.html

Start nginx on both hosts:

[root@master ~]# systemctl start nginx

[root@backup ~]# systemctl start nginx

Test:

[root@master ~]# curl 192.168.88.30

master 192.168.88.30

[root@backup ~]# curl 192.168.88.32

backup 192.168.88.32

1.3 Install keepalived

Install it on both hosts.

[root@master ~]# dnf install keepalived -y

[root@backup ~]# dnf install keepalived -y

Configure keepalived

# Locate the configuration files

[root@master ~]# rpm -qc keepalived

/etc/keepalived/keepalived.conf # configuration file

/etc/sysconfig/keepalived

# Back up the configuration file

[root@master ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@master ~]# ls /etc/keepalived/

keepalived.conf keepalived.conf.bak

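# Edit the configuration file

[root@master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

# Global settings
global_defs {
    router_id master # identifier of this node, used in the subject of notification e-mails; usually set to the hostname
}

# Virtual IP configuration
vrrp_instance VI_1 {
    state MASTER # initial state only; it does not decide which node finally becomes master. Set it to BACKUP on the other host
    interface ens160 # network interface; check with ip a
    virtual_router_id 51 # virtual router ID; usually left unchanged, must be identical on master and backup
    priority 100 # election priority: the node with the higher value becomes master, the lower one backup
    advert_int 1 # interval between VRRP advertisements, in seconds
    authentication { # credentials used between keepalived nodes
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IPs; when there are several, write one per line
    virtual_ipaddress {
        192.168.88.100
    }
}

After editing, copy the files to the backup node: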

[root@master ~]# scp /etc/keepalived/* root@192.168.88.32:/etc/keepalived/

The authenticity of host '192.168.88.32 (192.168.88.32)' can't be established.

ED25519 key fingerprint is SHA256:IXJJgIYtrMMZ4EALsHR1+6xQNFLC6sUdQWpDW1Ub3fk.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.88.32' (ED25519) to the list of known hosts.

root@192.168.88.32's password:

keepalived.conf 100% 320 257.9KB/s 00:00

keepalived.conf.bak 100% 3550 1.2MB/s 00:00

Edit the configuration file on the secondary node:

[root@backup ~]# ls /etc/keepalived/

keepalived.conf keepalived.conf.bak

[root@backup ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id backup

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.100

}

}
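The master and backup files differ only in `router_id`, `state` and `priority`, so both can be rendered from one template instead of being copied and edited by hand. A minimal sketch follows; `render_keepalived` is a hypothetical helper, and the fixed values (virtual_router_id 51, auth_pass 1111) are taken from the configuration above.

```shell
# Sketch: print a keepalived.conf for one node of a two-node VRRP pair.
# render_keepalived is a hypothetical helper name.
render_keepalived() {
    router_id="$1"   # e.g. master or backup
    state="$2"       # MASTER or BACKUP
    priority="$3"    # higher value wins the election
    iface="$4"       # e.g. ens160
    vip="$5"         # e.g. 192.168.88.100
    cat <<EOF
global_defs {
    router_id $router_id
}
vrrp_instance VI_1 {
    state $state
    interface $iface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}

# Example:
# render_keepalived master MASTER 100 ens160 192.168.88.100 > /etc/keepalived/keepalived.conf
# render_keepalived backup BACKUP 90  ens160 192.168.88.100   # run on the backup host
```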

Start the keepalived service on both hosts:

[root@master ~]# systemctl start keepalived

[root@backup ~]# systemctl start keepalived

Once started, check the IP addresses:

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:92:0e:25 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.88.30/24 brd 192.168.88.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.88.100/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe92:e25/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:d8:c0:8c brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.88.32/24 brd 192.168.88.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fed8:c08c/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Stop the keepalived service on the master node, then check its IP addresses:

[root@master ~]# systemctl stop keepalived.service

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:92:0e:25 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.88.30/24 brd 192.168.88.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe92:e25/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Observation: the master no longer holds the virtual IP.

Now check the IP addresses on the backup node:

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:d8:c0:8c brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.88.32/24 brd 192.168.88.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.88.100/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fed8:c08c/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Observation: the virtual IP has floated over to the secondary node.
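Scanning the full `ip a` output by eye gets tedious when the failover test is repeated. The check can be reduced to a single yes/no answer, as in this sketch; `has_vip` is a hypothetical helper that reads `ip` output on stdin.

```shell
# Sketch: succeed if the given address appears as an IPv4 address in "ip a" output on stdin.
# has_vip is a hypothetical helper name.
has_vip() {
    vip="$1"
    # Match "inet <vip>/" so that 192.168.88.10 does not match 192.168.88.100.
    grep -qF "inet $vip/"
}

# Example, run on each node before and after stopping keepalived on the master:
# ip -4 addr show ens160 | has_vip 192.168.88.100 && echo "this node holds the VIP"
```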

2. keepalived + nginx + tomcat high availability

| Role | Hostname | Software | IP address |
|---|---|---|---|
| User | client | | 192.168.98.90 |
| keepalived | vip | | 192.168.98.100 |
| master | master | keepalived, nginx | 192.168.98.31 |
| backup | backup | keepalived, nginx | 192.168.98.32 |
| web | tomcat1 | tomcat | 192.168.98.41 |
| web | tomcat2 | tomcat | 192.168.98.42 |

2.1 搭建tomcat、修改tomcat的主页

-

下载JDK21,解压

oracle的JDK,进入oracle官网: oracle,复制链接,回到虚拟机wget下载

下载:wget https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.tar.gz

解压:tar -zxf jdk-21_linux-x64_bin.tar.gz -C /usr/local,一般安装软件解压目录都为/usr/local,-C:指定解压的目录,z:表示gz这种格式,f:必须放在后面,f后面必须跟要解压的文件

-

下载tomcat,解压

进入tomcat官网: tomcat,复制链接,回到虚拟机wget下载

下载:wget https://dlcdn.apache.org/tomcat/tomcat-11/v11.0.5/bin/apache-tomcat-11.0.5.tar.gz

解压:tar -zxf apache-tomcat-11.0.5.tar.gz -C /usr/local/

-

配置 JDK /etc/profile

vim /etc/profile在末行添加一下内容

export JAVA_HOME=/usr/local/jdk-21.0.6/,export:导出JAVA的安装目录

export PATH=$PATH:$JAVA_HOME/bin,要将JAVA_HOME这个命令放到PATH中

export TOMCAT_HOME=/usr/local/apache-tomcat-11.0.5/

export PATH=$PATH:$TOMCAT_HOME/bin -

刷新文件让配置生效,验证JDK是否安装成功、tomcat是否配置成功

source /etc/profile

java -version -

启动tomcat

startup.sh

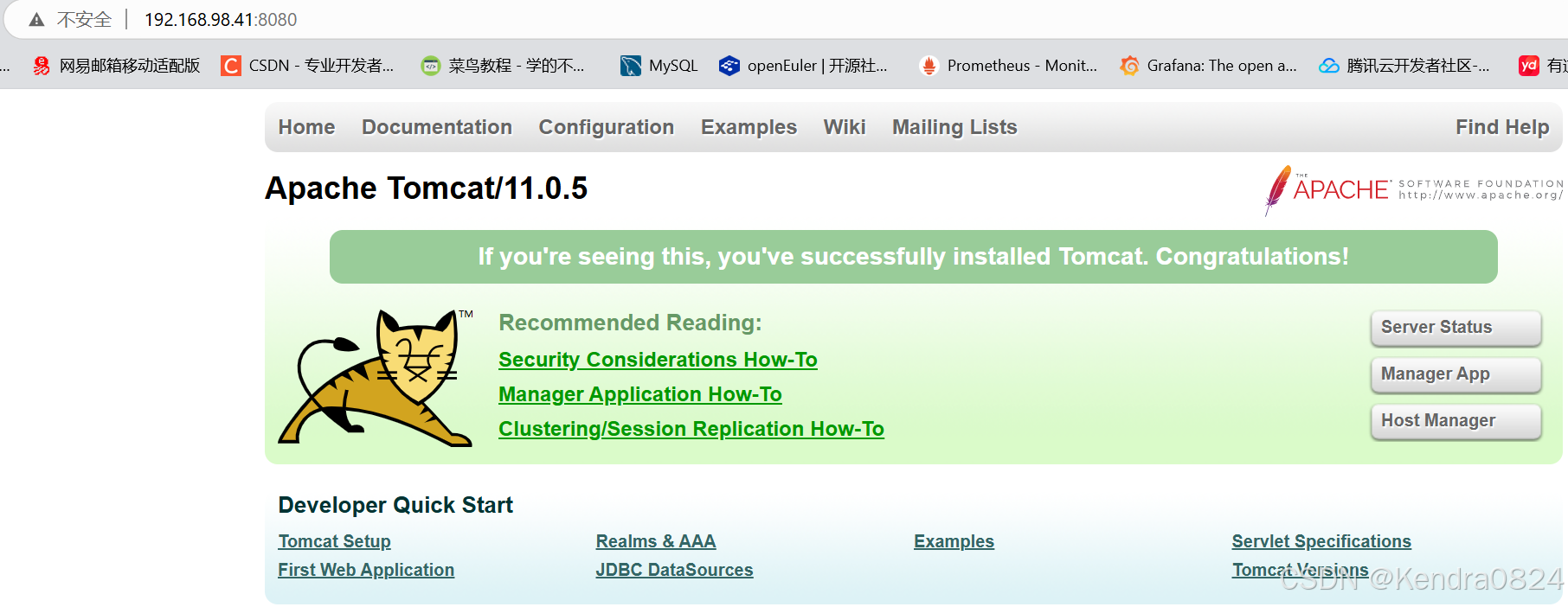

配置好,打开浏览器,输入 http://IP:8080,如果能够看到猫,表示tomcat安装成功

- 修改tomcat的主页

cd /usr/local/apache-tomcat-11.0.5/webapps/

rm -rf docs examples host-manager manager

cd ROOT/

rm -rf *

vim index.jsp(vim /usr/local/apache-tomcat-11.0.5/webapps/ROOT/index.jsp) 输入内容:tomcat1 IP - curl IP:8080

查看tomcat主页内容

# 1. Download JDK 21 and extract it

[root@tomcat1 ~]# wget https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.tar.gz

......

# List the downloaded file

[root@tomcat1 ~]# ls

anaconda-ks.cfg jdk-21_linux-x64_bin.tar.gz

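# Extract JDK 21

[root@tomcat1 ~]# tar -zxf jdk-21_linux-x64_bin.tar.gz -C /usr/local

# Software is commonly extracted under /usr/local

# -C: target directory; without it the archive is extracted into the current directory

# z: the archive is gzip-compressed

# x: extract

# f: must come last in the option group, followed directly by the archive file name

[root@tomcat1 ~]# ls /usr/local

bin etc games include jdk-21.0.6 lib lib64 libexec sbin share src

[root@tomcat1 ~]# ls /usr/local/jdk-21.0.6/ # this directory is used later in /etc/profile

bin conf include jmods legal lib LICENSE man README release

# bin: executable binaries; conf: configuration files; include: C header files; legal: legal notices

# 2. Download tomcat and extract it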

[root@tomcat1 ~]# wget https://dlcdn.apache.org/tomcat/tomcat-11/v11.0.5/bin/apache-tomcat-11.0.5.tar.gz

.......

# Extract tomcat

[root@tomcat1 ~]# tar -zxf apache-tomcat-11.0.5.tar.gz -C /usr/local/

[root@tomcat1 ~]# cd /usr/local/

[root@tomcat1 local]# ls

apache-tomcat-11.0.5 bin etc games include jdk-21.0.6 lib lib64 libexec sbin share src

[root@tomcat1 local]# ls /usr/local/apache-tomcat-11.0.5/ # this directory is used later in /etc/profile

bin conf lib logs README.md RUNNING.txt webapps

BUILDING.txt CONTRIBUTING.md LICENSE NOTICE RELEASE-NOTES temp work

# 3. Configure the JDK: run vim /etc/profile and append the following lines

[root@tomcat1 ~]# vim /etc/profile

export JAVA_HOME=/usr/local/jdk-21.0.6/ # export the Java installation directory

export PATH=$PATH:$JAVA_HOME/bin # append the JDK's bin directory to PATH

# $PATH: reuses the existing value of PATH

export TOMCAT_HOME=/usr/local/apache-tomcat-11.0.5/

export PATH=$PATH:$TOMCAT_HOME/bin

# 4. Reload the file so the settings take effect, then verify the JDK and tomcat setup

# Reload the file

[root@tomcat1 ~]# source /etc/profile

# Verify that the JDK is installed

[root@tomcat1 ~]# java -version

java version "21.0.6" 2025-01-21 LTS

Java(TM) SE Runtime Environment (build 21.0.6+8-LTS-188)

Java HotSpot(TM) 64-Bit Server VM (build 21.0.6+8-LTS-188, mixed mode, sharing)

# 5. Start tomcat: startup.sh

[root@tomcat1 ~]# startup.sh

Using CATALINA_BASE: /usr/local/apache-tomcat-11.0.5

Using CATALINA_HOME: /usr/local/apache-tomcat-11.0.5

Using CATALINA_TMPDIR: /usr/local/apache-tomcat-11.0.5/temp

Using JRE_HOME: /usr/local/jdk-21.0.6/

Using CLASSPATH: /usr/local/apache-tomcat-11.0.5/bin/bootstrap.jar:/usr/local/apache-tomcat-11.0.5/bin/tomcat-juli.jar

Using CATALINA_OPTS:

Tomcat started.

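[root@tomcat1 ~]# systemctl stop firewalld

Once configured and with the firewall stopped, open a browser and go to http://IP:8080. If the Tomcat cat logo appears, tomcat is installed correctly.

# 6. Change the tomcat home page: delete the original files under ROOT first, then create /usr/local/apache-tomcat-11.0.5/webapps/ROOT/index.jsp containing: tomcat1 IP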

[root@tomcat1 ~]# cd /usr/local/apache-tomcat-11.0.5/webapps/

[root@tomcat1 webapps]# ls

docs examples host-manager manager ROOT

[root@tomcat1 webapps]# rm -rf docs examples host-manager manager

[root@tomcat1 webapps]# ls

ROOT

[root@tomcat1 webapps]# cd ROOT/

[root@tomcat1 ROOT]# ls

asf-logo-wide.svg bg-middle.png bg-upper.png index.jsp tomcat.css WEB-INF

bg-button.png bg-nav.png favicon.ico RELEASE-NOTES.txt tomcat.svg

[root@tomcat1 ROOT]# rm -rf *

[root@tomcat1 ROOT]# vim index.jsp

[root@tomcat1 ROOT]# cat index.jsp

tomcat1 192.168.98.41

# 7. Run curl IP:8080 to view the tomcat home page

[root@tomcat1 ROOT]# curl 192.168.98.41:8080

tomcat1 192.168.98.41

- tomcat2

[root@tomcat2 ~]# wget https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.tar.gz

--2025-03-25 22:23:38-- https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.tar.gz

......

jdk-21_linux-x64_bin.tar.g 100%[========================================>] 188.26M 1.45MB/s in 2m 23s

2025-03-25 22:26:02 (1.32 MB/s) - ‘jdk-21_linux-x64_bin.tar.gz’ saved [197405999/197405999]

[root@tomcat2 ~]# ls

anaconda-ks.cfg jdk-21_linux-x64_bin.tar.gz

[root@tomcat2 ~]# tar -zxf jdk-21_linux-x64_bin.tar.gz -C /usr/local

[root@tomcat2 ~]# ls /usr/local

bin etc games include jdk-21.0.6 lib lib64 libexec sbin share src

[root@tomcat2 ~]# ls /usr/local/jdk-21.0.6/

bin conf include jmods legal lib LICENSE man README release

[root@tomcat2 ~]# wget https://dlcdn.apache.org/tomcat/tomcat-11/v11.0.5/bin/apache-tomcat-11.0.5.tar.gz

--2025-03-25 22:27:53-- https://dlcdn.apache.org/tomcat/tomcat-11/v11.0.5/bin/apache-tomcat-11.0.5.tar.gz

.....

[root@tomcat2 ~]# tar -zxf apache-tomcat-11.0.5.tar.gz -C /usr/local/

[root@tomcat2 ~]# vim /etc/profile

[root@tomcat2 ~]# source /etc/profile

[root@tomcat2 ~]# java -version

java version "21.0.6" 2025-01-21 LTS

Java(TM) SE Runtime Environment (build 21.0.6+8-LTS-188)

Java HotSpot(TM) 64-Bit Server VM (build 21.0.6+8-LTS-188, mixed mode, sharing)

[root@tomcat2 ~]# startup.sh

Using CATALINA_BASE: /usr/local/apache-tomcat-11.0.5

Using CATALINA_HOME: /usr/local/apache-tomcat-11.0.5

Using CATALINA_TMPDIR: /usr/local/apache-tomcat-11.0.5/temp

Using JRE_HOME: /usr/local/jdk-21.0.6/

Using CLASSPATH: /usr/local/apache-tomcat-11.0.5/bin/bootstrap.jar:/usr/local/apache-tomcat-11.0.5/bin/tomcat-juli.jar

Using CATALINA_OPTS:

Tomcat started.

[root@tomcat2 ~]# systemctl stop firewalld

[root@tomcat2 ~]# rm -rf /usr/local/apache-tomcat-11.0.5/webapps/ROOT/index.jsp

[root@tomcat2 ~]# vim /usr/local/apache-tomcat-11.0.5/webapps/ROOT/index.jsp

[root@tomcat2 ~]# cat /usr/local/apache-tomcat-11.0.5/webapps/ROOT/index.jsp

tomcat2 192.168.98.42

[root@tomcat2 ~]# curl 192.168.98.42:8080

tomcat2 192.168.98.42

- Configuration complete

2.2 nginx (master, backup)

- Install nginx

- Configure /etc/nginx/conf.d/master.conf:

upstream tomcat {
    server <tomcat1 IP>:8080;
    server <tomcat2 IP>:8080;
}

server {
    listen 80;
    server_name <this host's IP>;
    access_log /var/log/nginx/master_access.log;
    error_log /var/log/nginx/master_error.log;
    location / {
        proxy_pass http://tomcat;
    }
}

- Start nginx: systemctl start nginx

- Test: curl http://<nginx host IP> should return the tomcat home page

# 1. Install nginx

[root@master ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@master ~]# dnf install nginx -y

........

# 2. Configure /etc/nginx/conf.d/master.conf

[root@master ~]# vim /etc/nginx/conf.d/master.conf

[root@master ~]# cat /etc/nginx/conf.d/master.conf

upstream tomcat1 {

server 192.168.98.41:8080; # tomcat host IPs

server 192.168.98.42:8080;

}

server {

listen 80;

server_name 192.168.98.31; # this host's IP; change it to the virtual IP later, when keepalived VIP failover is set up

access_log /var/log/nginx/master_access.log;

error_log /var/log/nginx/master_error.log;

location / {

proxy_pass http://tomcat1;

}

}

# 3. Start nginx

[root@master ~]# systemctl start nginx

# 4. Test: curl http://<nginx host IP> should return the tomcat home page

[root@master ~]# curl http://192.168.98.31

<html>

<head><title>502 Bad Gateway</title></head>

<body>

<center><h1>502 Bad Gateway</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master ~]# setenforce 0

[root@master ~]# curl http://192.168.98.31

<html>

<head><title>502 Bad Gateway</title></head>

<body>

<center><h1>502 Bad Gateway</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master ~]# systemctl stop firewalld

[root@master ~]# curl http://192.168.98.31

tomcat2 192.168.98.42

[root@master ~]# curl http://192.168.98.31

tomcat1 192.168.98.41

- backup host:

[root@backup ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@backup ~]# dnf install nginx -y

[root@backup ~]# vim /etc/nginx/conf.d/backup.conf

[root@backup ~]# cat /etc/nginx/conf.d/backup.conf

upstream tomcat2 {

server 192.168.98.41:8080;

server 192.168.98.42:8080;

}

server {

listen 80;

server_name 192.168.98.32;

access_log /var/log/nginx/backup_access.log;

error_log /var/log/nginx/backup_error.log;

location / {

proxy_pass http://tomcat2;

}

}

[root@backup ~]# systemctl start nginx

[root@backup ~]# setenforce 0

[root@backup ~]# systemctl stop firewalld

[root@backup ~]# curl http://192.168.98.32

tomcat1 192.168.98.41

[root@backup ~]# curl http://192.168.98.32

tomcat2 192.168.98.42
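The alternating responses in the transcripts above are consistent with nginx's default round-robin balancing across the two upstream servers. To check the distribution over more than two requests, the responses can be tallied, as in this sketch; `tally_responses` is a hypothetical helper that counts identical lines on stdin.

```shell
# Sketch: count how often each distinct response line appears on stdin.
# tally_responses is a hypothetical helper name; output is "<count> <line>", sorted by line.
tally_responses() {
    awk '{ count[$0]++ } END { for (line in count) print count[line], line }' | sort -k2
}

# Example: send ten requests through the proxy and count the answers per backend.
# for i in 1 2 3 4 5 6 7 8 9 10; do curl -s http://192.168.98.31; done | tally_responses
```

With both tomcat hosts healthy and equal weights, the two counts should come out roughly equal.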

2.3 keepalived (master, backup)

- Install keepalived

- Configure /etc/keepalived/keepalived.conf: remove the unneeded statements and set the virtual IP to 192.168.98.100

- Start keepalived with systemctl start keepalived, then run ip a show ens160 to see the virtual IP on the host

# 1. Install keepalived

[root@master ~]# dnf install keepalived -y

# 2. Configure /etc/keepalived/keepalived.conf

[root@master ~]# vim /etc/keepalived/keepalived.conf

[root@master ~]# cat /etc/keepalived/keepalived.conf

global_defs {

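router_id LVS_master # use a different name on each node

}

vrrp_instance VI_1 {

state MASTER # MASTER on this node, BACKUP on the standby node

interface ens160 # use your own NIC name; check it with ip a or nmcli connection show

virtual_router_id 51 # must be the same on every node of one VRRP instance and must differ between instances

priority 100 # higher wins; nodes sharing a virtual IP must not use the same priority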

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

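192.168.98.100 # virtual IP

}

}

For example, in the three-master hot-standby setup each node carries three such vrrp_instance blocks, one per virtual IP. The blocks are otherwise identical and differ only in state, virtual_router_id, priority, and virtual IP.

# 3. Start the keepalived service, then check the host's virtual IP with ip a show ens160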

[root@master ~]# systemctl start keepalived

[root@master ~]# ip a show ens160

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:2a:3f:65 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.98.31/24 brd 192.168.98.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.98.100/32 scope global ens160 # virtual IP

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe2a:3f65/64 scope link noprefixroute

valid_lft forever preferred_lft forever

- backup host:

[root@backup ~]# dnf install keepalived -y

[root@backup ~]# vim /etc/keepalived/keepalived.conf

[root@backup ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_backup

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 51

priority 90 # lower than the master's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.98.100

}

}

[root@backup ~]# systemctl start keepalived

[root@backup ~]# ip a show ens160

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:25:66:fb brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.98.32/24 brd 192.168.98.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe25:66fb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# At this point the backup host does not hold the virtual IP. If the service on either host fails, the virtual IP floats to the healthy host.
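Whether a node currently holds the virtual IP can also be checked from a script rather than by reading the `ip a` output by eye. The following is a small sketch: `has_vip` is a hypothetical helper name, and it assumes the one-line-per-address output format of `ip -4 -o addr show`.

```shell
# has_vip VIP: read `ip -4 -o addr show` output on stdin and
# succeed only when VIP is one of the assigned addresses.
has_vip() {
    # The trailing "/" keeps 192.168.98.10 from matching 192.168.98.100.
    grep -qF "inet $1/"
}

# Example call on either node (left commented out; needs the lab NIC):
# ip -4 -o addr show dev ens160 | has_vip 192.168.98.100 && echo "this node holds the VIP"
```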

At this point, if only the nginx service fails, requests to the virtual IP return an error

[root@master ~]# systemctl stop nginx

[root@master ~]# curl 192.168.98.100

curl: (7) Failed to connect to 192.168.98.100 port 80: Connection refused

The next step is a check script that provides high availability for nginx.

Configure nginx high availability

- In /etc/nginx/conf.d/master.conf, change server_name to the virtual IP

- Restart the nginx service

systemctl restart nginx

- Write the check script /etc/keepalived/check_nginx.sh

vim /etc/keepalived/check_nginx.sh

- Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

- Edit /etc/keepalived/keepalived.conf: define the check script and reference it from inside the vrrp_instance block. Content to add:

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

track_script {

chk_nginx

}

- Restart the keepalived service

# 1. In /etc/nginx/conf.d/master.conf, change server_name to the virtual IP

[root@master ~]# vim /etc/nginx/conf.d/master.conf

[root@master ~]# cat /etc/nginx/conf.d/master.conf

upstream tomcat1 {

server 192.168.98.41:8080;

server 192.168.98.42:8080;

}

server {

listen 80;

server_name 192.168.98.100; # virtual IP

access_log /var/log/nginx/master_access.log;

error_log /var/log/nginx/master_error.log;

location / {

proxy_pass http://tomcat1;

}

}

# 2. Restart the nginx service

[root@master ~]# systemctl restart nginx

# 3. Write the check script /etc/keepalived/check_nginx.sh

[root@master ~]# vim /etc/keepalived/check_nginx.sh

[root@master ~]# cat /etc/keepalived/check_nginx.sh

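#!/bin/bash

# Count the running nginx processes

counter=$(ps -C nginx --no-header | wc -l)

if [ "$counter" -eq 0 ]; then # nginx is not running

systemctl start nginx # try to start it once

# Count again instead of reusing $counter, which still holds the value from before the start attempt

if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then

systemctl stop keepalived # nginx did not come back: stop keepalived so the virtual IP floats to the backup

fi

fi

ps -C nginx --no-header # list the nginx processes without the header line

# pidof also lists the nginx PIDs (all on one line):

[root@master ~]# pidof nginx

5530 5529 5528 5527 5526

wc -l # count lines

- Commands used in the script:

[root@master ~]# ps -C nginx # list the nginx processes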

PID TTY TIME CMD

5526 ? 00:00:00 nginx

5527 ? 00:00:00 nginx

5528 ? 00:00:00 nginx

5529 ? 00:00:00 nginx

5530 ? 00:00:00 nginx

[root@master ~]# ps -C nginx --no-header # list the nginx processes without the header line

5526 ? 00:00:00 nginx

5527 ? 00:00:00 nginx

5528 ? 00:00:00 nginx

5529 ? 00:00:00 nginx

5530 ? 00:00:00 nginx

[root@master ~]# ps -C nginx --no-header|wc -l # count the lines

5 # a count of 0 means nginx is not running
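The check script above stops keepalived when nginx cannot be started again. An alternative, sketched here under assumptions and not taken from these notes, is to report health through the exit status and let keepalived react: keepalived documents a non-zero exit status from a `vrrp_script` as a failed check. The function names `nginx_running` and `check_nginx` are hypothetical, and the one-second wait is an arbitrary choice.

```shell
#!/bin/bash
# Sketch of an exit-status based health check (assumption: installed as the
# vrrp_script target in place of the script that stops keepalived).

# nginx_running: succeed when at least one process named exactly "nginx" exists.
nginx_running() {
    pgrep -x nginx > /dev/null
}

# check_nginx: try one start, then report health through the return status.
check_nginx() {
    nginx_running && return 0
    systemctl start nginx 2> /dev/null
    sleep 1
    nginx_running   # 0 = healthy, non-zero = check failed
}
```

To use it as the script body, end the file with a bare `check_nginx` call so that the script's exit status is the function's return status.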

# 4. Make the script executable

[root@master ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@master ~]# ll /etc/keepalived/check_nginx.sh

-rwxr-xr-x. 1 root root 188 Mar 26 22:20 /etc/keepalived/check_nginx.sh

# 5. Edit /etc/keepalived/keepalived.conf: define the check script and reference it from the VRRP instance

[root@master ~]# vim /etc/keepalived/keepalived.conf

[root@master ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_master

}

vrrp_script chk_nginx { # added

script "/etc/keepalived/check_nginx.sh"

interval 2 # run every 2 seconds

}

vrrp_instance VI_1 {

state MASTER

interface ens160

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.98.100

}

track_script {

chk_nginx # added

}

}

# 6. Restart the keepalived service

[root@master ~]# systemctl restart keepalived

- backup host:

[root@backup ~]# vim /etc/nginx/conf.d/backup.conf

[root@backup ~]# systemctl restart nginx

[root@backup ~]# vim /etc/keepalived/check_nginx.sh

[root@backup ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@backup ~]# vim /etc/keepalived/keepalived.conf

[root@backup ~]# systemctl restart keepalived

Test high availability

At this point both hosts are up and serving normally:

# master host

[root@master ~]# systemctl restart keepalived

[root@master ~]# systemctl restart nginx

[root@master ~]# curl 192.168.98.100

tomcat2 192.168.98.42

[root@master ~]# curl 192.168.98.100

tomcat1 192.168.98.41

# backup host

[root@backup ~]# systemctl restart keepalived

[root@backup ~]# systemctl restart nginx

[root@backup ~]# curl 192.168.98.100

tomcat1 192.168.98.41

[root@backup ~]# curl 192.168.98.100

tomcat2 192.168.98.42

- Stop the nginx service on the master host

[root@master ~]# systemctl stop nginx

[root@master ~]# curl 192.168.98.100

tomcat1 192.168.98.41

[root@master ~]# curl 192.168.98.100

tomcat2 192.168.98.42

After nginx is stopped, the check script runs and starts nginx again automatically.

- Stop keepalived and nginx

[root@master ~]# systemctl stop keepalived

[root@master ~]# curl 192.168.98.100

tomcat1 192.168.98.41

[root@master ~]# curl 192.168.98.100

tomcat2 192.168.98.42

[root@master ~]# systemctl stop nginx

[root@master ~]# curl 192.168.98.100

tomcat1 192.168.98.41

[root@master ~]# curl 192.168.98.100

tomcat2 192.168.98.42

[root@master ~]# ps -ef | grep nginx

root 5498 1470 0 22:46 pts/0 00:00:00 grep --color=auto nginx

[root@master ~]# systemctl start keepalived

[root@master ~]# ps -ef | grep nginx

root 5526 1 0 22:46 ? 00:00:00 nginx: master process /usr/sbin/nginx

nginx 5527 5526 0 22:46 ? 00:00:00 nginx: worker process

nginx 5528 5526 0 22:46 ? 00:00:00 nginx: worker process

nginx 5529 5526 0 22:46 ? 00:00:00 nginx: worker process

nginx 5530 5526 0 22:46 ? 00:00:00 nginx: worker process

root 5539 1470 0 22:46 pts/0 00:00:00 grep --color=auto nginx

This completes the keepalived + nginx + tomcat high-availability setup.

2.4 Errors

- After nginx starts successfully, curl to this host's IP returns 502 Bad Gateway instead of the Tomcat page

setenforce 0

[root@master ~]# vim /etc/nginx/nginx.conf

[root@master ~]# systemctl restart nginx

[root@master ~]# curl http://192.168.98.31

<html>

<head><title>502 Bad Gateway</title></head>

<body>

<center><h1>502 Bad Gateway</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master ~]# setenforce 0

[root@master ~]# curl http://192.168.98.31

<html>

<head><title>502 Bad Gateway</title></head>

<body>

<center><h1>502 Bad Gateway</h1></center>

<hr><center>nginx/1.20.1</center>

</body>

</html>

[root@master ~]# systemctl stop firewalld

[root@master ~]# curl http://192.168.98.31

tomcat2 192.168.98.42

[root@master ~]# curl http://192.168.98.31

tomcat1 192.168.98.41

3. Three-master hot-standby architecture

| Role | Hostname | Software | IP address |

|---|---|---|---|

| user | client | | 192.168.88.90 |

| keepalived | vip | | 192.168.88.100 |

| keepalived | vip | | 192.168.88.101 |

| keepalived | vip | | 192.168.88.102 |

| master | master | keepalived, nginx | 192.168.88.20 |

| backup | backup1 | keepalived, nginx | 192.168.88.30 |

| backup | backup2 | keepalived, nginx | 192.168.88.35 |

| web | tomcat1 | tomcat | 192.168.88.40 |

| web | tomcat2 | tomcat | 192.168.88.50 |

| web | tomcat3 | tomcat | 192.168.88.55 |

| DNS round-robin | dns | nginx | 192.168.88.10 |

Configuration of the first node, serverA:

Vrrp instance 1: MASTER, priority 100, VIP 192.168.88.100

Vrrp instance 2: BACKUP, priority 80, VIP 192.168.88.101

Vrrp instance 3: BACKUP, priority 60, VIP 192.168.88.102

Configuration of the second node, serverB:

Vrrp instance 1: BACKUP, priority 60

Vrrp instance 2: MASTER, priority 100

Vrrp instance 3: BACKUP, priority 80

Configuration of the third node, serverC:

Vrrp instance 1: BACKUP, priority 80

Vrrp instance 2: BACKUP, priority 60

Vrrp instance 3: MASTER, priority 100
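The priority plan determines which node holds each virtual IP: within one VRRP instance, the surviving node with the highest priority becomes master. The sketch below works this out for the plan above, with and without one failed node. It is a simplified model of the election only; `vip_owners` and the `plan` variable are hypothetical names, and preemption timing and other VRRP details are ignored.

```shell
# Priority plan from the text: node, VRRP instance, priority.
plan='serverA 1 100
serverA 2 80
serverA 3 60
serverB 1 60
serverB 2 100
serverB 3 80
serverC 1 80
serverC 2 60
serverC 3 100'

# vip_owners [FAILED_NODE]: print "<instance> <owner>" for each instance,
# picking the surviving node with the highest priority.
vip_owners() {
    local failed="${1:-}"
    printf '%s\n' "$plan" | awk -v failed="$failed" '
        $1 != failed && ($3 + 0) > (best[$2] + 0) { best[$2] = $3; owner[$2] = $1 }
        END { for (i = 1; i <= 3; i++) print i, owner[i] }'
}

vip_owners            # all nodes healthy
vip_owners serverA    # serverA down: its VIP should move to serverC (priority 80)
```

With this staggered plan, the instance of a failed node moves to one surviving node while the other two instances stay where they are.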

3.1 Install tomcat1, tomcat2, and tomcat3

setenforce 0

systemctl stop firewalld

# tomcat1: IP:192.168.88.40

wget https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.tar.gz

tar -zxf jdk-21_linux-x64_bin.tar.gz -C /usr/local

wget https://dlcdn.apache.org/tomcat/tomcat-11/v11.0.5/bin/apache-tomcat-11.0.5.tar.gz

tar -zxf apache-tomcat-11.0.5.tar.gz -C /usr/local/

vim /etc/profile

export JAVA_HOME=/usr/local/jdk-21.0.6/

export PATH=$PATH:$JAVA_HOME/bin

export TOMCAT_HOME=/usr/local/apache-tomcat-11.0.5/

export PATH=$PATH:$TOMCAT_HOME/bin

source /etc/profile

java -version

startup.sh

# Copy the JDK installation directory to tomcat2 and tomcat3

scp -r /usr/local/jdk-21.0.6/ root@192.168.88.50:/usr/local/ > /dev/null

scp -r /usr/local/jdk-21.0.6/ root@192.168.88.55:/usr/local/ > /dev/null

# Copy the Tomcat installation directory to tomcat2 and tomcat3

scp -r /usr/local/apache-tomcat-11.0.5/ root@192.168.88.50:/usr/local/ > /dev/null

scp -r /usr/local/apache-tomcat-11.0.5/ root@192.168.88.55:/usr/local/ > /dev/null

cd /usr/local/apache-tomcat-11.0.5/webapps/

ls

rm -rf docs examples host-manager manager

ls

cd ROOT/

rm -rf *

ls

vim index.jsp

# Content (one line, different on each host):

tomcat1 192.168.88.40 (on the tomcat1 host)

tomcat2 192.168.88.50 (on the tomcat2 host)

tomcat3 192.168.88.55 (on the tomcat3 host)

3.2 Set up nginx and keepalived (master, backup1, backup2)

[root@backup1 ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@master ~]# dnf install nginx keepalived -y

[root@backup1 ~]# dnf install nginx keepalived -y

[root@backup2 ~]# dnf install nginx keepalived -y

- Configure nginx

- Create the configuration file under /etc/nginx/conf.d

- Start the service

# master host

[root@master ~]# vim /etc/nginx/conf.d/master.conf

[root@master ~]# cat /etc/nginx/conf.d/master.conf

upstream tomcat1 {

server 192.168.88.40:8080;

server 192.168.88.50:8080;

server 192.168.88.55:8080;

}

server {

listen 80;

server_name 192.168.88.100;

access_log /var/log/nginx/master_access.log;

error_log /var/log/nginx/master_error.log;

location / {

proxy_pass http://tomcat1;

}

}

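# Start the nginx service

[root@master ~]# systemctl start nginx

# backup1 host (the file needs the .conf suffix to match the conf.d/*.conf include in nginx.conf)

[root@backup1 ~]# vim /etc/nginx/conf.d/backup1.conf

[root@backup1 ~]# cat /etc/nginx/conf.d/backup1.conf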

upstream tomcat2 {

server 192.168.88.40:8080;

server 192.168.88.50:8080;

server 192.168.88.55:8080;

}

server {

listen 80;

server_name 192.168.88.101;

access_log /var/log/nginx/master_access.log;

error_log /var/log/nginx/master_error.log;

location / {

proxy_pass http://tomcat2;

}

}

[root@backup1 ~]# systemctl start nginx

# backup2 host

[root@backup2 ~]# vim /etc/nginx/conf.d/backup2.conf

[root@backup2 ~]# cat /etc/nginx/conf.d/backup2.conf

upstream tomcat3 {

server 192.168.88.40:8080;

server 192.168.88.50:8080;

server 192.168.88.55:8080;

}

server {

listen 80;

server_name 192.168.88.102;

access_log /var/log/nginx/master_access.log;

error_log /var/log/nginx/master_error.log;

location / {

proxy_pass http://tomcat3;

}

}

[root@backup2 ~]# systemctl start nginx

- Set up Keepalived

- Configure Keepalived in /etc/keepalived/keepalived.conf

- Start the service

- Write the check script /etc/keepalived/check_nginx.sh

- Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

ll /etc/keepalived/check_nginx.sh shows the permissions

- Edit /etc/keepalived/keepalived.conf and add the script section

# master host

[root@master ~]# vim /etc/keepalived/keepalived.conf

[root@master ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_master

}

vrrp_instance VI_1 {

state MASTER

interface ens160

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.100

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens160

virtual_router_id 52

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.101

}

}

vrrp_instance VI_3 {

state BACKUP

interface ens160

virtual_router_id 53

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.102

}

}

- Write the check script

# 3. Write the check script /etc/keepalived/check_nginx.sh

[root@master ~]# vim /etc/keepalived/check_nginx.sh

[root@master ~]# cat /etc/keepalived/check_nginx.sh

#!/bin/bash

counter=$(ps -C nginx --no-header|wc -l)

if [ $counter -eq 0 ]; then

systemctl start nginx

if [ `ps -C nginx --no-header|wc -l` -eq 0 ]; then

systemctl stop keepalived

fi

fi

[root@master ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@master ~]# ll /etc/keepalived/check_nginx.sh

-rwxr-xr-x. 1 root root 191 Mar 21 22:49 /etc/keepalived/check_nginx.sh

# Copy the script to the backup hosts

scp /etc/keepalived/check_nginx.sh root@192.168.88.30:/etc/keepalived/

scp /etc/keepalived/check_nginx.sh root@192.168.88.35:/etc/keepalived/

[root@master ~]# scp /etc/keepalived/check_nginx.sh root@192.168.88.30:/etc/keepalived/

The authenticity of host '192.168.88.30 (192.168.88.30)' can't be established.

ED25519 key fingerprint is SHA256:I3/lsrnTEnXOE3LFvTLRUXAJ+AhSVrIEWtqTnleRz9w.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.88.30' (ED25519) to the list of known hosts.

root@192.168.88.30's password:

check_nginx.sh 100% 191 359.3KB/s 00:00

[root@master ~]# scp /etc/keepalived/check_nginx.sh root@192.168.88.35:/etc/keepalived/

The authenticity of host '192.168.88.35 (192.168.88.35)' can't be established.

ED25519 key fingerprint is SHA256:I3/lsrnTEnXOE3LFvTLRUXAJ+AhSVrIEWtqTnleRz9w.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: 192.168.88.30

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.88.35' (ED25519) to the list of known hosts.

root@192.168.88.35's password:

check_nginx.sh 100% 191 379.3KB/s 00:00

- Edit /etc/keepalived/keepalived.conf and add the script section

Content to add:

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance VI_1 {

track_script {

chk_nginx

}

}

[root@master ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_master

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance VI_1 {

state MASTER

interface ens160

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.100

}

track_script {

chk_nginx

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens160

virtual_router_id 52

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.101

}

track_script {

chk_nginx

}

}

vrrp_instance VI_3 {

state BACKUP

interface ens160

virtual_router_id 53

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.102

}

track_script {

chk_nginx

}

}

- backup1

[root@backup1 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id backup1

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 51

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.100

}

}

vrrp_instance VI_2 {

state MASTER

interface ens160

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.101

}

}

vrrp_instance VI_3 {

state BACKUP

interface ens160

virtual_router_id 53

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.102

}

track_script {

chk_nginx

}

}

- backup2

[root@backup2 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id backup2

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens160

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.100

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens160

virtual_router_id 52

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.101

}

}

vrrp_instance VI_3 {

state MASTER

interface ens160

virtual_router_id 53

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.88.102

}

track_script {

chk_nginx

}

}
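The three keepalived.conf files repeat the same vrrp_instance block nine times, which makes slips in state, virtual_router_id, or priority easy. One way to reduce that risk, sketched here as an assumption and not the method used in these notes, is to generate the blocks from a short parameter list. `vrrp_instance` and `master_instances` are hypothetical function names; the values follow the priority plan at the start of this section, and every generated block tracks chk_nginx.

```shell
# vrrp_instance NAME STATE VRID PRIORITY VIP [IFACE]
# Print one vrrp_instance block for keepalived.conf.
vrrp_instance() {
    cat <<EOF
vrrp_instance $1 {
    state $2
    interface ${6:-ens160}
    virtual_router_id $3
    priority $4
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $5
    }
    track_script {
        chk_nginx
    }
}
EOF
}

# Blocks for the master node (priorities 100 / 80 / 60).
master_instances() {
    vrrp_instance VI_1 MASTER 51 100 192.168.88.100
    vrrp_instance VI_2 BACKUP 52 80  192.168.88.101
    vrrp_instance VI_3 BACKUP 53 60  192.168.88.102
}
```

The output of `master_instances` can be reviewed first and then pasted below the global_defs and vrrp_script sections of the file.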

3.3 Set up DNS round-robin

[root@dns ~]# systemctl stop firewalld

[root@dns ~]# setenforce 0

[root@dns ~]# vim /etc/nginx/conf.d/dns.conf

[root@dns ~]# cat /etc/nginx/conf.d/dns.conf

upstream web {

server 192.168.88.100;

server 192.168.88.101;

server 192.168.88.102;

}

server {

listen 80;

server_name 192.168.88.10;

access_log /var/log/nginx/dns_access.log;

error_log /var/log/nginx/dns_error.log;

location / {

proxy_pass http://web;

}

}

[root@dns ~]# systemctl start nginx

[root@dns ~]# curl 192.168.88.10

4.

LVS

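In LVS, an RS (Real Server) is a back-end server that does the actual work and handles the business logic.

To be safe, install ipvsadm right after the virtual machine boots, while it can still reach the external network, and only then change the NICs and IP addresses.

- Files installed by the ipvsadm package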

[root@lvs ~]# rpm -ql ipvsadm

/etc/sysconfig/ipvsadm-config # configuration file

/usr/lib/.build-id

/usr/lib/.build-id/0b

/usr/lib/.build-id/0b/d10d85dc0121855898c34f27a7730b50772fcc

/usr/lib/systemd/system/ipvsadm.service # systemd unit file

/usr/sbin/ipvsadm # main program

/usr/sbin/ipvsadm-restore # rule restore tool

/usr/sbin/ipvsadm-save # rule save tool

/usr/share/doc/ipvsadm

/usr/share/doc/ipvsadm/MAINTAINERS

/usr/share/doc/ipvsadm/README

/usr/share/man/man8/ipvsadm-restore.8.gz

/usr/share/man/man8/ipvsadm-save.8.gz

/usr/share/man/man8/ipvsadm.8.gz

- Command usage

ipvsadm --help

# Manage virtual services (cluster rules):

ipvsadm -A|E virtual-service [-s scheduler] [-p [timeout]] [-M netmask] [--pe persistence_engine] [-b sched-flags] (create or edit a virtual service and choose its scheduler)

-A: add a virtual service

-E: edit an existing virtual service, for example to change its scheduler

-t: TCP service, given as VIP:TCP_PORT

-u: UDP service, given as VIP:UDP_PORT

-f: firewall mark, an integer

[-p [timeout]]: persistent connections

[-s scheduler]: scheduling algorithm of the service, default wlc

ipvsadm -D virtual-service # delete one virtual service

ipvsadm -C # clear the whole table

ipvsadm -R # restore rules from standard input, equivalent to ipvsadm-restore

ipvsadm -S [-n] # print the rules to standard output, equivalent to ipvsadm-save

# Manage the real servers (RS) of a service

ipvsadm -a|e virtual-service -r server-address [options]

-a: add a real server

ipvsadm -d virtual-service -r server-address

ipvsadm -L|l [virtual-service] [options] # list

ipvsadm -Z [virtual-service] # zero the counters

ipvsadm --set tcp tcpfin udp

ipvsadm --start-daemon {master|backup} [daemon-options]

ipvsadm --stop-daemon {master|backup}

ipvsadm -h

# Forwarding mode

--gatewaying -g gatewaying (direct routing) (default)

--ipip -i ipip encapsulation (tunneling)

--masquerading -m masquerading (NAT)

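1. NAT mode installation

- The LVS server needs two NICs: one carries the virtual IP that users access; the other is the gateway of the back-end real servers.

- When adding rules, select NAT mode with the -m option.

- IP forwarding must be enabled on the LVS server through a kernel parameter:

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

To be safe, install ipvsadm right after the virtual machine boots, while it can still reach the external network, and only then change the NICs and IP addresses.

- Architecture:

The gateway of each RS is the IP of the LVS host

Node plan:

| Host | Role | Software | Network | IP | Gateway |

|---|---|---|---|---|---|

| client | client | | host-only | 192.168.204.100/24 | |

| lvs | lvs | ipvsadm | host-only, NAT | VIP 192.168.204.200/24, DIP 192.168.88.8/24 | |

| nginx | rs1 | nginx | NAT | 192.168.88.7/24 | 192.168.88.8 |

| nginx | rs2 | nginx | NAT | 192.168.88.17/24 | 192.168.88.8 |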

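1.1 Configure the RS (NAT)

In LVS, an RS (Real Server) is a back-end server that does the actual work and handles the business logic.

- Install nginx

dnf install nginx -y

- Replace the default page

echo $(hostname -I) > /usr/share/nginx/html/index.html

- Start the service

systemctl start nginx

- Test

curl localhost

Gateway: 192.168.88.8 (the IP of the LVS host's NAT NIC, the DIP)

- RS1: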

[root@localhost ~]# hostnamectl hostname rs1

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.88.7/24 ipv4.gateway 192.168.88.8 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

# Install nginx

[root@rs1 ~]# dnf install nginx -y

# Replace the default page

[root@rs1 ~]# echo $(hostname -I) > /usr/share/nginx/html/index.html

# Start the service

[root@rs1 ~]# systemctl start nginx

# Test

[root@rs1 ~]# curl localhost

192.168.88.7

- RS2:

[root@localhost ~]# hostnamectl hostname rs2

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.88.17/24 ipv4.gateway 192.168.88.8 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

# Install nginx

[root@rs2 ~]# dnf install nginx -y

# Replace the default page

[root@rs2 ~]# echo $(hostname -I) > /usr/share/nginx/html/index.html

# Start the service

[root@rs2 ~]# systemctl start nginx

# Test

[root@rs2 ~]# curl localhost

192.168.88.17

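1.2 Configure LVS (two NICs)

The LVS server needs two NICs: one carries the virtual IP that users access; the other is the gateway of the back-end real servers.

Add the second NIC to the LVS virtual machine before powering it on.

The two NICs of the lvs server:

The first NIC uses host-only mode, IP address 192.168.204.200

The second NIC uses NAT mode, IP address 192.168.88.8

# Show the connection names of the network devices: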

[root@localhost ~]# nmcli c show

NAME UUID TYPE DEVICE

Wired connection 1 716944d5-683d-3297-9f0b-39530d65c935 ethernet ens224

ens160 c6adadcb-89ef-3ed8-a265-09260f58abe1 ethernet ens160

lo 3919112a-6bb6-4ad3-a213-3ce8394ca9a9 loopback lo

# Rename the connection

[root@localhost ~]# nmcli c modify 'Wired connection 1' connection.id ens224

# Check the result

[root@localhost ~]# nmcli c show

NAME UUID TYPE DEVICE

ens224 716944d5-683d-3297-9f0b-39530d65c935 ethernet ens224

ens160 c6adadcb-89ef-3ed8-a265-09260f58abe1 ethernet ens160

lo 3919112a-6bb6-4ad3-a213-3ce8394ca9a9 loopback lo

[root@localhost ~]# hostnamectl hostname lvs

Configure the host-only NIC (carries the virtual IP that users access)

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.204.200/24 ipv4.gateway 192.168.204.2 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

Install ipvsadm

Install ipvsadm now, in case the external network becomes unreachable later

[root@lvs ~]# dnf install ipvsadm -y

Configure the NAT NIC (gateway of the back-end real servers)

[root@lvs ~]# nmcli c modify ens224 ipv4.method manual ipv4.addresses 192.168.88.8/24 ipv4.gateway 192.168.88.2 connection.autoconnect yes

[root@lvs ~]# nmcli c up ens224

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/5)

[root@lvs ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

# host-only

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:26:e1:1b brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.204.200/24 brd 192.168.204.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe26:e11b/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# NAT

3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:26:e1:25 brd ff:ff:ff:ff:ff:ff

altname enp19s0

inet 192.168.88.8/24 brd 192.168.88.255 scope global noprefixroute ens224

valid_lft forever preferred_lft forever

inet6 fe80::54c2:3ed3:5085:89a0/64 scope link noprefixroute

valid_lft forever preferred_lft forever

1.3 Configure the client (host-only)

The client's network uses host-only mode.

[root@localhost ~]# hostnamectl hostname client

[root@localhost ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.204.100/24 ipv4.gateway 192.168.204.2 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@localhost ~]# nmcli c up ens160

[root@client ~]# nmcli d show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:11:46:49

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/3

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.204.100/24

IP4.GATEWAY: 192.168.204.2

IP4.ROUTE[1]: dst = 192.168.204.0/24, nh = 0.0.0.0, mt = 100

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.204.2, mt = 100

IP4.DNS[1]: 223.5.5.5

IP6.ADDRESS[1]: fe80::20c:29ff:fe11:4649/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

1.4 Start the ipvsadm service (lvs)

[root@lvs ~]# ipvsadm-save > /etc/sysconfig/ipvsadm

[root@lvs ~]# systemctl start ipvsadm

# On the client, access the VIP to test whether the back-end RS servers are reachable

[root@client ~]# curl 192.168.204.200

curl: (7) Failed to connect to 192.168.204.200 port 80: Connection refused

# From the lvs server itself the request succeeds:

[root@lvs ~]# curl 192.168.204.200

192.168.88.17

[root@lvs ~]# curl 192.168.204.200

192.168.88.7

# The client request fails because no LVS rules have been configured yet

1.5 LVS rules

The LVS rules that were missing in the previous step are added here.

# Add a virtual service

[root@lvs ~]# ipvsadm -A -t 192.168.204.200:80 -s rr

-A: add a virtual service

-t: TCP virtual service address, VIP:port

-s: scheduling algorithm, here rr (round-robin)

# Add the real servers (RS) to the virtual service

[root@lvs ~]# ipvsadm -a -t 192.168.204.200:80 -r 192.168.88.7:80 -m -w 2

[root@lvs ~]# ipvsadm -a -t 192.168.204.200:80 -r 192.168.88.17:80 -m -w 2

-a: add a real server to the virtual service

-r: real server address

-m: NAT mode, listed as Masq (without it the default is DR mode, listed as Route)

-w: weight

# Remove a real server: -d

# Clear all rules: -C

[root@lvs ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.204.200:80 rr

-> 192.168.88.7:80 Masq 2 0 0

-> 192.168.88.17:80 Masq 2 0 0
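The rule set has a fixed shape: one `ipvsadm -A` for the virtual service, then one `ipvsadm -a ... -m` per real server. The sketch below prints those commands for review instead of running them. `nat_rules` is a hypothetical helper name, and the addresses in the example are the VIP and RS addresses from the node plan.

```shell
# nat_rules VIP:PORT RS:PORT...
# Print the ipvsadm commands for a round-robin NAT virtual service.
# Nothing is executed; the output is meant to be reviewed first.
nat_rules() {
    local vs="$1" rs
    shift
    printf 'ipvsadm -A -t %s -s rr\n' "$vs"
    for rs in "$@"; do
        printf 'ipvsadm -a -t %s -r %s -m\n' "$vs" "$rs"
    done
}

nat_rules 192.168.204.200:80 192.168.88.7:80 192.168.88.17:80
```

On the lvs host the reviewed output can be applied as root by piping it to `sh`. The weight option is left out because the rr scheduler does not take weights into account.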

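# After configuring, save the rules to the file the service loads at start, then restart the service

[root@lvs ~]# ipvsadm-save -n > /etc/sysconfig/ipvsadm

[root@lvs ~]# systemctl restart ipvsadm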

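1.6 Functional test

Client test

The client request no longer fails, but no data comes back. The cause is that the kernel forwarding parameter is not set yet on the LVS host:

net.ipv4.ip_forward=1

NAT mode kernel parameter configuration (LVS host)

Either append the parameter in one step:

[root@lvs ~]# echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

or add it by hand in an editor:

[root@lvs ~]# vim /etc/sysctl.conf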

[root@lvs ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

net.ipv4.ip_forward=1 # added line

# Apply the setting

[root@lvs ~]# sysctl -p

net.ipv4.ip_forward = 1

[root@lvs ~]# systemctl restart ipvsadm
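Before testing from the client again, it can help to confirm that forwarding is really active in the running kernel and not only written to /etc/sysctl.conf. The following is a minimal sketch; `ip_forward_enabled` is a hypothetical helper name, and the optional file argument exists only so that the check can be tried against a sample file.

```shell
# ip_forward_enabled [FILE]
# Succeed when the IPv4 forwarding switch reads 1.
# FILE defaults to the live kernel setting under /proc.
ip_forward_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2> /dev/null)" = "1" ]
}

# Example call on the lvs host (left commented out):
# ip_forward_enabled && echo "forwarding is on" || echo "forwarding is off"
```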

Test again from the client

[root@client ~]# curl 192.168.204.200

192.168.88.17

[root@client ~]# curl 192.168.204.200

192.168.88.7

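2. DR mode (single-subnet DR example)

How DR mode works:

In LVS-DR mode the load balancer rewrites only the destination MAC address of the request and leaves the IP addresses unchanged. After the load balancer forwards the request to a back-end server, that server sends the response directly to the client. Because no IP address is rewritten and responses bypass the load balancer, this mode is highly efficient.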
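The difference between DR and NAT forwarding can be illustrated with a toy packet model. The sketch below is only an illustration of which fields change, not an implementation of LVS. A packet is modelled as the string "SRC_IP DST_IP DST_MAC"; the function names, the client address 192.168.86.50, and the director MAC are made up for the example, while the VIP and the rs1 address and MAC follow this section.

```shell
# dr_forward "SRC_IP DST_IP DST_MAC" RS_MAC
# DR mode: IP addresses stay as they are, only the destination MAC changes.
dr_forward() {
    set -- $1 "$2"            # $1..$3 = packet fields, $4 = RS_MAC
    printf '%s %s %s\n' "$1" "$2" "$4"
}

# nat_forward "SRC_IP DST_IP DST_MAC" RS_IP RS_MAC
# NAT mode, for contrast: the destination IP is rewritten to the RS address.
nat_forward() {
    set -- $1 "$2" "$3"       # $1..$3 = packet fields, $4 = RS_IP, $5 = RS_MAC
    printf '%s %s %s\n' "$1" "$4" "$5"
}

packet='192.168.86.50 192.168.98.100 00:00:5e:00:53:01'
dr_forward  "$packet" 00:0C:29:BA:BD:60
nat_forward "$packet" 192.168.98.7 00:0C:29:BA:BD:60
```

In the DR result the destination IP is still the VIP, which is why each RS must also carry the VIP itself in DR mode.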

Node plan:

| Host | Role | OS | Network | IP |

|---|---|---|---|---|

| client | client | redhat 9.5 | host-only | 192.168.98.100/24 |

| lvs | lvs | redhat 9.5 | host-only, NAT | 192.168.98.8/24, VIP: 192.168.98.100/32 |

| nginx | rs1 | redhat 9.5 | NAT | 192.168.98.7/24, VIP: 192.168.98.100/32 |

| nginx | rs2 | redhat 9.5 | NAT | 192.168.98.17/24, VIP: 192.168.98.100/32 |

| router | router | redhat 9.5 | host-only, NAT | 192.168.86.130/24, 192.168.98.135/24 |

Disable the firewall and SELinux enforcement on all hosts

systemctl disable --now firewalld

Switch SELinux to permissive until the next reboot

setenforce 0

Make the SELinux change persistent

sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config
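The persistent SELinux change comes down to rewriting the SELINUX= line in /etc/selinux/config. The sketch below wraps that in a small function that validates the mode first. `set_selinux_mode` is a hypothetical helper name, the optional file argument is there so the function can be tried on a copy, and `sed -i` is assumed to be GNU sed as shipped with RHEL.

```shell
# set_selinux_mode MODE [FILE]
# Rewrite the SELINUX= line of an SELinux config file.
# MODE must be enforcing, permissive, or disabled.
set_selinux_mode() {
    case "$1" in
        enforcing|permissive|disabled) ;;
        *) echo "invalid mode: $1" >&2; return 1 ;;
    esac
    # ^SELINUX= does not match the SELINUXTYPE= line.
    sed -i "s/^SELINUX=.*/SELINUX=$1/" "${2:-/etc/selinux/config}"
}

# Example call as root (left commented out):
# set_selinux_mode permissive
```

Unlike the plain substitution of `enforcing`, this also works when the file currently says `disabled` or `permissive`.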

2.1 Configure the router

Two NICs are needed: one in host-only mode and one in NAT mode

[root@router ~]# ip ad

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:65:57:9e brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.86.130/24 brd 192.168.86.255 scope global dynamic noprefixroute ens160

valid_lft 1521sec preferred_lft 1521sec

inet6 fe80::20c:29ff:fe65:579e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:65:57:a8 brd ff:ff:ff:ff:ff:ff

altname enp19s0

inet 192.168.98.135/24 brd 192.168.98.255 scope global dynamic noprefixroute ens224

valid_lft 1521sec preferred_lft 1521sec

inet6 fe80::4144:bf6f:b3ce:99b8/64 scope link noprefixroute

valid_lft forever preferred_lft forever

- First NIC (ens160):

It does not need internet access, so no DNS or gateway is configured

[root@router ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.86.200/24 connection.autoconnect yes

[root@router ~]# nmcli c up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/4)

[root@router ~]# nmcli d show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:65:57:9E

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/4

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.86.200/24

IP4.GATEWAY: --

IP4.ROUTE[1]: dst = 192.168.86.0/24, nh = 0.0.0.0, mt = 102

IP6.ADDRESS[1]: fe80::20c:29ff:fe65:579e/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

- Second NIC (ens224):

# Rename the connection

[root@router ~]# nmcli c show

NAME UUID TYPE DEVICE

Wired connection 1 bac67df3-eb21-31f8-bb92-92f73e2470e6 ethernet ens224

ens160 80630323-1c6a-381f-817b-4d1d206850e3 ethernet ens160

lo 4725cdc0-d053-4703-952b-a33bb54887b9 loopback lo

[root@router ~]# nmcli c modify 'Wired connection 1' connection.id ens224

[root@router ~]# nmcli c show

NAME UUID TYPE DEVICE

ens224 bac67df3-eb21-31f8-bb92-92f73e2470e6 ethernet ens224

ens160 80630323-1c6a-381f-817b-4d1d206850e3 ethernet ens160

lo 4725cdc0-d053-4703-952b-a33bb54887b9 loopback lo

# Configure a gateway and DNS so that software can be installed later

[root@router ~]# nmcli c modify ens224 ipv4.method manual ipv4.addresses 192.168.98.200/24 ipv4.gateway 192.168.98.2 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@router ~]# nmcli c up ens224

[root@router ~]# nmcli d show ens224

GENERAL.DEVICE: ens224

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:65:57:A8

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens224

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/5

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.200/24

IP4.GATEWAY: 192.168.98.2

IP4.ROUTE[1]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 103

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.98.2, mt = 103

IP4.DNS[1]: 223.5.5.5

IP6.ADDRESS[1]: fe80::4144:bf6f:b3ce:99b8/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

2.2 Configure the RS real servers

- rs1

[root@rs1 ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.98.7/24 ipv4.gateway 192.168.98.200 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@rs1 ~]# nmcli c up ens160

[root@rs1 ~]# nmcli d show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:BA:BD:60

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/3

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.7/24

IP4.GATEWAY: 192.168.98.200

IP4.ROUTE[1]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 100

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.98.200, mt = 100

IP4.DNS[1]: 223.5.5.5

IP6.ADDRESS[1]: fe80::20c:29ff:feba:bd60/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

With this IP configuration the host cannot reach the internet

[root@rs1 ~]# ping www.baidu.com

^C^C

[root@rs1 ~]# nmcli c m ens160 ipv4.gateway 192.168.98.2

[root@rs1 ~]# nmcli c up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/4)

[root@rs1 ~]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.17) 56(84) bytes of data.

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=1 ttl=128 time=28.3 ms

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=2 ttl=128 time=32.7 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 28.316/30.484/32.653/2.168 ms

# Once the software is installed, change the gateway back

[root@rs1 ~]# nmcli c m ens160 ipv4.gateway 192.168.98.200

[root@rs1 ~]# nmcli c up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/5)
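
The gateway switch shown above (to 192.168.98.2 for internet access, then back to 192.168.98.200) is repeated on every real server, so it can be wrapped in a small helper. This is only a sketch: the function name `set_gateway` and the `DRY_RUN` variable are hypothetical, while the connection name and addresses are taken from the transcript.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original lab steps):
# set the IPv4 gateway of a NetworkManager connection and re-activate it.
# With DRY_RUN=1 the nmcli commands are only printed, not executed.
set_gateway() {
    local conn="$1" gw="$2"
    local run=""
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    $run nmcli connection modify "$conn" ipv4.gateway "$gw" &&
    $run nmcli connection up "$conn"
}

# Usage (run as root on rs1 or rs2):
#   set_gateway ens160 192.168.98.2     # temporary internet access
#   set_gateway ens160 192.168.98.200   # back to the router host
```

Running it first with `DRY_RUN=1` prints the two nmcli commands, which makes it easy to check them before the connection is actually changed.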

- Install nginx

[root@rs1 ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@rs1 ~]# dnf install nginx -y

[root@rs1 ~]# echo $(hostname) $(hostname -I) > /usr/share/nginx/html/index.html

[root@rs1 ~]# systemctl start nginx

[root@rs1 ~]# curl localhost

rs1 192.168.98.7

[root@rs1 ~]# curl 192.168.98.7

rs1 192.168.98.7

# The router can also reach it

[root@router ~]# curl 192.168.98.7

rs1 192.168.98.7

- rs2

[root@rs2 ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.98.17/24 ipv4.gateway 192.168.98.200 ipv4.dns 223.5.5.5 connection.autoconnect yes

[root@rs2 ~]# nmcli c up ens160

[root@rs2 ~]# nmcli d show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:38:37:87

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/3

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.17/24

IP4.GATEWAY: 192.168.98.200

IP4.ROUTE[1]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 100

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.98.200, mt = 100

IP4.DNS[1]: 223.5.5.5

IP6.ADDRESS[1]: fe80::20c:29ff:fe38:3787/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

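After the IP is configured, the host cannot reach the internet, so the gateway is temporarily switched to 192.168.98.2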

[root@rs2 ~]# ping www.baidu.com

^C

[root@rs2 ~]# nmcli c m ens160 ipv4.gateway 192.168.98.2

[root@rs2 ~]# nmcli c up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/4)

[root@rs2 ~]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.17) 56(84) bytes of data.

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=1 ttl=128 time=28.8 ms

64 bytes from 183.2.172.17 (183.2.172.17): icmp_seq=2 ttl=128 time=30.7 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 28.807/29.757/30.707/0.950 ms

# Once nginx is installed, change the gateway back

[root@rs2 ~]# nmcli c m ens160 ipv4.gateway 192.168.98.200

[root@rs2 ~]# nmcli c up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/5)

- Install nginx

[root@rs2 ~]# mount /dev/sr0 /mnt

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@rs2 ~]# dnf install nginx -y

[root@rs2 ~]# echo $(hostname) $(hostname -I) > /usr/share/nginx/html/index.html

[root@rs2 ~]# systemctl start nginx

[root@rs2 ~]# curl localhost

rs2 192.168.98.17

[root@rs2 ~]# curl 192.168.98.17

rs2 192.168.98.17

# Access from the router server

[root@router ~]# curl 192.168.98.17

rs2 192.168.98.17
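
The curl checks above can be run for both real servers in one pass from the router. The following is a minimal sketch: the function name `check_rs` and the `FETCH` override are hypothetical, and it assumes each index.html contains exactly "hostname IP" as written by the echo command earlier.

```shell
#!/usr/bin/env bash
# Hypothetical check (not part of the original lab steps):
# fetch the index page of a real server and compare it with the
# "hostname IP" line that was written to index.html.
# FETCH may be overridden with another command for testing.
check_rs() {
    local host="$1" ip="$2" body
    local fetch="${FETCH:-curl -s --max-time 3}"
    # $fetch is deliberately unquoted so the default splits into words
    body="$($fetch "http://$ip/")" || return 1
    [ "$body" = "$host $ip" ]
}

# Usage (on the router):
#   check_rs rs1 192.168.98.7  && echo "rs1 OK"
#   check_rs rs2 192.168.98.17 && echo "rs2 OK"
```

The function returns non-zero both when the request fails and when the page content differs, so an unreachable server and a misconfigured one are both reported as a failure.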

2.3 Configure LVS

[root@client ~]# hostnamectl hostname lvs

[root@client ~]# nmcli c modify ens160 ipv4.method manual ipv4.addresses 192.168.98.8/24 ipv4.gateway 192.168.98.200 connection.autoconnect yes

[root@client ~]# nmcli c up ens160

[root@lvs ~]# nmcli d show ens160

GENERAL.DEVICE: ens160

GENERAL.TYPE: ethernet

GENERAL.HWADDR: 00:0C:29:26:E1:1B

GENERAL.MTU: 1500

GENERAL.STATE: 100 (connected)

GENERAL.CONNECTION: ens160

GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/3

WIRED-PROPERTIES.CARRIER: on

IP4.ADDRESS[1]: 192.168.98.8/24

IP4.GATEWAY: 192.168.98.200

IP4.ROUTE[1]: dst = 192.168.98.0/24, nh = 0.0.0.0, mt = 100

IP4.ROUTE[2]: dst = 0.0.0.0/0, nh = 192.168.98.200, mt = 100

IP6.ADDRESS[1]: fe80::20c:29ff:fe26:e11b/64

IP6.GATEWAY: --

IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 1024

- Install ipvsadm

[root@lvs ~]# mount /dev/sr0 /mnt/

mount: /mnt: WARNING: source write-protected, mounted read-only.

[root@lvs ~]# dnf install ipvsadm -y

# Initialize the rules file

[root@lvs ~]# ipvsadm-save -n > /etc/sysconfig/ipvsadm

[root@lvs ~]# vim /usr/lib/systemd/system/ipvsadm.service

- Configure the virtual IP (VIP)

[root@lvs ~]# ip addr add 192.168.98.100/32 dev lo

[root@lvs ~]# ip a show lo

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.98.100/32 scope global lo

valid_lft forever preferred_lft forever
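
An address added with `ip addr add` is not persistent and is lost on reboot, and adding it a second time fails because it already exists. The sketch below adds the VIP only when it is missing. The function names `has_addr` and `ensure_vip` are hypothetical; the VIP and device are the ones used above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the original lab steps).

# Return 0 if the given CIDR address appears in `ip -o -4 addr show`
# output read from stdin (field 3 is "inet", field 4 is the address).
has_addr() {
    awk -v want="$1" '$3 == "inet" && $4 == want { found = 1 }
                      END { exit !found }'
}

# Add the VIP to the device only when it is not configured yet.
ensure_vip() {
    local vip="$1" dev="$2"
    if ip -o -4 addr show dev "$dev" | has_addr "$vip"; then
        echo "$vip is already configured on $dev"
    else
        ip addr add "$vip" dev "$dev"
    fi
}

# Usage (run as root on the lvs host):
#   ensure_vip 192.168.98.100/32 lo
```

Because the check makes the function safe to call repeatedly, it could be run again after each reboot to restore the VIP.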