Flume Single-Node Installation

1. Install the JDK (a prerequisite; install it in advance)

[root@keep-hadoop ~]# java -version

java version "1.8.0_172"

Java(TM) SE Runtime Environment (build 1.8.0_172-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.172-b11, mixed mode)

2. Download the installation package

https://archive.apache.org/dist/flume/

3. Extract the package to the target directory

[root@keep-hadoop ~]# tar -zxvf apache-flume-1.6.0-bin.tar.gz -C /usr/local/src

4. Configure the environment variables

[root@cenots ~]# vim /etc/profile

export FLUME_HOME=/usr/local/src/apache-flume-1.6.0-bin

export PATH=$PATH:$FLUME_HOME/bin

export FLUME_CONF_DIR=$FLUME_HOME/conf

[root@cenots ~]# source /etc/profile

5. Check the environment

[root@keep-hadoop apache-flume-1.6.0-bin]# flume-ng version

Flume 1.6.0

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: 2561a23240a71ba20bf288c7c2cda88f443c2080

Compiled by hshreedharan on Mon May 11 11:15:44 PDT 2015

From source with checksum b29e416802ce9ece3269d34233baf43f

6. Modify the Flume configuration

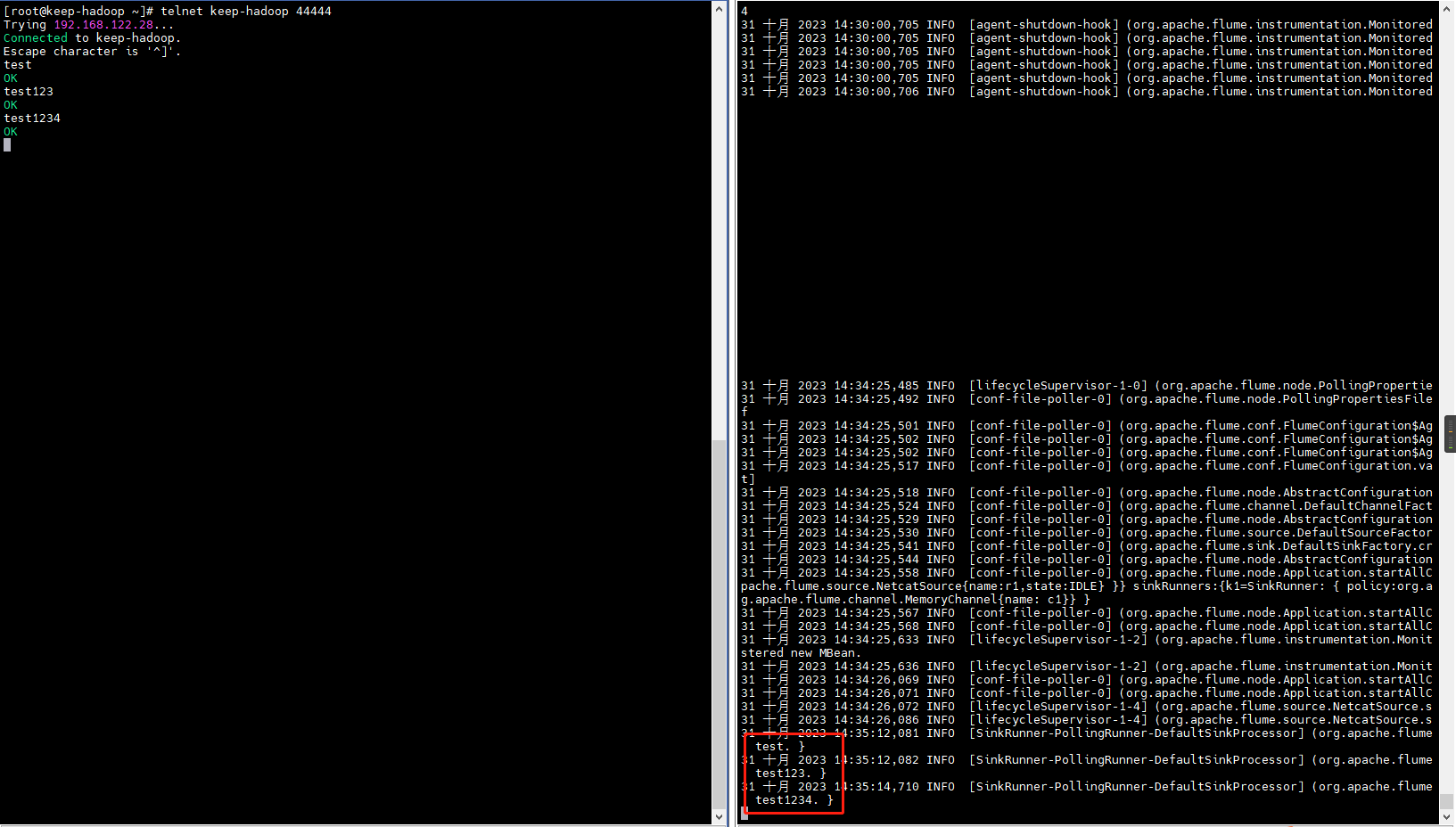
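Before writing any agent configuration, the checks from steps 4 and 5 can be wrapped in a small function. The following is a minimal sketch, assuming a POSIX shell; `check_flume_env` is a hypothetical helper name and not something shipped with Flume.

```shell
#!/bin/sh
# check_flume_env is a hypothetical helper name, not part of Flume.
# It only inspects FLUME_HOME and the launcher script; it does not start an agent.
check_flume_env() {
    # FLUME_HOME must be set and point at an existing directory.
    if [ -z "${FLUME_HOME:-}" ] || [ ! -d "$FLUME_HOME" ]; then
        echo "FLUME_HOME is unset or not a directory" >&2
        return 1
    fi
    # The flume-ng launcher must be present and executable.
    if [ ! -x "$FLUME_HOME/bin/flume-ng" ]; then
        echo "no executable flume-ng under $FLUME_HOME/bin" >&2
        return 1
    fi
    echo "Flume environment OK: $FLUME_HOME"
}
```

Called as `check_flume_env && flume-ng version`, it stops early with a message when `/etc/profile` has not been sourced in the current shell.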

# NetCat mode

[root@keep-hadoop apache-flume-1.6.0-bin]# vim conf/flume-netcat.conf

# Name the components on this agent

agent.sources = r1

agent.sinks = k1

agent.channels = c1

# Describe/configure the source

agent.sources.r1.type = netcat

agent.sources.r1.bind = keep-hadoop

agent.sources.r1.port = 44444

# Describe the sink

agent.sinks.k1.type = logger

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 1000

agent.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

agent.sources.r1.channels = c1

agent.sinks.k1.channel = c1

# Verify

# Server

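[root@keep-hadoop apache-flume-1.6.0-bin]# bin/flume-ng agent --conf conf --conf-file conf/flume-netcat.conf --name=agent -Dflume.root.logger=INFO,console

# Client

[root@keep-hadoop ~]# telnet keep-hadoop 44444

# View the log (with -Dflume.root.logger=INFO,console the events are printed on the server console; logs/flume.log is written when the default file logger in conf/log4j.properties is used instead)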

[root@keep-hadoop apache-flume-1.6.0-bin]# tail -f logs/flume.log

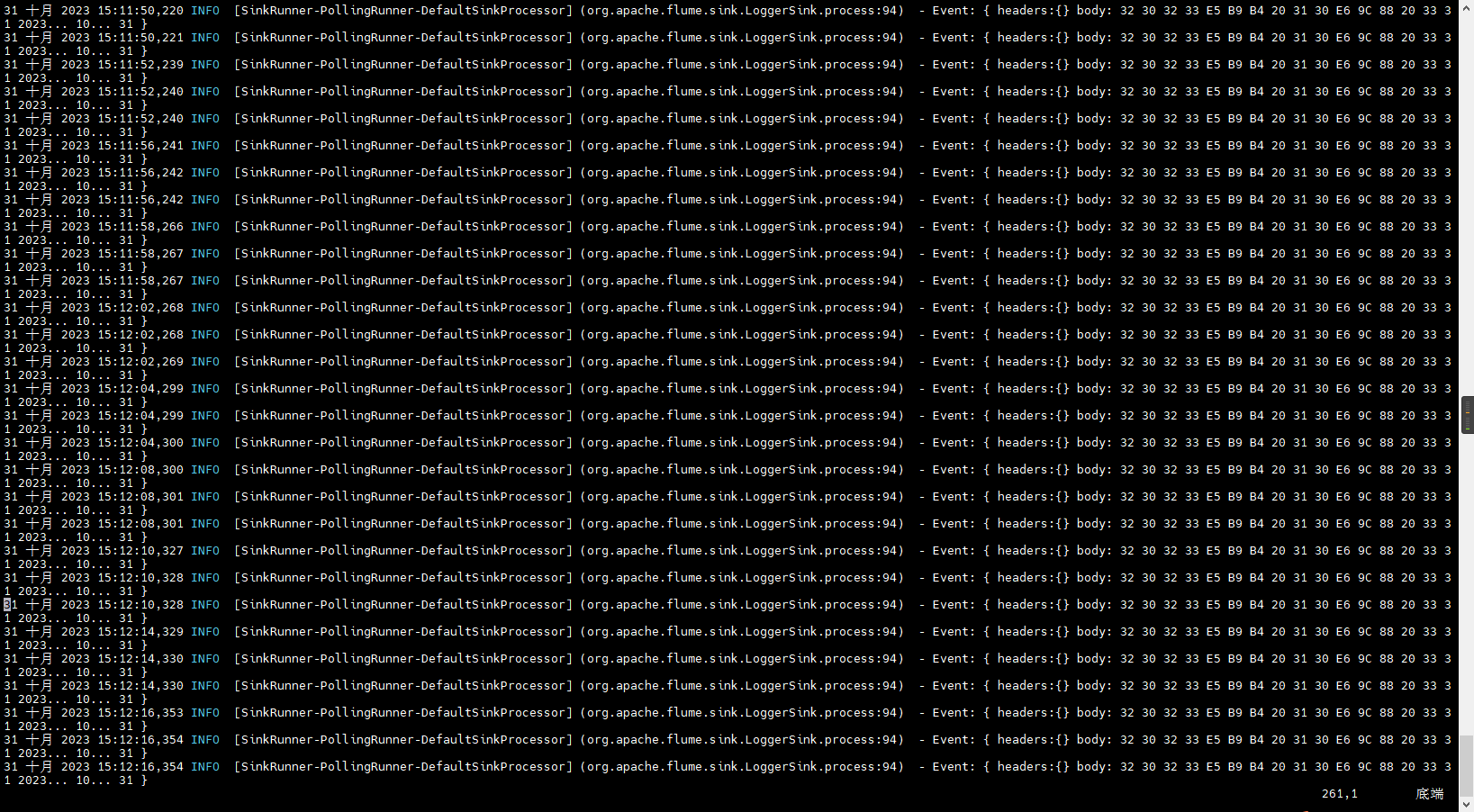
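The value passed to `--name` has to match the prefix used in the properties file (`agent` in this walkthrough); when the two differ, the agent typically starts but finds no components to run. A minimal sketch of a pre-flight check, assuming a POSIX shell with `grep -E`; `agent_declared` is a hypothetical helper name, not a Flume command.

```shell
#!/bin/sh
# agent_declared is a hypothetical helper, not a Flume command.
# Usage: agent_declared <agent-name> <properties-file>
# Succeeds only if the file declares sources, channels and sinks under that name.
agent_declared() {
    name=$1
    file=$2
    for kind in sources channels sinks; do
        # Match lines such as "agent.sources = r1" (spaces around "=" are optional).
        if ! grep -Eq "^[[:space:]]*${name}\.${kind}[[:space:]]*=" "$file"; then
            echo "missing ${name}.${kind} in ${file}" >&2
            return 1
        fi
    done
}
```

For example, `agent_declared agent conf/flume-netcat.conf` should succeed for the configuration above, while `agent_declared a1 conf/flume-netcat.conf` should fail and name the first missing key.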

# Exec mode

[root@keep-hadoop apache-flume-1.6.0-bin]# vim conf/flume-exec.conf

# Name the components on this agent

agent.sources = r1

agent.sinks = k1

agent.channels = c1

# Describe/configure the source

agent.sources.r1.type = exec

# The file must exist before the agent starts; otherwise the tail command exits

agent.sources.r1.command = tail -f /usr/local/src/apache-flume-1.6.0-bin/test.txt

# Describe the sink

agent.sinks.k1.type = logger

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 1000

agent.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

agent.sources.r1.channels = c1

agent.sinks.k1.channel = c1

# Server

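[root@keep-hadoop apache-flume-1.6.0-bin]# bin/flume-ng agent --conf conf --conf-file conf/flume-exec.conf --name=agent -Dflume.root.logger=INFO,console

# Client

[root@keep-hadoop apache-flume-1.6.0-bin]# while true; do echo `date` >> /usr/local/src/apache-flume-1.6.0-bin/test.txt; sleep 1; done

# View the log (with -Dflume.root.logger=INFO,console the events are printed on the server console; logs/flume.log is written when the default file logger in conf/log4j.properties is used instead)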

[root@keep-hadoop apache-flume-1.6.0-bin]# tail -f logs/flume.log

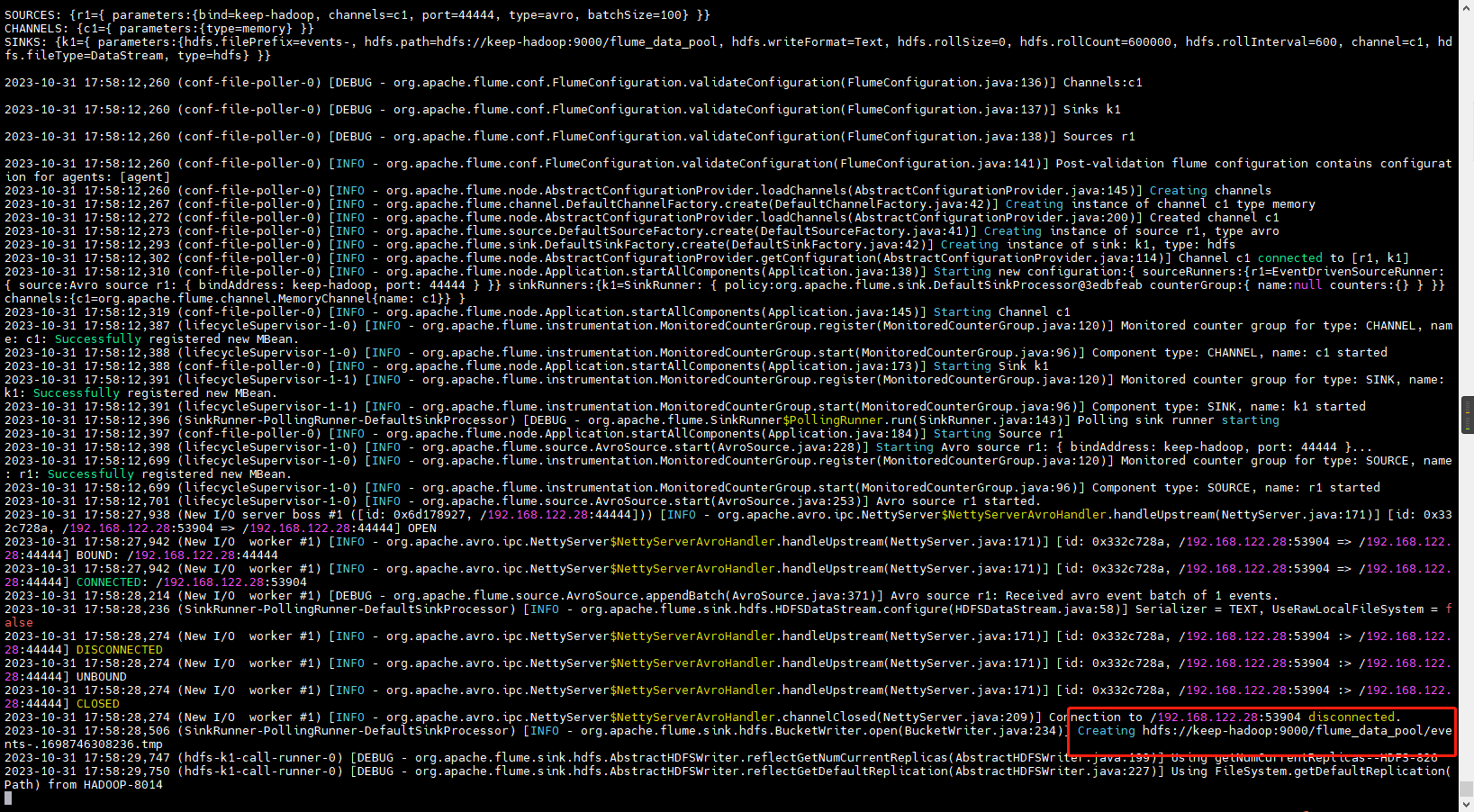
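Because the exec source runs `tail -f` on a fixed path, the file has to exist before the agent is started. One way to guarantee that is a small function like the sketch below, assuming a POSIX shell; `ensure_tail_target` is a hypothetical helper name.

```shell
#!/bin/sh
# ensure_tail_target is a hypothetical helper name.
# Usage: ensure_tail_target <file>
# Creates the parent directory and an empty file if needed; existing content is kept.
ensure_tail_target() {
    target=$1
    mkdir -p "$(dirname "$target")" || return 1
    # Appending nothing creates the file without truncating an existing one.
    : >> "$target"
}
```

Running `ensure_tail_target /usr/local/src/apache-flume-1.6.0-bin/test.txt` before `bin/flume-ng agent` covers the case described in the configuration comment. With GNU tail, `tail -F` (capital F) is another option, since it keeps retrying until the file appears.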

# Avro mode

# The source expects Avro-encoded events, which telnet cannot produce; use the avro-client instead

[root@keep-hadoop apache-flume-1.6.0-bin]# vim conf/flume-avro.conf

# Define a memory channel called c1 on agent

agent.channels.c1.type = memory

# Define an Avro source called r1 on agent, connect it to channel c1, and bind it to keep-hadoop:44444

agent.sources.r1.channels = c1

agent.sources.r1.type = avro

agent.sources.r1.bind = keep-hadoop

agent.sources.r1.port = 44444

# Describe the sink

agent.sinks.k1.type = hdfs

agent.sinks.k1.channel = c1

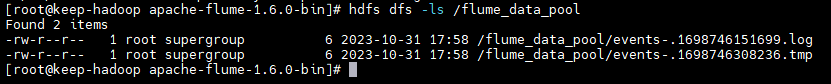

agent.sinks.k1.hdfs.path = hdfs://keep-hadoop:9000/flume_data_pool

agent.sinks.k1.hdfs.filePrefix = events-

agent.sinks.k1.hdfs.fileType = DataStream

agent.sinks.k1.hdfs.writeFormat = Text

agent.sinks.k1.hdfs.rollSize = 0

agent.sinks.k1.hdfs.rollCount = 600000

agent.sinks.k1.hdfs.rollInterval = 600

agent.channels = c1

agent.sources = r1

agent.sinks = k1

# Verify

# Server

[root@keep-hadoop apache-flume-1.6.0-bin]# bin/flume-ng agent --conf conf --conf-file conf/flume-avro.conf --name=agent -Dflume.root.logger=DEBUG,console

# Client

[root@keep-hadoop apache-flume-1.6.0-bin]# cat helloworld.txt

test1

[root@keep-hadoop apache-flume-1.6.0-bin]# bin/flume-ng avro-client --host keep-hadoop --port 44444 -F ./helloworld.txt
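When given a file with `-F`, the avro-client sends each line as a separate event, so a file with several lines makes the result easier to spot in HDFS than a single `test1`. The sketch below generates such a file, assuming a POSIX shell; `make_sample_events` is a hypothetical helper name.

```shell
#!/bin/sh
# make_sample_events is a hypothetical helper name.
# Usage: make_sample_events <file> <count>
# Writes <count> lines (test1, test2, ...) to <file>, replacing any previous content.
make_sample_events() {
    out=$1
    count=$2
    # Start from an empty file.
    : > "$out"
    i=1
    while [ "$i" -le "$count" ]; do
        echo "test$i" >> "$out"
        i=$((i + 1))
    done
}
```

After `make_sample_events ./helloworld.txt 5` and the avro-client command above, `hdfs dfs -ls /flume_data_pool` should list a file whose name starts with `events-`, assuming the HDFS sink is configured as shown and HDFS is reachable at keep-hadoop:9000.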