OpenAI Responses Tutorial (3): The Responses create Python SDK

The OpenAI Responses tutorial series:

OpenAI Responses Tutorial (1): Introduction

OpenAI Responses Tutorial (2): The Responses create API

OpenAI Responses Tutorial (3): The Responses create Python SDK

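As mentioned earlier, the OpenAI SDK is mostly not written by hand. It is generated automatically from OpenAI's API specification (the openai-python README states that it is generated from the OpenAPI specification with Stainless), and only a small part needs manual work. As a result, the SDK tracks the API documentation closely and new releases arrive often, so developers should update the SDK to the latest version before starting a new project or working through a tutorial.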
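Before updating, it can help to see which version is currently installed. The following is a minimal sketch using only the standard library; `installed_version` is a hypothetical helper name, and the upgrade command in the messages assumes a pip-based environment.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("openai")
    if found is None:
        print("openai is not installed; run: pip install --upgrade openai")
    else:
        print(f"openai {found} is installed; run: pip install --upgrade openai to refresh it")
```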

Contents

1. Simplest text usage

2. Simplest image usage

3. Simplest file usage

4. Simplest function tool usage

5. Complete function tool example

1. Simplest text usage

The Python SDK is compact and consistent to use. We start with the simplest official example:

    from openai import OpenAI

    client = OpenAI()

    response = client.responses.create(
        model="gpt-4.1",
        input="Tell me a three sentence bedtime story about a unicorn."
    )

    print(response)
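The call above passes `input` as a plain string. According to the API reference, a string input is treated as a text input with the user role, so the same request can also be written as a one-item message list. The sketch below illustrates that equivalence; `as_input_items` is a hypothetical helper, not part of the SDK, and it only builds the payload without calling the API.

```python
from typing import Any, Dict, List, Union

InputItems = List[Dict[str, Any]]


def as_input_items(value: Union[str, InputItems]) -> InputItems:
    """Normalize an `input` value to the list-of-items form."""
    if isinstance(value, str):
        # A bare string corresponds to a single message with the user role.
        return [{"role": "user", "content": value}]
    # Already a list of items: return a shallow copy so the caller's list is untouched.
    return list(value)


if __name__ == "__main__":
    print(as_input_items("Tell me a three sentence bedtime story about a unicorn."))
```

Either form can be passed as `input=` to `client.responses.create`; the list form is what later sections build on when they append tool results.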

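Compared with the previously common chat.completions API, the main change is that `input` replaces the original `messages` parameter. As the previous article explained, `input` is fairly complex: it accepts either a plain string or a list of input items (dictionaries). The Python SDK source below gives a direct sense of this: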

    @overload
    def create(
        self,
        *,
        stream: Literal[True],
        background: Optional[bool] | Omit = omit,
        conversation: Optional[response_create_params.Conversation] | Omit = omit,
        include: Optional[List[ResponseIncludable]] | Omit = omit,
        input: Union[str, ResponseInputParam] | Omit = omit,
        instructions: Optional[str] | Omit = omit,
        max_output_tokens: Optional[int] | Omit = omit,
        max_tool_calls: Optional[int] | Omit = omit,
        metadata: Optional[Metadata] | Omit = omit,
        model: ResponsesModel | Omit = omit,
        parallel_tool_calls: Optional[bool] | Omit = omit,
        previous_response_id: Optional[str] | Omit = omit,
        prompt: Optional[ResponsePromptParam] | Omit = omit,
        prompt_cache_key: str | Omit = omit,
        reasoning: Optional[Reasoning] | Omit = omit,
        safety_identifier: str | Omit = omit,
        service_tier: Optional[Literal["auto", "default", "flex", "scale", "priority"]] | Omit = omit,
        store: Optional[bool] | Omit = omit,
        stream_options: Optional[response_create_params.StreamOptions] | Omit = omit,
        temperature: Optional[float] | Omit = omit,
        text: ResponseTextConfigParam | Omit = omit,
        tool_choice: response_create_params.ToolChoice | Omit = omit,
        tools: Iterable[ToolParam] | Omit = omit,
        top_logprobs: Optional[int] | Omit = omit,
        top_p: Optional[float] | Omit = omit,
        truncation: Optional[Literal["auto", "disabled"]] | Omit = omit,
        user: str | Omit = omit,
        # Use the following arguments if you need to pass additional parameters to the API that aren't available via kwargs.
        # The extra values given here take precedence over values defined on the client or passed to this method.
        extra_headers: Headers | None = None,
        extra_query: Query | None = None,
        extra_body: Body | None = None,
        timeout: float | httpx.Timeout | None | NotGiven = not_given,
    ) -> Stream[ResponseStreamEvent]:
        ...

Note the `@overload` decorator: it means this is not the only signature. The SDK source currently contains four signatures for `create`, so the real parameter system is even more complex. Fortunately, most calls need only a handful of the commonly used parameters.
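To make the role of `@overload` concrete, here is a toy sketch (not the SDK's code) in which the declared return type depends on the `stream` argument, much as the signature above returns a `Stream[ResponseStreamEvent]` when `stream` is `Literal[True]`. The function body and its echo behavior are assumptions made purely for illustration.

```python
from typing import Iterator, Literal, Union, overload


@overload
def create(*, input: str, stream: Literal[True]) -> Iterator[str]: ...
@overload
def create(*, input: str, stream: Literal[False] = False) -> str: ...


def create(*, input: str, stream: bool = False) -> Union[str, Iterator[str]]:
    """Toy stand-in: echo the input, either whole or word by word."""
    if stream:
        # Streaming variant: yield the text in pieces.
        return iter(input.split())
    # Non-streaming variant: return the whole text at once.
    return input


if __name__ == "__main__":
    print(create(input="hello world"))
    print(list(create(input="hello world", stream=True)))
```

The `@overload` stubs exist only for type checkers and editors; at runtime only the last, undecorated definition runs.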

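2. Simplest image usage

Official example (this one sends a PDF by URL as an `input_file` content part; an image would instead use an `input_image` content part with an `image_url`):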

    from openai import OpenAI

    client = OpenAI()

    response = client.responses.create(
        model="gpt-4.1",
        input=[
            {
                "role": "user",
                "content": [
                    {"type": "input_text", "text": "what is in this file?"},
                    {
                        "type": "input_file",
                        "file_url": "https://www.berkshirehathaway.com/letters/2024ltr.pdf"
                    }
                ]
            }
        ]
    )

    print(response)
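For an actual image, the message has the same shape but carries an `input_image` content part. The sketch below only builds such an `input` list; `build_image_input` is a hypothetical helper and the URL is a placeholder, so nothing here calls the API.

```python
from typing import Any, Dict, List


def build_image_input(prompt: str, image_url: str) -> List[Dict[str, Any]]:
    """Build an `input` list with one user message holding text and an image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "input_text", "text": prompt},
                {"type": "input_image", "image_url": image_url},
            ],
        }
    ]


if __name__ == "__main__":
    # Placeholder URL; pass the result as `input=` to client.responses.create.
    print(build_image_input("What is in this image?", "https://example.com/cat.png"))
```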

3. Simplest file usage

Official example, which searches uploaded files through the built-in `file_search` tool:

    from openai import OpenAI

    client = OpenAI()

    response = client.responses.create(
        model="gpt-4.1",
        tools=[
            {
                "type": "file_search",
                "vector_store_ids": ["vs_1234567890"],
                "max_num_results": 20
            }
        ],
        input="What are the attributes of an ancient brown dragon?",
    )

    print(response)

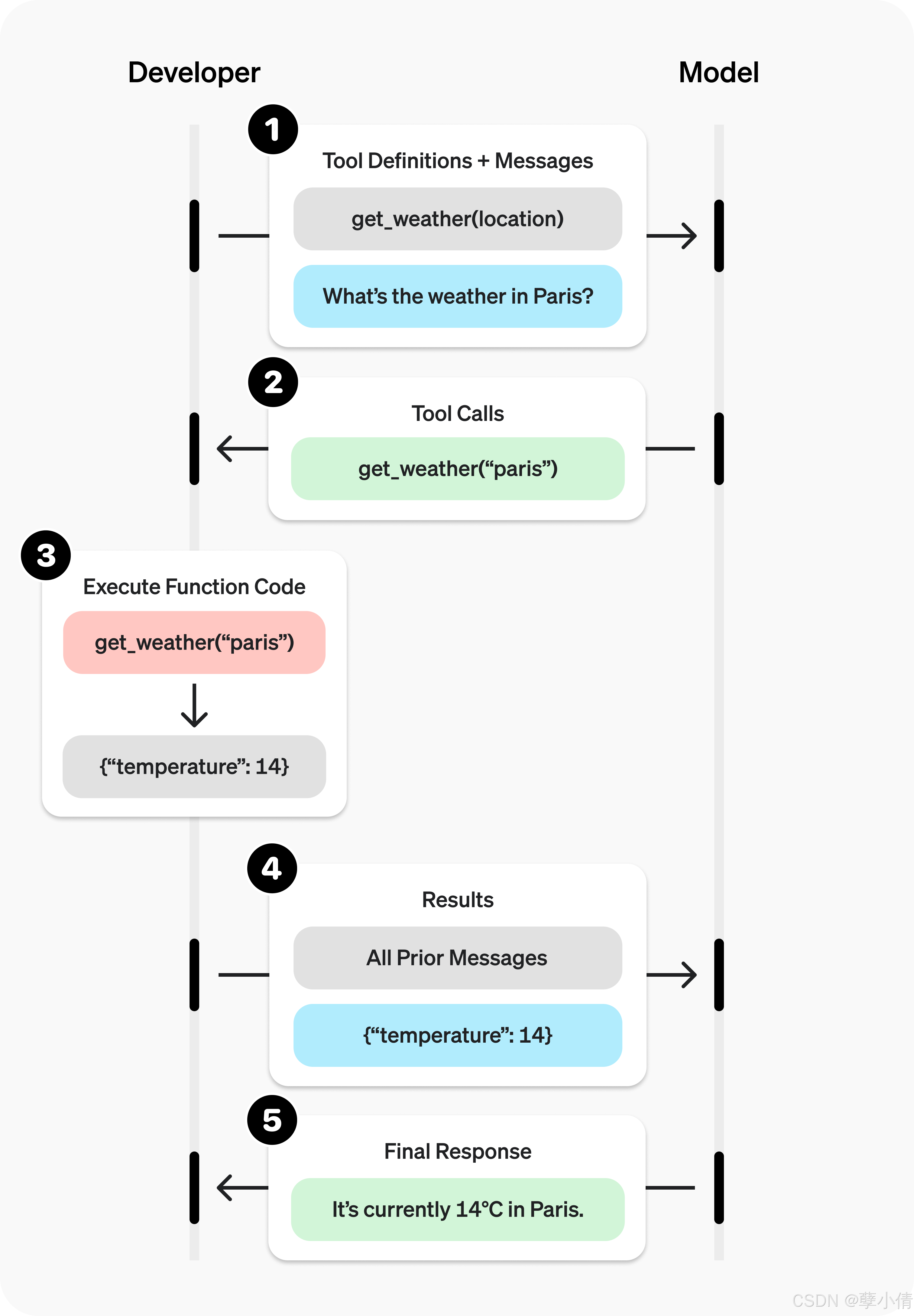

4. Simplest function tool usage

    from openai import OpenAI

    client = OpenAI()

    tools = [
        {
            "type": "function",
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location", "unit"],
            }
        }
    ]

    response = client.responses.create(
        model="gpt-4.1",
        tools=tools,
        input="What is the weather like in Boston today?",
        tool_choice="auto"
    )

    print(response)

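The response now contains everything the API returned. In real use, however, telling the model about a tool is not enough: we also have to run the tool and hand its result back to the model. The next example adapts the official sample so that it does what a real project needs.

First, let us look at what the response contains in the simple case above. The following is the content of a real response: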

    Response(
        id='resp_abcdc38752e835a6006911a1c8ef4481a3805065a6a620fa35',
        created_at=1762763208.0,
        error=None,
        incomplete_details=None,
        instructions=None,
        metadata={},
        model='gpt-4.1-2025-04-14',
        object='response',
        output=[
            ResponseFunctionToolCall(
                arguments='{"location":"Boston, MA","unit":"celsius"}',
                call_id='call_ABCDpNPI4FBEy8B8jxaLfAPC',
                name='get_current_weather',
                type='function_call',
                id='fc_abcdc38752e835a6006911a1cb148481a3b4c0ad9d6d9423b3',
                status='completed'
            )
        ],
        parallel_tool_calls=True,
        temperature=1.0,
        tool_choice='auto',
        tools=[
            FunctionTool(
                name='get_current_weather',
                parameters={'type': 'object', 'properties': {'location': {'type': 'string', 'description': 'The city and state, e.g. San Francisco, CA'}, 'unit': {'type': 'string', 'enum': ['celsius', 'fahrenheit']}}, 'required': ['location', 'unit'], 'additionalProperties': False},
                strict=True,
                type='function',
                description='Get the current weather in a given location'
            )
        ],
        top_p=1.0,
        background=False,
        conversation=None,
        max_output_tokens=None,
        max_tool_calls=None,
        previous_response_id=None,
        prompt=None,
        prompt_cache_key=None,
        reasoning=Reasoning(effort=None, generate_summary=None, summary=None),
        safety_identifier=None,
        service_tier='default',
        status='completed',
        text=ResponseTextConfig(format=ResponseFormatText(type='text'), verbosity='medium'),
        top_logprobs=0,
        truncation='disabled',
        usage=ResponseUsage(input_tokens=75, input_tokens_details=InputTokensDetails(cached_tokens=0), output_tokens=23, output_tokens_details=OutputTokensDetails(reasoning_tokens=0), total_tokens=98),
        user=None,
        billing={'payer': 'developer'},
        prompt_cache_retention=None,
        store=True
    )

As the output shows, the response carries the arguments that get_current_weather needs. The next step is therefore to extract those arguments from the response and call the get_current_weather function.
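Extracting the arguments can be sketched as follows. To keep the snippet runnable without an API key, it uses `SimpleNamespace` objects as stand-ins for the items in `response.output`, copying only the `type`, `name`, `call_id`, and `arguments` fields from the response shown above; `extract_function_calls` is a hypothetical helper name.

```python
import json
from types import SimpleNamespace
from typing import Any, Dict, Iterable, List, Tuple


def extract_function_calls(output: Iterable[Any]) -> List[Tuple[str, str, Dict[str, Any]]]:
    """Return (name, call_id, parsed arguments) for each function_call item."""
    calls = []
    for item in output:
        if getattr(item, "type", None) == "function_call":
            # `arguments` arrives as a JSON string and must be decoded first.
            calls.append((item.name, item.call_id, json.loads(item.arguments)))
    return calls


# Stand-in for response.output, mirroring the fields in the response above.
sample_output = [
    SimpleNamespace(
        type="function_call",
        name="get_current_weather",
        call_id="call_ABCDpNPI4FBEy8B8jxaLfAPC",
        arguments='{"location":"Boston, MA","unit":"celsius"}',
    )
]

print(extract_function_calls(sample_output))
```

With a real response, `extract_function_calls(response.output)` would be the equivalent call.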

A more complete example follows.

5. Complete function tool example

    from openai import OpenAI
    import json

    client = OpenAI()

    # Tool definition
    tools = [
        {
            "type": "function",
            "name": "get_horoscope",
            "description": "Get today's horoscope for an astrological sign.",
            "parameters": {
                "type": "object",
                "properties": {
                    "sign": {
                        "type": "string",
                        "description": "An astrological sign like Taurus or Aquarius",
                    },
                },
                "required": ["sign"],
            },
        },
    ]


    def get_horoscope(sign):
        return f"{sign}: Next Tuesday you will befriend a baby otter."


    # Build the input
    input_list = [
        {"role": "user", "content": "What is my horoscope? I am an Aquarius."}
    ]

    # Prompt the model with the tools defined
    response = client.responses.create(
        model="gpt-5",
        tools=tools,
        input=input_list,
    )

    # Key point: append the model's output to the input so it is sent back on the next call
    input_list += response.output

    for item in response.output:
        if item.type == "function_call":
            if item.name == "get_horoscope":
                # Execute the function with the arguments the model supplied
                # (arguments is a JSON string, so decode it and pass the sign itself)
                args = json.loads(item.arguments)
                horoscope = get_horoscope(args["sign"])

                # Provide the function call result to the model
                input_list.append({
                    "type": "function_call_output",
                    "call_id": item.call_id,
                    "output": json.dumps({"horoscope": horoscope})
                })

    print("Final input:")
    print(input_list)

    response = client.responses.create(
        model="gpt-5",
        instructions="Respond only with a horoscope generated by a tool.",
        tools=tools,
        input=input_list,
    )

    # Final response
    print("Final output:")
    print(response.model_dump_json(indent=2))
    print("\n" + response.output_text)
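When a project has more than one tool, the `if item.name == ...` chain can be replaced by a lookup table. The following is one possible sketch, not the official pattern: `build_tool_outputs` and the `registry` dictionary are hypothetical names, the error payload format is an assumption, and `SimpleNamespace` objects stand in for `response.output` so the code runs without calling the API.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, Iterable, List


def build_tool_outputs(
    output: Iterable[Any], registry: Dict[str, Callable[..., Any]]
) -> List[Dict[str, str]]:
    """Run each requested function and wrap its result as a function_call_output item."""
    results = []
    for item in output:
        if getattr(item, "type", None) != "function_call":
            continue  # Skip messages and other non-tool items.
        handler = registry.get(item.name)
        if handler is None:
            payload = {"error": f"unknown tool: {item.name}"}
        else:
            # Decode the JSON arguments and pass them as keyword arguments.
            payload = {"result": handler(**json.loads(item.arguments))}
        results.append(
            {
                "type": "function_call_output",
                "call_id": item.call_id,
                "output": json.dumps(payload),
            }
        )
    return results


def get_horoscope(sign: str) -> str:
    return f"{sign}: Next Tuesday you will befriend a baby otter."


# Stand-in for response.output; the call_id is made up for illustration.
fake_output = [
    SimpleNamespace(type="function_call", name="get_horoscope",
                    call_id="call_demo", arguments='{"sign": "Aquarius"}'),
    SimpleNamespace(type="message"),
]
tool_outputs = build_tool_outputs(fake_output, {"get_horoscope": get_horoscope})
print(tool_outputs)
```

In the example above, the returned items would be appended to `input_list` with `input_list += tool_outputs` before the second `client.responses.create` call.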