Kubernetes 1.23 Cluster Setup (Three Machines)

Deploying Kubernetes 1.23 on Ubuntu

Resource list

Prepare three Ubuntu servers and configure their networking.

| Hostname | IP | Required software |
|---|---|---|
| master | 192.168.221.21 | Docker CE, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, etcd, kube-proxy |
| node1 | 192.168.221.22 | Docker CE, kubelet, kube-proxy, Flannel |
| node2 | 192.168.221.23 | Docker CE, kubelet, kube-proxy, Flannel |

Base environment (run on all three machines)

# Set the hostname (run the matching command on each machine)
hostnamectl set-hostname master
hostnamectl set-hostname node1
hostnamectl set-hostname node2

# Switch to the root user (skip if you are already root)
su -

# Add hosts entries for name resolution
cat >> /etc/hosts << EOF
192.168.221.21 master
192.168.221.22 node1
192.168.221.23 node2
EOF

### 1. Environment preparation

# 1.1 Install common packages
# Refresh the package index
apt update
# Install common tools
apt install vim lrzsz unzip wget net-tools tree bash-completion telnet -y

# 1.2 Disable swap
- kubeadm does not support running with swap enabled.
# Disable temporarily
swapoff -a
# Or disable permanently (comments out the swap entries in /etc/fstab; takes effect at the next boot)
sed -i '/swap/s/^/#/' /etc/fstab

# 1.3 Enable IPv4 forwarding and kernel tuning
# Load br_netfilter first so that the net.bridge.* keys exist, and keep it loaded across reboots
modprobe br_netfilter
echo br_netfilter > /etc/modules-load.d/k8s.conf
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system

# 1.4 Time synchronization
apt -y install ntpdate
ntpdate ntp.aliyun.com

### 2. Install Docker

# 2.1 Remove leftover Docker packages (skip if Docker was never installed)
for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done

# 2.2 Update packages
- Run the following commands to refresh the Ubuntu package index and upgrade the installed packages.
sudo apt update
sudo apt upgrade

# 2.3 Install Docker dependencies
- Docker on Ubuntu depends on a few packages; install them with the following command.
apt-get -y install ca-certificates curl gnupg lsb-release

# 2.4 Add Docker's official GPG key
- Run the following command to add Docker's official GPG key (fetched here from the Aliyun mirror).
# The command prints OK when it succeeds
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# 2.5 Add the Docker package repository
# Interactive: press Enter once when prompted
sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# 2.6 Install Docker
- Install Docker 20.10 with the following command; according to this guide, newer Docker releases are not compatible with Kubernetes 1.23.
apt install docker-ce=5:20.10.14~3-0~ubuntu-jammy docker-ce-cli=5:20.10.14~3-0~ubuntu-jammy containerd.io -y

# 2.7 Configure the user group (optional)
- By default only root and members of the docker group can run Docker commands. Adding the current user to the docker group avoids typing sudo every time.
- Note: log out and back in for the change to take effect.
sudo usermod -aG docker $USER

# 2.8 Install tools
apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# 2.9 Start Docker
systemctl start docker
systemctl enable docker

# 2.10 Configure Docker registry mirrors
vim /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://0c105db5188026850f80c001def654a0.mirror.swr.myhuaweicloud.com",
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://kfwkfulq.mirror.aliyuncs.com",
    "https://docker.rainbond.cc",
    "https://5tqw56kt.mirror.aliyuncs.com",
    "https://docker.1panel.live",
    "http://mirrors.ustc.edu.cn",
    "http://mirror.azure.cn",
    "https://hub.rat.dev",
    "https://docker.chenby.cn",
    "https://docker.hpcloud.cloud",
    "https://docker.m.daocloud.io",
    "https://docker.unsee.tech",
    "https://dockerpull.org",
    "https://dockerhub.icu",
    "https://proxy.1panel.live",
    "https://docker.1panel.top",
    "https://docker.1ms.run",
    "https://docker.ketches.cn"
  ],
  "insecure-registries": ["http://192.168.57.200:8099"]
}
# Restart Docker
systemctl daemon-reload
systemctl restart docker

### 3. Deploy the Kubernetes cluster with kubeadm

# 3.1 Configure the Kubernetes APT repository
- The Aliyun mirror is used here.
# Install prerequisite packages
apt-get install -y apt-transport-https ca-certificates curl
# Download the Kubernetes GPG key
curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg
# Add the GPG key to APT's key management
cat /usr/share/keyrings/kubernetes-archive-keyring.gpg | sudo apt-key add -
# Add the package repository
echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
# Refresh the package index
apt-get update

# 3.2 List the available Kubernetes versions
apt-cache madison kubeadm

# 3.3 Install kubeadm and the related tools
- kubectl: command-line management tool; kubeadm: cluster installation tool; kubelet: node agent that manages containers.
# Install Kubernetes 1.23: dockershim was removed in 1.24, so later releases no longer support Docker as the underlying container runtime out of the box
apt-get install -y kubelet=1.23.0-00 kubeadm=1.23.0-00 kubectl=1.23.0-00
# Hold the versions to prevent automatic upgrades
apt-mark hold kubelet kubeadm kubectl docker docker-ce docker-ce-cli
# Check the versions
kubelet --version
kubeadm version
kubectl version

# 3.4 Enable kubelet at boot
systemctl enable kubelet

kubeadm cluster initialization and Flannel network plugin installation (steps differ per node)
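Before running `kubeadm init` or `kubeadm join`, it can help to confirm that the base-environment steps actually took effect on every machine. The following is a minimal sketch, not part of the original procedure: the function names (`swap_is_off`, `fstab_has_no_swap`, `sysctl_is_enabled`, `preflight`) are hypothetical helpers, and each one takes the file to inspect as an argument so it can be tried against sample files.

```shell
# Preflight sketch (hypothetical helper names, not from the original guide).

# Succeeds when no swap area is active. /proc/swaps always starts with one
# header line; every further non-empty line is an active swap area.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$swaps_file" | grep -c .)" -eq 0 ]
}

# Succeeds when fstab has no uncommented swap entry, i.e. swap stays off
# after a reboot.
fstab_has_no_swap() {
  local fstab="${1:-/etc/fstab}"
  ! grep -Eq '^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]' "$fstab"
}

# Succeeds when the given /proc/sys file exists and contains 1.
sysctl_is_enabled() {
  local value_file="$1"
  [ -r "$value_file" ] && [ "$(tr -d '[:space:]' < "$value_file")" = "1" ]
}

# Runs all checks against the real system paths and reports what is missing.
preflight() {
  local rc=0
  swap_is_off || { echo "swap is still active"; rc=1; }
  fstab_has_no_swap || { echo "/etc/fstab still enables swap"; rc=1; }
  sysctl_is_enabled /proc/sys/net/ipv4/ip_forward \
    || { echo "net.ipv4.ip_forward is not 1"; rc=1; }
  # This file only exists while the br_netfilter module is loaded.
  sysctl_is_enabled /proc/sys/net/bridge/bridge-nf-call-iptables \
    || { echo "bridge-nf-call-iptables is not 1 (is br_netfilter loaded?)"; rc=1; }
  return "$rc"
}
```

Calling `preflight` on a node prints one line per failed check and returns non-zero if any check failed; it changes nothing on the system.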

# 4.1 Generate the initialization config file on the master
root@master:~# kubeadm config print init-defaults > init-config.yaml

# 4.2 Edit the initialization config file on the master
root@master:~# vim init-config.yaml
+++++++++++++++++++++++++++++++++++++++++++
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.221.21 # IP address of the master node
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: master # if you use a hostname, make sure it resolves; otherwise use the IP address
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # the default registry is unreachable from mainland China, so use a domestic mirror
kind: ClusterConfiguration
kubernetesVersion: 1.23.0 # Kubernetes version to deploy
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12 # Service subnet (cluster-internal network)
  podSubnet: 10.244.0.0/16 # added: Pod subnet; must match the network configured in the Pod network plugin below
scheduler: {}
+++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# 4.3 Fetch the required images on the master
# A prepackaged image bundle is available on the classroom LAN
wget http://192.168.57.200/Software/k8s-1.23.tar.xz
tar -xvf k8s-1.23.tar.xz
cd k8s-1.23/
# Load all images in one go
for img in *.tar; do docker load -i "$img"; done

Supplementary content:

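Alternatively, pull the images from the public network (use either this or the classroom LAN bundle, not both).

# List the images required for initialization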

root@master:~# kubeadm config images list --config=init-config.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.23.0

registry.aliyuncs.com/google_containers/pause:3.6

registry.aliyuncs.com/google_containers/etcd:3.5.1-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Pull the required images

root@master:~# kubeadm config images pull --config=init-config.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.23.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.6

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.1-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

# List the pulled images

root@master:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.23.0 e6bf5ddd4098 2 years ago 135MB

registry.aliyuncs.com/google_containers/kube-proxy v1.23.0 e03484a90585 2 years ago 112MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.23.0 37c6aeb3663b 2 years ago 125MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.23.0 56c5af1d00b5 2 years ago 53.5MB

registry.aliyuncs.com/google_containers/etcd 3.5.1-0 25f8c7f3da61 2 years ago 293MB

registry.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7 2 years ago 46.8MB

hello-world latest feb5d9fea6a5 2 years ago 13.3kB

registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12 2 years ago 683kB
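Comparing the two listings above by eye is error-prone, so the check can be scripted. The sketch below is an illustration rather than part of the original procedure: `missing_images` and `check_cluster_images` are hypothetical helper names, and it assumes `init-config.yaml` is in the current directory.

```shell
# Print every image from the "required" list that is absent from the
# "present" list. Both arguments are files with one repo:tag per line.
# grep -v exits 0 when at least one required image is missing.
missing_images() {
  local required="$1" present="$2"
  grep -vxFf "$present" "$required"
}

# Wrapper for the master node; it needs kubeadm and docker, so it is only
# defined here and must be called explicitly.
check_cluster_images() {
  local required present rc=0
  required="$(mktemp)"
  present="$(mktemp)"
  kubeadm config images list --config=init-config.yaml > "$required"
  docker images --format '{{.Repository}}:{{.Tag}}' > "$present"
  if missing_images "$required" "$present"; then
    echo "the images listed above are missing" >&2
    rc=1
  fi
  rm -f "$required" "$present"
  return "$rc"
}
```

Running `check_cluster_images` on the master prints nothing and returns 0 when every required image has been loaded or pulled.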

# 4.4 Initialize the cluster on the master
cd
root@master:~# kubeadm init --config=init-config.yaml
Note down the following two blocks from the end of the output:
#############################################################
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
#############################################################
kubeadm join 192.168.221.21:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:6e96cc6ec35a69175035a4a056c05df77c24399da83e394ff12b11768db419a3
#############################################################

# 4.5 On the master, copy the Kubernetes admin kubeconfig into the user's home directory
- Run the mkdir, cp and chown commands from the first block above.

# 4.6 Join the worker nodes to the cluster
- Copy the `kubeadm join` command printed at the end of the `master` initialization and run it on each node; no further configuration is needed.
root@node:~# kubeadm join 192.168.221.21:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:6e96cc6ec35a69175035a4a056c05df77c24399da83e394ff12b11768db419a3
[preflight] Running pre-flight checks
······
The output ends with:
Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# 4.7 Check the node status on the master
- No network plugin was configured when the master was initialized, so the nodes cannot communicate yet and all report `NotReady`. The nodes that joined through `kubeadm join` are nevertheless already visible on the master.
root@master:~# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master NotReady control-plane,master 101s v1.23.0
node1 NotReady <none> 27s v1.23.0
node2 NotReady <none> 21s v1.23.0

# 5.1 Install the Flannel network plugin on the master (Flannel creates an overlay network that spans the whole cluster so that Pods can communicate across nodes)
# Download the kube-flannel.yml file
root@master:~# wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
# If the download fails, create the file manually (its full content is given in the supplementary section further down)
# Install kube-flannel
root@master:~# kubectl apply -f kube-flannel.yml

# 5.2 Check the node status (this takes a little while)
root@master:~# kubectl get node
NAME STATUS ROLES AGE VERSION
master Ready control-plane,master 13m v1.23.0
node1 Ready <none> 12m v1.23.0
node2 Ready <none> 12m v1.23.0
# Check the status of all Pods
root@master:~# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-flannel kube-flannel-ds-pc7gw 1/1 Running 0 89s
kube-flannel kube-flannel-ds-xxcqj 1/1 Running 0 89s
kube-system coredns-6d8c4cb4d-fx6vd 1/1 Running 0 44m
kube-system coredns-6d8c4cb4d-pgwcc 1/1 Running 0 44m
kube-system etcd-master 1/1 Running 1 44m
kube-system kube-apiserver-master 1/1 Running 1 44m
kube-system kube-controller-manager-master 1/1 Running 1 44m
kube-system kube-proxy-2gghk 1/1 Running 0 44m
kube-system kube-proxy-qf26c 1/1 Running 0 20m
kube-system kube-scheduler-master 1/1 Running 1 44m
# Check the component status
root@master:~# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME STATUS MESSAGE ERROR
controller-manager Healthy ok
scheduler Healthy ok
etcd-0 Healthy {"health":"true","reason":""}

# 5.3 Enable kubectl command completion
apt install bash-completion -y
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc
#############################################################

## Adding a node to the cluster
# 1. Configure the hosts file
cat >> /etc/hosts << EOF
192.168.221.23 node2
EOF
# 2. Join the node to the cluster with kubeadm
# Generate a join command on the master (then run the printed command on the new node)
kubeadm token create --print-join-command
# Check on the master that the node joined successfully; it should show Ready
kubectl get node

## Removing a node from the cluster
# 1. Take the node out of service safely first (on the master)
kubectl cordon node2
kubectl drain node2 --ignore-daemonsets --delete-emptydir-data
# 2. Remove the node from the cluster (on the master)
kubectl delete node node2
kubectl get node
# 3. Clean up the removed node (on the worker node)
systemctl stop kubelet
kubeadm reset
rm -rf /etc/cni/net.d /var/lib/kubelet /var/lib/etcd

Supplementary content:
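As a supplement to the `kubectl get node` checks above, waiting for the nodes to become Ready can be scripted instead of rerunning the command by hand. This is a minimal sketch with hypothetical helper names (`not_ready_nodes`, `wait_for_nodes`); the 10-second polling interval and the default of 30 attempts are arbitrary assumptions.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the name
# of every node whose STATUS column is not exactly "Ready". A cordoned node
# ("Ready,SchedulingDisabled") is therefore reported as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Poll until every node is Ready or the attempts run out.
# Needs a working kubectl, so it is only defined here, not called.
wait_for_nodes() {
  local tries="${1:-30}" pending
  while [ "$tries" -gt 0 ]; do
    pending="$(kubectl get nodes --no-headers | not_ready_nodes)"
    [ -z "$pending" ] && return 0
    echo "still waiting for:" $pending
    sleep 10
    tries=$((tries - 1))
  done
  return 1
}
```

On the master, `wait_for_nodes 60` would poll for up to ten minutes after `kubectl apply -f kube-flannel.yml`.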

# If the download fails, you can also create the kube-flannel.yml file manually

root@master:~# vim kube-flannel.yml

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "EnableNFTables": false,
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1
        image: 192.168.57.200:8099/k8s-1.23/flannel-cni-plugin:v1.6.0-flannel1 # use the LAN registry image
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: docker.io/flannel/flannel:v0.26.1
        image: 192.168.57.200:8099/k8s-1.23/flannel:v0.26.1 # use the LAN registry image
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: docker.io/flannel/flannel:v0.26.1
        image: 192.168.57.200:8099/k8s-1.23/flannel:v0.26.1 # use the LAN registry image
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
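The `Network` value in `net-conf.json` has to match the `podSubnet` set in `init-config.yaml`, and a mismatch is easy to overlook when the manifest is typed in by hand. A minimal sketch of a consistency check follows; the helper names (`pod_subnet`, `flannel_network`, `subnets_match`) are hypothetical, and the text matching is a deliberately simple assumption that each key appears once per file, not a real YAML parser.

```shell
# Print the podSubnet value from a kubeadm config file, ignoring any
# trailing comment on the line.
pod_subnet() {
  sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# Print the "Network" value from the net-conf.json section embedded in a
# kube-flannel.yml file.
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeeds only when both values were found and are identical.
subnets_match() {
  local pods flannel
  pods="$(pod_subnet "$1")"
  flannel="$(flannel_network "$2")"
  [ -n "$pods" ] && [ "$pods" = "$flannel" ]
}
```

For example, `subnets_match init-config.yaml kube-flannel.yml || echo "Pod subnet mismatch"` can be run on the master before `kubectl apply -f kube-flannel.yml`.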

Final step: install KubePi

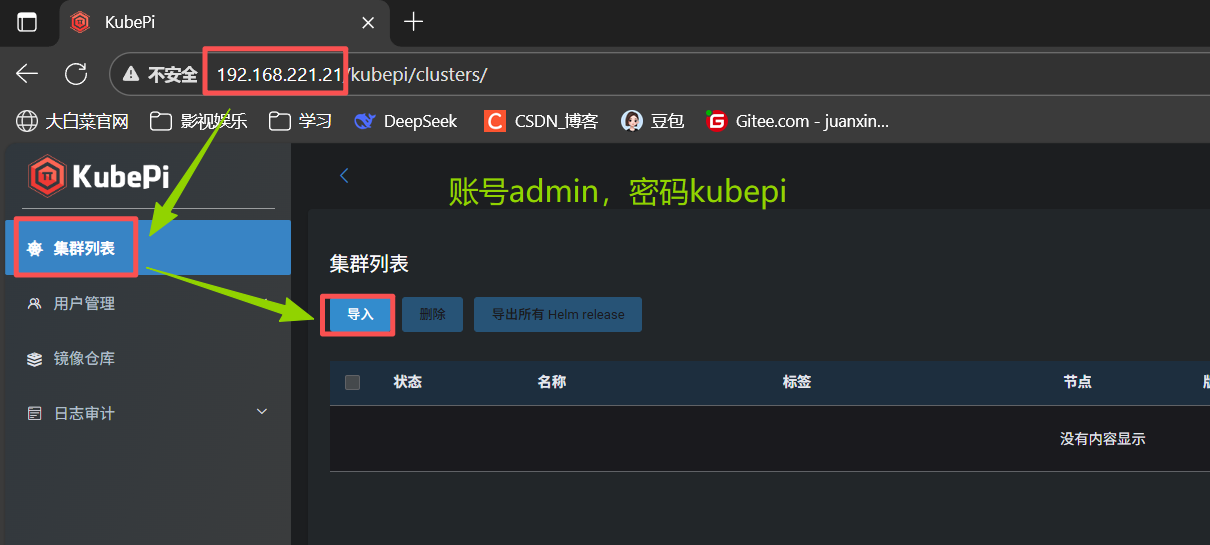

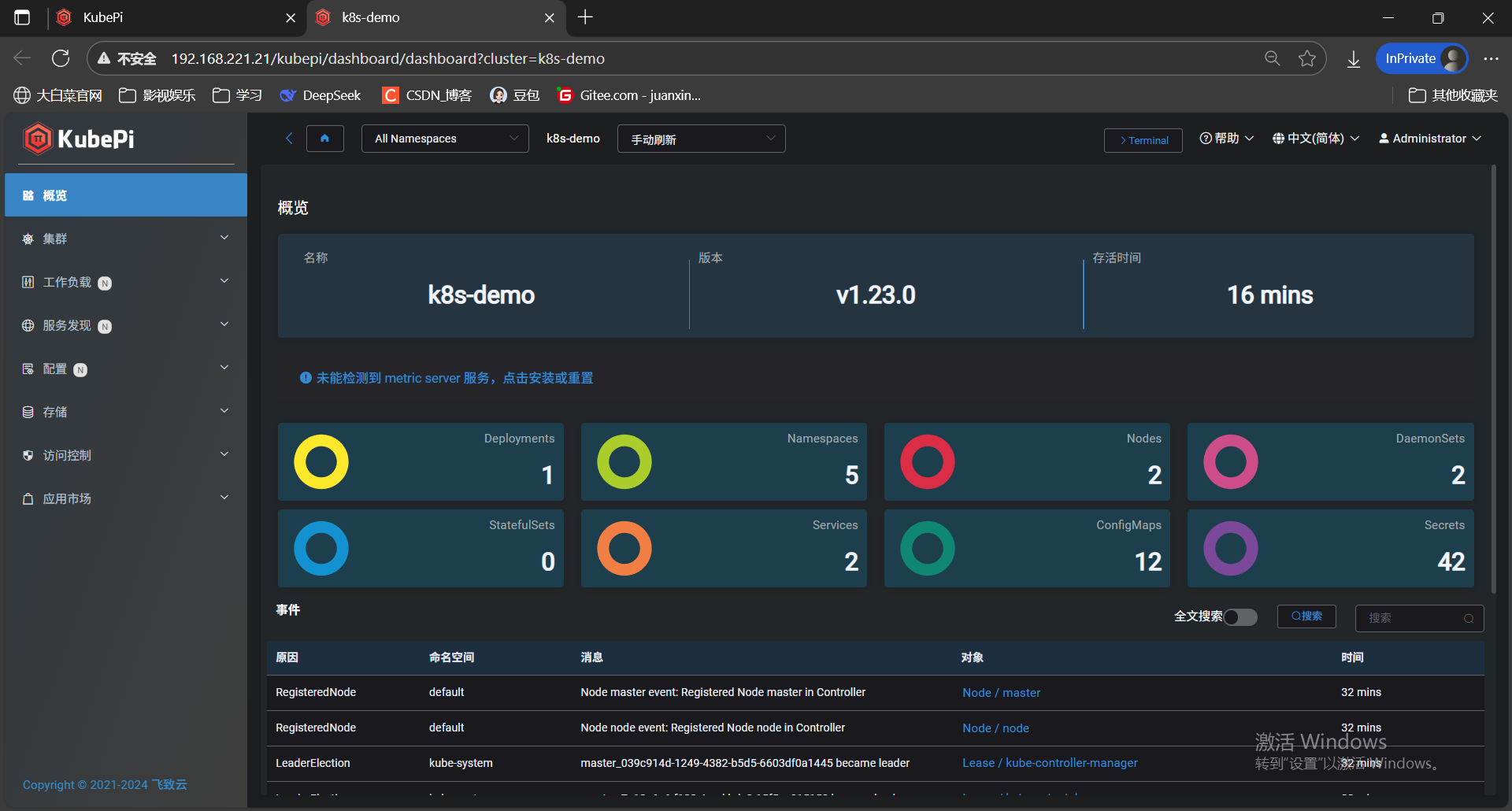

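KubePi is a modern Kubernetes dashboard. It lets administrators import multiple Kubernetes clusters and, through access control, assign permissions on specific clusters and namespaces to specific users. It also lets developers manage and troubleshoot the applications running in a Kubernetes cluster, which helps them cope with the complexity of Kubernetes.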

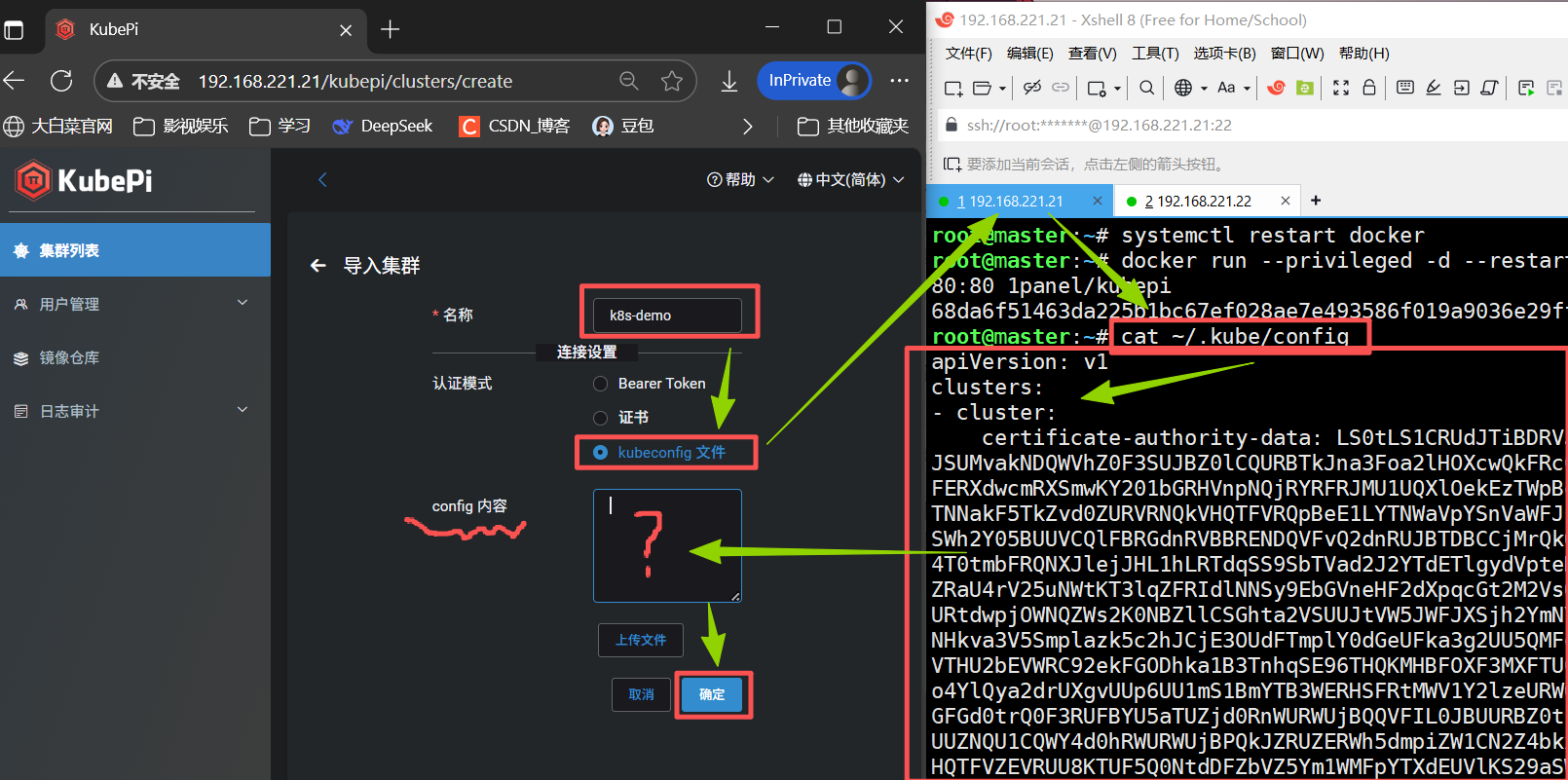

# 6.1 Quick start

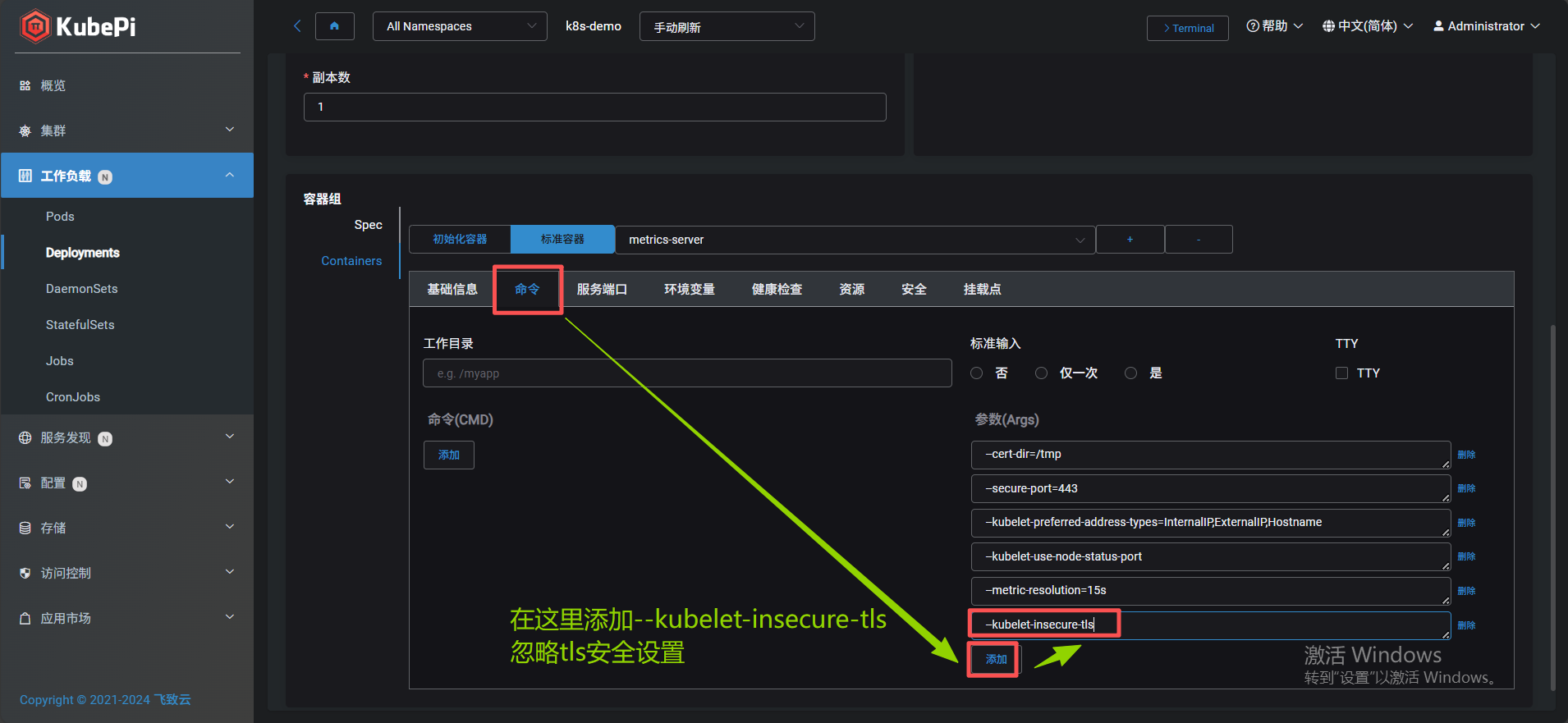

docker run --privileged -d --restart=unless-stopped -p 80:80 1panel/kubepi
# If this fails, restart Docker and run the command again
systemctl restart docker
Open 192.168.221.21 in a browser to reach KubePi
# Username: admin
# Password: kubepi
# View the kubeconfig file on the master
root@master:~# cat ~/.kube/config
# Skip kubelet TLS verification
--kubelet-insecure-tls

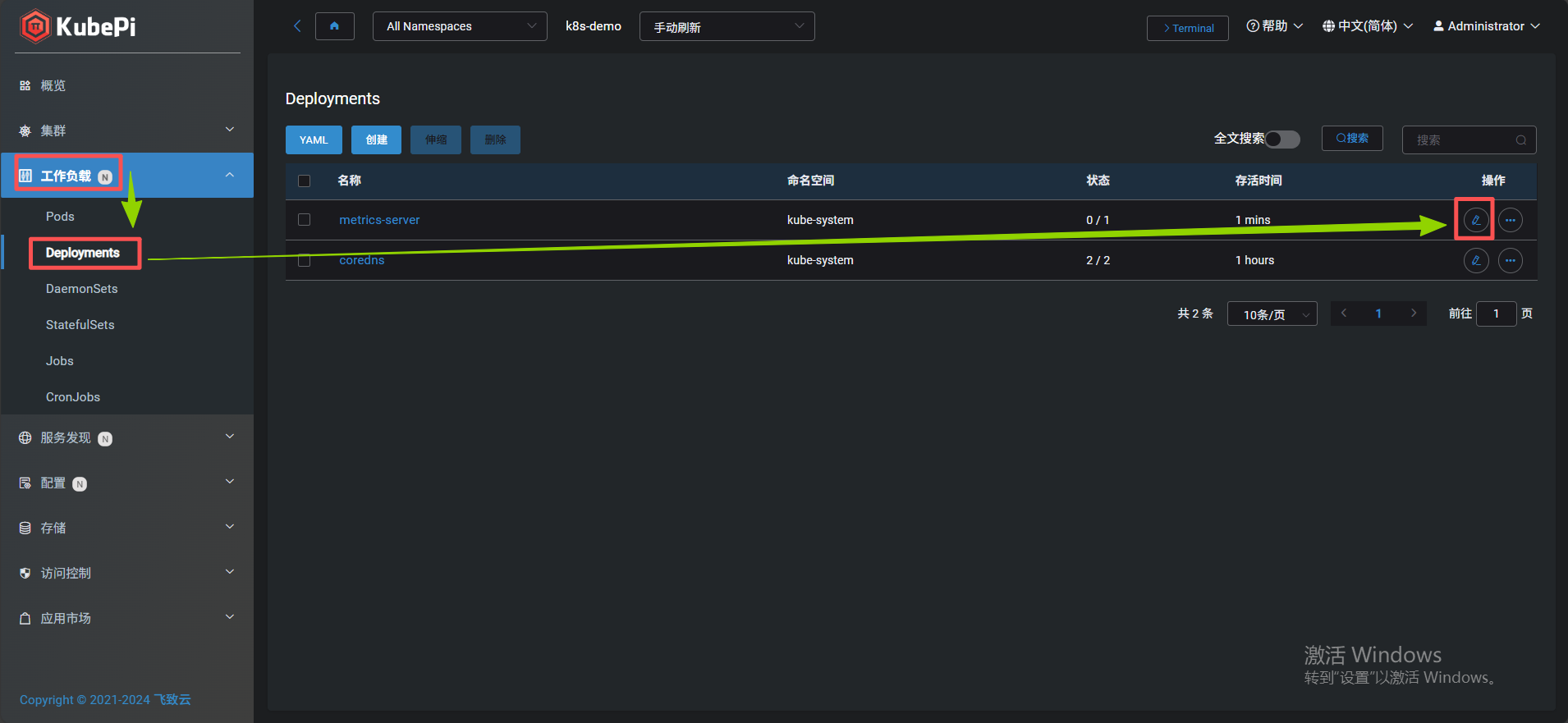

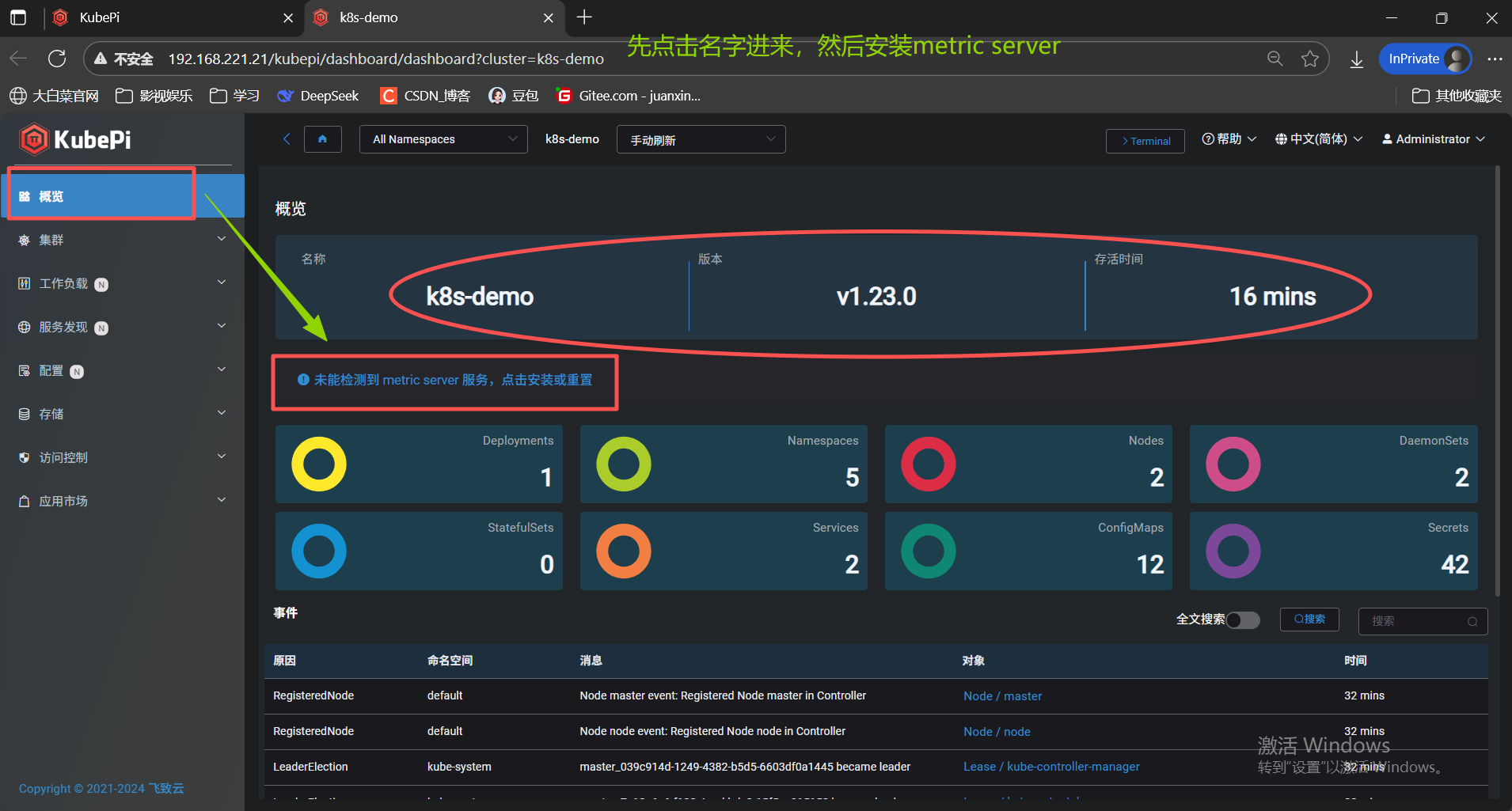

Click the blue warning to install metrics server

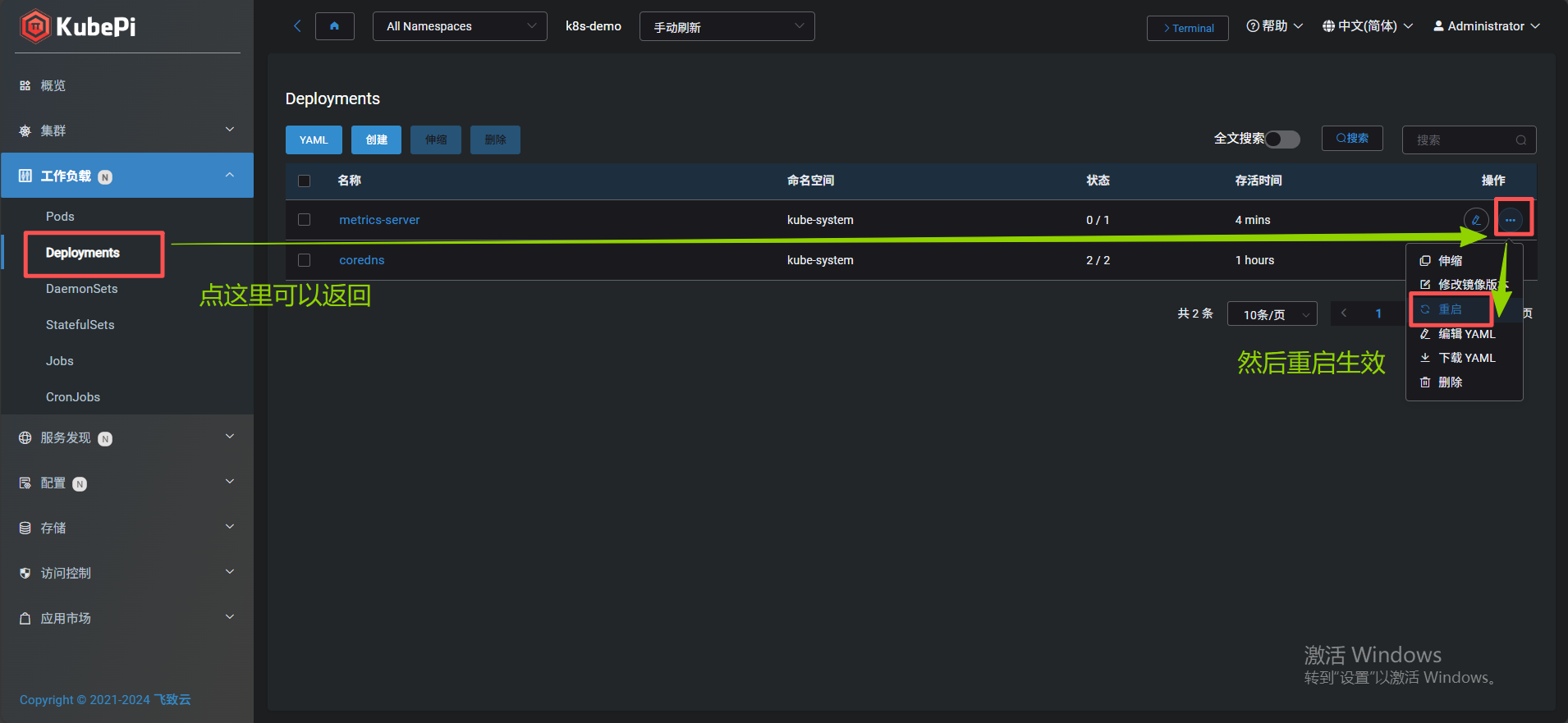

Modify the configuration
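If the `--kubelet-insecure-tls` argument needs to be added outside the KubePi UI, a JSON patch with `kubectl` is one possible route. The sketch below rests on assumptions that this guide does not confirm: that metrics server runs as a Deployment named `metrics-server` in the `kube-system` namespace, and that its first container already has an `args` list. The helper names are hypothetical.

```shell
# Build a JSON patch that appends one argument to the args list of the
# first container in a Deployment's Pod template.
append_arg_patch() {
  printf '[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"%s"}]' "$1"
}

# Assumption: Deployment "metrics-server" in namespace "kube-system".
# Adjust both names if the KubePi installer used different ones.
# Needs a working kubectl, so it is only defined here, not called.
allow_insecure_kubelet_tls() {
  kubectl -n kube-system patch deployment metrics-server \
    --type=json -p "$(append_arg_patch --kubelet-insecure-tls)"
}
```

Running `append_arg_patch --kubelet-insecure-tls` on its own prints the patch so it can be reviewed before `allow_insecure_kubelet_tls` applies it.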