[Developing a Local Chat Page for Large Language Models]

Local LLM chat page

This page builds a chat interface for locally hosted models using gradio and langchain_community.

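Install the Python modules

pip install gradio

pip install langchain-community

The code on this page imports langchain_community, which is provided by the langchain-community package.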

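Check whether a module is installed

pip list | findstr "module-name"

For example: pip list | findstr "gradio"

findstr is a Windows command; on Linux or macOS, use grep instead: pip list | grep "gradio"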
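The same check can also be done from Python, which avoids the difference between findstr and grep. The sketch below uses the standard library's importlib.metadata; the helper name installed_version is made up for this example, and the two package names are simply the ones the code on this page imports.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is missing.

    `installed_version` is a hypothetical helper name used for illustration.
    """
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Distribution names as they would be passed to `pip install`
    for name in ("gradio", "langchain-community"):
        found = installed_version(name)
        print(f"{name}: {found if found else 'not installed'}")
```

Running it prints one line per package, showing either the version number or "not installed".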

Full-featured version

import gradio as gr
from langchain_community.llms import Ollama
import time
import json
from datetime import datetime


def msgs(model, msg, temperature=0.7, max_tokens=512):
    """Call an Ollama model and return its reply."""
    try:
        llm = Ollama(
            base_url="http://host.docker.internal:11434",
            model=model,
            temperature=temperature,
            num_predict=int(max_tokens),  # the slider may pass a float
        )
        response = llm.invoke(msg)
        return response
    except Exception as e:
        return f"Error: {str(e)}"

# Build the Gradio interface
with gr.Blocks(title="LLM Chat Page") as demo:
    gr.Markdown("# 🤖 LLM Chat Assistant")
    gr.Markdown("Chat with locally deployed large language models; several models are available")

    # Stores the chat history
    chat_history = gr.State([])

    with gr.Row():
        with gr.Column(scale=1):
            gr.Markdown("### ⚙️ Settings")
            # Model selection dropdown
            model_choice = gr.Dropdown(
                choices=["deepseek-v3.1:671b-cloud", "gpt-oss:20b", "llama3.2-vision:latest", "qwen3:8b", "deepseek-r1:la", "qwen2.5vl:latest"],
                value="llama3.2-vision:latest",
                label="🧠 Model",
            )
            # Generation parameters
            temperature = gr.Slider(minimum=0.0, maximum=1.0, value=0.7, step=0.1, label="🌡️ Temperature (creativity)")
            max_tokens = gr.Slider(minimum=100, maximum=2048, value=512, step=100, label="📝 Max length")
            system_prompt = gr.Textbox(
                label="⚙️ System prompt",
                placeholder="Optional: give the model context or instructions",
                lines=13,
            )
            with gr.Row(scale=1):
                # Clear button
                clear_btn = gr.Button("🗑️ Clear chat", variant="secondary")
                # Export button
                export_btn = gr.Button("💾 Export chat", variant="secondary")

        with gr.Column(scale=3):
            # Chat transcript
            chatbot = gr.Chatbot(label="Conversation", height=500, show_label=True)
            # User input box; with lines > 1, Gradio submits on Shift+Enter
            msg = gr.Textbox(
                label="Message",
                placeholder="Type your question... (Shift+Enter to send, Enter for a new line)",
                lines=3,
            )
            with gr.Row():
                submit_btn = gr.Button("Send", variant="primary")
                stop_btn = gr.Button("Stop", variant="stop")
            # Exported file (hidden until an export is made)
            export_file = gr.File(label="Exported file", visible=False)

    def respond(message, chat_history, model, temperature, max_tokens, system_prompt):
        """Handle a user message and stream the reply into the chat."""
        chat_history = chat_history or []
        if message == "":
            # respond is a generator, so outputs must be yielded, not returned
            yield "", chat_history, None
            return

        # Prepend the system prompt, if any, to the message
        if system_prompt:
            full_message = f"System: {system_prompt}\n\nUser: {message}"
        else:
            full_message = message

        # Add the user message to the chat history as a (user, bot) pair.
        # This is the Chatbot tuple format; newer Gradio releases deprecate it
        # in favor of type="messages".
        chat_history.append((message, None))
        yield "", chat_history, None

        try:
            # Call the model to generate a reply
            response = msgs(model, full_message, temperature, max_tokens)
            # Reveal the reply one character at a time for a typing effect
            bot_message = ""
            for char in response:
                bot_message += char
                chat_history[-1] = (message, bot_message)
                yield "", chat_history, None
                time.sleep(0.01)  # controls the typing speed
        except Exception as e:
            error_msg = f"Error: {str(e)}"
            chat_history[-1] = (message, error_msg)
            yield "", chat_history, None

    def clear_history():
        """Clear the chat history."""
        return [], []

    def export_chat(chat_history):
        """Export the chat history to a JSON file."""
        if not chat_history:
            return None
        # Bundle the chat history with a timestamp
        export_data = {
            "timestamp": datetime.now().isoformat(),
            "chat_history": chat_history,
        }
        # Generate the file name
        filename = f"chat_export_{datetime.now().strftime('%Y%m%d_%H%M%S')}.json"
        # Write the file
        with open(filename, "w", encoding="utf-8") as f:
            json.dump(export_data, f, ensure_ascii=False, indent=2)
        # export_file starts hidden, so reveal it together with the new file
        return gr.update(value=filename, visible=True)

    # Bind events
    submit_event = submit_btn.click(
        respond,
        [msg, chatbot, model_choice, temperature, max_tokens, system_prompt],
        [msg, chatbot, export_file],
    )
    enter_event = msg.submit(
        respond,
        [msg, chatbot, model_choice, temperature, max_tokens, system_prompt],
        [msg, chatbot, export_file],
    )
    # The Stop button cancels a reply that is still being displayed
    stop_btn.click(None, None, None, cancels=[submit_event, enter_event])
    clear_btn.click(clear_history, None, [chatbot, chat_history])
    export_btn.click(export_chat, [chatbot], [export_file])

if __name__ == "__main__":
    # To read the port from an environment variable instead (requires import os):
    # port = int(os.environ.get("GRADIO_SERVER_PORT", 7861))
    demo.launch(server_name="localhost", server_port=7878, share=False)
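The character-by-character display in respond can be looked at in isolation. The sketch below pulls that loop into a standalone generator so it can be tried without Gradio or Ollama. The name typewriter and the delay parameter are assumptions made for this example, not part of the code above. Note that this is only a display effect: llm.invoke returns the complete reply before the first character is shown, so it is not true token streaming.

```python
import time
from typing import Iterator


def typewriter(text: str, delay: float = 0.0) -> Iterator[str]:
    """Yield progressively longer prefixes of `text`, one character at a time.

    `typewriter` is a hypothetical helper that mirrors the loop in respond().
    """
    partial = ""
    for char in text:
        partial += char
        yield partial
        if delay:
            time.sleep(delay)  # pause between characters to mimic typing


if __name__ == "__main__":
    # Each frame is what the chat window would show at that moment
    for frame in typewriter("Hello", delay=0.01):
        print(frame)
```

Inside a Gradio handler, each yielded prefix would replace the bot half of the last chat entry, which is what respond does with bot_message.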

## Full-featured version: result

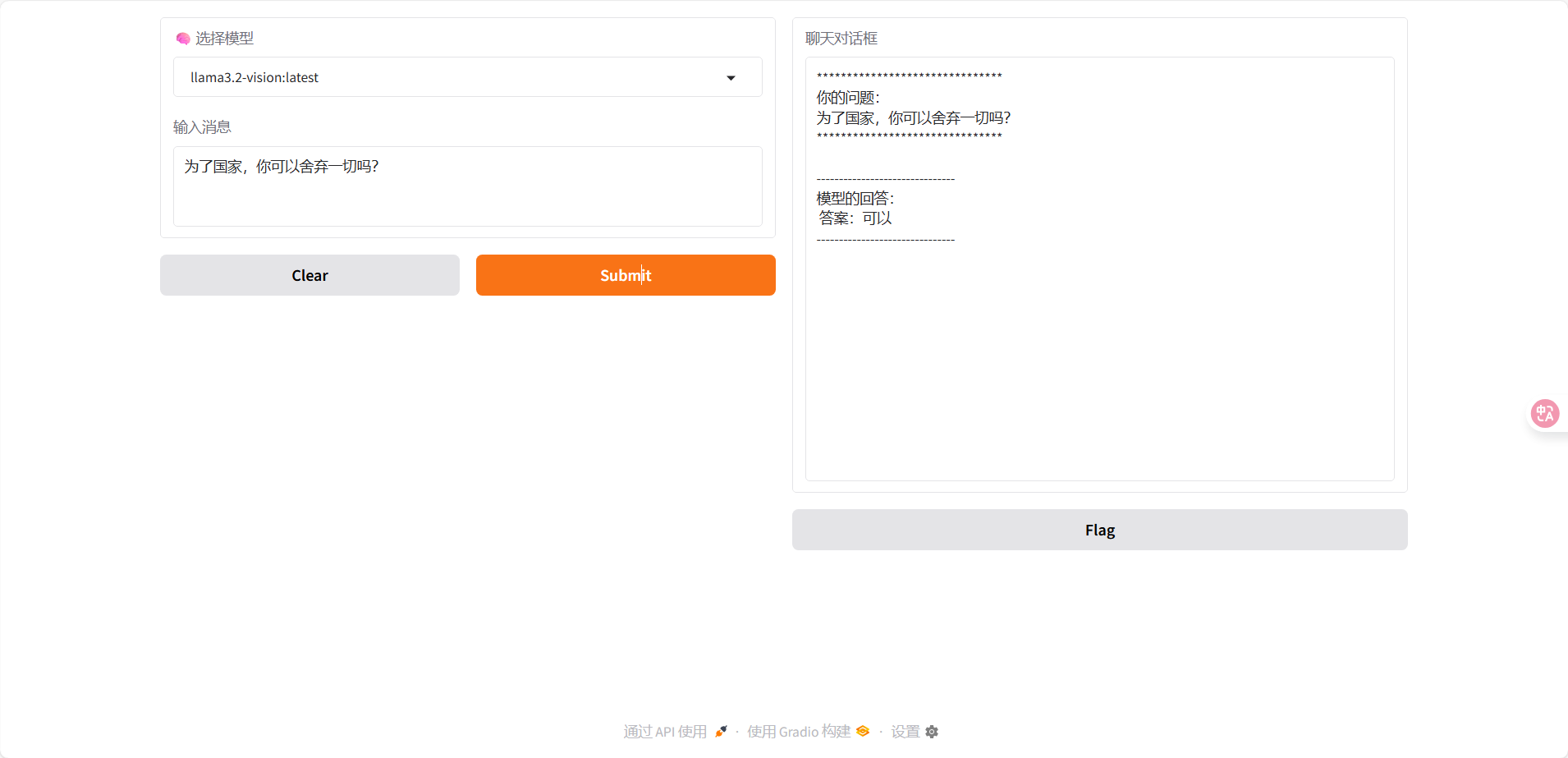

Simple version

import gradio as gr
from langchain_community.llms import Ollama


def msgs(model, msg, temperature=0.7, max_tokens=512):
    """Call an Ollama model and return its reply."""
    try:
        llm = Ollama(
            base_url="http://host.docker.internal:11434",
            model=model,
            temperature=temperature,
            num_predict=max_tokens,
        )
        response = llm.invoke(msg)
        # Plain text: the output gr.Textbox does not render HTML tags
        return f"Your question: {msg}\nModel's answer: {response}"
    except Exception as e:
        return f"Error: {str(e)}"


interface = gr.Interface(
    fn=msgs,
    inputs=[
        gr.Dropdown(
            choices=["deepseek-v3.1:671b-cloud", "gpt-oss:20b", "llama3.2-vision:latest", "qwen3:8b", "deepseek-r1:la", "qwen2.5vl:latest"],
            value="llama3.2-vision:latest",
            label="🧠 Model",
        ),
        gr.Textbox(label="Message", lines=3, placeholder="Type your question"),
    ],
    outputs=gr.Textbox(label="Chat window", lines=20),
)

if __name__ == "__main__":
    interface.launch(server_port=8081)
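Both versions hard-code the model names in the dropdown. As an optional alternative, the list could be read from the Ollama server itself. The sketch below assumes the server exposes its GET /api/tags endpoint at the same base_url used above and that the response body has a "models" list whose entries carry a "name" field. The function names model_names and fetch_model_names are made up for this example.

```python
import json
from urllib.request import urlopen


def model_names(payload: dict) -> list:
    """Extract model names from a parsed /api/tags response body.

    Assumes the shape {"models": [{"name": "..."}, ...]}.
    """
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def fetch_model_names(base_url: str = "http://host.docker.internal:11434",
                      timeout: float = 5.0) -> list:
    """Ask the Ollama server which models are installed locally."""
    with urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        print(fetch_model_names())
    except OSError as exc:
        # The server may be unreachable; fall back to a hard-coded list instead
        print(f"Could not reach Ollama: {exc}")
```

The returned list could then be passed as choices to gr.Dropdown, keeping the hard-coded list as a fallback when the server cannot be reached.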

Simple version: result

Link to the Gradio usage guide

Link to Gradio