Interacting with HDFS Using the Java API

1. Environment

Ubuntu

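2. Installing Eclipse

1. Download the Eclipse installation package on the host and mount (transfer) it to the virtual machine

For detailed steps, see: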

https://blog.csdn.net/2401_86886401/article/details/151230102?spm=1001.2014.3001.5501

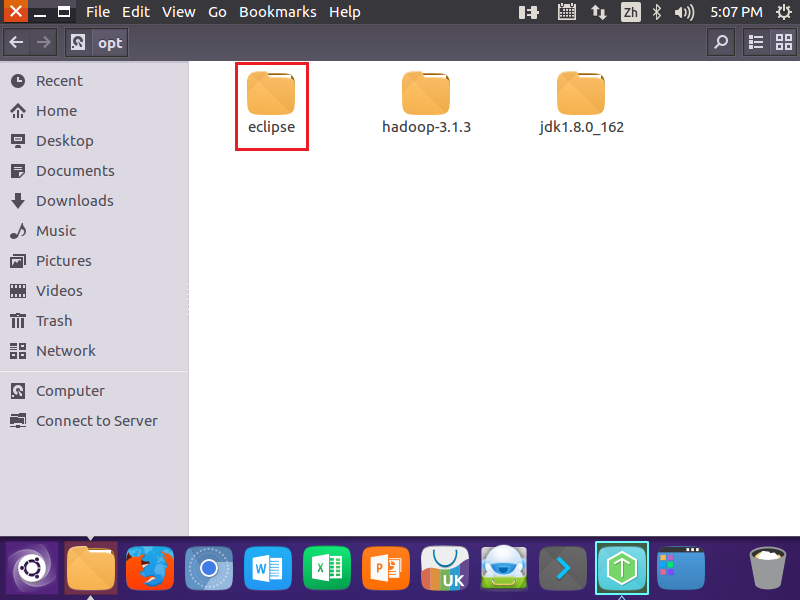

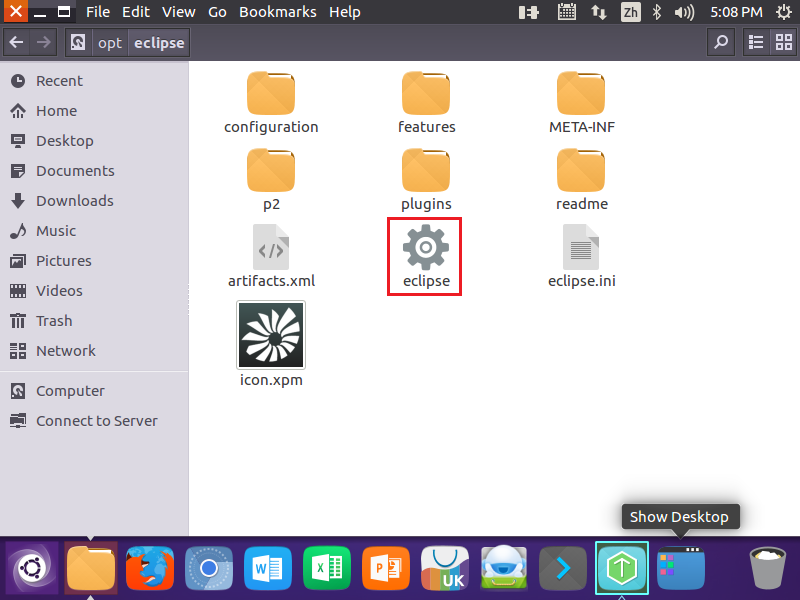

2. Extract the package and check that Eclipse starts

1. Extract

sudo tar -zxvf eclipse-4.7.0-linux.gtk.x86_64.tar.gz -C /opt/

// Extract the installation package into /opt/

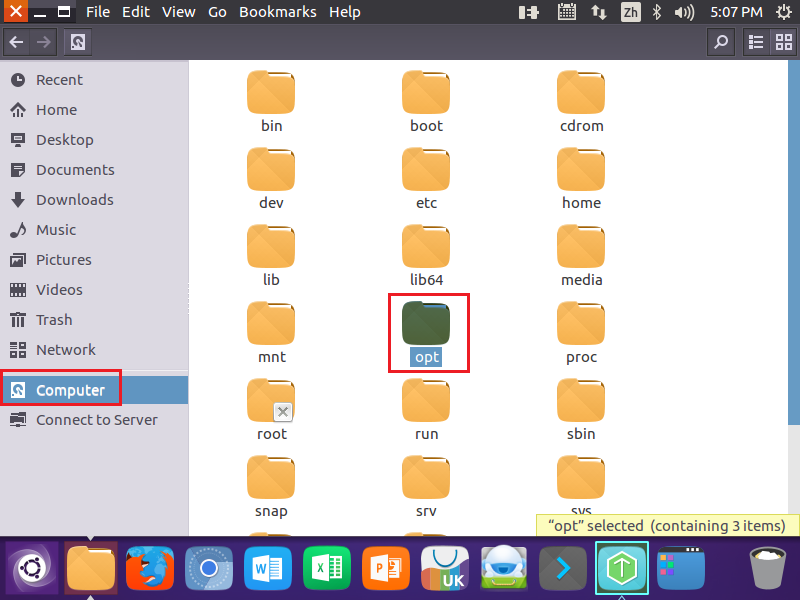

2. Locate the extracted files in the file manager

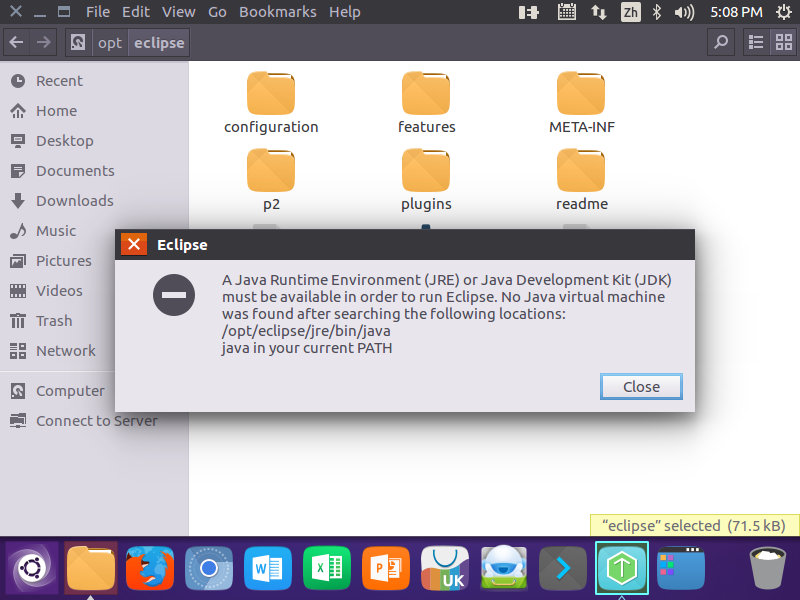

3. Problem: Eclipse cannot find a Java virtual machine

Solution:

cd /opt/eclipse

// Change to the Eclipse installation directory

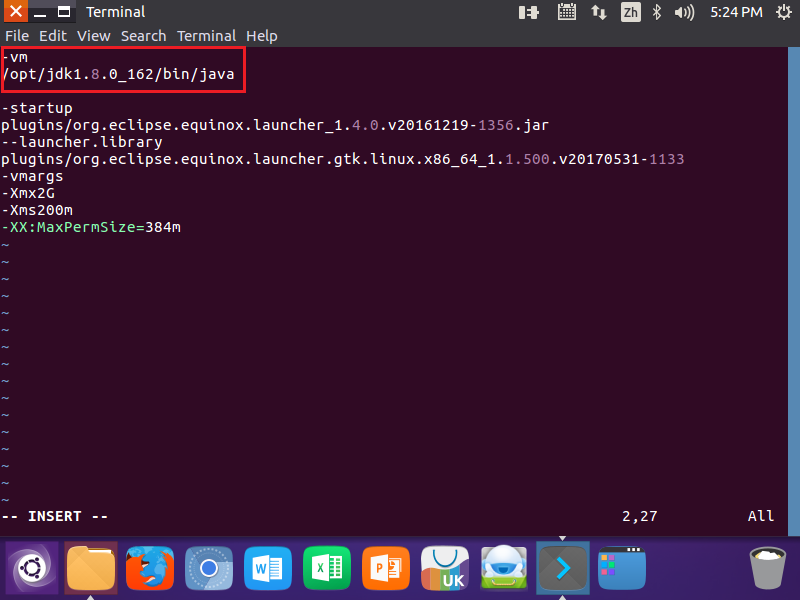

sudo vim eclipse.ini

// Edit the file

-vm

/opt/jdk1.8.0_162/bin/java

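// Add the Java path to eclipse.ini (make sure it matches your own JDK location). The -vm option and its path must be on separate lines and must appear before -vmargs.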

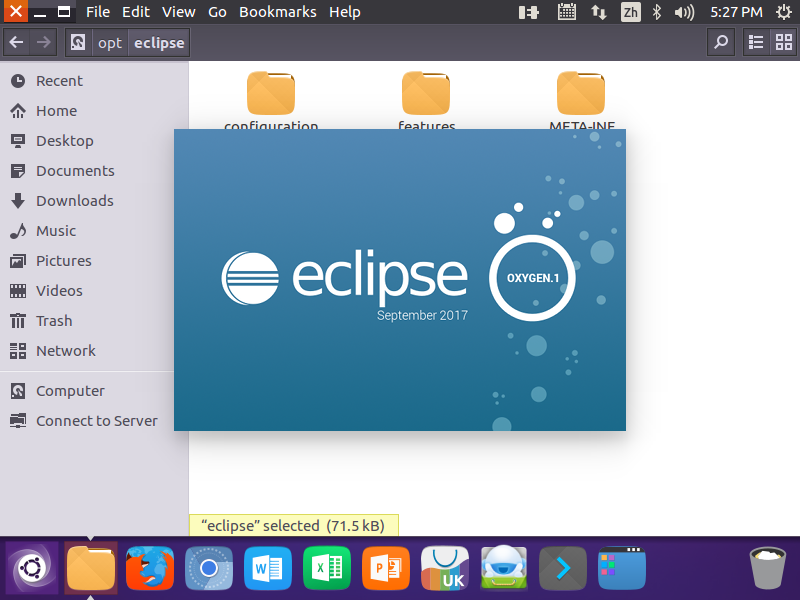
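If you are unsure which path to put after -vm, one option is to ask a working JVM where its launcher lives. The sketch below is only an illustration (the class name `JvmPathHint` is hypothetical); it prints the two lines in the form eclipse.ini expects. On JDK 8 the reported path usually ends in `jre/bin/java`, which is a different but equally valid launcher inside the same JDK.

```java
import java.io.File;

/** Prints the launcher path of the JVM that runs this program (illustrative helper). */
final class JvmPathHint {
    /** Returns <java.home>/bin/java for the running JVM. */
    static String javaLauncherPath() {
        String javaHome = System.getProperty("java.home");
        return new File(new File(javaHome, "bin"), "java").getPath();
    }

    public static void main(String[] args) {
        // These two lines have the same shape as the -vm entry in eclipse.ini.
        System.out.println("-vm");
        System.out.println(javaLauncherPath());
    }
}
```

Compile and run it from a terminal with the JDK you intend Eclipse to use, then compare the output with the entry in eclipse.ini.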

Reopen Eclipse. If the screen shown below appears, the problem is solved:

3. Developing and Debugging HDFS Java Programs with Eclipse

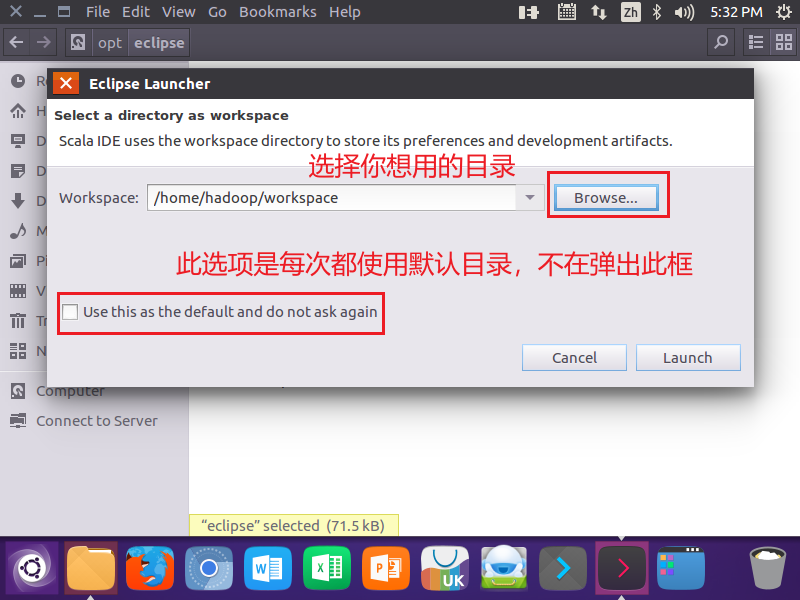

1. Set the workspace

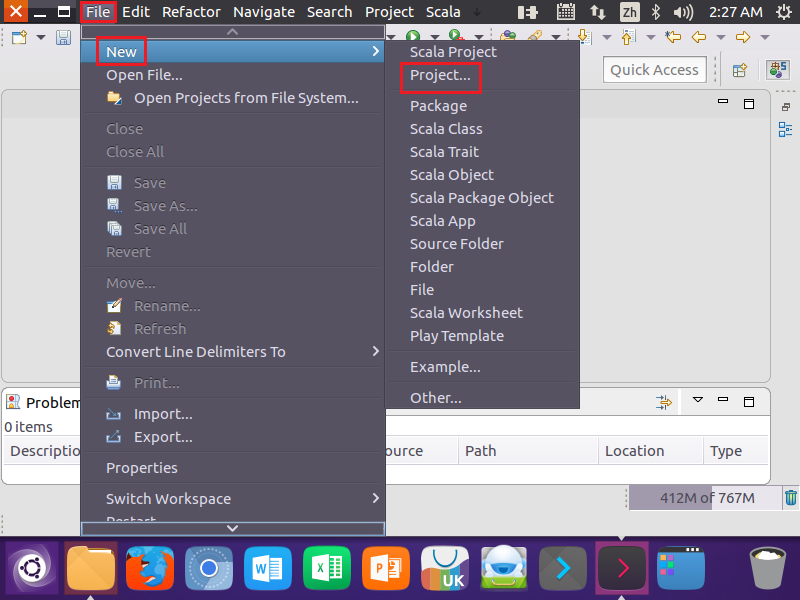

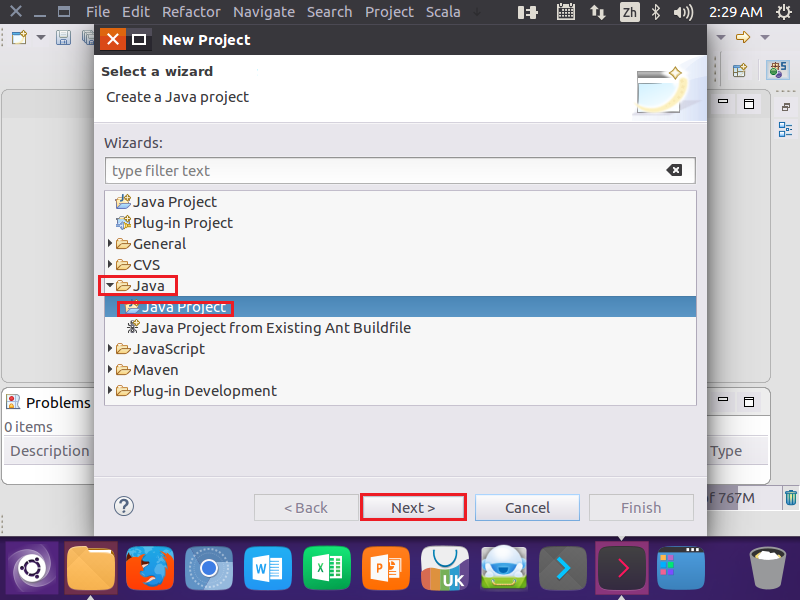

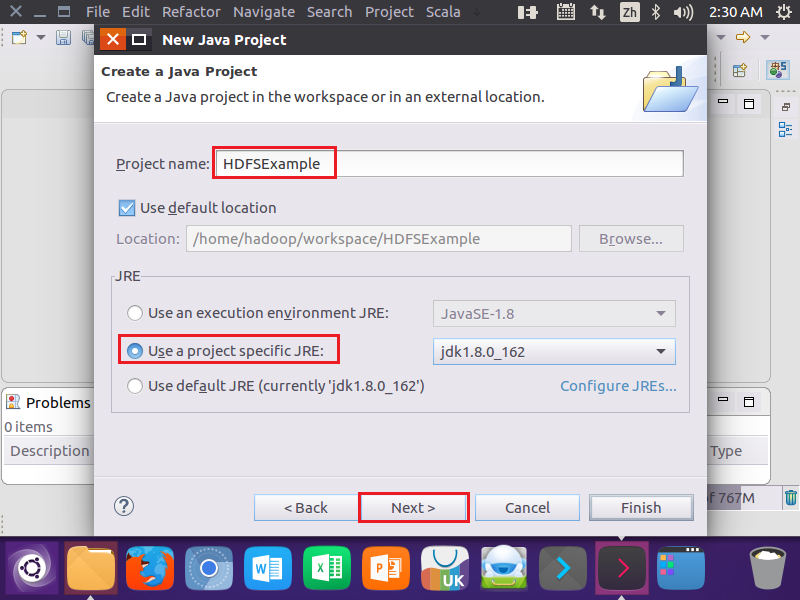

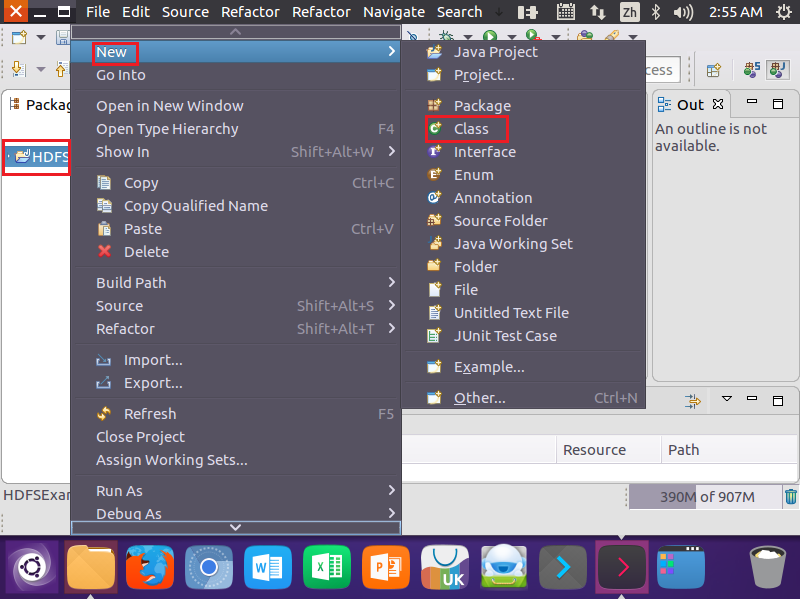

2. Create a Java project

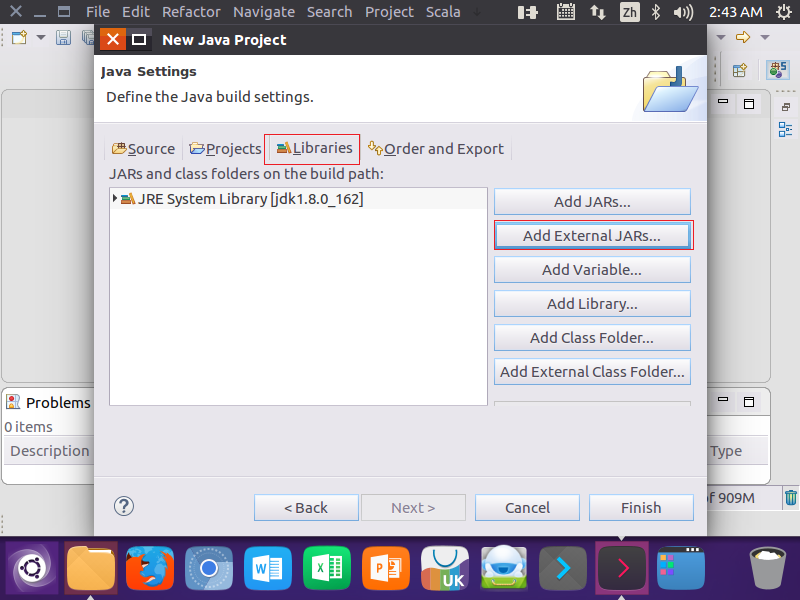

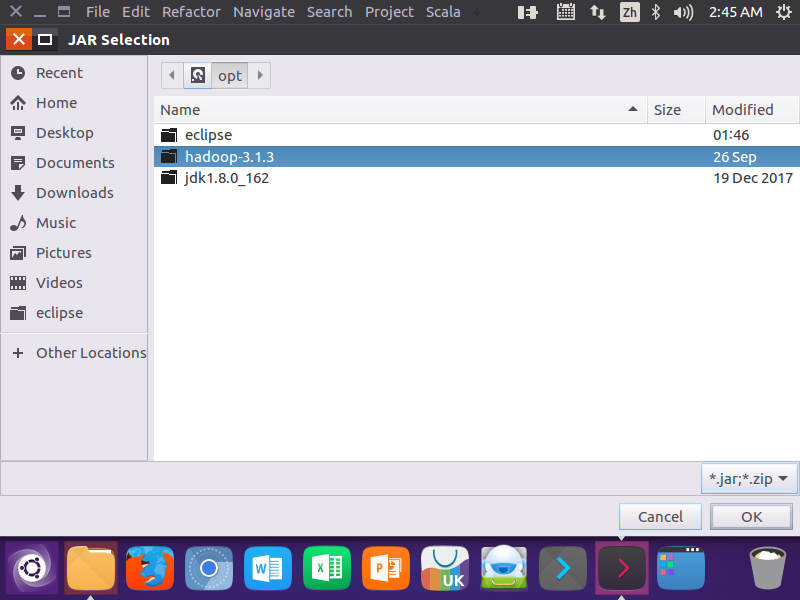

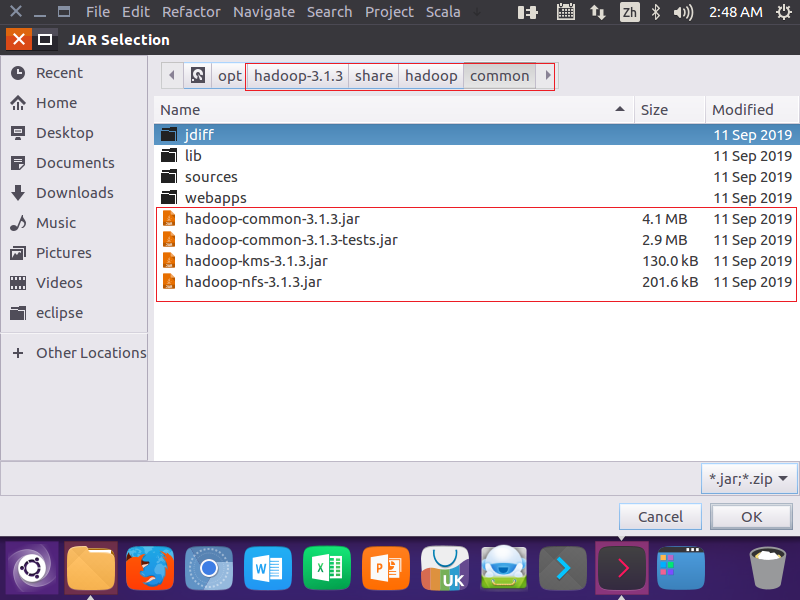

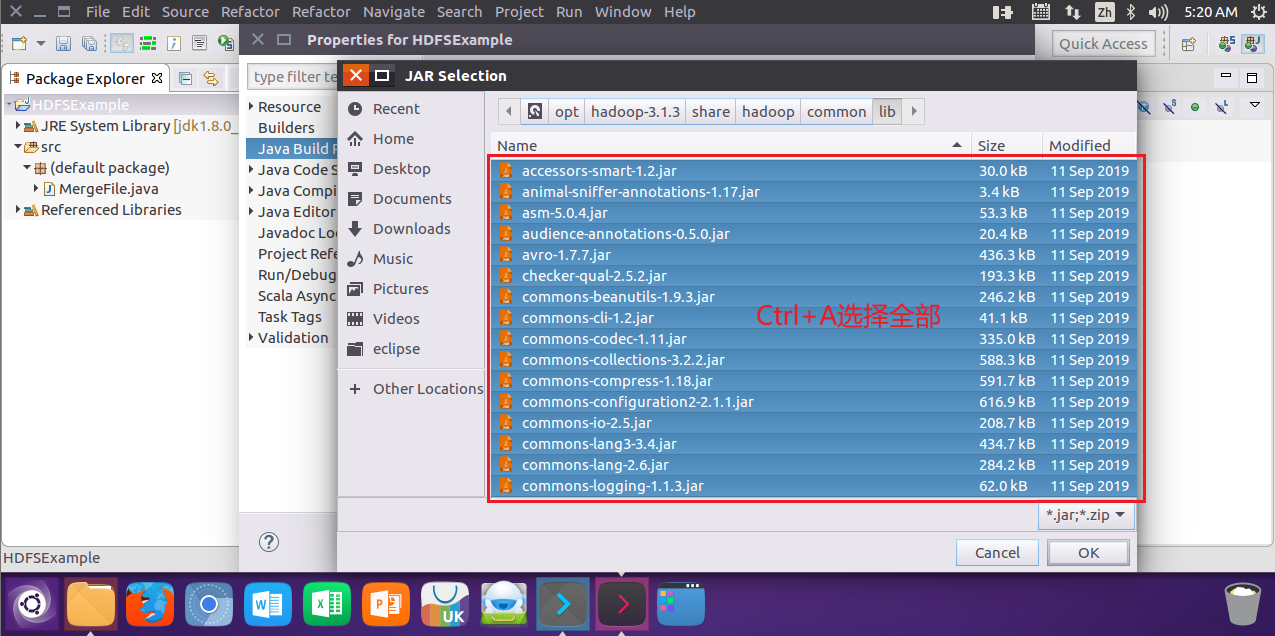

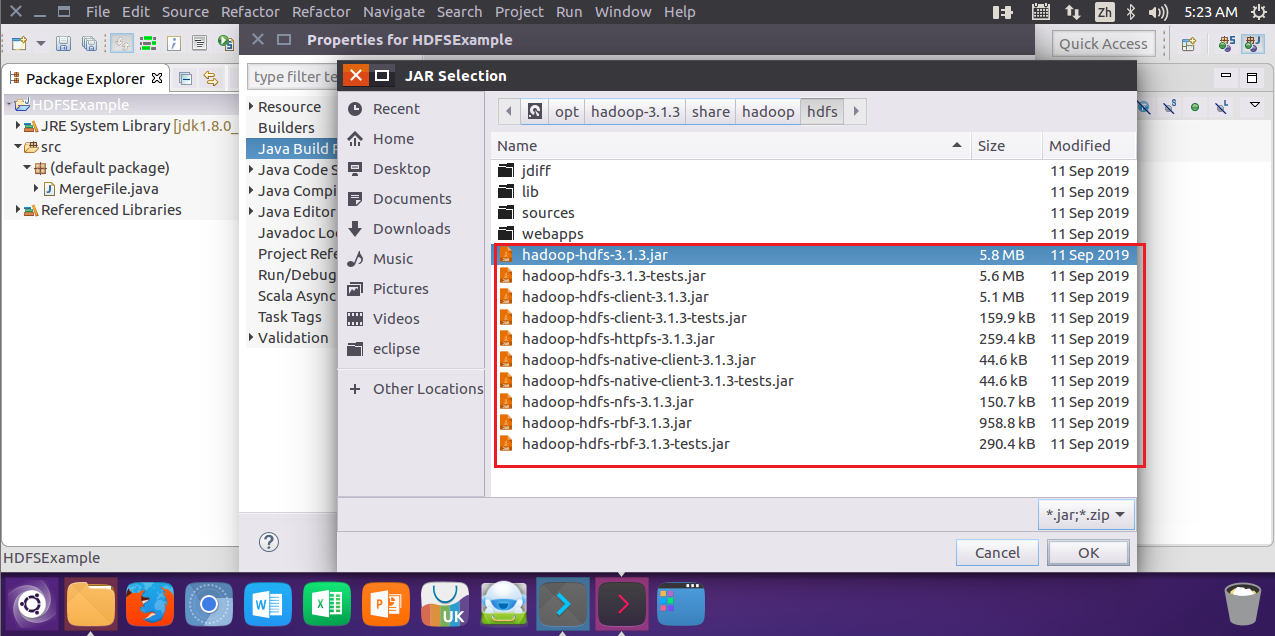

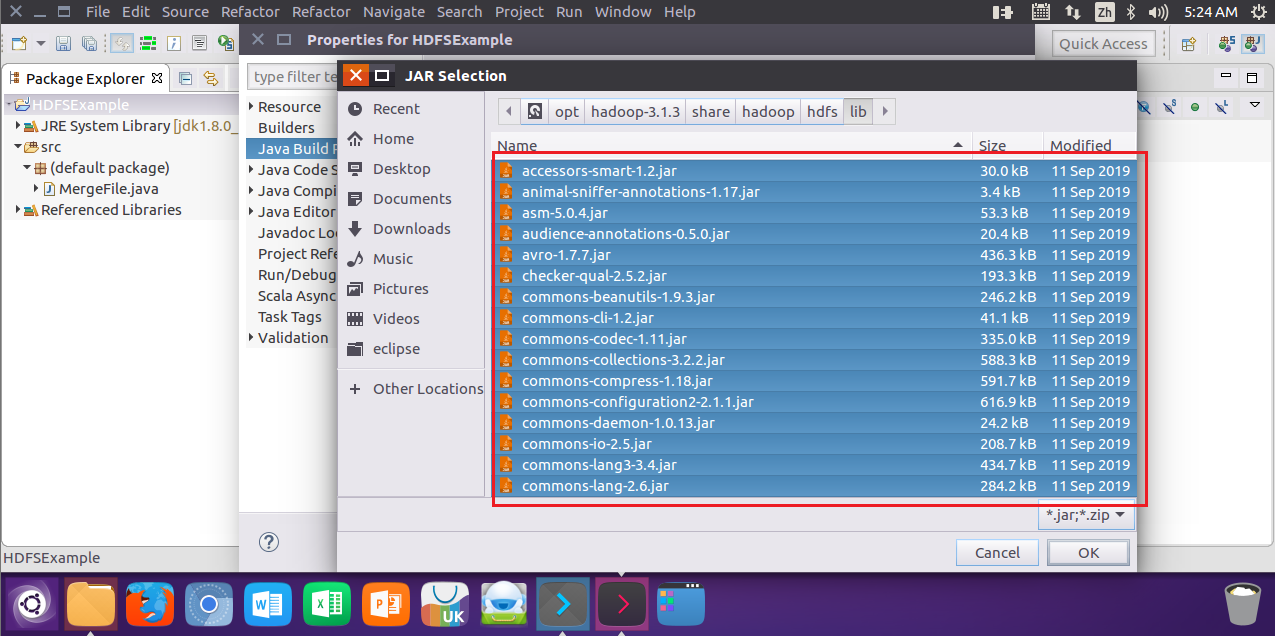

3. Add the required JAR packages to the project

Select your Hadoop directory (use your own installation path)

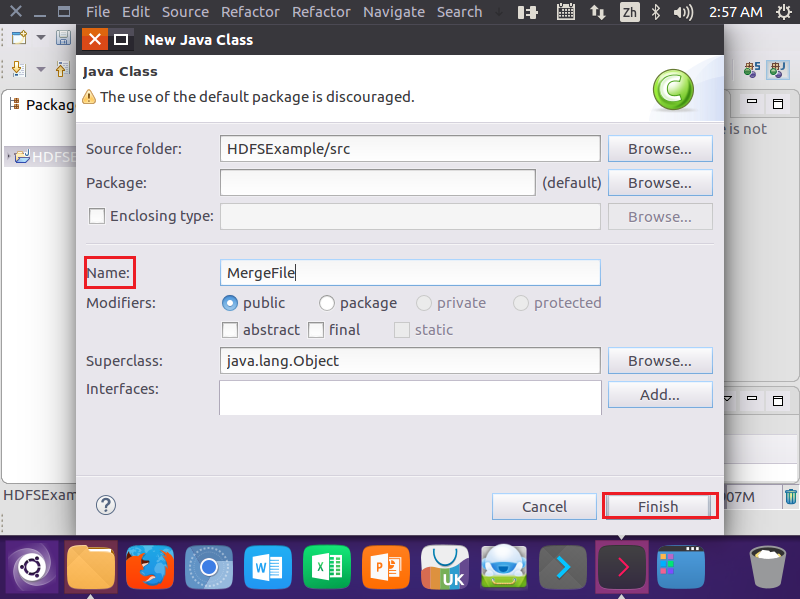

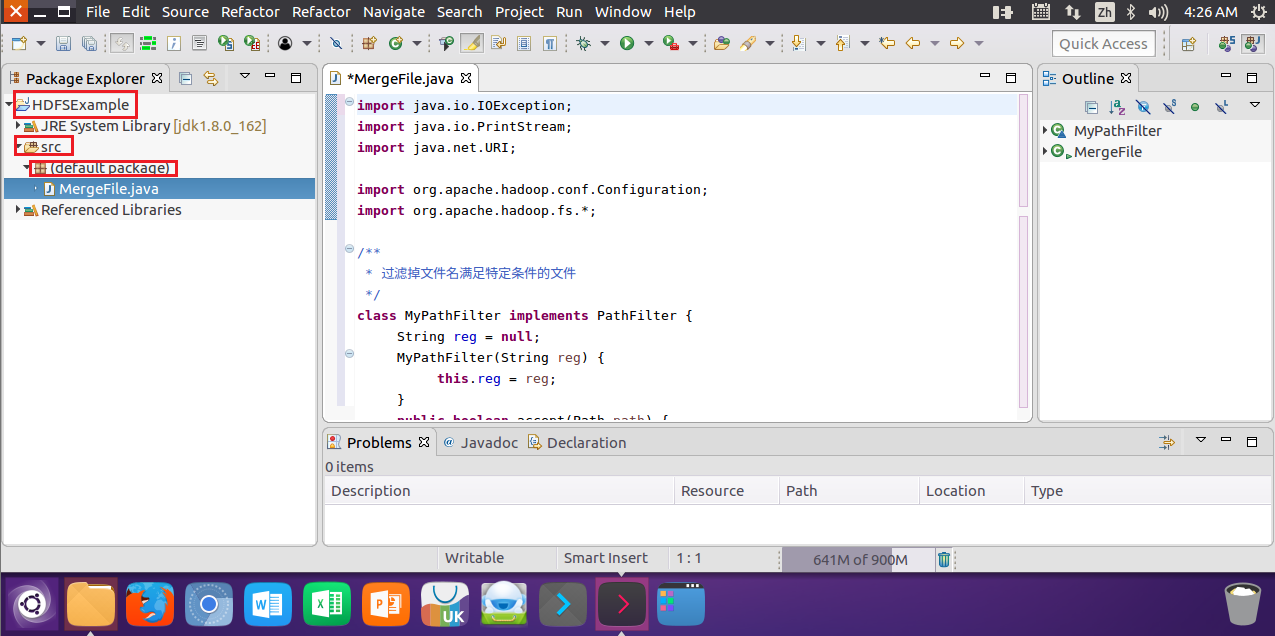

4. Write the Java application

Open the source file

Enter the following code

import java.io.IOException;
import java.io.PrintStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;

/**
 * Filters out files whose names match a given pattern.
 */
class MyPathFilter implements PathFilter {
    String reg = null;

    MyPathFilter(String reg) {
        this.reg = reg;
    }

    public boolean accept(Path path) {
        // Accept only paths that do NOT match the regular expression
        if (!(path.toString().matches(reg)))
            return true;
        return false;
    }
}

/**
 * Merges files in HDFS using FSDataOutputStream and FSDataInputStream.
 */
public class MergeFile {
    Path inputPath = null;  // path of the directory that holds the files to merge
    Path outputPath = null; // path of the output file

    public MergeFile(String input, String output) {
        this.inputPath = new Path(input);
        this.outputPath = new Path(output);
    }

    public void doMerge() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://localhost:9000");
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        FileSystem fsSource = FileSystem.get(URI.create(inputPath.toString()), conf);
        FileSystem fsDst = FileSystem.get(URI.create(outputPath.toString()), conf);
        // Filter out files with the .abc suffix in the input directory
        FileStatus[] sourceStatus = fsSource.listStatus(inputPath,
                new MyPathFilter(".*\\.abc"));
        FSDataOutputStream fsdos = fsDst.create(outputPath);
        PrintStream ps = new PrintStream(System.out);
        // Read each remaining file and write its contents to the same output file
        for (FileStatus sta : sourceStatus) {
            // Print the path, size, and permissions of each file whose suffix is not .abc
            System.out.print("Path: " + sta.getPath() + "  Size: " + sta.getLen()
                    + "  Permission: " + sta.getPermission() + "  Content: ");
            FSDataInputStream fsdis = fsSource.open(sta.getPath());
            byte[] data = new byte[1024];
            int read = -1;
            while ((read = fsdis.read(data)) > 0) {
                ps.write(data, 0, read);
                fsdos.write(data, 0, read);
            }
            fsdis.close();
        }
        ps.close();
        fsdos.close();
    }

    public static void main(String[] args) throws IOException {
        MergeFile merge = new MergeFile(
                "hdfs://localhost:9000/user/hadoop/",
                "hdfs://localhost:9000/user/hadoop/merge.txt");
        merge.doMerge();
    }
}

5. Compile and run the program
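Before starting HDFS, it can help to see how the hard-coded URIs in MergeFile break down, since the host and port in them need to match the NameNode address set through `fs.defaultFS`. The following is a small sketch using only `java.net.URI` from the standard library; the class name `UriParts` is hypothetical and not part of the tutorial's program.

```java
import java.net.URI;

/** Splits an HDFS-style URI into its parts (illustrative helper). */
final class UriParts {
    /** Returns a one-line summary of scheme, host, port, and path. */
    static String describe(String uriText) {
        URI uri = URI.create(uriText);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort()
                + " path=" + uri.getPath();
    }

    public static void main(String[] args) {
        // The same output URI that MergeFile.main passes to the constructor.
        System.out.println(describe("hdfs://localhost:9000/user/hadoop/merge.txt"));
    }
}
```

If your NameNode listens on a different host or port, the URIs in `main` and the `fs.defaultFS` value would need to change together.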

1. Before compiling, make sure Hadoop is running

start-dfs.sh

// Start Hadoop (HDFS)

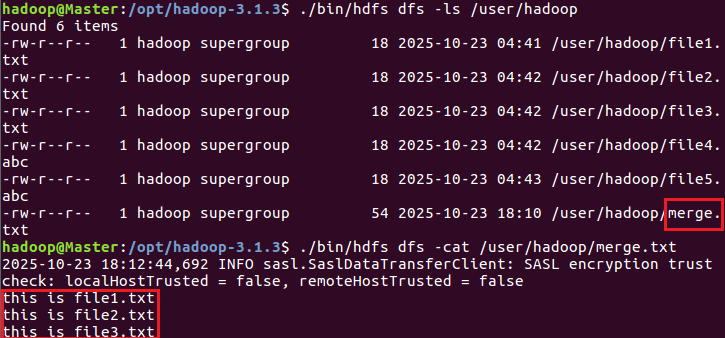

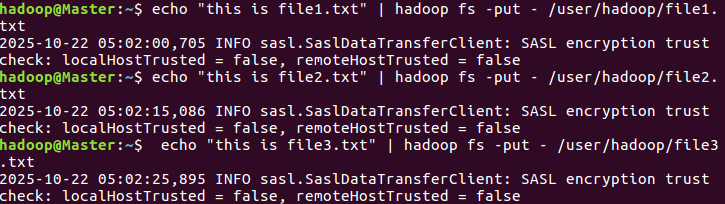

2. Before compiling, make sure that file1.txt, file2.txt, file3.txt, file4.abc, and file5.abc exist in the /user/hadoop directory on HDFS and that each file has content

echo "this is file1.txt" | hadoop fs -put - /user/hadoop/file1.txt

echo "this is file2.txt" | hadoop fs -put - /user/hadoop/file2.txt

echo "this is file3.txt" | hadoop fs -put - /user/hadoop/file3.txt

// Create the files and write content to them

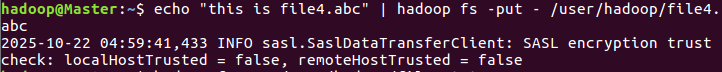

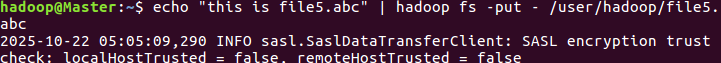

echo "this is file4.abc" | hadoop fs -put - /user/hadoop/file4.abc

echo "this is file5.abc" | hadoop fs -put - /user/hadoop/file5.abc

// Create the files and write content to them

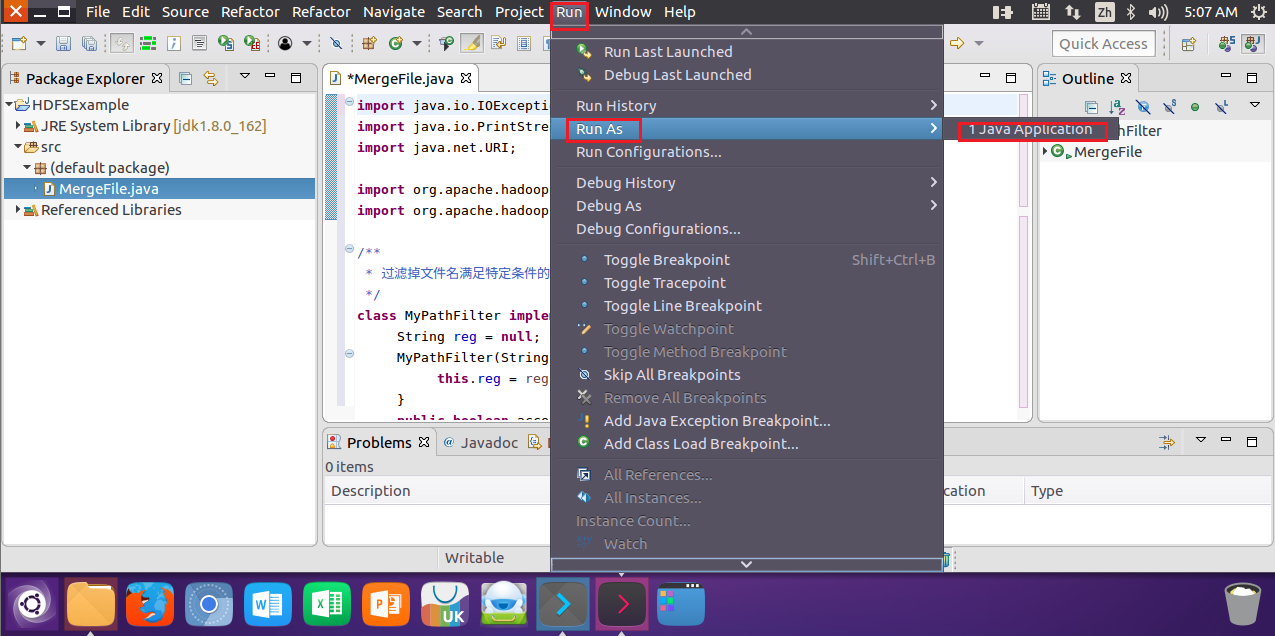

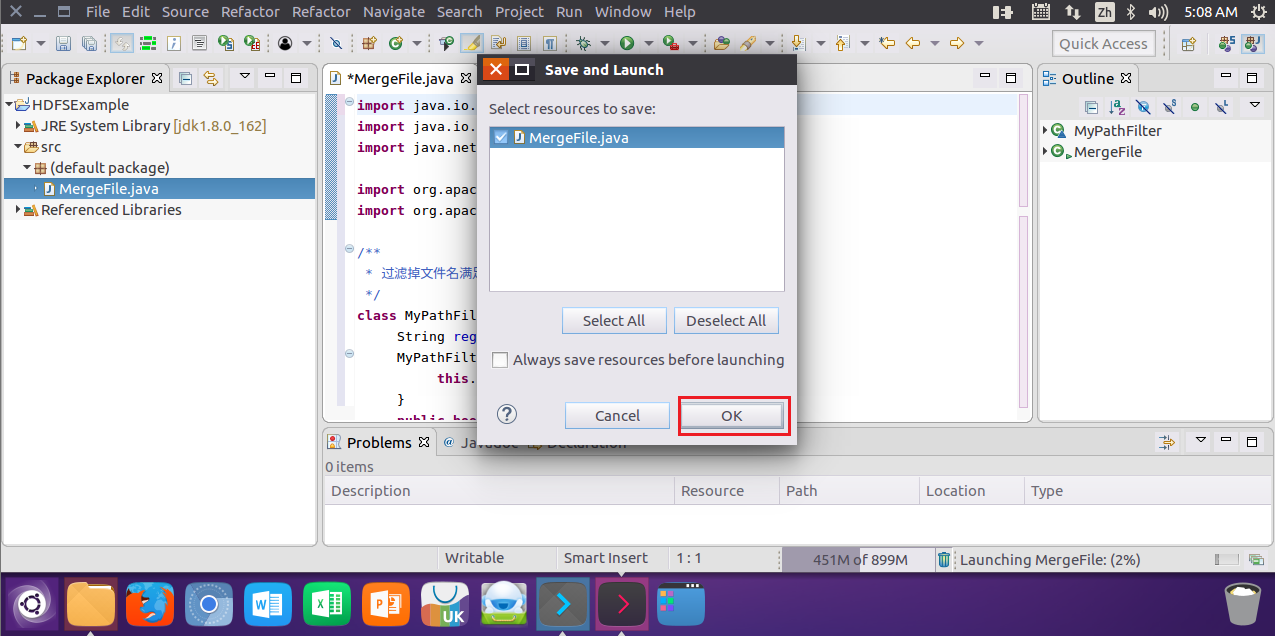
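With these five files in place, the filter-and-copy logic of MergeFile can be sanity-checked locally without a cluster. The sketch below is a Hadoop-free stand-in, not the tutorial's program: the class `MergeSketch` and its in-memory "files" are assumptions made for illustration, and the map's iteration order stands in for whatever order the HDFS directory listing returns. It applies the same regular expression `.*\.abc` and the same 1024-byte copy loop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

/** Local, Hadoop-free sketch of the filter-then-merge logic (hypothetical helper). */
final class MergeSketch {
    /** Mirrors MyPathFilter.accept: keep a path only if it does NOT match the regex. */
    static boolean accept(String path, String reg) {
        return !path.matches(reg);
    }

    /** Copies a stream in 1024-byte chunks, like the loop in doMerge(). */
    static void copy(InputStream in, OutputStream out) throws IOException {
        byte[] data = new byte[1024];
        int read;
        while ((read = in.read(data)) > 0) {
            out.write(data, 0, read);
        }
    }

    /** Concatenates the contents of all accepted entries, in map iteration order. */
    static String merge(Map<String, String> files, String reg) {
        ByteArrayOutputStream merged = new ByteArrayOutputStream();
        try {
            for (Map.Entry<String, String> entry : files.entrySet()) {
                if (!accept(entry.getKey(), reg)) {
                    continue; // skipped, e.g. file4.abc and file5.abc
                }
                byte[] bytes = entry.getValue().getBytes(StandardCharsets.UTF_8);
                try (InputStream in = new ByteArrayInputStream(bytes)) {
                    copy(in, merged);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(merged.toByteArray(), StandardCharsets.UTF_8);
    }

    /** In-memory stand-ins for the five files created with echo and hadoop fs -put. */
    static Map<String, String> sampleFiles() {
        Map<String, String> files = new LinkedHashMap<>();
        files.put("/user/hadoop/file1.txt", "this is file1.txt\n");
        files.put("/user/hadoop/file2.txt", "this is file2.txt\n");
        files.put("/user/hadoop/file3.txt", "this is file3.txt\n");
        files.put("/user/hadoop/file4.abc", "this is file4.abc\n");
        files.put("/user/hadoop/file5.abc", "this is file5.abc\n");
        return files;
    }

    public static void main(String[] args) {
        System.out.print(merge(sampleFiles(), ".*\\.abc"));
    }
}
```

Only the three .txt entries pass the filter here, which is the behaviour the real program is expected to show against HDFS.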

3.运行

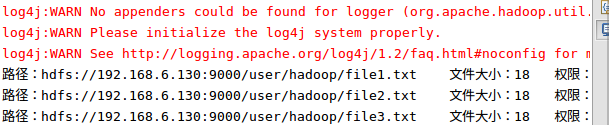

4.结果

6.应用程序的部署

1.创建myapp目录(在Hadoop安装目录下)

cd /opt/hadoop-3.1.3

mkdir myapp![]()

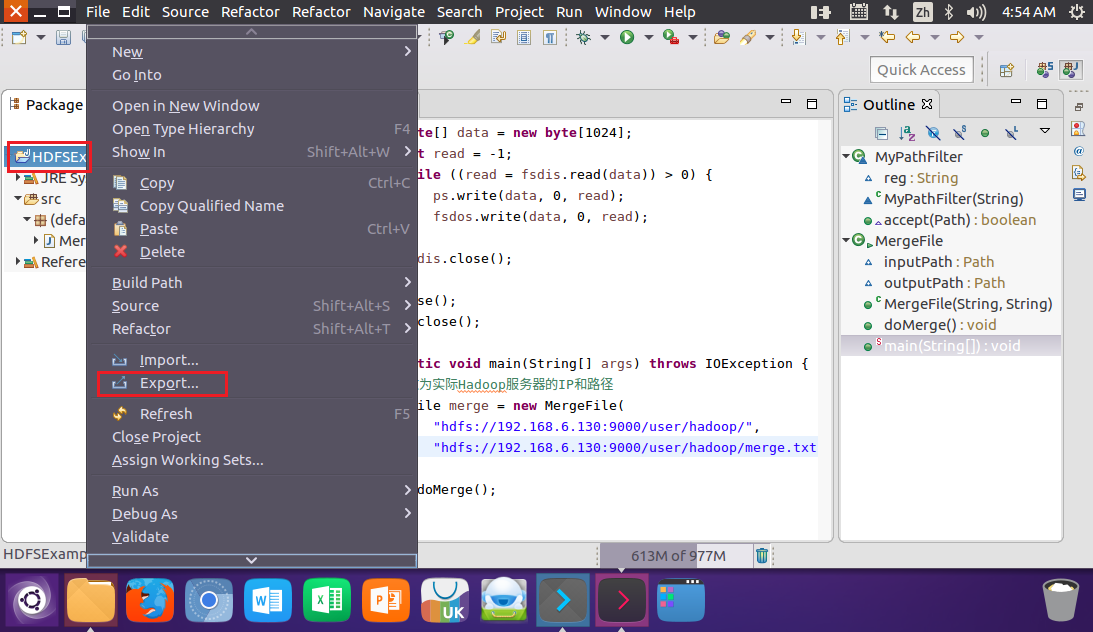

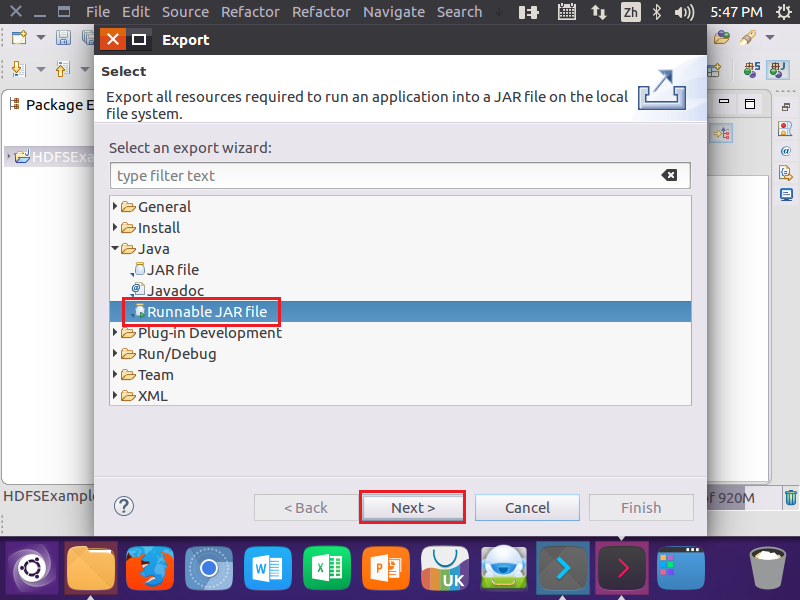

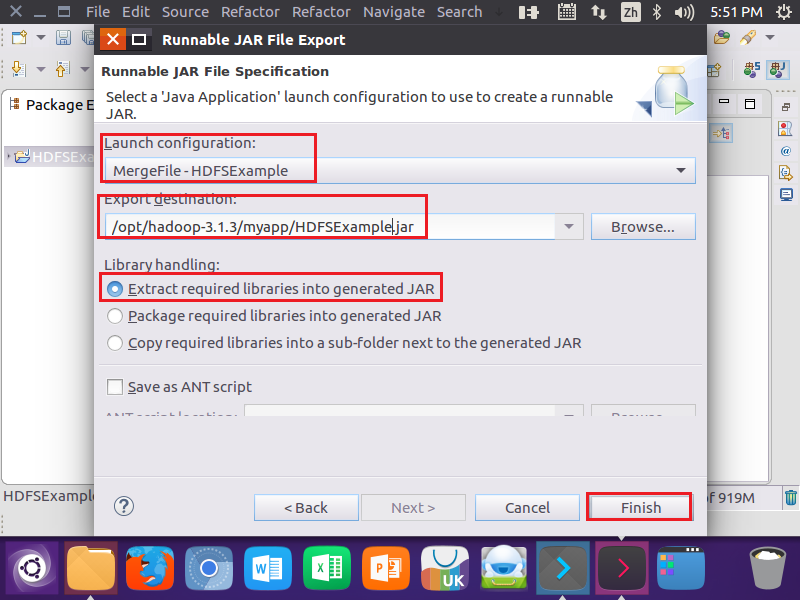

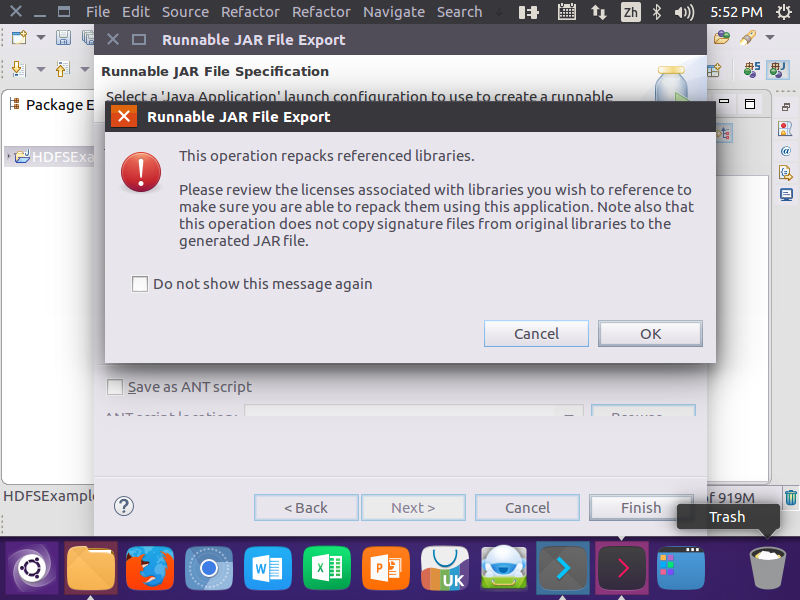

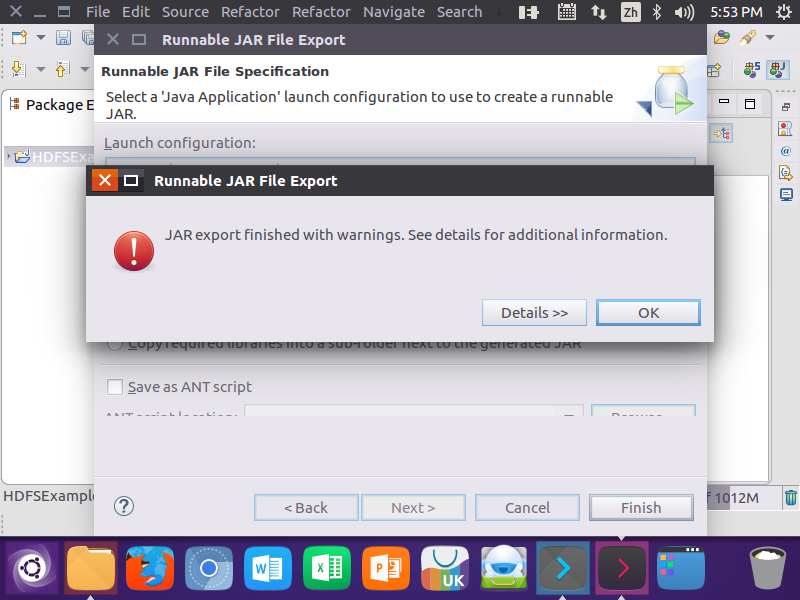

2. Generate the JAR package

Just click "OK" in the two dialog boxes that follow

3. View and use the JAR package

1. View

cd /opt/hadoop-3.1.3/myapp

ls

// List the JAR package

2. Use

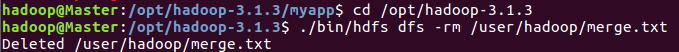

Because the earlier run already created merge.txt, delete it first

cd /opt/hadoop-3.1.3

./bin/hdfs dfs -rm /user/hadoop/merge.txt

// Delete merge.txt

Run the program with the hadoop jar command

cd /opt/hadoop-3.1.3

./bin/hadoop jar ./myapp/HDFSExample.jar

// Run the program

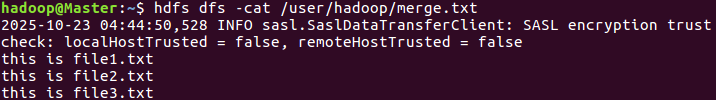

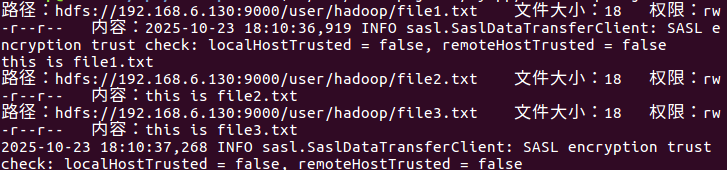
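The command above names only the JAR and no main class, so it relies on the JAR's manifest carrying a `Main-Class` entry; without one, `hadoop jar` expects the class name as the next argument. Assuming the JAR was exported from Eclipse as a runnable JAR, the entry should be present. The sketch below shows one way to check it with `java.util.jar` from the standard library; the class name `JarMainClass` is hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

/** Reads the Main-Class attribute from a JAR manifest (illustrative helper). */
final class JarMainClass {
    /** Returns the Main-Class value, or null if the JAR has no manifest or no such entry. */
    static String mainClassOf(String jarPath) {
        try (JarFile jar = new JarFile(jarPath)) {
            Manifest manifest = jar.getManifest();
            if (manifest == null) {
                return null;
            }
            return manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Defaults to the JAR path used in this tutorial; pass another path as the first argument.
        String path = args.length > 0 ? args[0] : "./myapp/HDFSExample.jar";
        System.out.println("Main-Class: " + mainClassOf(path));
    }
}
```

If this prints `null` for your JAR, appending the main class name to the `hadoop jar` command is the usual workaround.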

View the merge.txt file

cd /opt/hadoop-3.1.3

./bin/hdfs dfs -ls /user/hadoop

./bin/hdfs dfs -cat /user/hadoop/merge.txt

// View the generated file