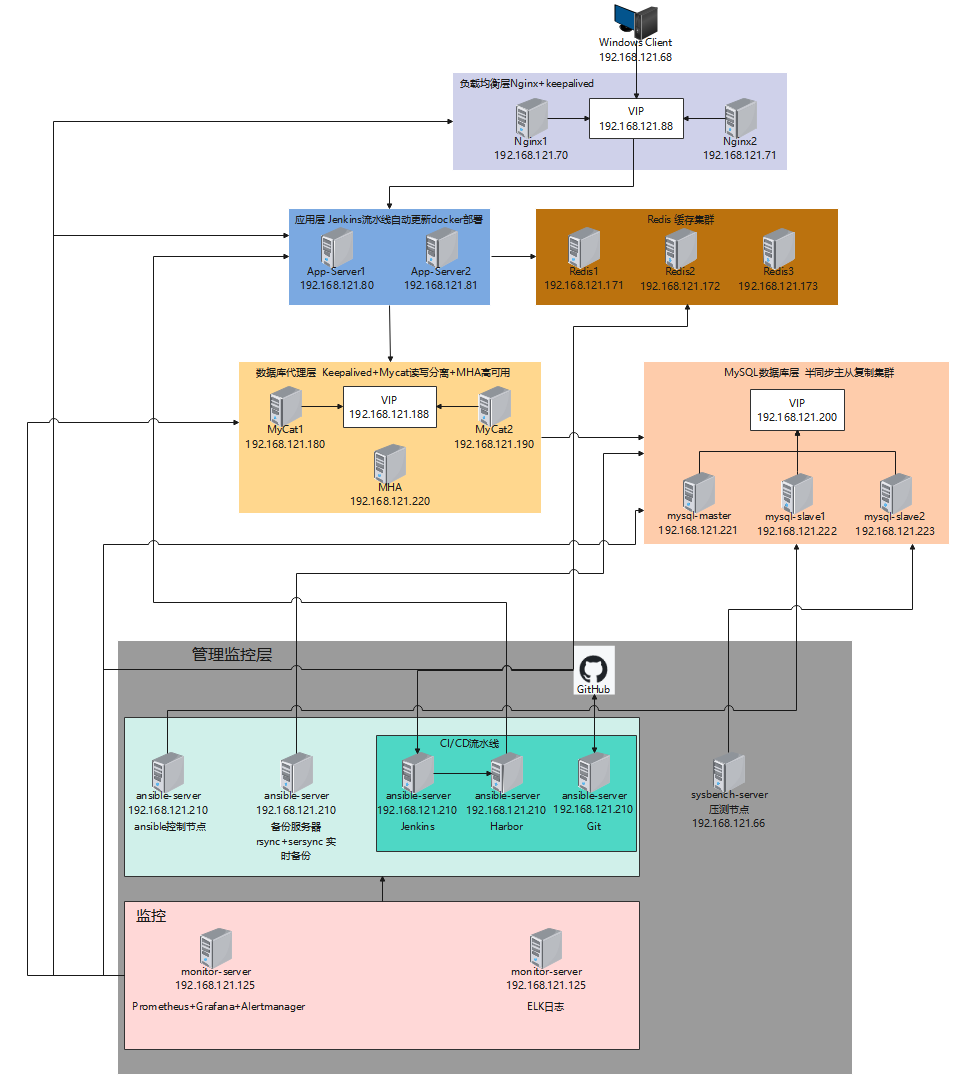

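Containerized, Cloud-Native High-Availability Automated Cluster for MySQL and Middleware

1 Project Overview

This project builds a highly available, high-performance MySQL cluster that can handle large-scale concurrent workloads. It uses containerized deployment, multi-level caching, comprehensive monitoring and a backup strategy to keep the database service running and the data safe.

Architecture Overview

Goals

Database service availability of 99.99%

Thousands of concurrent requests per second

Failure detection and automatic failover within seconds

Backups with RPO < 5 minutes and RTO < 30 minutes

A complete monitoring and alerting system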

2 Environment Preparation

2.1 Hardware Requirements

| Role | Recommended configuration | Count |
|---|---|---|
| MySQL master | 1 CPU core / 2 GB RAM / 50 GB SSD | 1 |
| MySQL slave | 1 CPU core / 2 GB RAM / 50 GB SSD | 2 |
| MyCat node | 1 CPU core / 2 GB RAM | 2 |
| MHA manager node | 1 CPU core / 2 GB RAM | 1 |
| Redis cluster | 1 CPU core / 2 GB RAM | 3 |
| Monitoring node | 2 CPU cores / 6 GB RAM / 50 GB storage | 1 |
| Backup node | 1 CPU core / 2 GB RAM / 100 GB storage | 1 |
| Load-testing node | 1 CPU core / 2 GB RAM | 1 |
| Ansible control node | 1 CPU core / 2 GB RAM | 1 |
| Nginx node | 1 CPU core / 2 GB RAM | 2 |
| app-server node | 1 CPU core / 2 GB RAM | 2 |

2.2 Network Plan

| Hostname | IP address | Role | VIP |
|---|---|---|---|
| windows-client | 192.168.121.68 | Client | - |
| mycat1 | 192.168.121.180 | MyCat node 1 | 192.168.121.188 |
| mycat2 | 192.168.121.190 | MyCat node 2 | 192.168.121.199 |
| mha-manager | 192.168.121.220 | MHA manager node | - |
| mysql-master | 192.168.121.221 | MySQL master | 192.168.121.200 |
| mysql-slave1 | 192.168.121.222 | MySQL slave 1 (candidate master) | 192.168.121.200 |
| mysql-slave2 | 192.168.121.223 | MySQL slave 2 | - |
| ansible-server | 192.168.121.210 | Ansible control node / backup server / CI/CD | - |
| sysbench-server | 192.168.121.66 | Load-testing server | - |
| monitor-server | 192.168.121.125 | Prometheus + Grafana + ELK + Alertmanager | - |
| redis1 | 192.168.121.171 | Redis node 1 | - |
| redis2 | 192.168.121.172 | Redis node 2 | - |
| redis3 | 192.168.121.173 | Redis node 3 | - |
| nginx1 | 192.168.121.70 | Nginx primary load-balancer node | 192.168.121.88 |
| nginx2 | 192.168.121.71 | Nginx backup load-balancer node | 192.168.121.88 |
| app-server1 | 192.168.121.80 | Application server (primary) | - |
| app-server2 | 192.168.121.81 | Application server (standby) | - |

2.3 Software Versions

| Software | Version |
|---|---|
| Operating system | CentOS 7.9 |
| Docker | 26.1.4 |
| Docker Compose | 1.29.2 |
| Ansible | 2.9.27 |
| MySQL | 8.0.28 |
| MyCat2 | 1.21 |
| MHA | 0.58 |
| Redis | 6.2.6 |
| Prometheus | 2.33.5 |
| Grafana | 8.4.5 |
| ELK | 7.17.0 |
| Keepalived | 1.3.5 |
| Sysbench | 1.0.20 |
| Nginx | 1.27 |

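3 Base Environment Deployment

3.1 Operating System Initialization

Run the following on every node.

# Configure a static IP according to the network plan

vi /etc/sysconfig/network-scripts/ifcfg-ens32 # replace ens32 with your actual interface name (it may be ens33)

BOOTPROTO=static # static means a static IP address

NAME=ens32 # interface name

DEVICE=ens32 # interface device name

ONBOOT=yes # yes means the interface comes up at boot

IPADDR=192.168.121.180 # static IP address (mycat1 shown here; use each host's own address from the network plan)

NETMASK=255.255.255.0 # subnet mask

GATEWAY=192.168.121.2 # gateway; see the VMware Virtual Network Editor settings

DNS1=114.114.114.114 # primary DNS server

DNS2=8.8.8.8 # secondary DNS server

# Restart the network service

systemctl restart network

# Map IP addresses to hostnames in /etc/hosts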

vim /etc/hosts

192.168.121.180 mycat1

192.168.121.190 mycat2

192.168.121.220 mha-manager

192.168.121.221 mysql-master

192.168.121.222 mysql-slave1

192.168.121.223 mysql-slave2

192.168.121.210 ansible-server

192.168.121.66 sysbench-server

192.168.121.125 monitor-server

192.168.121.171 redis1

192.168.121.172 redis2

192.168.121.173 redis3

192.168.121.70 nginx1

192.168.121.71 nginx2

192.168.121.80 app-server1

192.168.121.81 app-server2

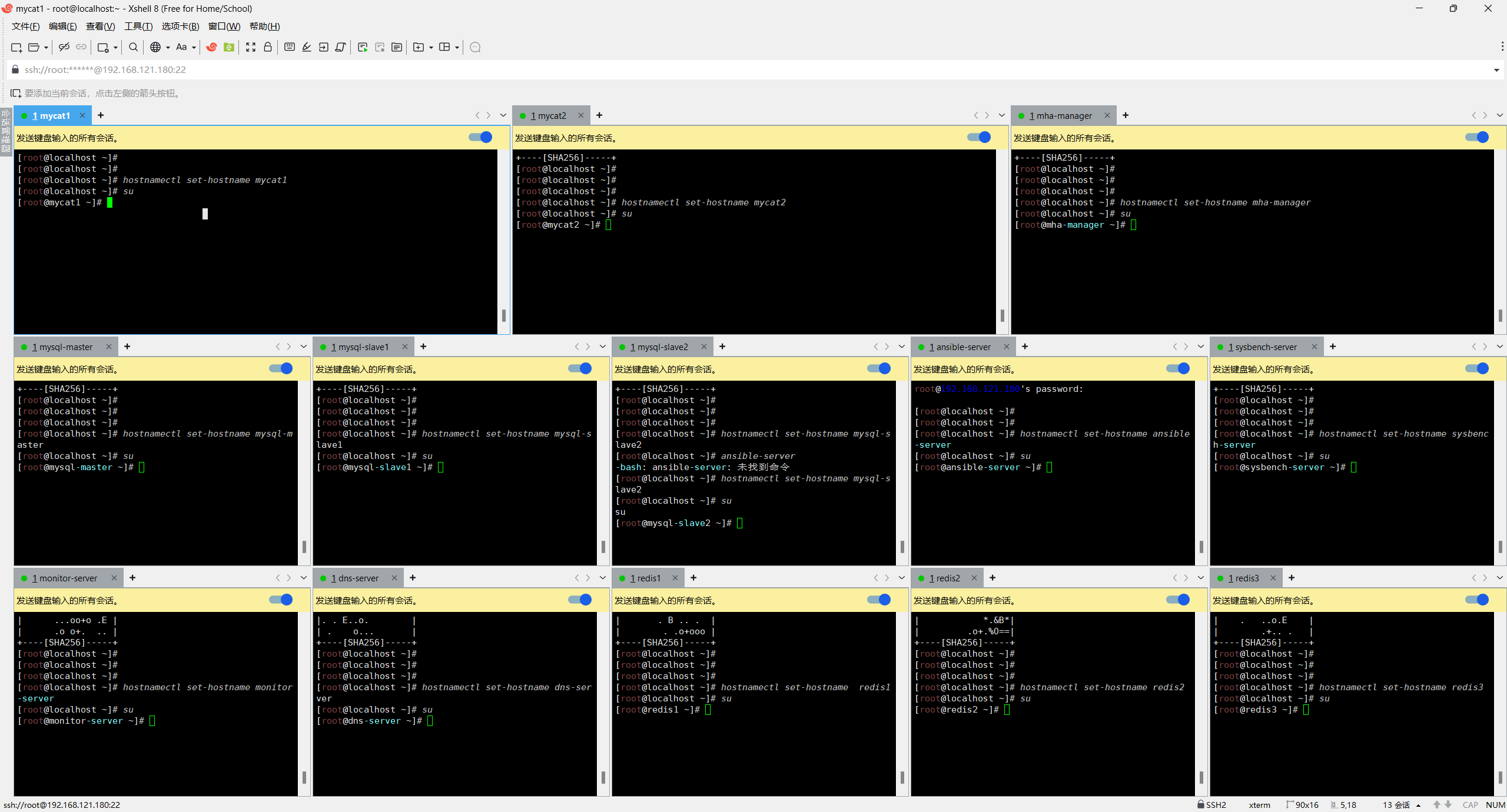
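The ifcfg file and the /etc/hosts list above repeat the same hostname/IP pairs on every node. A minimal sketch, assuming a POSIX shell with awk, keeps the network plan from section 2.2 in one table and renders both files from it so they cannot drift apart. The names `PLAN`, `plan_ip`, `render_ifcfg` and `render_hosts` are hypothetical helpers; the gateway and DNS values are copied from the example above.

```bash
# Network plan from section 2.2 as "hostname IP" pairs (hypothetical helper data).
PLAN='mycat1 192.168.121.180
mycat2 192.168.121.190
mha-manager 192.168.121.220
mysql-master 192.168.121.221
mysql-slave1 192.168.121.222
mysql-slave2 192.168.121.223
ansible-server 192.168.121.210
sysbench-server 192.168.121.66
monitor-server 192.168.121.125
redis1 192.168.121.171
redis2 192.168.121.172
redis3 192.168.121.173
nginx1 192.168.121.70
nginx2 192.168.121.71
app-server1 192.168.121.80
app-server2 192.168.121.81'

# plan_ip HOST: print the planned IP of HOST; fail if HOST is not in the plan.
plan_ip() {
  ip=$(printf '%s\n' "$PLAN" | awk -v h="$1" '$1 == h { print $2 }')
  [ -n "$ip" ] || { echo "unknown host: $1" >&2; return 1; }
  printf '%s\n' "$ip"
}

# render_ifcfg HOST [DEV]: print an ifcfg file for HOST (DEV defaults to ens32).
render_ifcfg() {
  ip=$(plan_ip "$1") || return 1
  dev=${2:-ens32}
  cat <<EOF
BOOTPROTO=static
NAME=$dev
DEVICE=$dev
ONBOOT=yes
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=192.168.121.2
DNS1=114.114.114.114
DNS2=8.8.8.8
EOF
}

# render_hosts: print the /etc/hosts block, one "IP hostname" line per host.
render_hosts() {
  printf '%s\n' "$PLAN" | awk '{ print $2, $1 }'
}
```

For example, `render_ifcfg mycat1 > /etc/sysconfig/network-scripts/ifcfg-ens32` on mycat1, and `render_hosts >> /etc/hosts` once per node (running it twice appends duplicate entries).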

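# Set the hostname according to the network plan

hostnamectl set-hostname <hostname>

su # start a new shell so the prompt shows the new hostname

# Disable SELinux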

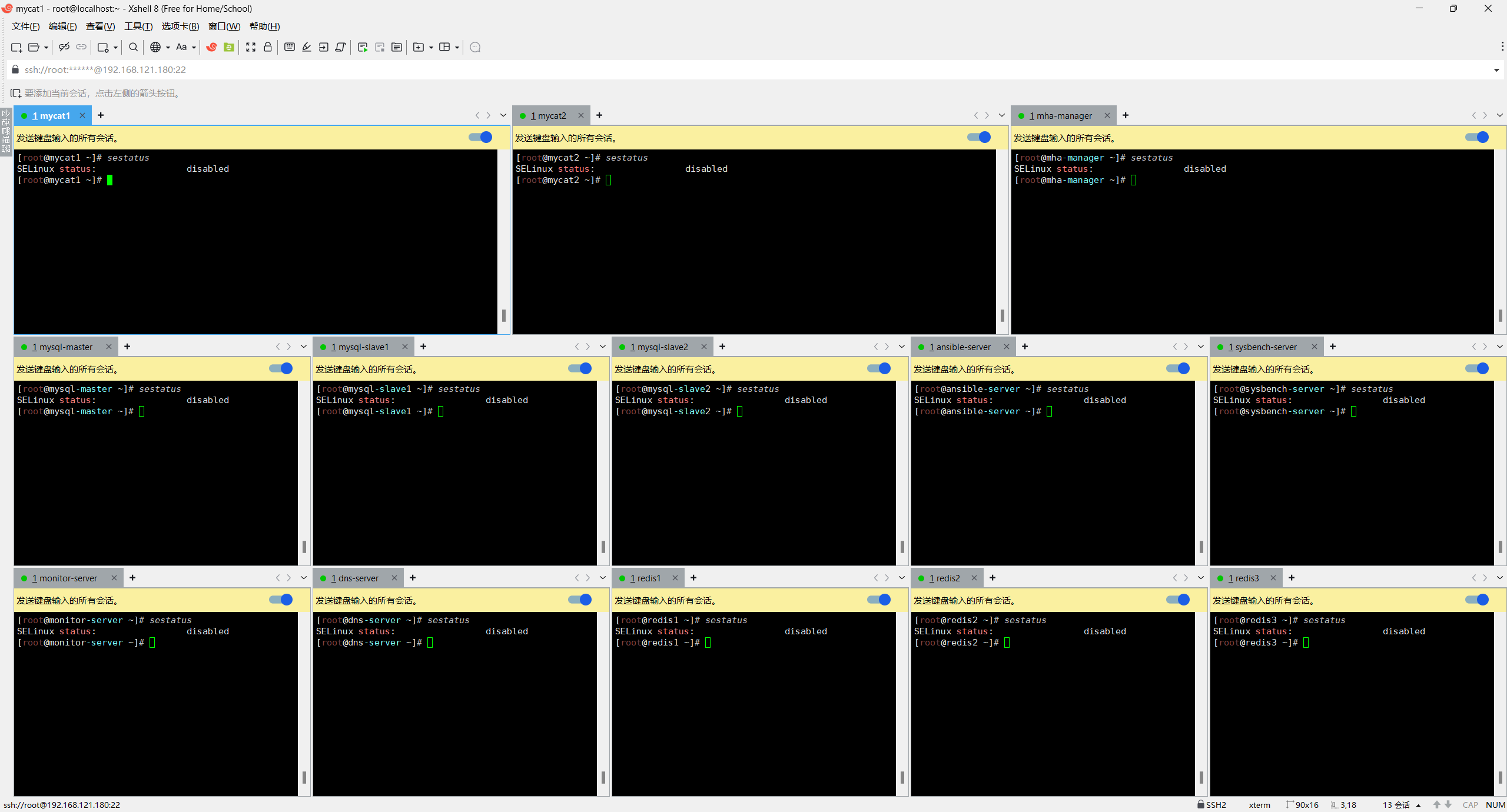

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

# Stop and disable the firewall

systemctl stop firewalld

systemctl disable firewalld

# Install common tools

yum install -y vim net-tools wget curl lrzsz telnet

# Configure time synchronization

yum install -y chrony

systemctl start chronyd

systemctl enable chronyd

chronyc sources

# Reboot so that disabling SELinux takes full effect

reboot

# Check the SELinux status

sestatus

If it shows disabled, SELinux has been turned off successfully.

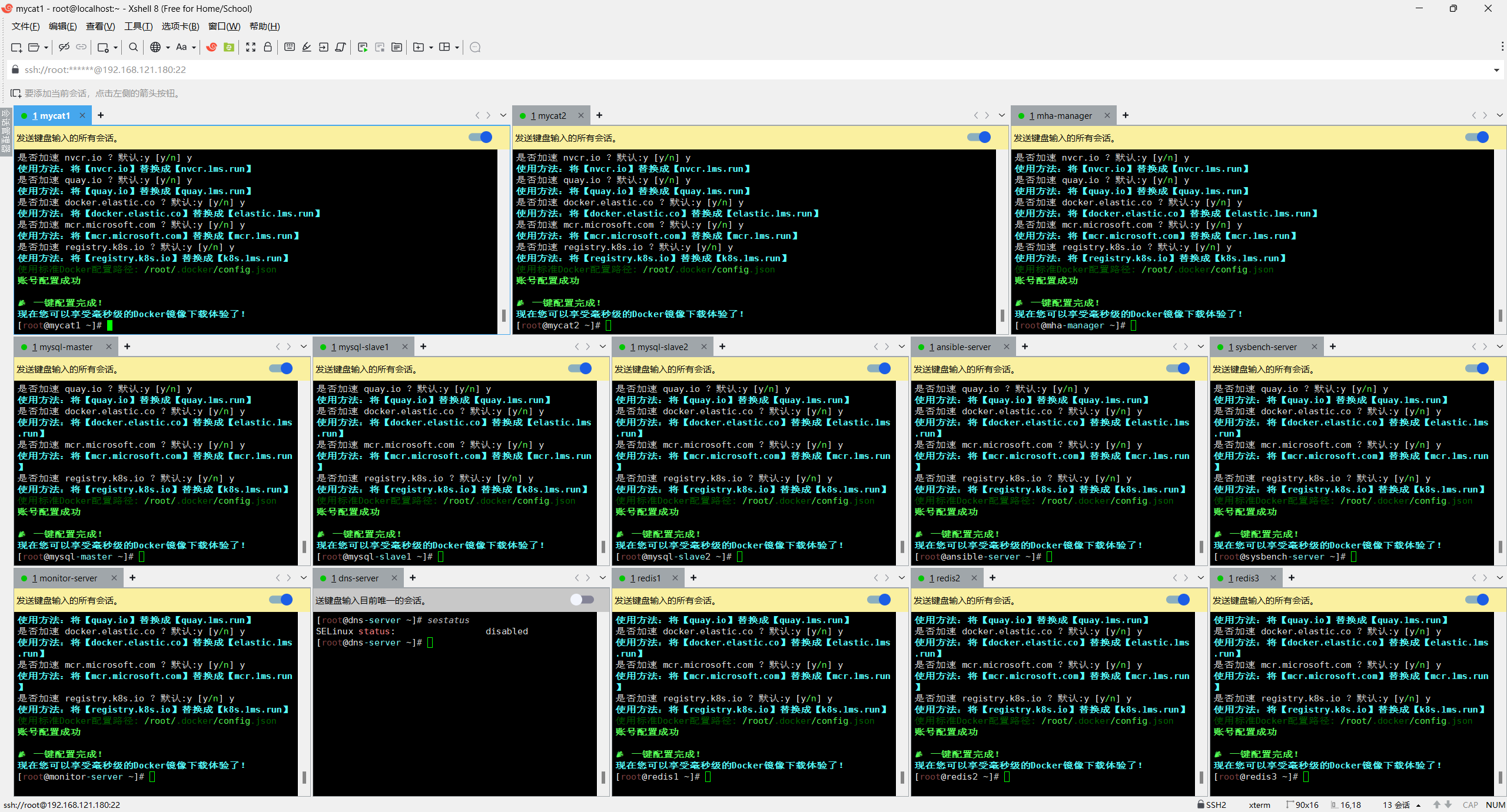

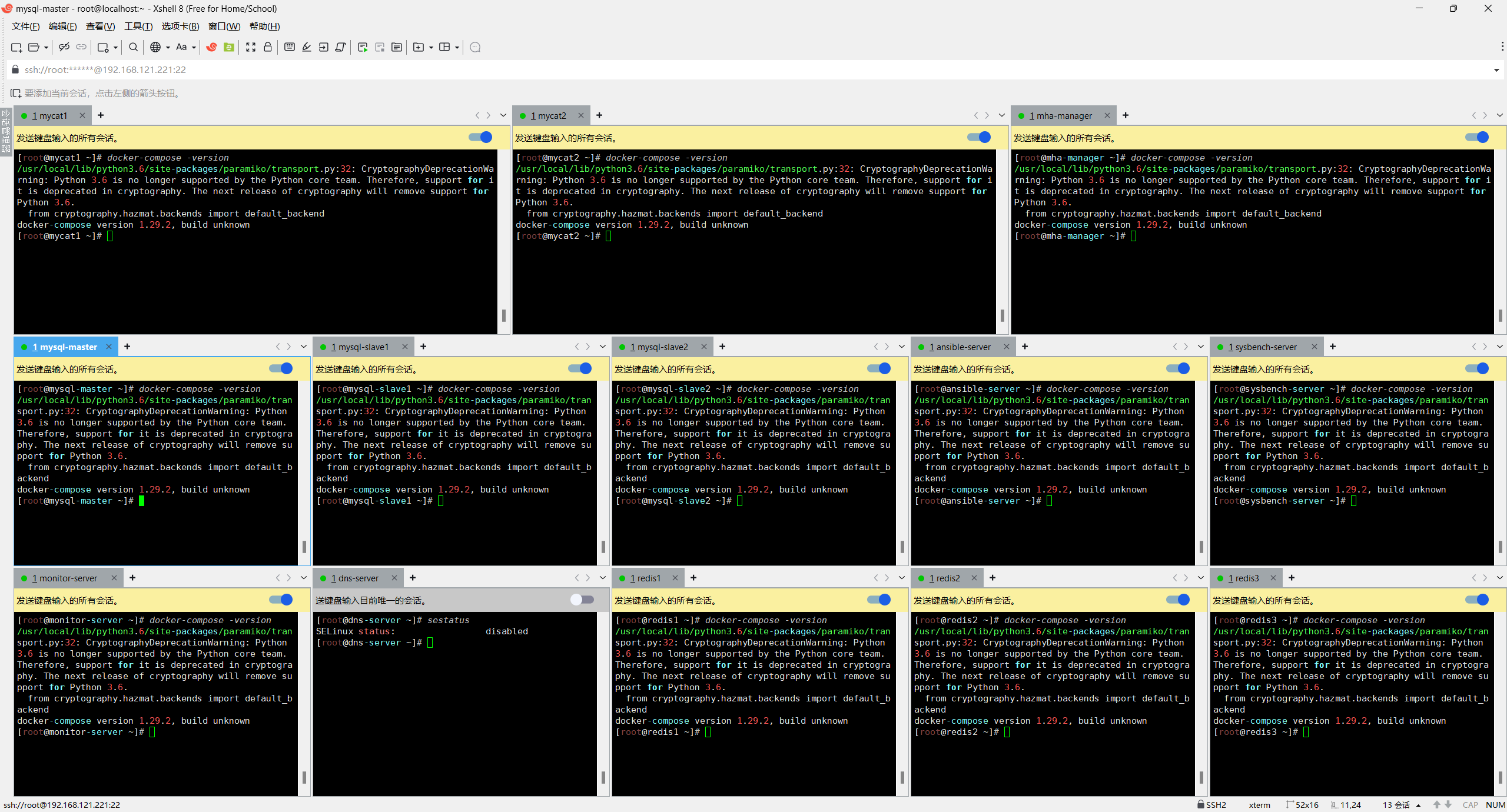
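The final checks of the initialization can be scripted so they are easy to repeat on every node. A minimal sketch in POSIX shell; `selinux_is_disabled` and `check_node` are hypothetical names. The parser only looks at the `SELinux status:` line that `sestatus` prints, and `systemctl is-active --quiet` reports through its exit code.

```bash
# selinux_is_disabled "SESTATUS_OUTPUT": succeed if the "SELinux status:" line says disabled.
selinux_is_disabled() {
  printf '%s\n' "$1" | awk -F': *' '/^SELinux status/ { print $2 }' | grep -qx disabled
}

# check_node: print a one-line verdict for SELinux, firewalld and chronyd on this host.
check_node() {
  if selinux_is_disabled "$(sestatus 2>/dev/null)"; then s=disabled; else s=NOT-disabled; fi
  if systemctl is-active --quiet firewalld; then f=running; else f=stopped; fi
  if systemctl is-active --quiet chronyd; then c=running; else c=NOT-running; fi
  echo "selinux=$s firewalld=$f chronyd=$c"
}
```

On a correctly prepared node, `check_node` should print `selinux=disabled firewalld=stopped chronyd=running`.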

3.2 Deploy the Docker Environment

Docker image accelerated download service: 毫秒镜像 (1ms mirror)

Run the following on every node that will run containers (except the DNS server node):

# One-step installation

bash <(curl -f -s --connect-timeout 10 --retry 3 https://linuxmirrors.cn/docker.sh) --source mirrors.tencent.com/docker-ce --source-registry docker.1ms.run --protocol https --install-latested true --close-firewall false --ignore-backup-tips

# One-step configuration: quick and simple, and no more image pull timeouts

bash <(curl -sSL https://n3.ink/helper)

# Install docker-compose

yum install -y gcc python3-devel rust cargo

pip3 install --upgrade pip

pip3 install setuptools-rust

pip3 install docker-compose

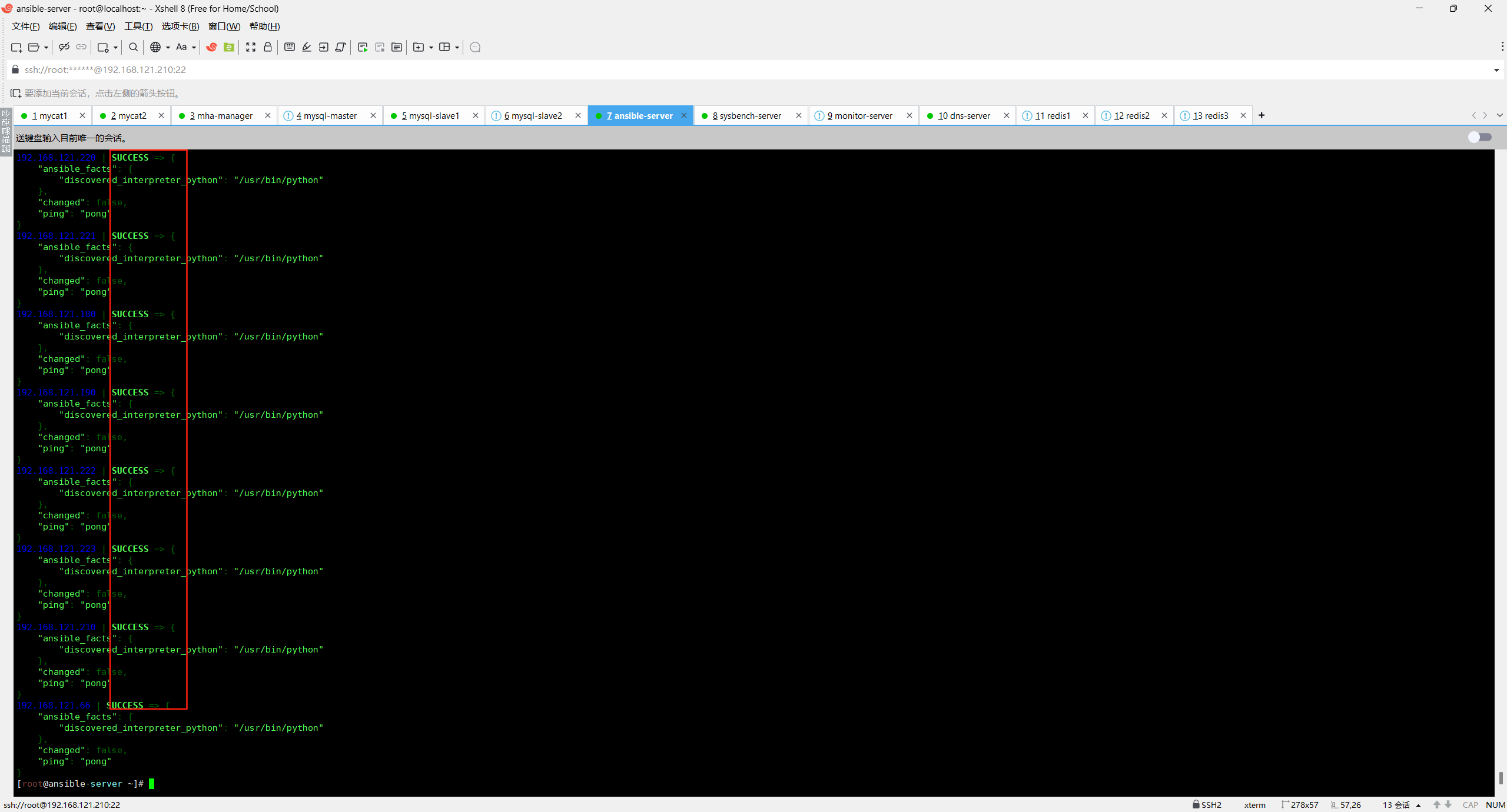
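To confirm that the installed versions match the plan in section 2.3 (Docker 26.1.4, Docker Compose 1.29.2), a small sketch can extract and compare the version numbers. It assumes GNU `sort -V` is available (it is on CentOS 7); `extract_version` and `version_ge` are hypothetical helper names.

```bash
# extract_version "TEXT": print the number that follows "version " in TEXT,
# e.g. the output of `docker --version` or `docker-compose --version`.
extract_version() {
  printf '%s\n' "$1" | sed -n 's/.*version \([0-9][0-9.]*[0-9]\).*/\1/p' | head -n 1
}

# version_ge A B: succeed if version A >= version B (the smaller one sorts first with sort -V).
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: `version_ge "$(extract_version "$(docker-compose --version)")" 1.29.2 && echo "docker-compose OK"`.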

3.3 Deploy the Ansible Control Node

Run the following on ansible-server (192.168.121.210):

yum install -y epel-release

yum install -y ansible

# Configure the Ansible inventory

cat > /etc/ansible/hosts << EOF
[mysql]
192.168.121.221
192.168.121.222
192.168.121.223

[mycat]
192.168.121.180
192.168.121.190

[mha]
192.168.121.220

[redis]
192.168.121.171
192.168.121.172
192.168.121.173

[monitor]
192.168.121.125

[backup]
192.168.121.210

[sysbench]
192.168.121.66

[nginx]
192.168.121.70
192.168.121.71

[app-server]
192.168.121.80
192.168.121.81
EOF

# Set up passwordless SSH login

ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa

# Distribute the public key to every host in the inventory (including the two app servers)

for ip in 192.168.121.180 192.168.121.190 192.168.121.220 192.168.121.221 192.168.121.222 192.168.121.223 192.168.121.210 192.168.121.66 192.168.121.125 192.168.121.171 192.168.121.172 192.168.121.173 192.168.121.70 192.168.121.71 192.168.121.80 192.168.121.81; do
  ssh-copy-id root@$ip
done

# Test Ansible connectivity

ansible all -m ping
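The key-distribution loop lists every host by hand, which easily falls out of sync with /etc/ansible/hosts. A sketch, assuming a simple INI inventory like the one above (host ranges such as `web[1:3]` are not expanded), derives the list from the inventory itself; `inventory_hosts` is a hypothetical helper name.

```bash
# inventory_hosts FILE: print each host of a simple INI inventory once,
# skipping [group] headers, blank lines and comments.
inventory_hosts() {
  grep -Ev '^[[:space:]]*([[]|#|;|$)' "$1" | awk '{ print $1 }' | sort -u
}

# Usage: push root's key to every inventory host (prompts once per host).
# for ip in $(inventory_hosts /etc/ansible/hosts); do ssh-copy-id "root@$ip"; done
```

Because hosts are deduplicated, a host listed in several groups is only contacted once.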

4 MySQL Cluster Deployment

4.1 Prepare the MySQL Docker Image

Create the Dockerfile on ansible-server:

mkdir -p /data/docker/mysql

cd /data/docker/mysql

[root@ansible-server tasks]# cat /data/docker/mysql/Dockerfile

FROM docker.1ms.run/mysql:8.0.28

# Install required tools
RUN yum clean all && \
    yum makecache fast && \
    yum install -y \
        vim \
        net-tools \
        iputils && \
    yum clean all && \
    rm -rf /var/cache/yum/*

# Configure MySQL
COPY my.cnf /etc/mysql/conf.d/my.cnf

# MHA-related scripts
COPY master_ip_failover /usr/local/bin/
COPY master_ip_online_change /usr/local/bin/
RUN chmod +x /usr/local/bin/master_ip_failover
RUN chmod +x /usr/local/bin/master_ip_online_change

# Set the time zone
ENV TZ=Asia/Shanghai

# Distribute the Dockerfile to the three MySQL servers

mkdir -p /data/docker/mysql # create this directory on all three database servers first

scp /data/docker/mysql/Dockerfile mysql-master:/data/docker/mysql/Dockerfile

scp /data/docker/mysql/Dockerfile mysql-slave1:/data/docker/mysql/Dockerfile

scp /data/docker/mysql/Dockerfile mysql-slave2:/data/docker/mysql/Dockerfile

Because the Dockerfile COPYs my.cnf, master_ip_failover and master_ip_online_change, those files (created below) must also be present in /data/docker/mysql on each server before the image is built there.

Create the MySQL configuration file template:

[root@ansible-server tasks]# cat /data/docker/mysql/my.cnf

[mysqld]

user = mysql

default-storage-engine = InnoDB

character-set-server = utf8mb4

collation-server = utf8mb4_general_ci

max_connections = 1000

wait_timeout = 600

interactive_timeout = 600

table_open_cache = 2048

max_heap_table_size = 64M

tmp_table_size = 64M

slow_query_log = 1

slow_query_log_file = /var/log/mysql/slow.log

long_query_time = 2

log_queries_not_using_indexes = 1

server-id = {{ server_id }}

log_bin = /var/lib/mysql/mysql-bin

binlog_format = row

binlog_rows_query_log_events = 1

expire_logs_days = 7

gtid_mode = ON

enforce_gtid_consistency = ON

log_slave_updates = ON

relay_log_recovery = 1

sync_binlog = 1

innodb_flush_log_at_trx_commit = 1

plugin-load = "rpl_semi_sync_master=semisync_master.so;rpl_semi_sync_slave=semisync_slave.so"

loose_rpl_semi_sync_master_enabled = 1

loose_rpl_semi_sync_slave_enabled = 1

loose_rpl_semi_sync_master_timeout = 1000

[mysqld_safe]

log-error = /var/log/mysql/error.log

Create the MHA-related scripts:

[root@ansible-server tasks]# cat /data/docker/mysql/master_ip_failover

#!/usr/bin/env perl
use strict;
use warnings FATAL => 'all';
use Getopt::Long;

my (
    $command,          $ssh_user,        $orig_master_host, $orig_master_ip,
    $orig_master_port, $new_master_host, $new_master_ip,    $new_master_port
);

my $vip   = '192.168.121.200';    # the VIP address, choose your own
my $brdc  = '192.168.121.255';    # broadcast address of the VIP
my $ifdev = 'ens32';              # NIC the VIP is bound to
my $key   = '1';                  # alias index of the virtual interface
my $ssh_start_vip = "/sbin/ifconfig ens32:$key $vip";    # expands to: ifconfig ens32:1 192.168.121.200
my $ssh_stop_vip  = "/sbin/ifconfig ens32:$key down";    # expands to: ifconfig ens32:1 down
my $exit_code = 0;                # exit status, 0 by default

GetOptions(
    'command=s'          => \$command,
    'ssh_user=s'         => \$ssh_user,
    'orig_master_host=s' => \$orig_master_host,
    'orig_master_ip=s'   => \$orig_master_ip,
    'orig_master_port=i' => \$orig_master_port,
    'new_master_host=s'  => \$new_master_host,
    'new_master_ip=s'    => \$new_master_ip,
    'new_master_port=i'  => \$new_master_port,
);

exit &main();

sub main {
    print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";
    if ( $command eq "stop" || $command eq "stopssh" ) {
        my $host = $orig_master_host;
        my $ip   = $orig_master_ip;
        print "Disabling the VIP on old master: $host \n";
        &stop_vip();
        $exit_code = 0;
    }
    elsif ( $command eq "start" ) {
        my $host = $new_master_host;
        my $ip   = $new_master_ip;
        print "Enabling the VIP - $vip on the new master - $host \n";
        &start_vip();
        $exit_code = 0;
    }
    elsif ( $command eq "status" ) {
        print "Checking the Status of the script.. OK \n";
        $exit_code = 0;
    }
    else {
        &usage();
        $exit_code = 1;
    }
    return $exit_code;
}

sub start_vip() {
    `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;
}

sub stop_vip() {
    return 0 unless ($orig_master_host);
    `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;
}

sub usage {
    print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";
}

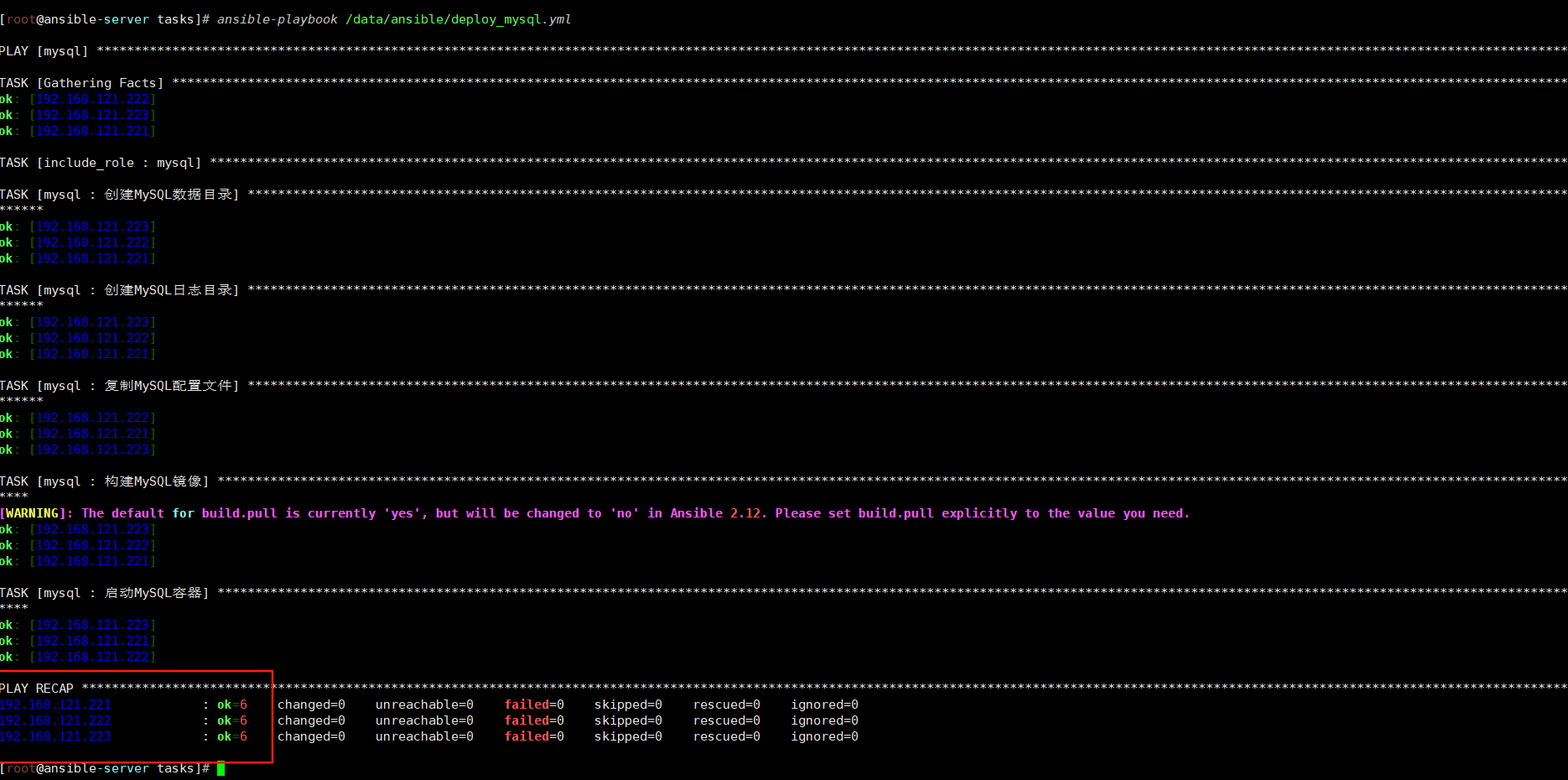
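The Perl script's work reduces to one remote `ifconfig` call per action. The sketch below is a dry-run equivalent in shell, assuming the same VIP, interface and alias index as the script; it only prints the command, so you can see what a failover would run on which host. `vip_cmd` is a hypothetical name and is not a replacement for `master_ip_failover`, which MHA calls with its own option set.

```bash
# Values copied from master_ip_failover.
VIP=192.168.121.200
IFDEV=ens32
KEY=1

# vip_cmd ACTION SSH_USER HOST: print the command master_ip_failover would run.
#   start         -> bring the VIP up on the new master
#   stop|stopssh  -> take the VIP down on the old master
#   status        -> nothing to run, just report OK
vip_cmd() {
  case $1 in
    start)        echo "ssh $2@$3 /sbin/ifconfig $IFDEV:$KEY $VIP" ;;
    stop|stopssh) echo "ssh $2@$3 /sbin/ifconfig $IFDEV:$KEY down" ;;
    status)       echo "Checking the Status of the script.. OK" ;;
    *)            echo "usage: vip_cmd start|stop|stopssh|status USER HOST" >&2; return 1 ;;
  esac
}
```

For example, `vip_cmd start root 192.168.121.222` prints `ssh root@192.168.121.222 /sbin/ifconfig ens32:1 192.168.121.200`.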

4.2 Deploy the MySQL Cluster with Ansible

Create the Ansible playbook:

mkdir -p /data/ansible/roles/mysql/tasks

cd /data/ansible/roles/mysql/tasks

[root@ansible-server tasks]# pwd

/data/ansible/roles/mysql/tasks

[root@ansible-server tasks]# cat main.yml

- name: Create the MySQL data directory
  file:
    path: /data/mysql/data
    state: directory
    mode: '0755'
- name: Create the MySQL log directory
  file:
    path: /data/mysql/logs
    state: directory
    mode: '0755'
- name: Copy the MySQL configuration file
  template:
    src: /data/docker/mysql/my.cnf
    dest: /data/mysql/my.cnf
    mode: '0644'
- name: Build the MySQL image
  docker_image:
    name: docker.1ms.run/mysql:8.0.28
    build:
      path: /data/docker/mysql
    source: build
- name: Start the MySQL container
  docker_container:
    name: mysql
    image: docker.1ms.run/mysql:8.0.28
    state: started
    restart_policy: always
    ports:
      - "3306:3306"
    volumes:
      - /data/mysql/data:/var/lib/mysql
      - /data/mysql/logs:/var/log/mysql
      - /data/mysql/my.cnf:/etc/mysql/conf.d/my.cnf
    env:
      MYSQL_ROOT_PASSWORD: "{{ mysql_root_password }}"
    privileged: yes

# Create the top-level playbook

[root@ansible-server tasks]# cd /data/ansible

[root@ansible-server ansible]# cat deploy_mysql.yml

- hosts: mysql
  vars:
    mysql_root_password: "123456"
    server_id: "{{ 221 if inventory_hostname == '192.168.121.221' else 222 if inventory_hostname == '192.168.121.222' else 223 }}"
  tasks:
    - include_role:
        name: mysql

Alternatively, generate a separate configuration file for each of the three MySQL nodes by hand. The only requirement is that every node gets a unique server-id (the playbook above uses 221/222/223, the commands below use 1/2/3):

# Configuration for the master

sed 's/{{ server_id }}/1/' /data/docker/mysql/my.cnf > /data/mysql/master_my.cnf

scp /data/mysql/master_my.cnf root@192.168.121.221:/data/mysql/my.cnf

# Configuration for slave 1

sed 's/{{ server_id }}/2/' /data/docker/mysql/my.cnf > /data/mysql/slave1_my.cnf

scp /data/mysql/slave1_my.cnf root@192.168.121.222:/data/mysql/my.cnf

# Configuration for slave 2

sed 's/{{ server_id }}/3/' /data/docker/mysql/my.cnf > /data/mysql/slave2_my.cnf

scp /data/mysql/slave2_my.cnf root@192.168.121.223:/data/mysql/my.cnf

Run the deployment:

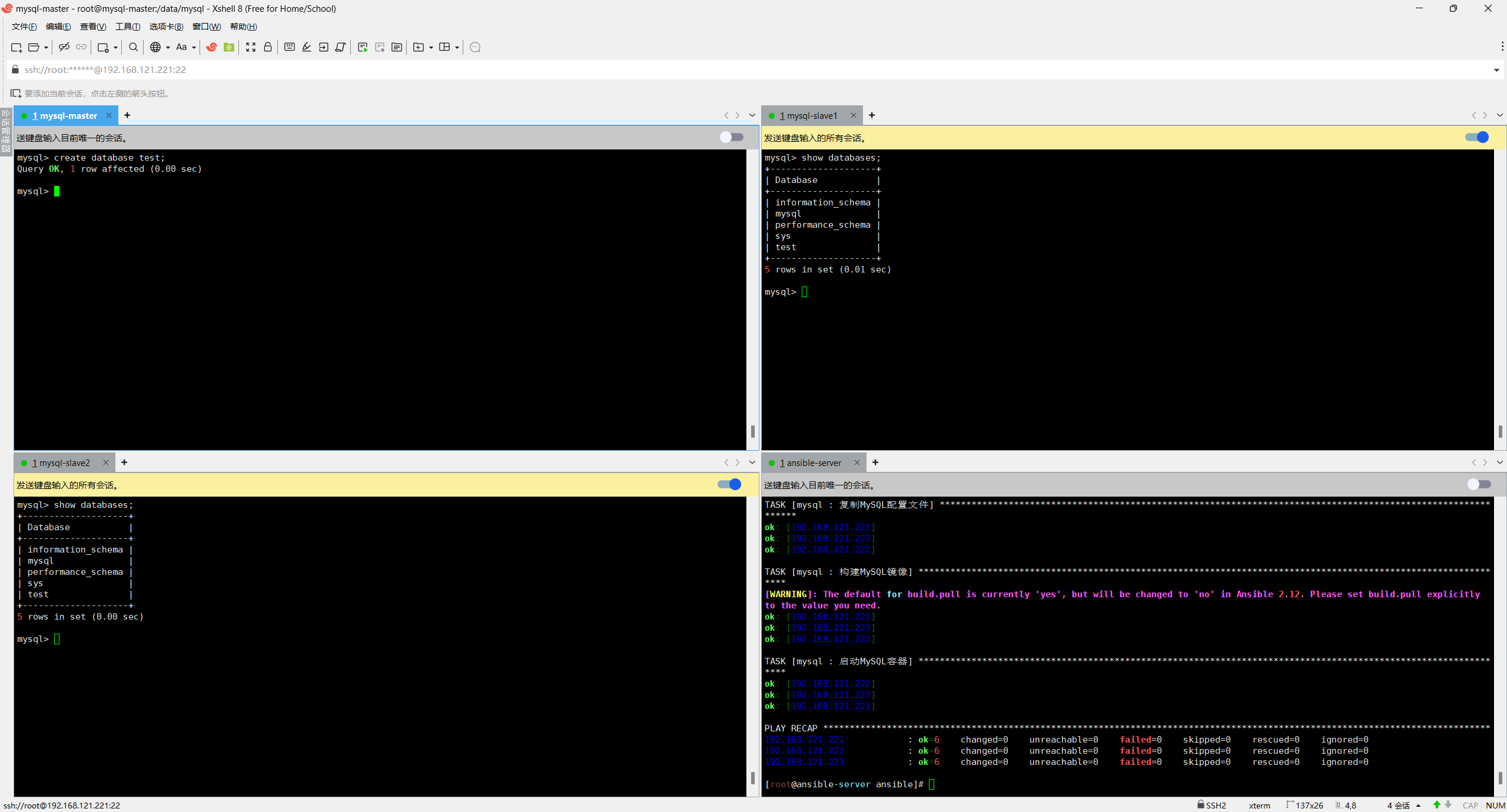

ansible-playbook /data/ansible/deploy_mysql.yml

If every task reports ok, the deployment is complete.
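The playbook and the sed commands assign server IDs with two different rules (221/222/223 versus 1/2/3). A sketch that derives the ID from each node's IP keeps a single rule, assuming the last octet is unique per MySQL node (true for the plan in section 2.2) and GNU sed, which treats `{` literally in a basic regex. `server_id_for_ip` and `render_mycnf` are hypothetical helpers, and the loop in the comments is only an illustration.

```bash
# server_id_for_ip IP: use the last octet as server-id (192.168.121.221 -> 221),
# the same values deploy_mysql.yml hard-codes; fails on malformed input.
server_id_for_ip() {
  printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
  printf '%s\n' "${1##*.}"
}

# render_mycnf TEMPLATE IP: replace the {{ server_id }} placeholder, as the
# sed commands above do, but with an ID derived from the node's IP.
render_mycnf() {
  id=$(server_id_for_ip "$2") || return 1
  sed "s/{{ server_id }}/$id/" "$1"
}

# Usage (on ansible-server):
# for ip in 192.168.121.221 192.168.121.222 192.168.121.223; do
#   render_mycnf /data/docker/mysql/my.cnf "$ip" > "/tmp/my.cnf.$ip"
#   scp "/tmp/my.cnf.$ip" "root@$ip:/data/mysql/my.cnf"
# done
```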

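4.3 Configure MySQL Master-Slave Replication

Run the following on the master (192.168.121.221) and on the slaves (192.168.121.222, 192.168.121.223):

# Note: auto.cnf stores the instance's server UUID, its unique identifier. Deleting it before the data directory is copied makes every instance generate its own UUID on the next start instead of sharing the master's.

[root@mysql-master]# rm -rf /data/mysql/data/auto.cnf

[root@mysql-slave1]# rm -rf /data/mysql/data # delete the slave's data directory

[root@mysql-slave2]# rm -rf /data/mysql/data # delete the slave's data directory

# Seed both slaves with the master's data (copy while the mysql containers are stopped, then start them again, so the copied files are consistent)

[root@mysql-master]# scp -r /data/mysql/data mysql-slave1:/data/mysql/

[root@mysql-master]# scp -r /data/mysql/data mysql-slave2:/data/mysql/

# Enter the container

[root@mysql-master]# docker exec -it mysql bash

# Log in to MySQL

mysql -uroot -p123456

# Create the replication user

CREATE USER 'copy'@'%' IDENTIFIED BY '123456';

GRANT REPLICATION SLAVE ON *.* TO 'copy'@'%';

FLUSH PRIVILEGES;

# MySQL 8.0 and later authenticate with the caching_sha2_password plugin by default. For an unencrypted login it requires either an SSL connection or an RSA key exchange (for example GET_MASTER_PUBLIC_KEY=1 on the replica). Because no SSL is configured here and the master does not relax this requirement, the slaves fail to authenticate when they connect.

# Switch the replication user to the mysql_native_password plugin

ALTER USER 'copy'@'%'

IDENTIFIED WITH mysql_native_password BY '123456';

FLUSH PRIVILEGES;

# Check the master status

SHOW MASTER STATUS;

# Note down the File and Position values

mysql> show master status;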

+------------------+----------+--------------+------------------+------------------------------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+------------------------------------------+

| mysql-bin.000003 | 902 | | | 965d216d-7d64-11f0-8771-000c29111b7d:1-8 |

+------------------+----------+--------------+------------------+------------------------------------------+

1 row in set (0.00 sec)

On slave 1 (192.168.121.222):

# Enter the container

docker exec -it mysql bash

# Log in to MySQL

mysql -uroot -p123456

# Stop replication

mysql> STOP SLAVE;

Query OK, 0 rows affected, 2 warnings (0.00 sec)

# Configure replication

mysql> change master to master_host='192.168.121.221',master_user='copy',master_password='123456',master_port=3306,master_log_file='mysql-bin.000003',master_log_pos=902;

Query OK, 0 rows affected, 9 warnings (0.01 sec)

Notes:

master_host: IP address of the master

master_user: replication user

master_password: password of the replication user

master_port: port of the master

master_log_file: binary log file the master is currently writing (as read from SHOW MASTER STATUS after locking the tables)

master_log_pos: position in that binary log file the master is currently writing at

# Start replication

mysql> start slave;

Query OK, 0 rows affected, 1 warning (0.00 sec)

# Check the slave status and make sure both Slave_IO_Running and Slave_SQL_Running are Yes

mysql> show slave status\G

*************************** 1. row ***************************
Slave_IO_State: Waiting for source to send event
Master_Host: 192.168.121.221
Master_User: chenjun
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: mysql-bin.000003
Read_Master_Log_Pos: 1354
Relay_Log_File: mysql-slave1-relay-bin.000003
Relay_Log_Pos: 366
Relay_Master_Log_File: mysql-bin.000003
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Master_Log_Pos: 1354
Relay_Log_Space: 1204
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: 0
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Master_Server_Id: 221
Master_UUID: 965d216d-7d64-11f0-8771-000c29111b7d
Master_Info_File: mysql.slave_master_info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Slave_SQL_Running_State: Replica has read all relay log; waiting for more updates
Master_Retry_Count: 86400
Master_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp:
Master_SSL_Crl:
Master_SSL_Crlpath:
Retrieved_Gtid_Set: 965d216d-7d64-11f0-8771-000c29111b7d:9-10
Executed_Gtid_Set: 965d216d-7d64-11f0-8771-000c29111b7d:9-10,
966066a6-7d64-11f0-9760-000c29236169:1-6
Auto_Position: 0
Replicate_Rewrite_DB:
Channel_Name:
Master_TLS_Version:
Master_public_key_path:
Get_master_public_key: 0
Network_Namespace:

1 row in set, 1 warning (0.00 sec)
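Checking the two thread states by eye is error-prone in output this long. A minimal sketch, assuming the `\G` format shown above (one `Field: value` per line), turns the check into an exit code; `replication_healthy` is a hypothetical name. Passing the password on the command line mirrors the `mysql -uroot -p123456` usage above, and the client will print an insecure-password warning to stderr.

```bash
# replication_healthy: read `SHOW SLAVE STATUS\G` output on stdin and succeed
# only if both Slave_IO_Running and Slave_SQL_Running are "Yes".
replication_healthy() {
  awk -F': *' '
    $1 ~ /^ *Slave_IO_Running$/  { io = $2 }
    $1 ~ /^ *Slave_SQL_Running$/ { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }'
}

# Usage on a slave host (container name "mysql" as deployed above):
# docker exec mysql mysql -uroot -p123456 -e 'SHOW SLAVE STATUS\G' | replication_healthy && echo OK
```

The anchored field patterns keep `Slave_SQL_Running_State` from being mistaken for `Slave_SQL_Running`.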

On slave 2 (192.168.121.223):

# Stop replication

mysql> stop slave;

Query OK, 0 rows affected, 2 warnings (0.00 sec)

# Configure the replication source

mysql> change master to
    -> master_host='192.168.121.221',
    -> master_user='copy',
    -> master_password='123456',
    -> master_port=3306,
    -> master_log_file='mysql-bin.000003',
    -> master_log_pos=902;

mysql> start slave;

Query OK, 0 rows affected, 1 warning (0.01 sec)

mysql> show slave status\G

*************************** 1. row ***************************
Slave_IO_State: Waiting for source to send event
Master_Host: 192.168.121.221
Master_User: copy
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: mysql-bin.000003
Read_Master_Log_Pos: 1354
Relay_Log_File: mysql-slave2-relay-bin.000002
Relay_Log_Pos: 326
Relay_Master_Log_File: mysql-bin.000003
Slave_IO_Running: Yes
Slave_SQL_Running: No # No: replication failed here
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 1396
Last_Error: Coordinator stopped because there were error(s) in the worker(s). The most recent failure being: Worker 1 failed executing transaction '965d216d-7d64-11f0-8771-000c29111b7d:9' at master log mysql-bin.000003, end_log_pos 1187. See error log and/or performance_schema.replication_applier_status_by_worker table for more details about this failure or others, if any.
Skip_Counter: 0
Exec_Master_Log_Pos: 902
Relay_Log_Space: 995
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: NULL
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 1396
Last_SQL_Error: Coordinator stopped because there were error(s) in the worker(s). The most recent failure being: Worker 1 failed executing transaction '965d216d-7d64-11f0-8771-000c29111b7d:9' at master log mysql-bin.000003, end_log_pos 1187. See error log and/or performance_schema.replication_applier_status_by_worker table for more details about this failure or others, if any.
Replicate_Ignore_Server_Ids:
Master_Server_Id: 221
Master_UUID: 965d216d-7d64-11f0-8771-000c29111b7d
Master_Info_File: mysql.slave_master_info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Slave_SQL_Running_State:
Master_Retry_Count: 86400
Master_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp: 250820 01:59:29
Master_SSL_Crl:
Master_SSL_Crlpath:
Retrieved_Gtid_Set: 965d216d-7d64-11f0-8771-000c29111b7d:9-10
Executed_Gtid_Set: 96621d3c-7d64-11f0-9a8b-000c290f45a7:1-5
Auto_Position: 0
Replicate_Rewrite_DB:
Channel_Name:
Master_TLS_Version:
Master_public_key_path:
Get_master_public_key: 0
Network_Namespace:

1 row in set, 1 warning (0.00 sec)

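While setting up the second slave, a small problem showed up: after the first slave had been configured, the master's binlog Position had moved on, so the second slave, started from the old position, stopped with Slave_SQL_Running: No (Last_SQL_Errno 1396 above).

Next, read the File and Position again on the master (192.168.121.221):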

mysql> SHOW MASTER STATUS;

+------------------+----------+--------------+------------------+-------------------------------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+-------------------------------------------+

| mysql-bin.000003 | 1354 | | | 965d216d-7d64-11f0-8771-000c29111b7d:1-10 |

+------------------+----------+--------------+------------------+-------------------------------------------+

1 row in set (0.00 sec)

# The Position has moved from 902 to 1354

On slave 2 (192.168.121.223):

# Stop replication

mysql> stop slave;

Query OK, 0 rows affected, 1 warning (0.01 sec)

# Clear the existing replication settings

mysql> reset slave;

Query OK, 0 rows affected, 1 warning (0.00 sec)

# Configure the replication source again

mysql> change master to
    -> master_host='192.168.121.221',
    -> master_user='copy',
    -> master_password='123456',
    -> master_port=3306,
    -> master_log_file='mysql-bin.000003',
    -> master_log_pos=1354; # set pos to the master's current value

Query OK, 0 rows affected, 9 warnings (0.01 sec)

mysql> start slave;

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> show slave status\G

*************************** 1. row ***************************
Slave_IO_State: Waiting for source to send event
Master_Host: 192.168.121.221
Master_User: chenjun
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: mysql-bin.000003
Read_Master_Log_Pos: 1354
Relay_Log_File: mysql-slave2-relay-bin.000002
Relay_Log_Pos: 326
Relay_Master_Log_File: mysql-bin.000003
Slave_IO_Running: Yes
Slave_SQL_Running: Yes # replication now works
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Master_Log_Pos: 1354
Relay_Log_Space: 543
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: 0
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Master_Server_Id: 221
Master_UUID: 965d216d-7d64-11f0-8771-000c29111b7d
Master_Info_File: mysql.slave_master_info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Slave_SQL_Running_State: Replica has read all relay log; waiting for more updates
Master_Retry_Count: 86400
Master_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp:
Master_SSL_Crl:
Master_SSL_Crlpath:
Retrieved_Gtid_Set:
Executed_Gtid_Set: 96621d3c-7d64-11f0-9a8b-000c290f45a7:1-5
Auto_Position: 0
Replicate_Rewrite_DB:
Channel_Name:
Master_TLS_Version:
Master_public_key_path:
Get_master_public_key: 0
Network_Namespace:

1 row in set, 1 warning (0.00 sec)
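The failure on slave 2 came from copying File and Position by hand after the master had moved on. A sketch that reads the coordinates and builds the statement in one step narrows that window; it assumes header-less `mysql -N` output, and `change_master_sql` / `gtid_change_master_sql` are hypothetical helpers. The second function shows a swapped-in alternative that this walkthrough does not use: since `gtid_mode = ON` is already set in my.cnf, `MASTER_AUTO_POSITION=1` lets the replica find its starting point from GTIDs instead of a binlog position.

```bash
# change_master_sql HOST STATUS_ROW: build CHANGE MASTER TO from one row of
# `mysql -N -e 'SHOW MASTER STATUS'` (tab-separated: File, Position, ...).
change_master_sql() {
  file=$(printf '%s\n' "$2" | cut -f1)
  pos=$(printf '%s\n' "$2" | cut -f2)
  case $pos in ''|*[!0-9]*) return 1 ;; esac   # Position must be a number
  [ -n "$file" ] || return 1
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='copy', MASTER_PASSWORD='123456', MASTER_PORT=3306, MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" "$1" "$file" "$pos"
}

# gtid_change_master_sql HOST: GTID-based alternative; no File/Position needed.
gtid_change_master_sql() {
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='copy', MASTER_PASSWORD='123456', MASTER_PORT=3306, MASTER_AUTO_POSITION=1;\n" "$1"
}

# Usage: on the master, capture the row, then run the printed SQL on the slave.
# row=$(docker exec mysql mysql -uroot -p123456 -N -e 'SHOW MASTER STATUS' 2>/dev/null)
# change_master_sql 192.168.121.221 "$row"
```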

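4.4 Configure Semi-Synchronous Replication

Edit the master's configuration file on the master (192.168.121.221):

vim /data/mysql/my.cnf

# Add the following parameters under the [mysqld] section:

[mysqld]

# Enable semi-sync on the master (core parameter)

rpl_semi_sync_master_enabled = 1

# Semi-sync timeout in milliseconds (default 10000 ms = 10 s; tune it to your network latency)

# If no slave acknowledges within the timeout, the master falls back to asynchronous replication

rpl_semi_sync_master_timeout = 30000

Edit the slaves' configuration files on slave 1 (192.168.121.222) and slave 2 (192.168.121.223):

vim /data/mysql/my.cnf

Add the following parameters under the [mysqld] section as well:

[mysqld]

# Enable semi-sync on the slave (core parameter)

rpl_semi_sync_slave_enabled = 1

# Optional: debug trace level of the slave-side semi-sync plugin (32 is the default). It only controls diagnostic output; when the master waits for the acknowledgement is set on the master by rpl_semi_sync_master_wait_point (AFTER_SYNC by default)

rpl_semi_sync_slave_trace_level = 32

Restart MySQL on the master and the slaves:

docker restart mysql

Verify that the persisted settings have taken effect:

-- On the master

SHOW GLOBAL VARIABLES LIKE 'rpl_semi_sync_master_enabled'; -- should return ON

SHOW GLOBAL VARIABLES LIKE 'rpl_semi_sync_master_timeout'; -- should return the configured timeout

-- On the slaves

SHOW GLOBAL VARIABLES LIKE 'rpl_semi_sync_slave_enabled'; -- should return ON

Confirm that semi-sync is actually active:

-- On the master

SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_master_status'; -- should return ON

-- On the slaves

SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_slave_status'; -- should return ON

If the check fails instead, for example: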

mysql> SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_slave_status';

+----------------------------+-------+

| Variable_name | Value |

+----------------------------+-------+

| Rpl_semi_sync_slave_status | OFF |

+----------------------------+-------+

1 row in set (0.01 sec)

mysql> STOP SLAVE;

Query OK, 0 rows affected, 2 warnings (0.00 sec)

mysql> START SLAVE;

ERROR 1200 (HY000): The server is not configured as slave; fix in config file or with CHANGE MASTER TO

Reconfigure both slaves' connection to the master, because the master's binlog has changed (it is now writing mysql-bin.000005):

mysql> change master to
    -> master_host='192.168.121.221',
    -> master_user='copy',
    -> master_password='123456',
    -> master_port=3306,
    -> master_log_file='mysql-bin.000005',
    -> master_log_pos=197;

Query OK, 0 rows affected, 9 warnings (0.01 sec)

mysql> start slave;

Query OK, 0 rows affected, 1 warning (0.01 sec)

mysql> show slave status\G

*************************** 1. row ***************************
Slave_IO_State: Waiting for source to send event
Master_Host: 192.168.121.221
Master_User: chenjun
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: mysql-bin.000005
Read_Master_Log_Pos: 197
Relay_Log_File: mysql-slave1-relay-bin.000002
Relay_Log_Pos: 326
Relay_Master_Log_File: mysql-bin.000005
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Master_Log_Pos: 197
Relay_Log_Space: 543
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: 0
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Master_Server_Id: 221
Master_UUID: ebd87b10-7d6c-11f0-965d-000c29111b7d
Master_Info_File: mysql.slave_master_info
SQL_Delay: 0
SQL_Remaining_Delay: NULL
Slave_SQL_Running_State: Replica has read all relay log; waiting for more updates
Master_Retry_Count: 86400
Master_Bind:
Last_IO_Error_Timestamp:
Last_SQL_Error_Timestamp:
Master_SSL_Crl:
Master_SSL_Crlpath:
Retrieved_Gtid_Set:
Executed_Gtid_Set: 965d216d-7d64-11f0-8771-000c29111b7d:1-5
Auto_Position: 0
Replicate_Rewrite_DB:
Channel_Name:
Master_TLS_Version:
Master_public_key_path:
Get_master_public_key: 0
Network_Namespace:

1 row in set, 1 warning (0.00 sec)
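The ON/OFF semi-sync status checks can also be run without an interactive session, which is handy right after `docker restart mysql`. A sketch, assuming header-less, tab-separated `mysql -N` output; `semi_sync_on` is a hypothetical name.

```bash
# semi_sync_on ROLE: read `Variable_name<TAB>Value` rows on stdin and succeed
# if Rpl_semi_sync_ROLE_status is ON. ROLE is "master" or "slave".
semi_sync_on() {
  awk -F'\t' -v want="Rpl_semi_sync_${1}_status" '
    $1 == want { found = ($2 == "ON") }
    END { exit !found }'
}

# Usage on the master:
# docker exec mysql mysql -uroot -p123456 -N -e "SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_%_status'" | semi_sync_on master
```

A missing row counts as a failure, so the check also catches a plugin that was never loaded.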

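Test master-slave replication

# Run on the master

create database test;

# Run on the slaves

show databases;

Semi-sync test

On the master (192.168.121.221):

# Record the initial transaction counters for later comparison

mysql> SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_master_yes_tx'; # transactions that were acknowledged by a slave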

+-----------------------------+-------+

| Variable_name | Value |

+-----------------------------+-------+

| Rpl_semi_sync_master_yes_tx | 6 |

+-----------------------------+-------+

1 row in set (0.00 sec)

mysql> SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_master_no_tx'; # transactions committed without a slave acknowledgement (asynchronously)

+----------------------------+-------+

| Variable_name | Value |

+----------------------------+-------+

| Rpl_semi_sync_master_no_tx | 0 |

+----------------------------+-------+

1 row in set (0.00 sec)

# Create a test database and table on the master and insert data

mysql> CREATE DATABASE IF NOT EXISTS test;

Query OK, 1 row affected (0.00 sec)

mysql> USE test;

Database changed

mysql> CREATE TABLE IF NOT EXISTS t (id INT PRIMARY KEY, val VARCHAR(50));

Query OK, 0 rows affected (0.01 sec)

# Run a transaction

mysql> INSERT INTO t VALUES (1, 'semi-sync-test');

Query OK, 1 row affected (0.00 sec)

mysql> SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_master_yes_tx';

+-----------------------------+-------+

| Variable_name | Value |

+-----------------------------+-------+

| Rpl_semi_sync_master_yes_tx | 9 |

+-----------------------------+-------+

1 row in set (0.00 sec)

mysql> SHOW GLOBAL STATUS LIKE 'Rpl_semi_sync_master_no_tx';

+----------------------------+-------+

| Variable_name | Value |

+----------------------------+-------+

| Rpl_semi_sync_master_no_tx | 0 |

+----------------------------+-------+

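1 row in set (0.00 sec)

# If yes_tx increased, transactions committed only after a slave acknowledged them, so semi-sync is working (here yes_tx rose from 6 to 9 while no_tx stayed at 0).

# If no_tx increased, semi-sync is not in effect (check the slave connections and configuration).

5 MHA Deployment and Configuration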

5.1 Deploy the MHA Nodes

On mha-manager (192.168.121.220):

# Install dependencies (install epel-release first, because several of the Perl packages come from the EPEL repository)

yum install -y epel-release

yum install -y perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker

# Install MHA Node

wget https://github.com/yoshinorim/mha4mysql-node/releases/download/v0.58/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

# Install MHA Manager

wget https://github.com/yoshinorim/mha4mysql-manager/releases/download/v0.58/mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

# Create the MHA configuration directories

mkdir -p /etc/mha/mysql_cluster

mkdir -p /var/log/mha/mysql_cluster

# Create the MHA configuration file

cat > /etc/mha/mysql_cluster.cnf << EOF

[server default]

manager_workdir=/var/log/mha/mysql_cluster

manager_log=/var/log/mha/mysql_cluster/manager.log

master_binlog_dir=/var/lib/mysql

user=mha

password=123456

ping_interval=1

remote_workdir=/tmp

repl_user=mha

repl_password=123456

ssh_user=root

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

secondary_check_script=masterha_secondary_check -s 192.168.121.222 -s 192.168.121.223

shutdown_script=""

[server1]

hostname=192.168.121.221

port=3306

candidate_master=1

[server2]

hostname=192.168.121.222

port=3306

candidate_master=1

[server3]

hostname=192.168.121.223

port=3306

candidate_master=0

EOF

scp root@192.168.121.210:/data/docker/mysql/master_ip_failover /usr/local/bin/

scp root@192.168.121.210:/data/docker/mysql/master_ip_online_change /usr/local/bin/

chmod +x /usr/local/bin/master_ip_failover

chmod +x /usr/local/bin/master_ip_online_change
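`masterha_check_ssh` (section 5.3) tests SSH from every MySQL node to every other one, while the Ansible step earlier only distributed ansible-server's own key. A sketch to check that mesh up front, assuming root SSH as set by `ssh_user=root`; `ssh_pairs` and `check_ssh_mesh` are hypothetical helpers, and `check_ssh_mesh` is meant to run on the manager.

```bash
# ssh_pairs HOST...: print every ordered "FROM TO" pair of distinct hosts,
# the same matrix masterha_check_ssh walks through.
ssh_pairs() {
  for from in "$@"; do
    for to in "$@"; do
      if [ "$from" != "$to" ]; then
        printf '%s %s\n' "$from" "$to"
      fi
    done
  done
}

# check_ssh_mesh HOST...: try a non-interactive hop for every pair; BatchMode
# makes a missing key fail immediately instead of prompting for a password.
check_ssh_mesh() {
  ssh_pairs "$@" | while read -r from to; do
    if ssh -n -o BatchMode=yes "root@$from" "ssh -o BatchMode=yes root@$to true"; then
      echo "ok   $from -> $to"
    else
      echo "FAIL $from -> $to"
    fi
  done
}
```

Usage: `check_ssh_mesh 192.168.121.221 192.168.121.222 192.168.121.223`; every line should start with `ok`. The `-n` flag stops the outer ssh from consuming the pairs piped into the loop.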

Install MHA Node on all MySQL nodes:

# Run on ansible-server

ansible mysql -m shell -a 'yum install -y perl-DBD-MySQL'

cd /data/docker

wget https://github.com/yoshinorim/mha4mysql-node/releases/download/v0.58/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

ansible mysql -m copy -a 'src=/data/docker/mha4mysql-node-0.58-0.el7.centos.noarch.rpm dest=/tmp/'

ansible mysql -m shell -a 'rpm -ivh /tmp/mha4mysql-node-0.58-0.el7.centos.noarch.rpm'

5.2 Configure the MySQL Monitoring User

Create the MHA monitoring user on the master (replication carries it to the slaves):

# Log in to MySQL

mysql -uroot -p123456

# Create the monitoring user

CREATE USER 'mha'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON *.* TO 'mha'@'%';

ALTER USER 'mha'@'%' IDENTIFIED WITH mysql_native_password BY '123456';

FLUSH PRIVILEGES;

5.3 Test the MHA Configuration

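# Test SSH connectivity; this fails if passwordless SSH has not been set up between the manager and the MySQL nodes and among the MySQL nodes themselves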

[root@mha-manager mha]# masterha_check_ssh --conf=/etc/mha/mysql_cluster.cnf

Thu Aug 21 15:40:49 2025 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Thu Aug 21 15:40:49 2025 - [info] Reading application default configuration from /etc/mha/mysql_cluster.cnf..

Thu Aug 21 15:40:49 2025 - [info] Reading server configuration from /etc/mha/mysql_cluster.cnf..

Thu Aug 21 15:40:49 2025 - [info] Starting SSH connection tests..

Thu Aug 21 15:40:50 2025 - [debug]

Thu Aug 21 15:40:49 2025 - [debug] Connecting via SSH from root@192.168.121.221(192.168.121.221:22) to root@192.168.121.222(192.168.121.222:22)..

Thu Aug 21 15:40:50 2025 - [debug] ok.

Thu Aug 21 15:40:50 2025 - [debug] Connecting via SSH from root@192.168.121.221(192.168.121.221:22) to root@192.168.121.223(192.168.121.223:22)..

Thu Aug 21 15:40:50 2025 - [debug] ok.

Thu Aug 21 15:40:50 2025 - [debug]

Thu Aug 21 15:40:50 2025 - [debug] Connecting via SSH from root@192.168.121.222(192.168.121.222:22) to root@192.168.121.221(192.168.121.221:22)..

Thu Aug 21 15:40:50 2025 - [debug] ok.

Thu Aug 21 15:40:50 2025 - [debug] Connecting via SSH from root@192.168.121.222(192.168.121.222:22) to root@192.168.121.223(192.168.121.223:22)..

Thu Aug 21 15:40:50 2025 - [debug] ok.

Thu Aug 21 15:40:51 2025 - [debug]

Thu Aug 21 15:40:50 2025 - [debug] Connecting via SSH from root@192.168.121.223(192.168.121.223:22) to root@192.168.121.221(192.168.121.221:22)..

Thu Aug 21 15:40:51 2025 - [debug] ok.

Thu Aug 21 15:40:51 2025 - [debug] Connecting via SSH from root@192.168.121.223(192.168.121.223:22) to root@192.168.121.222(192.168.121.222:22)..

Thu Aug 21 15:40:51 2025 - [debug] ok.

Thu Aug 21 15:40:51 2025 - [info] All SSH connection tests passed successfully.

# Test MySQL replication

[root@mha-manager mha]# masterha_check_repl --conf=/etc/mha/mysql_cluster.cnf

Thu Aug 21 15:40:33 2025 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Thu Aug 21 15:40:33 2025 - [info] Reading application default configuration from /etc/mha/mysql_cluster.cnf..

Thu Aug 21 15:40:33 2025 - [info] Reading server configuration from /etc/mha/mysql_cluster.cnf..

Thu Aug 21 15:40:33 2025 - [info] MHA::MasterMonitor version 0.58.

Thu Aug 21 15:40:34 2025 - [info] GTID failover mode = 1

Thu Aug 21 15:40:34 2025 - [info] Dead Servers:

Thu Aug 21 15:40:34 2025 - [info] Alive Servers:

Thu Aug 21 15:40:34 2025 - [info] 192.168.121.221(192.168.121.221:3306)

Thu Aug 21 15:40:34 2025 - [info] 192.168.121.222(192.168.121.222:3306)

Thu Aug 21 15:40:34 2025 - [info] 192.168.121.223(192.168.121.223:3306)

Thu Aug 21 15:40:34 2025 - [info] Alive Slaves:

Thu Aug 21 15:40:34 2025 - [info] 192.168.121.222(192.168.121.222:3306) Version=8.0.28 (oldest major version between slaves) log-bin:enabled

Thu Aug 21 15:40:34 2025 - [info] GTID ON

Thu Aug 21 15:40:34 2025 - [info] Replicating from 192.168.121.221(192.168.121.221:3306)

Thu Aug 21 15:40:34 2025 - [info] Primary candidate for the new Master (candidate_master is set)

Thu Aug 21 15:40:34 2025 - [info] 192.168.121.223(192.168.121.223:3306) Version=8.0.28 (oldest major version between slaves) log-bin:enabled

Thu Aug 21 15:40:34 2025 - [info] GTID ON

Thu Aug 21 15:40:34 2025 - [info] Replicating from 192.168.121.221(192.168.121.221:3306)

Thu Aug 21 15:40:34 2025 - [info] Current Alive Master: 192.168.121.221(192.168.121.221:3306)

Thu Aug 21 15:40:34 2025 - [info] Checking slave configurations..

Thu Aug 21 15:40:34 2025 - [info] read_only=1 is not set on slave 192.168.121.222(192.168.121.222:3306).

Thu Aug 21 15:40:34 2025 - [info] read_only=1 is not set on slave 192.168.121.223(192.168.121.223:3306).

Thu Aug 21 15:40:34 2025 - [info] Checking replication filtering settings..

Thu Aug 21 15:40:34 2025 - [info] binlog_do_db= , binlog_ignore_db=

Thu Aug 21 15:40:34 2025 - [info] Replication filtering check ok.

Thu Aug 21 15:40:34 2025 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Thu Aug 21 15:40:34 2025 - [info] Checking SSH publickey authentication settings on the current master..

Thu Aug 21 15:40:34 2025 - [info] HealthCheck: SSH to 192.168.121.221 is reachable.

Thu Aug 21 15:40:34 2025 - [info]

192.168.121.221(192.168.121.221:3306) (current master)
 +--192.168.121.222(192.168.121.222:3306)
 +--192.168.121.223(192.168.121.223:3306)

Thu Aug 21 15:40:34 2025 - [info] Checking replication health on 192.168.121.222..

Thu Aug 21 15:40:34 2025 - [info] ok.

Thu Aug 21 15:40:34 2025 - [info] Checking replication health on 192.168.121.223..

Thu Aug 21 15:40:34 2025 - [info] ok.

Thu Aug 21 15:40:34 2025 - [info] Checking master_ip_failover_script status:

Thu Aug 21 15:40:34 2025 - [info] /usr/local/bin/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.121.221 --orig_master_ip=192.168.121.221 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens32:1 down==/sbin/ifconfig ens32:1 192.168.121.200===

Checking the Status of the script.. OK

Thu Aug 21 15:40:34 2025 - [info] OK.

Thu Aug 21 15:40:34 2025 - [warning] shutdown_script is not defined.

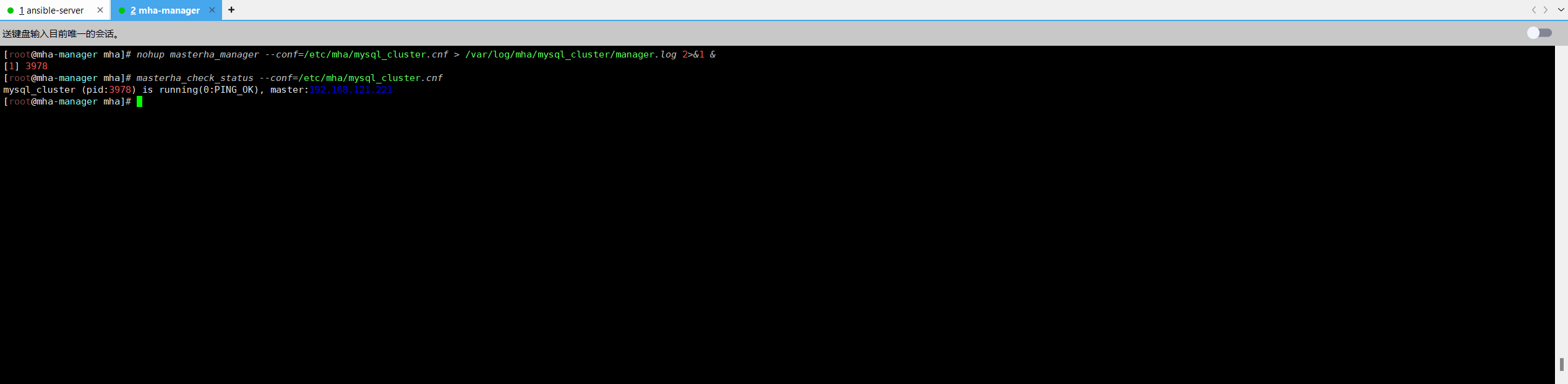

Thu Aug 21 15:40:34 2025 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

# Start MHA Manager

nohup masterha_manager --conf=/etc/mha/mysql_cluster.cnf > /var/log/mha/mysql_cluster/manager.log 2>&1 &

# Check the MHA status

[root@mha-manager mha]# masterha_check_status --conf=/etc/mha/mysql_cluster.cnf

mysql_cluster (pid:2942) is running(0:PING_OK), master:192.168.121.221

Output like the above means MHA Manager has started successfully.
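When the same check has to run unattended (from cron or a monitoring probe, for example), the status line can be parsed rather than read by eye. A minimal sketch, assuming the one-line format shown above, `<app> (pid:N) is running(0:PING_OK), master:<ip>`; the function name `mha_master` is hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: print the master IP from a masterha_check_status line and
# fail (exit status 1) unless the line reports a running manager with PING_OK.
mha_master() {
    local line=$1
    # Expected shape: "<app> (pid:NNNN) is running(0:PING_OK), master:<ip>"
    local re='is running\(0:PING_OK\), master:([0-9.]+)'
    if [[ $line =~ $re ]]; then
        echo "${BASH_REMATCH[1]}"
        return 0
    fi
    return 1
}
```

For example, `mha_master "$(masterha_check_status --conf=/etc/mha/mysql_cluster.cnf)"` prints 192.168.121.221 here, and a non-zero exit status means the manager is not running with PING_OK.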

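5.4 Failover test: simulate a mysql-master outage and have mysql-slave1 become the new master

5.4.1 Manually bring up the VIP on the MySQL master node

ifconfig ens32:1 192.168.121.200/24

5.4.2 Follow the monitoring log on the mha-manager node

[root@mha-manager mha]# tail -f /var/log/mha/mysql_cluster/manager.log

5.4.3 Simulate a mysql-master outage by stopping the master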

[root@mysql-master ~]# docker stop mysql

mysql

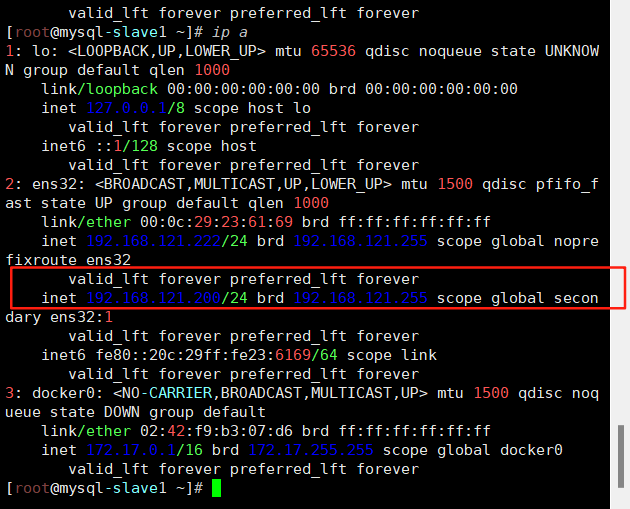

Check whether the VIP has moved to mysql-slave1.
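Besides the screenshot, the same check can be done from the shell on mysql-slave1. A minimal sketch, assuming the iproute2 one-line output of `ip -o -4 addr show`, where the fourth field is `address/prefix`; `has_vip` is a hypothetical helper name:

```bash
#!/bin/bash
# Hypothetical helper: succeed if the given IPv4 address appears in the output of
# `ip -o -4 addr show`, read from stdin (so it can also be run on saved output).
has_vip() {
    local vip=$1
    # In one-line (-o) mode the 4th field is "address/prefix", e.g. 192.168.121.200/24
    awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

For example: `ip -o -4 addr show dev ens32 | has_vip 192.168.121.200 && echo "VIP is on this host"`. An alias added with `ifconfig ens32:1` shows up as a secondary address on ens32, so it is matched as well.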

5.4.4 Check that MHA now reports mysql-slave1's IP as the master

[root@mha-manager mha]# masterha_check_status --conf=/etc/mha/mysql_cluster.cnf

mysql_cluster (pid:4680) is running(0:PING_OK), master:192.168.121.222

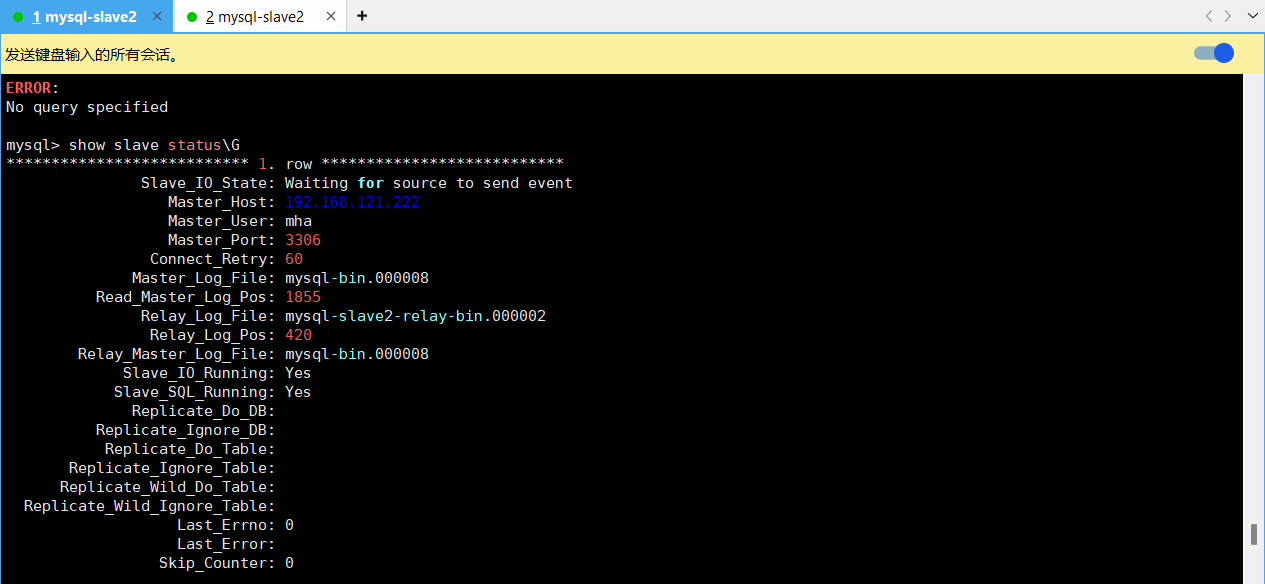

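Then check the master information on mysql-slave2.

This completes the test: when the master failed, the master role switched automatically to slave1, and slave2 now replicates from slave1 as its new master.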

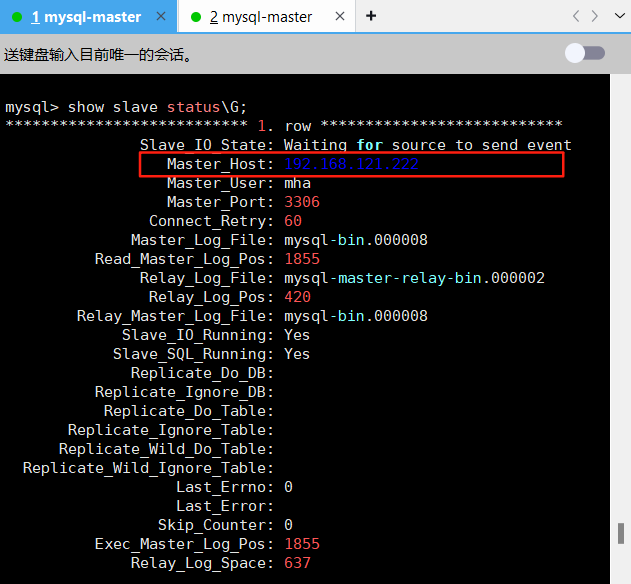

5.4.5 Recover the original mysql-master node

[root@mysql-master ~]# docker start mysql

mysql

[root@mysql-master ~]# docker exec -it mysql bash

root@mysql-master:/# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 10

Server version: 8.0.28 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> change master to master_host='192.168.121.222',master_user='mha',master_password='123456',master_port=3306,master_auto_position=1;

Query OK, 0 rows affected, 8 warnings (0.01 sec)

mysql> start slave;

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for source to send event

Master_Host: 192.168.121.222

Master_User: mha

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000008

Read_Master_Log_Pos: 1855

Relay_Log_File: mysql-master-relay-bin.000002

Relay_Log_Pos: 420

Relay_Master_Log_File: mysql-bin.000008

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 1855

Relay_Log_Space: 637

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 222

Master_UUID: e6b13ba9-7d6c-11f0-8a0b-000c29236169

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Replica has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set: 965d216d-7d64-11f0-8771-000c29111b7d:1-10,

e6b13ba9-7d6c-11f0-8a0b-000c29236169:1-4,

ebd87b10-7d6c-11f0-965d-000c29111b7d:1-56

Auto_Position: 1

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

Master_public_key_path:

Get_master_public_key: 0

Network_Namespace:

1 row in set, 1 warning (0.00 sec)
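The two fields that matter most in this output are Slave_IO_Running and Slave_SQL_Running, which must both be Yes. A minimal sketch that checks them from the `\G` output and prints Master_Host, assuming the SHOW SLAVE STATUS field names used above (SHOW REPLICA STATUS in MySQL 8.0.22 and later reports Replica_IO_Running, Replica_SQL_Running and Source_Host instead); `replica_ok` is a hypothetical name:

```bash
#!/bin/bash
# Hypothetical helper: read `SHOW SLAVE STATUS\G` output on stdin; print Master_Host
# and succeed only if both Slave_IO_Running and Slave_SQL_Running are "Yes".
replica_ok() {
    awk -F': ' '
        { sub(/^[ \t]+/, "", $1) }              # field names are right-aligned with spaces
        $1 == "Master_Host"       { host = $2 }
        $1 == "Slave_IO_Running"  { io   = $2 }
        $1 == "Slave_SQL_Running" { sql  = $2 }
        END {
            if (io == "Yes" && sql == "Yes") { print host; exit 0 }
            exit 1
        }'
}
```

On the recovered node this could be run as `docker exec -i mysql mysql -uroot -p123456 -e 'SHOW SLAVE STATUS\G' | replica_ok`, which should print 192.168.121.222.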

5.4.6 Restart MHA Manager and check which node is now the master

[root@mha-manager mha]# systemctl restart mha

[root@mha-manager mha]# masterha_check_status --conf=/etc/mha/mysql_cluster.cnf

mysql_cluster (pid:5425) is running(0:PING_OK), master:192.168.121.222

5.5 Configure MHA to start automatically

[root@mha-manager mha]# nohup masterha_manager --conf=/etc/mha/mysql_cluster.cnf > /var/log/mha/mysql_cluster/manager.log 2>&1 &

[1] 3978

[root@mha-manager mha]# masterha_check_status --conf=/etc/mha/mysql_cluster.cnf

mysql_cluster (pid:3978) is running(0:PING_OK), master:192.168.121.221

[root@mha-manager mha]# vim /etc/systemd/system/mha.service

[root@mha-manager mha]# systemctl daemon-reload

[root@mha-manager mha]# systemctl enable mha

Created symlink from /etc/systemd/system/multi-user.target.wants/mha.service to /etc/systemd/system/mha.service.

[root@mha-manager mha]# systemctl start mha

[root@mha-manager mha]# systemctl status mha

● mha.service - MHA Manager for MySQL Cluster

Loaded: loaded (/etc/systemd/system/mha.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2025-08-20 02:24:22 CST; 4s ago

Main PID: 4164 (perl)

Tasks: 1

Memory: 16.9M

CGroup: /system.slice/mha.service

└─4164 perl /usr/bin/masterha_manager --conf=/etc/mha/mysql_cluster.cnf

Aug 20 02:24:22 mha-manager systemd[1]: Started MHA Manager for MySQL Cluster.

Aug 20 02:24:22 mha-manager masterha_manager[4164]: Wed Aug 20 02:24:22 2025 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Aug 20 02:24:22 mha-manager masterha_manager[4164]: Wed Aug 20 02:24:22 2025 - [info] Reading application default configuration from /etc/mha/mysql_cluster.cnf..

Aug 20 02:24:22 mha-manager masterha_manager[4164]: Wed Aug 20 02:24:22 2025 - [info] Reading server configuration from /etc/mha/mysql_cluster.cnf..
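The contents of mha.service are not shown above. One possible unit, reconstructed from the status output (the description and the masterha_manager command line) and otherwise an assumption, can be written with a small helper; `write_mha_unit` is a hypothetical name, and the ordering on network-online.target is an added assumption:

```bash
#!/bin/bash
# Hypothetical helper: write a systemd unit for MHA Manager (default path
# /etc/systemd/system/mha.service). Description and ExecStart mirror the
# status output above; the remaining settings are assumptions.
write_mha_unit() {
    local path=${1:-/etc/systemd/system/mha.service}
    cat > "$path" <<'EOF'
[Unit]
Description=MHA Manager for MySQL Cluster
After=network-online.target
Wants=network-online.target

[Service]
Type=simple
ExecStart=/usr/bin/masterha_manager --conf=/etc/mha/mysql_cluster.cnf

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage: `write_mha_unit && systemctl daemon-reload && systemctl enable mha && systemctl start mha`. Keep in mind that masterha_manager exits once it has completed a failover, so the service stops at that point until it is restarted, as in 5.4.6.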

6 MyCat2 Deployment and Configuration

6.1 Install MyCat2

6.1.1 Deploy the MySQL instance that MyCat2 needs on the mycat1 and mycat2 servers

[root@mycat1 ~]# docker run -d --name mysql --restart=always -p 3306:3306 -v /data/mysql/data:/var/lib/mysql -v /data/mysql/logs:/var/log/mysql -e MYSQL_ROOT_PASSWORD=123456 docker.1ms.run/mysql:8.0.28

[root@mycat2 ~]# docker run -d --name mysql --restart=always -p 3306:3306 -v /data/mysql/data:/var/lib/mysql -v /data/mysql/logs:/var/log/mysql -e MYSQL_ROOT_PASSWORD=123456 docker.1ms.run/mysql:8.0.28

6.1.2 Install the Java environment

# Install the Java runtime that MyCat requires

[root@mycat1 ~]# yum install -y java

[root@mycat2 ~]# yum install -y java

6.1.3 Download the MyCat installation package and JAR

Link: https://pan.baidu.com/s/1w9hr2EH9Cpqt6LFjn8MPrw?pwd=63hu (extraction code: 63hu)

6.1.4 Unzip the MyCat ZIP package

[root@mycat1 ~]# yum install -y unzip

[root@mycat2 ~]# yum install -y unzip

[root@mycat1 ~]# unzip mycat2-install-template-1.21.zip

[root@mycat2 ~]# unzip mycat2-install-template-1.21.zip

6.1.5 Move the extracted mycat directory to /usr/local/

[root@mycat1 ~]# mv mycat /usr/local/

[root@mycat2 ~]# mv mycat /usr/local/

6.1.6 Put the JAR into /usr/local/mycat/lib

[root@mycat1 ~]# mv mycat2-1.22-release-jar-with-dependencies-2022-10-13.jar /usr/local/mycat/lib/

[root@mycat2 ~]# mv mycat2-1.22-release-jar-with-dependencies-2022-10-13.jar /usr/local/mycat/lib/

6.1.7 Make the files in bin executable to avoid startup errors

[root@mycat1 ~]# cd /usr/local/mycat/

[root@mycat1 mycat]# ls

bin conf lib logs

[root@mycat1 mycat]# chmod +x bin/*

[root@mycat1 mycat]# cd bin/

[root@mycat1 bin]# ll

total 2588

-rwxr-xr-x 1 root root 15666 Mar 5 2021 mycat

-rwxr-xr-x 1 root root 3916 Mar 5 2021 mycat.bat

-rwxr-xr-x 1 root root 281540 Mar 5 2021 wrapper-aix-ppc-32

-rwxr-xr-x 1 root root 319397 Mar 5 2021 wrapper-aix-ppc-64

-rwxr-xr-x 1 root root 253808 Mar 5 2021 wrapper-hpux-parisc-64

-rwxr-xr-x 1 root root 140198 Mar 5 2021 wrapper-linux-ppc-64

-rwxr-xr-x 1 root root 99401 Mar 5 2021 wrapper-linux-x86-32

-rwxr-xr-x 1 root root 111027 Mar 5 2021 wrapper-linux-x86-64

-rwxr-xr-x 1 root root 114052 Mar 5 2021 wrapper-macosx-ppc-32

-rwxr-xr-x 1 root root 233604 Mar 5 2021 wrapper-macosx-universal-32

-rwxr-xr-x 1 root root 253432 Mar 5 2021 wrapper-macosx-universal-64

-rwxr-xr-x 1 root root 112536 Mar 5 2021 wrapper-solaris-sparc-32

-rwxr-xr-x 1 root root 148512 Mar 5 2021 wrapper-solaris-sparc-64

-rwxr-xr-x 1 root root 110992 Mar 5 2021 wrapper-solaris-x86-32

-rwxr-xr-x 1 root root 204800 Mar 5 2021 wrapper-windows-x86-32.exe

-rwxr-xr-x 1 root root 220672 Mar 5 2021 wrapper-windows-x86-64.exe

[root@mycat2 ~]# cd /usr/local/mycat/

[root@mycat2 mycat]# ls

bin conf lib logs

[root@mycat2 mycat]# chmod +x bin/*

[root@mycat2 mycat]# cd bin/

[root@mycat2 bin]# ll

total 2588

-rwxr-xr-x 1 root root 15666 Mar 5 2021 mycat

-rwxr-xr-x 1 root root 3916 Mar 5 2021 mycat.bat

-rwxr-xr-x 1 root root 281540 Mar 5 2021 wrapper-aix-ppc-32

-rwxr-xr-x 1 root root 319397 Mar 5 2021 wrapper-aix-ppc-64

-rwxr-xr-x 1 root root 253808 Mar 5 2021 wrapper-hpux-parisc-64

-rwxr-xr-x 1 root root 140198 Mar 5 2021 wrapper-linux-ppc-64

-rwxr-xr-x 1 root root 99401 Mar 5 2021 wrapper-linux-x86-32

-rwxr-xr-x 1 root root 111027 Mar 5 2021 wrapper-linux-x86-64

-rwxr-xr-x 1 root root 114052 Mar 5 2021 wrapper-macosx-ppc-32

-rwxr-xr-x 1 root root 233604 Mar 5 2021 wrapper-macosx-universal-32

-rwxr-xr-x 1 root root 253432 Mar 5 2021 wrapper-macosx-universal-64

-rwxr-xr-x 1 root root 112536 Mar 5 2021 wrapper-solaris-sparc-32

-rwxr-xr-x 1 root root 148512 Mar 5 2021 wrapper-solaris-sparc-64

-rwxr-xr-x 1 root root 110992 Mar 5 2021 wrapper-solaris-x86-32

-rwxr-xr-x 1 root root 204800 Mar 5 2021 wrapper-windows-x86-32.exe

-rwxr-xr-x 1 root root 220672 Mar 5 2021 wrapper-windows-x86-64.exe

6.1.8 Add MyCat2 to the PATH environment variable and set it to start at boot

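# Single quotes keep $PATH unexpanded until .bashrc runs, instead of freezing the current PATH into the file

[root@mycat1 ~]# echo 'PATH=/usr/local/mycat/bin/:$PATH' >>/root/.bashrc

[root@mycat1 ~]# PATH=/usr/local/mycat/bin/:$PATH

[root@mycat2 ~]# echo 'PATH=/usr/local/mycat/bin/:$PATH' >>/root/.bashrc

[root@mycat2 ~]# PATH=/usr/local/mycat/bin/:$PATH

6.1.9 Edit the default datasource file prototypeDs.datasource.json and start MyCat (it connects to the MySQL container running in Docker on the same host)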

[root@mycat1 ~]# cd /usr/local/mycat/

[root@mycat1 mycat]# ls

bin conf lib logs

[root@mycat1 mycat]# cd conf/datasources/

[root@mycat1 datasources]# ls

prototypeDs.datasource.json

[root@mycat1 datasources]# vim prototypeDs.datasource.json

[root@mycat2 ~]# cd /usr/local/mycat/

[root@mycat2 mycat]# ls

bin conf lib logs

[root@mycat2 mycat]# cd conf/datasources/

[root@mycat2 datasources]# ls

prototypeDs.datasource.json

[root@mycat2 datasources]# vim prototypeDs.datasource.json{"dbType":"mysql","idleTimeout":60000,"initSqls":[],"initSqlsGetConnection":true,"instanceType":"READ_WRITE","maxCon":1000,"maxConnectTimeout":3000,"maxRetryCount":5,"minCon":1,"name":"prototypeDs","password":"123456", # 本机MySQL密码"type":"JDBC","url":"jdbc:mysql://localhost:3306/mysql?useUnicode=true&serverTimezone=Asia/Shanghai&characterEncoding=UTF-8", # localhost表示本机可以改可不改"user":"root", # 本机MySQL用户名"weight":0

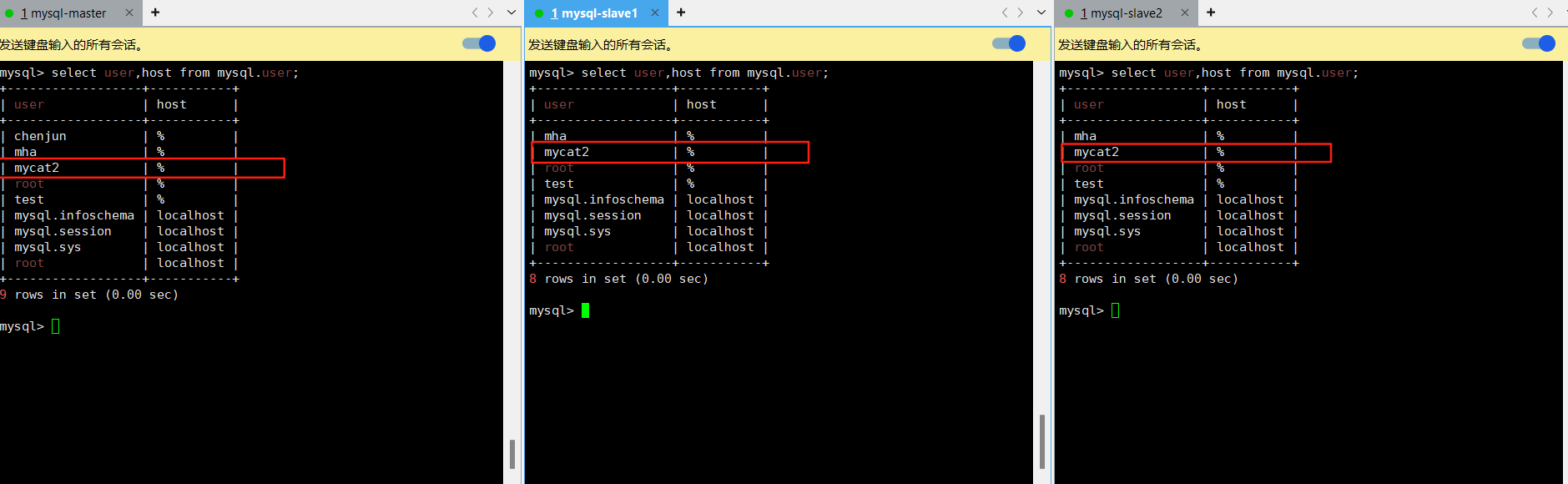

}[root@mycat2 datasources]# mycat start6.2 mysql集群(mysql-master、mysql-slave1、mysql-slave2)新建授权用户,允许mycat2访问

# Log in to mysql-master

mysql -uroot -p123456

mysql> CREATE USER 'mycat2'@'%' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.01 sec)

mysql> GRANT ALL PRIVILEGES ON *.* TO 'mycat2'@'%';

Query OK, 0 rows affected (0.00 sec)

# MySQL 8.0 and later use the caching_sha2_password authentication plugin by default, which older clients and middleware (such as MyCat) may not support, so the client and server authentication plugins can be incompatible.

# Switch the user to the mysql_native_password plugin

mysql> ALTER USER 'mycat2'@'%' IDENTIFIED WITH mysql_native_password BY '123456';

Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> select user,host from mysql.user;

+------------------+-----------+

| user | host |

+------------------+-----------+

| chenjun | % |

| mha | % |

| mycat2 | % |

| root | % |

| test | % |

| mysql.infoschema | localhost |

| mysql.session | localhost |

| mysql.sys | localhost |

| root | localhost |

+------------------+-----------+

9 rows in set (0.00 sec)

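Replication between the MySQL nodes is already in place, so the user only needs to be created on the mysql-master node; the other nodes receive it through replication.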

6.3 Verify database access

[root@mycat1 datasources]# mysql -umycat2 -p123456 -h 192.168.121.221

[root@mycat1 datasources]# mysql -umycat2 -p123456 -h 192.168.121.222

[root@mycat1 datasources]# mysql -umycat2 -p123456 -h 192.168.121.223
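The three logins above can also be checked in one pass. A minimal sketch, assuming the mysql client is installed on the MyCat host and the mycat2 password is supplied through a MYCAT2_PWD environment variable (a hypothetical name, used here to keep the password out of the script); `check_backends` is likewise hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: log in to each backend as mycat2 and run SELECT 1,
# printing "<host> OK" or "<host> FAILED". Returns non-zero if any login fails.
# The password comes from the MYCAT2_PWD environment variable (an assumption).
check_backends() {
    local host failed=0
    for host in "$@"; do
        if mysql -umycat2 -p"$MYCAT2_PWD" -h "$host" -P3306 -N -e 'SELECT 1' >/dev/null 2>&1; then
            echo "$host OK"
        else
            echo "$host FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: `MYCAT2_PWD=123456 check_backends 192.168.121.221 192.168.121.222 192.168.121.223`.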

6.4 Log in to the MyCat2 client and create the logical database testdb (first create a testdb database on mysql-master and insert a test row)

----------------------- mysql-master ---------------------------------

mysql> create database testdb;

Query OK, 1 row affected (0.01 sec)

mysql> use testdb;

Database changed

mysql> create table test_table(id int, name varchar(50),age int);

Query OK, 0 rows affected (0.01 sec)

mysql> insert into test_table(id,name,age) values(1,'test',20);

Query OK, 1 row affected (0.00 sec)

mysql> show tables;

+------------------+

| Tables_in_testdb |

+------------------+

| test_table |

+------------------+

1 row in set (0.00 sec)

----------------------- mysql-master ---------------------------------

----------------------- mycat1 mycat2---------------------------------

[root@mycat1 datasources]# mysql -uroot -p123456 -P8066 -h 192.168.121.180

# Create the logical database testdb

create database testdb;

[root@mycat2 datasources]# mysql -uroot -p123456 -P8066 -h 192.168.121.190

# Create the logical database testdb

create database testdb;

This creates a schema file for the new logical database, testdb.schema.json:

[root@mycat1 conf]# cd /usr/local/mycat/conf/schemas/

[root@mycat1 schemas]# ls

information_schema.schema.json mysql.schema.json testdb.schema.json

[root@mycat2 conf]# cd /usr/local/mycat/conf/schemas/

[root@mycat2 schemas]# ls

information_schema.schema.json mysql.schema.json testdb.schema.json

6.5 Edit testdb.schema.json to map the logical database to its backend cluster

[root@mycat1 schemas]# vim testdb.schema.json

[root@mycat2 schemas]# vim testdb.schema.json

{

  "customTables":{},

  "globalTables":{},

  "normalProcedures":{},

  "normalTables":{},

  "schemaName":"testdb",

  "targetName":"cluster",

  "shardingTables":{},

  "views":{}

}

The line to add is "targetName":"cluster"; it points the logical database at the backend datasource cluster named cluster.

# Restart MyCat

[root@mycat1 schemas]# mycat restart

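[root@mycat2 schemas]# mycat restart

6.6 MyCat2 also supports configuring datasources and clusters through annotations, which is more flexible than editing the JSON configuration files. The complete steps for adding the datasources and the cluster this way are as follows: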

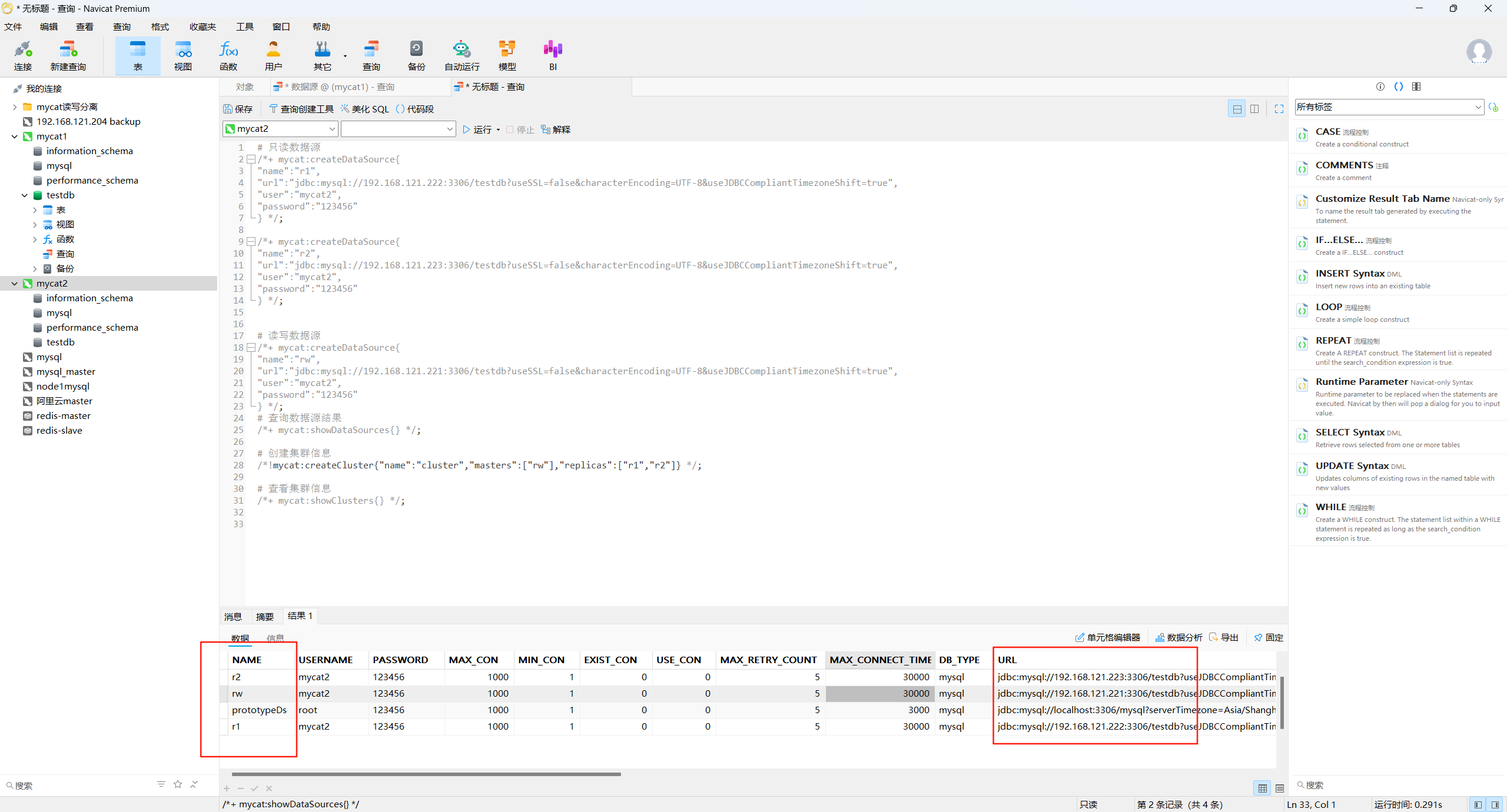

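# Run the following on both MyCat servers; connecting with Navicat to run the statements is recommended

# Log in to MyCat

[root@mycat1 ~]# mysql -uroot -p123456 -P8066 -h 192.168.121.190

# Add the read-write datasource, i.e. the MySQL master behind the VIP 192.168.121.200. The earlier MHA failover test moved the master role to slave1, so the read-write datasource uses the VIP address

/*+ mycat:createDataSource{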

"name":"rw",

"url":"jdbc:mysql://192.168.121.200:3306/testdb?useSSL=false&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true",

"user":"mycat2",

"password":"123456"

} */;

# Add a read-only datasource: the original MySQL master, 192.168.121.221

/*+ mycat:createDataSource{

"name":"r1",

"url":"jdbc:mysql://192.168.121.221:3306/testdb?useSSL=false&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true",

"user":"mycat2",

"password":"123456"

} */;

# Add a read-only datasource: the MySQL slave 192.168.121.223

/*+ mycat:createDataSource{

"name":"r2",

"url":"jdbc:mysql://192.168.121.223:3306/testdb?useSSL=false&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true",

"user":"mycat2",

"password":"123456"

} */;

# Show the datasources

/*+ mycat:showDataSources{} */;

# Create the cluster

/*!mycat:createCluster{"name":"cluster","masters":["rw"],"replicas":["r1","r2"]} */;

# Show the cluster

/*+ mycat:showClusters{} */;
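Because these statements travel inside comments, the client used to send them matters: the mysql and MariaDB command-line clients have historically stripped comments from statements unless `--comments` (`-c`) is given, and whether `/*+ ... */` text survives depends on the client version. A minimal sketch that sends a file of these statements to every MyCat node with `--comments`; the helper name, the SQL file and the MYCAT_PWD variable are hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: send a file of MyCat2 annotation statements to each MyCat node.
# --comments keeps comments in the statements instead of stripping them, so the
# /*+ mycat:... */ annotations reach MyCat. The password comes from MYCAT_PWD (assumption).
apply_hints() {
    local sql_file=$1 host rc=0
    shift
    for host in "$@"; do
        echo "== $host"
        mysql --comments -uroot -p"$MYCAT_PWD" -h "$host" -P8066 < "$sql_file" || rc=1
    done
    return "$rc"
}
```

For example, with the createDataSource and createCluster statements saved in a file such as mycat_hints.sql: `MYCAT_PWD=123456 apply_hints mycat_hints.sql 192.168.121.180 192.168.121.190`.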

6.7 Verify read/write splitting

[root@mycat1 schemas]# mysql -uroot -p123456 -P8066 -h192.168.121.180

[root@mycat2 schemas]# mysql -uroot -p123456 -P8066 -h192.168.121.190

---------------------------mycat1-------------------------------------------

[root@mycat1 datasources]# mysql -uroot -p123456 -P8066 -h192.168.121.180

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.33-mycat-2.0 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;

+--------------------+

| `Database` |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| testdb |

+--------------------+

4 rows in set (0.01 sec)

MySQL [(none)]> use testdb;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [testdb]> show tables;

+------------------+

| Tables_in_testdb |

+------------------+

| test_table |

+------------------+

1 row in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+------+------+

| id | name | age |

+------+------+------+

| 1 | test | 20 |

+------+------+------+

1 row in set (0.07 sec)

---------------------------mycat1-------------------------------------------

---------------------------mycat2-------------------------------------------

[root@mycat2 datasources]# mysql -uroot -p123456 -P8066 -h192.168.121.190

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.7.33-mycat-2.0 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;

+--------------------+

| `Database` |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| testdb |

+--------------------+

4 rows in set (0.11 sec)

MySQL [(none)]> use testdb;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [testdb]> show tables;

+------------------+

| Tables_in_testdb |

+------------------+

| test_table |

+------------------+

1 row in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+------+------+

| id | name | age |

+------+------+------+

| 1 | test | 20 |

+------+------+------+

1 row in set (0.07 sec)

---------------------------mycat2-------------------------------------------

On both MyCat nodes, the logical database testdb is now mapped to the real backend MySQL databases.

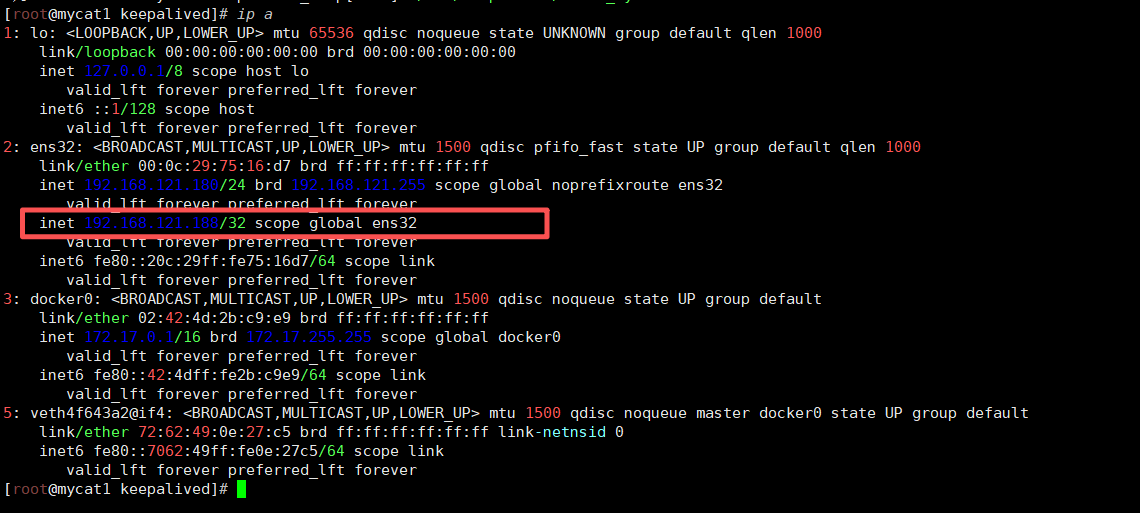

6.7.1 Verify read/write splitting

To make the effect easier to see, stop replication on the replicas one at a time.

# mysql-slave2: stop replication

mysql> stop slave;

# mysql-slave1 (the current master): insert a row

mysql> insert into test_table(id,name,age)values(3,'li',23);

Query OK, 1 row affected (0.00 sec)

# mysql-master (the original master): stop replication

mysql> stop slave;

# mysql-slave1 (the current master): insert another row

mysql> insert into test_table(id,name,age)values(4,'w',24);

Query OK, 1 row affected (0.01 sec)

# Log in to the MyCat client and query test_table

[root@mycat1 datasources]# mysql -uroot -p123456 -P8066 -h 192.168.121.180

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.33-mycat-2.0 MySQL Community Server - GPL

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> use testdb;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

| 4 | w | 24 |

+------+----------+------+

4 rows in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

| 4 | w | 24 |

+------+----------+------+

4 rows in set (0.01 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

+------+----------+------+

3 rows in set (0.01 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

+------+----------+------+

3 rows in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

| 4 | w | 24 |

| 5 | r | 25 |

+------+----------+------+

5 rows in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

| 4 | w | 24 |

+------+----------+------+

4 rows in set (0.00 sec)

MySQL [testdb]> select * from test_table;

+------+----------+------+

| id | name | age |

+------+----------+------+

| 1 | test | 20 |

| 2 | chenzong | 21 |

| 3 | li | 23 |

+------+----------+------+

3 rows in set (0.01 sec)

The result keeps changing from one query to the next; this is the load balancing of read requests we are after.

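Reads of test_table through the MyCat logical database are load-balanced across the three real backend databases.

Writes to test_table, by contrast, are forwarded only to the backend master.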
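Repeating the SELECT by hand works, but a tally makes the distribution easier to see. A minimal sketch, assuming replication is still stopped as described above so that the backends return different row counts; `sample_reads` and MYCAT_PWD are hypothetical names:

```bash
#!/bin/bash
# Hypothetical helper: run the same COUNT(*) through one MyCat node several times and
# tally the distinct answers (number of occurrences, then the row count returned).
sample_reads() {
    local host=$1 rounds=${2:-20} i
    for ((i = 0; i < rounds; i++)); do
        # -N drops the column header, so each call prints just the number
        mysql -uroot -p"$MYCAT_PWD" -h "$host" -P8066 -N -e 'SELECT COUNT(*) FROM testdb.test_table' 2>/dev/null
    done | sort | uniq -c
}
```

For example, `MYCAT_PWD=123456 sample_reads 192.168.121.180 30`; more than one distinct count in the output means the reads reached more than one backend.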

7 Configure Keepalived for MyCat High Availability

7.1 Install Keepalived on both MyCat nodes (run on ansible-server):

[root@ansible-server ~]# ansible mycat -m shell -a 'yum install -y keepalived'

[WARNING]: Consider using the yum module rather than running 'yum'. If you need to use

command because yum is insufficient you can add 'warn: false' to this command task or set

'command_warnings=False' in ansible.cfg to get rid of this message.

192.168.121.180 | CHANGED | rc=0 >>

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

Resolving Dependencies

--> Running transaction check

---> Package keepalived.x86_64.0.1.3.5-19.el7 will be installed

--> Processing Dependency: libnetsnmpmibs.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Processing Dependency: libnetsnmpagent.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Processing Dependency: libnetsnmp.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Running transaction check

---> Package net-snmp-agent-libs.x86_64.1.5.7.2-49.el7_9.4 will be installed

--> Processing Dependency: libsensors.so.4()(64bit) for package: 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64

---> Package net-snmp-libs.x86_64.1.5.7.2-49.el7_9.4 will be installed

--> Running transaction check

---> Package lm_sensors-libs.x86_64.0.3.4.0-8.20160601gitf9185e5.el7_9.1 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

keepalived x86_64 1.3.5-19.el7 base 332 k

Installing for dependencies:

lm_sensors-libs x86_64 3.4.0-8.20160601gitf9185e5.el7_9.1 updates 42 k

net-snmp-agent-libs x86_64 1:5.7.2-49.el7_9.4 updates 707 k

net-snmp-libs x86_64 1:5.7.2-49.el7_9.4 updates 752 k

Transaction Summary

================================================================================

Install 1 Package (+3 Dependent packages)

Total download size: 1.8 M

Installed size: 6.0 M

Downloading packages:

--------------------------------------------------------------------------------

Total 164 kB/s | 1.8 MB 00:11

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 1/4

Installing : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 2/4

Installing : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 3/4

Installing : keepalived-1.3.5-19.el7.x86_64 4/4

Verifying : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 1/4

Verifying : keepalived-1.3.5-19.el7.x86_64 2/4

Verifying : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 3/4

Verifying : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 4/4

Installed:

keepalived.x86_64 0:1.3.5-19.el7

Dependency Installed:

lm_sensors-libs.x86_64 0:3.4.0-8.20160601gitf9185e5.el7_9.1 net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.4 net-snmp-libs.x86_64 1:5.7.2-49.el7_9.4

Complete!

192.168.121.190 | CHANGED | rc=0 >>

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

Resolving Dependencies

--> Running transaction check

---> Package keepalived.x86_64.0.1.3.5-19.el7 will be installed

--> Processing Dependency: libnetsnmpmibs.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Processing Dependency: libnetsnmpagent.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Processing Dependency: libnetsnmp.so.31()(64bit) for package: keepalived-1.3.5-19.el7.x86_64

--> Running transaction check

---> Package net-snmp-agent-libs.x86_64.1.5.7.2-49.el7_9.4 will be installed

--> Processing Dependency: libsensors.so.4()(64bit) for package: 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64

---> Package net-snmp-libs.x86_64.1.5.7.2-49.el7_9.4 will be installed

--> Running transaction check

---> Package lm_sensors-libs.x86_64.0.3.4.0-8.20160601gitf9185e5.el7_9.1 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

keepalived x86_64 1.3.5-19.el7 base 332 k

Installing for dependencies:

lm_sensors-libs x86_64 3.4.0-8.20160601gitf9185e5.el7_9.1 updates 42 k

net-snmp-agent-libs x86_64 1:5.7.2-49.el7_9.4 updates 707 k

net-snmp-libs x86_64 1:5.7.2-49.el7_9.4 updates 752 k

Transaction Summary

================================================================================

Install 1 Package (+3 Dependent packages)

Total download size: 1.8 M

Installed size: 6.0 M

Downloading packages:

--------------------------------------------------------------------------------

Total 291 kB/s | 1.8 MB 00:06

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 1/4

Installing : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 2/4

Installing : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 3/4

Installing : keepalived-1.3.5-19.el7.x86_64 4/4

Verifying : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 1/4

Verifying : keepalived-1.3.5-19.el7.x86_64 2/4

Verifying : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 3/4

Verifying : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 4/4

Installed:

keepalived.x86_64 0:1.3.5-19.el7

Dependency Installed:

lm_sensors-libs.x86_64 0:3.4.0-8.20160601gitf9185e5.el7_9.1 net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.4 net-snmp-libs.x86_64 1:5.7.2-49.el7_9.4

Complete!

7.2 Create the keepalived_script user:

[root@ansible-server ~]# ansible mycat -m shell -a 'useradd -r -s /sbin/nologin keepalived_script'

192.168.121.190 | CHANGED | rc=0 >>

192.168.121.180 | CHANGED | rc=0 >>

7.3 Configure mycat1 (192.168.121.180):

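vim /etc/keepalived/keepalived.conf

# Type dG with the cursor on the first line to delete all existing content

# Paste the following configuration

! Configuration File for keepalived

# Keepalived configuration file

# Provides high availability for MyCat, using the VRRP protocol for master/backup failover

# Global definitions

global_defs {

    router_id MYCAT1              # Unique router ID, usually the hostname or service name; must differ between nodes in the same cluster

    script_user keepalived_script # User that runs the check scripts

    enable_script_security        # Do not run scripts as root if any part of their path is writable by non-root users

}

# VRRP script (health check)

vrrp_script check_mycat {

    script "/etc/keepalived/check_mycat.sh"  # Path to the MyCat health-check script

    interval 2                               # Run the check every 2 seconds

    weight 2                                 # If the script exits 0 (healthy), add 2 to the priority; otherwise leave it unchanged

}

# VRRP instance (defines the HA cluster instance)

vrrp_instance VI_1 {

    state MASTER          # Node role: MASTER or BACKUP

    interface ens32       # Network interface to bind; change to the actual interface name, e.g. ens32 or ens33

    virtual_router_id 51  # Virtual router ID (1-255); must be the same on master and backup

    priority 100          # Node priority (1-254); MASTER must be higher than BACKUP (e.g. 100 vs 90)

    advert_int 1          # VRRP advertisement interval in seconds; must match on master and backup

    authentication {      # Authentication between master and backup; must match on both

        auth_type PASS    # Authentication type: PASS (password)

        auth_pass 1111    # Password (at most 8 characters)

    }

    virtual_ipaddress {   # Virtual IP (VIP) that floats between master and backup on failover

        192.168.121.188   # VIP used by the application

    }

    track_script {        # Reference the health check defined above

        check_mycat       # Enable the MyCat health check

    }

}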

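7.4 Create the health-check script

vim /etc/keepalived/check_mycat.sh

#!/bin/bash

# If nothing is listening on MyCat's port 8066, stop keepalived so that the VIP moves to the other node

if ! nc -z 127.0.0.1 8066; then

    systemctl stop keepalived

fi

chmod +x /etc/keepalived/check_mycat.sh

chown keepalived_script:keepalived_script /etc/keepalived/check_mycat.sh

7.5 Configure mycat2 (192.168.121.190):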

# Copy the configuration file and the health-check script to mycat2

[root@mycat1 keepalived]# scp keepalived.conf check_mycat.sh mycat2:/etc/keepalived/

keepalived.conf 100% 1766 2.0MB/s 00:00

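check_mycat.sh

# Edit the configuration file

! Configuration File for keepalived

# Keepalived configuration file

# Provides high availability for MyCat, using the VRRP protocol for master/backup failover

# Global definitions

global_defs {

    router_id MYCAT2              # Changed router ID; must differ between nodes in the same cluster

    script_user keepalived_script # User that runs the check scripts

    enable_script_security        # Do not run scripts as root if any part of their path is writable by non-root users

}

# VRRP script (health check)

vrrp_script check_mycat {

    script "/etc/keepalived/check_mycat.sh"  # Path to the MyCat health-check script

    interval 2                               # Run the check every 2 seconds

    weight 2                                 # If the script exits 0 (healthy), add 2 to the priority; otherwise leave it unchanged

}

# VRRP instance (defines the HA cluster instance)

vrrp_instance VI_1 {

    state BACKUP          # Changed to BACKUP (backup node)

    interface ens32

    virtual_router_id 51

    priority 90           # Node priority (1-254); MASTER must be higher than BACKUP (e.g. 100 vs 90)

    advert_int 1

    authentication {

        auth_type PASS

        auth_pass 1111

    }

    virtual_ipaddress {   # Virtual IP (VIP) that floats between master and backup on failover

        192.168.121.188   # VIP used by the application

    }

    track_script {        # Reference the health check defined above

        check_mycat       # Enable the MyCat health check

    }

}

7.6 Start keepalived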

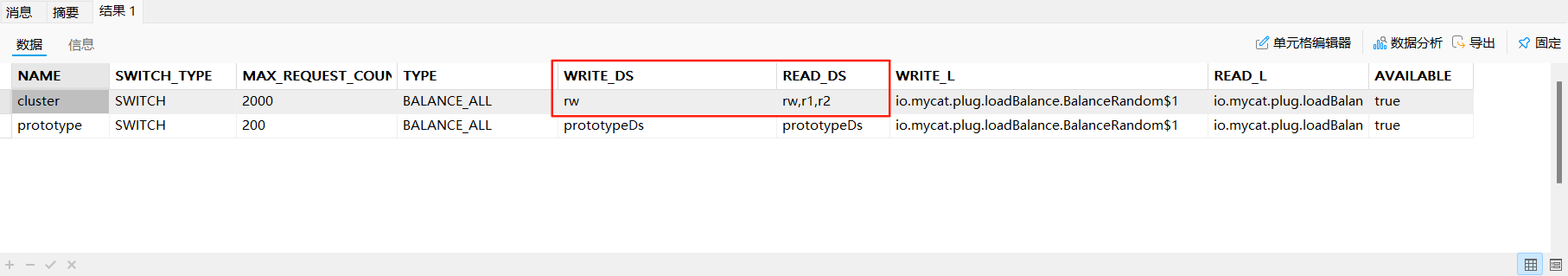

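From the Ansible server, enable keepalived at boot and start it on both nodes in one go. A status of running means it started successfully; then run ip a on mycat1 and check that the VIP 192.168.121.188 has been added.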

[root@ansible-server ~]# ansible mycat -m shell -a 'systemctl enable keepalived'

192.168.121.180 | CHANGED | rc=0 >>

192.168.121.190 | CHANGED | rc=0 >>

[root@ansible-server ~]# ansible mycat -m shell -a 'systemctl start keepalived'

192.168.121.180 | CHANGED | rc=0 >>

192.168.121.190 | CHANGED | rc=0 >>

[root@ansible-server ~]# ansible mycat -m shell -a 'systemctl status keepalived'

192.168.121.180 | CHANGED | rc=0 >>

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2025-08-25 10:57:00 CST; 58s ago

Main PID: 3110 (keepalived)

CGroup: /system.slice/keepalived.service

├─3110 /usr/sbin/keepalived -D

├─3111 /usr/sbin/keepalived -D

└─3112 /usr/sbin/keepalived -D

Aug 25 10:57:40 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:42 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:44 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:46 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:48 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:50 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:52 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:54 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:56 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:58 mycat1 Keepalived_vrrp[3112]: /etc/keepalived/check_mycat.sh exited with status 1

192.168.121.190 | CHANGED | rc=0 >>

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2025-08-25 10:57:00 CST; 58s ago

Main PID: 3150 (keepalived)

CGroup: /system.slice/keepalived.service

├─3150 /usr/sbin/keepalived -D

├─3151 /usr/sbin/keepalived -D

└─3153 /usr/sbin/keepalived -D

Aug 25 10:57:40 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:42 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:44 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:46 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:48 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:50 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:52 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:54 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:56 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1

Aug 25 10:57:58 mycat2 Keepalived_vrrp[3153]: /etc/keepalived/check_mycat.sh exited with status 1
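In the status output above, check_mycat.sh exits with status 1 on every run on both nodes. Given the script as shown in 7.4, an `if` with no true branch exits 0, so status 1 suggests that the `then` branch ran (nc did not report port 8066 as open, for example because MyCat was not listening yet or because the installed nc lacks `-z`) and that `systemctl stop keepalived` then failed, which would be expected when the unprivileged keepalived_script user is not allowed to stop system services. Also, with `weight 2` a failing check does not lower the master below the backup (100 stays above 90 + 2 = 92). A commonly used alternative is to let the script only report health through its exit code and give vrrp_script a negative weight such as `weight -20` on both nodes, so a failed check drops the master to 80, below the backup's 90. A minimal sketch of such a check, assuming bash's `/dev/tcp` redirection and coreutils `timeout` instead of nc; `check_port` is a hypothetical name:

```bash
#!/bin/bash
# Hypothetical health check for keepalived: exit status 0 if something accepts TCP
# connections on HOST:PORT (default 127.0.0.1:8066, MyCat's port), non-zero otherwise.
# It only reports health; pair it with a negative weight (e.g. "weight -20") in
# vrrp_script so that a failed check lowers this node's priority.
check_port() {
    local host=${1:-127.0.0.1} port=${2:-8066}
    # Redirecting from /dev/tcp/HOST/PORT makes bash open a TCP connection;
    # timeout stops a hung connection attempt after 2 seconds.
    timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

In a real check_mycat.sh, the function would be followed by a final `check_port 127.0.0.1 8066` line so that its status becomes the script's exit code.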

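7.7 Simulate a server failure and VIP failover

7.7.1 Simulate a power failure on the master server

# Shut down the master server (the one holding the VIP)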

[root@mycat1 keepalived]# shutdown -h now

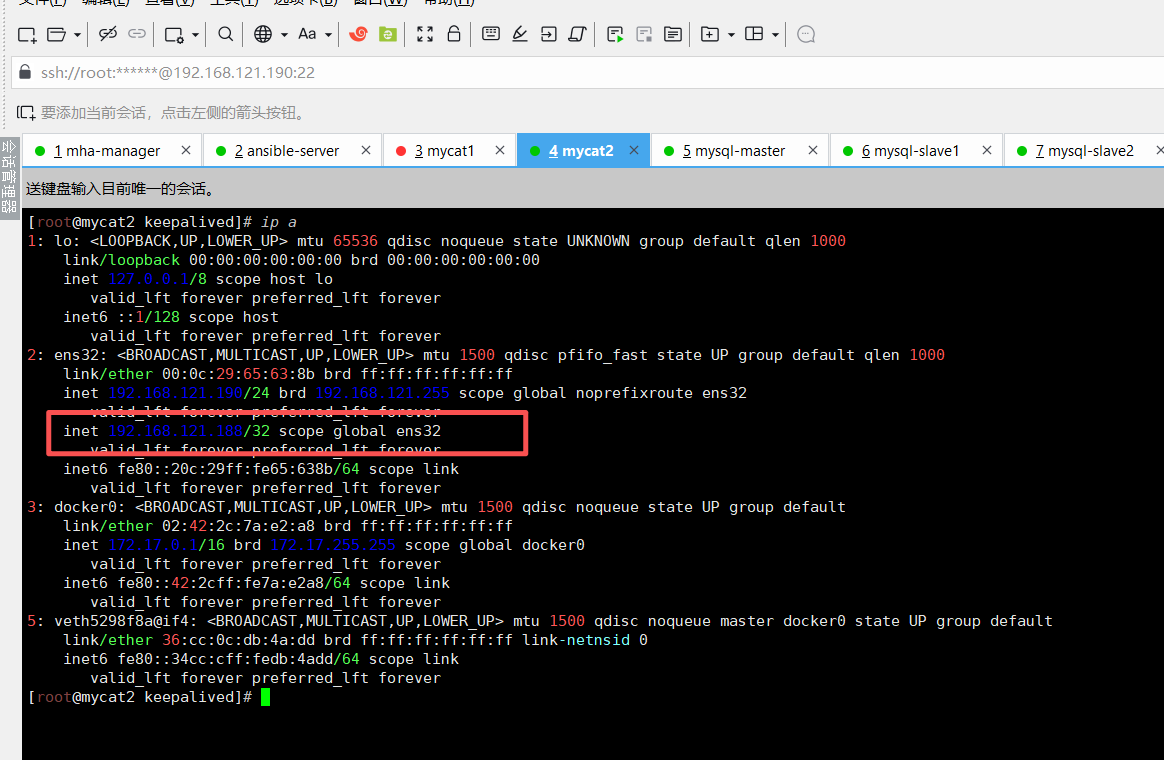

On mycat2, the VIP has floated over.

After keepalived is started again on mycat1, the VIP automatically moves back to it, the master server.

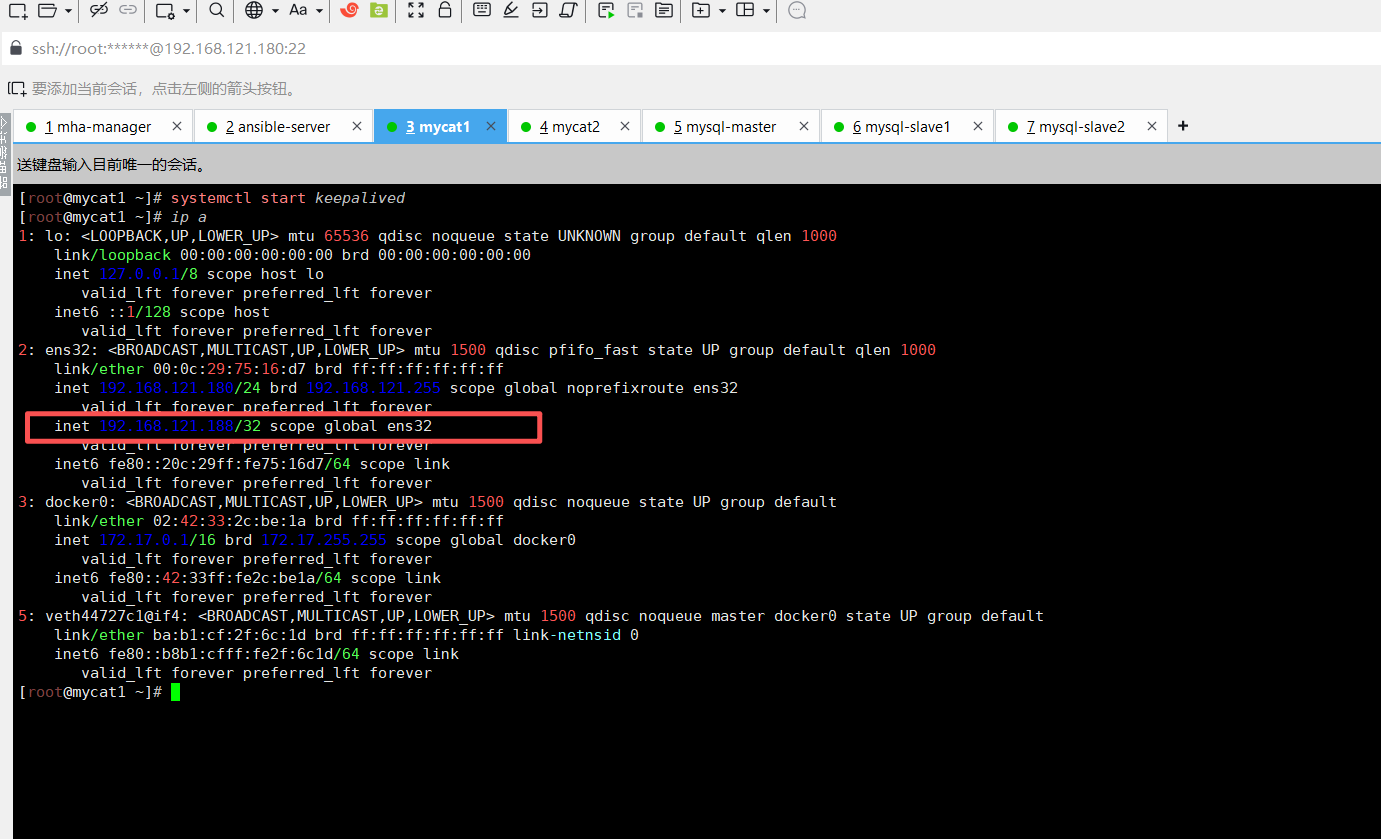

[root@mycat1 ~]# systemctl start keepalived

[root@mycat1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:75:16:d7 brd ff:ff:ff:ff:ff:ff

inet 192.168.121.180/24 brd 192.168.121.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.121.188/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe75:16d7/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:33:2c:be:1a brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:33ff:fe2c:be1a/64 scope link

valid_lft forever preferred_lft forever

5: veth44727c1@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ba:b1:cf:2f:6c:1d brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::b8b1:cfff:fe2f:6c1d/64 scope link

valid_lft forever preferred_lft forever
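The line `inet 192.168.121.188/32 scope global ens32` confirms that the VIP is back on mycat1. When you repeat this test, it is handy to check for the VIP from a script rather than by eye.

Below is a minimal sketch that assumes the `ip addr` output format shown above. `has_vip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the IPv4 address given in $1 appears on an
# "inet" line of `ip addr` output read from stdin (exact match, prefix ignored).
has_vip() {
  local vip=$1
  awk -v vip="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit found ? 0 : 1 }'
}
```

Usage on mycat1: `ip -4 addr show ens32 | has_vip 192.168.121.188 && echo "VIP is on this host"`. From another machine with SSH access, you can use the same pipeline, for example `ssh mycat2 "ip -4 addr" | has_vip 192.168.121.188`.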

8 Backup System Deployment

8.1 Configuring rsync + sersync for real-time backup

On the backup server (192.168.121.210):

# Install rsync

[root@ansible-server ]# yum install -y rsync

# Configure rsync

[root@ansible-server ]# vim /etc/rsyncd.conf

uid = root

gid = root

use chroot = no

max connections = 200

timeout = 300

pid file = /var/run/rsyncd.pid

lock file = /var/run/rsync.lock

log file = /var/log/rsyncd.log

[mysql_backup]

path = /data/backup/mysql

comment = MySQL backup

read only = no

list = yes

hosts allow = 192.168.121.0/24

auth users = backup

secrets file = /etc/rsync.passwd

# Create the backup directory

[root@ansible-server ]# mkdir -p /data/backup/mysql

# Configure the rsync password (server side: one user:password line per user)

[root@ansible-server ]# echo "backup:123456" > /etc/rsync.passwd

[root@ansible-server ]# chmod 600 /etc/rsync.passwd

# Start the rsync service

[root@ansible-server ]# systemctl start rsyncd

[root@ansible-server ]# systemctl enable rsyncd

On the MySQL master (192.168.121.221), install sersync:

File shared via Baidu Netdisk: sersync2.5.4_64bit_binary_stable_final.tar.gz

Link: https://pan.baidu.com/s/1uWRYq1IMAEQ8g4o9J_34bw?pwd=ea9p Extraction code: ea9p

[root@mysql-master]# yum install -y inotify-tools rsync

# Upload the package to the server and extract it

[root@mysql-master]# tar xzf sersync2.5.4_64bit_binary_stable_final.tar.gz

[root@mysql-master]# rm -rf sersync2.5.4_64bit_binary_stable_final.tar.gz

[root@mysql-master]# mv GNU-Linux-x86/ /usr/local/sersync

[root@mysql-master]# cd /usr/local/sersync

[root@mysql-master]# vim confxml.xml

<?xml version="1.0" encoding="ISO-8859-1"?>
<head version="2.5">
  <host hostip="localhost" port="8008"></host>
  <debug start="false"/>
  <fileSystem xfs="false"/>
  <filter start="false">
    <exclude expression="(.*)\.svn"></exclude>
    <exclude expression="(.*)\.gz"></exclude>
    <exclude expression="^info/*"></exclude>
    <exclude expression="^static/*"></exclude>
  </filter>
  <inotify>
    <delete start="true"/>
    <createFolder start="true"/>
    <createFile start="true"/>
    <closeWrite start="true"/>
    <moveFrom start="true"/>
    <moveTo start="true"/>
    <attrib start="true"/>
    <modify start="true"/>
  </inotify>
  <sersync>
    <!-- local directory to watch -->
    <localpath watch="/data/mysql/data">
      <!-- IP of the target server and the rsync [module] name on that server -->
      <remote ip="192.168.121.210" name="mysql_backup"/>
    </localpath>
    <rsync>
      <commonParams params="-artuz"/>
      <!-- rsync authentication: requires users and passwordfile; enable as needed (if enabled, the password file must be mode 600) -->
      <auth start="true" users="backup" passwordfile="/etc/rsync.passwd"/>
      <userDefinedPort start="false" port="873"/>
      <timeout start="true" time="100"/>
      <ssh start="false"/>
    </rsync>
    <failLog path="/tmp/rsync_fail_log.sh" timeToExecute="60"/>
    <crontab start="true" schedule="600">
      <crontabfilter start="false">
        <exclude expression="*.php"></exclude>
        <exclude expression="info/*"></exclude>
      </crontabfilter>
    </crontab>
    <plugin start="false" name="command"/>
  </sersync>
  <plugin name="command">
    <param prefix="/bin/sh" suffix="" ignoreError="true"/>
    <filter start="false">
      <include expression="(.*)\.php"/>
      <include expression="(.*)\.sh"/>
    </filter>
  </plugin>
  <plugin name="socket">
    <localpath watch="/opt/tongbu">
      <deshost ip="192.168.138.20" port="8009"/>
    </localpath>
  </plugin>
  <plugin name="refreshCDN">
    <localpath watch="/data0/htdocs/cms.xoyo.com/site/">
      <cdninfo domainname="ccms.chinacache.com" port="80" username="xxxx" passwd="xxxx"/>
      <sendurl base="http://pic.xoyo.com/cms"/>
      <regexurl regex="false" match="cms.xoyo.com/site([/a-zA-Z0-9]*).xoyo.com/images"/>
    </localpath>
  </plugin>
</head>
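sersync, as configured in confxml.xml, is essentially an inotify watcher that calls rsync when files under the watched path change. Roughly the same behaviour can be sketched with `inotifywait` from the inotify-tools package installed above.

This is an alternative technique, not what this project deploys. The variable names, the `RSYNC` override and the 1-second batching are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Alternative sketch (not this project's setup): an inotifywait + rsync loop that
# approximates what sersync does with confxml.xml. Defaults mirror that file;
# override them through the environment if needed.
WATCH_DIR=${WATCH_DIR:-/data/mysql/data}
DEST=${DEST:-backup@192.168.121.210::mysql_backup}
PASS_FILE=${PASS_FILE:-/etc/rsync.passwd}
RSYNC=${RSYNC:-rsync}   # e.g. RSYNC=echo prints the command instead of running it

# Push the whole watched tree once (-artuz as in commonParams; --delete mirrors
# <delete start="true"/>).
sync_once() {
  "$RSYNC" -artuz --delete "$WATCH_DIR"/ "$DEST" --password-file="$PASS_FILE"
}

# Initial full sync (like sersync2 -r), then re-sync after each burst of events.
watch_and_sync() {
  sync_once
  inotifywait -m -r -q \
    -e close_write,create,delete,moved_to,moved_from,attrib,modify \
    --format '%w%f' "$WATCH_DIR" |
    while read -r _changed; do
      # Swallow further events until 1 s passes with none, so a burst of
      # writes triggers a single rsync run.
      while read -r -t 1 _more; do :; done
      sync_once
    done
}
```

Call `watch_and_sync` to start the loop. On a busy data directory, a continuous stream of events would postpone the sync indefinitely, so treat this as a starting point rather than a replacement for a tested tool.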

# Configure the rsync password (client side: the file holds only the password)

[root@mysql-master]# echo "123456" > /etc/rsync.passwd

[root@mysql-master]# chmod 600 /etc/rsync.passwd

# Create a systemd unit for sersync

[root@mysql-master]# vim /etc/systemd/system/sersync.service

[Unit]

Description=Sersync for real-time file synchronization

After=network.target

[Service]

Type=forking

ExecStart=/usr/local/sersync/sersync2 -d -r -o /usr/local/sersync/confxml.xml

Restart=on-failure

RestartSec=5

ExecStop=/bin/pkill -f 'sersync2 -d -r -o /usr/local/sersync/confxml.xml'

[Install]

WantedBy=multi-user.target

# Start sersync

[root@mysql-master]# systemctl daemon-reload

[root@mysql-master]# systemctl enable sersync

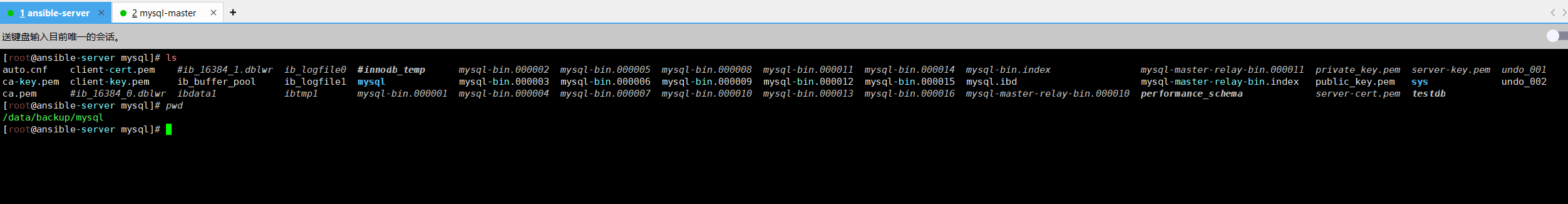

[root@mysql-master]# systemctl start sersync

# Run one full sync manually to check that synchronization works

[root@mysql-master data]# cd /data/mysql/data && rsync -artuz -R --delete ./ backup@192.168.121.210::mysql_backup --password-file=/etc/rsync.passwd

If the files appear on the backup server, synchronization is working.

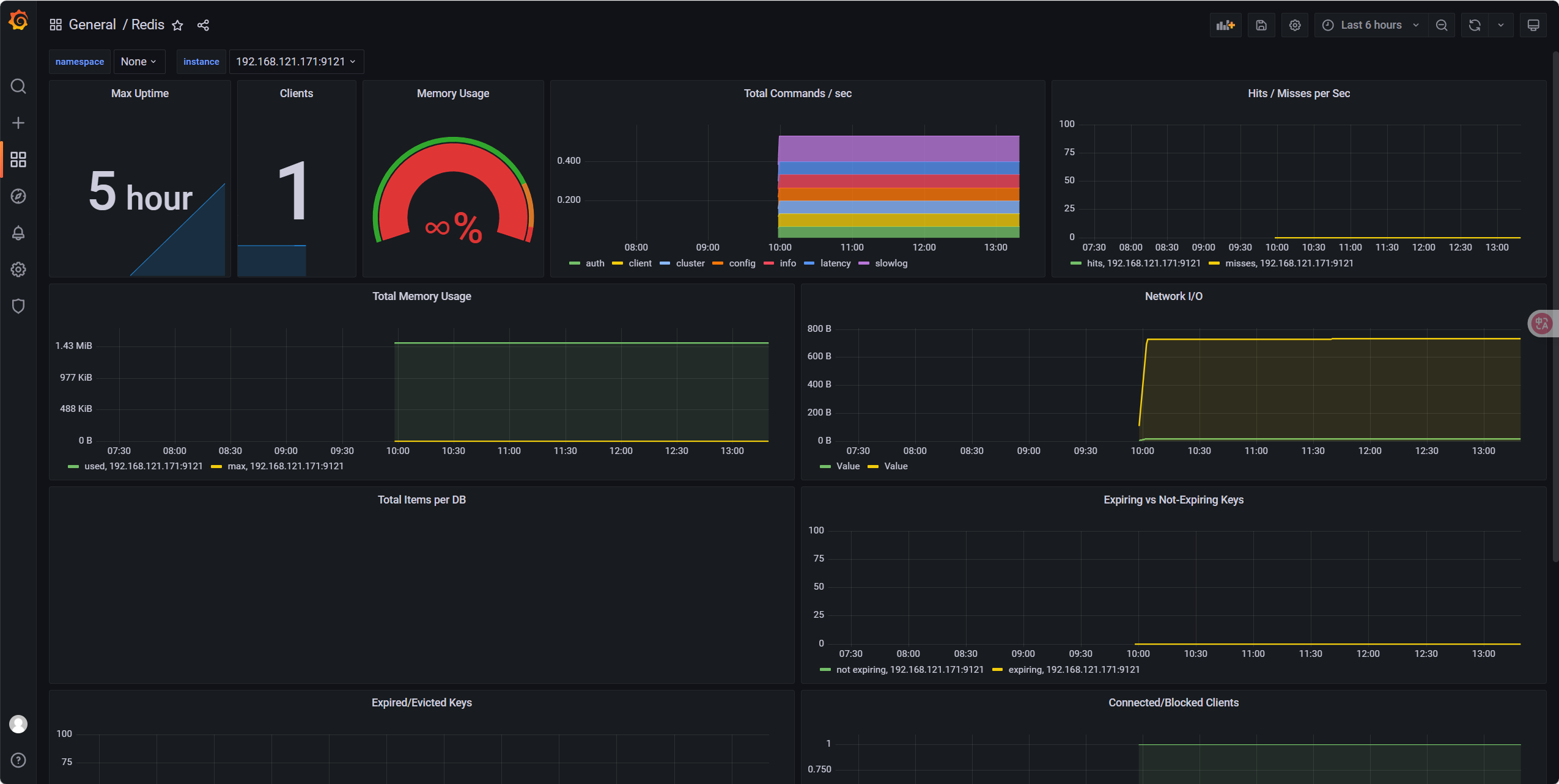

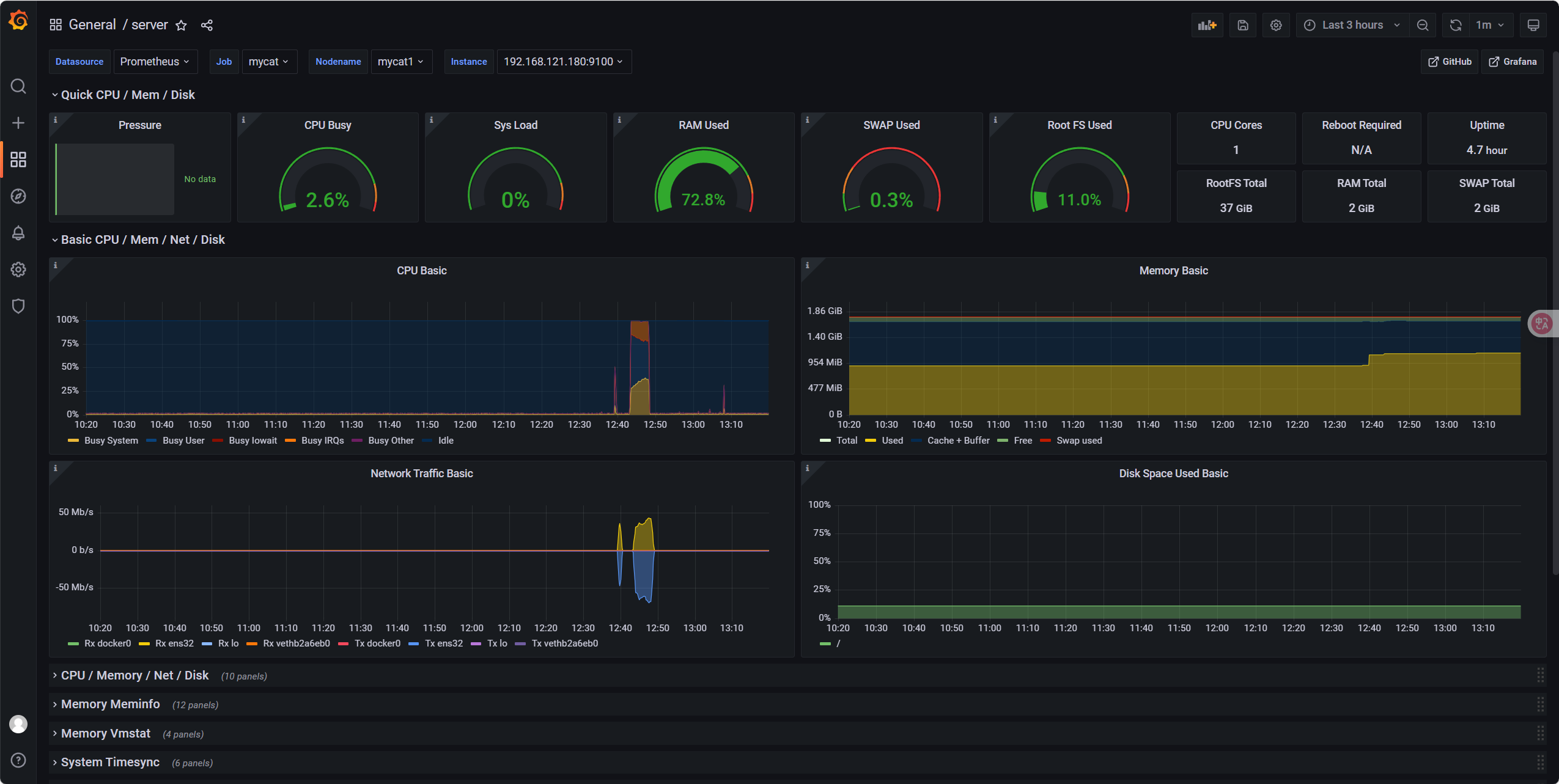

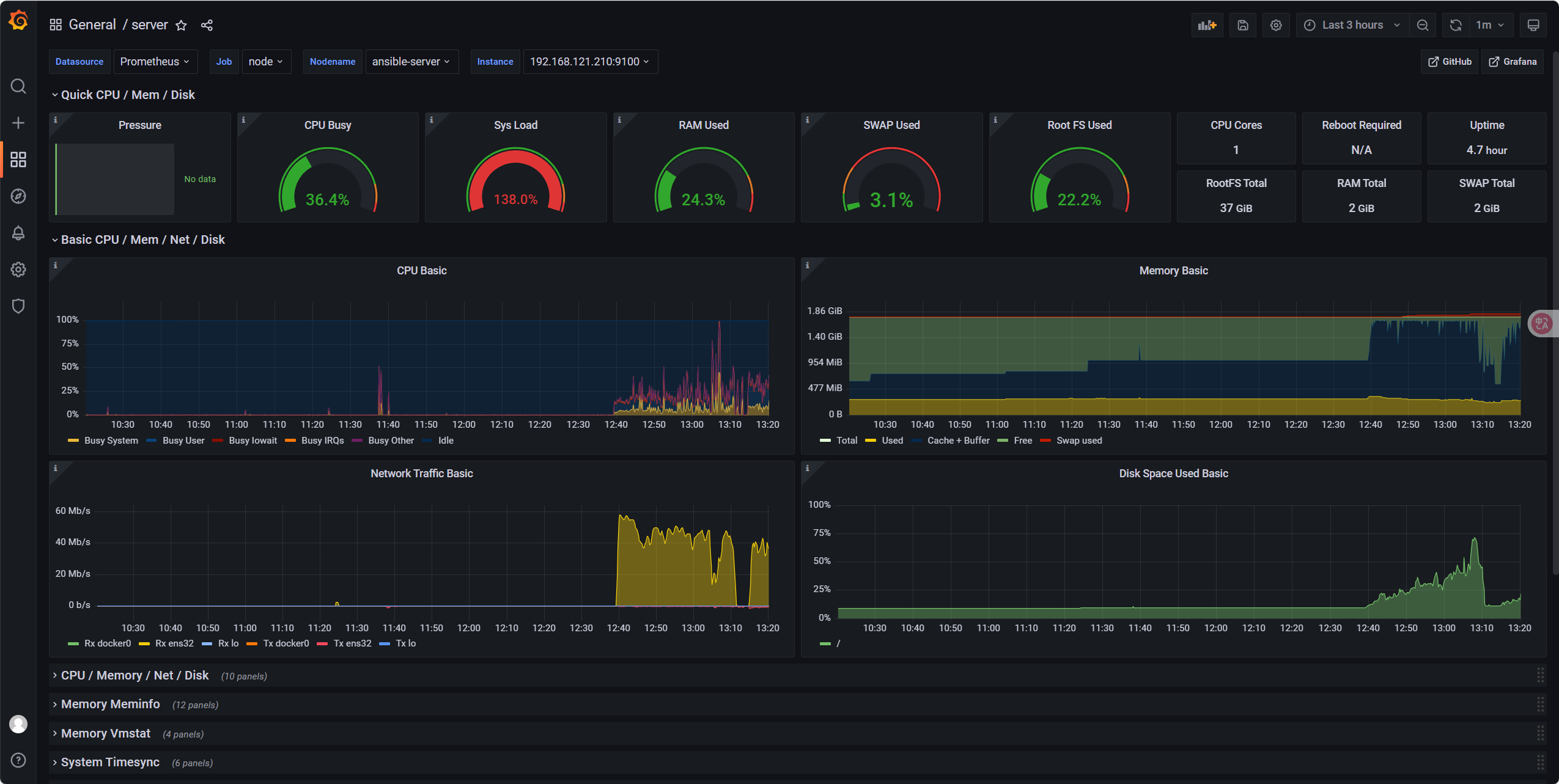

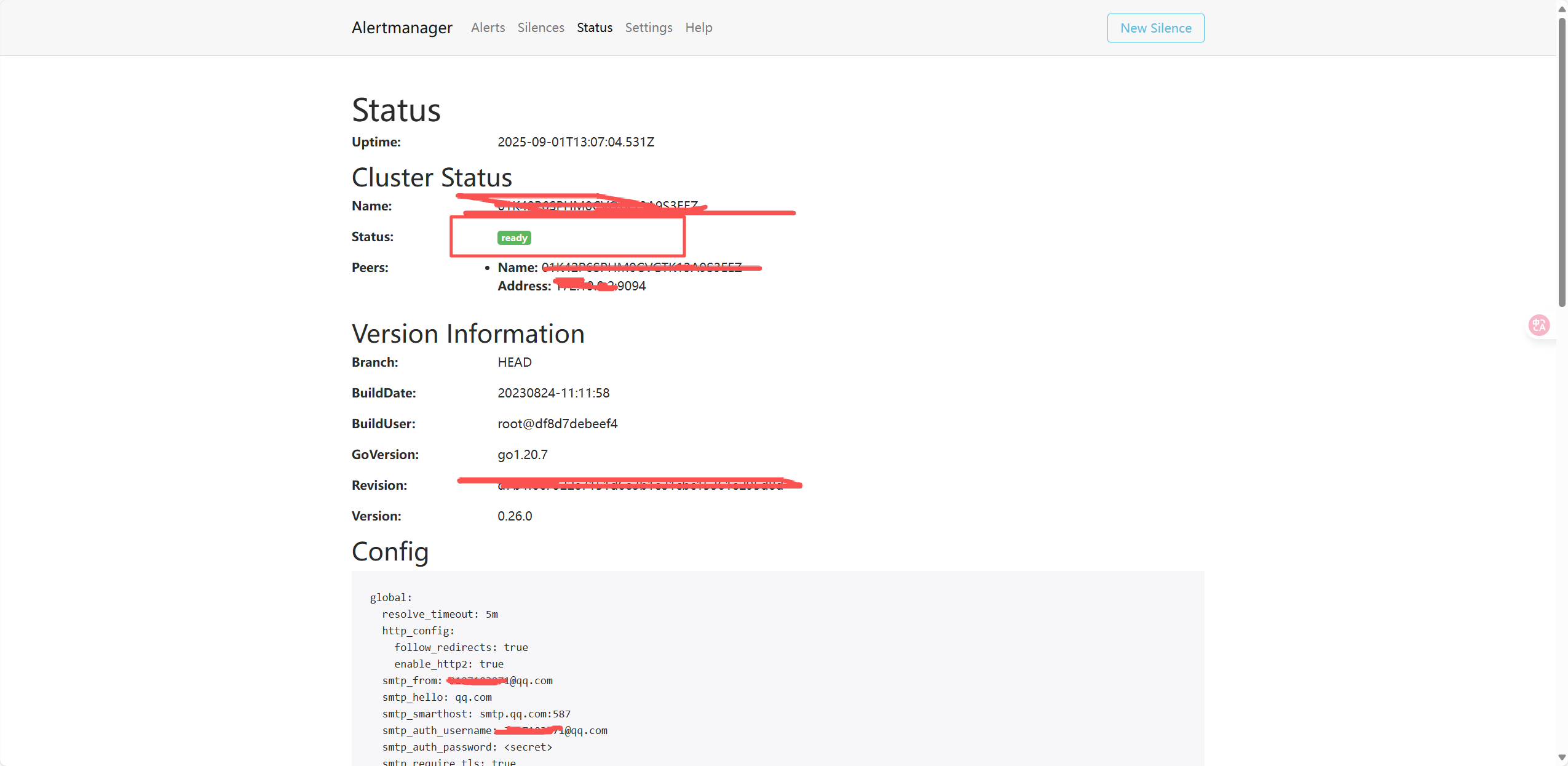
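Section 8.1 creates two files named `/etc/rsync.passwd` with different formats:

- On the backup server it is the daemon's `secrets file`, which holds `backup:123456`.
- On the MySQL master it is the client's `--password-file`, which holds only the password.

Mixing the two up, or leaving either file readable by other users, typically makes authentication fail.

Here is a small sketch to sanity-check both files. The function names are hypothetical. The colon test on the client file is a heuristic and would misfire for a password that itself contains a colon.

```shell
#!/usr/bin/env bash
# Hypothetical sanity checks for the two /etc/rsync.passwd files in 8.1:
#   server (rsyncd "secrets file"): user:password lines, e.g. backup:123456
#   client (rsync --password-file): the bare password on the first line
# Both files are mode 600 in this guide.

file_mode() { stat -c '%a' "$1"; }   # octal permission bits (GNU stat)

check_server_secrets() {   # usage: check_server_secrets FILE USER
  local f=$1 user=$2
  [[ $(file_mode "$f") == 600 ]] || { echo "$f: expected mode 600" >&2; return 1; }
  grep -q "^${user}:." "$f" || { echo "$f: no '${user}:<password>' line" >&2; return 1; }
}

check_client_password() {  # usage: check_client_password FILE
  local f=$1 first
  [[ $(file_mode "$f") == 600 ]] || { echo "$f: expected mode 600" >&2; return 1; }
  first=$(head -n 1 "$f")
  # Heuristic: a colon usually means the server-side user:password format
  # was copied to the client by mistake.
  [[ -n $first && $first != *:* ]] || { echo "$f: expected the bare password" >&2; return 1; }
}
```

Run `check_server_secrets /etc/rsync.passwd backup` on 192.168.121.210 and `check_client_password /etc/rsync.passwd` on the MySQL master.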

9 Redis Cache Cluster Deployment

9.1 Preparing the Redis Docker image

On ansible-server:

# Create the working directory

mkdir -p /data/docker/redis

cd /data/docker/redis

[root@ansible-server redis]# docker pull docker.1ms.run/redis:6.2.6 # pull the image

6.2.6: Pulling from redis

1fe172e4850f: Pull complete

6fbcd347bf99: Pull complete

993114c67627: Pull complete

2a560260ca39: Pull complete

b7179886a292: Pull complete

8901ffe2be84: Pull complete

Digest: sha256:b7fd1a2c89d09a836f659d72c52d27b9f71202c97014a47639f87c992e8c0f1b

Status: Downloaded newer image for docker.1ms.run/redis:6.2.6

docker.1ms.run/redis:6.2.6

[root@ansible-server redis]# docker save -o redis6.2.6.tar docker.1ms.run/redis:6.2.6 # save the image to a local tar archive

[root@ansible-server redis]# ls

Dockerfile redis6.2.6.tar

# Copy the archive to each Redis node to save download time

[root@ansible-server redis]# scp redis6.2.6.tar redis1:~/ # copy the image archive to redis1

redis6.2.6.tar 100% 111MB 144.7MB/s 00:00

[root@ansible-server redis]# scp redis6.2.6.tar redis2:~/ # copy the image archive to redis2

redis6.2.6.tar 100% 111MB 89.5MB/s 00:01

[root@ansible-server redis]# scp redis6.2.6.tar redis3:~/ # copy the image archive to redis3

redis6.2.6.tar 100% 111MB 94.5MB/s 00:01

# Load the image archive on all Redis nodes at once

[root@ansible-server redis]# ansible redis -m shell -a 'docker load -i redis6.2.6.tar'

192.168.121.171 | CHANGED | rc=0 >>

Loaded image: docker.1ms.run/redis:6.2.6

192.168.121.173 | CHANGED | rc=0 >>

Loaded image: docker.1ms.run/redis:6.2.6

192.168.121.172 | CHANGED | rc=0 >>

Loaded image: docker.1ms.run/redis:6.2.6

[root@ansible-server redis]# ansible redis -m shell -a 'docker images'

192.168.121.171 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.1ms.run/redis 6.2.6 3c3da61c4be0 3 years ago 113MB

192.168.121.172 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.1ms.run/redis 6.2.6 3c3da61c4be0 3 years ago 113MB

192.168.121.173 | CHANGED | rc=0 >>

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.1ms.run/redis 6.2.6 3c3da61c4be0 3 years ago 113MB

On redis1, write the Redis configuration file:

[root@redis1 conf]# vim /data/redis/conf/redis.conf

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile /data/redis-server.log

databases 16

always-show-logo yes

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /data

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync no

repl-disable-tcp-nodelay no

replica-priority 100

appendonly yes

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 15000

cluster-replica-validity-factor 10

cluster-migration-barrier 1

cluster-require-full-coverage yes

cluster-replica-no-failover no

requirepass 123456

scp /data/redis/conf/redis.conf redis2:/data/redis/conf/redis.conf

scp /data/redis/conf/redis.conf redis3:/data/redis/conf/redis.conf

9.1.2 Deploying the Redis cluster
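Because redis.conf sets `cluster-enabled yes`, the three nodes form a Redis Cluster. A Redis Cluster maps every key to one of 16384 hash slots using CRC16(key) mod 16384, with the XMODEM CRC16 variant. If a key contains a non-empty hash tag `{...}`, only the text inside the braces is hashed.

The sketch below reproduces that mapping in Bash for ASCII keys so you can predict which slot, and therefore which node, a key lands on. Compare its output with `redis-cli -a 123456 cluster keyslot <key>` on any node. It is illustrative only; `crc16` and `key_slot` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch of the Redis Cluster key -> hash slot mapping (ASCII keys only).

# CRC16-XMODEM: polynomial 0x1021, initial value 0, no reflection, no final XOR.
crc16() {
  local s=$1 crc=0 i j c
  for ((i = 0; i < ${#s}; i++)); do
    printf -v c '%d' "'${s:i:1}"   # character -> numeric code (ASCII assumed)
    crc=$(( crc ^ (c << 8) ))
    for ((j = 0; j < 8; j++)); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Slot = CRC16(key) mod 16384; if the key has a non-empty hash tag (text
# between the first '{' and the first '}' after it), hash only that tag.
key_slot() {
  local key=$1 rest tag
  if [[ $key == *"{"* ]]; then
    rest=${key#*\{}              # text after the first '{'
    if [[ $rest == *"}"* ]]; then
      tag=${rest%%\}*}           # text up to the first '}'
      [[ -n $tag ]] && key=$tag
    fi
  fi
  echo $(( $(crc16 "$key") % 16384 ))
}
```

Keys that share a hash tag, such as `{user1000}.following` and `{user1000}.followers`, always map to the same slot. That is what makes multi-key operations on them possible in cluster mode.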

Create the Ansible playbook:

[root@ansible-server redis]# mkdir -p /data/ansible/roles/redis/tasks

[root@ansible-server redis]# cd /data/ansible/roles/redis/tasks/

[root@ansible-server tasks]# vim main.yml


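- name: 创建redis数据目录  # create the Redis data directory
  file:
    path: /data/redis/data
    state: directory
    mode: '0755'

- name: 创建redis配置目录  # copy redis.conf to the node (/data/redis/conf must already exist)
  template:
    src: /data/docker/redis/redis.conf
    dest: /data/redis/conf/redis.conf
    mode: '0644'

- name: 启动Redis容器  # start the Redis container
  docker_container:
    name: redis
    image: docker.1ms.run/redis:6.2.6
    state: started
    restart_policy: always
    # With host networking the container listens on the host's 6379 (clients)
    # and 16379 (cluster bus) directly; Docker discards published ports in this
    # mode, so no "ports" mapping is needed.
    network_mode: host
    volumes:
      - /data/redis/data:/data
      - /data/redis/conf/redis.conf:/usr/local/etc/redis/redis.conf
    # Start redis-server with the mounted redis.conf (which already sets
    # requirepass and cluster-enabled); without the file argument the mounted
    # config would be ignored. masterauth lets replicas authenticate to a master.
    command: redis-server /usr/local/etc/redis/redis.conf --masterauth 123456

[root@ansible-server tasks]# cd /data/ansible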

[root@ansible-server ansible]# vim deploy_redis.yml

- hosts: redis
  vars:
    ansible_python_interpreter: /usr/bin/python3.6
  tasks:
    - include_role:
        name: redis

[root@ansible-server ansible]# ansible-playbook /data/ansible/deploy_redis.yml

PLAY [redis] ****************************************************************************************************************************

TASK [Gathering Facts] ******************************************************************************************************************

ok: [192.168.121.172]

ok: [192.168.121.173]

ok: [192.168.121.171]

TASK [include_role : redis] *************************************************************************************************************

TASK [创建redis数据目录] **********************************************************************************************************************

ok: [192.168.121.173]

ok: [192.168.121.172]

ok: [192.168.121.171]

TASK [创建redis配置目录] **********************************************************************************************************************

ok: [192.168.121.172]

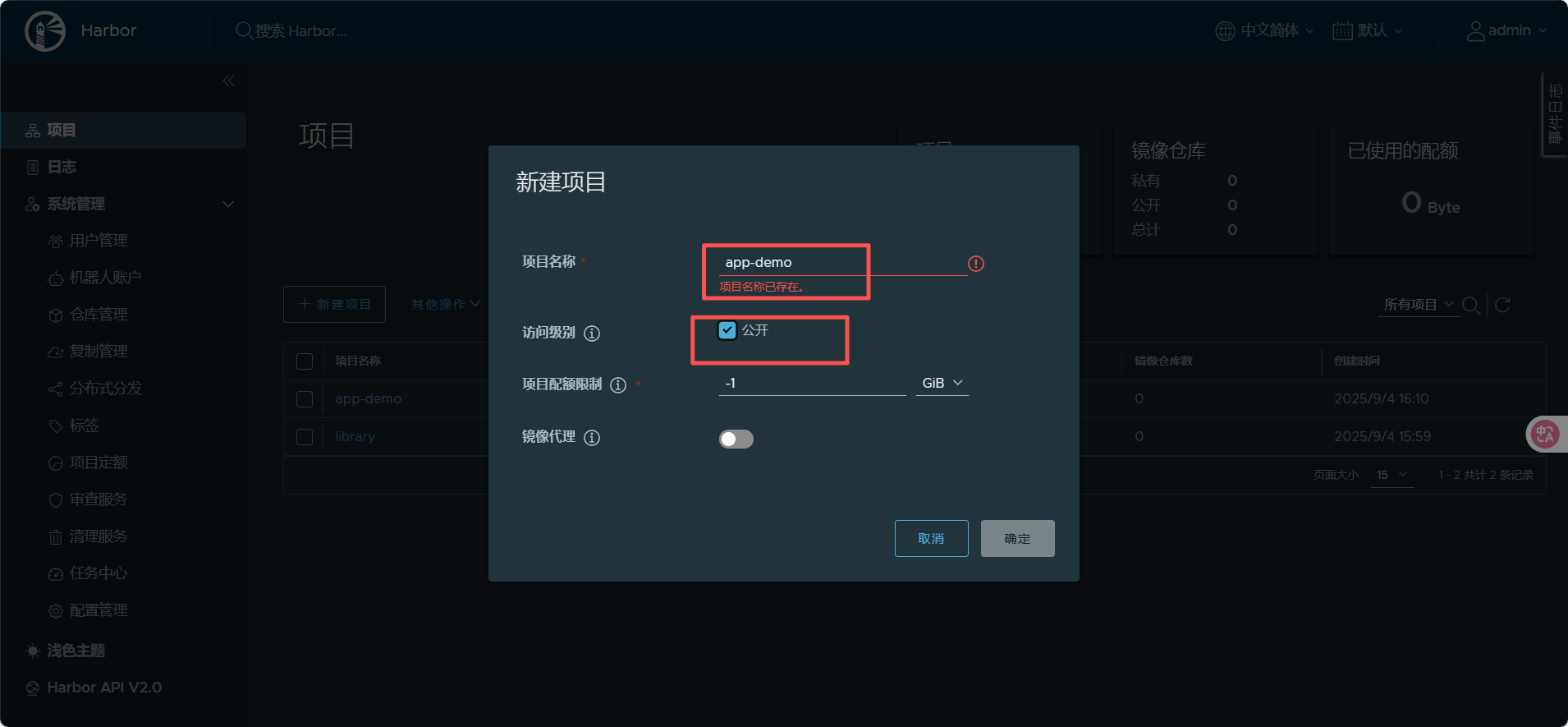

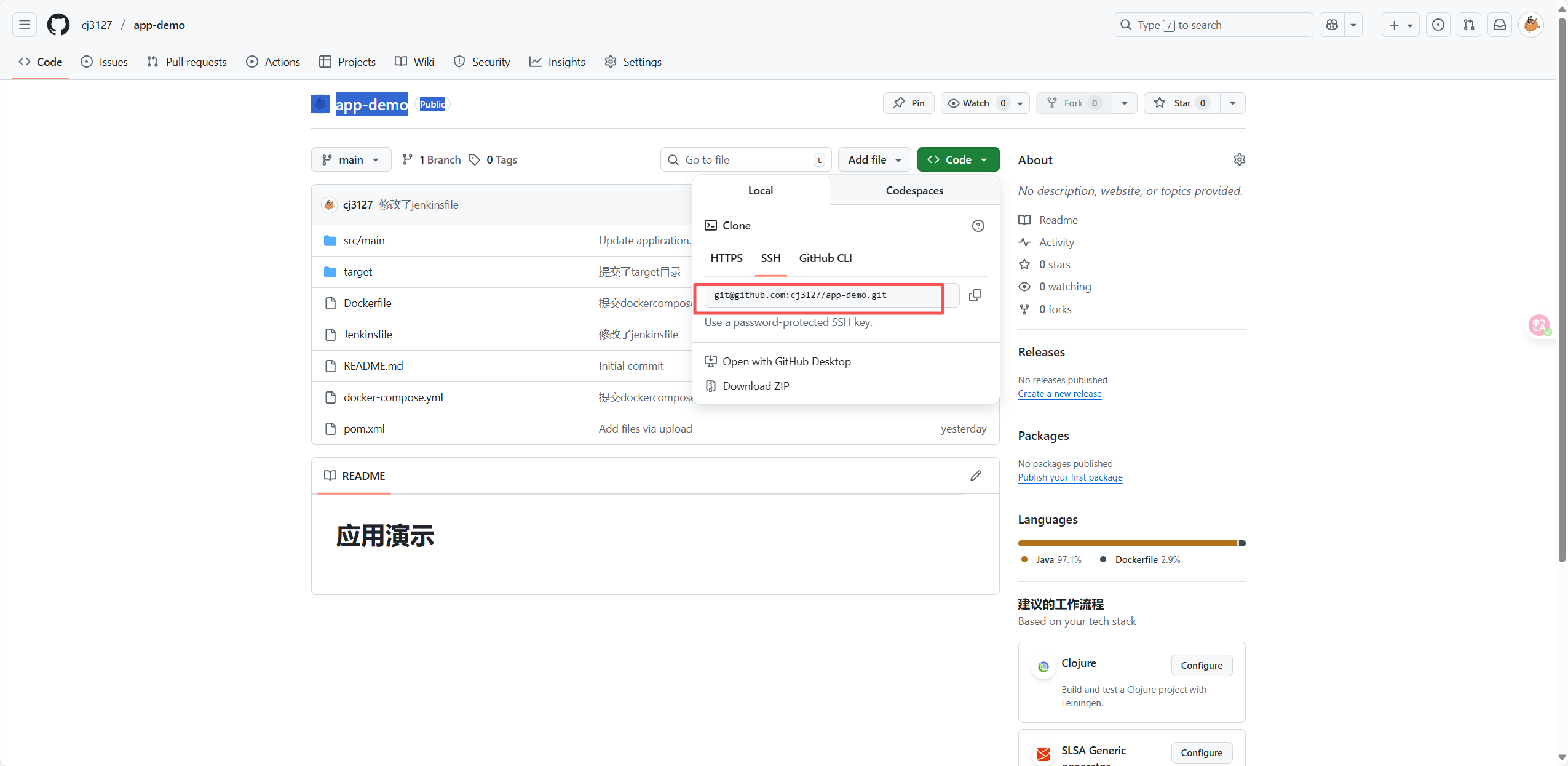

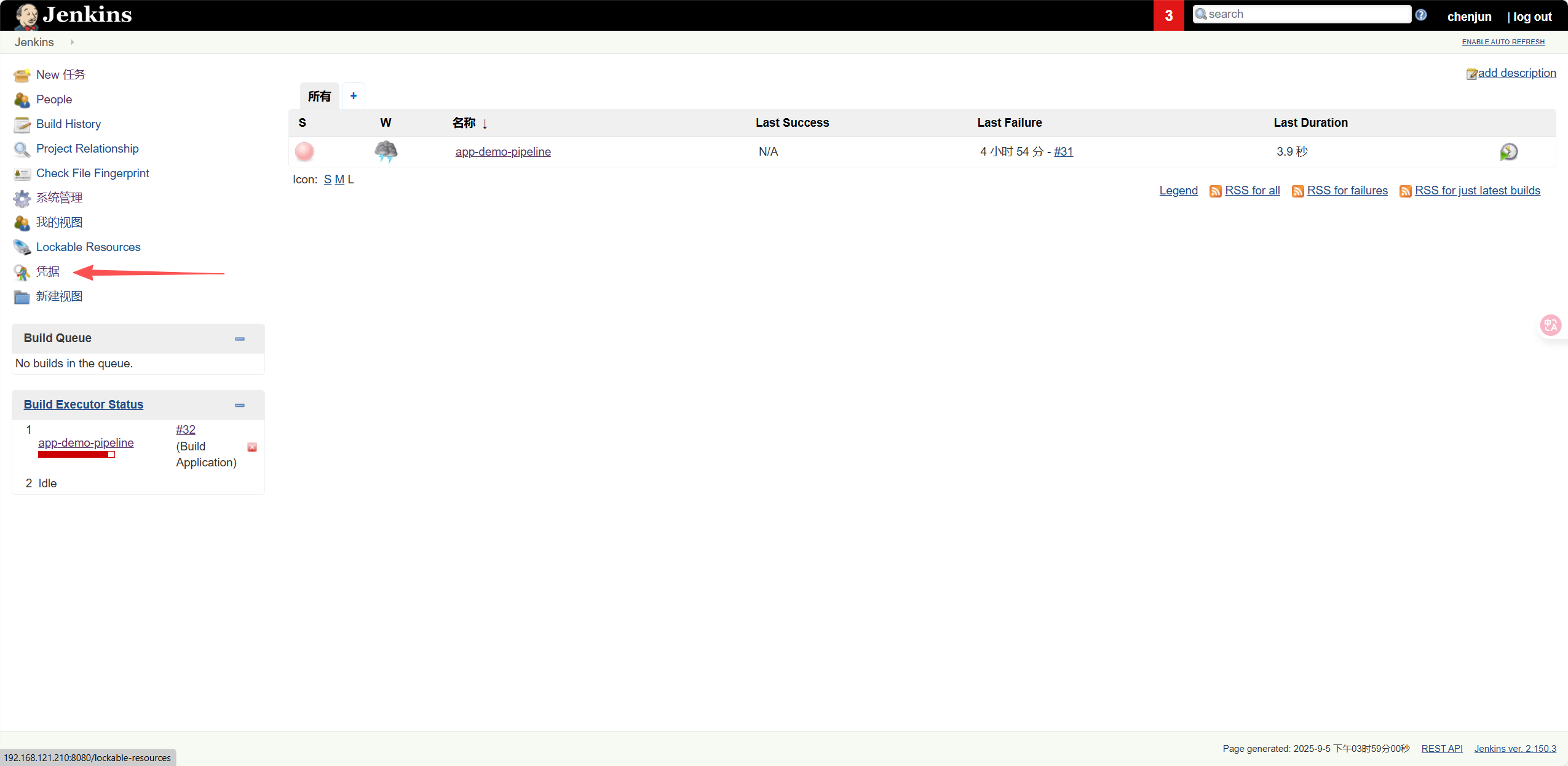

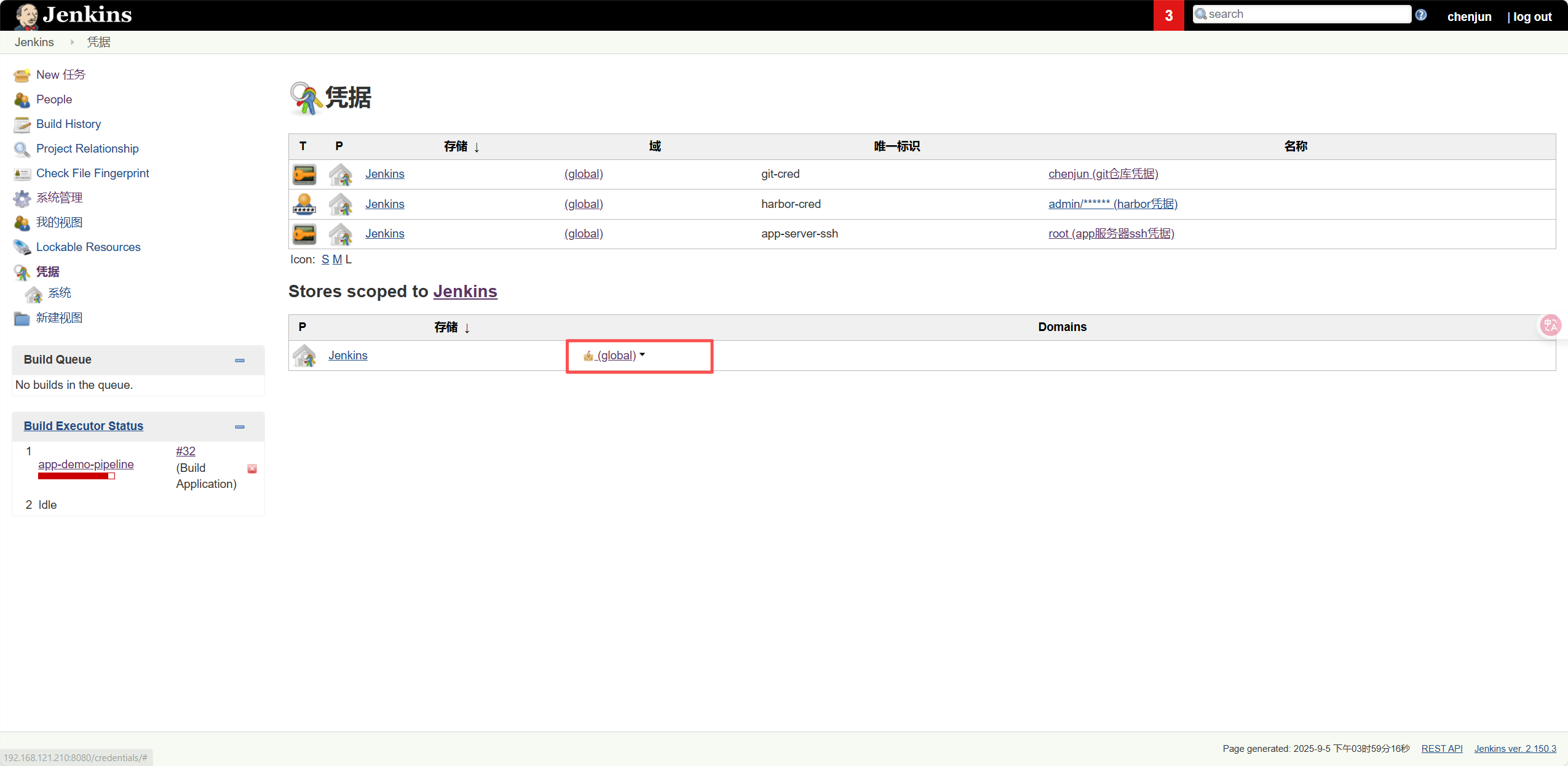

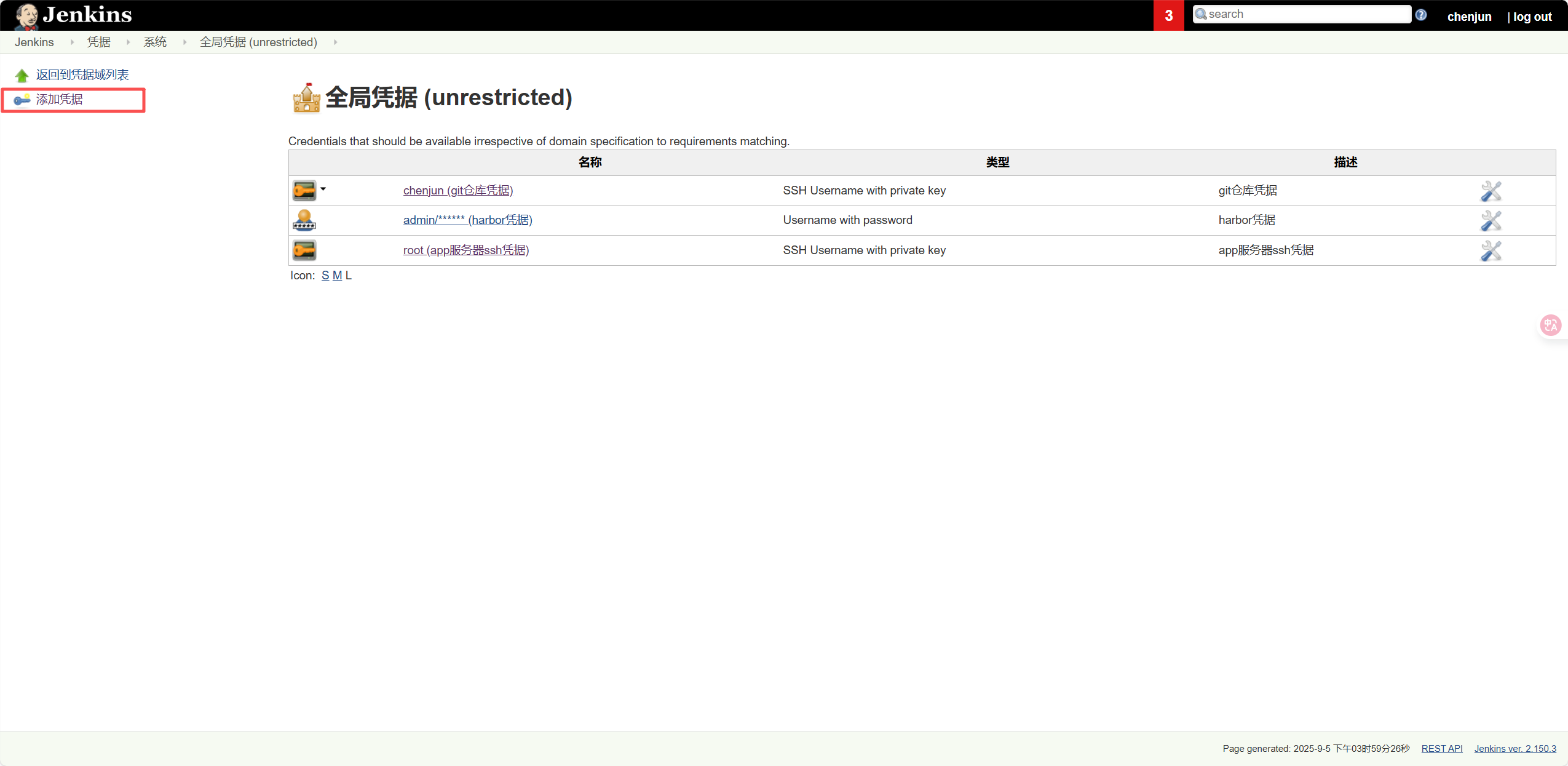

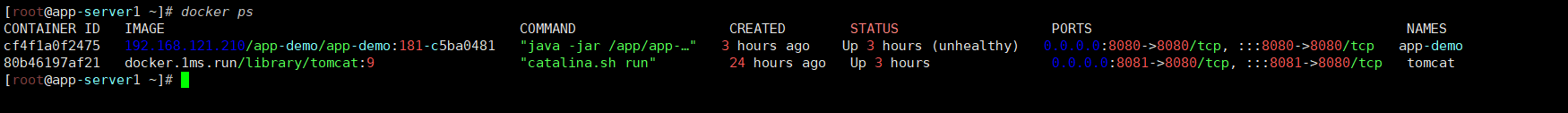

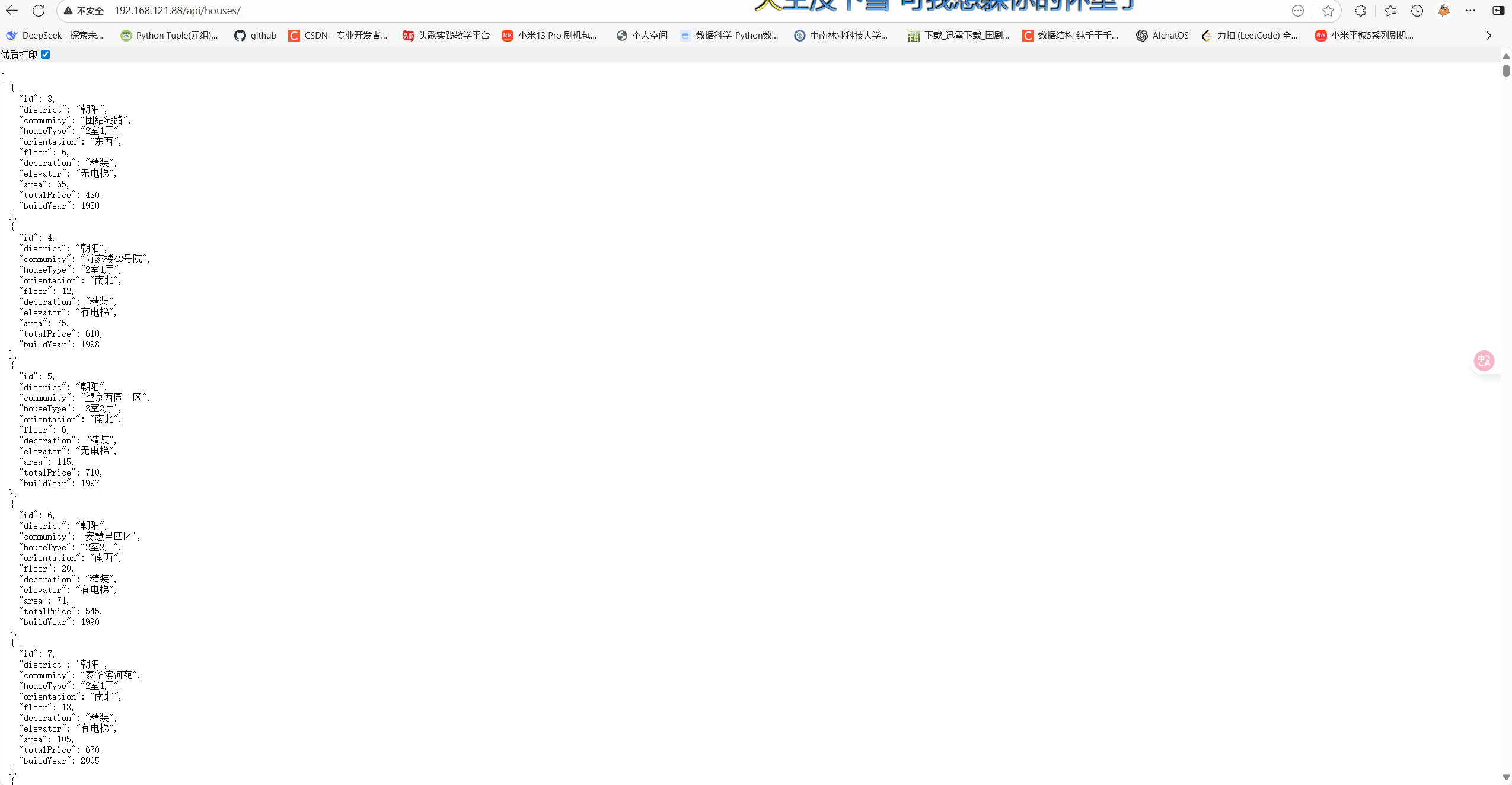

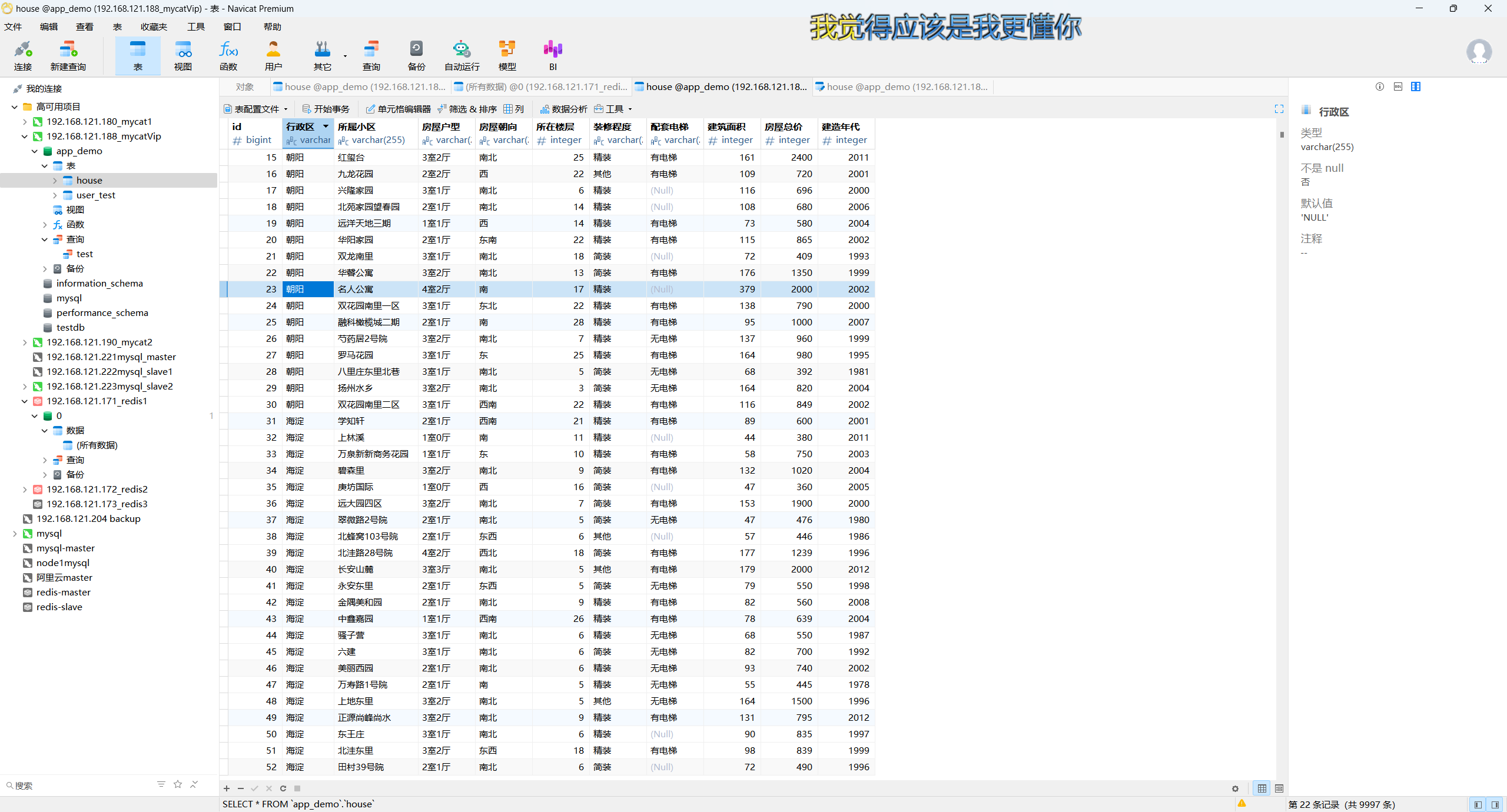

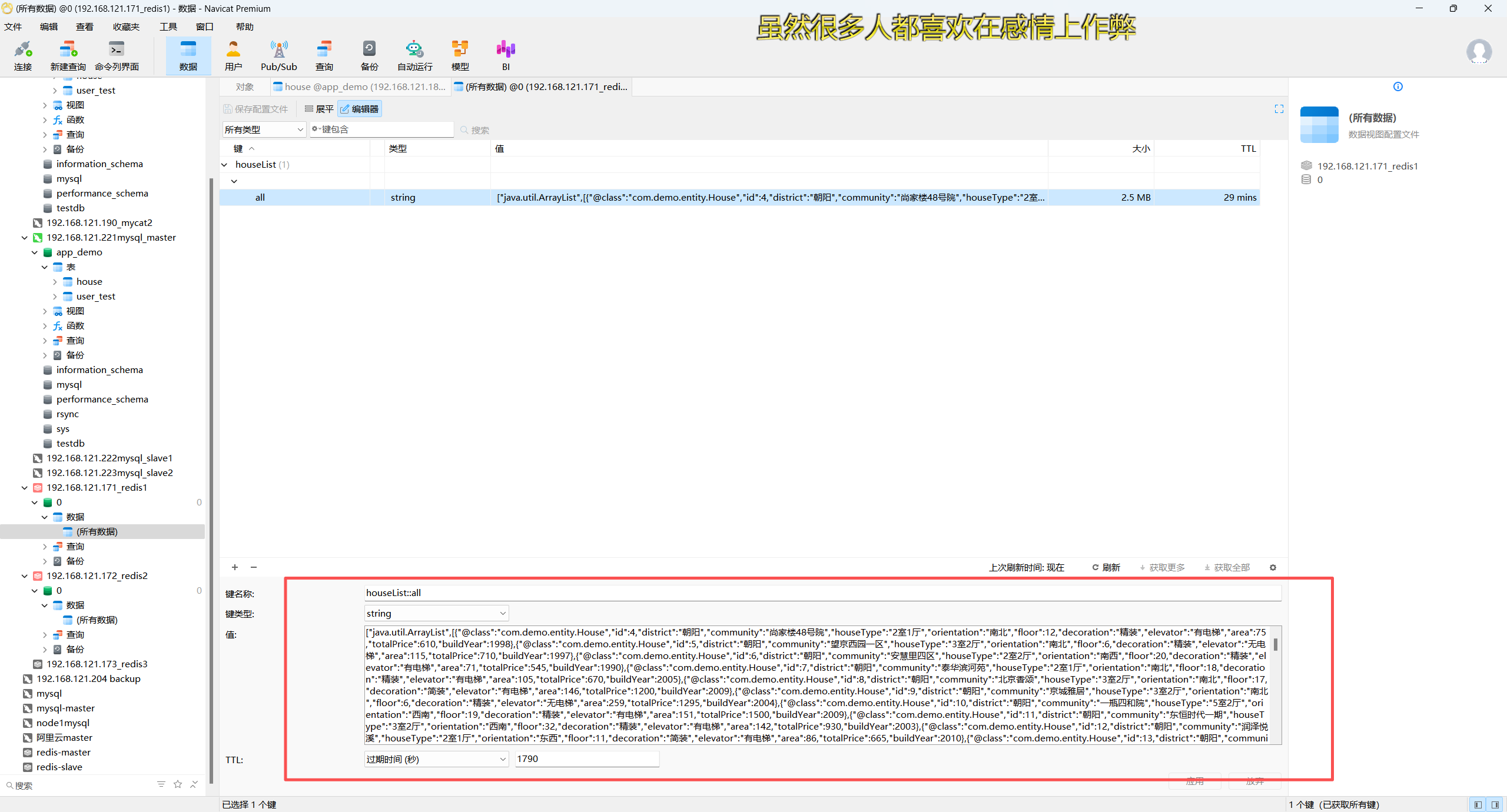

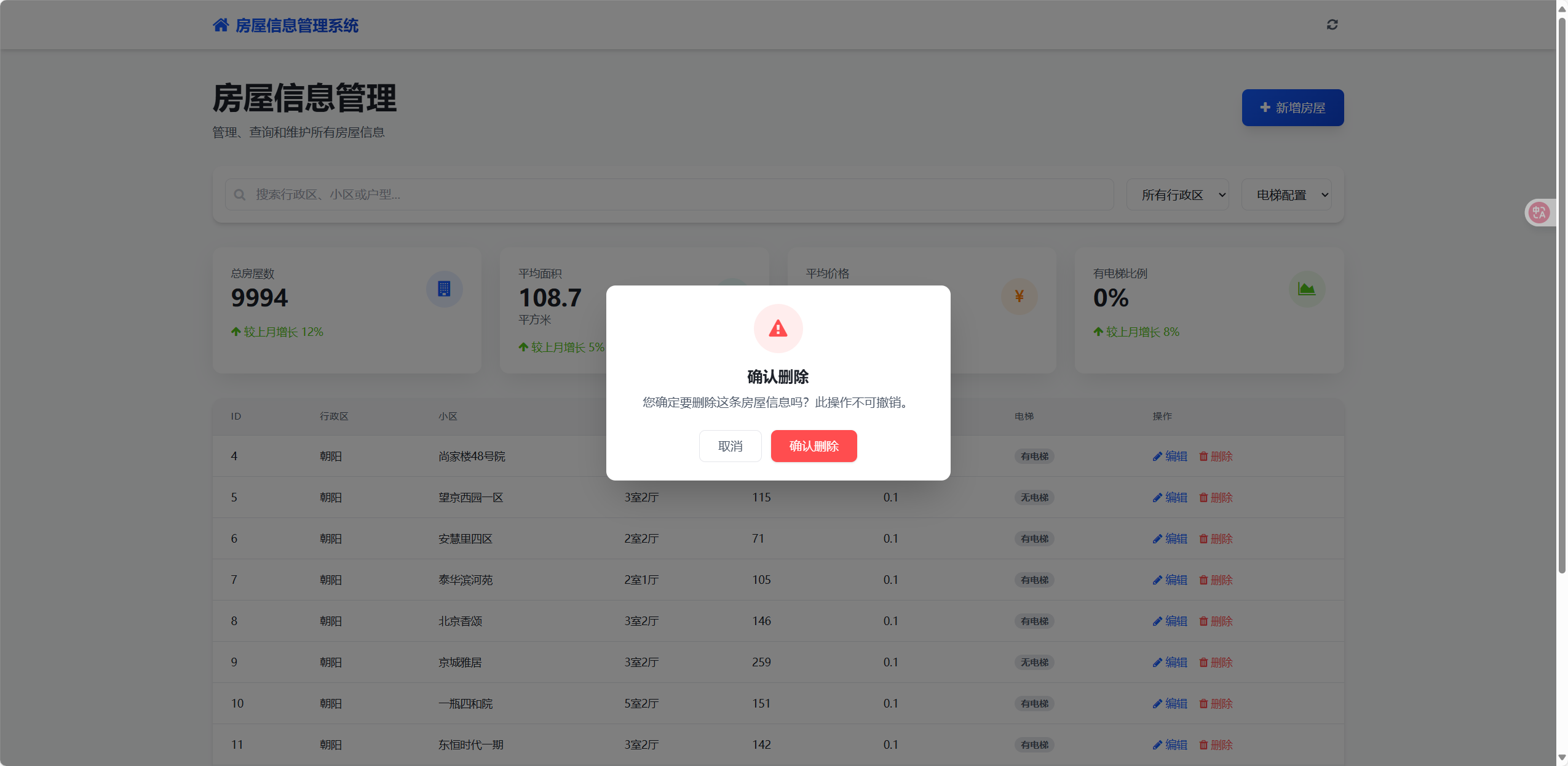

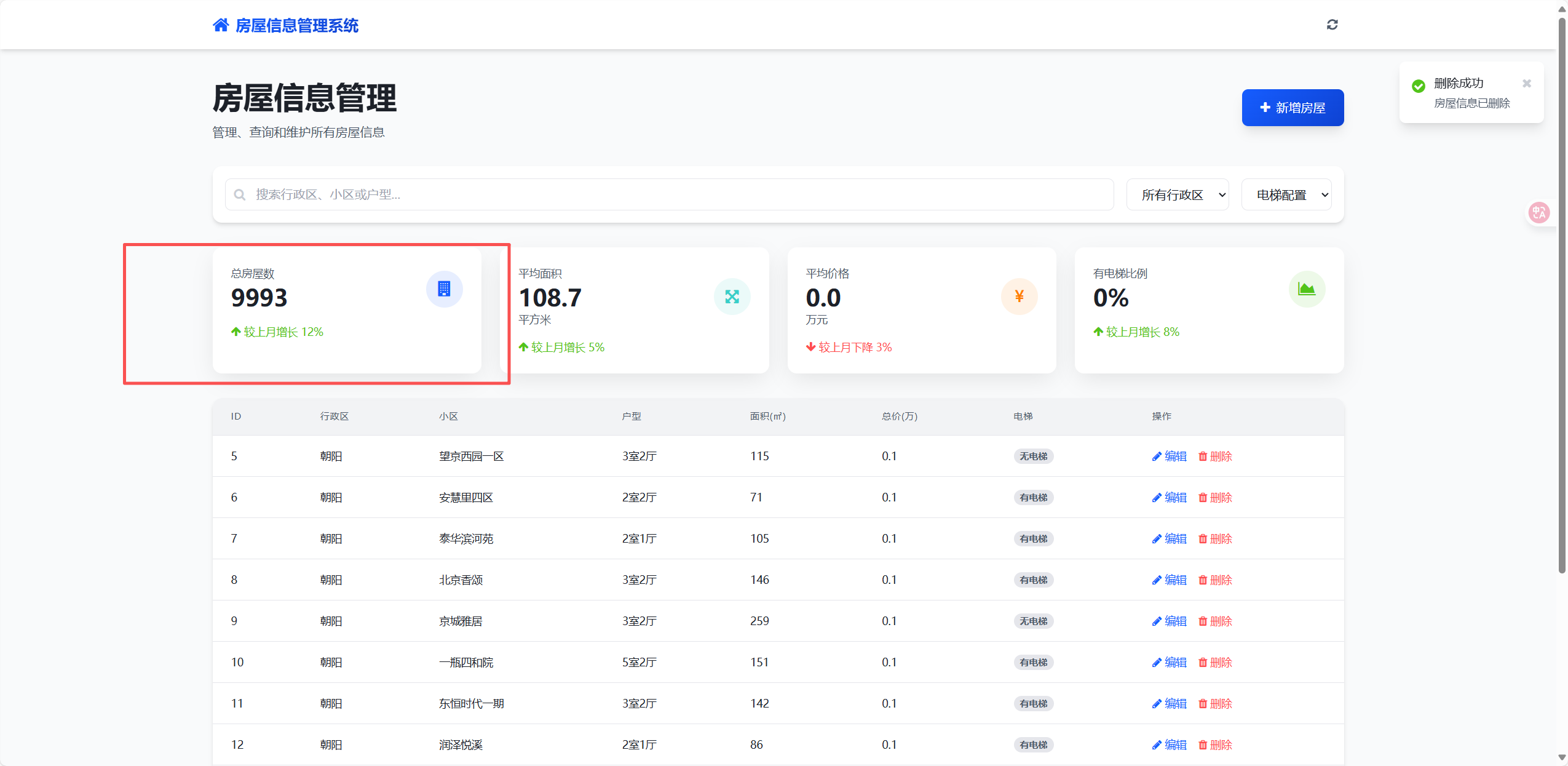

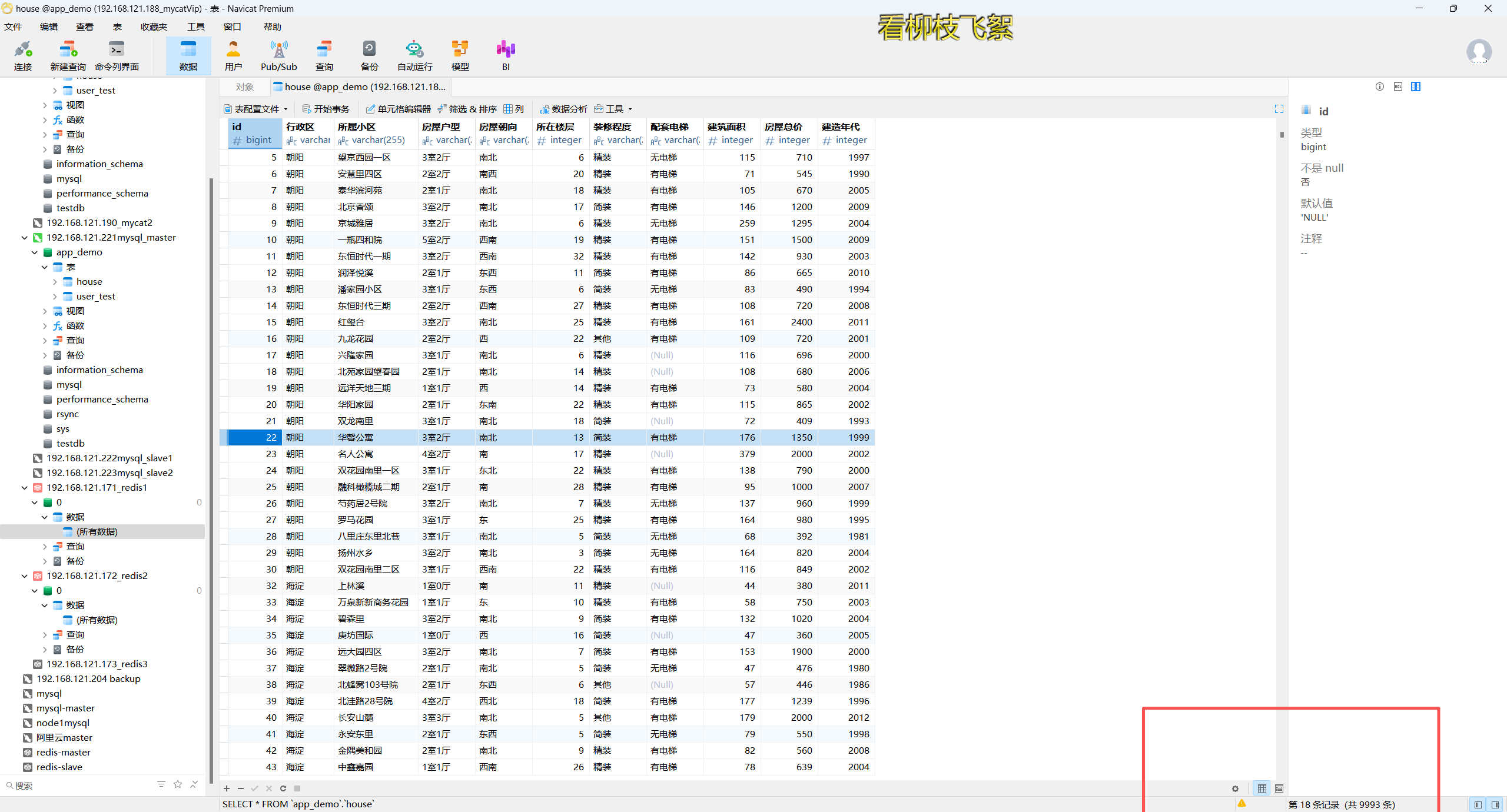

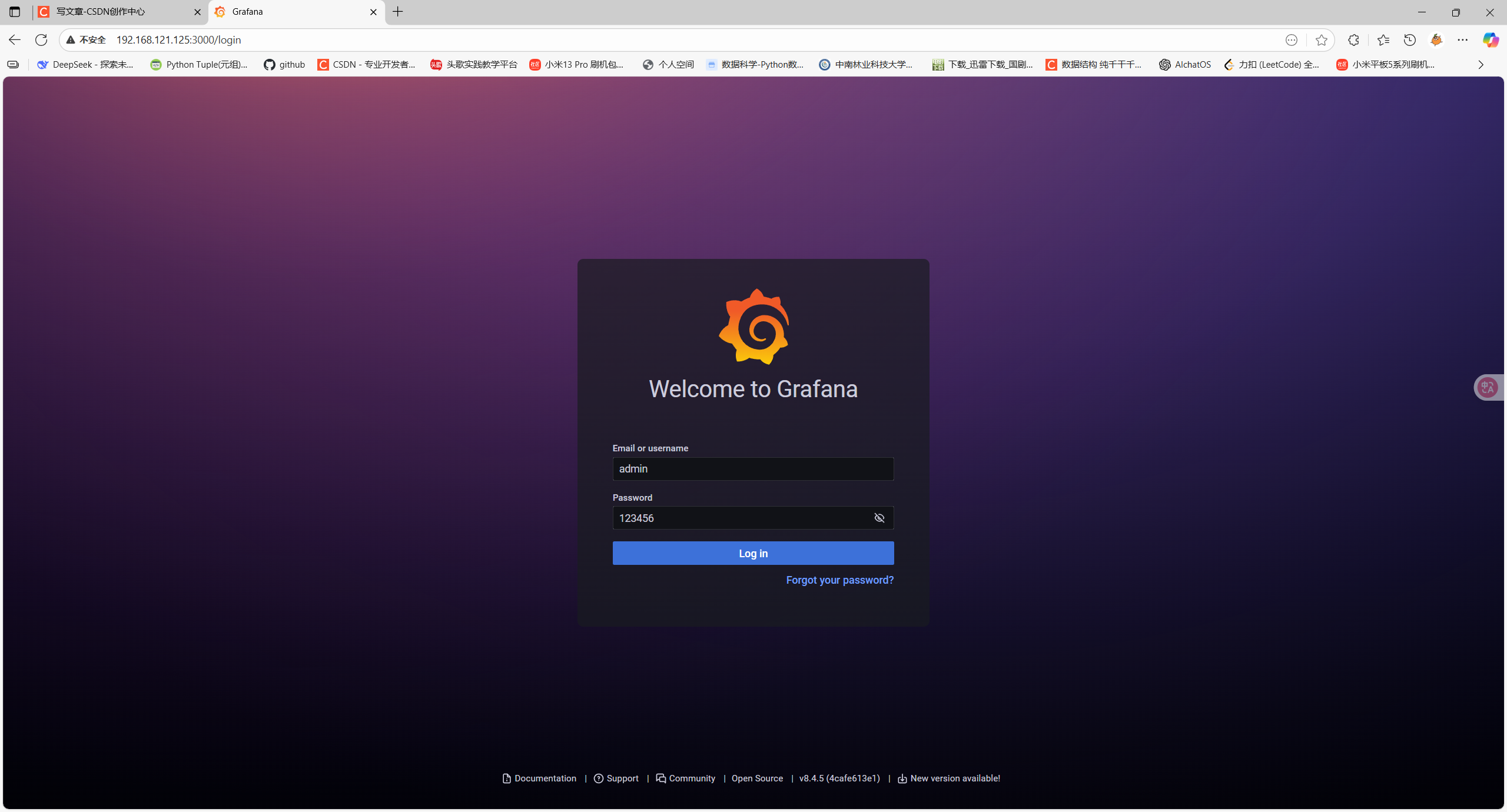

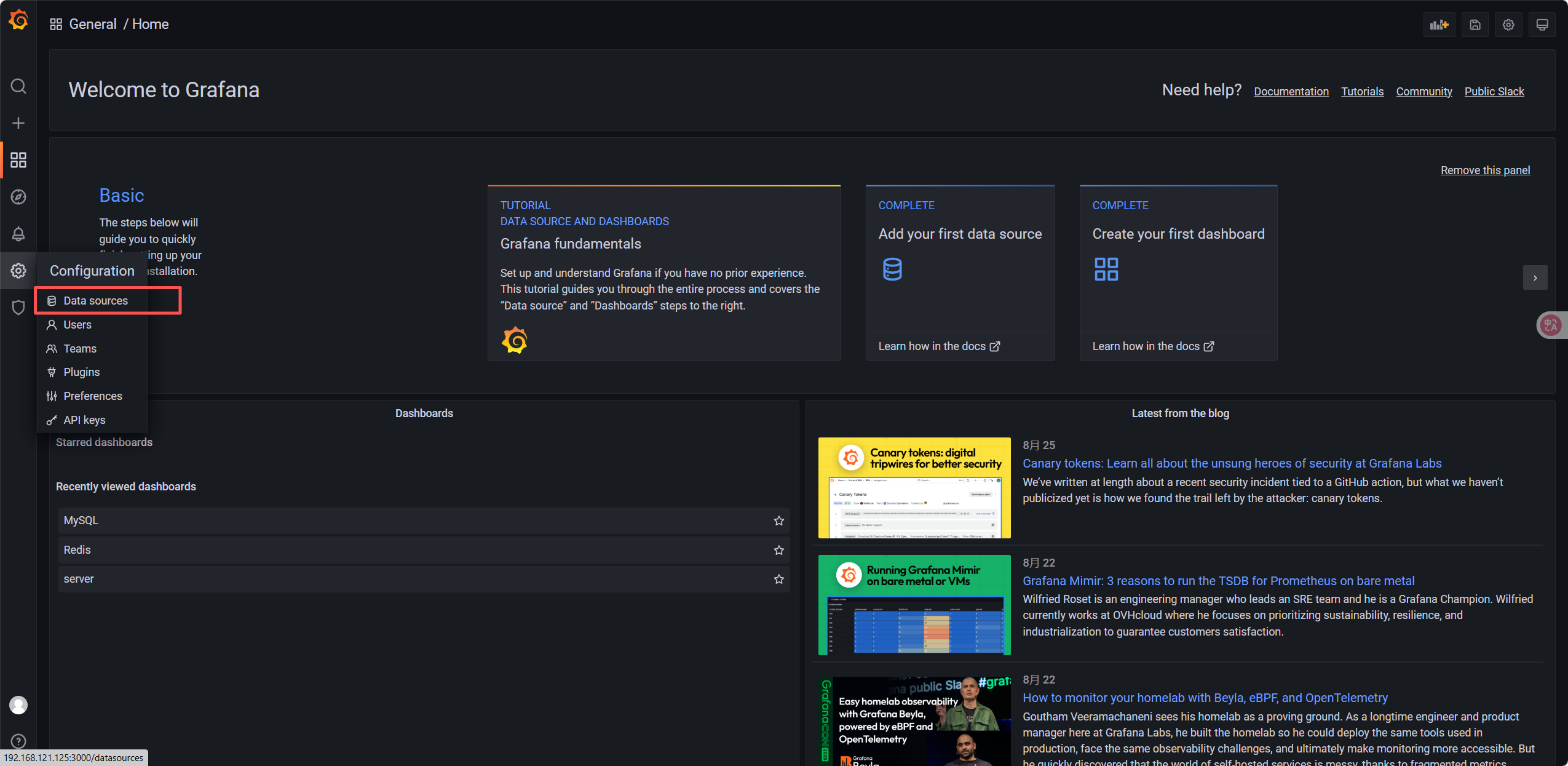

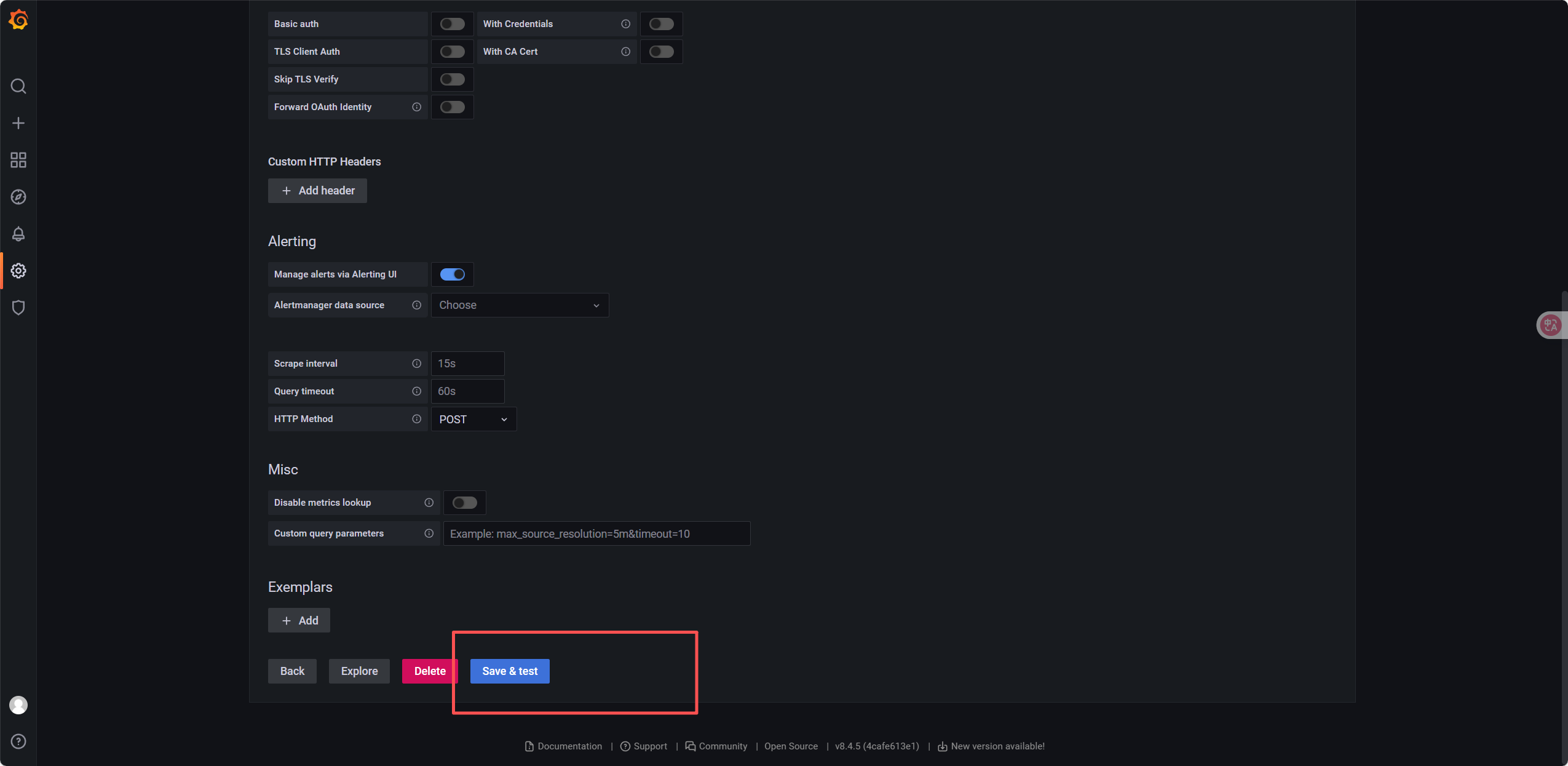

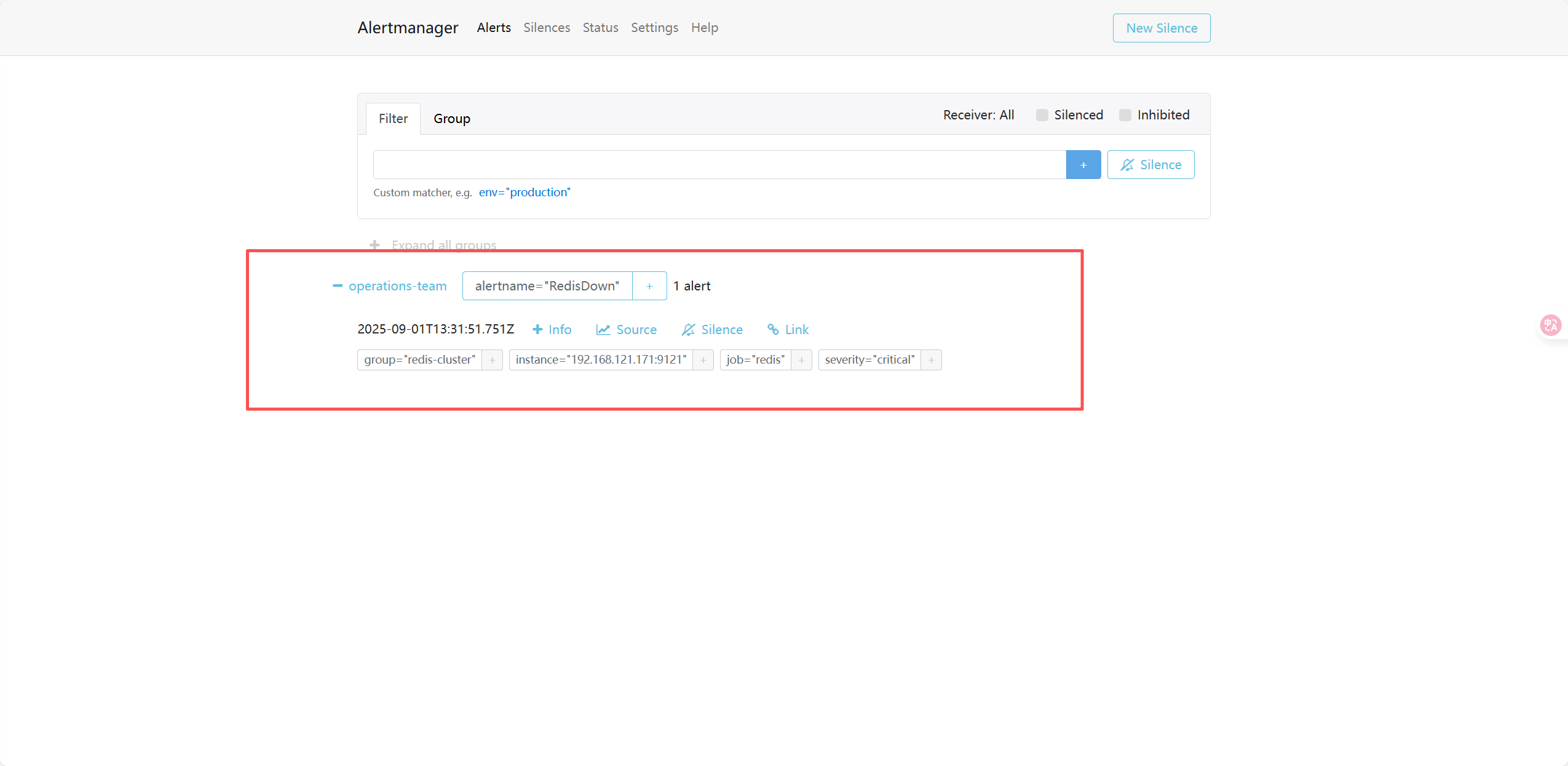

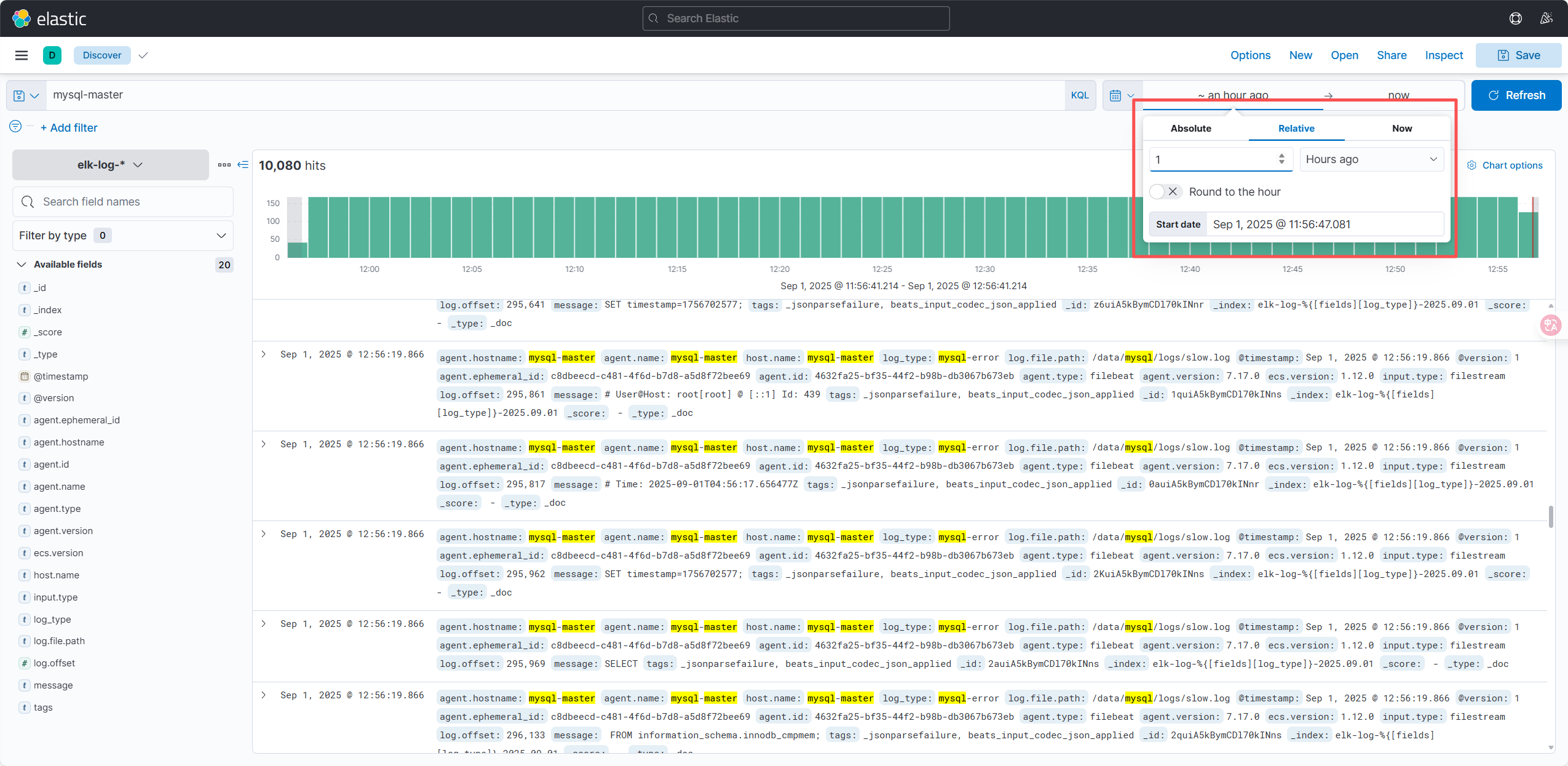

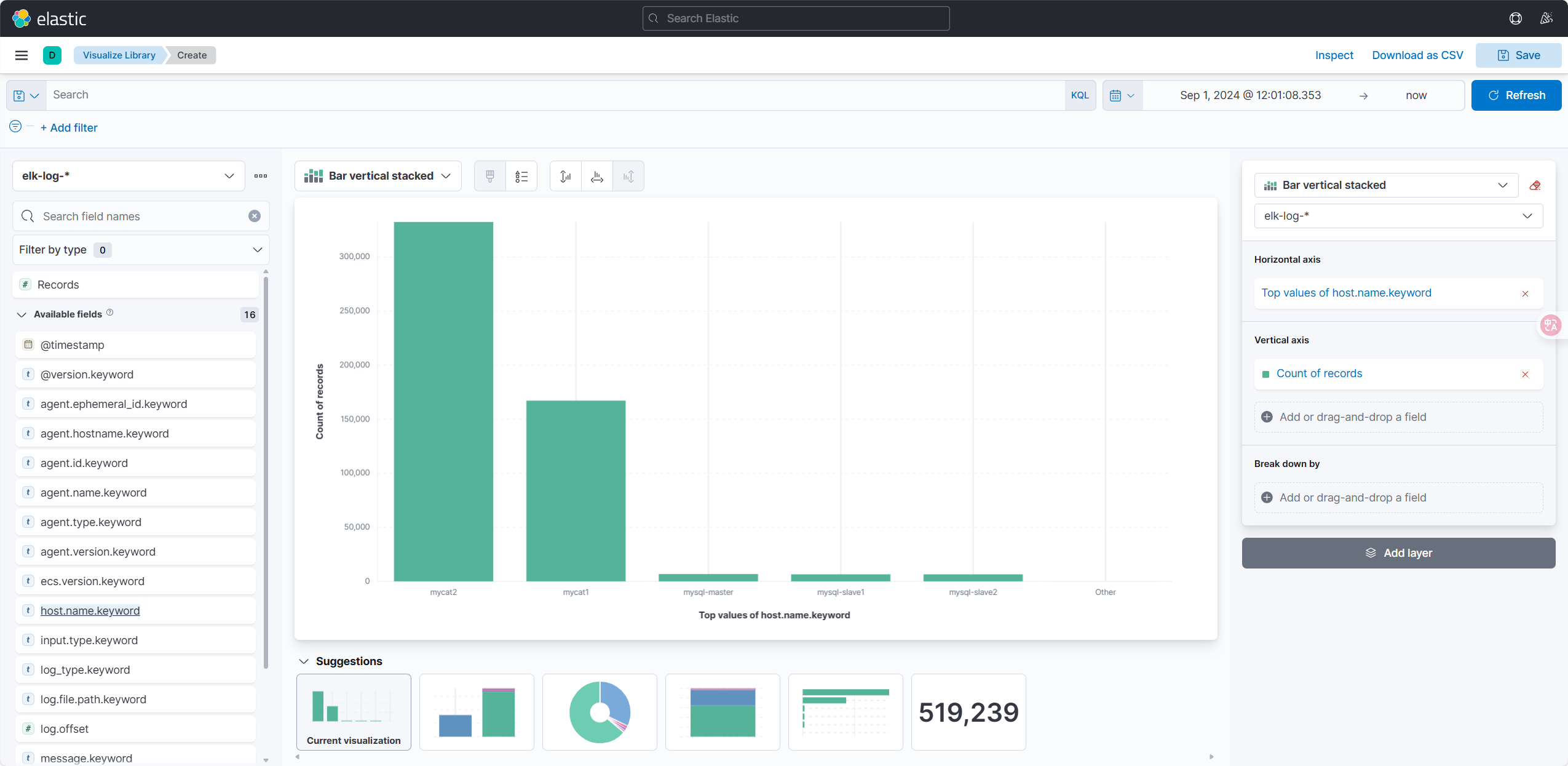

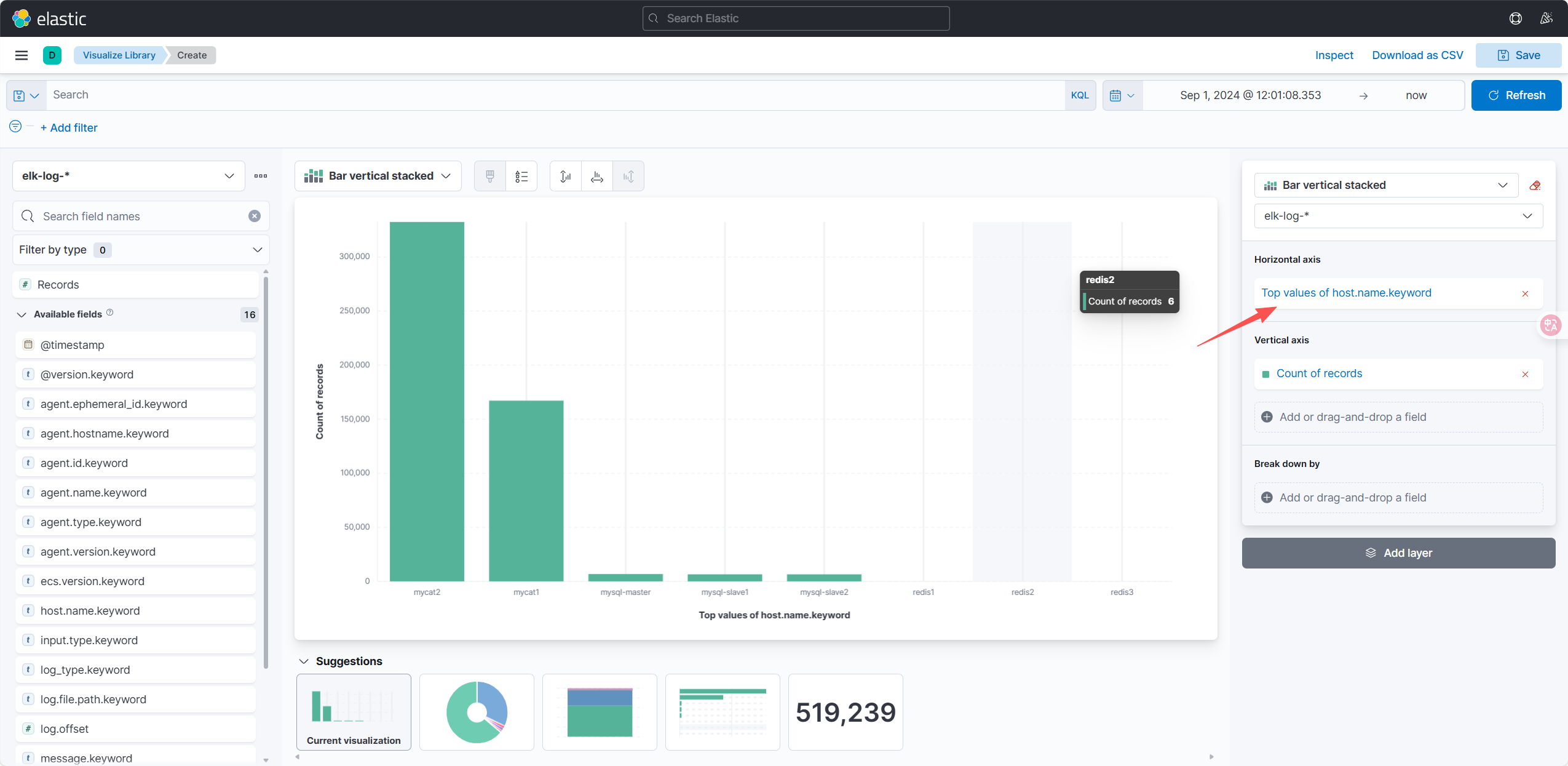

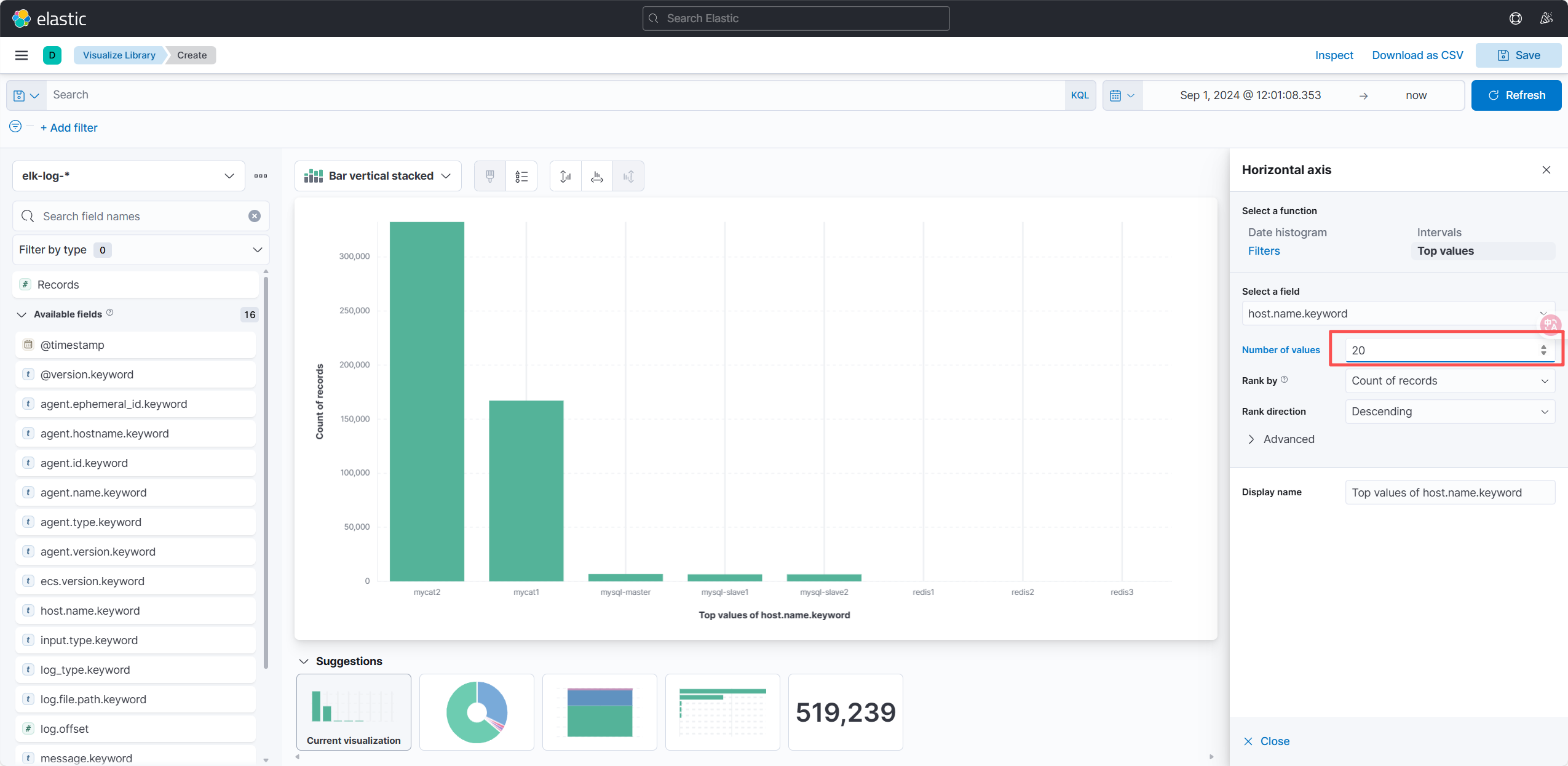

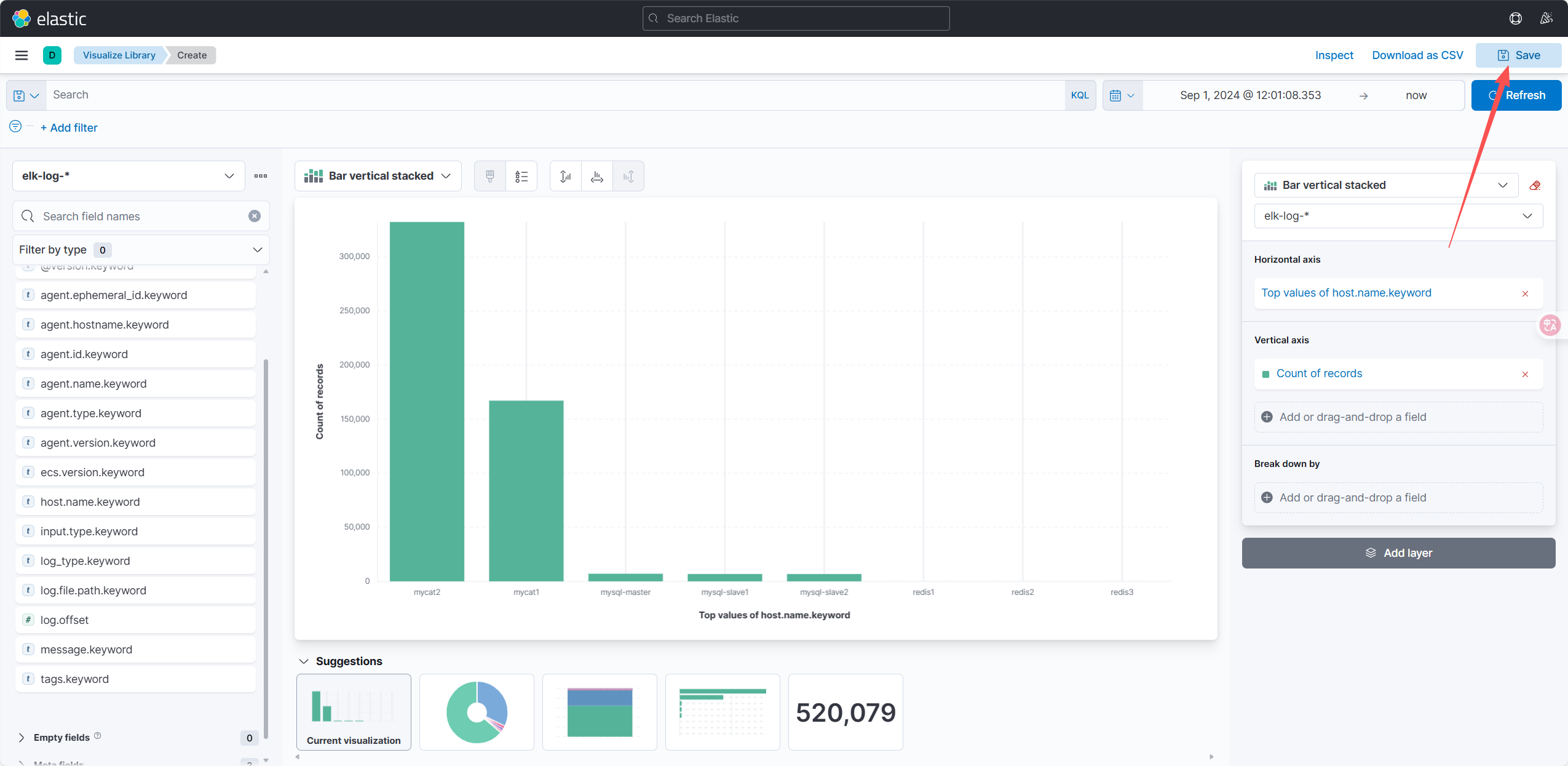

ok: [192.168.121.171]
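With three single-instance Redis nodes, all three must be masters, since `redis-cli --cluster create` requires at least three master nodes. The 16384 hash slots are therefore split three ways.

The sketch below computes an even split with rounded boundaries. For three masters it gives 0-5460, 5461-10922 and 10923-16383, which is the split redis-cli usually assigns. Treat it as an illustration, not a reimplementation of redis-cli. `slot_ranges` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Print an even split of the 16384 Redis Cluster hash slots over N masters,
# rounding each range's upper bound to the nearest slot.
slot_ranges() {
  local n=$1 total=16384 i first=0 last
  for ((i = 0; i < n; i++)); do
    # last = round(total * (i + 1) / n - 1), computed in integer arithmetic
    last=$(( (2 * total * (i + 1) - n) / (2 * n) ))
    # The final master always ends at the last slot.
    (( i == n - 1 )) && last=$(( total - 1 ))
    echo "master$((i + 1)): ${first}-${last}"
    first=$(( last + 1 ))
  done
}
```

Running `slot_ranges 3` prints one range per master. You can compare the result with the slot ranges listed by `redis-cli -a 123456 cluster nodes` on any node.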