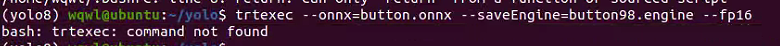

bash: trtexec: command not found

Problems encountered when deploying YOLOv11 on Jetson

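- bash: trtexec: command not found

- No detections after converting the model to an engine

- When controlling the Jetson remotely over VNC, no display is detected after a reboot, so the default resolution is too low to be usable; the resolution has to be changed from the terminal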

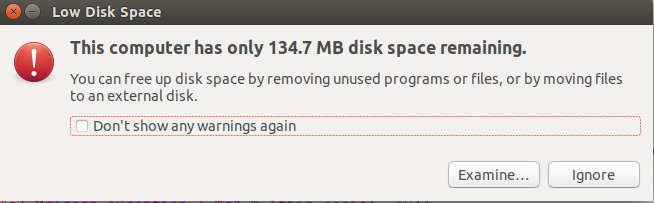

- Out of memory

Board: Jetson

bash: trtexec: command not found

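Environment variable not configured: if TensorRT is installed but the directory containing trtexec has not been added to the PATH environment variable, the shell cannot find the command. trtexec is usually located in the bin folder of the TensorRT installation directory (for example, /usr/src/tensorrt/bin). The following command adds it to PATH for the current session only (assuming TensorRT is installed under /usr/src/tensorrt):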

export PATH=/usr/src/tensorrt/bin:$PATH

To make the change permanent, add that command to ~/.bashrc or ~/.zshrc (depending on the shell in use), then run source ~/.bashrc (or source ~/.zshrc) to apply it.
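A minimal sketch of the same PATH fix written so that it can be run repeatedly without adding duplicate entries. The helper name `add_to_path` is hypothetical (not part of TensorRT or the shell), and /usr/src/tensorrt/bin is only the example location used above; adjust it if trtexec lives elsewhere.

```shell
# Prepend a directory to PATH only if it is not already listed.
# add_to_path is a hypothetical helper name chosen for this sketch.
add_to_path() {
    dir="$1"
    case ":$PATH:" in
        *":$dir:"*) ;;                # already present, leave PATH unchanged
        *) PATH="$dir:$PATH" ;;       # otherwise put it at the front
    esac
    export PATH
}

# Example location taken from the text; an assumption for any other install.
add_to_path /usr/src/tensorrt/bin

# Report whether the shell can now resolve trtexec.
if command -v trtexec >/dev/null 2>&1; then
    echo "trtexec found at: $(command -v trtexec)"
else
    echo "trtexec still not found; check the install directory"
fi
```

Putting the function and its call in ~/.bashrc instead of a bare export means that running source ~/.bashrc several times should not keep lengthening PATH.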

The following can be skipped; it only checks whether TensorRT is located at /usr/src/tensorrt.

sudo find / -name "*tensorrt*" 2>/dev/null

(yolo8) wqwl@ubuntu:~/yolo$ sudo find / -name "*tensorrt*" 2>/dev/null

/home/wqwl/Downloads/yolo8/lib/python3.8/site-packages/torch/_dynamo/backends/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/Downloads/yolo8/lib/python3.8/site-packages/torch/_dynamo/backends/tensorrt.py

/home/wqwl/Downloads/yolo8/lib/python3.8/site-packages/torch/ao/quantization/backend_config/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/Downloads/yolo8/lib/python3.8/site-packages/torch/ao/quantization/backend_config/tensorrt.py

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/tensorrt

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/onnxruntime/capi/libonnxruntime_providers_tensorrt.so

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/onnxruntime/transformers/models/stable_diffusion/__pycache__/engine_builder_tensorrt.cpython-38.pyc

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/onnxruntime/transformers/models/stable_diffusion/engine_builder_tensorrt.py

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/torch/_dynamo/backends/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/torch/_dynamo/backends/tensorrt.py

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/torch/ao/quantization/backend_config/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/miniforge-pypy3/envs/yolo8/lib/python3.8/site-packages/torch/ao/quantization/backend_config/tensorrt.py

/home/wqwl/miniforge-pypy3/envs/pytorch/lib/python3.8/site-packages/torch/_dynamo/backends/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/miniforge-pypy3/envs/pytorch/lib/python3.8/site-packages/torch/_dynamo/backends/tensorrt.py

/home/wqwl/miniforge-pypy3/envs/pytorch/lib/python3.8/site-packages/torch/ao/quantization/backend_config/__pycache__/tensorrt.cpython-38.pyc

/home/wqwl/miniforge-pypy3/envs/pytorch/lib/python3.8/site-packages/torch/ao/quantization/backend_config/tensorrt.py

/home/wqwl/yolo/tensorrt-8.2.3.0-cp38-none-linux_aarch64.whl

/var/lib/dpkg/info/nvidia-tensorrt.list

/var/lib/dpkg/info/nvidia-tensorrt-dev.list

/var/lib/dpkg/info/nvidia-tensorrt.md5sums

/var/lib/dpkg/info/tensorrt-libs.list

/var/lib/dpkg/info/tensorrt-libs.md5sums

/var/lib/dpkg/info/tensorrt.md5sums

/var/lib/dpkg/info/nvidia-tensorrt-dev.md5sums

/var/lib/dpkg/info/tensorrt.list

/usr/share/doc/tensorrt-8.5.2.2

/usr/share/doc/nvidia-tensorrt-dev

/usr/share/doc/tensorrt

/usr/share/doc/tensorrt-libs

/usr/share/doc/nvidia-tensorrt

/usr/lib/python3.8/dist-packages/tensorrt-8.5.2.2.dist-info

/usr/lib/python3.8/dist-packages/tensorrt

/usr/lib/python3.8/dist-packages/tensorrt/tensorrt.so

/usr/src/tensorrt

/usr/src/tensorrt/samples/python/yolov3_onnx/onnx_to_tensorrt.py

(yolo8) wqwl@ubuntu:~/yolo$
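The search above matches every file whose name contains "tensorrt", so the output is long and does not show the trtexec binary itself. A narrower sketch, assuming the binary is a regular file named trtexec somewhere under /usr/src/tensorrt (the helper name `find_trtexec` is hypothetical):

```shell
# Look for a file named trtexec under a given root directory.
# find_trtexec is a hypothetical helper; the default root is the
# example location from the text and may differ on other installs.
find_trtexec() {
    root="${1:-/usr/src/tensorrt}"
    # "|| true" keeps the function from failing when the root does not exist.
    find "$root" -type f -name trtexec 2>/dev/null || true
}

result=$(find_trtexec)
if [ -n "$result" ]; then
    echo "trtexec is at: $result"
else
    echo "no trtexec under /usr/src/tensorrt; try another root, e.g. find_trtexec /usr"
fi
```

Whatever directory this prints is the one to add to PATH.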

No detections after converting the model to an engine

Needs further testing; not yet resolved.
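The build log that follows is long, and its few warning lines (marked `[W]`) are easy to miss among the `[I]` lines. As a hedged starting point for that testing, the sketch below prints only the warnings from a saved log. The helper name `trt_warnings` and the file name build.log are assumptions made for illustration.

```shell
# Print only the warning lines ([W]) from a saved trtexec log.
# trt_warnings is a hypothetical helper name used for this sketch.
trt_warnings() {
    # -F treats '[W]' as a literal string rather than a bracket expression;
    # "|| true" avoids a failing exit status when the log has no warnings.
    grep -F '[W]' "$1" || true
}
```

To use it, the conversion command can be rerun with its output saved, for example by appending `2>&1 | tee build.log` to the trtexec command, followed by `trt_warnings build.log`. In the log below the warnings concern INT64 weights being cast to INT32 and weights affected by the FP16 conversion. Whether either is related to the missing detections is not established here; rebuilding the engine without `--fp16` would be one way to check whether precision plays a role.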

(yolo8) wqwl@ubuntu:~/yolo$ trtexec --onnx=/home/wqwl/yolo/button.onnx --saveEngine=/home/wqwl/yolo/button000.engine --fp16

&&&& RUNNING TensorRT.trtexec [TensorRT v8502] # trtexec --onnx=/home/wqwl/yolo/button.onnx --saveEngine=/home/wqwl/yolo/button000.engine --fp16

[09/08/2025-02:02:04] [I] === Model Options ===

[09/08/2025-02:02:04] [I] Format: ONNX

[09/08/2025-02:02:04] [I] Model: /home/wqwl/yolo/button.onnx

[09/08/2025-02:02:04] [I] Output:

[09/08/2025-02:02:04] [I] === Build Options ===

[09/08/2025-02:02:04] [I] Max batch: explicit batch

[09/08/2025-02:02:04] [I] Memory Pools: workspace: default, dlaSRAM: default, dlaLocalDRAM: default, dlaGlobalDRAM: default

[09/08/2025-02:02:04] [I] minTiming: 1

[09/08/2025-02:02:04] [I] avgTiming: 8

[09/08/2025-02:02:04] [I] Precision: FP32+FP16

[09/08/2025-02:02:04] [I] LayerPrecisions:

[09/08/2025-02:02:04] [I] Calibration:

[09/08/2025-02:02:04] [I] Refit: Disabled

[09/08/2025-02:02:04] [I] Sparsity: Disabled

[09/08/2025-02:02:04] [I] Safe mode: Disabled

[09/08/2025-02:02:04] [I] DirectIO mode: Disabled

[09/08/2025-02:02:04] [I] Restricted mode: Disabled

[09/08/2025-02:02:04] [I] Build only: Disabled

[09/08/2025-02:02:04] [I] Save engine: /home/wqwl/yolo/button000.engine

[09/08/2025-02:02:04] [I] Load engine:

[09/08/2025-02:02:04] [I] Profiling verbosity: 0

[09/08/2025-02:02:04] [I] Tactic sources: Using default tactic sources

[09/08/2025-02:02:04] [I] timingCacheMode: local

[09/08/2025-02:02:04] [I] timingCacheFile:

[09/08/2025-02:02:04] [I] Heuristic: Disabled

[09/08/2025-02:02:04] [I] Preview Features: Use default preview flags.

[09/08/2025-02:02:04] [I] Input(s)s format: fp32:CHW

[09/08/2025-02:02:04] [I] Output(s)s format: fp32:CHW

[09/08/2025-02:02:04] [I] Input build shapes: model

[09/08/2025-02:02:04] [I] Input calibration shapes: model

[09/08/2025-02:02:04] [I] === System Options ===

[09/08/2025-02:02:04] [I] Device: 0

[09/08/2025-02:02:04] [I] DLACore:

[09/08/2025-02:02:04] [I] Plugins:

[09/08/2025-02:02:04] [I] === Inference Options ===

[09/08/2025-02:02:04] [I] Batch: Explicit

[09/08/2025-02:02:04] [I] Input inference shapes: model

[09/08/2025-02:02:04] [I] Iterations: 10

[09/08/2025-02:02:04] [I] Duration: 3s (+ 200ms warm up)

[09/08/2025-02:02:04] [I] Sleep time: 0ms

[09/08/2025-02:02:04] [I] Idle time: 0ms

[09/08/2025-02:02:04] [I] Streams: 1

[09/08/2025-02:02:04] [I] ExposeDMA: Disabled

[09/08/2025-02:02:04] [I] Data transfers: Enabled

[09/08/2025-02:02:04] [I] Spin-wait: Disabled

[09/08/2025-02:02:04] [I] Multithreading: Disabled

[09/08/2025-02:02:04] [I] CUDA Graph: Disabled

[09/08/2025-02:02:04] [I] Separate profiling: Disabled

[09/08/2025-02:02:04] [I] Time Deserialize: Disabled

[09/08/2025-02:02:04] [I] Time Refit: Disabled

[09/08/2025-02:02:04] [I] NVTX verbosity: 0

[09/08/2025-02:02:04] [I] Persistent Cache Ratio: 0

[09/08/2025-02:02:04] [I] Inputs:

[09/08/2025-02:02:04] [I] === Reporting Options ===

[09/08/2025-02:02:04] [I] Verbose: Disabled

[09/08/2025-02:02:04] [I] Averages: 10 inferences

[09/08/2025-02:02:04] [I] Percentiles: 90,95,99

[09/08/2025-02:02:04] [I] Dump refittable layers:Disabled

[09/08/2025-02:02:04] [I] Dump output: Disabled

[09/08/2025-02:02:04] [I] Profile: Disabled

[09/08/2025-02:02:04] [I] Export timing to JSON file:

[09/08/2025-02:02:04] [I] Export output to JSON file:

[09/08/2025-02:02:04] [I] Export profile to JSON file:

[09/08/2025-02:02:04] [I]

[09/08/2025-02:02:04] [I] === Device Information ===

[09/08/2025-02:02:04] [I] Selected Device: Xavier

[09/08/2025-02:02:04] [I] Compute Capability: 7.2

[09/08/2025-02:02:04] [I] SMs: 6

[09/08/2025-02:02:04] [I] Compute Clock Rate: 1.109 GHz

[09/08/2025-02:02:04] [I] Device Global Memory: 14884 MiB

[09/08/2025-02:02:04] [I] Shared Memory per SM: 96 KiB

[09/08/2025-02:02:04] [I] Memory Bus Width: 256 bits (ECC disabled)

[09/08/2025-02:02:04] [I] Memory Clock Rate: 0.51 GHz

[09/08/2025-02:02:04] [I]

[09/08/2025-02:02:04] [I] TensorRT version: 8.5.2

[09/08/2025-02:02:05] [I] [TRT] [MemUsageChange] Init CUDA: CPU +187, GPU +0, now: CPU 216, GPU 9242 (MiB)

[09/08/2025-02:02:07] [I] [TRT] [MemUsageChange] Init builder kernel library: CPU +106, GPU +100, now: CPU 344, GPU 9361 (MiB)

[09/08/2025-02:02:07] [I] Start parsing network model

[09/08/2025-02:02:07] [I] [TRT] ----------------------------------------------------------------

[09/08/2025-02:02:07] [I] [TRT] Input filename: /home/wqwl/yolo/button.onnx

[09/08/2025-02:02:07] [I] [TRT] ONNX IR version: 0.0.8

[09/08/2025-02:02:07] [I] [TRT] Opset version: 17

[09/08/2025-02:02:07] [I] [TRT] Producer name: pytorch

[09/08/2025-02:02:07] [I] [TRT] Producer version: 2.1.0

[09/08/2025-02:02:07] [I] [TRT] Domain:

[09/08/2025-02:02:07] [I] [TRT] Model version: 0

[09/08/2025-02:02:07] [I] [TRT] Doc string:

[09/08/2025-02:02:07] [I] [TRT] ----------------------------------------------------------------

[09/08/2025-02:02:07] [W] [TRT] onnx2trt_utils.cpp:375: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[09/08/2025-02:02:07] [I] Finish parsing network model

[09/08/2025-02:02:08] [I] [TRT] ---------- Layers Running on DLA ----------

[09/08/2025-02:02:08] [I] [TRT] ---------- Layers Running on GPU ----------

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.0/act/Sigmoid), /model.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.1/conv/Conv + PWN(PWN(/model.1/act/Sigmoid), /model.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.2/cv1/conv/Conv + PWN(PWN(/model.2/cv1/act/Sigmoid), /model.2/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.2/m.0/cv1/conv/Conv + PWN(PWN(/model.2/m.0/cv1/act/Sigmoid), /model.2/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.2/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.2/m.0/cv2/act/Sigmoid), /model.2/m.0/cv2/act/Mul), /model.2/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.2/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.2/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.2/cv2/conv/Conv + PWN(PWN(/model.2/cv2/act/Sigmoid), /model.2/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.3/conv/Conv + PWN(PWN(/model.3/act/Sigmoid), /model.3/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.4/cv1/conv/Conv + PWN(PWN(/model.4/cv1/act/Sigmoid), /model.4/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.4/m.0/cv1/conv/Conv + PWN(PWN(/model.4/m.0/cv1/act/Sigmoid), /model.4/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.4/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.4/m.0/cv2/act/Sigmoid), /model.4/m.0/cv2/act/Mul), /model.4/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.4/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.4/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.4/cv2/conv/Conv + PWN(PWN(/model.4/cv2/act/Sigmoid), /model.4/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.5/conv/Conv + PWN(PWN(/model.5/act/Sigmoid), /model.5/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/cv1/conv/Conv + PWN(PWN(/model.6/cv1/act/Sigmoid), /model.6/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/cv1/conv/Conv || /model.6/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.6/m.0/cv1/act/Sigmoid), /model.6/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.6/m.0/cv2/act/Sigmoid), /model.6/m.0/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/m/m.0/cv1/conv/Conv + PWN(PWN(/model.6/m.0/m/m.0/cv1/act/Sigmoid), /model.6/m.0/m/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/m/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.6/m.0/m/m.0/cv2/act/Sigmoid), /model.6/m.0/m/m.0/cv2/act/Mul), /model.6/m.0/m/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/m/m.1/cv1/conv/Conv + PWN(PWN(/model.6/m.0/m/m.1/cv1/act/Sigmoid), /model.6/m.0/m/m.1/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/m/m.1/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.6/m.0/m/m.1/cv2/act/Sigmoid), /model.6/m.0/m/m.1/cv2/act/Mul), /model.6/m.0/m/m.1/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/m.0/cv3/conv/Conv + PWN(PWN(/model.6/m.0/cv3/act/Sigmoid), /model.6/m.0/cv3/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.6/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.6/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.6/cv2/conv/Conv + PWN(PWN(/model.6/cv2/act/Sigmoid), /model.6/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.7/conv/Conv + PWN(PWN(/model.7/act/Sigmoid), /model.7/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/cv1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.8/cv1/act/Sigmoid), /model.8/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/cv1/conv/Conv || /model.8/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.8/m.0/cv1/act/Sigmoid), /model.8/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.8/m.0/cv2/act/Sigmoid), /model.8/m.0/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/m/m.0/cv1/conv/Conv + PWN(PWN(/model.8/m.0/m/m.0/cv1/act/Sigmoid), /model.8/m.0/m/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/m/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.8/m.0/m/m.0/cv2/act/Sigmoid), /model.8/m.0/m/m.0/cv2/act/Mul), /model.8/m.0/m/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/m/m.1/cv1/conv/Conv + PWN(PWN(/model.8/m.0/m/m.1/cv1/act/Sigmoid), /model.8/m.0/m/m.1/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/m/m.1/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.8/m.0/m/m.1/cv2/act/Sigmoid), /model.8/m.0/m/m.1/cv2/act/Mul), /model.8/m.0/m/m.1/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/m.0/cv3/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.8/m.0/cv3/act/Sigmoid), /model.8/m.0/cv3/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.8/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.8/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.8/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.8/cv2/act/Sigmoid), /model.8/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.9/cv1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.9/cv1/act/Sigmoid), /model.9/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POOLING: /model.9/m/MaxPool

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POOLING: /model.9/m_1/MaxPool

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POOLING: /model.9/m_2/MaxPool

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.9/cv1/act/Mul_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.9/m/MaxPool_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.9/m_1/MaxPool_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.9/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.9/cv2/act/Sigmoid), /model.9/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/cv1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.10/cv1/act/Sigmoid), /model.10/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/Split_7

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/m/m.0/attn/qkv/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.10/m/m.0/attn/Reshape

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/m/m.0/attn/Split

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/m/m.0/attn/Split_10

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/m/m.0/attn/Split_11

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.10/m/m.0/attn/Reshape_2

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] MATRIX_MULTIPLY: /model.10/m/m.0/attn/MatMul

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SOFTMAX: /model.10/m/m.0/attn/Softmax

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] MATRIX_MULTIPLY: /model.10/m/m.0/attn/MatMul_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.10/m/m.0/attn/Reshape_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/m/m.0/attn/pe/conv/Conv + /model.10/m/m.0/attn/Add

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/m/m.0/attn/proj/conv/Conv + /model.10/m/m.0/Add

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/m/m.0/ffn/ffn.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.10/m/m.0/ffn/ffn.0/act/Sigmoid), /model.10/m/m.0/ffn/ffn.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/m/m.0/ffn/ffn.1/conv/Conv + /model.10/m/m.0/Add_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/m/m.0/Add_1_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.10/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.10/cv2/act/Sigmoid), /model.10/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] RESIZE: /model.11/Resize

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.11/Resize_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.13/cv1/conv/Conv + PWN(PWN(/model.13/cv1/act/Sigmoid), /model.13/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.13/m.0/cv1/conv/Conv + PWN(PWN(/model.13/m.0/cv1/act/Sigmoid), /model.13/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.13/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.13/m.0/cv2/act/Sigmoid), /model.13/m.0/cv2/act/Mul), /model.13/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.13/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.13/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.13/cv2/conv/Conv + PWN(PWN(/model.13/cv2/act/Sigmoid), /model.13/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] RESIZE: /model.14/Resize

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.14/Resize_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.16/cv1/conv/Conv + PWN(PWN(/model.16/cv1/act/Sigmoid), /model.16/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.16/m.0/cv1/conv/Conv + PWN(PWN(/model.16/m.0/cv1/act/Sigmoid), /model.16/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.16/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.16/m.0/cv2/act/Sigmoid), /model.16/m.0/cv2/act/Mul), /model.16/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.16/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.16/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.16/cv2/conv/Conv + PWN(PWN(/model.16/cv2/act/Sigmoid), /model.16/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.17/conv/Conv + PWN(PWN(/model.17/act/Sigmoid), /model.17/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.0/cv2.0.0/conv/Conv + PWN(PWN(/model.23/cv2.0/cv2.0.0/act/Sigmoid), /model.23/cv2.0/cv2.0.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.0/cv3.0.0/cv3.0.0.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.0/cv3.0.0/cv3.0.0.0/act/Sigmoid), /model.23/cv3.0/cv3.0.0/cv3.0.0.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.13/cv2/act/Mul_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.0/cv2.0.1/conv/Conv + PWN(PWN(/model.23/cv2.0/cv2.0.1/act/Sigmoid), /model.23/cv2.0/cv2.0.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.0/cv3.0.0/cv3.0.0.1/conv/Conv + PWN(PWN(/model.23/cv3.0/cv3.0.0/cv3.0.0.1/act/Sigmoid), /model.23/cv3.0/cv3.0.0/cv3.0.0.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.19/cv1/conv/Conv + PWN(PWN(/model.19/cv1/act/Sigmoid), /model.19/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.0/cv2.0.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.0/cv3.0.1/cv3.0.1.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.19/m.0/cv1/conv/Conv + PWN(PWN(/model.19/m.0/cv1/act/Sigmoid), /model.19/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.0/cv3.0.1/cv3.0.1.0/act/Sigmoid), /model.23/cv3.0/cv3.0.1/cv3.0.1.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.0/cv3.0.1/cv3.0.1.1/conv/Conv + PWN(PWN(/model.23/cv3.0/cv3.0.1/cv3.0.1.1/act/Sigmoid), /model.23/cv3.0/cv3.0.1/cv3.0.1.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.19/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.0/cv3.0.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.19/m.0/cv2/act/Sigmoid), /model.19/m.0/cv2/act/Mul), /model.19/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.23/Reshape

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.23/Reshape_copy_output

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.19/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.19/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.19/cv2/conv/Conv + PWN(PWN(/model.19/cv2/act/Sigmoid), /model.19/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.20/conv/Conv + PWN(PWN(/model.20/act/Sigmoid), /model.20/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.1/cv2.1.0/conv/Conv + PWN(PWN(/model.23/cv2.1/cv2.1.0/act/Sigmoid), /model.23/cv2.1/cv2.1.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.1/cv3.1.0/cv3.1.0.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.1/cv3.1.0/cv3.1.0.0/act/Sigmoid), /model.23/cv3.1/cv3.1.0/cv3.1.0.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.10/cv2/act/Mul_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.1/cv2.1.1/conv/Conv + PWN(PWN(/model.23/cv2.1/cv2.1.1/act/Sigmoid), /model.23/cv2.1/cv2.1.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.1/cv3.1.0/cv3.1.0.1/conv/Conv + PWN(PWN(/model.23/cv3.1/cv3.1.0/cv3.1.0.1/act/Sigmoid), /model.23/cv3.1/cv3.1.0/cv3.1.0.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/cv1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.22/cv1/act/Sigmoid), /model.22/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.1/cv2.1.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.1/cv3.1.1/cv3.1.1.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/cv1/conv/Conv || /model.22/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.1/cv3.1.1/cv3.1.1.0/act/Sigmoid), /model.23/cv3.1/cv3.1.1/cv3.1.1.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.1/cv3.1.1/cv3.1.1.1/conv/Conv + PWN(PWN(/model.23/cv3.1/cv3.1.1/cv3.1.1.1/act/Sigmoid), /model.23/cv3.1/cv3.1.1/cv3.1.1.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.22/m.0/cv1/act/Sigmoid), /model.22/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.22/m.0/cv2/act/Sigmoid), /model.22/m.0/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/m/m.0/cv1/conv/Conv + PWN(PWN(/model.22/m.0/m/m.0/cv1/act/Sigmoid), /model.22/m.0/m/m.0/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.1/cv3.1.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/m/m.0/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.23/Reshape_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.23/Reshape_1_copy_output

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.22/m.0/m/m.0/cv2/act/Sigmoid), /model.22/m.0/m/m.0/cv2/act/Mul), /model.22/m.0/m/m.0/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/m/m.1/cv1/conv/Conv + PWN(PWN(/model.22/m.0/m/m.1/cv1/act/Sigmoid), /model.22/m.0/m/m.1/cv1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/m/m.1/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(PWN(/model.22/m.0/m/m.1/cv2/act/Sigmoid), /model.22/m.0/m/m.1/cv2/act/Mul), /model.22/m.0/m/m.1/Add)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/m.0/cv3/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.22/m.0/cv3/act/Sigmoid), /model.22/m.0/cv3/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.22/Split_output_0 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.22/Split_output_1 copy

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.22/cv2/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.22/cv2/act/Sigmoid), /model.22/cv2/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.2/cv2.2.0/conv/Conv + PWN(PWN(/model.23/cv2.2/cv2.2.0/act/Sigmoid), /model.23/cv2.2/cv2.2.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.2/cv3.2.0/cv3.2.0.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.2/cv3.2.0/cv3.2.0.0/act/Sigmoid), /model.23/cv3.2/cv3.2.0/cv3.2.0.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.2/cv2.2.1/conv/Conv + PWN(PWN(/model.23/cv2.2/cv2.2.1/act/Sigmoid), /model.23/cv2.2/cv2.2.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.2/cv3.2.0/cv3.2.0.1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.2/cv3.2.0/cv3.2.0.1/act/Sigmoid), /model.23/cv3.2/cv3.2.0/cv3.2.0.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv2.2/cv2.2.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.2/cv3.2.1/cv3.2.1.0/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.2/cv3.2.1/cv3.2.1.0/act/Sigmoid), /model.23/cv3.2/cv3.2.1/cv3.2.1.0/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.2/cv3.2.1/cv3.2.1.1/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(PWN(/model.23/cv3.2/cv3.2.1/cv3.2.1.1/act/Sigmoid), /model.23/cv3.2/cv3.2.1/cv3.2.1.1/act/Mul)

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/cv3.2/cv3.2.2/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.23/Reshape_2

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] COPY: /model.23/Reshape_2_copy_output

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.23/dfl/Reshape + /model.23/dfl/Transpose

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SOFTMAX: /model.23/dfl/Softmax

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONVOLUTION: /model.23/dfl/conv/Conv

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] SHUFFLE: /model.23/dfl/Reshape_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONSTANT: /model.23/Constant_9_output_0

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] ELEMENTWISE: /model.23/Sub

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONSTANT: /model.23/Constant_10_output_0

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] ELEMENTWISE: /model.23/Add_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(/model.23/Constant_11_output_0 + (Unnamed Layer* 387) [Shuffle], PWN(/model.23/Add_2, /model.23/Div_1))

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] ELEMENTWISE: /model.23/Sub_1

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] CONSTANT: /model.23/Constant_12_output_0 + (Unnamed Layer* 392) [Shuffle]

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] ELEMENTWISE: /model.23/Mul_2

[09/08/2025-02:02:08] [I] [TRT] [GpuLayer] POINTWISE: PWN(/model.23/Sigmoid)

[09/08/2025-02:02:10] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +260, GPU +228, now: CPU 617, GPU 9620 (MiB)

[09/08/2025-02:02:10] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +82, GPU +77, now: CPU 699, GPU 9697 (MiB)

[09/08/2025-02:02:10] [I] [TRT] Local timing cache in use. Profiling results in this builder pass will not be stored.

[09/08/2025-02:23:03] [I] [TRT] Total Activation Memory: 15680147456

[09/08/2025-02:23:03] [I] [TRT] Detected 1 inputs and 3 output network tensors.

[09/08/2025-02:23:03] [I] [TRT] Total Host Persistent Memory: 278192

[09/08/2025-02:23:03] [I] [TRT] Total Device Persistent Memory: 9728

[09/08/2025-02:23:03] [I] [TRT] Total Scratch Memory: 4194304

[09/08/2025-02:23:03] [I] [TRT] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 5 MiB, GPU 2124 MiB

[09/08/2025-02:23:03] [I] [TRT] [BlockAssignment] Started assigning block shifts. This will take 158 steps to complete.

[09/08/2025-02:23:04] [I] [TRT] [BlockAssignment] Algorithm ShiftNTopDown took 49.9935ms to assign 10 blocks to 158 nodes requiring 10799616 bytes.

[09/08/2025-02:23:04] [I] [TRT] Total Activation Memory: 10799616

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 979, GPU 10538 (MiB)

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +0, now: CPU 979, GPU 10538 (MiB)

[09/08/2025-02:23:04] [W] [TRT] TensorRT encountered issues when converting weights between types and that could affect accuracy.

[09/08/2025-02:23:04] [W] [TRT] If this is not the desired behavior, please modify the weights or retrain with regularization to adjust the magnitude of the weights.

[09/08/2025-02:23:04] [W] [TRT] Check verbose logs for the list of affected weights.

[09/08/2025-02:23:04] [W] [TRT] - 1 weights are affected by this issue: Detected NaN values and converted them to corresponding FP16 NaN.

[09/08/2025-02:23:04] [W] [TRT] - 73 weights are affected by this issue: Detected subnormal FP16 values.

[09/08/2025-02:23:04] [W] [TRT] - 1 weights are affected by this issue: Detected values less than smallest positive FP16 subnormal value and converted them to the FP16 minimum subnormalized value.

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +5, GPU +8, now: CPU 5, GPU 8 (MiB)

[09/08/2025-02:23:04] [I] Engine built in 1260.39 sec.

[09/08/2025-02:23:04] [I] [TRT] Loaded engine size: 6 MiB

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 863, GPU 10536 (MiB)

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +0, now: CPU 863, GPU 10536 (MiB)

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +5, now: CPU 0, GPU 5 (MiB)

[09/08/2025-02:23:04] [I] Engine deserialized in 0.142366 sec.

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +1, GPU +0, now: CPU 864, GPU 10536 (MiB)

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +0, now: CPU 864, GPU 10536 (MiB)

[09/08/2025-02:23:04] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +10, now: CPU 0, GPU 15 (MiB)

[09/08/2025-02:23:04] [I] Setting persistentCacheLimit to 0 bytes.

[09/08/2025-02:23:04] [I] Using random values for input images

[09/08/2025-02:23:04] [I] Created input binding for images with dimensions 1x3x640x640

[09/08/2025-02:23:04] [I] Using random values for output output0

[09/08/2025-02:23:04] [I] Created output binding for output0 with dimensions 1x38x8400

[09/08/2025-02:23:04] [I] Starting inference

[09/08/2025-02:23:08] [I] Warmup completed 12 queries over 200 ms

[09/08/2025-02:23:08] [I] Timing trace has 214 queries over 3.21037 s

[09/08/2025-02:23:08] [I]

[09/08/2025-02:23:08] [I] === Trace details ===

[09/08/2025-02:23:08] [I] Trace averages of 10 runs:

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.9306 ms - Host latency: 14.5765 ms (enqueue 6.32881 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.2068 ms - Host latency: 13.7883 ms (enqueue 8.30298 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 17.2467 ms - Host latency: 17.8034 ms (enqueue 15.0263 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 15.6561 ms - Host latency: 16.3159 ms (enqueue 5.72841 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.3614 ms - Host latency: 13.998 ms (enqueue 7.04658 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.3784 ms - Host latency: 14.0439 ms (enqueue 6.05887 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.333 ms - Host latency: 14.071 ms (enqueue 7.08997 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.5115 ms - Host latency: 14.1604 ms (enqueue 8.28361 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.3803 ms - Host latency: 14.0319 ms (enqueue 5.82911 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.3013 ms - Host latency: 13.9596 ms (enqueue 7.99796 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4116 ms - Host latency: 14.0284 ms (enqueue 4.85885 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4163 ms - Host latency: 14.0245 ms (enqueue 3.88177 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4133 ms - Host latency: 14.0384 ms (enqueue 3.86726 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4191 ms - Host latency: 14.0316 ms (enqueue 3.91974 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4063 ms - Host latency: 14.0255 ms (enqueue 3.62468 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4101 ms - Host latency: 14.0173 ms (enqueue 3.72148 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4031 ms - Host latency: 14.012 ms (enqueue 3.61973 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4102 ms - Host latency: 14.0061 ms (enqueue 3.69102 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.3987 ms - Host latency: 13.99 ms (enqueue 3.69624 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4244 ms - Host latency: 14.0419 ms (enqueue 3.87229 ms)

[09/08/2025-02:23:08] [I] Average on 10 runs - GPU latency: 13.4055 ms - Host latency: 14.0559 ms (enqueue 5.10146 ms)

[09/08/2025-02:23:08] [I]

[09/08/2025-02:23:08] [I] === Performance summary ===

[09/08/2025-02:23:08] [I] Throughput: 66.659 qps

[09/08/2025-02:23:08] [I] Latency: min = 13.6357 ms, max = 190.592 ms, mean = 15.5254 ms, median = 14.0298 ms, percentile(90%) = 14.2144 ms, percentile(95%) = 18.1958 ms, percentile(99%) = 29.5359 ms

[09/08/2025-02:23:08] [I] Enqueue Time: min = 3.36572 ms, max = 149.133 ms, mean = 6.85428 ms, median = 4.42426 ms, percentile(90%) = 10.0273 ms, percentile(95%) = 14.5533 ms, percentile(99%) = 31.8622 ms

[09/08/2025-02:23:08] [I] H2D Latency: min = 0.360352 ms, max = 41.032 ms, mean = 0.706807 ms, median = 0.477539 ms, percentile(90%) = 0.53833 ms, percentile(95%) = 0.554688 ms, percentile(99%) = 1.45398 ms

[09/08/2025-02:23:08] [I] GPU Compute Time: min = 13.0247 ms, max = 149.402 ms, mean = 14.6757 ms, median = 13.4125 ms, percentile(90%) = 13.5024 ms, percentile(95%) = 16.0237 ms, percentile(99%) = 28.8882 ms

[09/08/2025-02:23:08] [I] D2H Latency: min = 0.0947266 ms, max = 0.209442 ms, mean = 0.142906 ms, median = 0.140656 ms, percentile(90%) = 0.155518 ms, percentile(95%) = 0.162964 ms, percentile(99%) = 0.182129 ms

[09/08/2025-02:23:08] [I] Total Host Walltime: 3.21037 s

[09/08/2025-02:23:08] [I] Total GPU Compute Time: 3.1406 s

[09/08/2025-02:23:08] [W] * GPU compute time is unstable, with coefficient of variance = 70.2262%.

[09/08/2025-02:23:08] [W] If not already in use, locking GPU clock frequency or adding --useSpinWait may improve the stability.

[09/08/2025-02:23:08] [I] Explanations of the performance metrics are printed in the verbose logs.

[09/08/2025-02:23:08] [I]

&&&& PASSED TensorRT.trtexec [TensorRT v8502] # trtexec --onnx=/home/wqwl/yolo/button.onnx --saveEngine=/home/wqwl/yolo/button000.engine --fp16

Reference articles: Installing TensorRT on Jetson

Installing onnxruntime-gpu on the Jetson series
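The trtexec log above is long, and the FP16 weight warnings (including one weight reported as NaN) are easy to miss. Whether those warnings are related to the missing detections is not established here. The following is a minimal sketch for pulling the warnings and the headline numbers out of a saved log. The file name `build.log` and the helper name `summarize_trt_log` are hypothetical and were not part of the original run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the weight-conversion warnings and the headline
# performance numbers from a saved trtexec log.
# Assumes the output was captured first, for example:
#   trtexec --onnx=button.onnx --saveEngine=button000.engine --fp16 2>&1 | tee build.log
summarize_trt_log() {
    # Lines such as "- 1 weights are affected by this issue: Detected NaN values ..."
    grep -E 'weights are affected by this issue' "$1"
    # The throughput line and the GPU compute time summary line
    grep -E '\[I\] (Throughput|GPU Compute Time):' "$1"
}
```

Usage: `summarize_trt_log build.log`. If NaN weights show up, building a second engine without `--fp16` and comparing its detections is one way to check whether reduced precision plays a role.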

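Controlling the Jetson remotely over VNC: after a reboot no monitor is detected, so the desktop falls back to a very low default resolution that is unusable. Change the resolution from a terminal: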

xrandr --fb 1280x720

The higher the resolution, the higher the VNC latency, so setting it too high is not recommended.
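When the command is run over SSH or from a script instead of a terminal inside the VNC session, `xrandr` needs to know which X display to use. This is a minimal sketch that checks the size argument before calling `xrandr --fb`. The helper name `set_fb` is hypothetical, and the fallback display `:0` is an assumption (check with `echo $DISPLAY` inside the session).

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a WIDTHxHEIGHT string, then set the framebuffer size.
set_fb() {
    # Reject anything that is not of the form <digits>x<digits>, e.g. 1280x720
    if ! printf '%s\n' "$1" | grep -Eq '^[0-9]+x[0-9]+$'; then
        echo "usage: set_fb WIDTHxHEIGHT (e.g. 1280x720)" >&2
        return 1
    fi
    # DISPLAY=:0 is an assumed fallback; an already-set DISPLAY is kept
    DISPLAY="${DISPLAY:-:0}" xrandr --fb "$1"
}
```

Usage: `set_fb 1280x720`.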

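Insufficient storage space

Fix: delete unneeded files.

This is the Linux "low disk space" warning. It reports that only 134.7 MB of disk space remain on the computer and suggests freeing space by removing unused programs or files.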
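Before deleting anything, it helps to see how much space is left and where it went. This is a minimal sketch using standard tools. The helper names `free_mb` and `largest_dirs` and the 1024 MiB threshold are illustrative assumptions, and the cleanup commands in the comments are common suggestions, not steps the original notes confirm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the free space (in MiB) on the filesystem holding a path.
free_mb() {
    # df -Pm: single-line POSIX format, 1 MiB blocks; column 4 is "Available"
    df -Pm "${1:-/}" | awk 'NR == 2 { print $4 }'
}

# Hypothetical helper: list the ten largest entries directly under a directory.
largest_dirs() {
    du -h --max-depth=1 "${1:-$HOME}" 2>/dev/null | sort -hr | head -n 10
}

# Warn when the root filesystem drops below an arbitrary example threshold
if [ "$(free_mb /)" -lt 1024 ]; then
    echo "Low disk space: $(free_mb /) MiB left on /"
fi

# Common ways to reclaim space on Ubuntu-based images (run manually):
#   sudo apt-get clean        # remove cached .deb packages
#   sudo apt-get autoremove   # remove packages that are no longer needed
```

Usage: run `largest_dirs "$HOME"` to find candidates for deletion, then check `free_mb /` again afterwards.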