[LLM] FastMCP v2: Making Model Interactions Smarter

🔎 Hi everyone, I'm Sonhhxg_柒. I hope this post is useful to you; corrections are welcome, and let's learn together.

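AI is moving fast, and to get past the limits of their training data, large language models (LLMs) need straightforward access to external data and functionality. The Model Context Protocol (MCP) was created for this purpose and is often described as "the USB-C port for AI". MCP gives LLMs a standardized, secure way to connect to servers that expose resources, tools, and prompts.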

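You can think of it as an API designed specifically for LLM interactions. It lets servers:

- Expose data through resources (similar to GET endpoints that load information into the LLM's context).
- Provide functionality through tools (similar to POST endpoints that execute code or produce side effects).
- Define interaction patterns through prompts (reusable templates for consistent LLM responses).
- And more!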

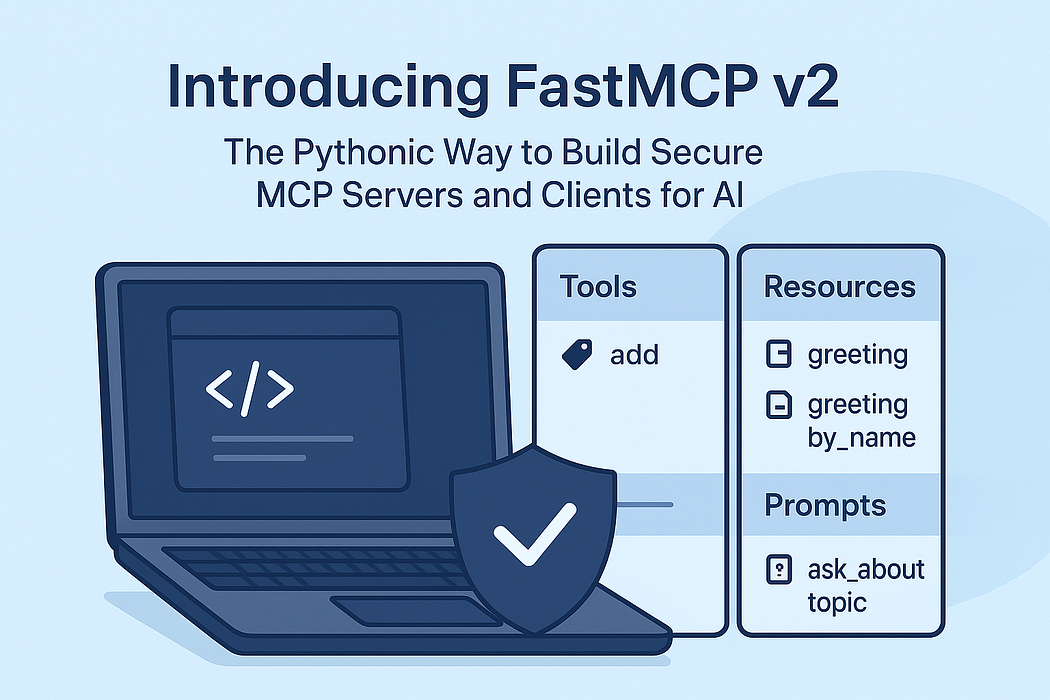

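FastMCP v2 is a high-level Python library that makes it easy to build, manage, and interact with MCP servers. It aims to be:

- 🚀 Fast: minimal code for rapid development.
- 🍀 Simple: build servers with very little boilerplate.
- 🐍 Pythonic: feels natural to Python developers.
- 🔍 Complete: covers everything from development to production.

In this article we walk through the features of FastMCP v2 with code examples and their output. Whether you are integrating an LLM with a database, an API, or custom logic, FastMCP has a way to do it.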

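I. Getting Started: Basic FastMCP Features

FastMCP stands out for its simplicity: you can have an MCP server running within minutes. Below is a basic example that creates a server with a tool, resources, and prompts.

1. Dependencies

- Python 3.12
- fastmcp>=2.11.3

2. Server Setup (mcp_server.py)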

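    from fastmcp import FastMCP
    from fastmcp.prompts.prompt import PromptMessage, TextContent

    # Create a basic server instance
    mcp = FastMCP(name="MyMCPServer")


    # Tool
    @mcp.tool
    def add(a: int, b: int) -> int:
        """Add two numbers."""
        return a + b


    # Resource
    @mcp.resource("resource://greeting")
    def get_greeting() -> str:
        """Provide a simple greeting."""
        return "Hello from FastMCP Resources!"


    # Resource template
    @mcp.resource("data://{name}/greeting")
    def get_greeting_by_name(name: str) -> str:
        """Provide a greeting for a specific name."""
        return f"hello, {name} from FastMCP Resources!"


    # Basic prompt returning a string (automatically converted to a user message)
    @mcp.prompt
    def ask_about_topic(topic: str) -> str:
        """Generate a user message asking for an explanation of a topic."""
        # The text reads: "Can you explain '{topic}'?"
        return f"你能解释一下 '{topic}'?"


    # Prompt returning a specific message type
    @mcp.prompt
    def generate_code_request(language: str, task_description: str) -> PromptMessage:
        """Generate a user message requesting code generation."""
        # The text reads: "Answer in {language} with a function that performs this task: ..."
        content = f"用 {language}进行回答, 执行以下任务的函数:{task_description}"
        return PromptMessage(role="user", content=TextContent(type="text", text=content))


    if __name__ == "__main__":
        # Start the server over STDIO
        # mcp.run()

        # Start the server over HTTP on port 8000
        mcp.run(transport="http", host="127.0.0.1", port=8000)

- Tools: expose Python functions for the LLM to call, such as add for simple calculations.
- Resources: static or dynamic data sources, such as greetings that can be parameterized.
- Prompts: reusable templates that guide LLM responses.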

3. Client Interaction (mcp_client.py)

Now let's use FastMCP's client to interact with this server.

    from fastmcp import Client

    # Connect to a local server (STDIO)
    # client = Client("mcp_server.py")

    # Connect to an HTTP server
    client = Client("http://127.0.0.1:8000/mcp")


    async def call_mcp():
        async with client:
            # Basic server interaction
            await client.ping()

            # List the available tools, resources, resource templates, and prompts
            tools = await client.list_tools()
            resources = await client.list_resources()
            resource_templates = await client.list_resource_templates()
            prompts = await client.list_prompts()

            print("\n===============================Tools============================================\n")
            print("\n".join([tool.name for tool in tools]))
            print("\n===============================Resources========================================\n")
            print("\n".join([resource.name for resource in resources]))
            print("\n===============================Resource Templates===============================\n")
            print("\n".join([resource_template.name for resource_template in resource_templates]))
            print("\n===============================Prompts==========================================\n")
            print("\n".join([prompt.name for prompt in prompts]))

            # Call a tool
            result = await client.call_tool("add", {"a": 1, "b": 2})
            print("\n===============================Tool Result=======================================\n")
            print(result.content[0].text)

            # Read a resource
            result = await client.read_resource("resource://greeting")
            print("\n===============================Resource Result=================================\n")
            print(result[0].text)

            # Get a prompt
            messages = await client.get_prompt("ask_about_topic", {"topic": "AI"})
            print("\n===============================Prompt Result===================================\n")
            print(messages.messages[0].content.text)


    if __name__ == "__main__":
        import asyncio

        asyncio.run(call_mcp())

Output:

    ===============================Tools============================================

    add

    ===============================Resources========================================

    get_greeting

    ===============================Resource Templates===============================

    get_greeting_by_name

    ===============================Prompts==========================================

    ask_about_topic
    generate_code_request

    ===============================Tool Result=======================================

    3

    ===============================Resource Result=================================

    Hello from FastMCP Resources!

    ===============================Prompt Result===================================

    你能解释一下 'AI'?

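This shows how easy it is to list, call, and interact with server components. FastMCP handles the underlying protocol, which makes it a good fit for rapid prototyping.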

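II. Going Deeper: Advanced Features

FastMCP v2 introduces context-aware features that enable more interactive and dynamic LLM experiences.

Context object: Context provides access to logging, progress reporting, resource reading, LLM sampling, user elicitation, and more. To use it, add a parameter type-hinted as Context to your function and FastMCP injects it.

User elicitation: request structured user input while a tool is running, for example in interactive workflows.

Server logging: send log messages (debug, info, warning, error) back to the client. Example: await ctx.info(f"Processing {len(data)} items")

Progress reporting: update the client on long-running tasks: await ctx.report_progress(progress=i, total=total)

LLM sampling: use the client's LLM to generate text from inside a tool.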

1. Advanced Server Setup (advanced_mcp_features_server.py)

    from fastmcp import FastMCP, Context
    from fastmcp.server.dependencies import get_context
    from dataclasses import dataclass
    import asyncio

    # Create a basic server instance
    mcp = FastMCP(name="MyAdvancedMCPServer")


    # -----------------------Context-------------------------------
    @mcp.tool
    async def process_file(file_uri: str, ctx: Context) -> str:
        """Process a file, using the context for logging and resource access."""
        # Context is available as the ctx parameter
        return "Processed file"


    # Utility function that needs the context but does not receive it as a parameter
    async def process_data(data: list[float]) -> None:
        # Get the active context; only valid when called within a request
        ctx = get_context()
        await ctx.info(f"Processing {len(data)} data points")


    @mcp.tool
    async def analyze_dataset(dataset_name: str) -> dict:
        # Call utility function that uses context internally
        # load data from file
        # data = load_data(dataset_name)
        data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
        await process_data(data)
        return {"processed": len(data), "results": data}


    # -----------------------Elicitation---------------------------
    @dataclass
    class UserInfo:
        name: str
        age: int


    @mcp.tool
    async def collect_user_info(ctx: Context) -> str:
        """Collect user information through an interactive prompt."""
        result = await ctx.elicit(
            message="Please provide your information",
            response_type=UserInfo
        )
        if result.action == "accept":
            user = result.data
            return f"Hello {user.name}, you are {user.age} years old"
        elif result.action == "decline":
            return "Information not provided"
        else:  # cancel
            return "Operation cancelled"


    # ----------------------Progress Reporting-------------------------------
    @mcp.tool
    async def process_items(items: list[str], ctx: Context) -> dict:
        """Process a list of items with progress updates."""
        total = len(items)
        results = []
        for i, item in enumerate(items):
            # Report progress as we process each item
            await ctx.report_progress(progress=i, total=total)
            # Simulate processing time
            await asyncio.sleep(1)
            results.append(item.upper())
        # Report 100% completion
        await ctx.report_progress(progress=total, total=total)
        return {"processed": len(results), "results": results}


    # ----------------------LLM Sampling---------------------------
    @mcp.tool
    async def analyze_sentiment(text: str, ctx: Context) -> dict:
        """Analyze the sentiment of a text using the client's LLM."""
        prompt = f"""Analyze the sentiment of the following text as positive, negative, or neutral.
        Just output a single word - 'positive', 'negative', or 'neutral'.

        Text to analyze: {text}"""

        # Request LLM analysis
        response = await ctx.sample(prompt)

        # from fastmcp.client.sampling import SamplingMessage
        # messages = [
        #     SamplingMessage(role="user", content=f"I have this data: {context_data}"),
        #     SamplingMessage(role="assistant", content="I can see your data. What would you like me to analyze?"),
        #     SamplingMessage(role="user", content=user_query)
        # ]
        # response = await ctx.sample(
        #     messages=messages,
        #     system_prompt="You are an expert Python programmer. Provide concise, working code examples without explanations.",
        #     model_preferences="claude-3-sonnet",  # Prefer a specific model
        #     include_context="thisServer",  # Use the server's context
        #     temperature=0.7,
        #     max_tokens=300
        # )

        # Process the LLM's response
        sentiment = response.text.strip().lower()

        # Map to standard sentiment values
        if "positive" in sentiment:
            sentiment = "positive"
        elif "negative" in sentiment:
            sentiment = "negative"
        else:
            sentiment = "neutral"
        return {"text": text, "sentiment": sentiment}


    if __name__ == "__main__":
        # Start server with HTTP on port 8001
        mcp.run(transport="http", host="127.0.0.1", port=8001)

2. Multi-Server Clients and Handlers

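FastMCP supports connecting to multiple servers through a JSON-style configuration. The client can register custom callbacks to handle elicitation, logging, progress, and sampling.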
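With a multi-server configuration, component names are prefixed with the server key from the config: a tool add on my_server is called as my_server_add, and resource://greeting becomes resource://my_server/greeting. The sketch below only reproduces that naming convention with plain string handling so it can be checked in isolation. The helper names are hypothetical, and this is not how FastMCP builds the names internally.

```python
def prefixed_tool_name(server: str, tool: str) -> str:
    """Name under which a tool (or prompt) is reachable via a multi-server client."""
    return f"{server}_{tool}"


def prefixed_resource_uri(server: str, uri: str) -> str:
    """Insert the server key as the first path segment after the URI scheme."""
    scheme, sep, rest = uri.partition("://")
    if not sep:
        raise ValueError(f"not a scheme://path URI: {uri!r}")
    return f"{scheme}://{server}/{rest}"


if __name__ == "__main__":
    print(prefixed_tool_name("my_server", "add"))
    print(prefixed_tool_name("my_advanced_server", "process_items"))
    print(prefixed_resource_uri("my_server", "resource://greeting"))
```

Keeping the convention in mind avoids "unknown tool" errors when moving a client from a single server to a multi-server config.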

3. Multi-Server Client (multiserver_mcp_client.py)

    from fastmcp import Client
    from fastmcp.client.elicitation import ElicitResult
    from fastmcp.client.logging import LogMessage
    from fastmcp.client.sampling import (
        SamplingMessage,
        SamplingParams,
        RequestContext,
    )

    # Local server (STDIO)
    # client = Client("advanced_mcp_features_server.py")

    # HTTP server
    # client = Client("http://127.0.0.1:8000/mcp")

    # JSON configuration (multiple servers)
    # Tools are prefixed with the server name, e.g. server_name_tool_name [my_server_add]
    # Resources are prefixed with the server name, e.g. resource://server_name/resource_name [resource://my_server/greeting]
    config = {
        "mcpServers": {
            "my_advanced_server": {
                "url": "http://127.0.0.1:8001/mcp",
                "transport": "http"
            },
            "my_server": {
                "command": "python",
                "args": ["./mcp_server.py"],
                "env": {"LOG_LEVEL": "INFO"}
            }
        }
    }


    async def elicitation_handler(message: str, response_type: type, params, context):
        # Show the message to the user and collect input
        print(message)
        name = input("输入姓名: ")  # "Enter name: "
        age = input("输入年龄: ")  # "Enter age: "

        if name == "" or age == "":
            return ElicitResult(action="decline")

        # Create the response using the provided dataclass type
        # FastMCP converts the JSON schema into this Python type for you
        # UserInfo.age is an int, so convert the typed-in string
        response_data = response_type(name=name, age=int(age))

        # You can return the data directly; FastMCP implicitly accepts the elicitation
        return response_data

        # Or return an ElicitResult explicitly for more control
        # return ElicitResult(action="accept", content=response_data)


    async def log_handler(message: LogMessage):
        """Handle incoming logs from the MCP server and forward them
        to the standard Python logging system."""
        msg = message.data.get('msg')
        extra = message.data.get('extra')
        level = message.level
        print("======Log Data from handler======")
        print(msg)
        print(extra)
        print(level)
        print("=================================")

        # Convert the MCP log level to a Python log level
        # level = LOGGING_LEVEL_MAP.get(message.level.upper(), logging.INFO)

        # Log the message with the standard logging library
        # logger.log(level, msg, extra=extra)


    async def progress_handler(
        progress: float,
        total: float | None,
        message: str | None
    ) -> None:
        if total is not None:
            percentage = (progress / total) * 100
            print(f"Progress: {percentage:.1f}% - {message or ''}")
        else:
            print(f"Progress: {progress} - {message or ''}")


    async def sampling_handler(
        messages: list[SamplingMessage],
        params: SamplingParams,
        context: RequestContext
    ) -> str:
        print("======从处理程序中采样数据======")  # "Sampling data from handler"
        print(messages[0].content.text)
        print("=================================")

        # Your LLM integration logic goes here
        # Extract the text from the messages and generate a response
        return "neutral"


    client = Client(
        config,
        elicitation_handler=elicitation_handler,
        log_handler=log_handler,
        progress_handler=progress_handler,
        sampling_handler=sampling_handler
    )


    async def call_mcp():
        async with client:
            # Basic server interaction
            await client.ping()

            # List available operations
            tools = await client.list_tools()
            resources = await client.list_resources()
            resource_templates = await client.list_resource_templates()
            prompts = await client.list_prompts()

            print("\n===============================Tools======================================================\n")
            print("\n".join([tool.name for tool in tools]))
            print("\n===============================Resources==================================================\n")
            print("\n".join([resource.name for resource in resources]))
            print("\n===============================Resource Templates==========================================\n")
            print("\n".join([resource_template.name for resource_template in resource_templates]))
            print("\n===============================Prompts=====================================================\n")
            print("\n".join([prompt.name for prompt in prompts]))

            # Call a tool
            result = await client.call_tool("my_server_add", {"a": 1, "b": 2})
            print("\n===============================Tool Result===================================================\n")
            print(result.content[0].text)

            # Read a resource
            result = await client.read_resource("resource://my_server/greeting")
            print("\n===============================Resource Result===============================================\n")
            print(result[0].text)

            # Get a prompt
            messages = await client.get_prompt("my_server_ask_about_topic", {"topic": "AI"})
            print("\n===============================Prompt Result==================================================\n")
            print(messages.messages[0].content.text)

            # Call the advanced server tool that uses the context
            result = await client.call_tool("my_advanced_server_process_file", {"file_uri": "file://test.txt"})
            print("\n===============================Advanced Server Tool with context Result============================\n")
            print(result.content[0].text)

            # Call the advanced server tool that uses elicitation
            print("\n===============================Advanced Server Tool with elicitation Result===============================\n")
            result = await client.call_tool("my_advanced_server_collect_user_info")
            print(result.content[0].text)

            # Call the advanced server tool that uses logging
            print("\n===============================Advanced Server Tool with logging Result===============================\n")
            result = await client.call_tool("my_advanced_server_analyze_dataset", {"dataset_name": "test.txt"})
            print(result.content[0].text)

            # Call the advanced server tool that reports progress
            print("\n===============================Advanced Server Tool with progress reporting Result===============================\n")
            result = await client.call_tool("my_advanced_server_process_items", {"items": ["item1", "item2", "item3"]})
            print(result.content[0].text)

            # Call the advanced server tool that uses LLM sampling
            print("\n===============================Advanced Server Tool with LLM sampling Result===============================\n")
            result = await client.call_tool("my_advanced_server_analyze_sentiment", {"text": "AI is the future of technology"})
            print(result.content[0].text)


    if __name__ == "__main__":
        import asyncio

        asyncio.run(call_mcp())

Output:

    ===============================Tools======================================================

    my_advanced_server_process_file
    my_advanced_server_analyze_dataset
    my_advanced_server_collect_user_info
    my_advanced_server_process_items
    my_advanced_server_analyze_sentiment
    my_server_add

    ===============================Resources==================================================

    my_server_get_greeting

    ===============================Resource Templates==========================================

    my_server_get_greeting_by_name

    ===============================Prompts=====================================================

    my_server_ask_about_topic
    my_server_generate_code_request

    ===============================Tool Result===================================================

    3

    ===============================Resource Result===============================================

    Hello from FastMCP Resources!

    ===============================Prompt Result==================================================

    你能解释一下 'AI'?

    ===============================Advanced Server Tool with context Result============================

    Processed file

    ===============================Advanced Server Tool with elicitation Result===============================

    Please provide your information
    输入姓名: Sonhhxg
    输入年龄: 26
    Hello Sonhhxg, you are 26 years old

    ===============================Advanced Server Tool with logging Result===============================

    ======Log Data from handler======
    Processing 10 data points
    None
    info
    =================================
    {"processed":10,"results":[1,2,3,4,5,6,7,8,9,10]}

    ===============================Advanced Server Tool with progress reporting Result===============================

    Progress: 0.0% -
    Progress: 33.3% -
    Progress: 66.7% -
    Progress: 100.0% -
    {"processed":3,"results":["ITEM1","ITEM2","ITEM3"]}

    ===============================Advanced Server Tool with LLM sampling Result===============================

    ======从处理程序中采样数据======
    Analyze the sentiment of the following text as positive, negative, or neutral.
    Just output a single word - 'positive', 'negative', or 'neutral'.

    Text to analyze: AI is the future of technology
    =================================
    {"text":"AI is the future of technology","sentiment":"neutral"}

III. Security and Advanced Configuration

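FastMCP v2 emphasizes production readiness, with features such as:

- Authentication: secure your server with flexible authentication modes, from simple API keys to full OAuth 2.1 integration with external identity providers.
- Middleware: inspect, modify, and respond to all MCP requests and responses.
- Proxying: act as an intermediary for other MCP servers, or change the transport.
- Tag-based filtering: selectively expose components with include_tags and exclude_tags.
- Custom routes: for HTTP, add endpoints such as health checks with @mcp.custom_route.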

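IV. Why Choose FastMCP v2?

FastMCP gives Python developers an efficient toolkit for quickly building secure MCP servers and clients. Its async-first architecture and broad feature set suit both personal projects and enterprise applications. Give it a try and start integrating AI into your own stack!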