k8s—部署discuz论坛和tomca商城

目录

一、部署discuz论坛和tomcat商城

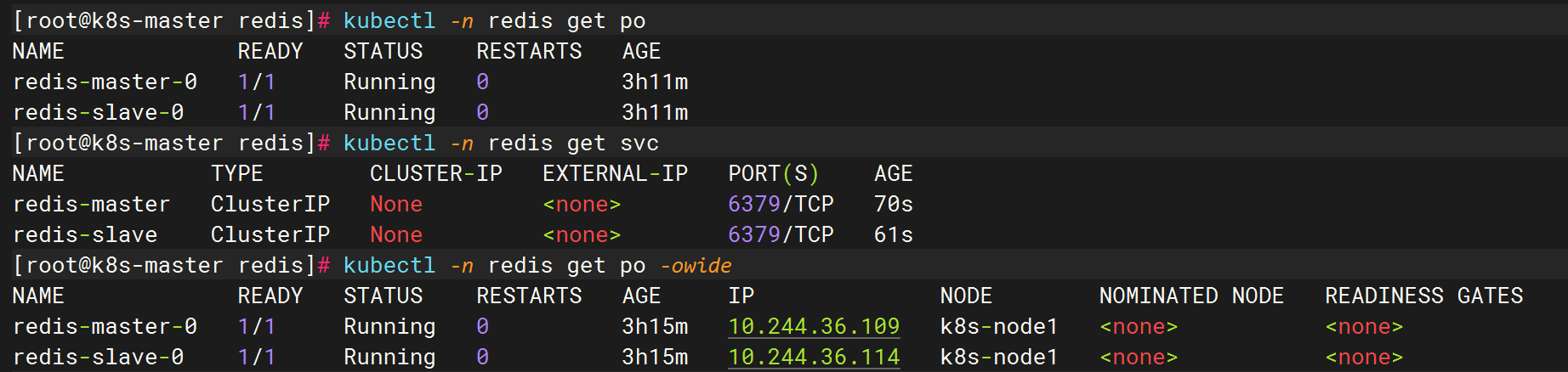

redis

编写redis配置,并启用

编辑

mysql

编写mysql配置,并启用

构建自定义镜像

luo-nginx:latest

配置文件

nginx的配置文件

php-fpm服务服务的配置文件

Dockerfile 文件

生成镜像

从镜像 nginx:v1 中运行容器

启动容器

进入容器

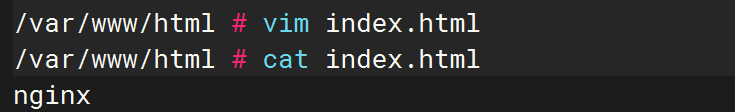

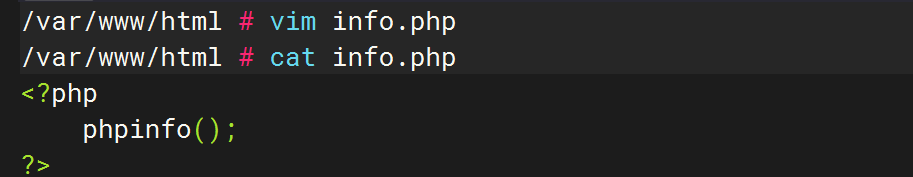

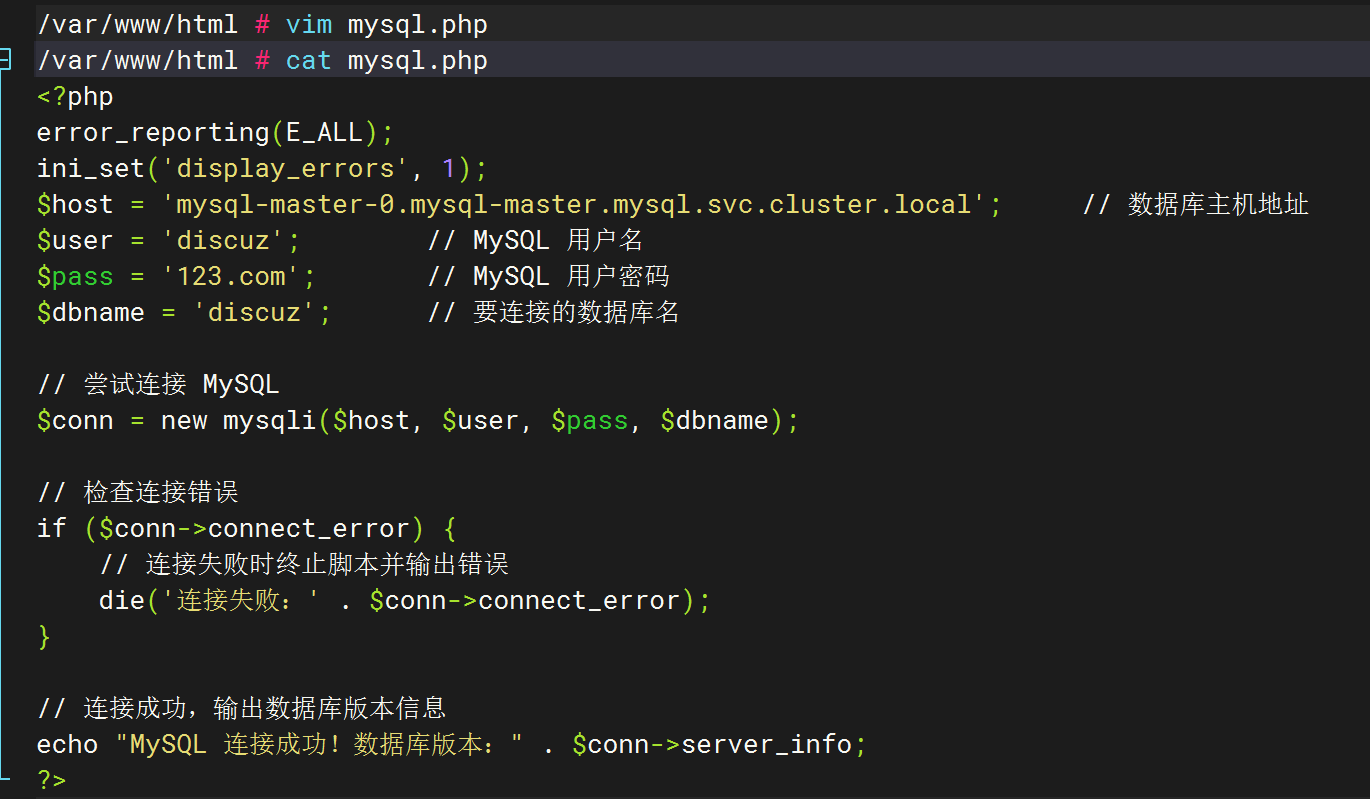

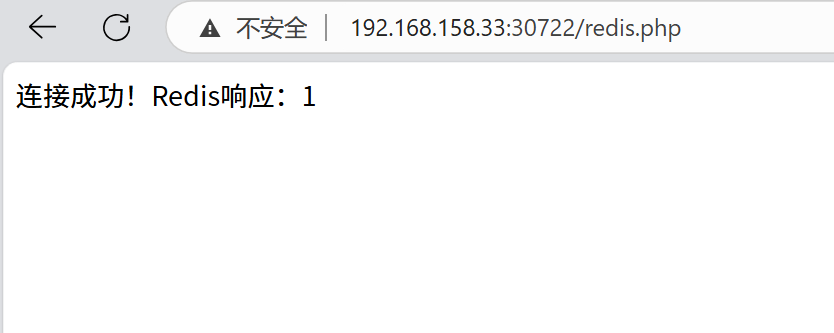

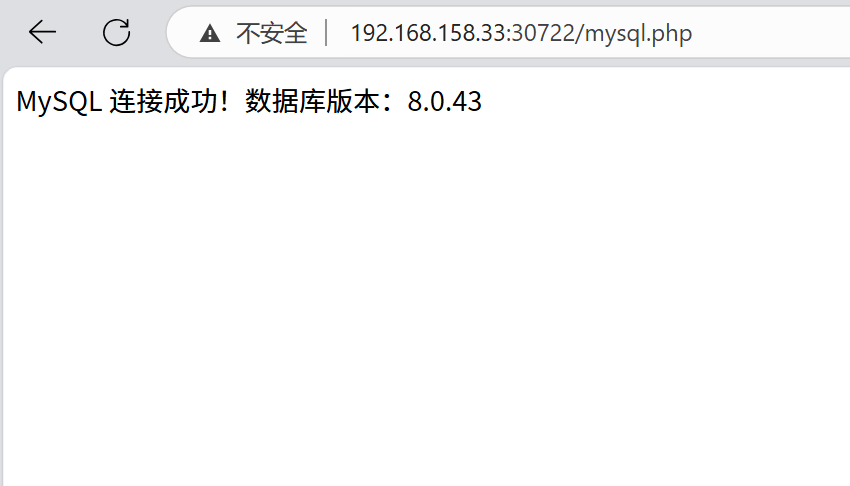

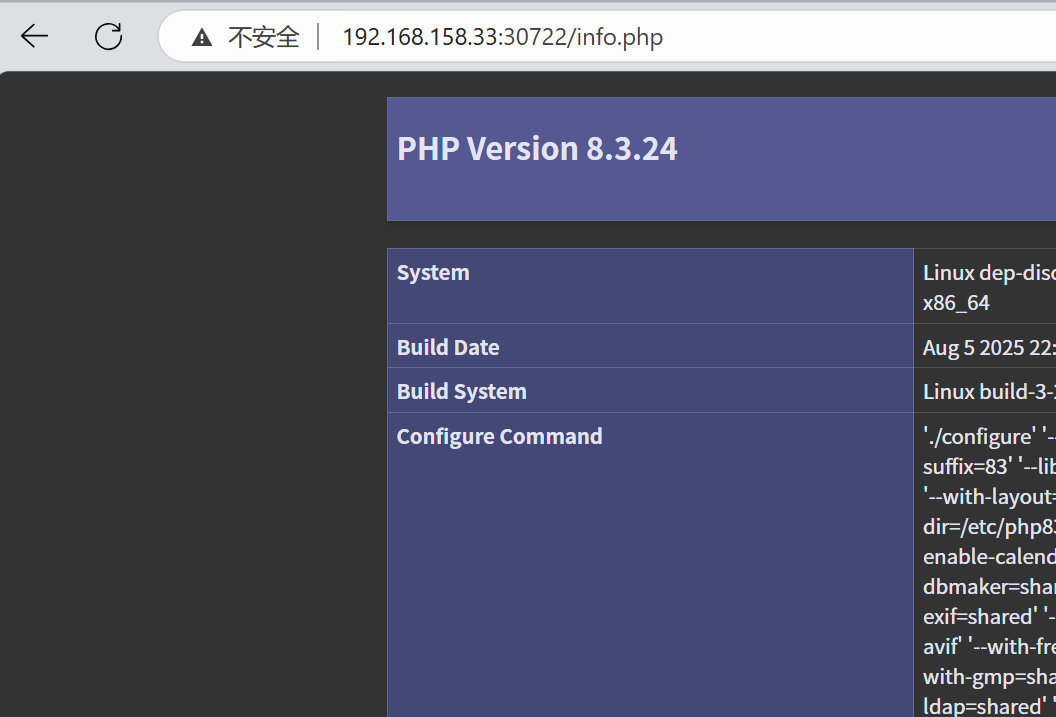

Write test files

index.html info.php mysql.php redis.php

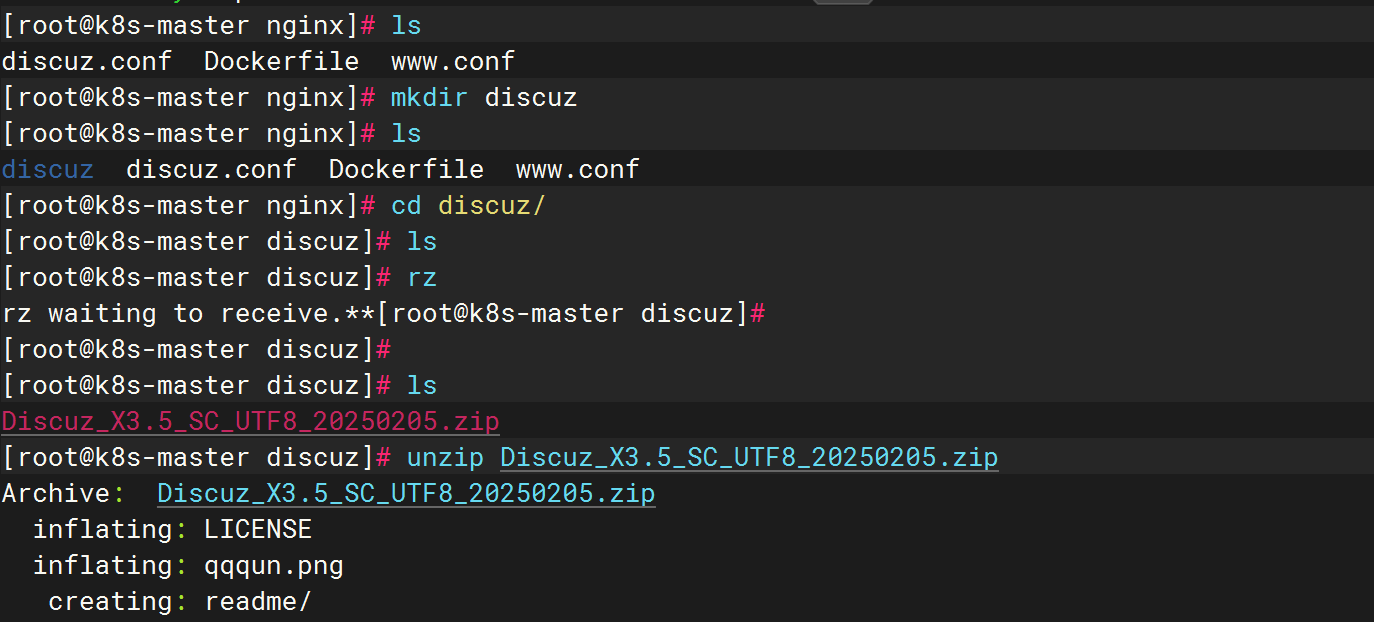

导入discuz文件

创建目录

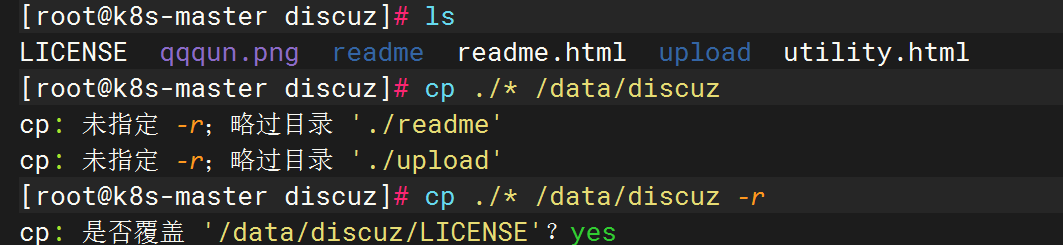

解压

拷贝文件到容器

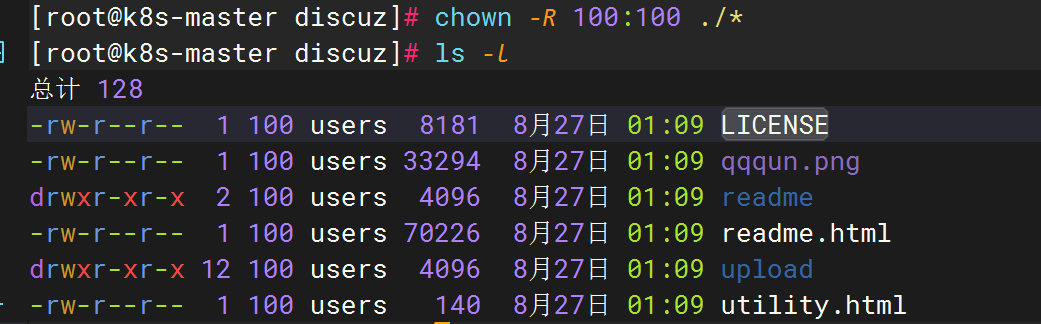

修改文件属性

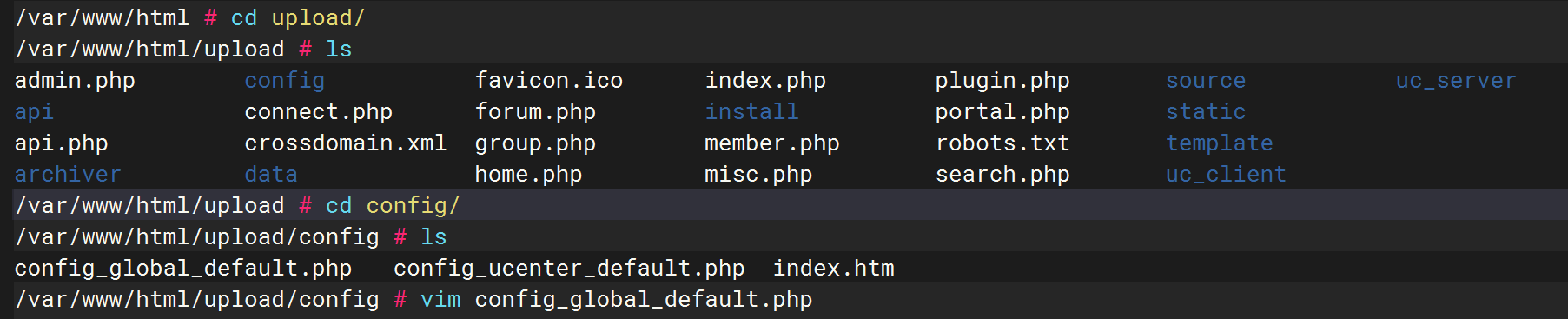

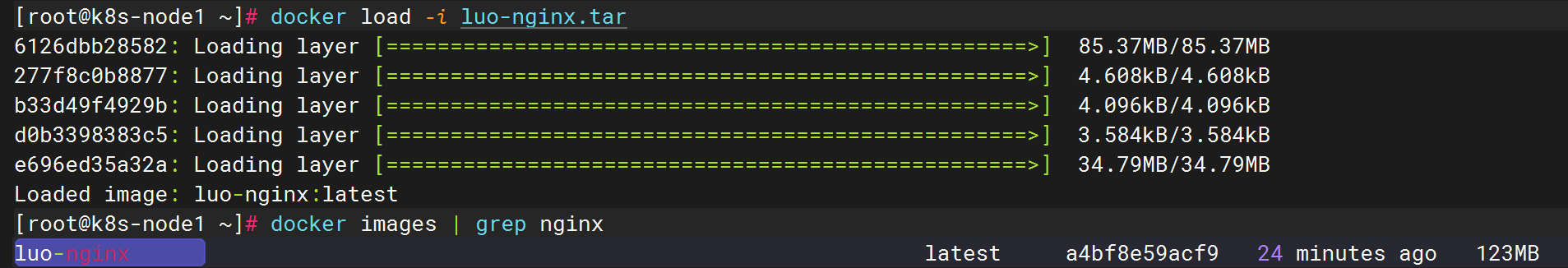

Modify the Discuz default configuration files

Modify config_global_default.php

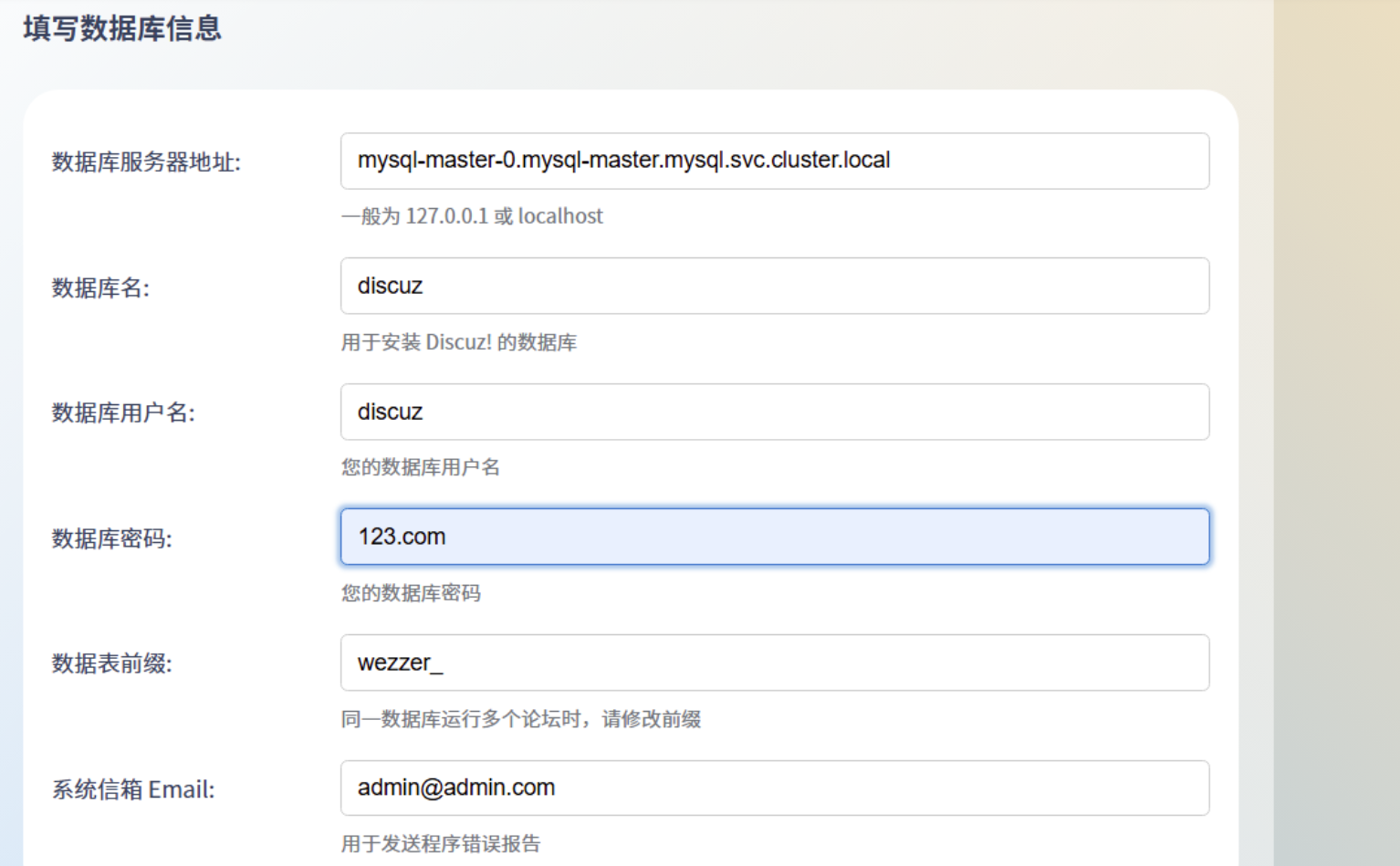

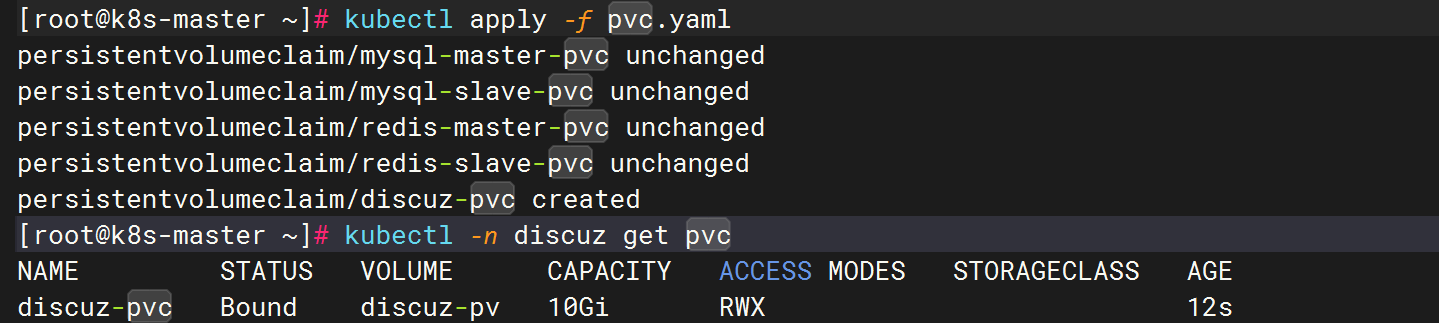

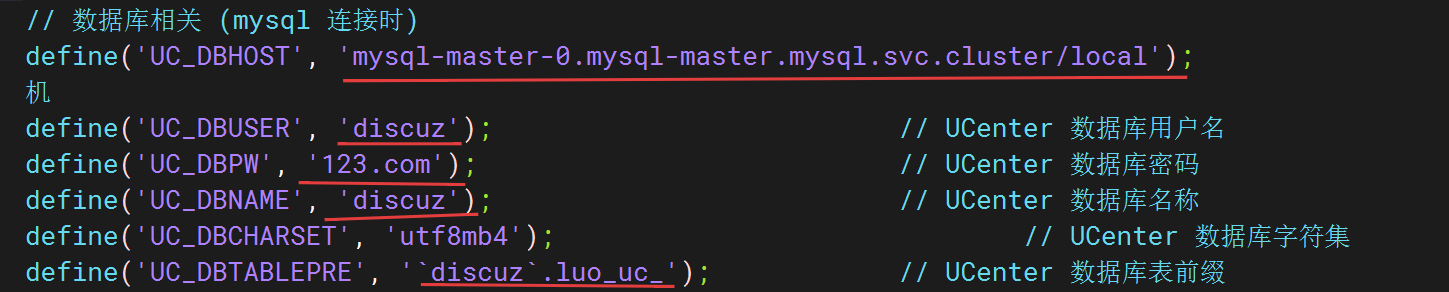

MySQL database settings

Master

Slave

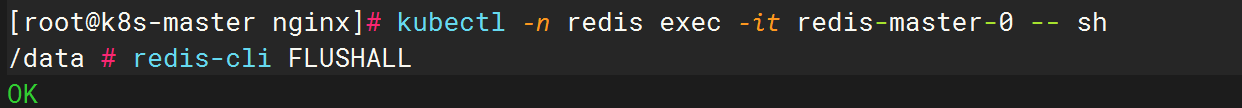

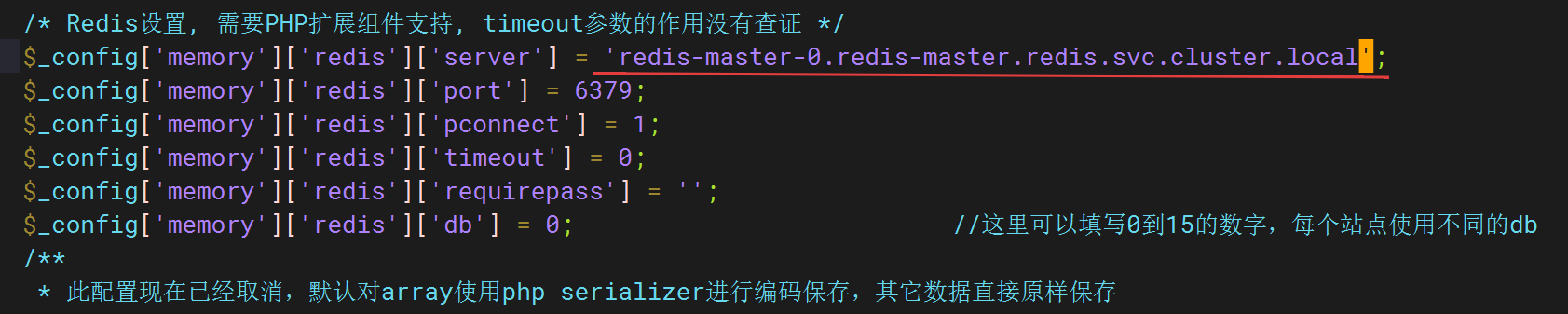

Redis settings

Modify config_ucenter_default.php

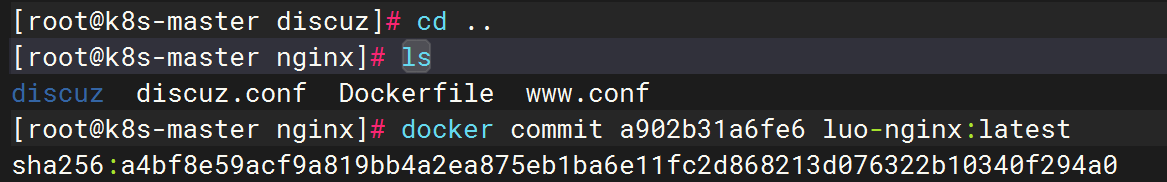

导出镜像

镜像归档为tar包

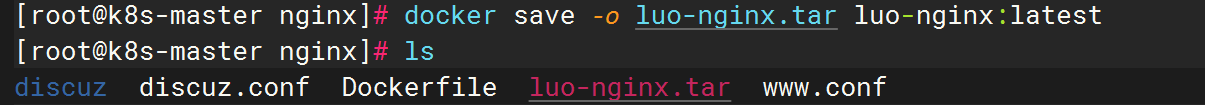

拷贝镜像给node节点

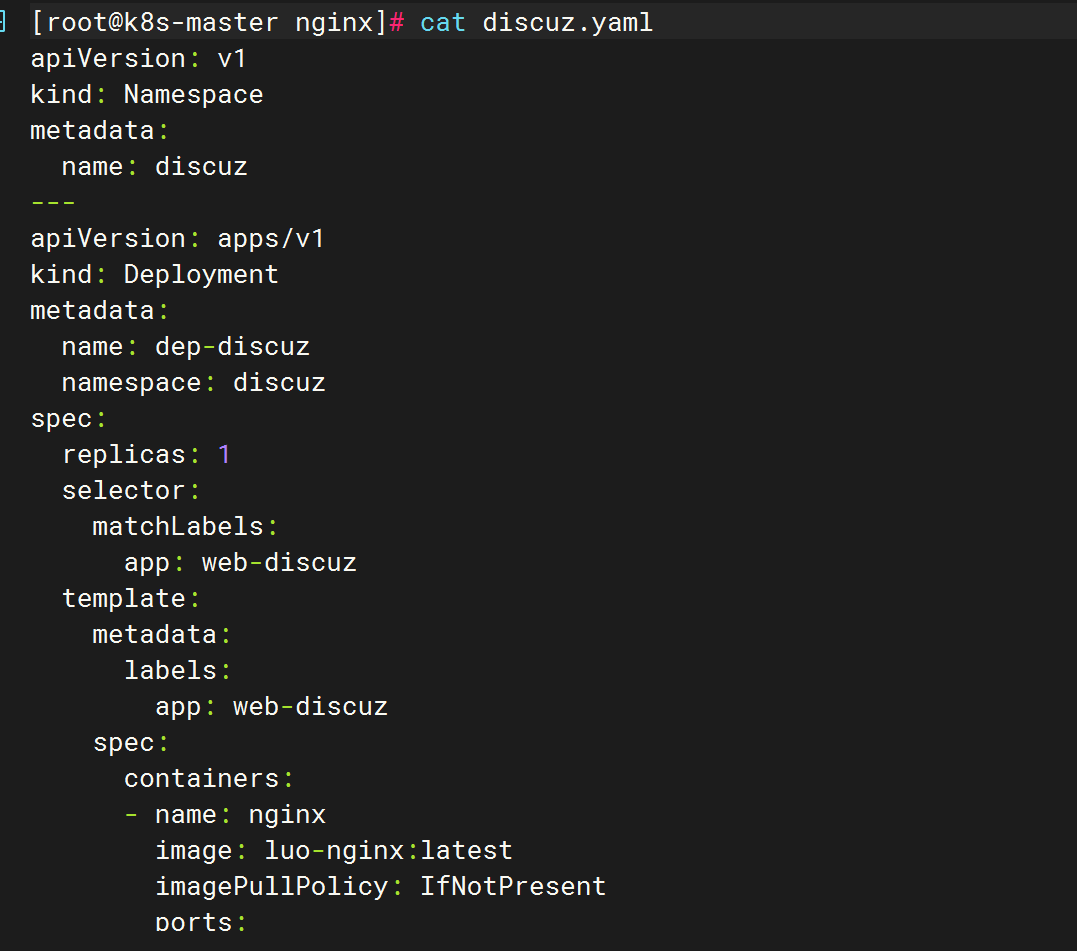

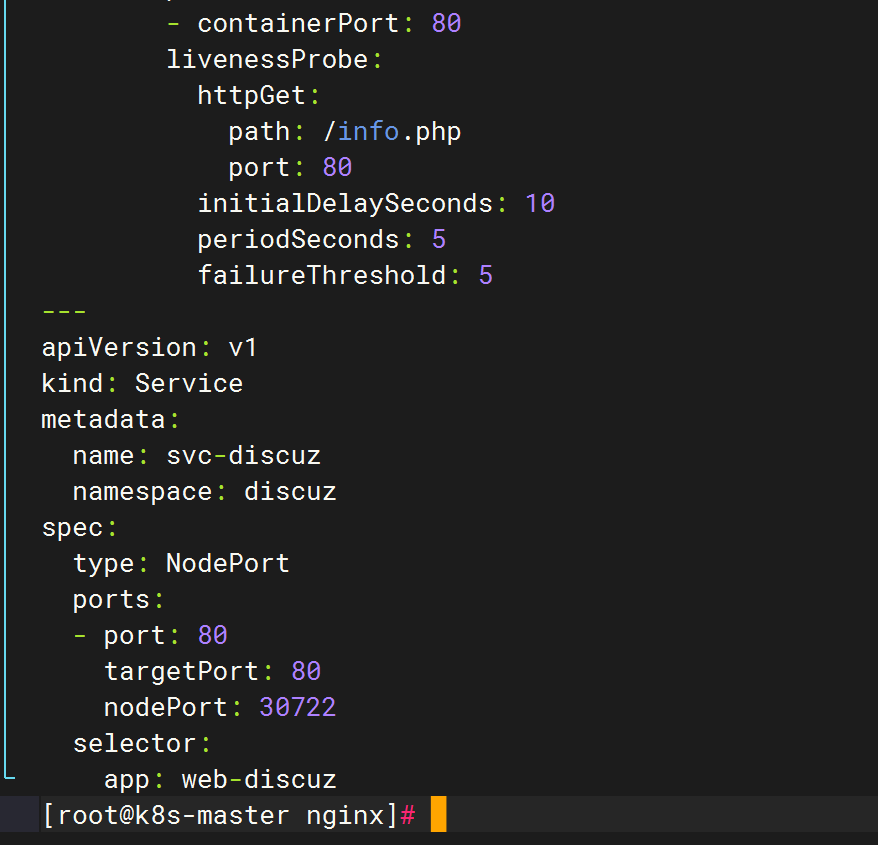

Deploy Discuz

Write the Discuz manifest

Apply the manifest

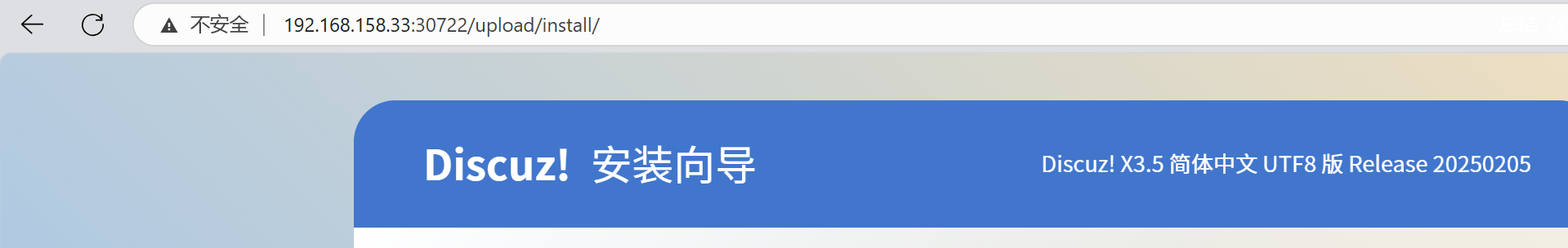

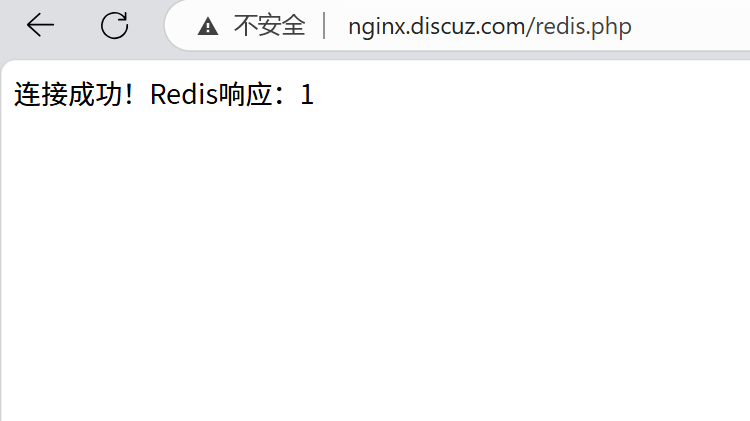

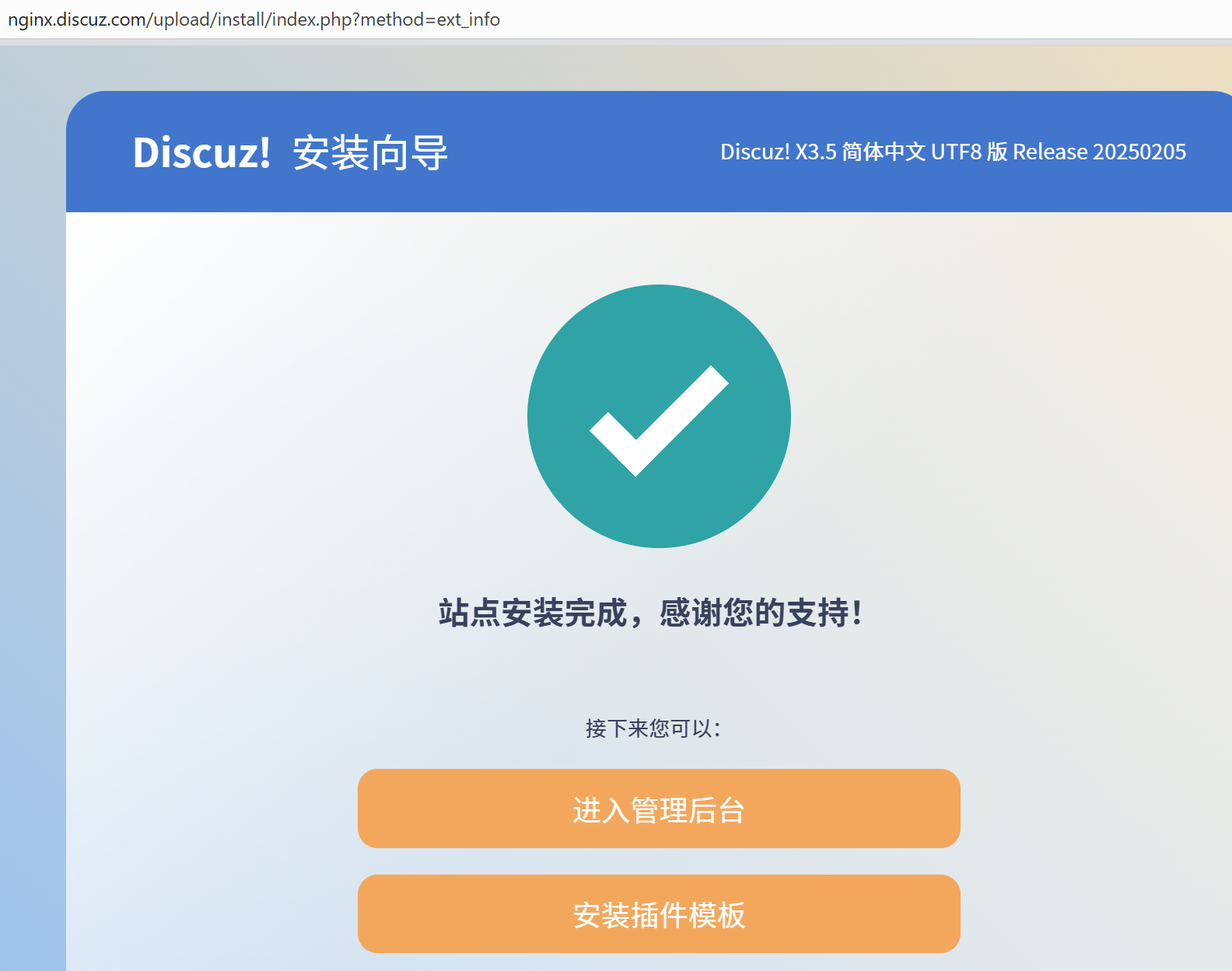

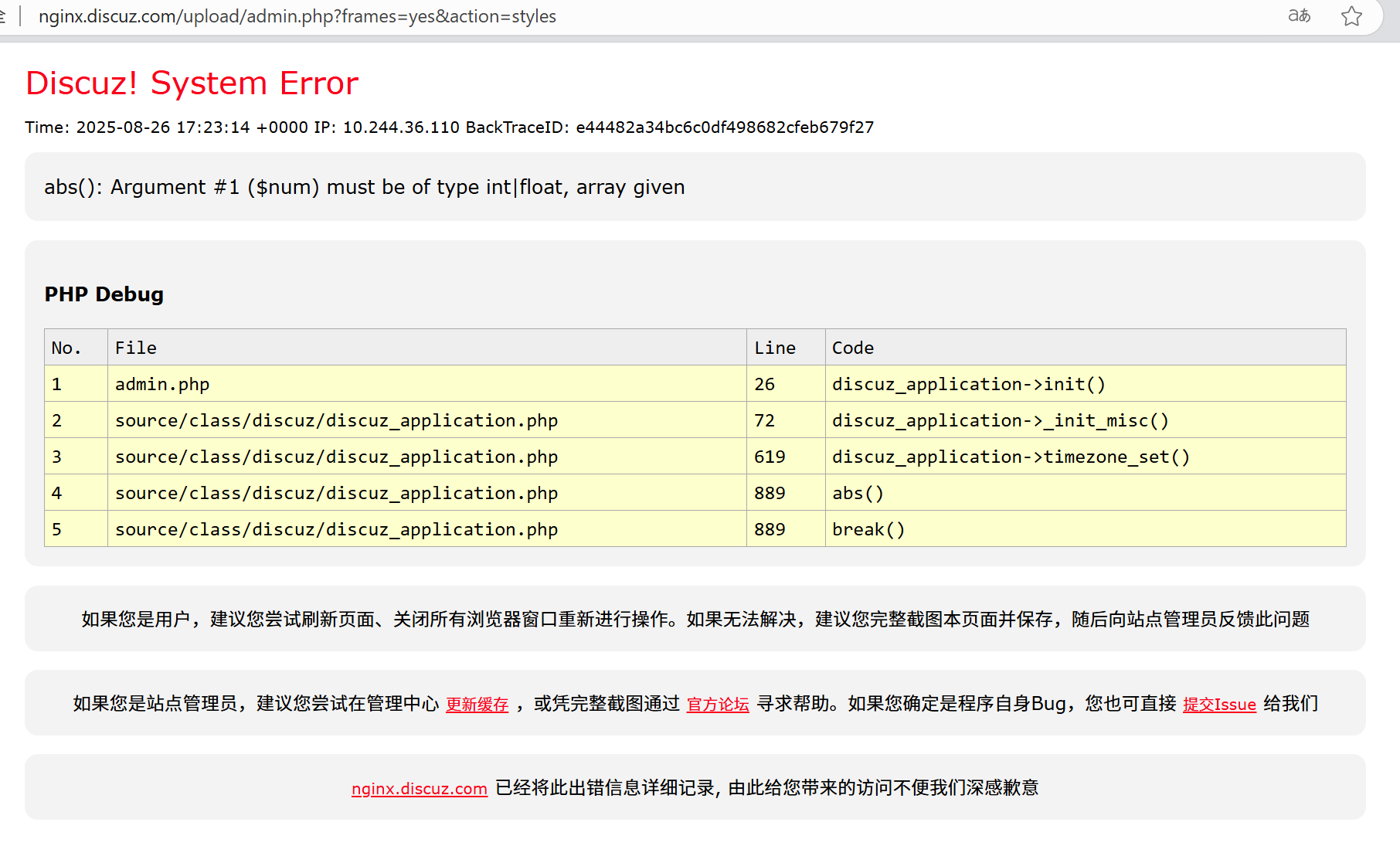

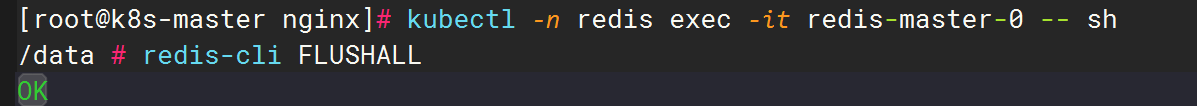

访问

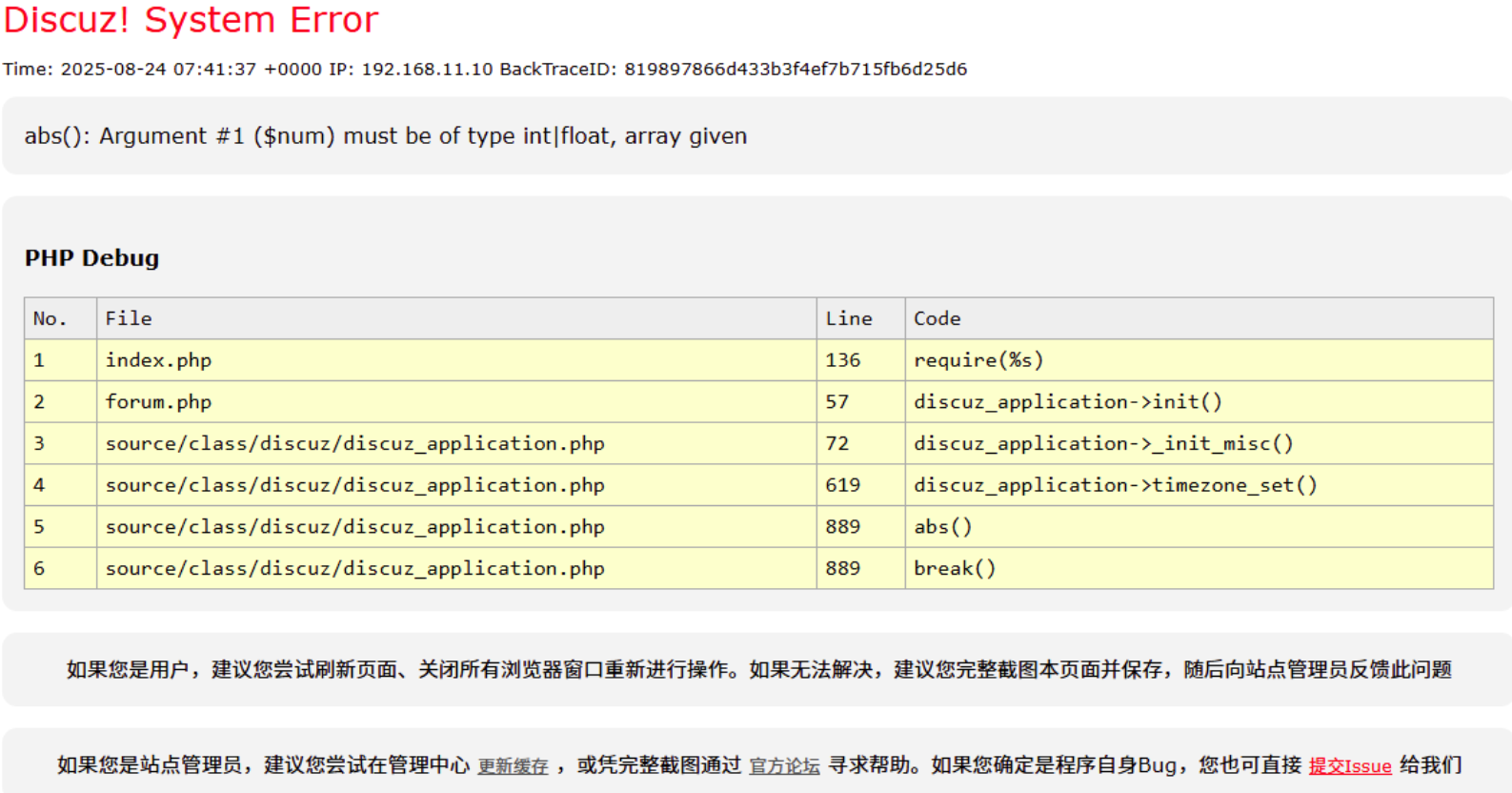

问题

访问成功!

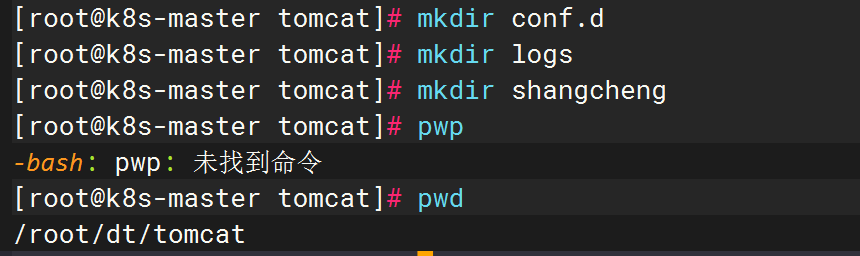

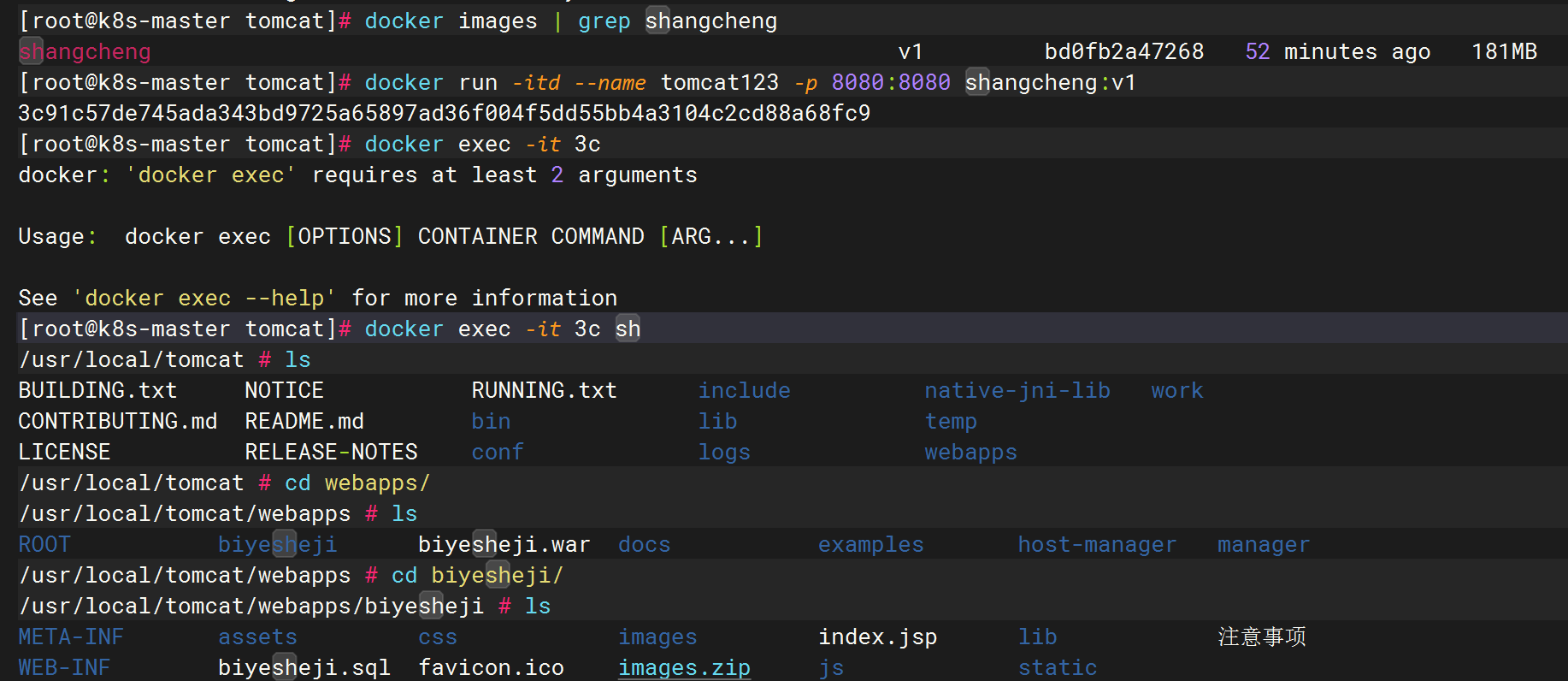

Deploy the Tomcat mall

Custom image

Pull the image

Put the WAR file into the shangcheng directory

Create a test container

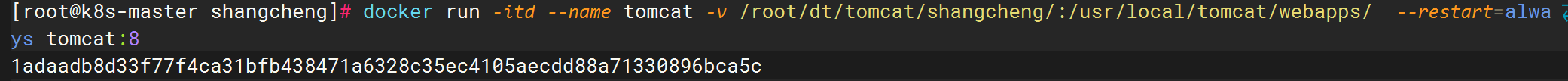

Run a test container to unpack the WAR file

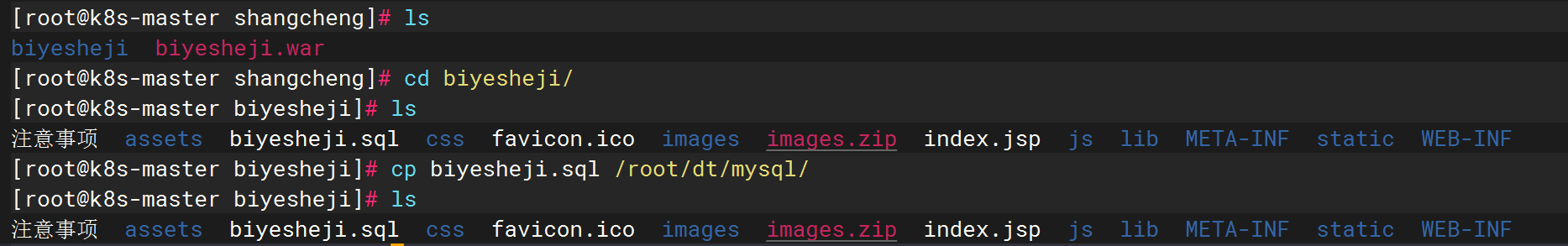

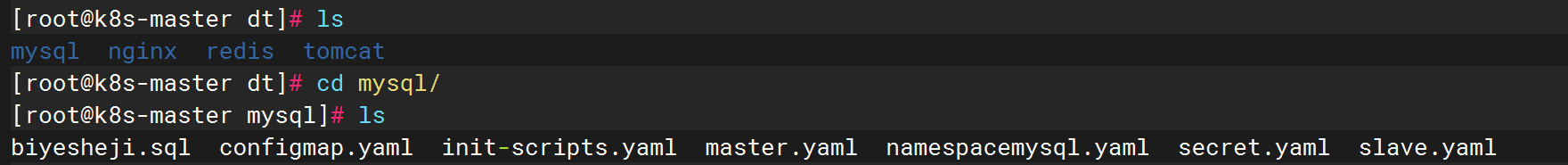

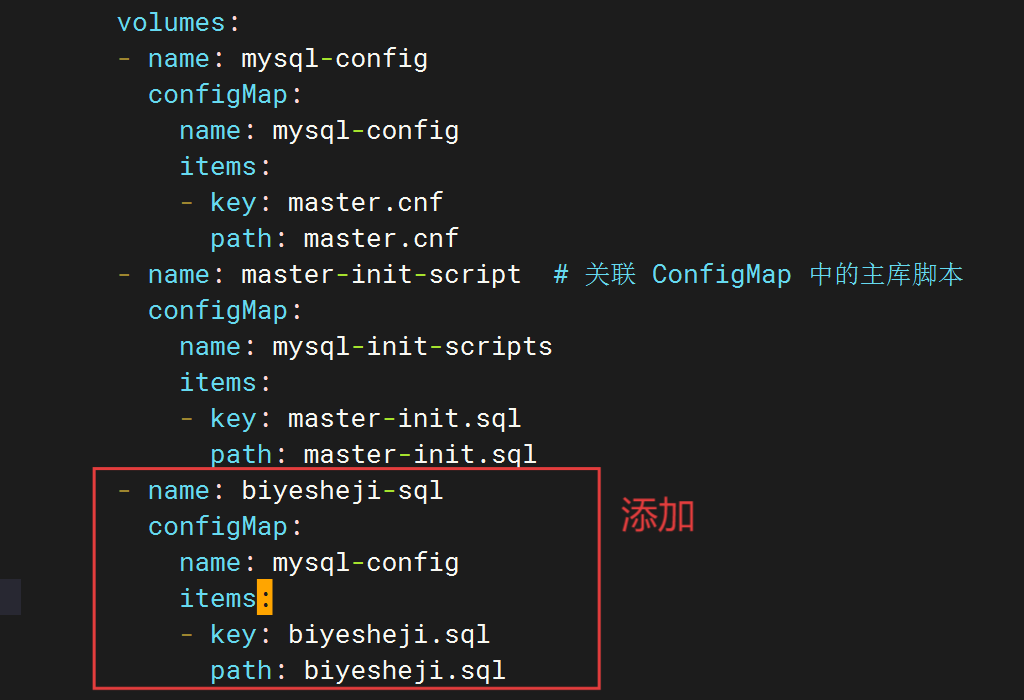

Copy biyesheji.sql to the MySQL directory

Inject the SQL statements

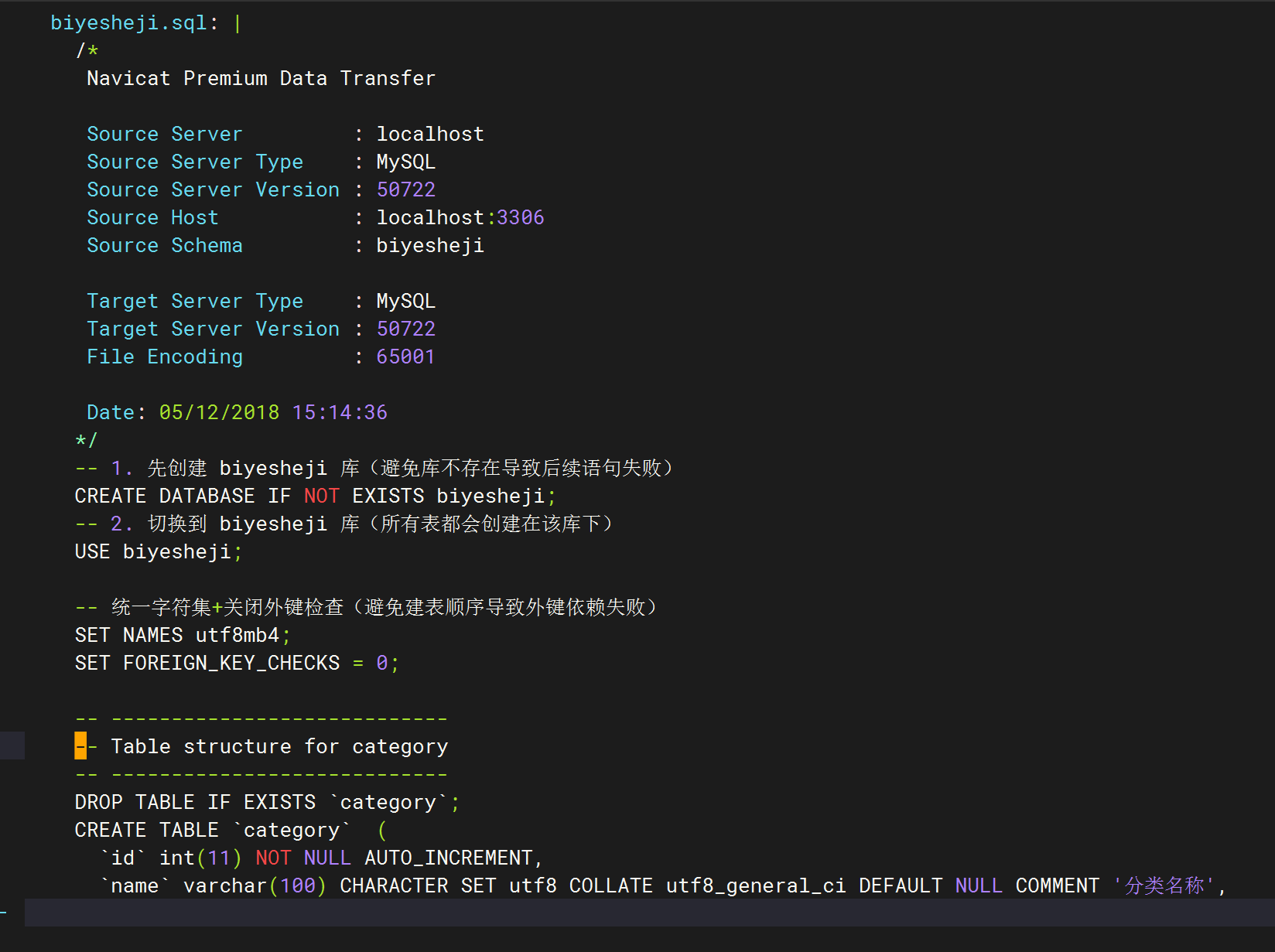

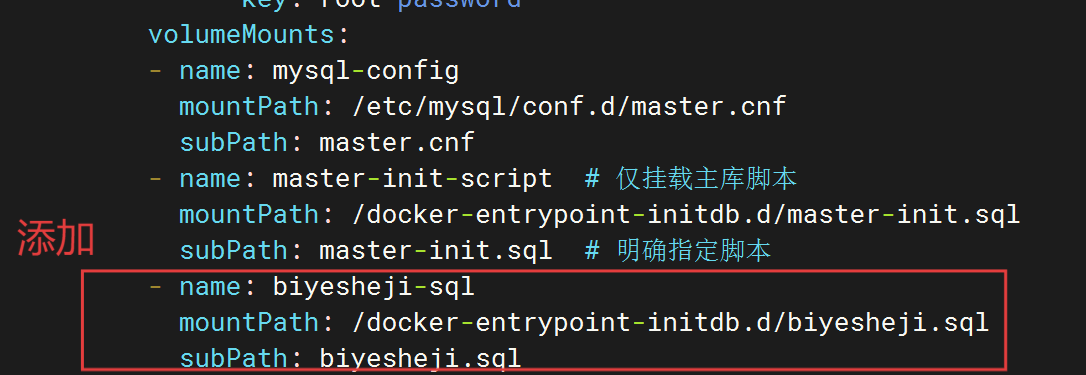

Changes

MySQL master configuration

Reference the ConfigMap

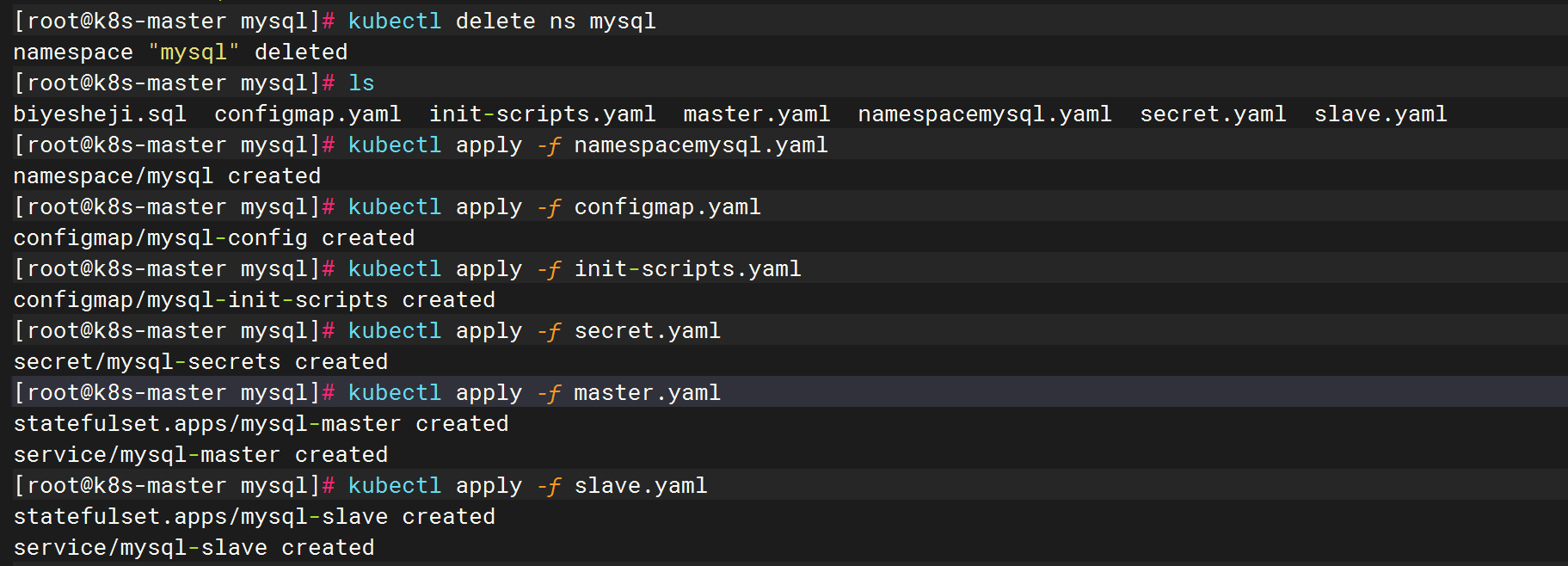

Update the manifests

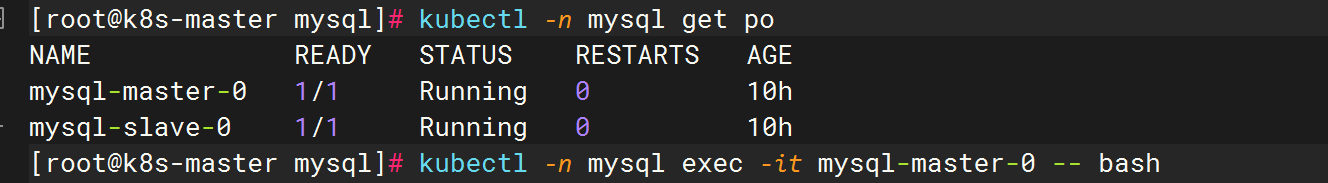

Verify

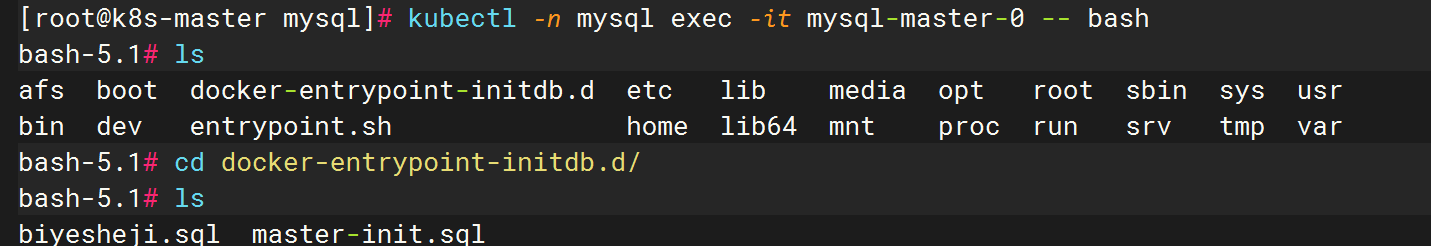

Check whether biyesheji.sql was loaded into the MySQL master

Create the mall image

Write the Dockerfile

Build the image

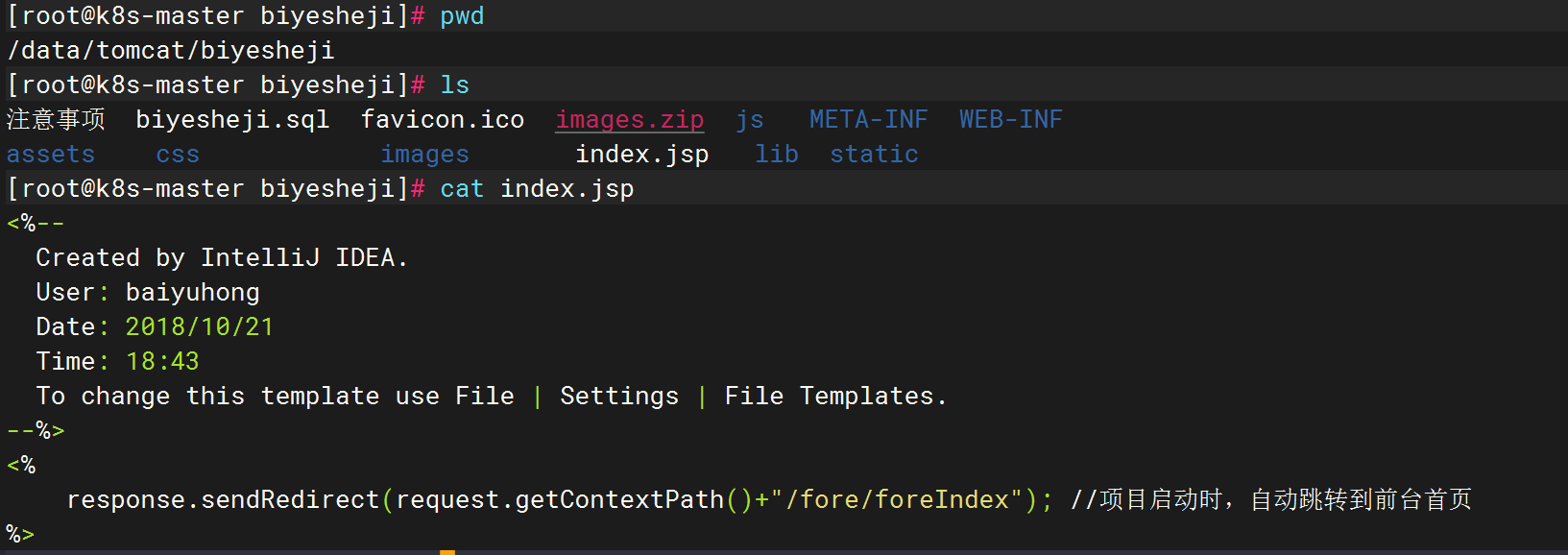

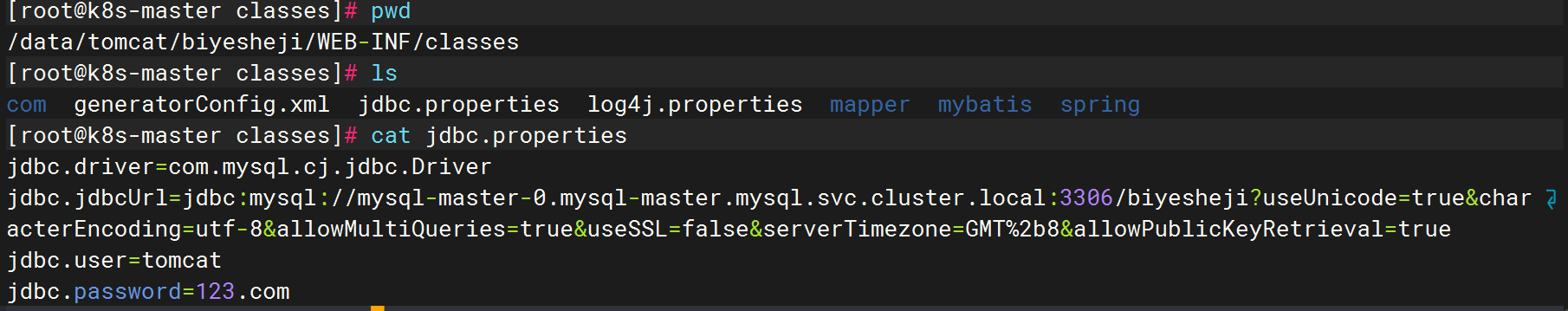

Modify the mall's default configuration

Run a container from the image built above

Enter the container and modify the Tomcat files

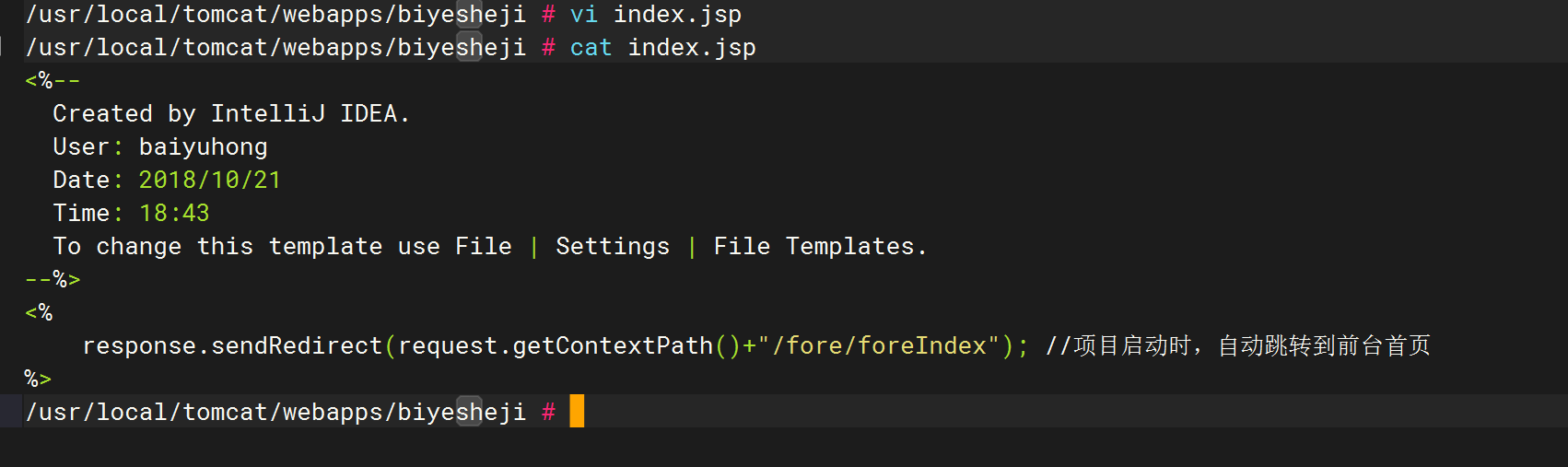

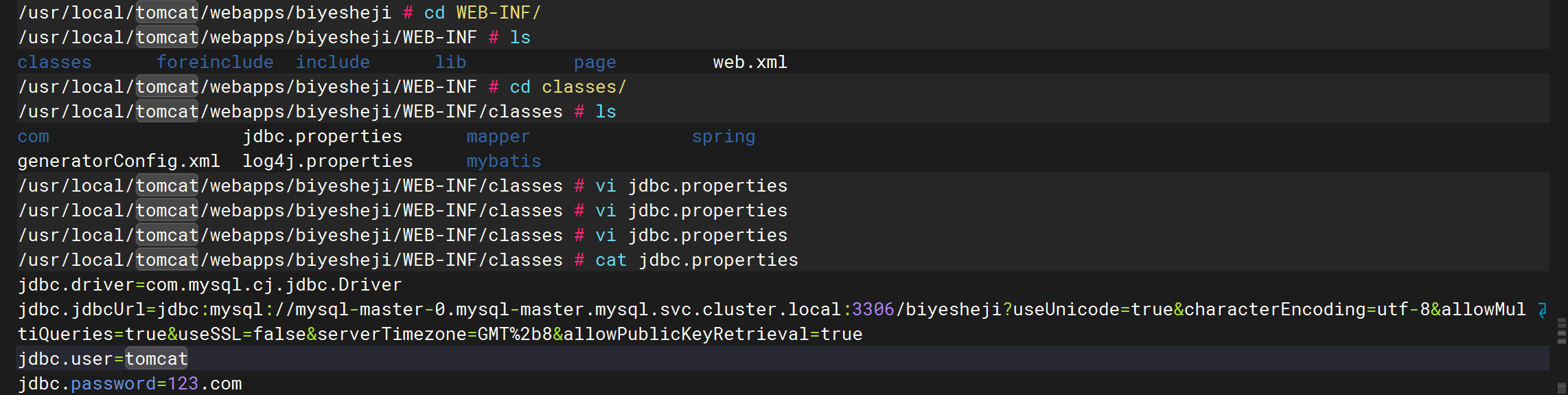

namely index.jsp and jdbc.properties

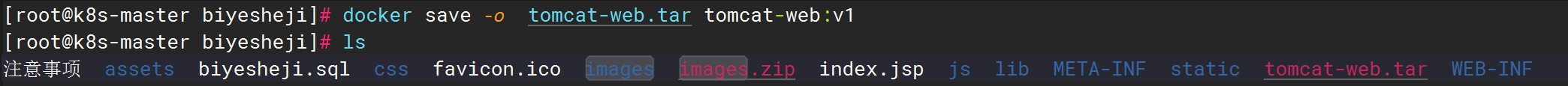

容器生成镜像

镜像归档为tar包

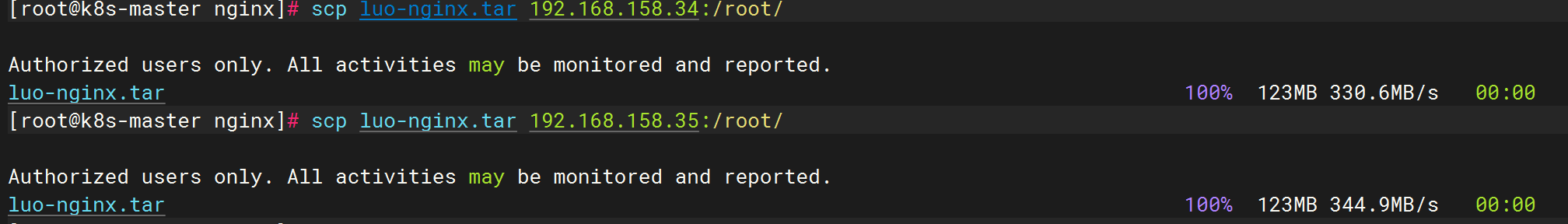

node节点

从tar包中加载镜像

部署tomcat商城

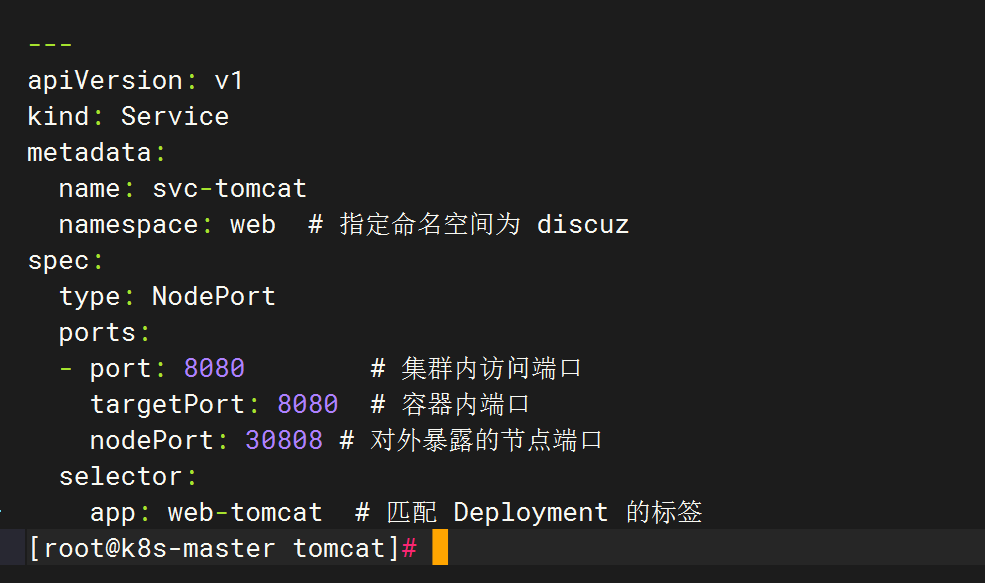

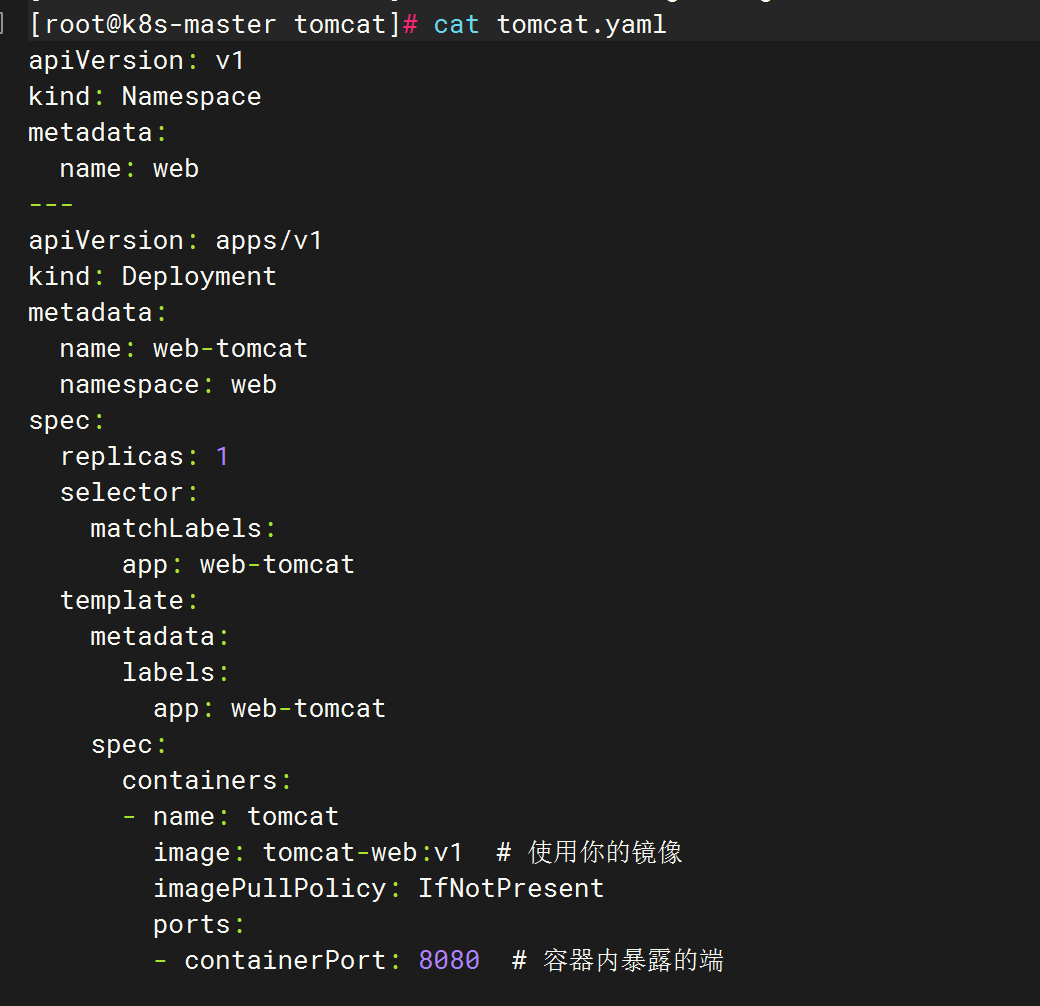

Write the Tomcat YAML file

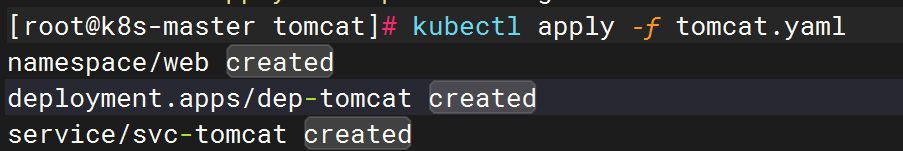

Apply the Tomcat manifest

Access

Access successful

II. Deploying the Discuz forum and the Tomcat mall: access by domain name

Environment preparation

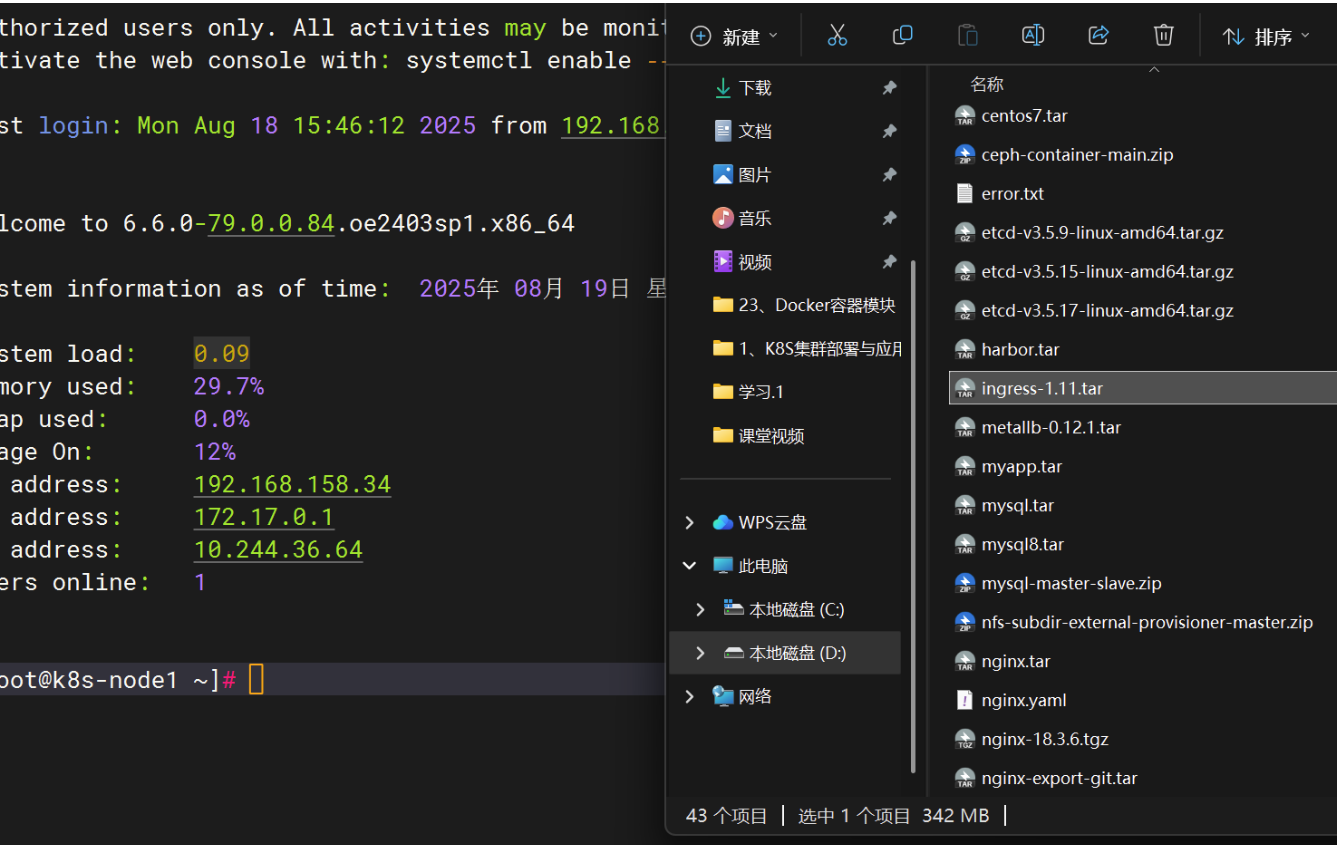

将ingress-1.11.tar镜像包拷贝到每个node节点

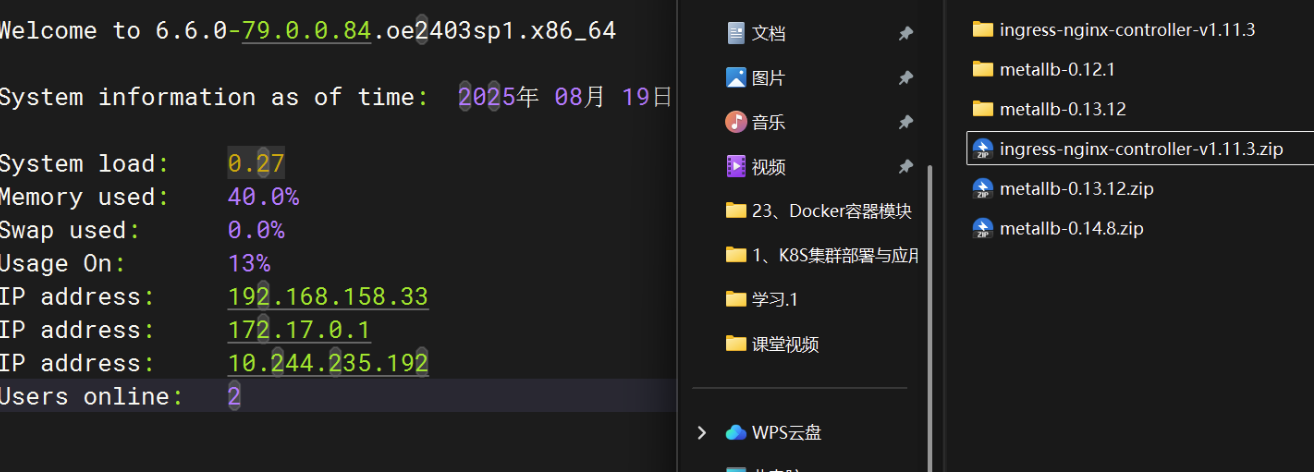

将ingress-nginx-controller-v1.11.3.zip拷贝到master主节点,这个是资源清单文件

镜像包拷贝到node节点,并加载镜像

master主节点

解压ingress软件包

修改ingress配置文件

启用ingress

下载ipvsadm

LoadBalancer模式

搭建metallb支持LoadBalancer

编写地址段分配configmap

创建IP地址池

关联IP地址池

提交资源清单

查看

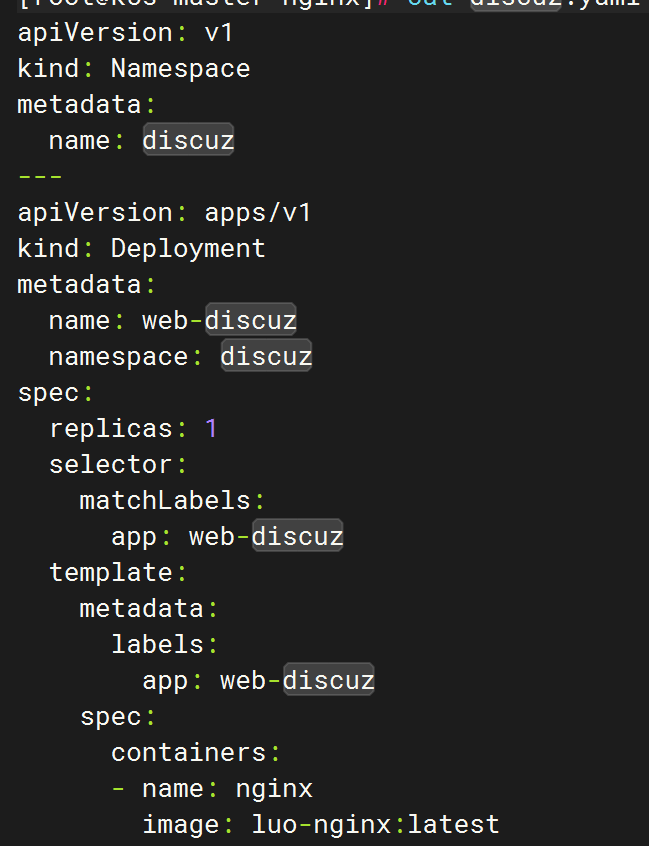

1. Discuz access by domain name

Modify the Discuz YAML file

Mainly the Service

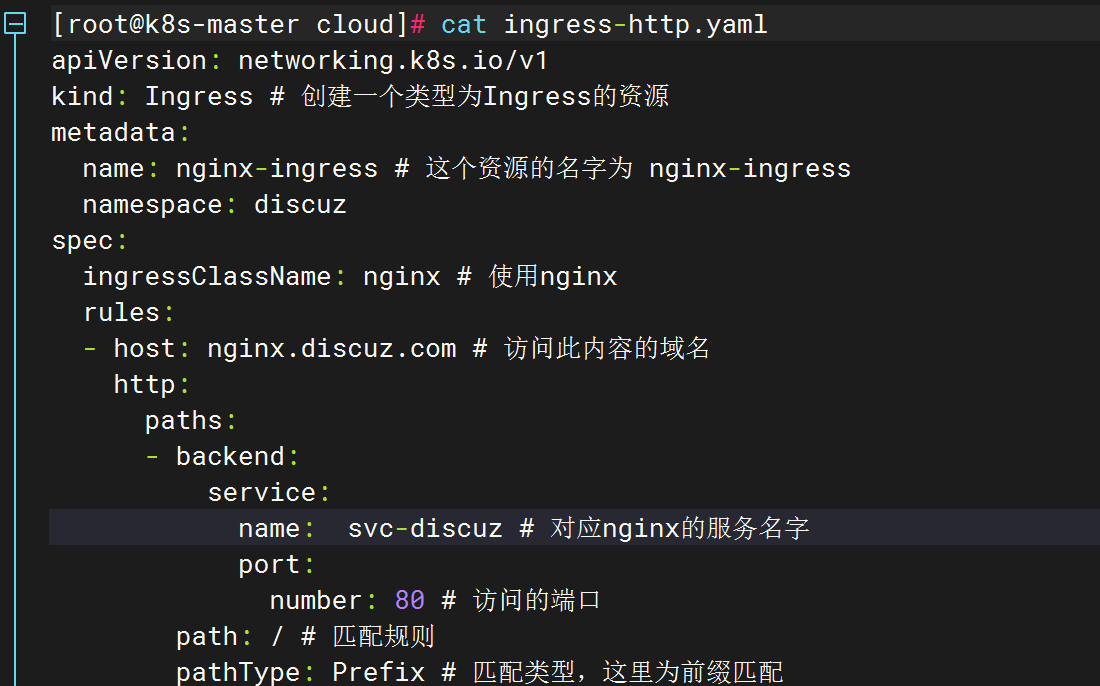

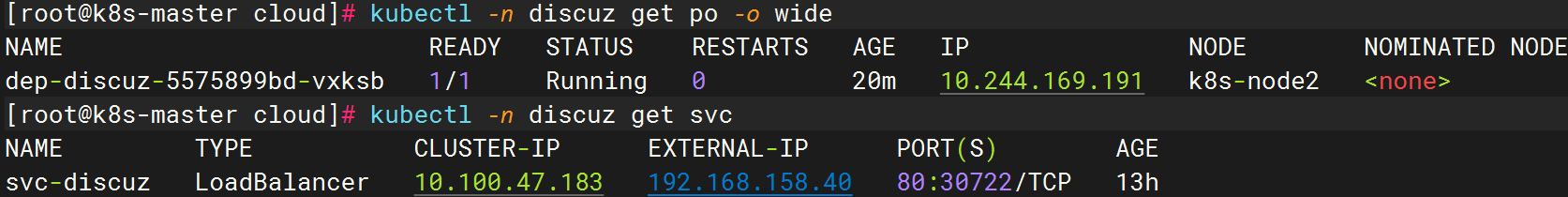

Ingress configuration file

Apply the manifests

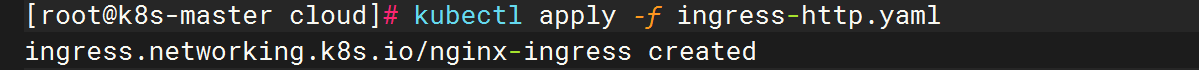

Check

Add the domain name to the test host's hosts file

Access

Discuz is reachable by domain name

2. Tomcat access by domain name

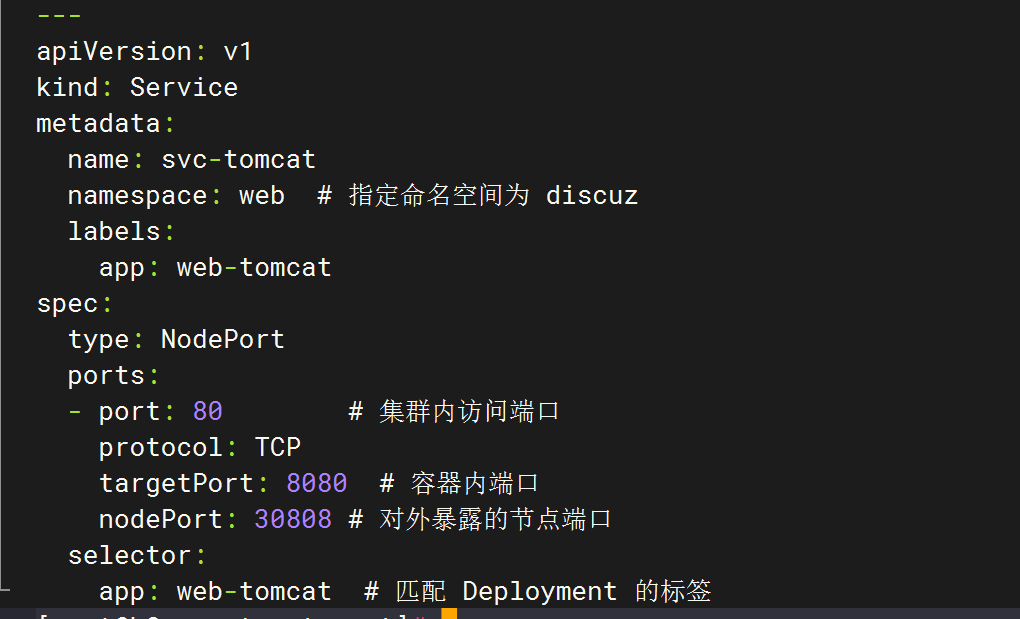

Modify the Tomcat YAML file

Mainly the Service

Ingress configuration file

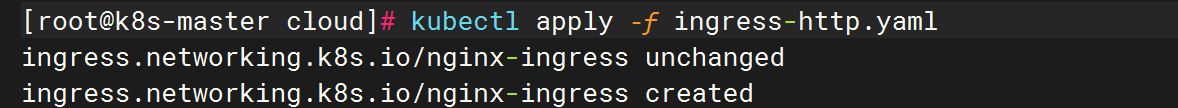

Apply the manifests

Check

Discuz

Tomcat

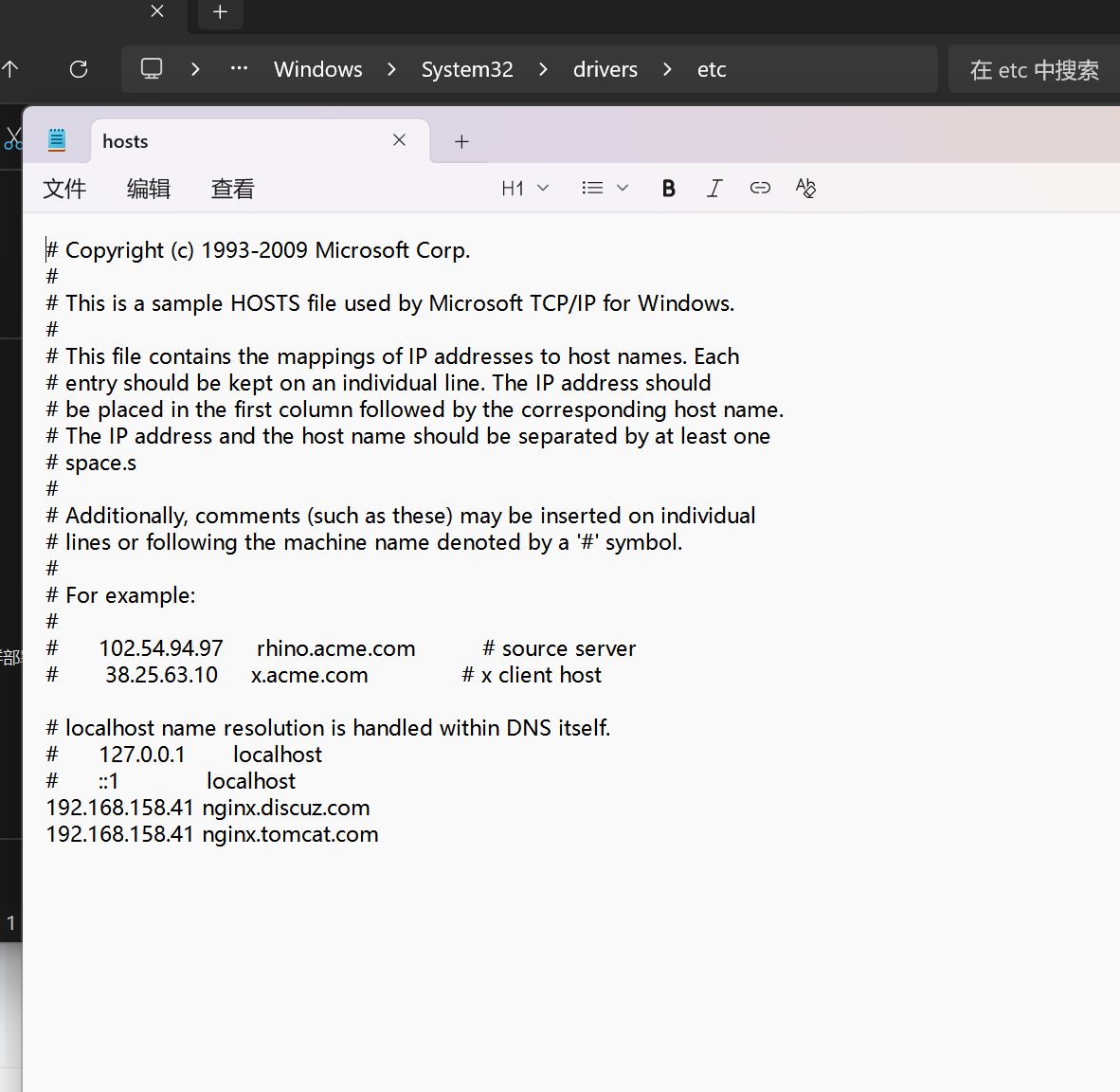

Add the domain name to the test host's hosts file

Access

The Tomcat mall is reachable

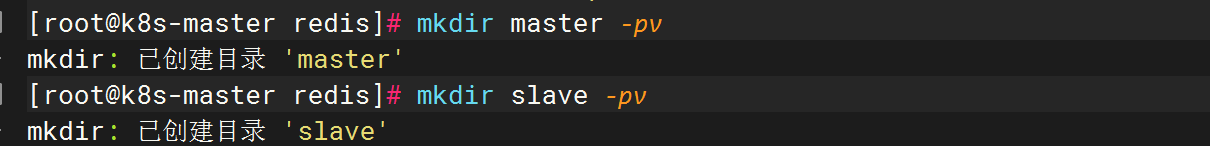

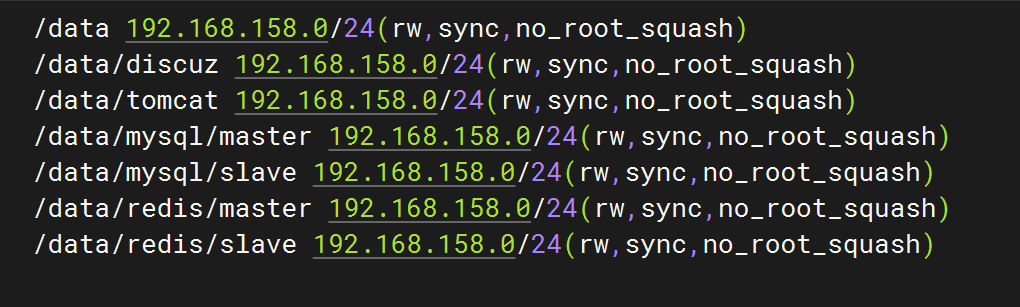

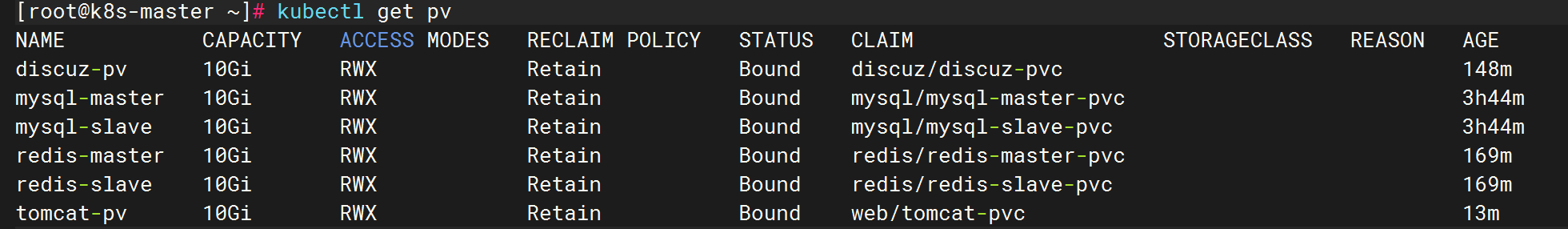

III. Persistent storage (PVC and PV)

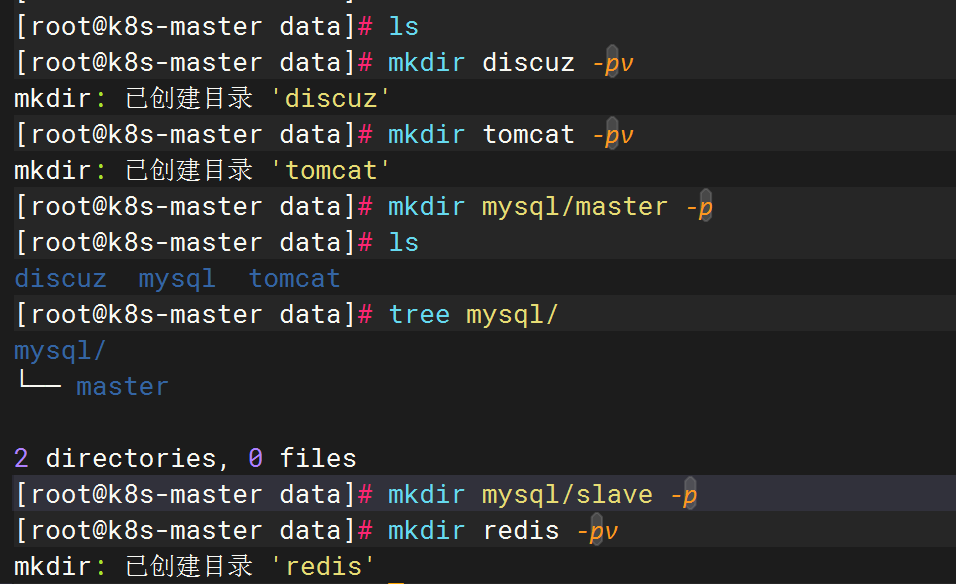

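1. NFS shared directory

1. Use the k8s control-plane node (master) as the NFS server

2. Install NFS on all worker nodes

3. Create the shared directory for NFS on the host

4. Export the /data directory on the NFS server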

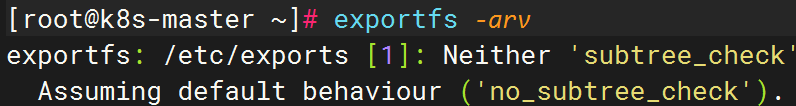

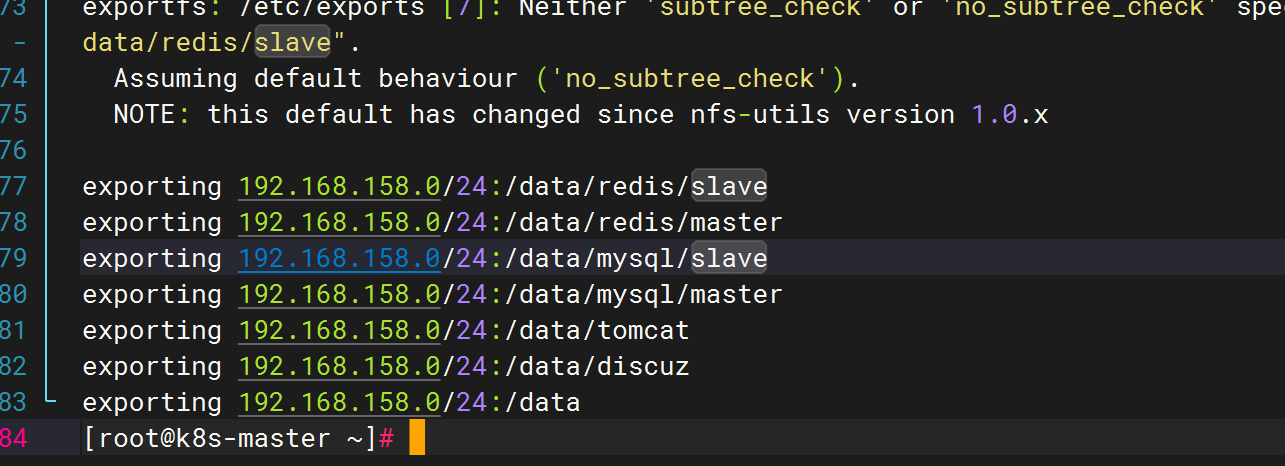

5. Apply the NFS configuration

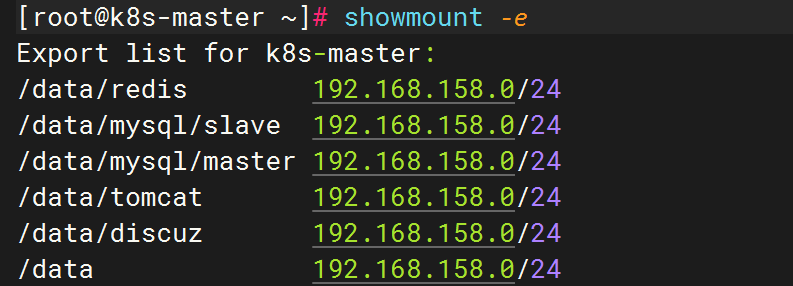

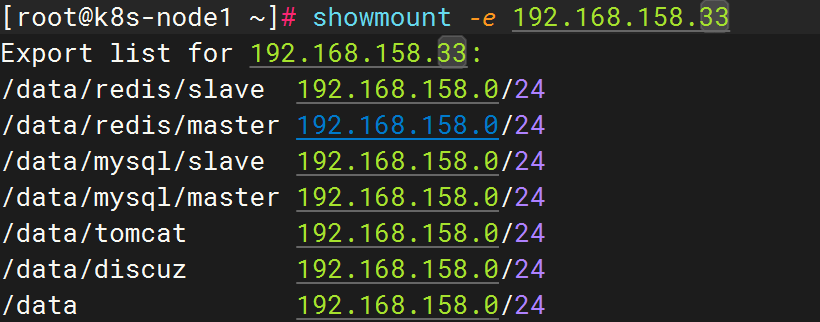

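6. Test NFS from the worker nodes

2. MySQL persistence

1. Create a PV backed by the shared directory

2. Apply the manifest to create the PV

3. Create a PVC bound to the PV

4. Check

List the PVs and PVCs: a STATUS of Bound means the PV has been bound by the PVC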

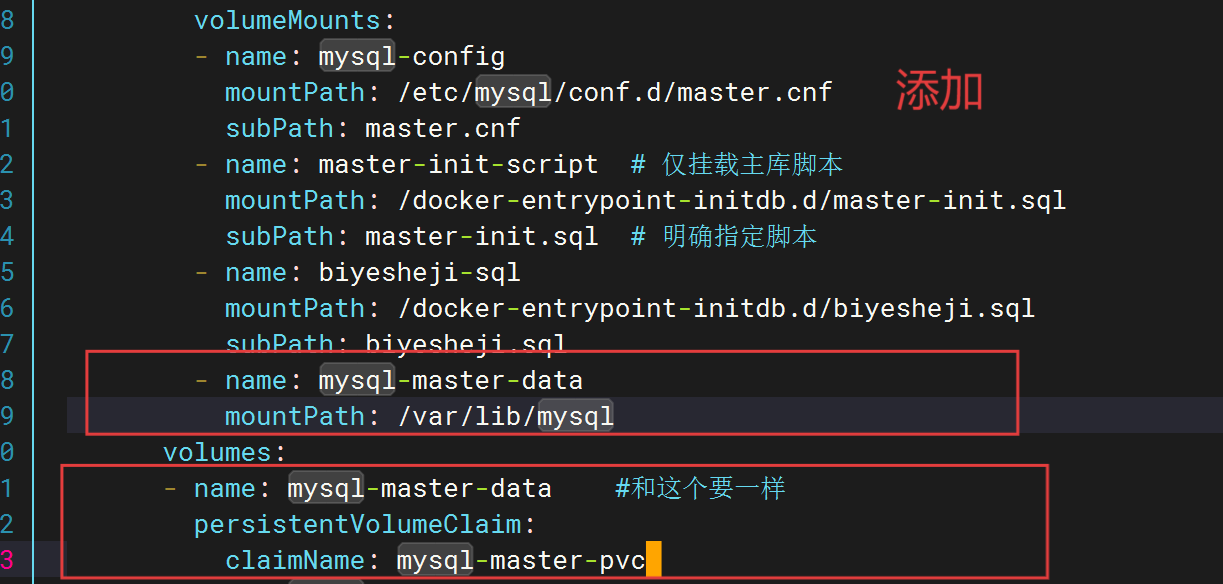

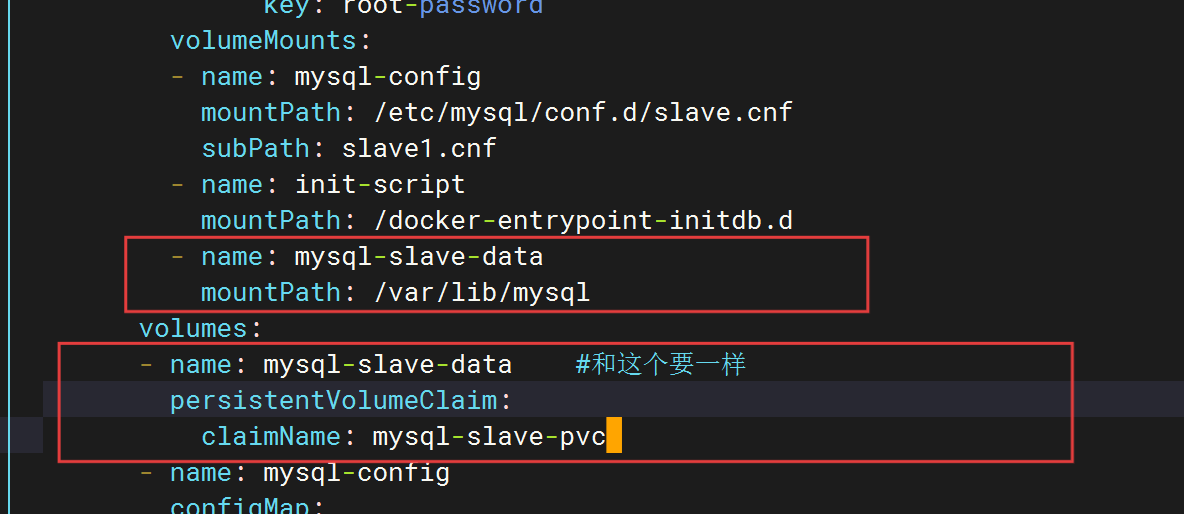

5. Modify the MySQL manifests to use the PVC

1. master

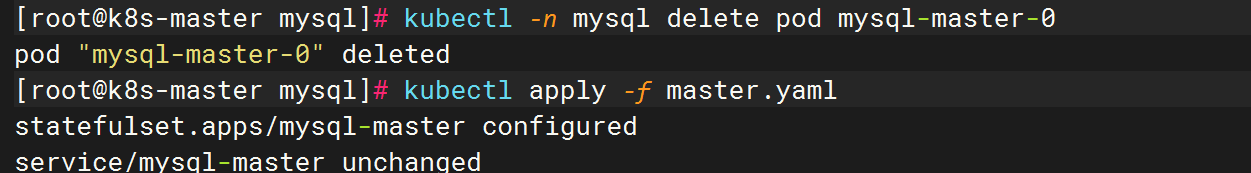

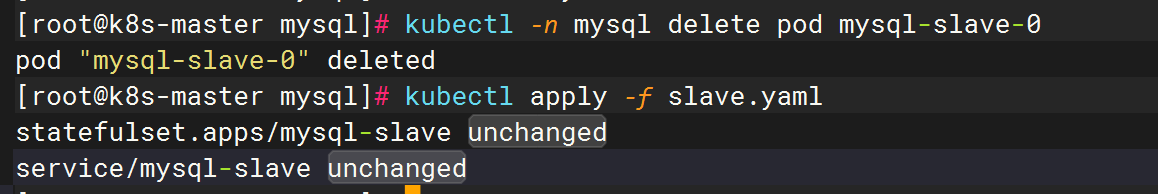

2. slave

6. Verify

3. Redis persistence

1. Create the PV

2. Apply the updated manifest

3. Create a PVC bound to the PV

4. Modify the Redis manifests to use the PVC

1. master

2. slave

Update

Verify

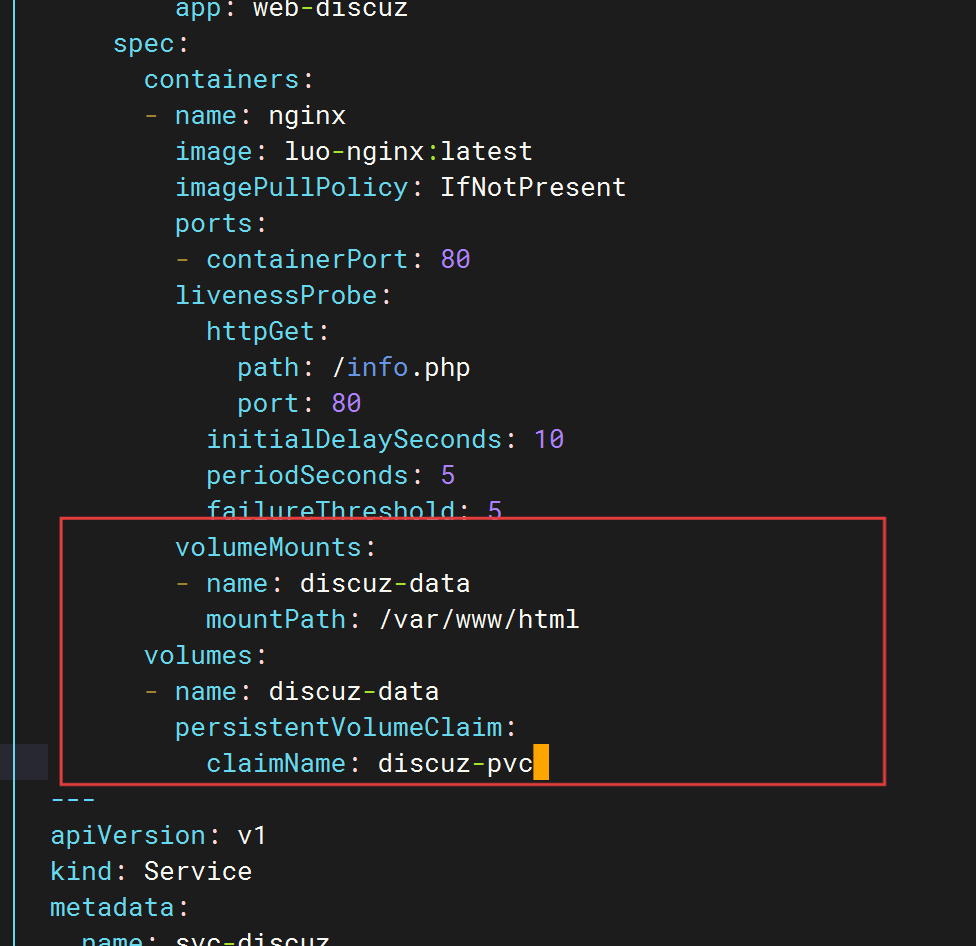

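4. Discuz persistence

1. Create the PV

Update

2. Create a PVC bound to the PV

Update

3. Modify the Discuz manifest

4. Add the corresponding files to the shared directory

Change the owner and group

Modify the manifest to use the PVC

Update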

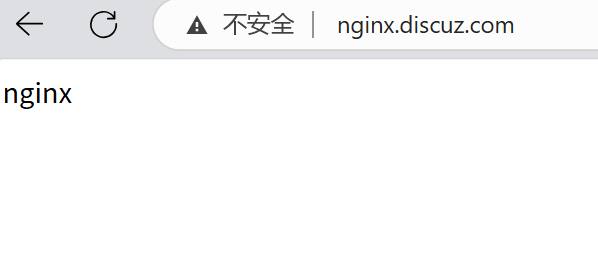

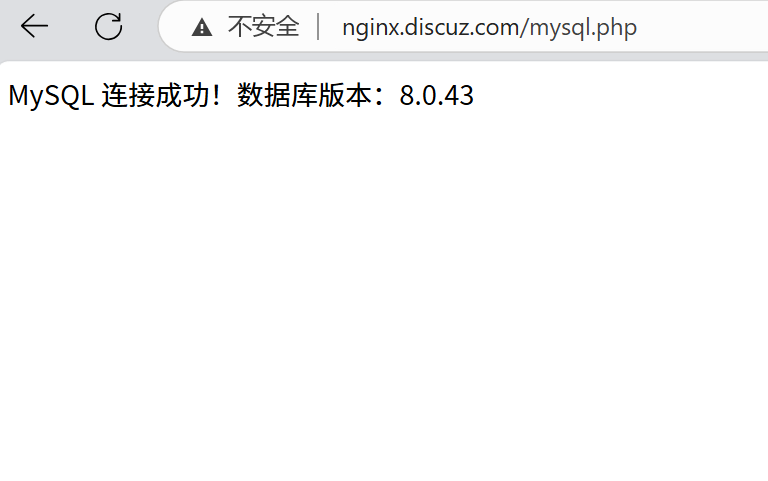

Access

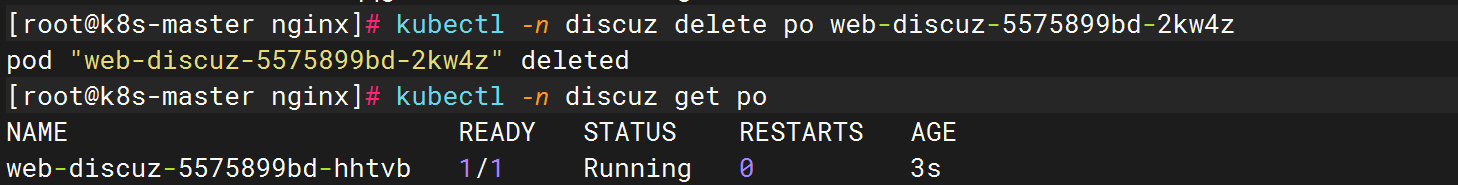

5.验证持久化

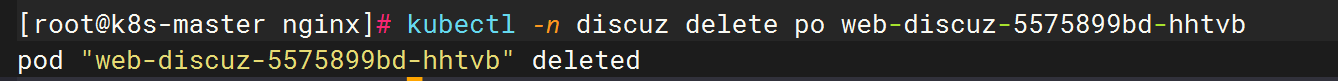

删除nginx pod

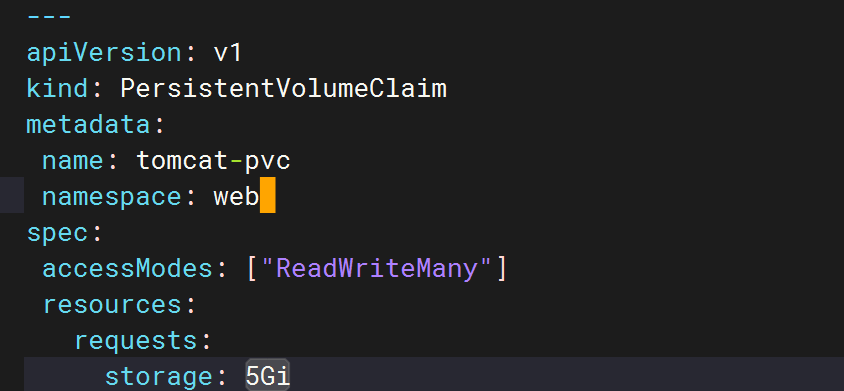

5. Tomcat persistence

1. Add a PV backed by the shared directory

2. Create a PVC bound to the PV

3. Modify the manifest to use the PVC

Update the resources

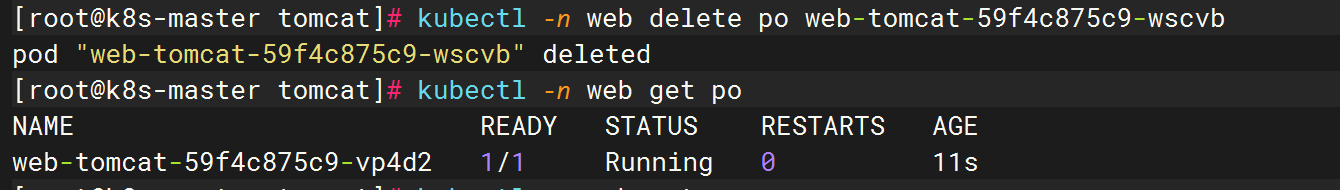

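4. Modify the files

Modify index.jsp

Modify jdbc.properties

5. Access

Delete the Pod and access the site again

6. Access successful

Note: the normal deployment order is:

persistent storage → Discuz forum and Tomcat mall → access by domain name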

一、部署discuz论坛和tomcat商城

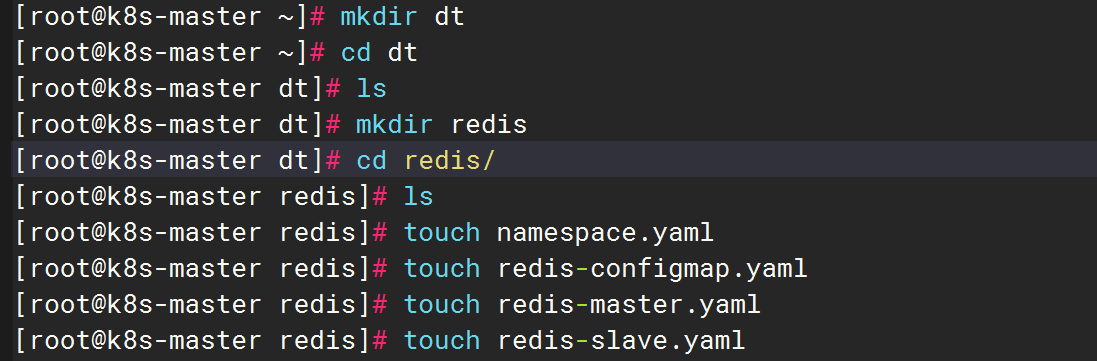

redis

编写redis配置,并启用

[root@k8s-master ~]# mkdir dt

[root@k8s-master ~]# cd dt

[root@k8s-master dt]# ls

[root@k8s-master dt]# mkdir redis

[root@k8s-master dt]# cd redis/

[root@k8s-master redis]# ls

[root@k8s-master redis]# touch namespace.yaml

[root@k8s-master redis]# touch redis-configmap.yaml

[root@k8s-master redis]# touch redis-master.yaml

[root@k8s-master redis]# touch redis-slave.yaml

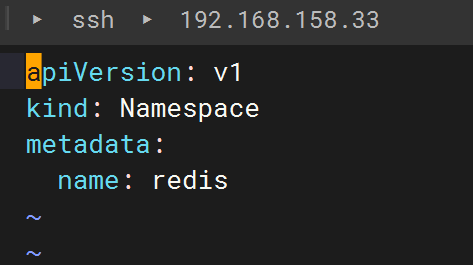

[root@k8s-master redis]# cat namespace.yaml

apiVersion: v1

kind: Namespace

metadata:
  name: redis

[root@k8s-master redis]# cat redis-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:
  name: redis-config
  namespace: redis
data:
  redis-master.conf: |
    port 6379
    bind 0.0.0.0
    protected-mode no
    daemonize no
    timeout 0
    save ""
    appendonly no
    maxmemory 1gb
    maxmemory-policy allkeys-lru
  redis-slave.conf: |
    port 6379
    bind 0.0.0.0
    protected-mode no
    daemonize no
    timeout 0
    save ""
    appendonly no
    maxmemory 1gb
    maxmemory-policy allkeys-lru
    slaveof redis-master-0.redis-master.redis.svc.cluster.local 6379
    slave-read-only yes

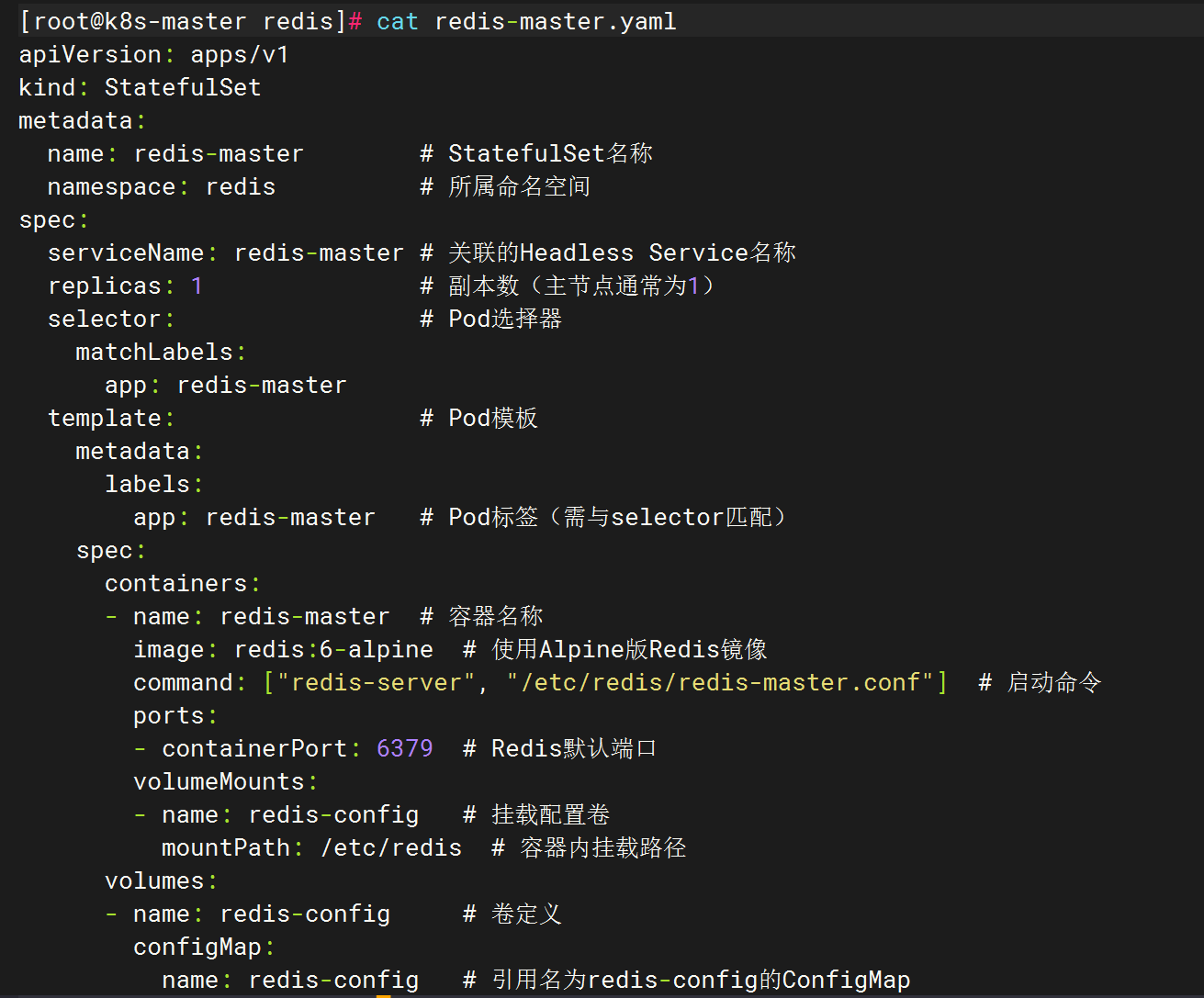

[root@k8s-master redis]# cat redis-master.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:
  name: redis-master            # StatefulSet name
  namespace: redis              # namespace
spec:
  serviceName: redis-master     # associated headless Service
  replicas: 1                   # number of replicas (a master usually has 1)
  selector:                     # Pod selector
    matchLabels:
      app: redis-master
  template:                     # Pod template
    metadata:
      labels:
        app: redis-master       # Pod label (must match the selector)
    spec:
      containers:
      - name: redis-master      # container name
        image: redis:6-alpine   # Alpine-based Redis image
        command: ["redis-server", "/etc/redis/redis-master.conf"]  # start command
        ports:
        - containerPort: 6379   # default Redis port
        volumeMounts:
        - name: redis-config    # mount the config volume
          mountPath: /etc/redis # mount path in the container
      volumes:
      - name: redis-config      # volume definition
        configMap:
          name: redis-config    # the ConfigMap named redis-config

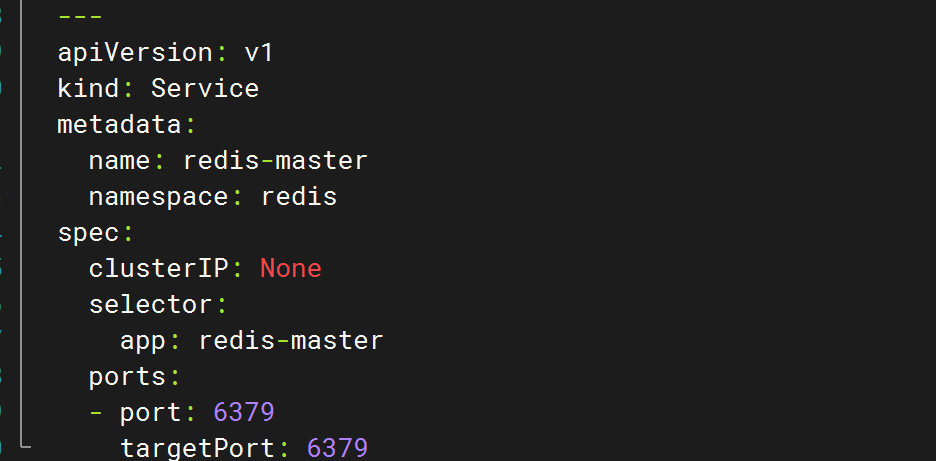

---

apiVersion: v1

kind: Service

metadata:
  name: redis-master
  namespace: redis
spec:
  clusterIP: None
  selector:
    app: redis-master
  ports:
  - port: 6379
    targetPort: 6379

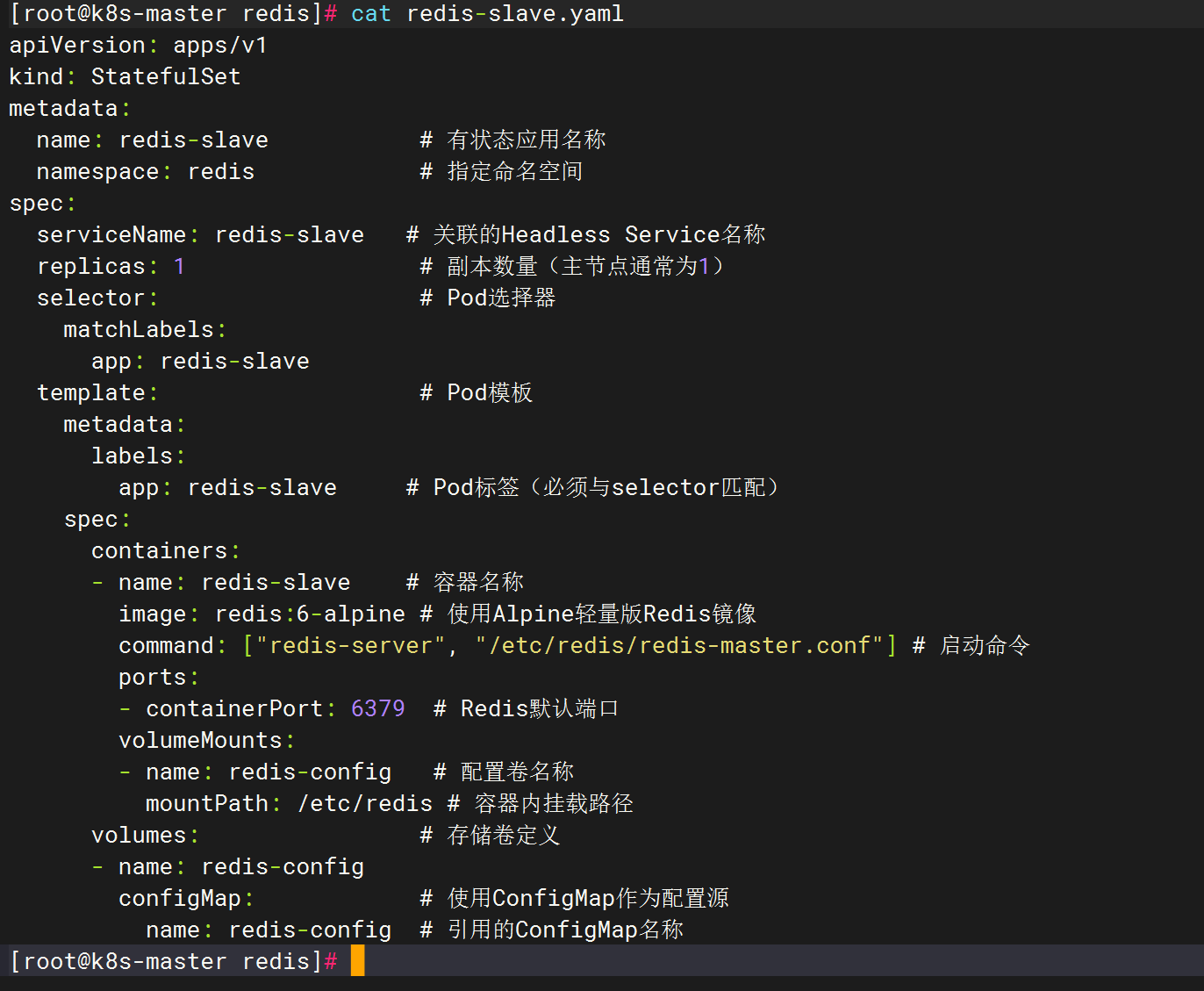
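The `slaveof` line in redis-slave.conf above points at `redis-master-0.redis-master.redis.svc.cluster.local`, and mysql.php later uses the same pattern for MySQL. Kubernetes gives each StatefulSet Pod a stable DNS name of the form `<statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>` when the StatefulSet's `serviceName` refers to a headless Service (`clusterIP: None`). That is why the Service above sets `clusterIP: None`. Below is a minimal sketch of the naming rule. It assumes the default cluster domain `cluster.local`, and the helper name `sts_pod_fqdn` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: build the stable DNS name of a StatefulSet Pod.
# Usage: sts_pod_fqdn <statefulset> <headless-service> <namespace> <ordinal> [cluster-domain]
sts_pod_fqdn() {
    sts=$1 svc=$2 ns=$3 ordinal=$4
    domain=${5:-cluster.local}   # assumption: default cluster domain
    printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$ordinal" "$svc" "$ns" "$domain"
}

# Names used in this article:
sts_pod_fqdn redis-master redis-master redis 0   # -> redis-master-0.redis-master.redis.svc.cluster.local
sts_pod_fqdn mysql-master mysql-master mysql 0   # -> mysql-master-0.mysql-master.mysql.svc.cluster.local
```

The second call yields the MySQL host that mysql.php and jdbc.properties use later.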

[root@k8s-master redis]# cat redis-slave.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:
  name: redis-slave               # StatefulSet name
  namespace: redis                # namespace
spec:
  serviceName: redis-slave        # associated headless Service
  replicas: 1                     # number of replicas
  selector:                       # Pod selector
    matchLabels:
      app: redis-slave
  template:                       # Pod template
    metadata:
      labels:
        app: redis-slave          # Pod label (must match the selector)
    spec:
      containers:
      - name: redis-slave         # container name
        image: redis:6-alpine     # lightweight Alpine-based Redis image
        command: ["redis-server", "/etc/redis/redis-slave.conf"]  # start with the slave config so it replicates from the master
        ports:
        - containerPort: 6379     # default Redis port
        volumeMounts:
        - name: redis-config      # config volume name
          mountPath: /etc/redis   # mount path in the container
      volumes:                    # volume definitions
      - name: redis-config
        configMap:                # use a ConfigMap as the config source
          name: redis-config      # the ConfigMap to reference

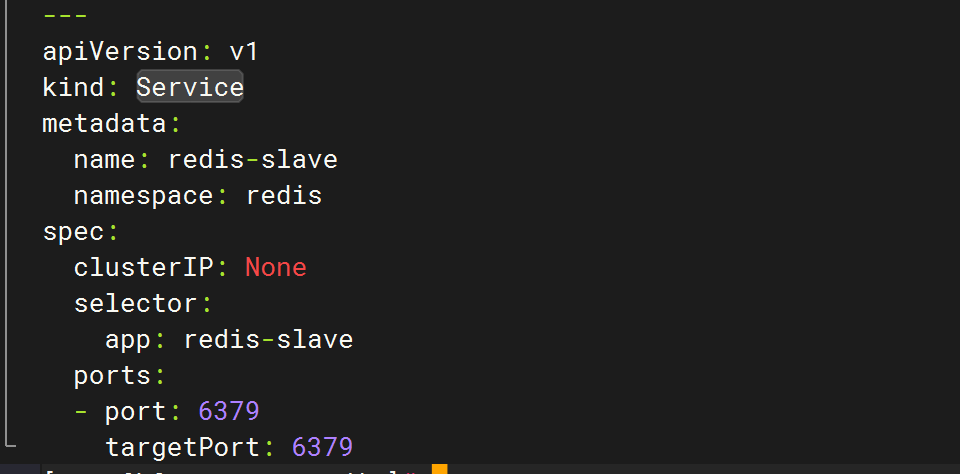

---

apiVersion: v1

kind: Service

metadata:
  name: redis-slave
  namespace: redis
spec:
  clusterIP: None
  selector:
    app: redis-slave
  ports:
  - port: 6379

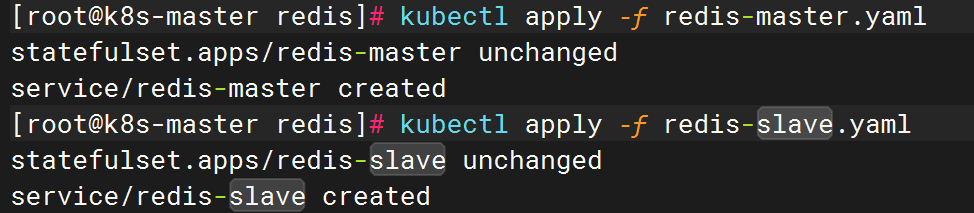


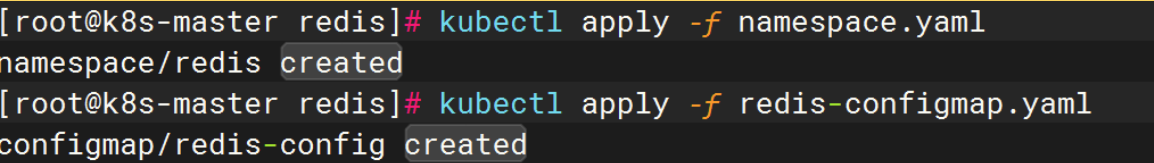

[root@k8s-master redis]# kubectl apply -f namespace.yaml

namespace/redis created

[root@k8s-master redis]# kubectl apply -f redis-configmap.yaml

configmap/redis-config created

[root@k8s-master redis]# kubectl apply -f redis-master.yaml

statefulset.apps/redis-master created

[root@k8s-master redis]# kubectl apply -f redis-slave.yaml

statefulset.apps/redis-slave created

MySQL

Write the MySQL configuration and apply it

[root@k8s-master dt]# mkdir mysql

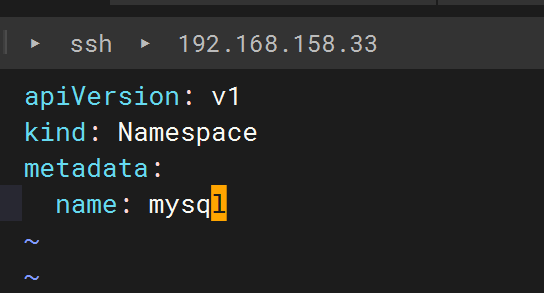

[root@k8s-master dt]# cd mysql

[root@k8s-master mysql]# cat namespacemysql.yaml

apiVersion: v1

kind: Namespace

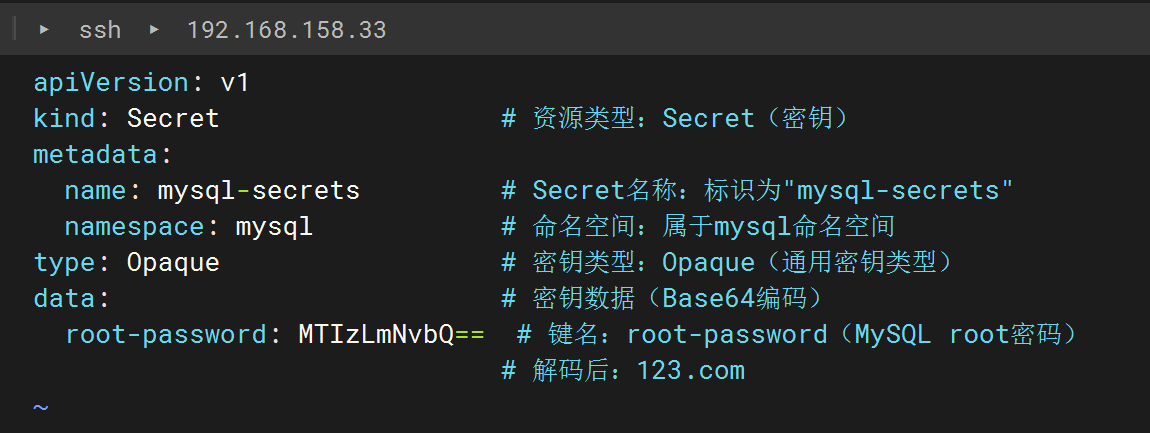

metadata:
  name: mysql

[root@k8s-master mysql]# cat secret.yaml

apiVersion: v1

kind: Secret # 资源类型:Secret(密钥)

metadata:name: mysql-secrets # Secret名称:标识为"mysql-secrets"namespace: mysql # 命名空间:属于mysql命名空间

type: Opaque # 密钥类型:Opaque(通用密钥类型)

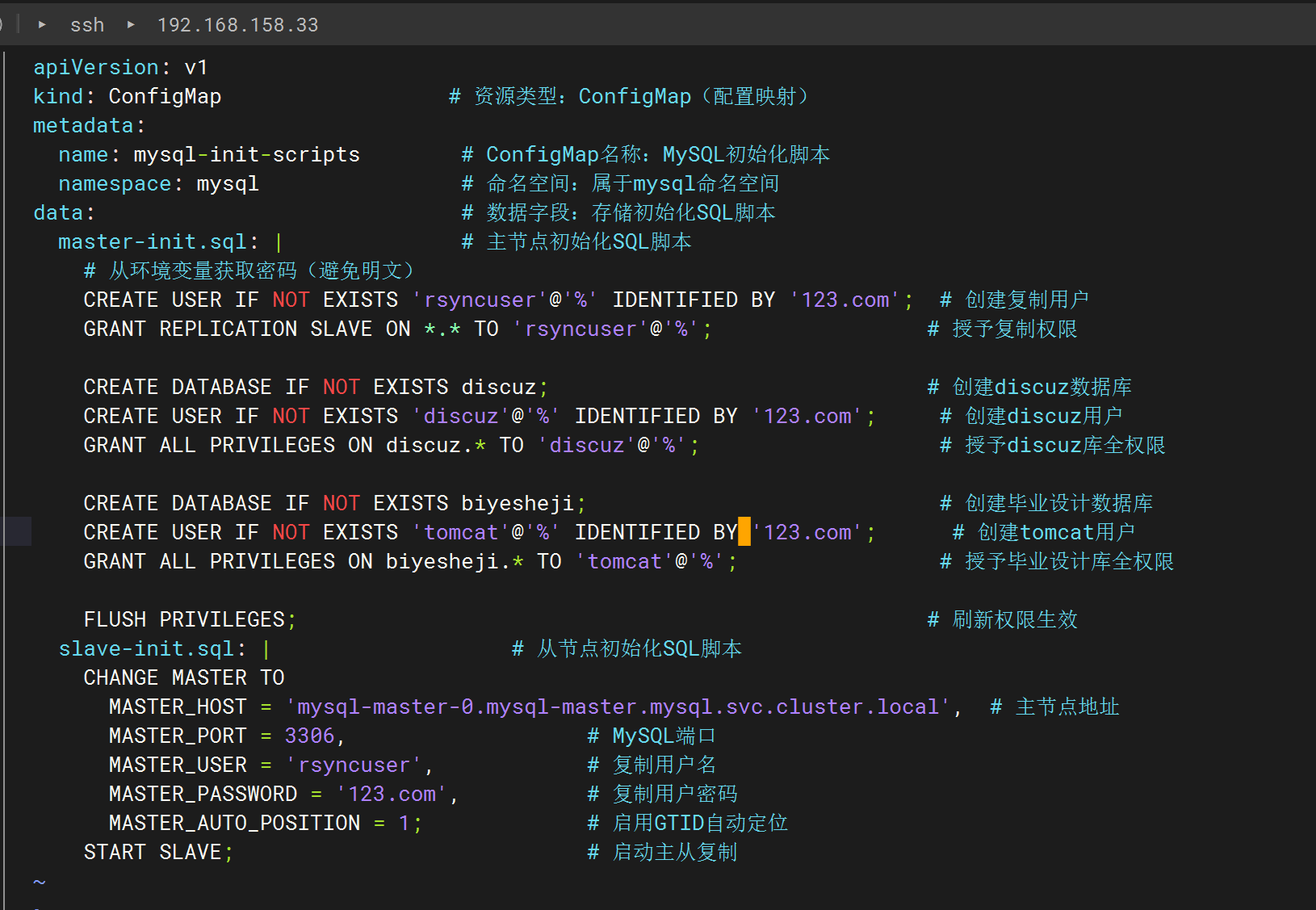

data: # 密钥数据(Base64编码)root-password: MTIzLmNvbQ== # 键名:root-password(MySQL root密码)# 解码后:123.com[root@k8s-master mysql]# cat init-scripts.yaml

apiVersion: v1

kind: ConfigMap # 资源类型:ConfigMap(配置映射)

metadata:name: mysql-init-scripts # ConfigMap名称:MySQL初始化脚本namespace: mysql # 命名空间:属于mysql命名空间

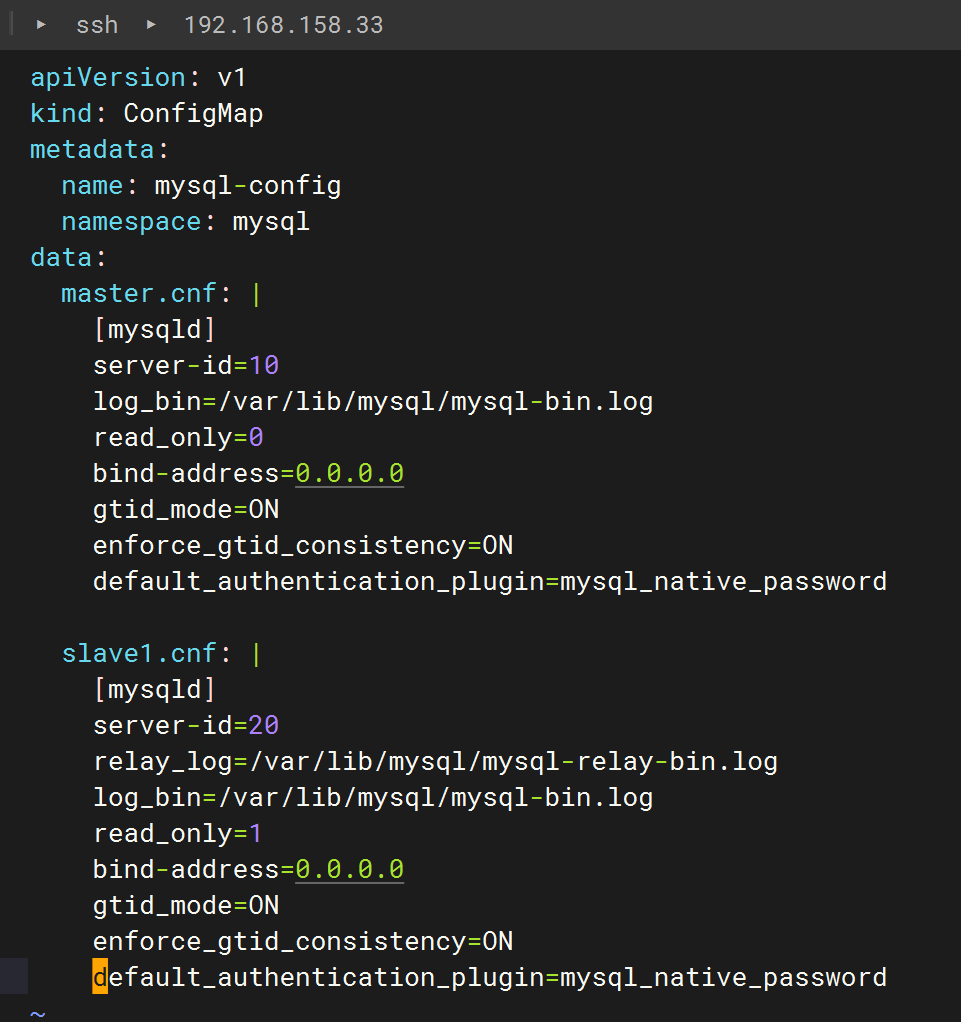

data: # 数据字段:存储初始化SQL脚本master-init.sql: | # 主节点初始化SQL脚本# 从环境变量获取密码(避免明文)CREATE USER IF NOT EXISTS 'rsyncuser'@'%' IDENTIFIED BY '123.com'; # 创建复制用户GRANT REPLICATION SLAVE ON *.* TO 'rsyncuser'@'%'; # 授予复制权限CREATE DATABASE IF NOT EXISTS discuz; # 创建discuz数据库CREATE USER IF NOT EXISTS 'discuz'@'%' IDENTIFIED BY '123.com'; # 创建discuz用户GRANT ALL PRIVILEGES ON discuz.* TO 'discuz'@'%'; # 授予discuz库全权限CREATE DATABASE IF NOT EXISTS biyesheji; # 创建毕业设计数据库CREATE USER IF NOT EXISTS 'tomcat'@'%' IDENTIFIED BY '123.com'; # 创建tomcat用户GRANT ALL PRIVILEGES ON biyesheji.* TO 'tomcat'@'%'; # 授予毕业设计库全权限FLUSH PRIVILEGES; # 刷新权限生效slave-init.sql: | # 从节点初始化SQL脚本CHANGE MASTER TOMASTER_HOST = 'mysql-master-0.mysql-master.mysql.svc.cluster.local', # 主节点地址MASTER_PORT = 3306, # MySQL端口MASTER_USER = 'rsyncuser', # 复制用户名MASTER_PASSWORD = '123.com', # 复制用户密码MASTER_AUTO_POSITION = 1; # 启用GTID自动定位START SLAVE; # 启动主从复制[root@k8s-master mysql]# cat configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:
  name: mysql-config
  namespace: mysql
data:
  master.cnf: |
    [mysqld]
    server-id=10
    log_bin=/var/lib/mysql/mysql-bin.log
    read_only=0
    bind-address=0.0.0.0
    gtid_mode=ON
    enforce_gtid_consistency=ON
    default_authentication_plugin=mysql_native_password
  slave1.cnf: |
    [mysqld]
    server-id=20
    relay_log=/var/lib/mysql/mysql-relay-bin.log
    log_bin=/var/lib/mysql/mysql-bin.log
    read_only=1
    bind-address=0.0.0.0
    gtid_mode=ON
    enforce_gtid_consistency=ON
    default_authentication_plugin=mysql_native_password

[root@k8s-master mysql]# cat master.yaml

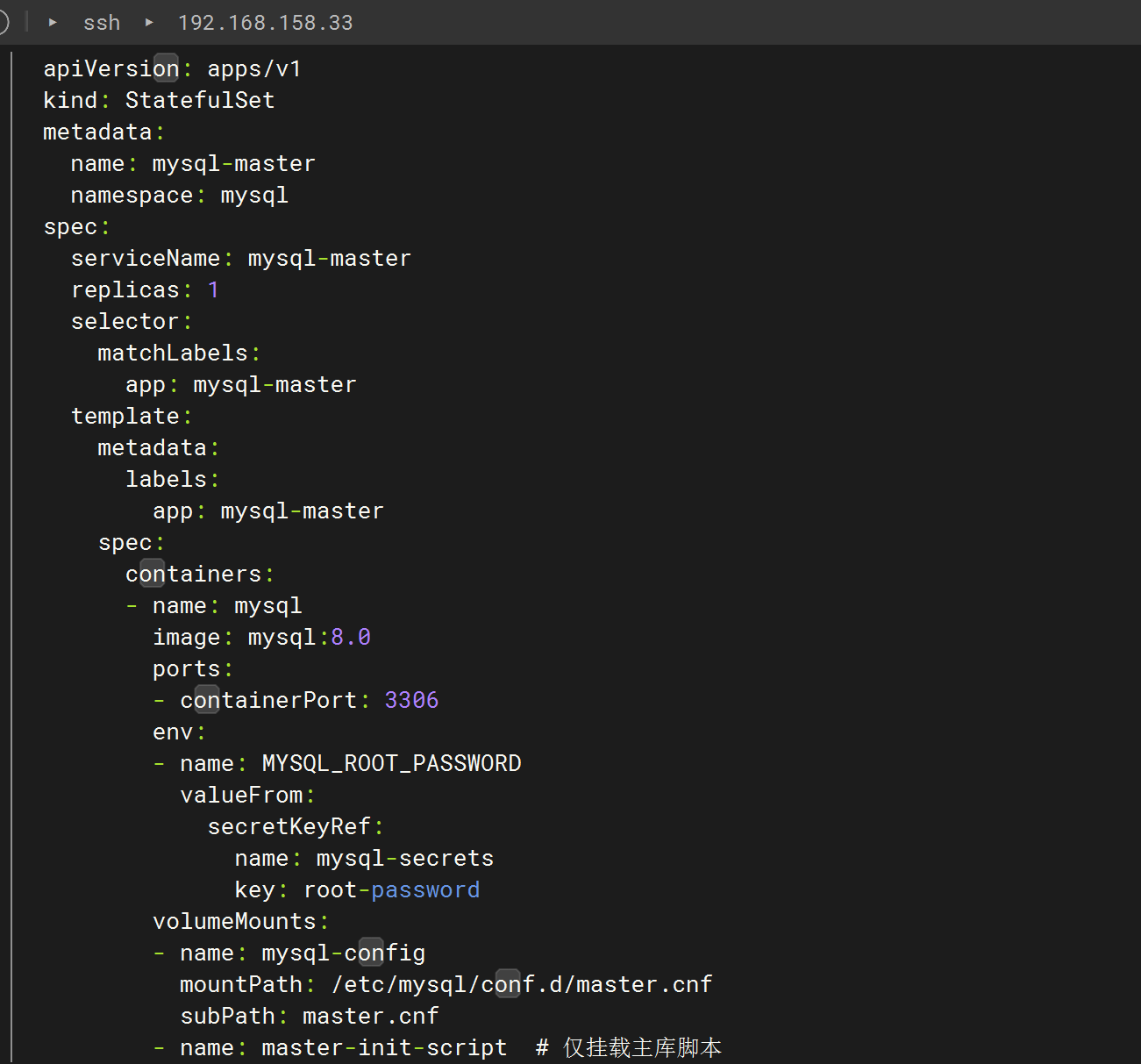

apiVersion: apps/v1

kind: StatefulSet

metadata:name: mysql-masternamespace: mysql

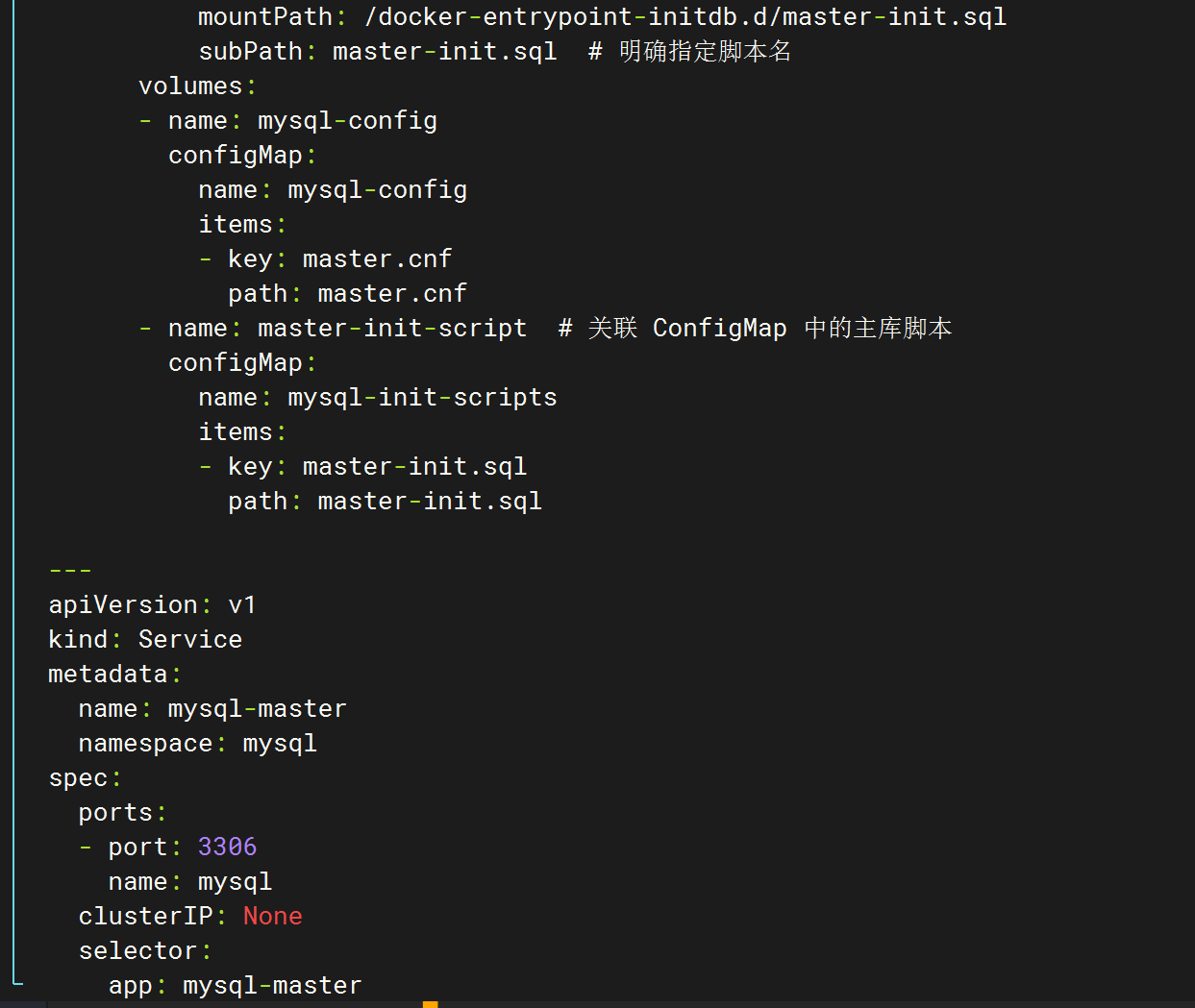

spec:serviceName: mysql-masterreplicas: 1selector:matchLabels:app: mysql-mastertemplate:metadata:labels:app: mysql-masterspec:containers:- name: mysqlimage: mysql:8.0ports:- containerPort: 3306env:- name: MYSQL_ROOT_PASSWORDvalueFrom:secretKeyRef:name: mysql-secretskey: root-passwordvolumeMounts:- name: mysql-configmountPath: /etc/mysql/conf.d/master.cnfsubPath: master.cnf- name: master-init-script # 仅挂载主库脚本mountPath: /docker-entrypoint-initdb.d/master-init.sqlsubPath: master-init.sql # 明确指定脚本名volumes:- name: mysql-configconfigMap:name: mysql-configitems:- key: master.cnfpath: master.cnf- name: master-init-script # 关联 ConfigMap 中的主库脚本configMap:name: mysql-init-scriptsitems:- key: master-init.sqlpath: master-init.sql---

apiVersion: v1

kind: Service

metadata:name: mysql-masternamespace: mysql

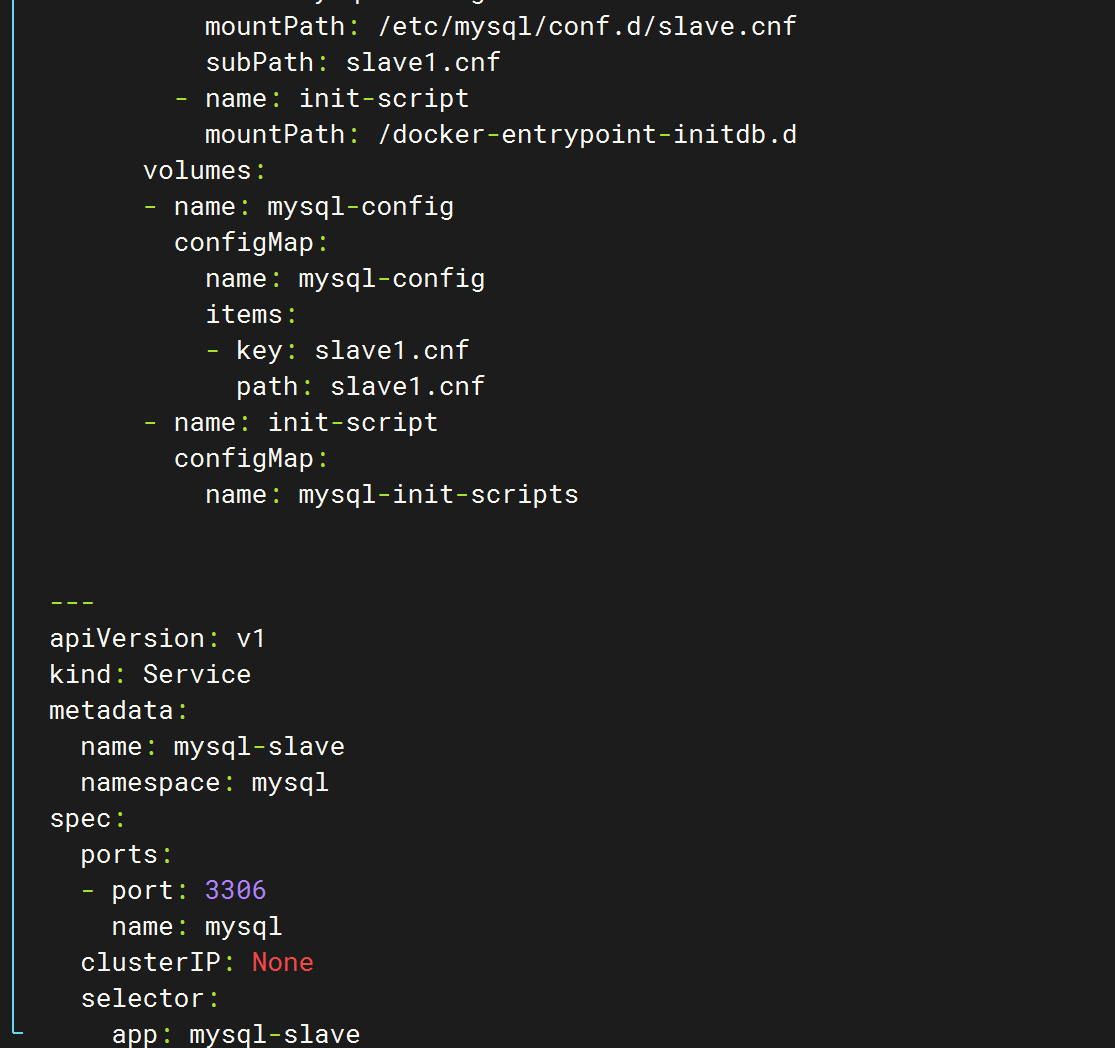

spec:ports:- port: 3306name: mysqlclusterIP: Noneselector:app: mysql-master[root@k8s-master mysql]# cat slave.yaml

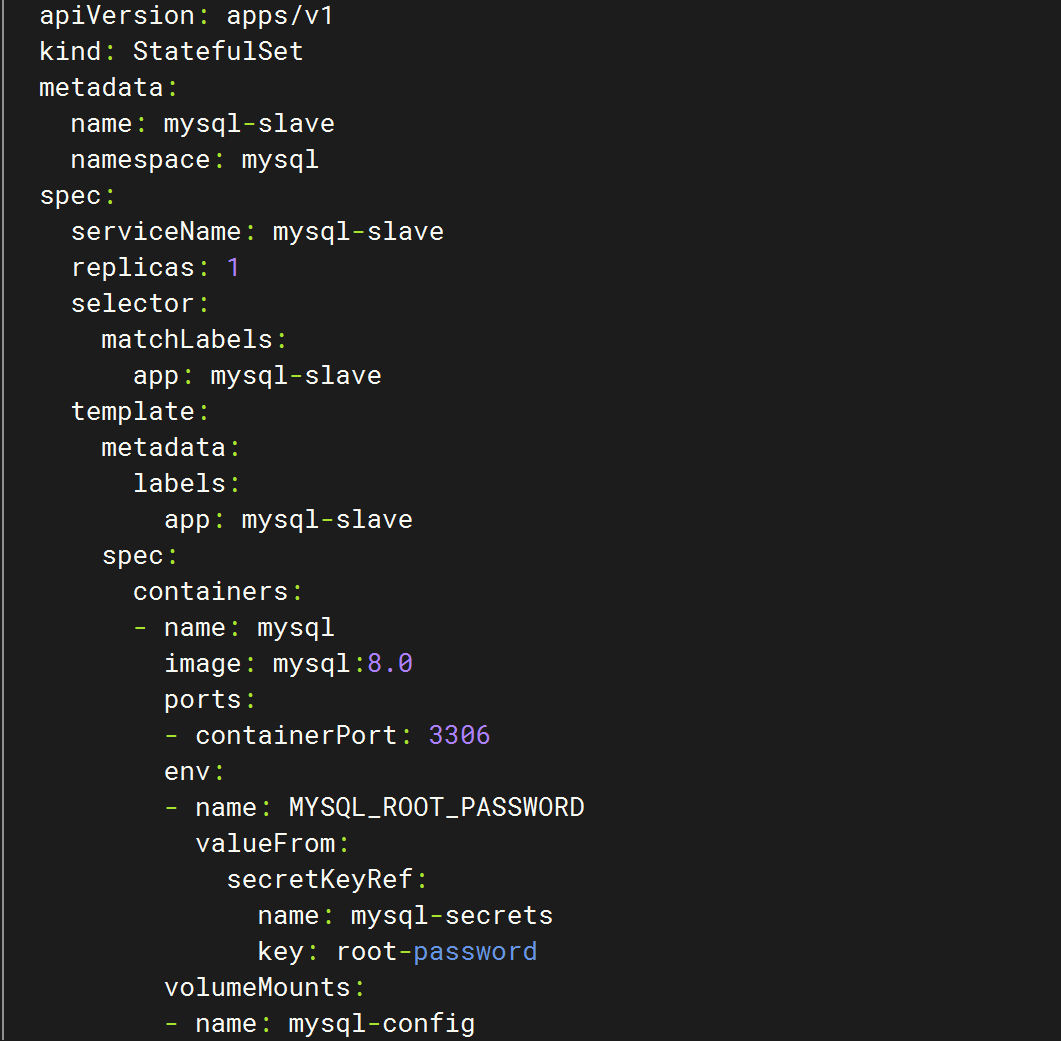

apiVersion: apps/v1

kind: StatefulSet

metadata:
  name: mysql-slave
  namespace: mysql
spec:
  serviceName: mysql-slave
  replicas: 1
  selector:
    matchLabels:
      app: mysql-slave
  template:
    metadata:
      labels:
        app: mysql-slave
    spec:
      containers:
      - name: mysql
        image: mysql:8.0
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secrets
              key: root-password
        volumeMounts:
        - name: mysql-config
          mountPath: /etc/mysql/conf.d/slave.cnf
          subPath: slave1.cnf
        - name: init-script
          mountPath: /docker-entrypoint-initdb.d
      volumes:
      - name: mysql-config
        configMap:
          name: mysql-config
          items:
          - key: slave1.cnf
            path: slave1.cnf
      - name: init-script
        configMap:
          name: mysql-init-scripts
---

apiVersion: v1

kind: Service

metadata:
  name: mysql-slave
  namespace: mysql
spec:
  ports:
  - port: 3306
    name: mysql
  clusterIP: None
  selector:
    app: mysql-slave

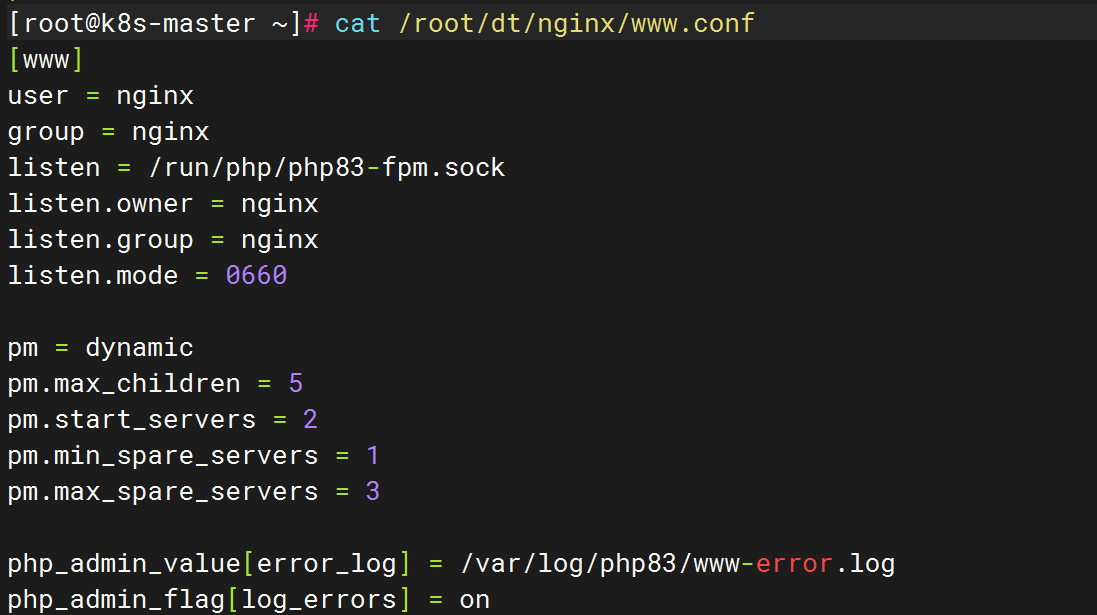

[root@k8s-master mysql]# ls

configmap.yaml init-scripts.yaml master.yaml namespacemysql.yaml secret.yaml slave.yaml

[root@k8s-master mysql]# kubectl apply -f namespacemysql.yaml

namespace/mysql created

[root@k8s-master mysql]# kubectl apply -f secret.yaml

secret/mysql-secrets created

[root@k8s-master mysql]# kubectl apply -f init-scripts.yaml

configmap/mysql-init-scripts created

[root@k8s-master mysql]# kubectl apply -f configmap.yaml

configmap/mysql-config created

[root@k8s-master mysql]# kubectl apply -f master.yaml

statefulset.apps/mysql-master created

service/mysql-master created

[root@k8s-master mysql]# kubectl apply -f slave.yaml

statefulset.apps/mysql-slave created

service/mysql-slave created

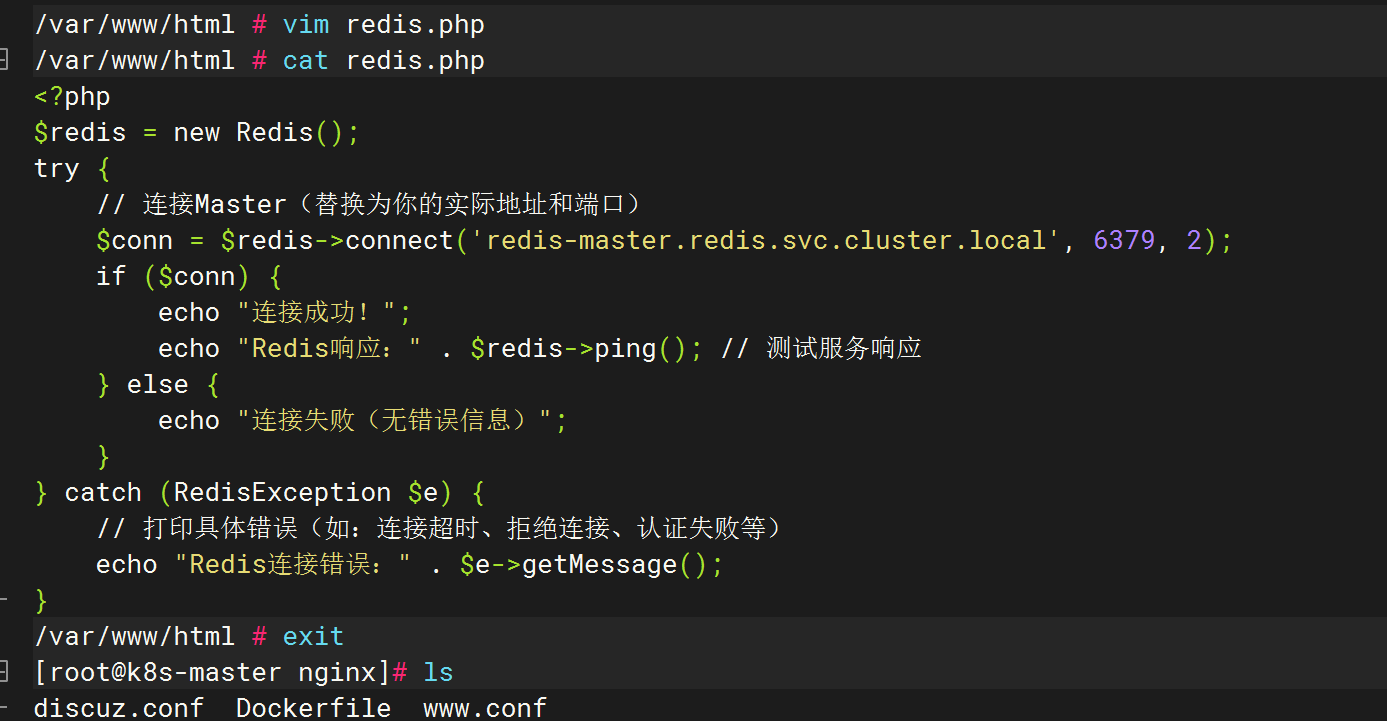
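Every value under a Secret's `data:` must be base64-encoded. `MTIzLmNvbQ==` is simply `123.com` encoded. Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password. Below is a small sketch for producing and checking such values with the `base64` command. The helper names are hypothetical:

```shell
#!/bin/sh
# Encode a plain-text value for a Secret's data: field.
# printf '%s' avoids the trailing newline that a plain `echo` would add
# (a stray newline would silently become part of the password).
secret_encode() {
    printf '%s' "$1" | base64
}

# Decode a value copied from a Secret manifest.
secret_decode() {
    printf '%s' "$1" | base64 -d
}

secret_encode '123.com'        # -> MTIzLmNvbQ==
secret_decode 'MTIzLmNvbQ=='   # -> 123.com
```

Alternatively, a Secret's `stringData:` field accepts plain text, and Kubernetes encodes it on write.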

构建自定义镜像

luo-nginx:latest

配置文件

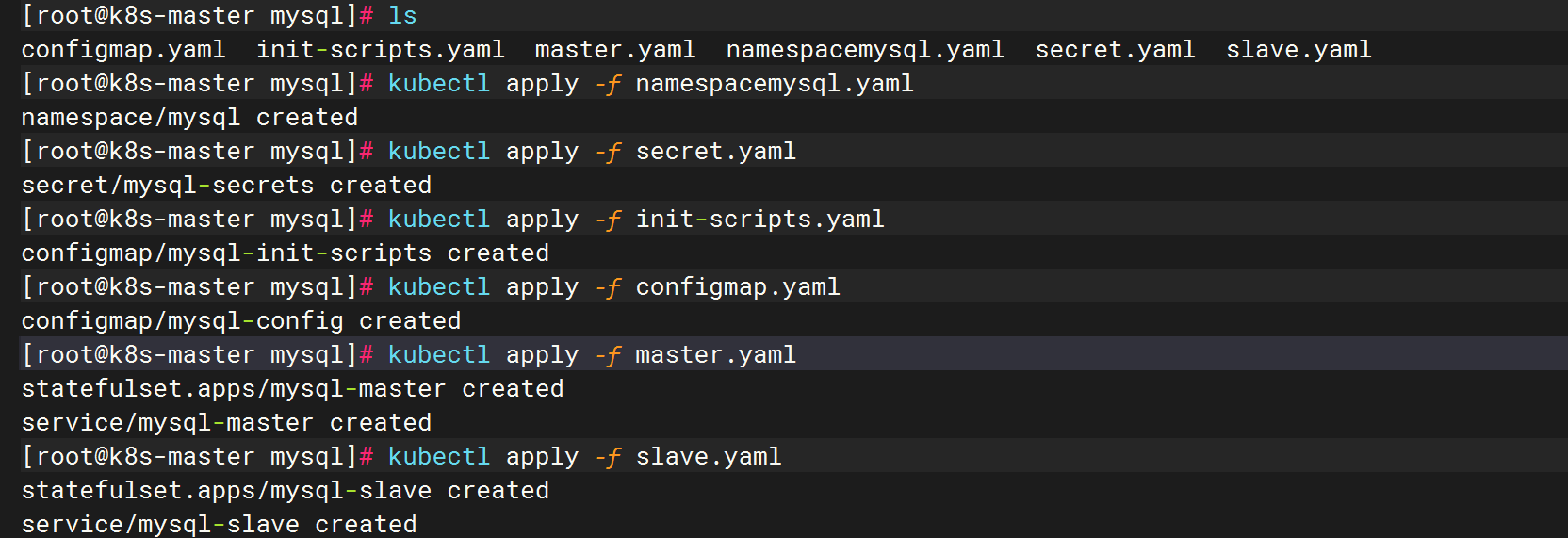

nginx的配置文件

[root@k8s-master nginx]# ls

discuz.conf Dockerfile www.conf

[root@k8s-master ~]# cat /root/dt/nginx/discuz.conf

server {
    listen 80;
    server_name localhost;
    root /var/www/html;
    index index.php index.html index.htm;

    access_log /var/log/nginx/discuz_access.log;
    error_log /var/log/nginx/discuz_error.log;

    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    location ~ \.php$ {
        fastcgi_pass unix:/run/php/php83-fpm.sock;
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        include fastcgi_params;
    }

    location ~ /\.ht {
        deny all;
    }
}

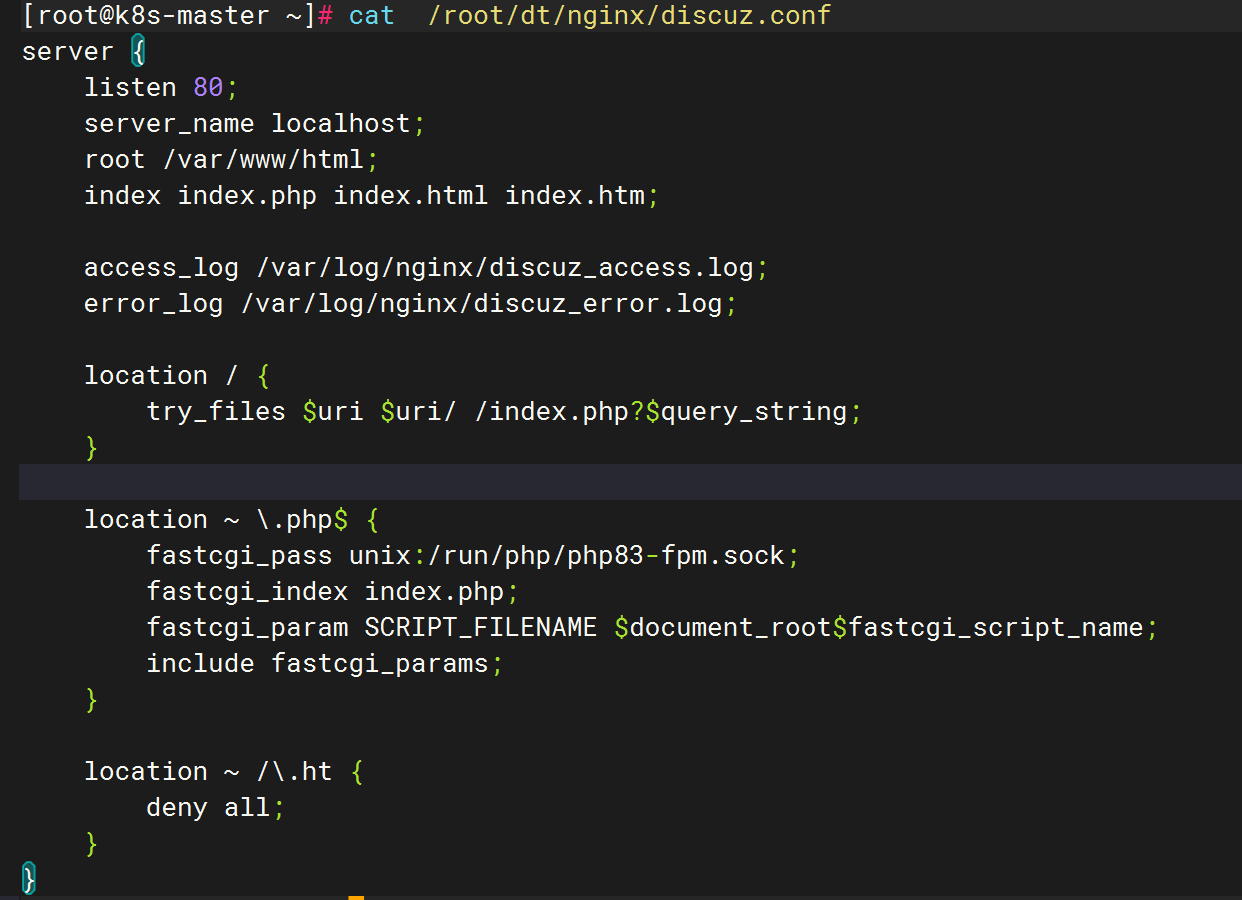

php-fpm configuration file

[root@k8s-master ~]# cat /root/dt/nginx/www.conf

[www]

user = nginx

group = nginx

listen = /run/php/php83-fpm.sock

listen.owner = nginx

listen.group = nginx

listen.mode = 0660

pm = dynamic

pm.max_children = 5

pm.start_servers = 2

pm.min_spare_servers = 1

pm.max_spare_servers = 3

php_admin_value[error_log] = /var/log/php83/www-error.log

php_admin_flag[log_errors] = on

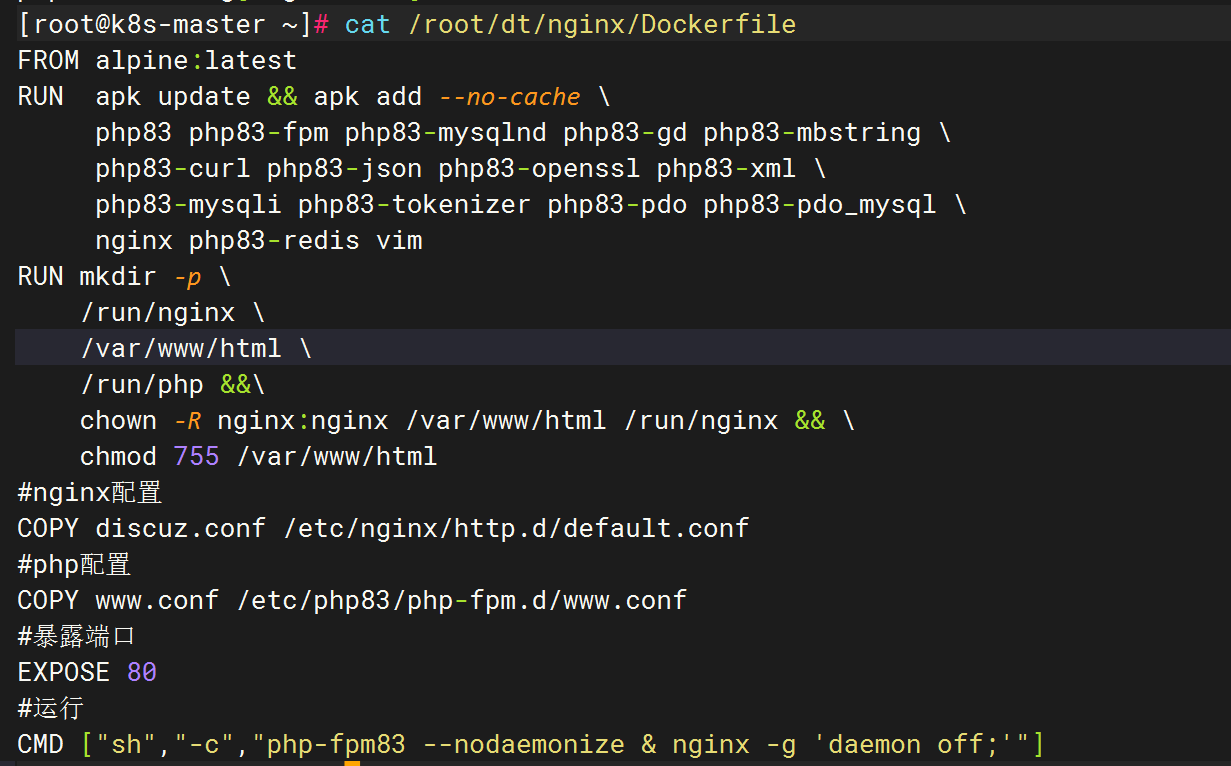
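nginx's `fastcgi_pass unix:/run/php/php83-fpm.sock` and php-fpm's `listen = /run/php/php83-fpm.sock` must name the same socket. Otherwise nginx cannot reach PHP and answers with 502 Bad Gateway. Below is a sketch of a consistency check over the two files. It assumes each file contains one such directive, and `check_fpm_socket` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical check: the socket in nginx's fastcgi_pass must be the path
# that the php-fpm pool listens on.
# Usage: check_fpm_socket <nginx-site.conf> <php-fpm-pool.conf>
check_fpm_socket() {
    # "fastcgi_pass unix:/run/php/php83-fpm.sock;" -> /run/php/php83-fpm.sock
    nginx_sock=$(sed -n 's/.*fastcgi_pass[[:space:]]*unix:\([^;]*\);.*/\1/p' "$1" | head -n 1)
    # "listen = /run/php/php83-fpm.sock" -> /run/php/php83-fpm.sock
    # (listen.owner / listen.group lines do not match because of the "=" right after "listen")
    fpm_sock=$(sed -n 's/^listen[[:space:]]*=[[:space:]]*\(.*\)$/\1/p' "$2" | head -n 1)
    if [ -n "$nginx_sock" ] && [ "$nginx_sock" = "$fpm_sock" ]; then
        echo "OK: $nginx_sock"
    else
        echo "MISMATCH: nginx='$nginx_sock' php-fpm='$fpm_sock'" >&2
        return 1
    fi
}

# Example with the files from this article:
# check_fpm_socket /root/dt/nginx/discuz.conf /root/dt/nginx/www.conf
```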

Dockerfile

[root@k8s-master ~]# cat /root/dt/nginx/Dockerfile

FROM alpine:latest

RUN apk update && apk add --no-cache \
    php83 php83-fpm php83-mysqlnd php83-gd php83-mbstring \
    php83-curl php83-json php83-openssl php83-xml \
    php83-mysqli php83-tokenizer php83-pdo php83-pdo_mysql \
    nginx php83-redis vim

RUN mkdir -p \
    /run/nginx \
    /var/www/html \
    /run/php && \
    chown -R nginx:nginx /var/www/html /run/nginx && \
    chmod 755 /var/www/html

# nginx configuration

COPY discuz.conf /etc/nginx/http.d/default.conf

# PHP-FPM configuration

COPY www.conf /etc/php83/php-fpm.d/www.conf

# expose the port

EXPOSE 80

# run php-fpm in the background and nginx in the foreground

CMD ["sh","-c","php-fpm83 --nodaemonize & nginx -g 'daemon off;'"]

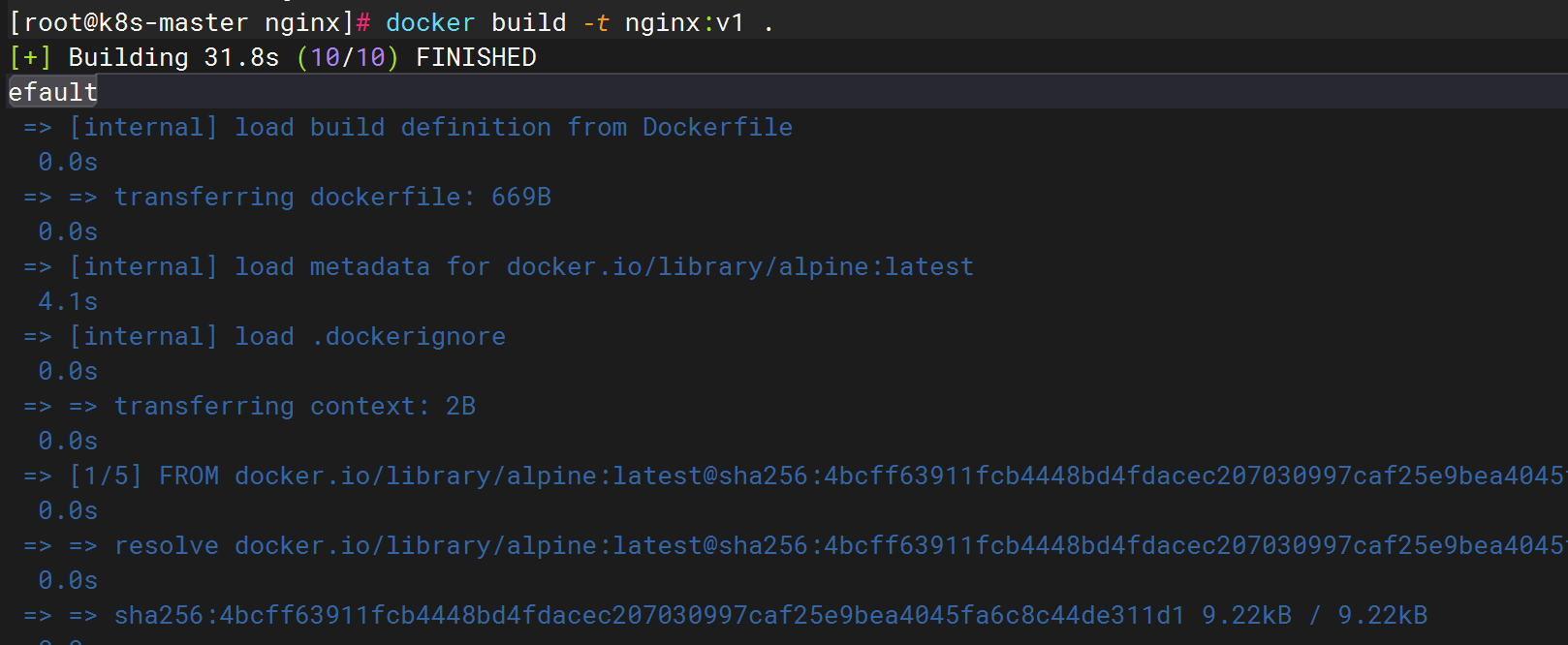

Build the image

[root@k8s-master nginx]# docker build -t nginx:v1 .

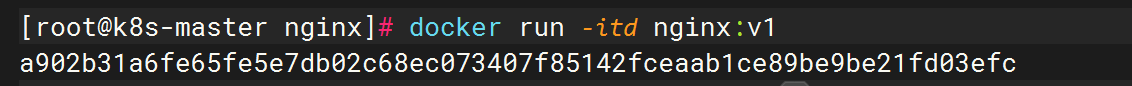

Run a container from the nginx:v1 image

Start the container

[root@k8s-master nginx]# docker run -itd nginx:v1

a902b31a6fe65fe5e7db02c68ec073407f85142fceaab1ce89be9be21fd03efc

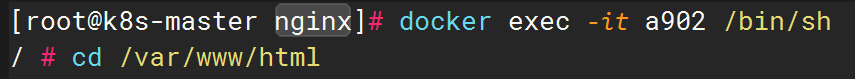

Enter the container

[root@k8s-master nginx]# docker exec -it a902 /bin/sh

/ # cd /var/www/html

Write test files

index.html info.php mysql.php redis.php

/var/www/html # vim index.html

/var/www/html # cat index.html

nginx

/var/www/html # vim info.php

/var/www/html # cat info.php

<?php
phpinfo();

?>

/var/www/html # vim mysql.php

/var/www/html # cat mysql.php

<?php

error_reporting(E_ALL);

ini_set('display_errors', 1);

$host = 'mysql-master-0.mysql-master.mysql.svc.cluster.local'; // database host

$user = 'discuz'; // MySQL user name

$pass = '123.com'; // MySQL user password

$dbname = 'discuz'; // database to connect to

// Try to connect to MySQL
$conn = new mysqli($host, $user, $pass, $dbname);

// Check for a connection error
if ($conn->connect_error) {
    // On failure, stop the script and print the error
    die('Connection failed: ' . $conn->connect_error);
}

// On success, print the database server version
echo "MySQL connection succeeded! Server version: " . $conn->server_info;

?>

/var/www/html # vim redis.php

/var/www/html # cat redis.php

<?php

$redis = new Redis();

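try {
    // Connect to the master (replace with your actual address and port)
    $conn = $redis->connect('redis-master.redis.svc.cluster.local', 6379, 2);
    if ($conn) {
        echo "Connected! ";
        echo "Redis response: " . $redis->ping(); // check that the server responds
    } else {
        echo "Connection failed (no error message)";
    }
} catch (RedisException $e) {
    // Print the specific error (e.g. timeout, connection refused, authentication failure)
    echo "Redis connection error: " . $e->getMessage();
}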

/var/www/html # exit

[root@k8s-master nginx]# ls

discuz.conf Dockerfile www.conf

Import the Discuz files

Create a directory

For convenience, create a discuz directory under /root/dt/nginx/ to hold the Discuz package.

[root@k8s-master nginx]# ls

discuz.conf Dockerfile www.conf

[root@k8s-master nginx]# mkdir discuz

[root@k8s-master nginx]# ls

discuz discuz.conf Dockerfile www.conf

[root@k8s-master nginx]# cd discuz/

[root@k8s-master discuz]# ls

[root@k8s-master discuz]# rz

rz waiting to receive.

[root@k8s-master discuz]# ls

Discuz_X3.5_SC_UTF8_20250205.zip

[root@k8s-master discuz]# unzip Discuz_X3.5_SC_UTF8_20250205.zip

Upload the package to the discuz directory and unzip it.

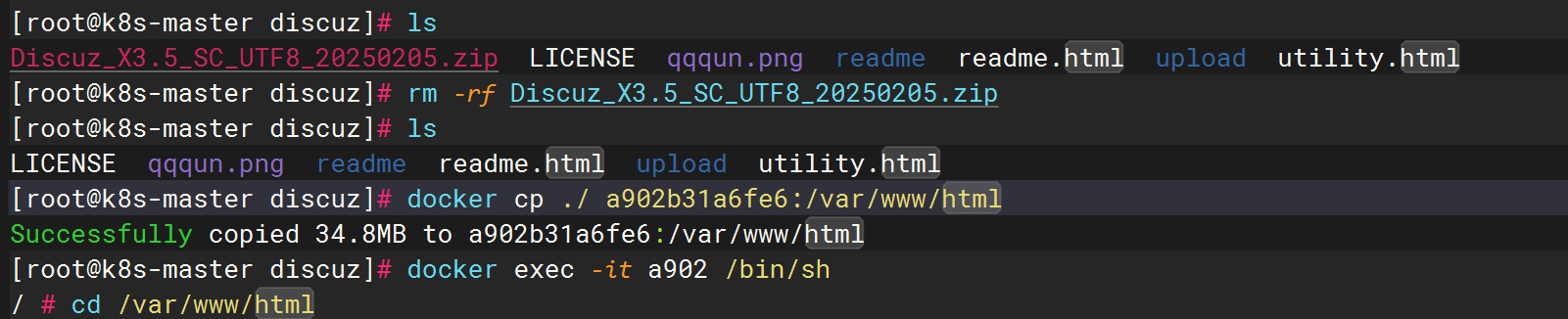

Copy the files into the container

Delete the Discuz zip file, then copy everything in the discuz directory into the container started earlier.

[root@k8s-master discuz]# ls

Discuz_X3.5_SC_UTF8_20250205.zip LICENSE qqqun.png readme readme.html upload utility.html

[root@k8s-master discuz]# rm -rf Discuz_X3.5_SC_UTF8_20250205.zip

[root@k8s-master discuz]# ls

LICENSE qqqun.png readme readme.html upload utility.html

[root@k8s-master discuz]# docker cp ./ a902b31a6fe6:/var/www/html

Successfully copied 34.8MB to a902b31a6fe6:/var/www/html

[root@k8s-master discuz]# docker exec -it a902 /bin/sh

/ # cd /var/www/html

Change file ownership

Change the owner and group of everything under html to nginx:nginx.

/var/www/html # ls

LICENSE info.php qqqun.png readme.html upload

index.html mysql.php readme redis.php utility.html

/var/www/html # cd ..

/var/www # chown -R nginx:nginx html/

/var/www # cd html

/var/www/html # ls -l

Modify the Discuz default configuration files

Modify config_global_default.php

/var/www/html/upload # cd config/

/var/www/html/upload/config # ls

config_global_default.php config_ucenter_default.php index.htm

/var/www/html/upload/config # vim config_global_default.php

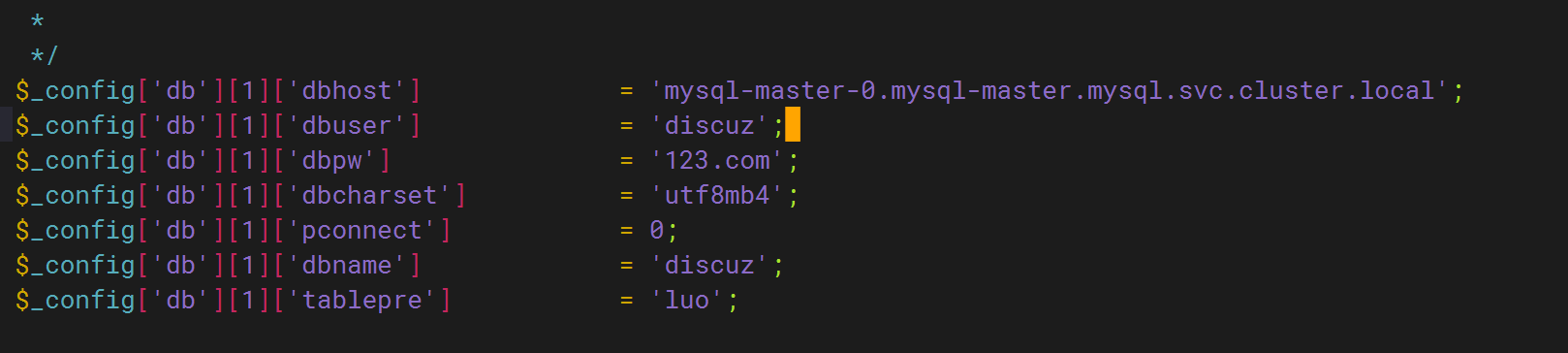

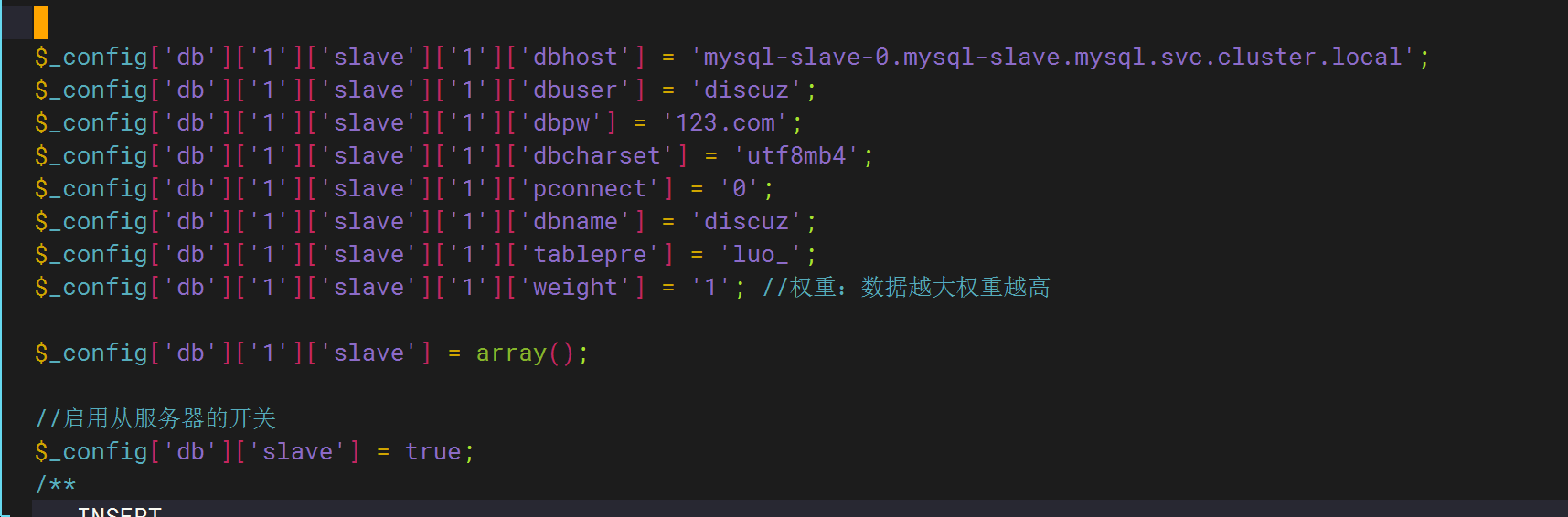

MySQL database settings

After the MySQL changes:

Master

Changed as follows:

Slave

Changed as follows:

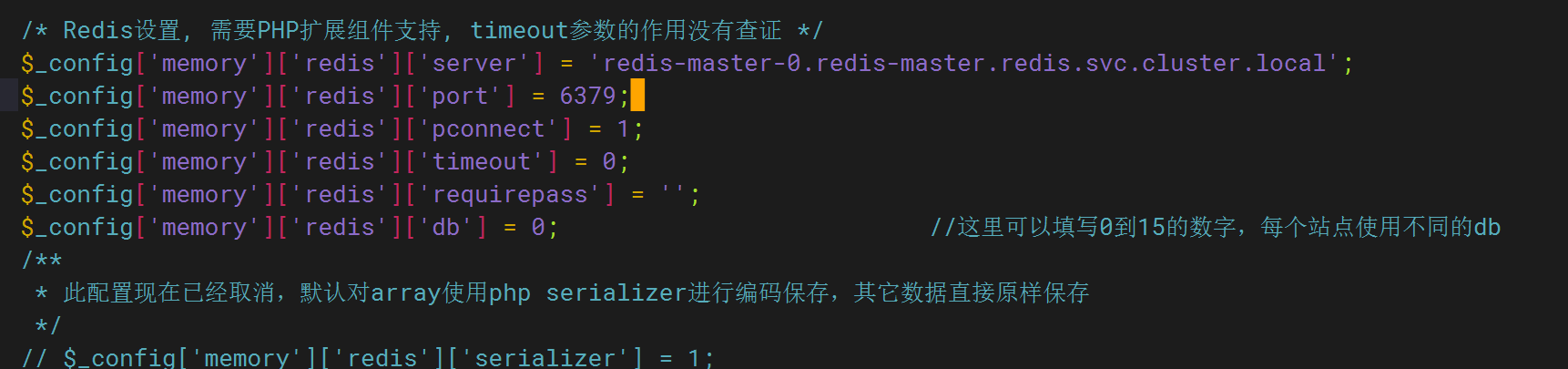

Redis settings

Changed as follows:

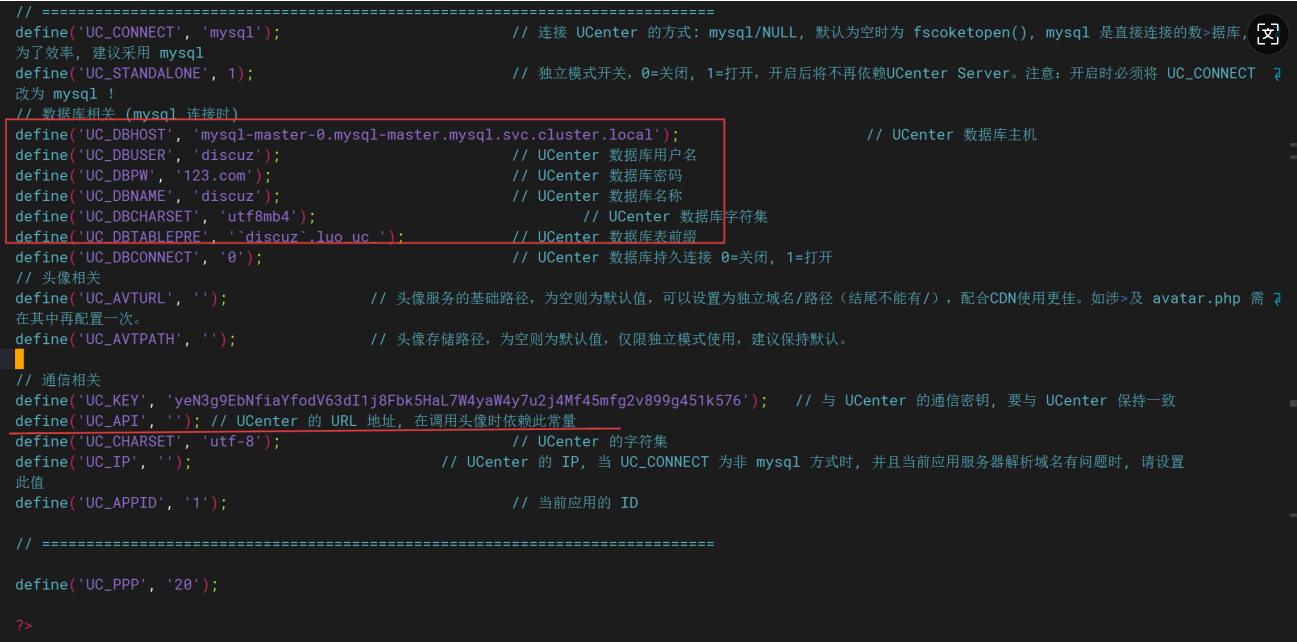
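The database settings above are edited by hand in vim. Below is a sketch that makes the same change for the master database (server `1`) with sed. It assumes the lines in config_global_default.php look like `$_config['db'][1]['dbhost'] = 'localhost';`. That exact quoting is an assumption, so check your copy first. The helper name `set_discuz_db` is hypothetical, the values are the ones mysql.php uses above, and the slave and Redis entries are not covered:

```shell
#!/bin/sh
# Hypothetical helper: set one value of database server 1 in a Discuz config
# file non-interactively (GNU or BusyBox sed, for -i).
# ASSUMPTION: lines are shaped like  $_config['db'][1]['dbhost'] = 'localhost';
# Values must not contain '|', '&' or '\'.
# Usage: set_discuz_db <key> <value> <file>
set_discuz_db() {
    key=$1 value=$2 file=$3
    # Keep everything up to the opening quote, replace the quoted value.
    sed -i "s|^\([\$]_config\['db']\[1]\['$key'][[:space:]]*=[[:space:]]*'\)[^']*'|\1$value'|" "$file"
}

# Values used in this article (master database):
# f=/var/www/html/upload/config/config_global_default.php
# set_discuz_db dbhost mysql-master-0.mysql-master.mysql.svc.cluster.local "$f"
# set_discuz_db dbuser discuz "$f"
# set_discuz_db dbpw   123.com "$f"
# set_discuz_db dbname discuz "$f"
```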

Modify config_ucenter_default.php

Commit the container to an image

[root@k8s-master nginx]# docker commit a902b31a6fe6 luo-nginx:latest

sha256:a4bf8e59acf9a819bb4a2ea875eb1ba6e11fc2d868213d076322b10340f294a0

Archive the image as a tar file

[root@k8s-master nginx]# docker save -o luo-nginx.tar luo-nginx:latest

[root@k8s-master nginx]# ls

discuz discuz.conf Dockerfile luo-nginx.tar www.conf

Copy the image to the worker nodes

[root@k8s-master nginx]# scp luo-nginx.tar 192.168.158.34:/root/

Authorized users only. All activities may be monitored and reported.

luo-nginx.tar 100% 123MB 330.6MB/s 00:00

[root@k8s-master nginx]# scp luo-nginx.tar 192.168.158.35:/root/

Authorized users only. All activities may be monitored and reported.

luo-nginx.tar 100% 123MB 344.9MB/s 00:00
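Copying the archive is only half of the step. Each node must also run `docker load` on it before a Pod can use `luo-nginx:latest` with `imagePullPolicy: IfNotPresent`. Below is a sketch that does both for every node. It assumes root SSH access to the node IPs used above and Docker as the container runtime on the nodes, as in this article. `distribute_image` and `run` are hypothetical helpers; with `DRY_RUN=1` the commands are only printed:

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: copy an image archive to each node and load it there.
# ASSUMPTION: passwordless SSH as root and Docker on every node.
# Usage: distribute_image <archive.tar> <node-ip>...
distribute_image() {
    tar=$1
    shift
    for node in "$@"; do
        run scp "$tar" "root@$node:/root/"
        run ssh "root@$node" docker load -i "/root/$(basename "$tar")"
    done
}

# Example with the node IPs used in this article:
# distribute_image luo-nginx.tar 192.168.158.34 192.168.158.35
```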

Deploy Discuz

Write the Discuz manifest

[root@k8s-master nginx]# cat discuz.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: discuz
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dep-discuz
  namespace: discuz
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-discuz
  template:
    metadata:
      labels:
        app: web-discuz
    spec:
      containers:
      - name: nginx
        image: luo-nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        livenessProbe:
          httpGet:
            path: /info.php
            port: 80
          initialDelaySeconds: 10
          periodSeconds: 5
          failureThreshold: 5
---
apiVersion: v1
kind: Service
metadata:
  name: svc-discuz
  namespace: discuz
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30722
  selector:
    app: web-discuz
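The Service pins `nodePort: 30722`, and the Tomcat Service later uses `30808`. An explicitly chosen nodePort must lie inside the API server's `--service-node-port-range`, which defaults to 30000-32767. Otherwise the Service is rejected. Below is a tiny sketch of that check. It assumes the default range, and `valid_nodeport` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a port lies in the node-port range.
# ASSUMPTION: default kube-apiserver --service-node-port-range (30000-32767);
# pass other bounds as $2/$3 if your cluster uses a custom range.
# Usage: valid_nodeport <port> [min] [max]
valid_nodeport() {
    port=$1 min=${2:-30000} max=${3:-32767}
    case $port in
        ''|*[!0-9]*) return 1 ;;   # empty or not a plain number
    esac
    [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

valid_nodeport 30722 && echo "30722 ok"   # Discuz
valid_nodeport 30808 && echo "30808 ok"   # Tomcat mall
```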

Apply the manifest

[root@k8s-master nginx]# vim discuz.yaml

[root@k8s-master nginx]# kubectl apply -f discuz.yaml

namespace/discuz unchanged

deployment.apps/dep-discuz unchanged

service/svc-discuz created

[root@k8s-master nginx]# kubectl -n discuz get po

NAME READY STATUS RESTARTS AGE

dep-discuz-56d977c44b-4p8gn 0/1 ImagePullBackOff 0 108s

[root@k8s-master nginx]# kubectl -n discuz get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dep-discuz-56d977c44b-4p8gn 0/1 ImagePullBackOff 0 2m1s 10.244.169.175 k8s-node2 <none> <none>

[root@k8s-master nginx]# kubectl -n discuz get svc -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

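svc-discuz NodePort 10.100.47.183 <none> 80:30722/TCP 51s app=web-discuz

Note: the ImagePullBackOff status above means the kubelet on k8s-node2 did not find luo-nginx:latest locally and could not pull it from a registry. Load the copied archive on every worker node with docker load -i luo-nginx.tar, the same step shown below for tomcat-web.tar. Because imagePullPolicy is IfNotPresent, the Pod then starts from the local image.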

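Access

Problem

If this page appears, clearing the Redis cache fixes it, for example with kubectl -n redis exec -it redis-master-0 -- redis-cli FLUSHALL.

Access successful!

Deploy the Tomcat mall

Custom image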

[root@k8s-master dt]# cd tomcat/

[root@k8s-master tomcat]# pwd

/root/dt/tomcat

[root@k8s-master tomcat]# mkdir conf.d

[root@k8s-master tomcat]# mkdir logs

[root@k8s-master tomcat]# mkdir shangcheng


Pull the image

docker pull tomcat:8

Put the WAR file into the shangcheng directory

[root@k8s-master tomcat]# cd shangcheng/

[root@k8s-master shangcheng]# ls

[root@k8s-master shangcheng]# rz

rz waiting to receive.

[root@k8s-master shangcheng]# ls

biyesheji.war

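Create a test container

Run a test container to unpack the WAR file

Mount the host directory over the container's webapps directory. Tomcat unpacks the WAR file there automatically, so the extracted files appear on the host.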

[root@k8s-master shangcheng]# docker run -itd --name tomcat -v /root/dt/tomcat/shangcheng/:/usr/local/tomcat/webapps/ --restart=always tomcat:8

1adaadb8d33f77f4ca31bfb438471a6328c35ec4105aecdd88a71330896bca5c
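Tomcat unpacks the WAR only after it has started, so the biyesheji directory may not exist yet when `docker run -d` returns. Below is a sketch that polls the mounted host directory until `biyesheji/WEB-INF` appears. It assumes a 60-second timeout is enough, and `wait_for_unpack` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: wait until <webapps>/<app>/WEB-INF exists, polling once
# per second, or fail after <timeout> seconds.
# Usage: wait_for_unpack <webapps-dir> <app-name> [timeout-seconds]
wait_for_unpack() {
    dir=$1/$2/WEB-INF
    timeout=${3:-60}   # assumption: 60 s is enough for a small WAR
    i=0
    while [ ! -d "$dir" ]; do
        if [ "$i" -ge "$timeout" ]; then
            echo "timed out waiting for $dir" >&2
            return 1
        fi
        sleep 1
        i=$((i + 1))
    done
    echo "unpacked: $1/$2"
}

# In this article: wait_for_unpack /root/dt/tomcat/shangcheng biyesheji 60
```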

Copy biyesheji.sql to the MySQL manifest directory

[root@k8s-master shangcheng]# ls

biyesheji biyesheji.war

[root@k8s-master shangcheng]# cd biyesheji/

[root@k8s-master biyesheji]# ls

注意事项 assets biyesheji.sql css favicon.ico images images.zip index.jsp js lib META-INF static WEB-INF

[root@k8s-master biyesheji]# cp biyesheji.sql /root/dt/mysql/

[root@k8s-master biyesheji]# ls

注意事项 assets biyesheji.sql css favicon.ico images images.zip index.jsp js lib META-INF static WEB-INF

[root@k8s-master dt]# ls

mysql nginx redis tomcat

[root@k8s-master dt]# cd mysql/

[root@k8s-master mysql]# ls

biyesheji.sql configmap.yaml init-scripts.yaml master.yaml namespacemysql.yaml secret.yaml slave.yaml

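Inject the SQL statements

Paste the contents of biyesheji.sql into configmap.yaml as a new key, biyesheji.sql, under data: (master.yaml below mounts it from the mysql-config ConfigMap by that key).

Changes

At the top of that SQL, add the following two statements. The MySQL image runs the files in /docker-entrypoint-initdb.d in alphabetical order, so biyesheji.sql runs before master-init.sql and must create the database itself:

[root@k8s-master mysql]# vim configmap.yaml

-- 1. Create the biyesheji database first (so the following statements do not fail because it is missing)
CREATE DATABASE IF NOT EXISTS biyesheji;
-- 2. Switch to biyesheji (all tables are created in this database)
USE biyesheji;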
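Pasting a large SQL dump into configmap.yaml by hand is error-prone, because every line has to be indented under the `biyesheji.sql: |` key. Below is a sketch that prints the entry with sed doing the indentation. It assumes the existing keys under `data:` are indented by two spaces (as with `master.cnf`) and that the two statements above are already at the top of biyesheji.sql. `sql_to_configmap_entry` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print a ConfigMap data entry whose value is a whole
# SQL file as a YAML literal block ("key: |").
# ASSUMPTION: keys under data: are indented by two spaces.
# Usage: sql_to_configmap_entry <file.sql> [key] >> configmap.yaml
sql_to_configmap_entry() {
    file=$1
    key=${2:-$(basename "$file")}
    printf '  %s: |\n' "$key"
    # Indent every non-empty line by four spaces; empty lines stay empty.
    sed 's/^\(.\)/    \1/' "$file"
}
```

For example, `sql_to_configmap_entry biyesheji.sql >> configmap.yaml` appends the key, provided `data:` is the last block in the file, as it is in configmap.yaml. A ConfigMap is limited to 1 MiB, so very large dumps need another delivery method.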

MySQL master configuration

After the change:

[root@k8s-master mysql]# cat master.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:
  name: mysql-master
  namespace: mysql
spec:
  serviceName: mysql-master
  replicas: 1
  selector:
    matchLabels:
      app: mysql-master
  template:
    metadata:
      labels:
        app: mysql-master
    spec:
      containers:
      - name: mysql
        image: mysql:8.0
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secrets
              key: root-password
        volumeMounts:
        - name: mysql-config
          mountPath: /etc/mysql/conf.d/master.cnf
          subPath: master.cnf
        - name: master-init-script          # mount only the master script
          mountPath: /docker-entrypoint-initdb.d/master-init.sql
          subPath: master-init.sql          # explicit script file name
        - name: biyesheji-sql
          mountPath: /docker-entrypoint-initdb.d/biyesheji.sql
          subPath: biyesheji.sql
      volumes:
      - name: mysql-config
        configMap:
          name: mysql-config
          items:
          - key: master.cnf
            path: master.cnf
      - name: master-init-script            # the master script from the ConfigMap
        configMap:
          name: mysql-init-scripts
          items:
          - key: master-init.sql
            path: master-init.sql
      - name: biyesheji-sql
        configMap:
          name: mysql-config
          items:
          - key: biyesheji.sql
            path: biyesheji.sql

---

apiVersion: v1

kind: Service

metadata:
  name: mysql-master
  namespace: mysql
spec:
  ports:
  - port: 3306
    name: mysql
  clusterIP: None
  selector:
    app: mysql-master

Reference the ConfigMap

The following lines need to be added:

volumeMounts:
- name: biyesheji-sql
  mountPath: /docker-entrypoint-initdb.d/biyesheji.sql
  subPath: biyesheji.sql
volumes:
- name: biyesheji-sql
  configMap:
    name: mysql-config
    items:
    - key: biyesheji.sql
      path: biyesheji.sql

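Update the manifests

Then run the following. Deleting the namespace and re-creating everything gives the master a fresh, empty data directory, because no persistent volume is used yet. This matters because the scripts in /docker-entrypoint-initdb.d only run when MySQL starts with an empty data directory.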

[root@k8s-master mysql]# kubectl delete ns mysql

namespace "mysql" deleted

[root@k8s-master mysql]# ls

biyesheji.sql configmap.yaml init-scripts.yaml master.yaml namespacemysql.yaml secret.yaml slave.yaml

[root@k8s-master mysql]# kubectl apply -f namespacemysql.yaml

namespace/mysql created

[root@k8s-master mysql]# kubectl apply -f configmap.yaml

configmap/mysql-config created

[root@k8s-master mysql]# kubectl apply -f init-scripts.yaml

configmap/mysql-init-scripts created

[root@k8s-master mysql]# kubectl apply -f secret.yaml

secret/mysql-secrets created

[root@k8s-master mysql]# kubectl apply -f master.yaml

statefulset.apps/mysql-master created

service/mysql-master created

[root@k8s-master mysql]# kubectl apply -f slave.yaml

statefulset.apps/mysql-slave created

service/mysql-slave created

[root@k8s-master mysql]# kubectl -n mysql get po

NAME READY STATUS RESTARTS AGE

mysql-master-0 1/1 Running 0 20s

mysql-slave-0 1/1 Running 0 8s

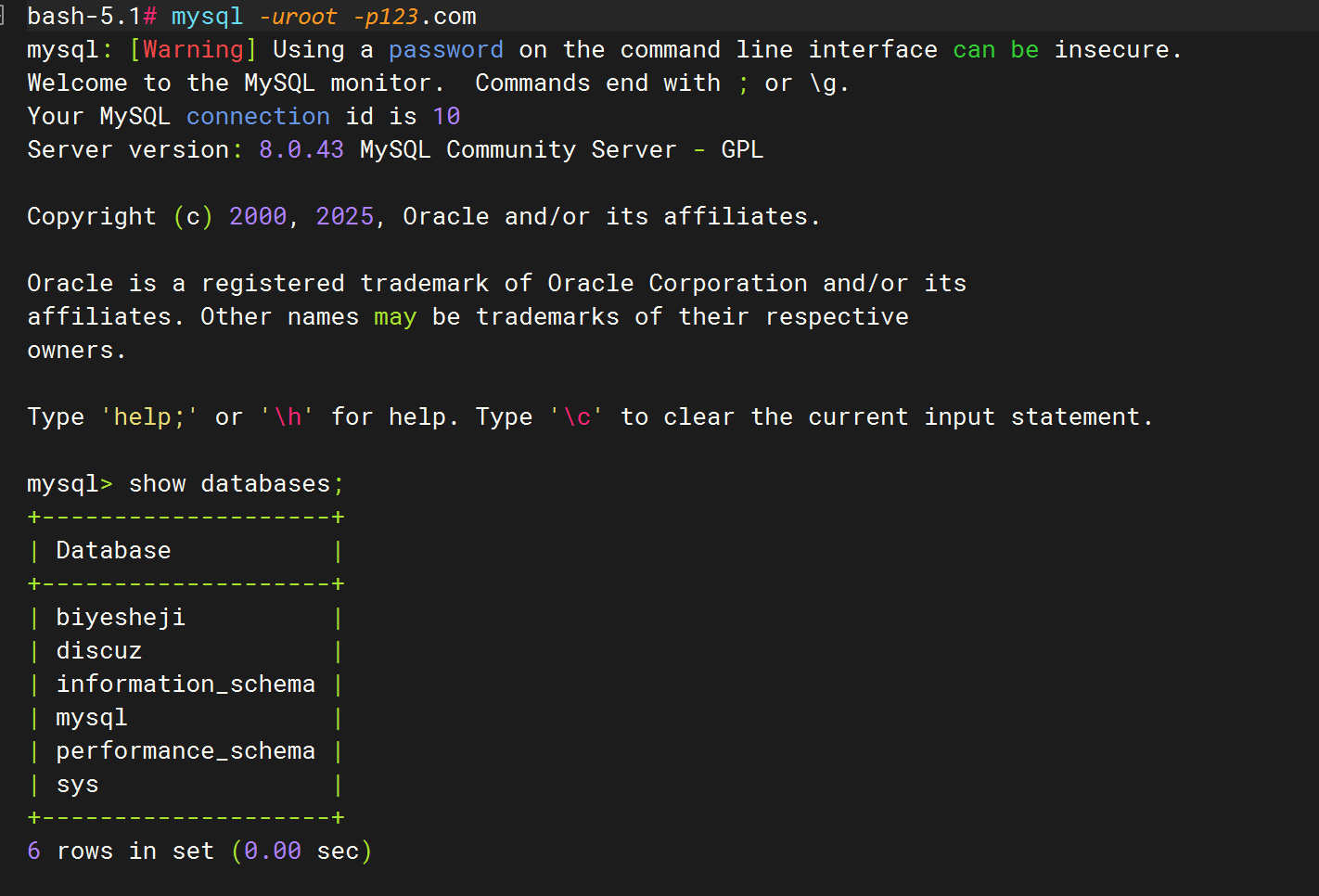

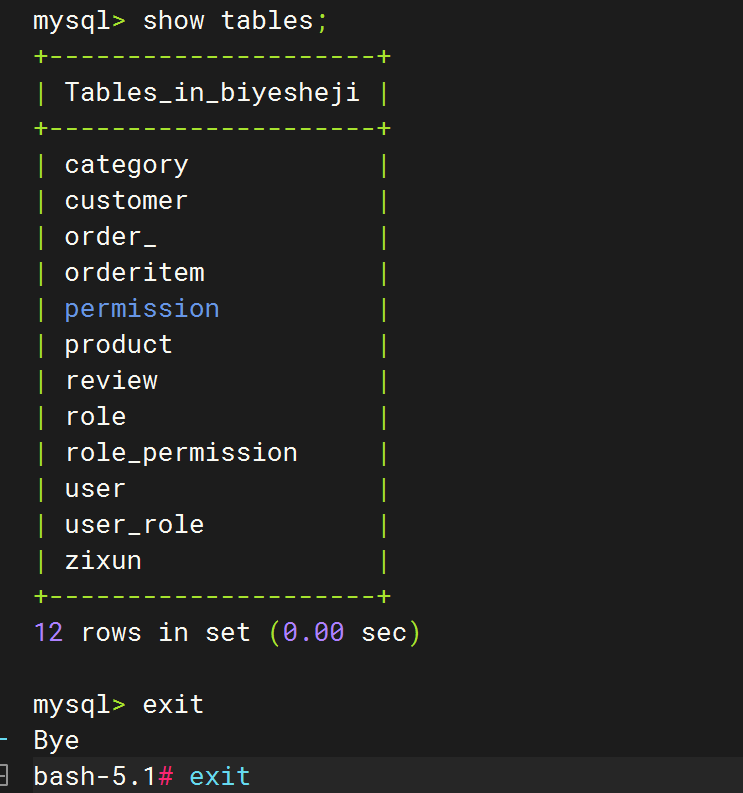

Verify

Check whether biyesheji.sql was loaded into the MySQL master

[root@k8s-master mysql]# kubectl -n mysql exec -it mysql-master-0 -- bash

bash-5.1# ls

afs boot docker-entrypoint-initdb.d etc lib media opt root sbin sys usr

bin dev entrypoint.sh home lib64 mnt proc run srv tmp var

bash-5.1# cd docker-entrypoint-initdb.d/

bash-5.1# ls   # biyesheji.sql should be listed here, which shows it was injected

biyesheji.sql master-init.sql

bash-5.1# mysql -uroot -p123.com

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 10

Server version: 8.0.43 MySQL Community Server - GPL

Copyright (c) 2000, 2025, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| biyesheji |

| discuz |

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

6 rows in set (0.00 sec)

mysql> use biyesheji;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------+

| Tables_in_biyesheji |

+---------------------+

| category |

| customer |

| order_ |

| orderitem |

| permission |

| product |

| review |

| role |

| role_permission |

| user |

| user_role |

| zixun |

+---------------------+

12 rows in set (0.00 sec)

mysql> exit

Bye

bash-5.1# exit

exit

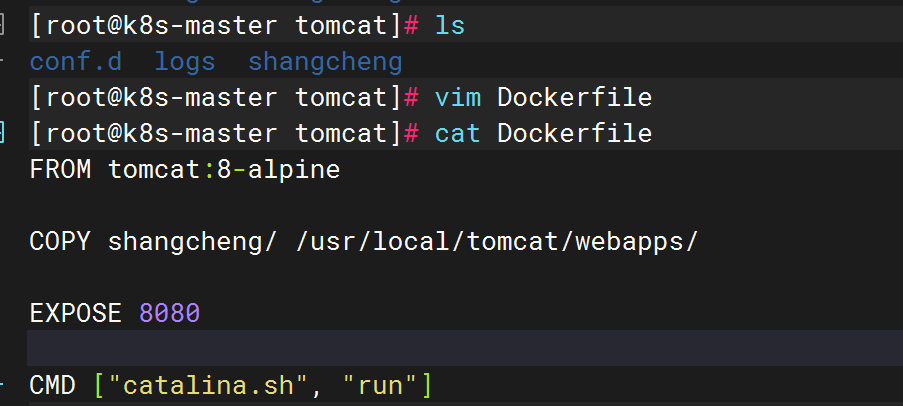

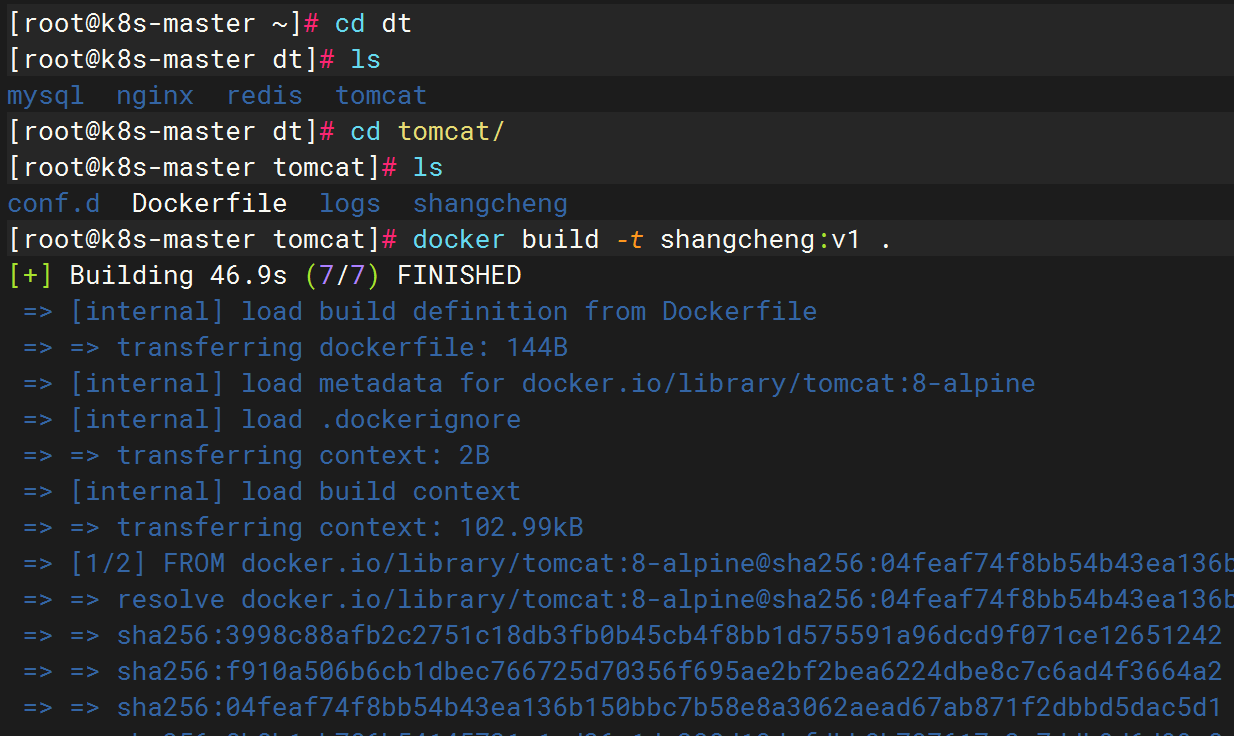

Create the mall image

Write the Dockerfile

Working directory: /root/dt/tomcat

[root@k8s-master tomcat]# ls

conf.d logs shangcheng

[root@k8s-master tomcat]# vim Dockerfile

[root@k8s-master tomcat]# cat Dockerfile

FROM tomcat:8-alpine
COPY shangcheng/ /usr/local/tomcat/webapps/
EXPOSE 8080
CMD ["catalina.sh", "run"]

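Build the image

[root@k8s-master tomcat]# docker build -t shangcheng:v1 .

Modify the mall's default configuration

Run a container from the image built above

Enter the container and modify two Tomcat application files: index.jsp and jdbc.properties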

[root@k8s-master tomcat]# docker images | grep shangcheng

shangcheng v1 bd0fb2a47268 52 minutes ago 181MB

[root@k8s-master tomcat]# docker run -itd --name tomcat123 -p 8080:8080 shangcheng:v1

3c91c57de745ada343bd9725a65897ad36f004f5dd55bb4a3104c2cd88a68fc9

[root@k8s-master tomcat]# docker exec -it 3c sh

/usr/local/tomcat # ls

BUILDING.txt NOTICE RUNNING.txt include native-jni-lib work

CONTRIBUTING.md README.md bin lib temp

LICENSE RELEASE-NOTES conf logs webapps

/usr/local/tomcat # cd webapps/

/usr/local/tomcat/webapps # ls

ROOT biyesheji biyesheji.war docs examples host-manager manager

/usr/local/tomcat/webapps # cd biyesheji/

/usr/local/tomcat/webapps/biyesheji # ls

META-INF assets css images index.jsp lib 注意事项

WEB-INF biyesheji.sql favicon.ico images.zip js static

/usr/local/tomcat/webapps/biyesheji # vim index.jsp

sh: vim: not found

/usr/local/tomcat/webapps/biyesheji # vi index.jsp

/usr/local/tomcat/webapps/biyesheji # cat index.jsp

<%--
  Created by IntelliJ IDEA.
  User: baiyuhong
  Date: 2018/10/21
  Time: 18:43
  To change this template use File | Settings | File Templates.
--%>
<%
    response.sendRedirect(request.getContextPath()+"/fore/foreIndex"); // on startup, redirect to the storefront home page (note the / added before fore)
%>

/usr/local/tomcat/webapps/biyesheji #

/usr/local/tomcat/webapps/biyesheji # cd WEB-INF/

/usr/local/tomcat/webapps/biyesheji/WEB-INF # ls

classes foreinclude include lib page web.xml

/usr/local/tomcat/webapps/biyesheji/WEB-INF # cd classes/

/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes # ls

com jdbc.properties mapper spring

generatorConfig.xml log4j.properties mybatis

/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes # vi jdbc.properties

/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes # vi jdbc.properties

/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes # vi jdbc.properties

/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes # cat jdbc.properties

jdbc.driver=com.mysql.cj.jdbc.Driver

jdbc.jdbcUrl=jdbc:mysql://mysql-master-0.mysql-master.mysql.svc.cluster.local:3306/biyesheji?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false&serverTimezone=GMT%2b8&allowPublicKeyRetrieval=true

jdbc.user=tomcat

jdbc.password=123.com

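Note: vim is not installed in this Alpine-based image, so use BusyBox vi. Press a to insert after the cursor or A to append at the end of the line. In normal mode, x deletes the character under the cursor.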
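Editing jdbc.properties in BusyBox vi works, but the same change can be made non-interactively, for example before `docker commit`. Below is a sketch that replaces a `key=value` line. It assumes the key already exists in the file, and `set_prop` is a hypothetical helper. The value is escaped because the JDBC URL contains `&`, which sed would otherwise expand to the matched text:

```shell
#!/bin/sh
# Hypothetical helper: set "key=value" in a Java .properties file.
# ASSUMPTION: the key is already present; keys must not contain '|' or '&'.
# Usage: set_prop <file> <key> <value>
set_prop() {
    file=$1 key=$2 value=$3
    # Escape regex metacharacters in the key (e.g. the dots in jdbc.user).
    key_re=$(printf '%s' "$key" | sed 's/[][\.*^$]/\\&/g')
    # Escape characters special in a sed replacement: &, the | delimiter, \.
    value_esc=$(printf '%s' "$value" | sed 's/[&|\\]/\\&/g')
    sed -i "s|^${key_re}=.*|${key}=${value_esc}|" "$file"
}

# Values from this article:
# f=/usr/local/tomcat/webapps/biyesheji/WEB-INF/classes/jdbc.properties
# set_prop "$f" jdbc.user tomcat
# set_prop "$f" jdbc.password 123.com
```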

Commit the container to an image

[root@k8s-master biyesheji]# docker commit 3c91c57de745 tomcat-web:v1

sha256:79eecf4dee57c2d23bc7a2e5fb5bdac65bd50139dbad93cda96ac302dd77154f

Archive the image as a tar file

[root@k8s-master biyesheji]# docker save -o tomcat-web.tar tomcat-web:v1

[root@k8s-master biyesheji]# ls

注意事项 assets biyesheji.sql css favicon.ico images images.zip index.jsp js lib META-INF static tomcat-web.tar WEB-INF

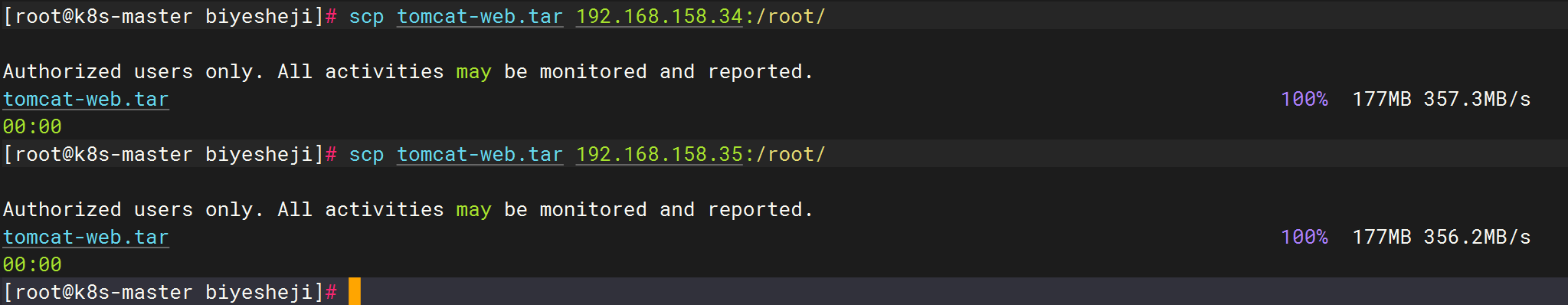

Copy the tar file to the worker nodes

[root@k8s-master biyesheji]# scp tomcat-web.tar 192.168.158.34:/root/

Authorized users only. All activities may be monitored and reported.

tomcat-web.tar 100% 177MB 357.3MB/s 00:00

[root@k8s-master biyesheji]# scp tomcat-web.tar 192.168.158.35:/root/

Authorized users only. All activities may be monitored and reported.

tomcat-web.tar 100% 177MB 356.2MB/s 00:00

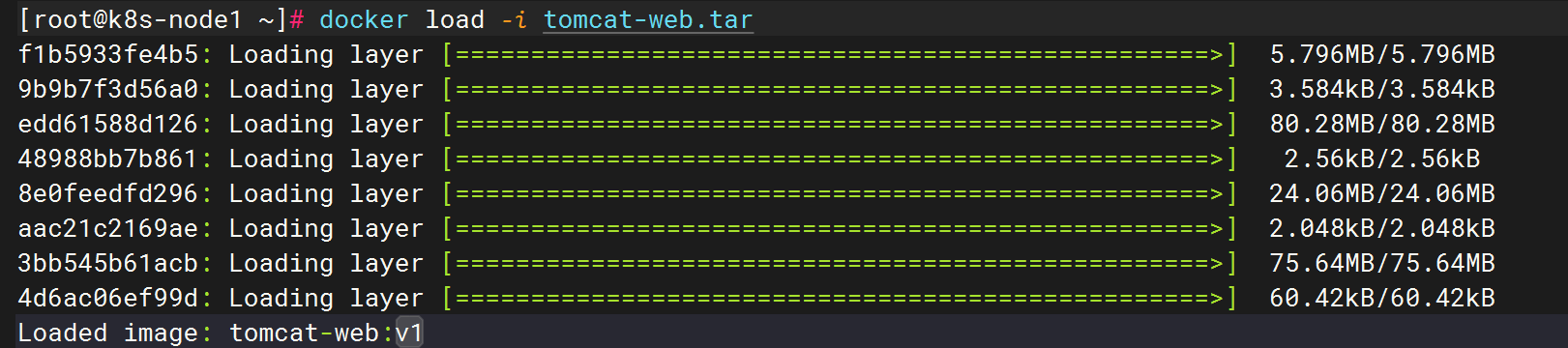

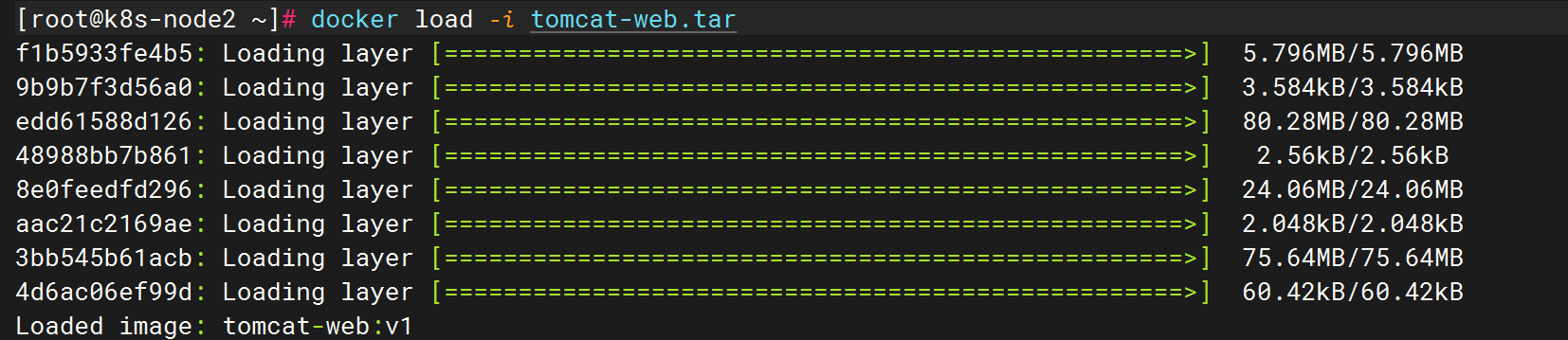

Worker nodes

Load the image from the tar file

[root@k8s-node1 ~]# docker load -i tomcat-web.tar

f1b5933fe4b5: Loading layer [==================================================>] 5.796MB/5.796MB

9b9b7f3d56a0: Loading layer [==================================================>] 3.584kB/3.584kB

edd61588d126: Loading layer [==================================================>] 80.28MB/80.28MB

48988bb7b861: Loading layer [==================================================>] 2.56kB/2.56kB

8e0feedfd296: Loading layer [==================================================>] 24.06MB/24.06MB

aac21c2169ae: Loading layer [==================================================>] 2.048kB/2.048kB

3bb545b61acb: Loading layer [==================================================>] 75.64MB/75.64MB

4d6ac06ef99d: Loading layer [==================================================>] 60.42kB/60.42kB

Loaded image: tomcat-web:v1

[root@k8s-node2 ~]# docker load -i tomcat-web.tar

f1b5933fe4b5: Loading layer [==================================================>] 5.796MB/5.796MB

9b9b7f3d56a0: Loading layer [==================================================>] 3.584kB/3.584kB

edd61588d126: Loading layer [==================================================>] 80.28MB/80.28MB

48988bb7b861: Loading layer [==================================================>] 2.56kB/2.56kB

8e0feedfd296: Loading layer [==================================================>] 24.06MB/24.06MB

aac21c2169ae: Loading layer [==================================================>] 2.048kB/2.048kB

3bb545b61acb: Loading layer [==================================================>] 75.64MB/75.64MB

4d6ac06ef99d: Loading layer [==================================================>] 60.42kB/60.42kB

Loaded image: tomcat-web:v1

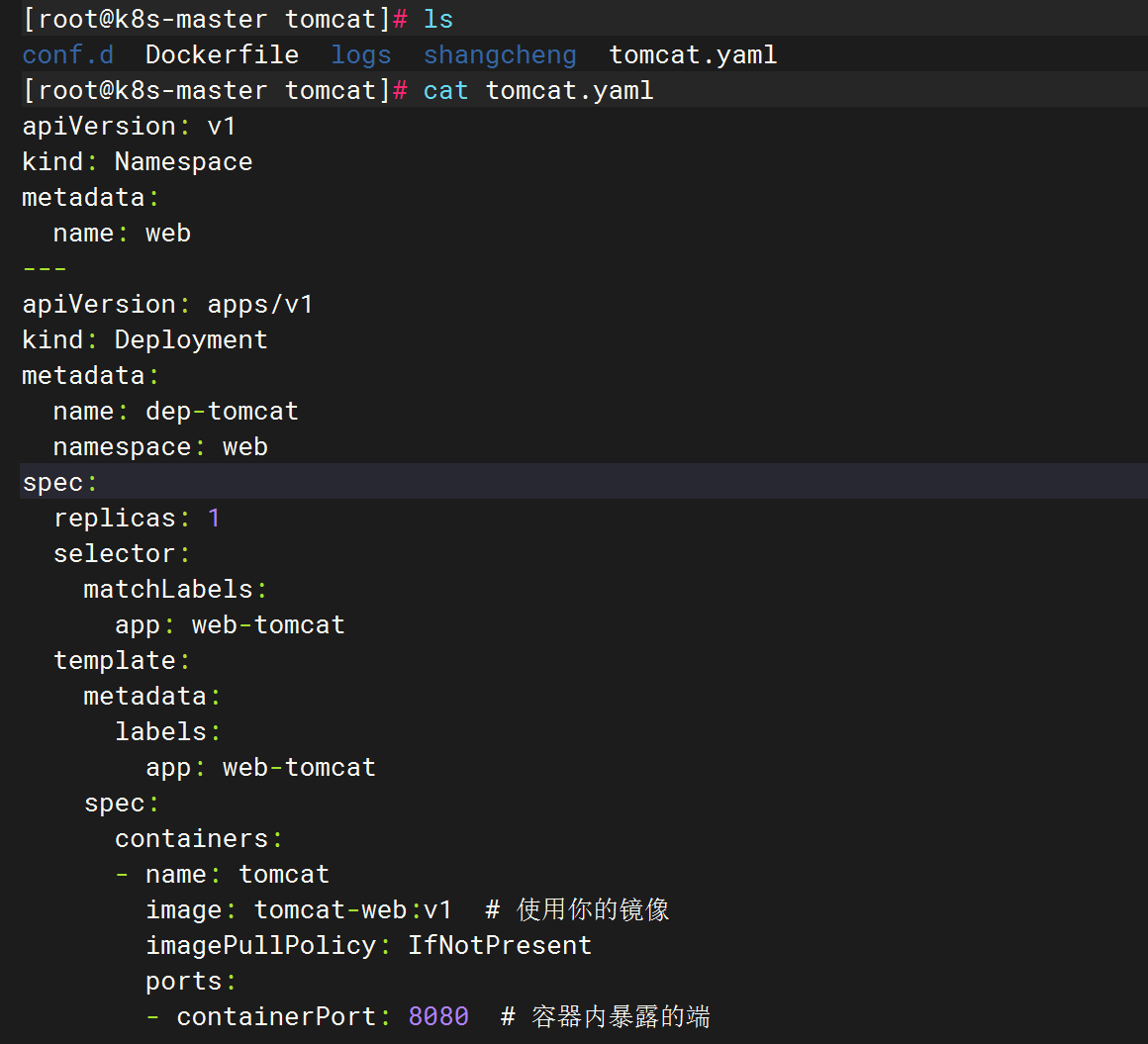

Deploy the Tomcat mall

Write the Tomcat YAML file

[root@k8s-master tomcat]# ls

conf.d Dockerfile logs shangcheng tomcat.yaml

[root@k8s-master tomcat]# cat tomcat.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dep-tomcat
  namespace: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-tomcat
  template:
    metadata:
      labels:
        app: web-tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat-web:v1          # the image built above
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080         # port exposed inside the container
---
apiVersion: v1
kind: Service
metadata:
  name: svc-tomcat
  namespace: web                      # namespace web
spec:
  type: NodePort
  ports:
  - port: 8080                        # in-cluster port
    targetPort: 8080                  # container port
    nodePort: 30808                   # port exposed on every node
  selector:
    app: web-tomcat                   # matches the Deployment's Pod label

Apply the Tomcat manifest

[root@k8s-master tomcat]# kubectl apply -f tomcat.yaml

namespace/web created

deployment.apps/dep-tomcat created

service/svc-tomcat created

Access

The shop is reachable at:

192.168.158.33:30808/biyesheji/fore/foreIndex
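Before opening the URL above, it can help to confirm that the nodePort lies inside the range the API server accepts. This is a minimal sketch that assumes the default `--service-node-port-range` of 30000-32767. `valid_nodeport` and `shop_url` are hypothetical helper names.

```sh
# Return success if $1 is a decimal port inside the default NodePort range.
valid_nodeport() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Print the shop URL for a node IP and nodePort (defaults from this walkthrough).
shop_url() {
  local node_ip=${1:-192.168.158.33} port=${2:-30808}
  valid_nodeport "$port" || { echo "nodePort $port is out of range" >&2; return 1; }
  printf 'http://%s:%s/biyesheji/fore/foreIndex\n' "$node_ip" "$port"
}
```

With the shop running, `curl -s -o /dev/null -w '%{http_code}\n' "$(shop_url)"` should print 200.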

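II. Deploying the discuz forum and the tomcat shop with domain-name access

Environment preparation

Copy the ingress-1.11.tar image archive to every node.

Copy ingress-nginx-controller-v1.11.3.zip (the manifest files) to the master node.

Load the image on each node: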

[root@k8s-node1 ~]# docker load -i ingress-1.11.tar

[root@k8s-node2 ~]# docker load -i ingress-1.11.tar

On the master node, unpack the ingress package:

[root@k8s-master ~]# rz

rz waiting to receive.

[root@k8s-master ~]# unzip ingress-nginx-controller-v1.11.3.zip

Archive: ingress-nginx-controller-v1.11.3.zip

Edit the ingress manifest

# manifest path: /root/ingress-nginx-controller-v1.11.3/deploy/static/provider/cloud/

[root@k8s-master cloud]# ls

deploy.yaml kustomization.yaml

# edit the file: in the three image references, delete everything from the @ to the end of the line (the @sha256 digest)
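The same edit can be scripted instead of done in vim. The sketch assumes that each affected line has the form `image: <name>:<tag>@sha256:<64 hex digits>`. `strip_image_digests` is a hypothetical helper. Dropping the digest presumably lets the kubelet reuse the locally loaded ingress-1.11 images instead of pulling by digest.

```sh
# Remove "@sha256:<64 hex digits>" from image references in a manifest.
# The untouched original is kept next to it as <file>.bak.
strip_image_digests() {
  local file=${1:-deploy.yaml}
  cp "$file" "$file.bak" || return 1
  # -E: extended regex; \1 keeps "image: name:tag" and drops the digest
  sed -E 's/(image: [^@[:space:]]+)@sha256:[0-9a-f]{64}/\1/' "$file.bak" > "$file"
}
```

Running `strip_image_digests deploy.yaml && grep -n 'image:' deploy.yaml` shows the three edited lines for review.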

[root@k8s-master cloud]# vim deploy.yaml

Enable ingress

[root@k8s-master cloud]# kubectl create -f deploy.yaml

[root@k8s-master cloud]# kubectl -n ingress-nginx get pod

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-qkm2p 0/1 Completed 0 45s

ingress-nginx-admission-patch-n27t5 0/1 Completed 0 45s

ingress-nginx-controller-7d7455dcf8-84grm 1/1 Running 0 45s

# check which node the pods run on

[root@k8s-master cloud]# kubectl -n ingress-nginx get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-qkm2p 0/1 Completed 0 71s 10.244.36.104 k8s-node1 <none> <none>

ingress-nginx-admission-patch-n27t5 0/1 Completed 0 71s 10.244.36.105 k8s-node1 <none> <none>

ingress-nginx-controller-7d7455dcf8-84grm 1/1 Running 0 71s 10.244.36.106 k8s-node1 <none> <none>

# EXTERNAL-IP shows <pending>, so the LoadBalancer type has to be changed

[root@k8s-master cloud]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.99.187.201 <pending> 80:30237/TCP,443:30538/TCP 106s

ingress-nginx-controller-admission ClusterIP 10.101.75.87 <none> 443/TCP 106s

[root@k8s-master cloud]# kubectl -n ingress-nginx edit svc ingress-nginx-controller

  type: NodePort    # change LoadBalancer to NodePort here, then save and exit

# check the Service again: the change is in place
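The interactive `kubectl edit` above can also be expressed as a single merge patch, which is easier to repeat on another cluster. This is a minimal sketch. `to_nodeport` is a hypothetical wrapper around the standard `kubectl patch` command, and its defaults are the namespace and Service name from this walkthrough.

```sh
# Switch a Service's type to NodePort with a JSON merge patch.
to_nodeport() {
  local ns=${1:-ingress-nginx} svc=${2:-ingress-nginx-controller}
  kubectl -n "$ns" patch svc "$svc" --type merge -p '{"spec":{"type":"NodePort"}}'
}
```

A LoadBalancer Service already has node ports allocated, so the switch keeps them. This matches the unchanged 80:30237 and 443:30538 mappings in the listing that follows.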

[root@k8s-master cloud]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.99.187.201 <none> 80:30237/TCP,443:30538/TCP 7m25s

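ingress-nginx-controller-admission ClusterIP 10.101.75.87 <none> 443/TCP 7m25s

# the type of this admission Service does not matter

Install ipvsadm

# install and configure ipvsadm here

# install it first

yum install ipvsadm -y

# verify the installation

ipvsadm --version

# load the IPVS kernel modules

# load the core module

modprobe ip_vs

# check the loaded modules

lsmod | grep ip_vs

# this mode requires the ipvs kernel modules (installed when the cluster was deployed); otherwise kube-proxy falls back to iptables

# enable ipvs (cm = configmap)

# open the configuration and set mode: "ipvs"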

[root@k8s-master01 ~]# kubectl edit cm kube-proxy -n kube-system
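The `mode` change can also be made without an editor. The sketch assumes the kubeadm-generated ConfigMap, where the setting appears as `mode: ""` inside `config.conf`. `set_ipvs_mode` and `enable_ipvs` are hypothetical names. The kube-proxy pods still need the restart shown next.

```sh
# Read kube-proxy ConfigMap YAML on stdin and change an empty mode to "ipvs".
# The pattern is anchored to the key, so keys such as detectLocalMode are left alone.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*mode:[[:space:]]*)""[[:space:]]*$/\1"ipvs"/'
}

# Fetch, rewrite and store the ConfigMap (requires cluster access).
enable_ipvs() {
  kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
}
```

After the restart, `ipvsadm -Ln` should list virtual servers for the cluster's Service IPs.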

# restart the kube-proxy pods

[root@k8s-master01 ~]# kubectl delete pod -l k8s-app=kube-proxy -n kube-system

LoadBalancer mode

Set up MetalLB to provide LoadBalancer support

Copy the metallb-0.14.8.zip package to the master node.

Unpack it:

[root@k8s-master ~]# unzip metallb-0.14.8.zip

[root@k8s-master manifests]# ls

metallb-frr-k8s-prometheus.yaml metallb-frr-prometheus.yaml metallb-native-prometheus.yaml

metallb-frr-k8s.yaml metallb-frr.yaml metallb-native.yaml

[root@k8s-master manifests]# kubectl apply -f metallb-native.yaml

Write the address-pool configuration

Create the IP address pool

cat > IPAddressPool.yaml<<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: planip-pool               # must match the pool name used in the L2Advertisement below
  namespace: metallb-system
spec:
  addresses:
  - 192.168.158.40-192.168.158.50 # custom IP range
EOF

Associate the IP address pool

cat > L2Advertisement.yaml<<EOF
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: planip-pool
  namespace: metallb-system
spec:
  ipAddressPools:
  - planip-pool                   # must match the IPAddressPool name above
EOF

Apply the manifests
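The L2Advertisement must reference the pool by exactly the same name. One option is to render both objects from a single variable and pipe them straight to kubectl. `metallb_pool` is a hypothetical helper, and its output is the same pair of manifests written above.

```sh
# Render an IPAddressPool and a matching L2Advertisement from one pool name,
# so the two names cannot drift apart.
metallb_pool() {
  local name=$1 range=$2
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ${name}
  namespace: metallb-system
spec:
  addresses:
  - ${range}
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ${name}
  namespace: metallb-system
spec:
  ipAddressPools:
  - ${name}
EOF
}
```

Usage: `metallb_pool planip-pool 192.168.158.40-192.168.158.50 | kubectl apply -f -`.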

[root@k8s-master manifests]# kubectl apply -f IPAddressPool.yaml

ipaddresspool.metallb.io/planip-pool created

[root@k8s-master manifests]# kubectl apply -f L2Advertisement.yaml

l2advertisement.metallb.io/planip-pool created

Check

[root@k8s-master manifests]# kubectl -n metallb-system get pod

NAME READY STATUS RESTARTS AGE

controller-77676c78d9-495lv 1/1 Running 0 2m34s

speaker-5pc9l 1/1 Running 0 2m34s

speaker-gtdxh 1/1 Running 0 2m34s

speaker-nw2dp 1/1 Running 0 2m34s

[root@k8s-master manifests]# ls

IPAddressPool.yaml metallb-frr-k8s.yaml metallb-native-prometheus.yaml

L2Advertisement.yaml metallb-frr-prometheus.yaml metallb-native.yaml

metallb-frr-k8s-prometheus.yaml metallb-frr.yaml

1. discuz domain-name access

Modify the discuz YAML file

[root@k8s-master ~]# cd dt

[root@k8s-master dt]#

[root@k8s-master dt]# ls

mysql nginx redis tomcat

[root@k8s-master dt]# cd nginx/

[root@k8s-master nginx]# ls

discuz discuz.conf discuz.yaml Dockerfile luo-nginx.tar www.conf

[root@k8s-master nginx]# cat discuz.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: discuz
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-discuz
  namespace: discuz
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-discuz
  template:
    metadata:
      labels:
        app: web-discuz
    spec:
      containers:
      - name: nginx
        image: luo-nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        livenessProbe:
          httpGet:
            path: /info.php
            port: 80
          initialDelaySeconds: 10
          periodSeconds: 5
          failureThreshold: 5
---
apiVersion: v1
kind: Service
metadata:
  name: svc-discuz
  namespace: discuz
  labels:
    app: web-discuz
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: web-discuz
  type: LoadBalancer

The main change is in the Service.

The Service provides load balancing and port forwarding:

apiVersion: v1
kind: Service
metadata:
  name: svc-discuz            # Service name
  namespace: discuz           # namespace
  labels:
    app: web-discuz           # Service label: identifies the associated Deployment
spec:
  ports:
  - port: 80                  # Service port: port used inside the cluster
    protocol: TCP             # protocol type: TCP
    targetPort: 80            # target port: the port the container listens on
  selector:
    app: web-discuz           # Pod selector: selects pods labeled app=web-discuz
  type: LoadBalancer          # Service type: load balancer (external IP assigned by MetalLB)

Ingress manifest

Add the domain name to the Ingress manifest:

[root@k8s-master cloud]# cat ingress-http.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress                   # a resource of kind Ingress
metadata:
  name: nginx-ingress           # name of this resource
  namespace: discuz
spec:
  ingressClassName: nginx       # handled by the nginx ingress controller
  rules:
  - host: nginx.discuz.com      # domain name used to reach this site
    http:
      paths:
      - backend:
          service:
            name: svc-discuz    # backend Service name
            port:
              number: 80        # Service port
        path: /                 # path to match
        pathType: Prefix        # match type: prefix match
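The tomcat section below needs a second Ingress of the same shape, so the manifest can be templated. `ingress_for` and its argument order are hypothetical. The output can be checked with `kubectl apply --dry-run=client -f -` before it is applied for real.

```sh
# Print a single-host, prefix-"/" Ingress for the nginx ingress class.
# Arguments: namespace, Ingress name, host, backend Service, Service port.
ingress_for() {
  local ns=$1 name=$2 host=$3 svc=$4 port=$5
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  ingressClassName: nginx
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${svc}
            port:
              number: ${port}
EOF
}
```

For example, `ingress_for discuz nginx-ingress nginx.discuz.com svc-discuz 80 | kubectl apply -f -` reproduces the manifest above, and `ingress_for web nginx-ingress nginx.tomcat.com svc-tomcat 80` covers tomcat.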

Apply the manifests

[root@k8s-master nginx]# kubectl apply -f discuz.yaml

namespace/discuz unchanged

deployment.apps/web-discuz created

service/svc-discuz created

[root@k8s-master cloud]# kubectl apply -f ingress-http.yaml

ingress.networking.k8s.io/nginx-ingress created

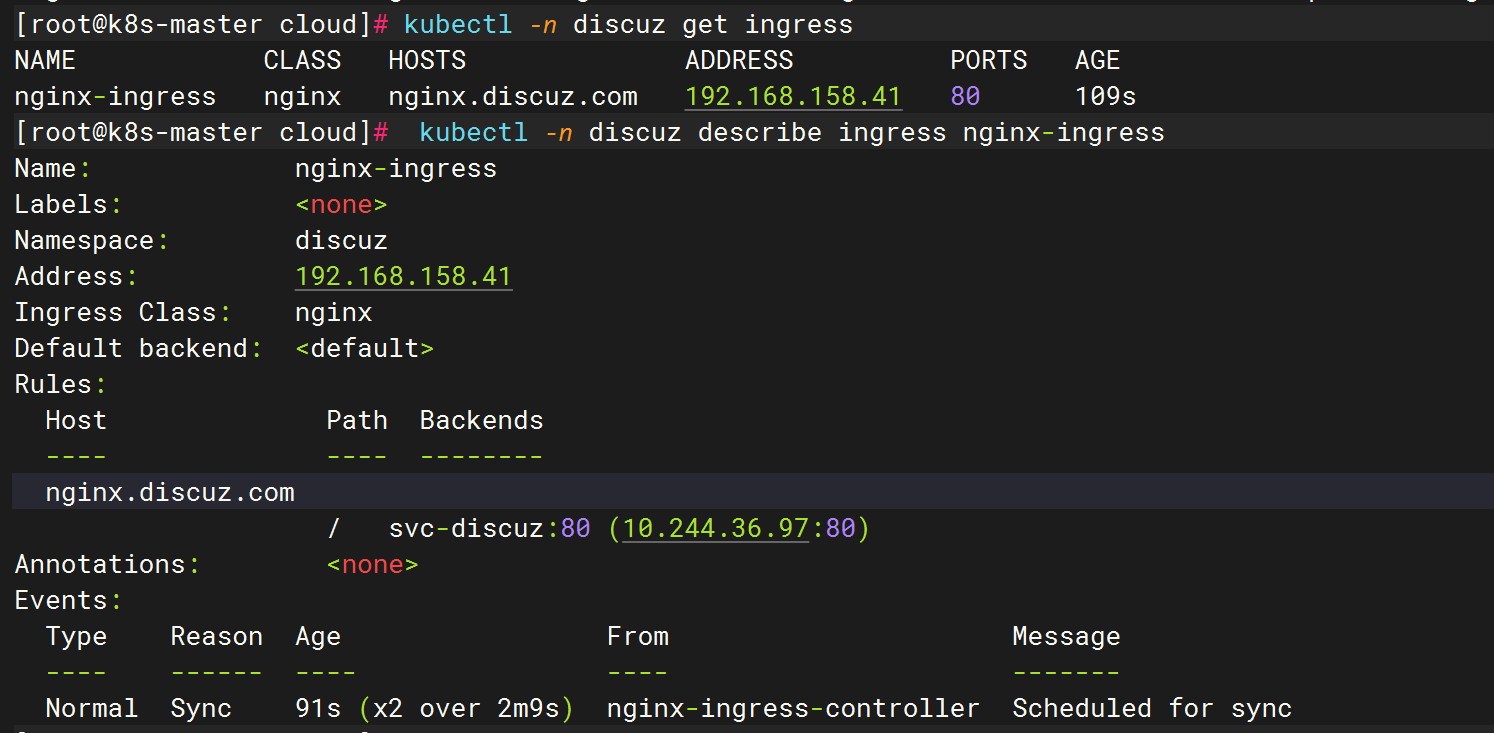

Check

[root@k8s-master cloud]# kubectl -n discuz get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dep-discuz-5575899bd-vxksb 1/1 Running 0 20m 10.244.169.191 k8s-node2 <none> <none>

[root@k8s-master cloud]# kubectl -n discuz get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-discuz LoadBalancer 10.100.47.183 192.168.158.40 80:30722/TCP 13h

[root@k8s-master cloud]# kubectl -n discuz get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress nginx nginx.discuz.com 192.168.158.41 80 109s

[root@k8s-master cloud]# kubectl -n discuz describe ingress nginx-ingress

Name: nginx-ingress

Labels: <none>

Namespace: discuz

Address: 192.168.158.41

Ingress Class: nginx

Default backend: <default>

Rules:
  Host              Path  Backends
  ----              ----  --------
  nginx.discuz.com
                    /     svc-discuz:80 (10.244.36.97:80)

Annotations: <none>

Events:
  Type    Reason  Age                 From                      Message
  ----    ------  ----                ----                      -------
  Normal  Sync    91s (x2 over 2m9s)  nginx-ingress-controller  Scheduled for sync

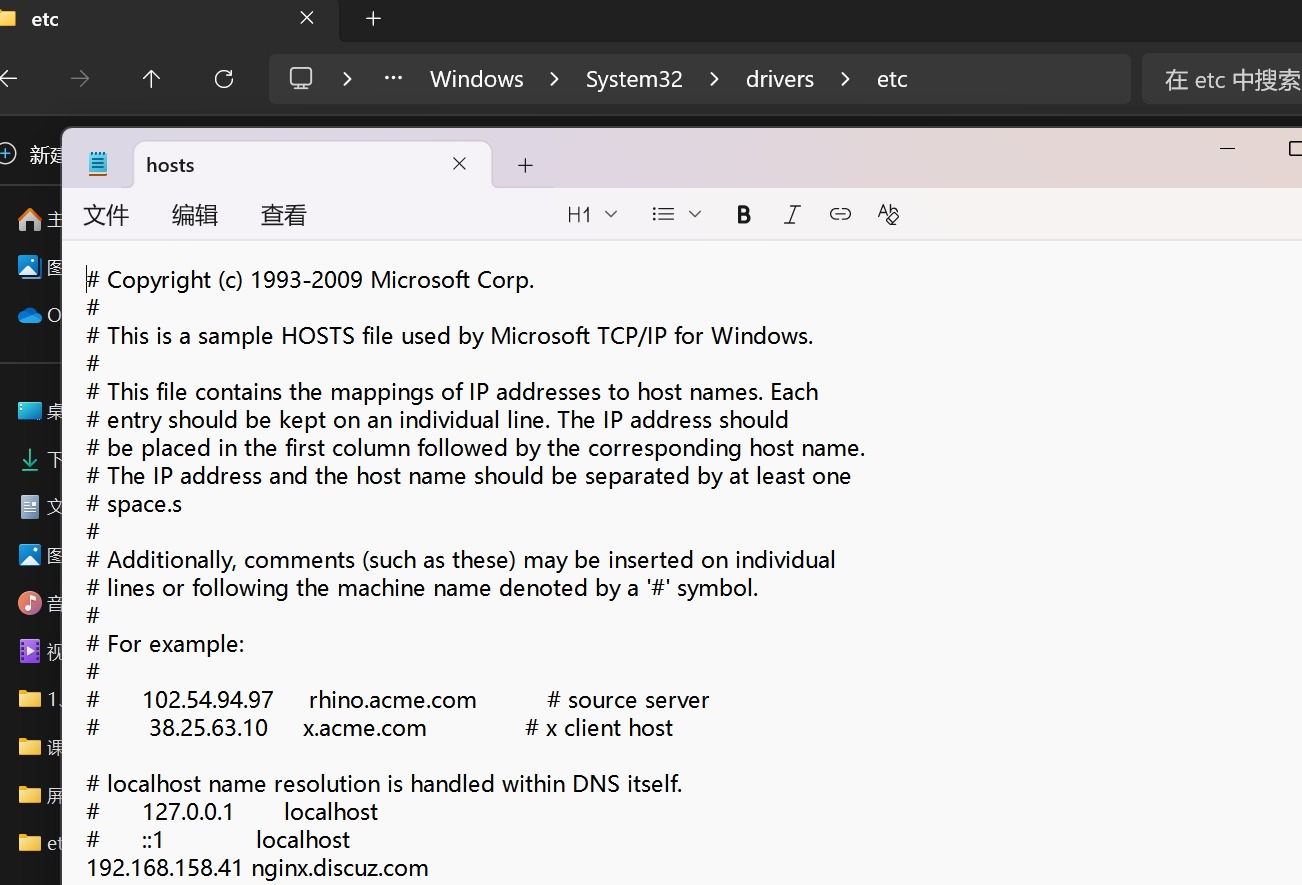

Add the domain to the hosts file on the test machine

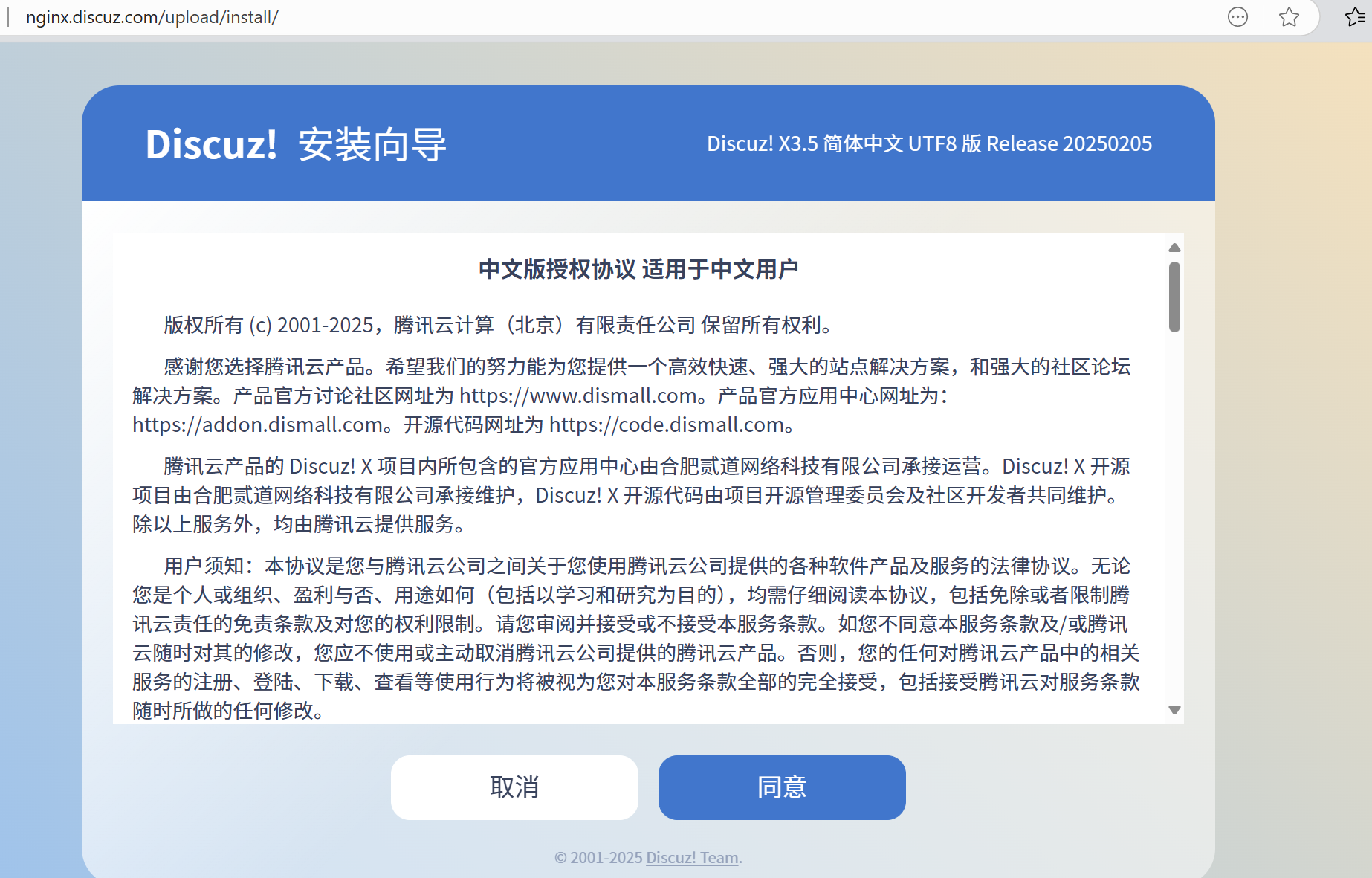

Access

The discuz site is reachable by domain name:

nginx.discuz.com/upload/install
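The hosts entry can be added without creating duplicates when the step is repeated. This sketch assumes that the domain should resolve to the Ingress ADDRESS 192.168.158.41 shown above. `add_hosts_entry` is a hypothetical helper, and the file defaults to /etc/hosts on a Linux test machine.

```sh
# Append "IP host" to a hosts file unless some line already lists the host.
add_hosts_entry() {
  local ip=$1 host=$2 file=${3:-/etc/hosts}
  # awk exits 0 if any hostname field (2nd column onward) equals $host
  if ! awk -v h="$host" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$host" >> "$file"
  fi
}
```

On a Windows test machine the file is C:\Windows\System32\drivers\etc\hosts instead. To exercise the same Ingress rule without editing hosts at all, run `curl -H 'Host: nginx.discuz.com' http://192.168.158.41/upload/install`.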

2. tomcat domain-name access

Modify the tomcat YAML file

[root@k8s-master tomcat]# cat tomcat.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: web
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-tomcat
  namespace: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-tomcat
  template:
    metadata:
      labels:
        app: web-tomcat
    spec:
      containers:
      - name: tomcat
        image: tomcat-web:v1          # use your own image
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080         # port exposed inside the container
---
apiVersion: v1
kind: Service
metadata:
  name: svc-tomcat
  namespace: web                      # namespace web
  labels:
    app: web-tomcat
spec:
  type: NodePort
  ports:
  - port: 80                          # port used inside the cluster
    protocol: TCP
    targetPort: 8080                  # container port
    nodePort: 30808                   # node port exposed externally
  selector:
    app: web-tomcat                   # matches the Deployment's pod labels

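The main change is in the Service.

Note: the Service finds its pods through its selector, so both must be in the same namespace. Also check the access port: the Service now listens on 80 and forwards to container port 8080.

Ingress manifest

Add the domain to the Ingress manifest.

Note: tomcat runs in the web namespace and discuz in the discuz namespace. An Ingress can only route to Services in its own namespace, so a separate Ingress rule is added for the web namespace.

Apply it once the rule is added: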

[root@k8s-master cloud]# cat ingress-http.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress                   # a resource of kind Ingress
metadata:
  name: nginx-ingress           # name of this resource
  namespace: discuz
spec:
  ingressClassName: nginx       # handled by the nginx ingress controller
  rules:
  - host: nginx.discuz.com      # domain name used to reach this site
    http:
      paths:
      - backend:
          service:
            name: svc-discuz    # backend Service name
            port:
              number: 80        # Service port
        path: /                 # path to match
        pathType: Prefix        # match type: prefix match
---
apiVersion: networking.k8s.io/v1
kind: Ingress                   # a resource of kind Ingress
metadata:
  name: nginx-ingress           # name of this resource
  namespace: web
spec:
  ingressClassName: nginx       # handled by the nginx ingress controller
  rules:
  - host: nginx.tomcat.com      # domain name used to reach this site
    http:
      paths:
      - backend:
          service:
            name: svc-tomcat    # backend Service name
            port:
              number: 80        # Service port
        path: /                 # path to match
        pathType: Prefix        # match type: prefix match

Apply the manifests

[root@k8s-master cloud]# kubectl apply -f ingress-http.yaml

ingress.networking.k8s.io/nginx-ingress unchanged

ingress.networking.k8s.io/nginx-ingress created

Check

discuz

tomcat

[root@k8s-master cloud]# kubectl -n web get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress nginx nginx.tomcat.com 192.168.158.41 80 37s

[root@k8s-master cloud]# kubectl -n web describe ingress

Name: nginx-ingress

Labels: <none>

Namespace: web

Address: 192.168.158.41

Ingress Class: nginx

Default backend: <default>

Rules:
  Host              Path  Backends
  ----              ----  --------
  nginx.tomcat.com
                    /     svc-tomcat:80 (10.244.36.102:8080)

Annotations: <none>

Events:
  Type    Reason  Age                        From                      Message
  ----    ------  ----                       ----                      -------
  Normal  Sync    <invalid> (x2 over 12s)    nginx-ingress-controller  Scheduled for sync

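Note that the two Ingresses belong to different namespaces.

Add the domain to the hosts file on the test machine.

Access

The tomcat shop is reachable.

III. Persistent storage (PVC and PV)

1. NFS shared directories

1. Use the k8s control-plane node (master) as the NFS server

[root@k8s-master ~]# yum install -y nfs-utils

2. Install NFS on every node and enable the NFS service

yum install nfs-utils -y

systemctl enable --now nfs

3. Create the shared directories that NFS needs on the host

Create them under the root directory, in /data: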

[root@k8s-master nginx]# cd /data

[root@k8s-master data]# ls

[root@k8s-master data]# mkdir discuz -pv

mkdir: created directory 'discuz'

[root@k8s-master data]# mkdir tomcat -pv

mkdir: created directory 'tomcat'

[root@k8s-master data]# mkdir mysql/master -p

[root@k8s-master data]# ls

discuz mysql tomcat

[root@k8s-master data]# tree mysql/

mysql/

└── master

2 directories, 0 files

[root@k8s-master data]# mkdir mysql/slave -p

[root@k8s-master data]# mkdir redis -pv

mkdir: created directory 'redis'

[root@k8s-master redis]# mkdir master -pv

mkdir: created directory 'master'

[root@k8s-master redis]# mkdir slave -pv

mkdir: created directory 'slave'

4. Configure the /data directories exported by the NFS server

Save and exit after editing, then enable the NFS service:

[root@k8s-master ~]# vim /etc/exports

[root@k8s-master ~]# systemctl enable --now nfs

5. Apply the NFS configuration

[root@k8s-master ~]# exportfs -arv

exporting 192.168.158.0/24:/data/redis/slave

exporting 192.168.158.0/24:/data/redis/master

exporting 192.168.158.0/24:/data/mysql/slave

exporting 192.168.158.0/24:/data/mysql/master

exporting 192.168.158.0/24:/data/tomcat

exporting 192.168.158.0/24:/data/discuz

exporting 192.168.158.0/24:/data
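The export list above could also be written by a small generator. The original export options appear only in a screenshot, so `rw,sync,no_root_squash` below is an assumption. `make_exports` is a hypothetical name. There must be no space between the client network and the parenthesised options. With a space, the options apply to every host instead of the listed network.

```sh
# Print one /etc/exports line per directory for the given client network.
# Options default to an assumed set; override with EXPORT_OPTS=... if needed.
make_exports() {
  local net=$1 opts=${EXPORT_OPTS:-rw,sync,no_root_squash} dir
  shift
  for dir in "$@"; do
    # no space between network and "(options)", see exports(5)
    printf '%s %s(%s)\n' "$dir" "$net" "$opts"
  done
}
```

Usage: `make_exports 192.168.158.0/24 /data /data/discuz /data/tomcat /data/mysql/master /data/mysql/slave /data/redis/master /data/redis/slave > /etc/exports && exportfs -arv`.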

Check that the exports are active

[root@k8s-master ~]# showmount -e

Export list for k8s-master:

/data/redis/slave 192.168.158.0/24

/data/redis/master 192.168.158.0/24

/data/mysql/slave 192.168.158.0/24

/data/mysql/master 192.168.158.0/24

/data/tomcat 192.168.158.0/24

/data/discuz 192.168.158.0/24

/data 192.168.158.0/24

6. Test NFS from the nodes

The test passes:

[root@k8s-node1 ~]# showmount -e 192.168.158.33

Export list for 192.168.158.33:

/data/redis/slave 192.168.158.0/24

/data/redis/master 192.168.158.0/24

/data/mysql/slave 192.168.158.0/24

/data/mysql/master 192.168.158.0/24

/data/tomcat 192.168.158.0/24

/data/discuz 192.168.158.0/24

/data 192.168.158.0/24

[root@k8s-node2 ~]# showmount -e 192.168.158.33

Export list for 192.168.158.33:

/data/redis/slave 192.168.158.0/24

/data/redis/master 192.168.158.0/24

/data/mysql/slave 192.168.158.0/24

/data/mysql/master 192.168.158.0/24

/data/tomcat 192.168.158.0/24

/data/discuz 192.168.158.0/24

/data 192.168.158.0/24

2. MySQL persistence

1. Create PVs for the shared directories

[root@k8s-master ~]# vim pv.yaml

[root@k8s-master ~]# cat pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-master
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  nfs:
    path: /data/mysql/master
    server: 192.168.158.33
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-slave
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  nfs:
    path: /data/mysql/slave
    server: 192.168.158.33
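Every PV in this section differs only in its name and NFS path, so one option is to generate them. The sketch assumes the NFS server 192.168.158.33 and the 10Gi size used above. `make_nfs_pv` is a hypothetical helper.

```sh
# Print a ReadWriteMany NFS PersistentVolume manifest.
# Arguments: PV name, export path, NFS server (default from this setup), size.
make_nfs_pv() {
  local name=$1 path=$2 server=${3:-192.168.158.33} size=${4:-10Gi}
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes: ["ReadWriteMany"]
  nfs:
    path: ${path}
    server: ${server}
EOF
}
```

For example, `for d in mysql/master mysql/slave redis/master redis/slave; do make_nfs_pv "$(echo "$d" | tr / -)" "/data/$d"; done > pv.yaml` yields the PV names used in this walkthrough.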

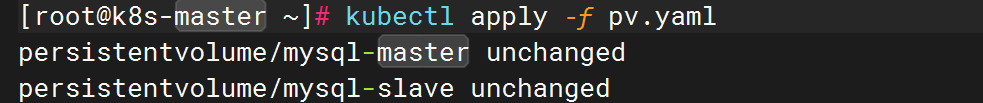

2. Apply the manifest to create the PVs

[root@k8s-master ~]# kubectl apply -f pv.yaml

persistentvolume/mysql-master unchanged

persistentvolume/mysql-slave unchanged

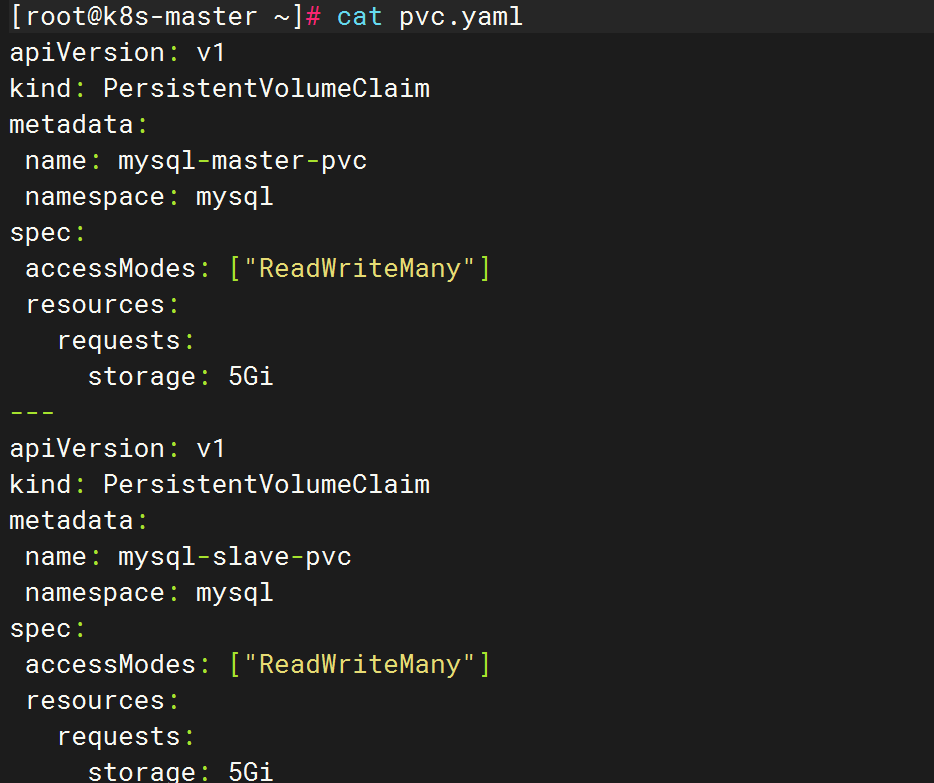

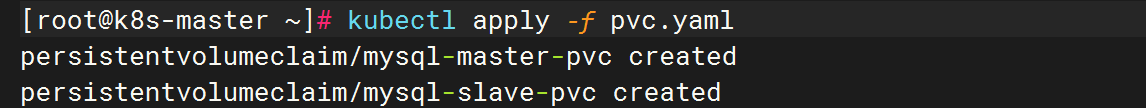

3. Create the PVCs that bind to the PVs

[root@k8s-master ~]# vim pvc.yaml

[root@k8s-master ~]# cat pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-master-pvc
  namespace: mysql
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-slave-pvc
  namespace: mysql
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi

[root@k8s-master ~]# kubectl apply -f pvc.yaml

persistentvolumeclaim/mysql-master-pvc created

persistentvolumeclaim/mysql-slave-pvc created
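All PVs here are 10Gi ReadWriteMany with no storage class. A 5Gi ReadWriteMany claim can therefore bind to any matching PV that is Available, and nothing in the manifests above guarantees that each claim lands on its intended PV. Setting `spec.volumeName` pins a claim to one PV. Setting `storageClassName: ""` keeps a default StorageClass, if the cluster has one, from provisioning a new volume instead. This also explains why bound claims report 10Gi rather than the 5Gi requested: a claim shows the capacity of the PV it bound to. `make_pvc` is a hypothetical helper.

```sh
# Print a PVC pinned to a specific pre-created PV.
# Arguments: namespace, PVC name, PV name, requested size.
make_pvc() {
  local ns=$1 name=$2 pv=$3 size=${4:-5Gi}
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  accessModes: ["ReadWriteMany"]
  storageClassName: ""      # no dynamic provisioning; use a pre-created PV
  volumeName: ${pv}         # bind only to this PV
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `make_pvc mysql mysql-master-pvc mysql-master`.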

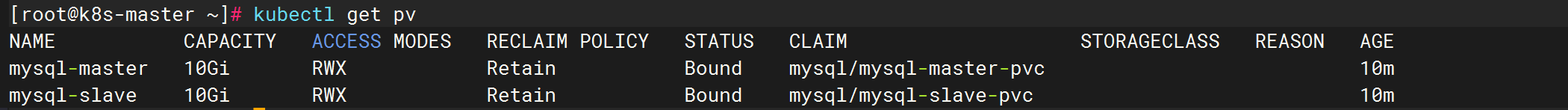

4. Check

The PVCs are bound to the PVs.

List the PVs and PVCs: STATUS Bound means the PV has been claimed by a PVC.

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

mysql-master 10Gi RWX Retain Bound mysql/mysql-master-pvc 10m

mysql-slave 10Gi RWX Retain Bound mysql/mysql-slave-pvc 10m

5. Update the MySQL manifests to use the PVCs

1. master

Add the following:

        volumeMounts:
        - name: mysql-master-data
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-master-data        # must match the volumeMounts name
        persistentVolumeClaim:
          claimName: mysql-master-pvc

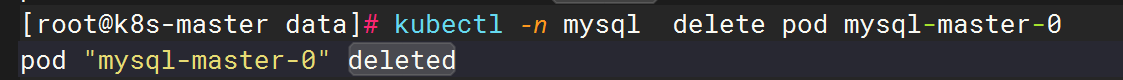

Delete the pod before applying the update:

[root@k8s-master mysql]# kubectl -n mysql delete pod mysql-master-0

pod "mysql-master-0" deleted

[root@k8s-master mysql]# kubectl apply -f master.yaml

statefulset.apps/mysql-master configured

service/mysql-master unchanged

2. slave

Add the following:

        volumeMounts:
        - name: mysql-slave-data
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-slave-data         # must match the volumeMounts name
        persistentVolumeClaim:
          claimName: mysql-slave-pvc

[root@k8s-master mysql]# kubectl -n mysql delete pod mysql-slave-0

pod "mysql-slave-0" deleted

[root@k8s-master mysql]# kubectl apply -f slave.yaml

statefulset.apps/mysql-slave unchanged

service/mysql-slave unchanged

6.验证

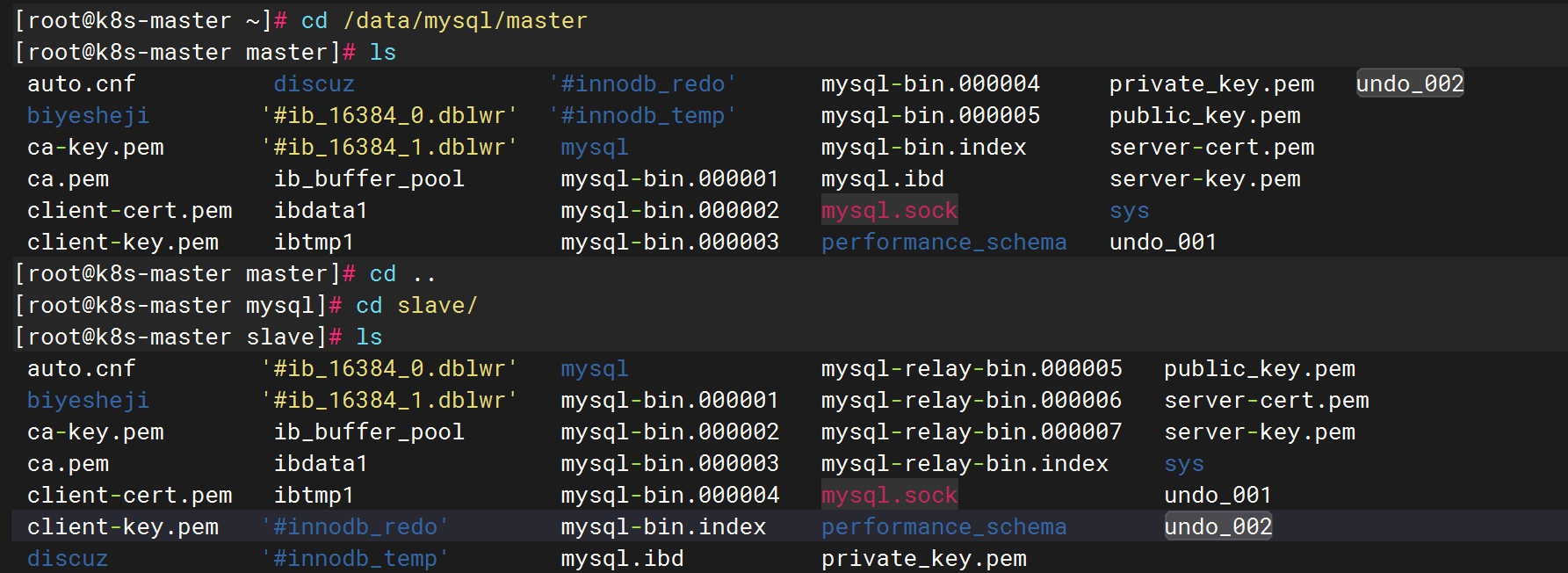

查看数据是否在共享目录中

存在则成功实现持久化

[root@k8s-master ~]# cd /data/mysql/master

[root@k8s-master master]# ls

auto.cnf discuz '#innodb_redo' mysql-bin.000004 private_key.pem undo_002
biyesheji '#ib_16384_0.dblwr' '#innodb_temp' mysql-bin.000005 public_key.pem
ca-key.pem '#ib_16384_1.dblwr' mysql mysql-bin.index server-cert.pem
ca.pem ib_buffer_pool mysql-bin.000001 mysql.ibd server-key.pem
client-cert.pem ibdata1 mysql-bin.000002 mysql.sock sys
client-key.pem ibtmp1 mysql-bin.000003 performance_schema undo_001

[root@k8s-master master]# cd ..

[root@k8s-master mysql]# cd slave/

[root@k8s-master slave]# ls

auto.cnf '#ib_16384_0.dblwr' mysql mysql-relay-bin.000005 public_key.pem
biyesheji '#ib_16384_1.dblwr' mysql-bin.000001 mysql-relay-bin.000006 server-cert.pem
ca-key.pem ib_buffer_pool mysql-bin.000002 mysql-relay-bin.000007 server-key.pem
ca.pem ibdata1 mysql-bin.000003 mysql-relay-bin.index sys
client-cert.pem ibtmp1 mysql-bin.000004 mysql.sock undo_001
client-key.pem '#innodb_redo' mysql-bin.index performance_schema undo_002
discuz '#innodb_temp' mysql.ibd private_key.pem
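The directory listings above can be turned into a repeatable check. `check_mysql_datadir` is hypothetical. The files it looks for (auto.cnf, ibdata1, mysql.ibd) come from the listings above. It also accepts optional database directory names such as discuz and biyesheji.

```sh
# Succeed only if the MySQL data directory holds the core InnoDB files
# and every database directory named in the remaining arguments.
check_mysql_datadir() {
  local dir=$1 missing=0 f db
  shift
  for f in auto.cnf ibdata1 mysql.ibd; do
    [ -e "$dir/$f" ] || { echo "missing file: $dir/$f" >&2; missing=1; }
  done
  for db in "$@"; do
    [ -d "$dir/$db" ] || { echo "missing database dir: $dir/$db" >&2; missing=1; }
  done
  return "$missing"
}
```

Usage: `check_mysql_datadir /data/mysql/master discuz biyesheji && check_mysql_datadir /data/mysql/slave discuz biyesheji`.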

3. Redis persistence

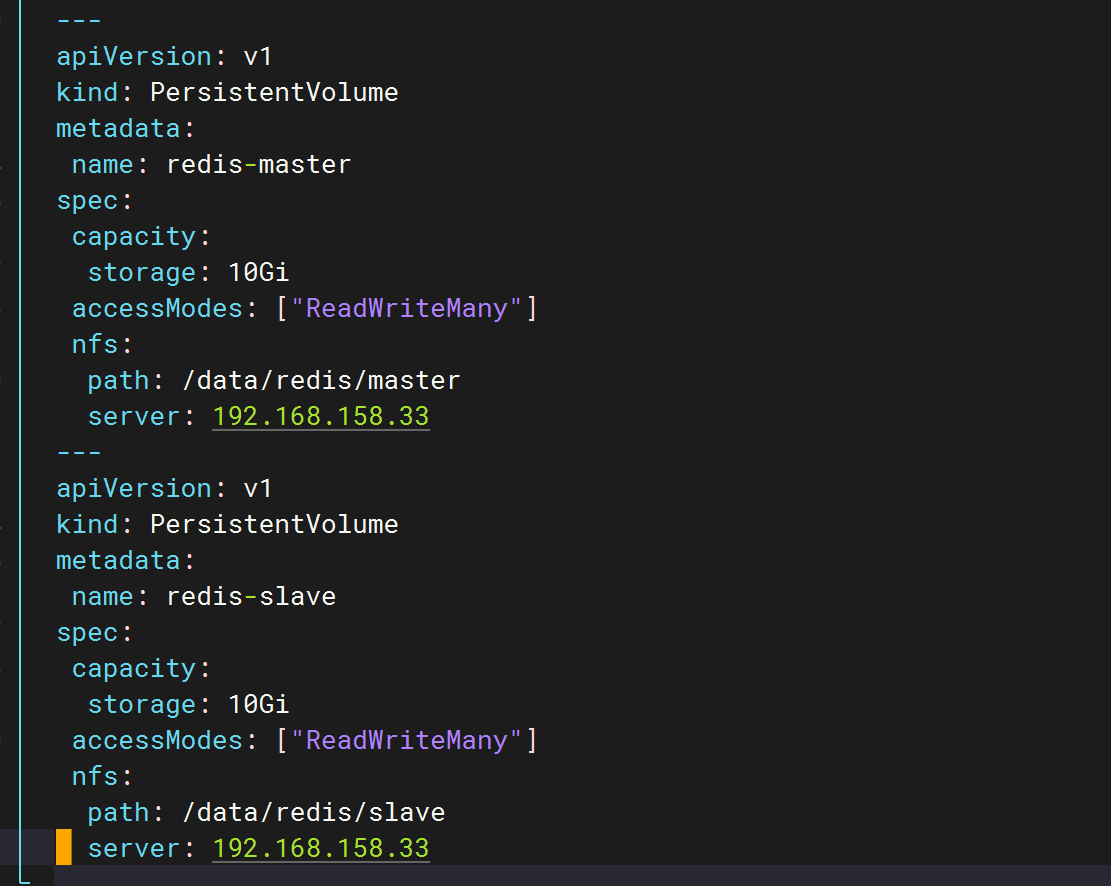

1. Create the PV volumes

Add the following to the earlier pv.yaml file:

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-master
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  nfs:
    path: /data/redis/master
    server: 192.168.158.33
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-slave
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  nfs:
    path: /data/redis/slave
    server: 192.168.158.33

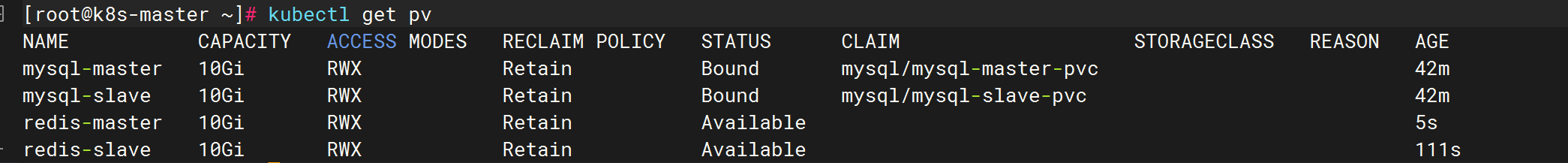

2. Apply the updated manifest

Bound: the PV has been claimed by a PVC

Available: the PV is free to be claimed

[root@k8s-master ~]# kubectl apply -f pv.yaml

persistentvolume/mysql-master unchanged

persistentvolume/mysql-slave unchanged

persistentvolume/redis-master created

persistentvolume/redis-slave unchanged

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

mysql-master 10Gi RWX Retain Bound mysql/mysql-master-pvc 42m

mysql-slave 10Gi RWX Retain Bound mysql/mysql-slave-pvc 42m

redis-master 10Gi RWX Retain Available 5s

redis-slave 10Gi RWX Retain Available 111s

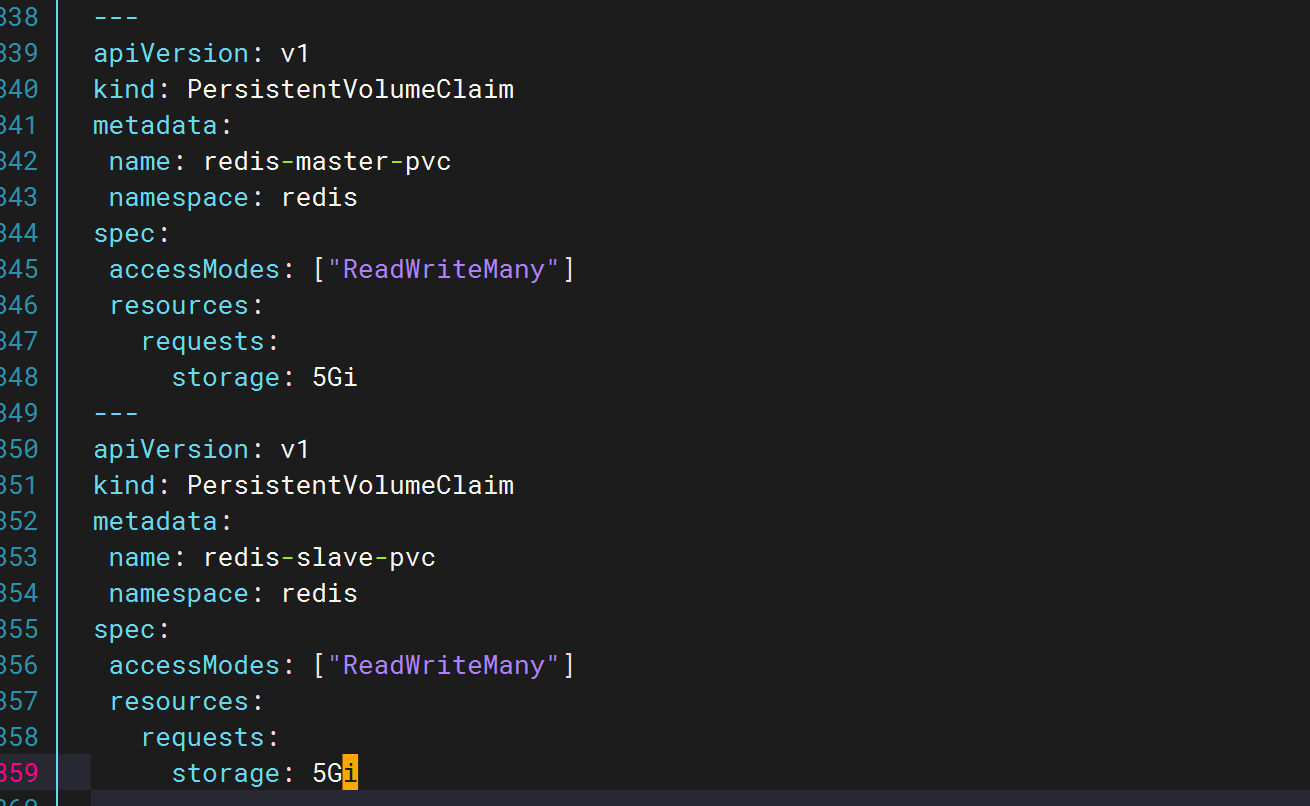

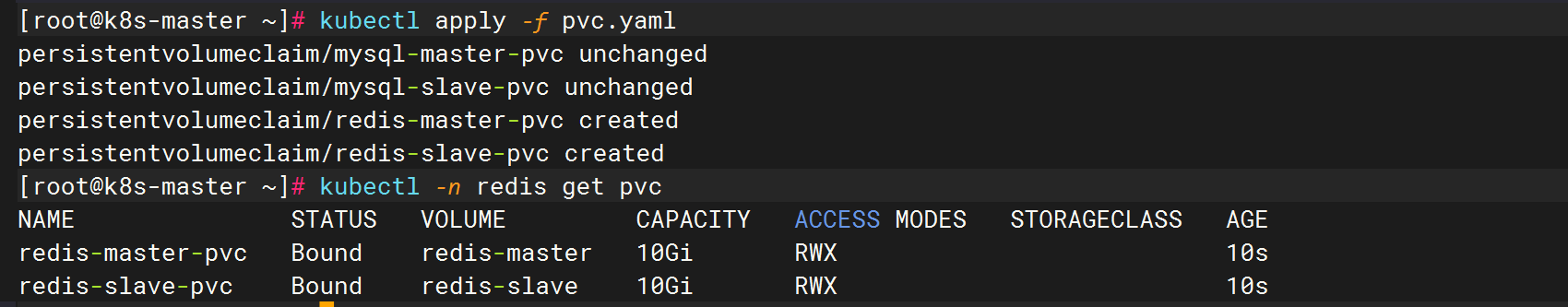

3. Create the PVCs for the PVs

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-master-pvc
  namespace: redis
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-slave-pvc
  namespace: redis
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi

Apply:

[root@k8s-master ~]# kubectl apply -f pvc.yaml

persistentvolumeclaim/mysql-master-pvc unchanged

persistentvolumeclaim/mysql-slave-pvc unchanged

persistentvolumeclaim/redis-master-pvc created

persistentvolumeclaim/redis-slave-pvc created

[root@k8s-master ~]# kubectl -n redis get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

redis-master-pvc Bound redis-master 10Gi RWX 10s

redis-slave-pvc Bound redis-slave 10Gi RWX 10s

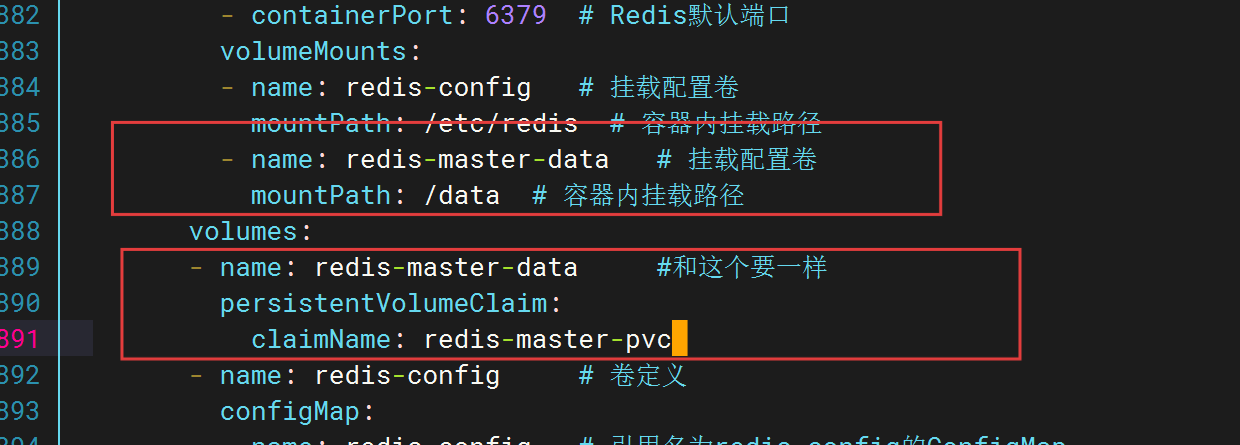

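4. Update the Redis manifests to use the PVCs

1. master

Add the following:

        # under the container's volumeMounts:
        - name: redis-master-data       # volume to mount
          mountPath: /data              # mount path inside the container
      # under the pod's volumes:
      - name: redis-master-data         # must match the volumeMounts name
        persistentVolumeClaim:
          claimName: redis-master-pvc

2. slave

        # under the container's volumeMounts:
        - name: redis-slave-data        # volume to mount
          mountPath: /data              # mount path inside the container
      # under the pod's volumes:
      - name: redis-slave-data          # must match the volumeMounts name
        persistentVolumeClaim:
          claimName: redis-slave-pvc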

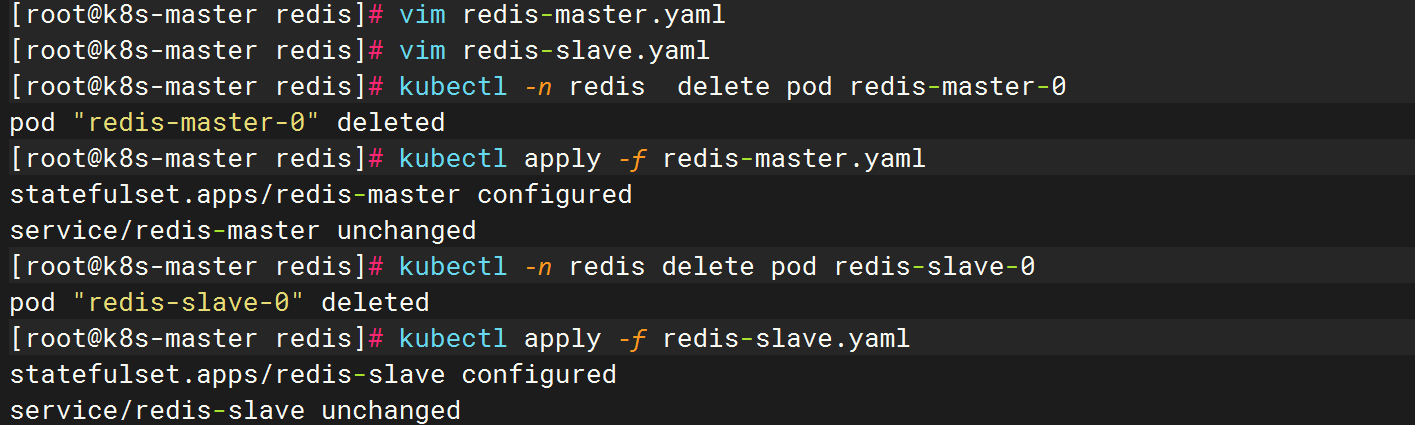

Apply

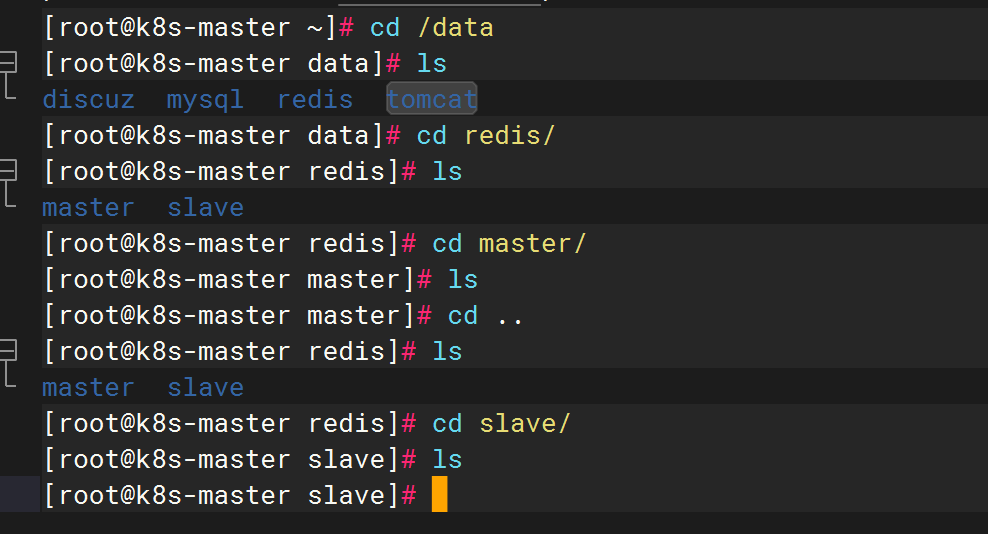

Verify

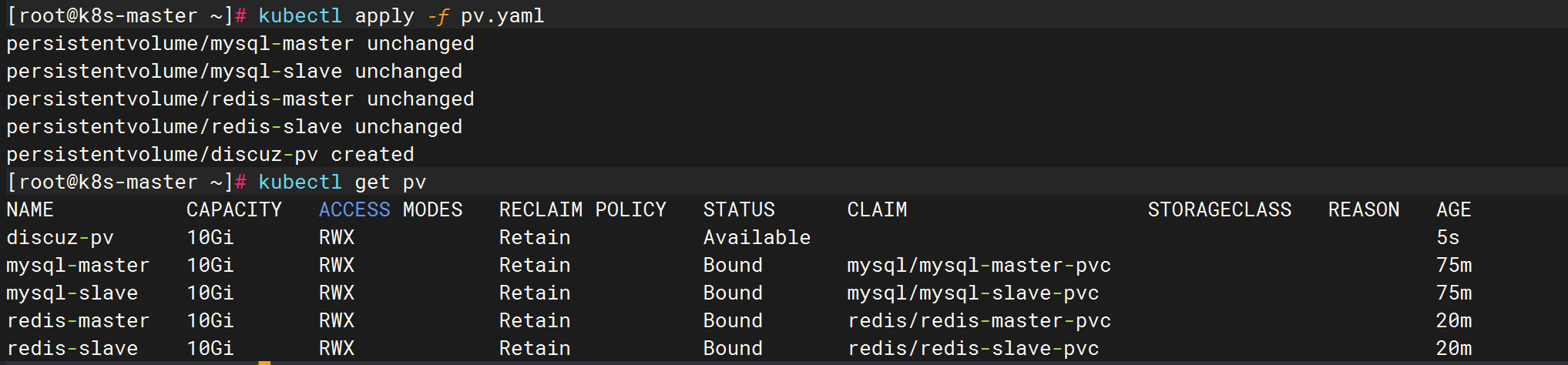
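Mounting the PVC at /data preserves data only if Redis actually writes its RDB/AOF files there. In the official redis image the working directory is /data. One way to confirm this from the master is to ask the running server with `redis-cli CONFIG GET`. The pod name `redis-master-0` is an assumption that depends on how the Redis manifests name their pods, and a password-protected Redis would also need `-a`. `redis_persist_info` is a hypothetical helper.

```sh
# Ask a Redis pod where it writes dump files and whether AOF is enabled.
# Namespace and pod name defaults are assumptions about this setup.
redis_persist_info() {
  local ns=${1:-redis} pod=${2:-redis-master-0}
  kubectl -n "$ns" exec "$pod" -- redis-cli CONFIG GET dir
  kubectl -n "$ns" exec "$pod" -- redis-cli CONFIG GET appendonly
}
```

If the first command reports `/data`, the dump files should then appear under /data/redis/master on the NFS server.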

4. discuz persistence

1. Create the PV

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: discuz-pv
spec:
  capacity:
    storage: 10Gi
  accessModes: ["ReadWriteMany"]
  nfs:
    path: /data/discuz
    server: 192.168.158.33

Apply

[root@k8s-master ~]# kubectl apply -f pv.yaml

persistentvolume/mysql-master unchanged

persistentvolume/mysql-slave unchanged

persistentvolume/redis-master unchanged

persistentvolume/redis-slave unchanged

persistentvolume/discuz-pv created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

discuz-pv 10Gi RWX Retain Available 5s

mysql-master 10Gi RWX Retain Bound mysql/mysql-master-pvc 75m

mysql-slave 10Gi RWX Retain Bound mysql/mysql-slave-pvc 75m

redis-master 10Gi RWX Retain Bound redis/redis-master-pvc 20m

redis-slave 10Gi RWX Retain Bound redis/redis-slave-pvc 20m

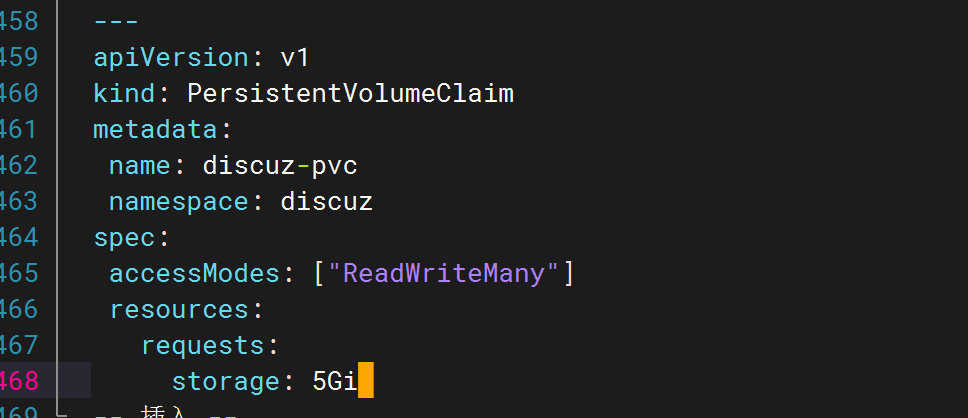

2. Create the PVC for the PV

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: discuz-pvc
  namespace: discuz
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi

Apply

[root@k8s-master ~]# vim pvc.yaml

[root@k8s-master ~]# kubectl apply -f pvc.yaml

persistentvolumeclaim/mysql-master-pvc unchanged

persistentvolumeclaim/mysql-slave-pvc unchanged

persistentvolumeclaim/redis-master-pvc unchanged

persistentvolumeclaim/redis-slave-pvc unchanged

persistentvolumeclaim/discuz-pvc created

[root@k8s-master ~]# kubectl -n discuz get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

discuz-pvc Bound discuz-pv 10Gi RWX 12s

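3. Modify the discuz configuration files

/root/dt/nginx/discuz/upload/config

vim config_global_default.php

vim config_ucenter_default.php

4. Add the corresponding files to the shared directory

Change the owner and group.

Update the manifest to use the PVC:

add volumeMounts to the container under spec.template.spec.containers;

add a volumes section to spec.template.spec (at the same level as containers).

Apply

Access

After clearing the Redis cache and refreshing once more, the site loads.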

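5. Verify persistence

With persistence in place, deleting the nginx pod and accessing the site again brings back the previous page.

Likewise, after the MySQL pod is deleted and comes back up, the site returns to the previous page.

The same holds for Redis.

With persistence configured, the site no longer falls back to the installation page on every visit.

Delete the nginx pod: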
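Deleting a pod and waiting for its replacement can be wrapped in a function so that the persistence test is repeatable. `restart_and_wait` is a hypothetical helper, and the label `app=web-discuz` comes from the discuz manifest earlier. By default `kubectl delete` waits until the old pod is gone, so the subsequent `kubectl wait` should only see the replacement.

```sh
# Delete the pods behind an app label, then wait until the replacement is Ready.
restart_and_wait() {
  local ns=$1 app=$2
  kubectl -n "$ns" delete pod -l "app=$app" || return 1
  kubectl -n "$ns" wait --for=condition=Ready pod -l "app=$app" --timeout=120s
}
```

After `restart_and_wait discuz web-discuz`, reloading nginx.discuz.com should show the existing forum rather than the installer.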

5、tomcat 持久化

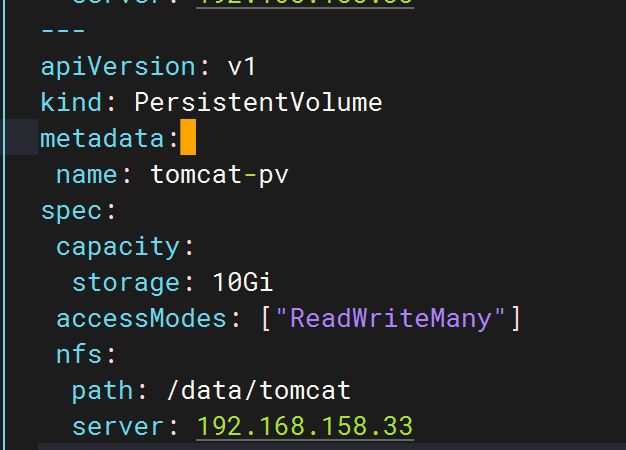

1.添加 pv 对应 共享目录

---

apiVersion: v1

kind: PersistentVolume

metadata:name: tomcat-pv

spec:capacity:storage: 10GiaccessModes: ["ReadWriteMany"]nfs:path: /data/tomcatserver: 192.168.158.33

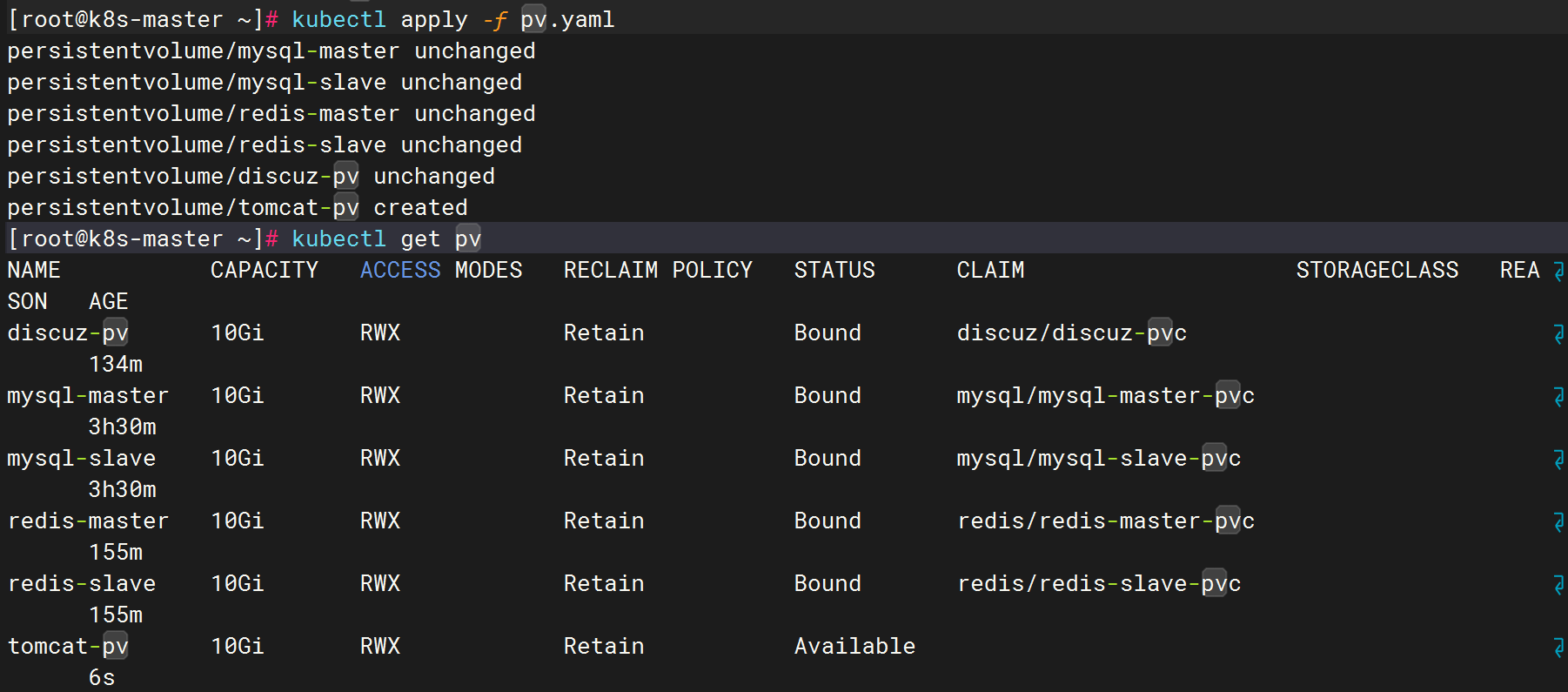

[root@k8s-master ~]# kubectl apply -f pv.yaml

persistentvolume/mysql-master unchanged

persistentvolume/mysql-slave unchanged

persistentvolume/redis-master unchanged

persistentvolume/redis-slave unchanged

persistentvolume/discuz-pv unchanged

persistentvolume/tomcat-pv created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

discuz-pv 10Gi RWX Retain Bound discuz/discuz-pvc 134m

mysql-master 10Gi RWX Retain Bound mysql/mysql-master-pvc 3h30m

mysql-slave 10Gi RWX Retain Bound mysql/mysql-slave-pvc 3h30m

redis-master 10Gi RWX Retain Bound redis/redis-master-pvc 155m

redis-slave 10Gi RWX Retain Bound redis/redis-slave-pvc 155m

tomcat-pv 10Gi RWX Retain Available 6s

2. Create the PVC for the PV

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: tomcat-pvc
  namespace: web
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi

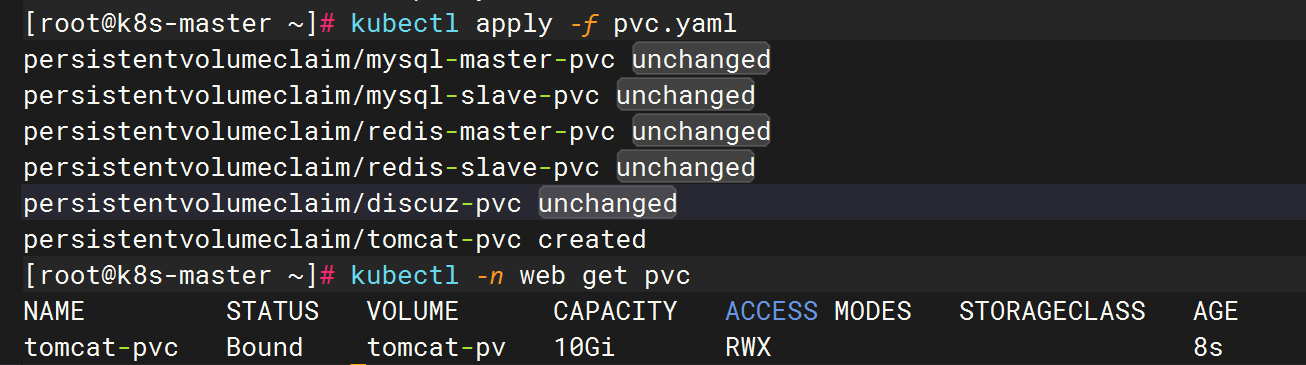

[root@k8s-master ~]# kubectl apply -f pvc.yaml

persistentvolumeclaim/mysql-master-pvc unchanged

persistentvolumeclaim/mysql-slave-pvc unchanged

persistentvolumeclaim/redis-master-pvc unchanged

persistentvolumeclaim/redis-slave-pvc unchanged

persistentvolumeclaim/discuz-pvc unchanged

persistentvolumeclaim/tomcat-pvc created

[root@k8s-master ~]# kubectl -n web get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

tomcat-pvc Bound tomcat-pv 10Gi RWX 8s

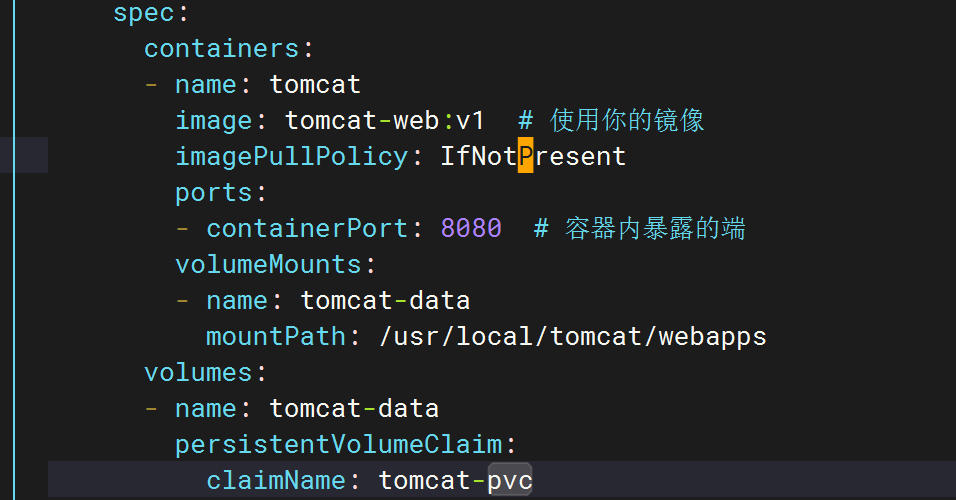

3. Update the manifest to use the PVC

        volumeMounts:
        - name: tomcat-data
          mountPath: /usr/local/tomcat/webapps
      volumes:
      - name: tomcat-data              # must match the volumeMounts name
        persistentVolumeClaim:
          claimName: tomcat-pvc

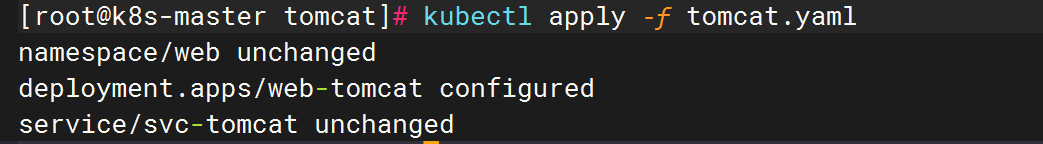

Apply the updated resources so that the PVC is mounted.
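Mounting a PVC over /usr/local/tomcat/webapps hides whatever the tomcat-web:v1 image put in that directory. The NFS export /data/tomcat therefore has to contain the application before the pod serves requests, and the file edits that follow rely on this. One way to seed it is to copy the directory out of a container that is created but never started. This sketch assumes that Docker is available on the NFS server and that the image is loaded there. `seed_webapps` is a hypothetical helper.

```sh
# Copy /usr/local/tomcat/webapps out of an image into the NFS export.
seed_webapps() {
  local image=${1:-tomcat-web:v1} dest=${2:-/data/tomcat} cid rc
  # create (but do not start) a container so its filesystem can be read
  cid=$(docker create "$image") || return 1
  # the trailing "/." copies the directory's contents, not the directory itself
  docker cp "$cid:/usr/local/tomcat/webapps/." "$dest/"
  rc=$?
  docker rm "$cid" >/dev/null   # remove the temporary container either way
  return "$rc"
}
```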

4. Edit the files

Files in the tomcat mount directory:

Edit index.jsp

Edit the jdbc.properties file

5. Access

Delete the pod and access the site again.

6. Access succeeded