Building Enterprise-Grade K8S from Scratch: A High-Availability Cluster Deployment Guide

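Contents

- 1. Installation Methods
- 1.1 kubeadm
- 1.2 Installation Notes
- 2. Deployment Process
- 2.1 Steps
- 2.2 Base Environment Preparation
- 3. Highly Available Reverse Proxy
- 3.1 Installing and Configuring keepalived
- 3.1.1 Node 1: Install and Configure keepalived
- 3.1.2 Node 2: Install and Configure keepalived
- 3.2 Installing and Configuring HAProxy
- 3.2.1 Node 1: Install and Configure HAProxy
- 3.2.2 Node 2: Install and Configure HAProxy
- 4. Installing Harbor
- 5. Installing kubeadm and Related Components
- 5.1 Choosing a Version
- 5.2 Installing Docker
- 5.3 Installing kubeadm, kubelet, and kubectl on All Nodes
- 5.4 Verifying the kubelet Service on the Master Nodes
- 6. Running kubeadm init on a Master Node
- 6.1 kubeadm Commands
- 6.2 kubeadm init Usage
- 6.3 Verifying the kubeadm Version
- 6.4 Preparing Images
- 6.5 Downloading Images on the Master Nodes
- 7. Single-Node Initialization (Test Environment)
- 7.1 Allowing Pods on the Master Node
- 7.2 Deploying the Network Add-on
- 8. Highly Available Master Initialization (Production Environment)
- 8.1 Initializing a Highly Available Master from the Command Line
- 8.2 Initializing a Highly Available Master from a Configuration File
- 9. Configuring the kubeconfig File and the Network Add-on
- 9.1 The kubeconfig File
- 9.2 Generating a Certificate Key on the Current Master for New Control-Plane Nodes
- 10. Adding Nodes to the Cluster
- 10.1 Master Nodes 2 and 3
- 10.2 Adding Worker Nodes
- 10.3 Verifying Certificate Status
- 10.6 Creating Containers and Testing the Internal Network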

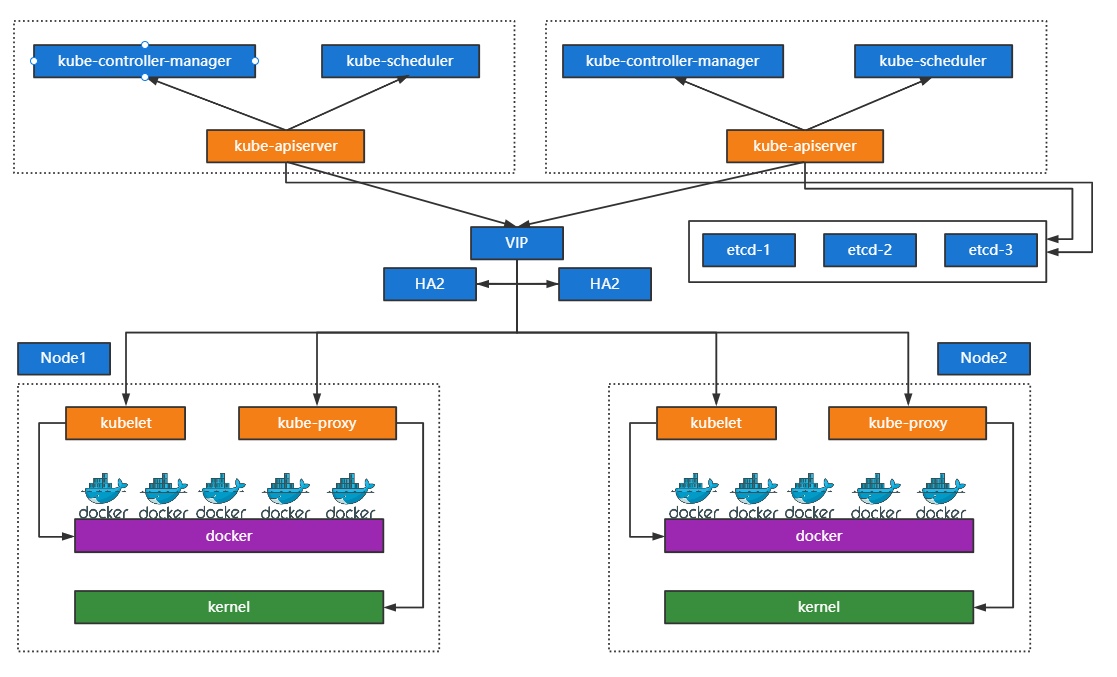

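This document describes a complete Kubernetes deployment for production environments, focusing on high availability, security compliance, and network tuning.

The cluster is installed in a standardized way with kubeadm. Keepalived and HAProxy provide a highly available API entry point that keeps the control plane reachable, a private Harbor registry manages container images centrally, and the setup follows production conventions such as disabling swap and tuning kernel parameters.

The guide covers the whole process: base environment preparation (node planning, system tuning), core component deployment (Docker runtime, kubeadm initialization), and network plugin integration (Flannel CNI). It also covers adding further master nodes, joining worker nodes, and troubleshooting common failures. It applies to mainstream distributions such as CentOS and Ubuntu.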

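1. Installation Methods

Kubernetes can be installed with deployment tools (such as Ansible or SaltStack), from binaries, with kubeadm, or from apt-get/yum packages.

1.1 kubeadm

kubeadm is the deployment tool provided by the Kubernetes project. Docker and the other required components are installed on the master and worker nodes first. The cluster is then initialized, and both the control-plane services and the services on the worker nodes run as pods.

1.2 Installation Notes

- Disable swap
- Disable SELinux
- Disable the iptables firewall service
- Tune kernel parameters and resource limits

cat >> /etc/sysctl.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl -p

With net.bridge.bridge-nf-call-iptables = 1, packets forwarded by a layer-2 bridge are also matched against the FORWARD rules of the host's iptables.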
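The checklist above can be verified before going any further. The following is a minimal sketch, not part of kubeadm: the function names `swap_active`, `sysctl_is_one`, and `preflight_report` are hypothetical, and the default paths `/proc/swaps` and `/etc/sysctl.conf` are assumptions about a typical Linux host. It only reads files and prints a report.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the prerequisites listed above.

# swap_active [FILE]: succeeds if FILE (default /proc/swaps) lists a swap area.
swap_active() {
    local file="${1:-/proc/swaps}"
    # The first line of /proc/swaps is a header; any further line is an active swap area.
    [ "$(tail -n +2 "$file" 2>/dev/null | grep -c .)" -gt 0 ]
}

# sysctl_is_one KEY [FILE]: succeeds if FILE contains a "KEY = 1" line.
sysctl_is_one() {
    local key="$1" file="${2:-/etc/sysctl.conf}"
    awk -F= -v k="$key" '
        { name = $1; value = $2
          gsub(/[ \t]/, "", name); gsub(/[ \t]/, "", value)
          if (name == k && value == "1") found = 1 }
        END { exit !found }' "$file" 2>/dev/null
}

# preflight_report [SWAPS_FILE] [SYSCTL_FILE]: print one status line per check.
preflight_report() {
    local swaps="${1:-/proc/swaps}" conf="${2:-/etc/sysctl.conf}" key
    if swap_active "$swaps"; then
        echo "swap: ENABLED (run swapoff -a)"
    else
        echo "swap: off"
    fi
    for key in net.ipv4.ip_forward \
               net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables; do
        if sysctl_is_one "$key" "$conf"; then
            echo "$key: ok"
        else
            echo "$key: missing"
        fi
    done
}
```

Calling `preflight_report` with no arguments reads the live files. Note that it inspects the configuration file rather than the running kernel, so `sysctl -p` still has to be run for the values to take effect.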

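2. Deployment Process

CRI (Container Runtime Interface) runtime options: https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/

CNI (Container Network Interface) options: https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/

2.1 Steps

1. Prepare the base environment.

2. Deploy Harbor and the highly available HAProxy reverse proxy, so that the API entry point of the control-plane nodes is highly available.

3. Install the chosen versions of kubeadm, kubelet, kubectl, and Docker on all master nodes.

4. Install the chosen versions of kubeadm, kubelet, and Docker on all worker nodes. kubectl is optional there; install it only if cluster and pod management commands will be run from the worker nodes.

5. Run kubeadm init on a master node.

6. Verify the state of the master node.

7. On each worker node, use kubeadm to join the cluster (this requires a token generated on the master for authentication).

8. Verify the state of the worker nodes.

9. Create pods and test network communication.

10. Deploy the Dashboard web service.

11. Walk through a cluster upgrade.

2.2 Base Environment Preparation

Server environment:

Install a minimal base system. On CentOS (7.5 or later recommended), disable SELinux and swap, update the package sources, synchronize the time, install the common tools, then reboot and verify the base configuration. On Ubuntu (a stable release, 18.04 or later, recommended), apply the same preparation.

- k8s-master: 3 servers
- haproxy: 2 servers
- harbor: 1 server
- node: 3 servers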

3. Highly Available Reverse Proxy

The highly available reverse proxy is built on keepalived and HAProxy.

3.1 Installing and Configuring keepalived

Install and configure keepalived, then test VIP failover.

3.1.1 Node 1: Install and Configure keepalived

root@k8s-ha1:# apt install keepalived -y
root@k8s-ha1:# vim /etc/keepalived/keepalived.conf
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.200 dev eth0 label eth0:1
    }
}
systemctl restart keepalived
systemctl enable keepalived
systemctl status keepalived

3.1.2 Node 2: Install and Configure keepalived

root@k8s-ha2:# apt install keepalived -y
root@k8s-ha2:# vim /etc/keepalived/keepalived.conf
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.200 dev eth0 label eth0:1
    }
}
systemctl restart keepalived
systemctl enable keepalived
systemctl status keepalived
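The two keepalived configurations differ only in `state` and `priority`, so they can be generated from one template. The following is a minimal sketch under that assumption; `render_keepalived` is a hypothetical helper name, and the VIP 10.0.0.200 in the usage line is the address that HAProxy binds to in section 3.2.

```shell
#!/usr/bin/env bash
# render_keepalived STATE PRIORITY VIP [IFACE] [ROUTER_ID]
# Prints a vrrp_instance block to stdout; nothing is written to /etc.
render_keepalived() {
    local state="$1" priority="$2" vip="$3" iface="${4:-eth0}" rid="${5:-51}"
    cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    garp_master_delay 10
    smtp_alert
    virtual_router_id ${rid}
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip} dev ${iface} label ${iface}:1
    }
}
EOF
}

# Example: print the configuration for the backup node.
render_keepalived BACKUP 80 10.0.0.200
```

On the primary node the call would be `render_keepalived MASTER 100 10.0.0.200`. Both nodes must use the same `virtual_router_id` and authentication values, and the node with the higher priority holds the VIP.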

3.2 Installing and Configuring HAProxy

3.2.1 Node 1: Install and Configure HAProxy

# Adjust kernel parameters for HAProxy (equivalent one-liner: echo -e "net.ipv4.ip_nonlocal_bind = 1\nnet.ipv4.ip_forward = 1" >> /etc/sysctl.conf)
root@k8s-ha1:# vim /etc/sysctl.conf
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
root@k8s-ha1:# sysctl -p

# Install HAProxy on node 1
root@k8s-ha1:# apt install haproxy -y
root@k8s-ha1:# vim /etc/haproxy/haproxy.cfg
listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:123456

listen k8s-apiserver-6443
    bind 10.0.0.200:6443
    mode tcp
    balance roundrobin
    server 10.0.0.201 10.0.0.201:6443 check inter 3s fall 3 rise 5
    server 10.0.0.202 10.0.0.202:6443 check inter 3s fall 3 rise 5
    server 10.0.0.203 10.0.0.203:6443 check inter 3s fall 3 rise 5
systemctl restart haproxy
systemctl enable haproxy
systemctl status haproxy

3.2.2 Node 2: Install and Configure HAProxy

# Adjust kernel parameters for HAProxy (equivalent one-liner: echo -e "net.ipv4.ip_nonlocal_bind = 1\nnet.ipv4.ip_forward = 1" >> /etc/sysctl.conf)
root@k8s-ha2:# vim /etc/sysctl.conf
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
root@k8s-ha2:# sysctl -p

# Install HAProxy on node 2
root@k8s-ha2:# apt install haproxy -y
root@k8s-ha2:# vim /etc/haproxy/haproxy.cfg
listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:123456

listen k8s-apiserver-6443
    bind 10.0.0.200:6443
    mode tcp
    balance roundrobin
    server 10.0.0.201 10.0.0.201:6443 check inter 3s fall 3 rise 5
    server 10.0.0.202 10.0.0.202:6443 check inter 3s fall 3 rise 5
    server 10.0.0.203 10.0.0.203:6443 check inter 3s fall 3 rise 5
systemctl restart haproxy
systemctl enable haproxy
systemctl status haproxy

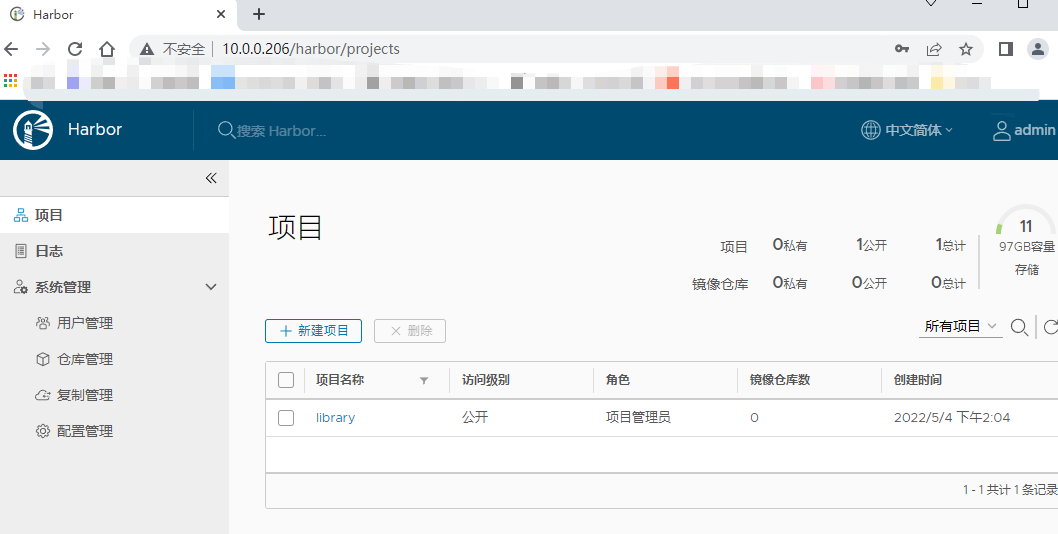

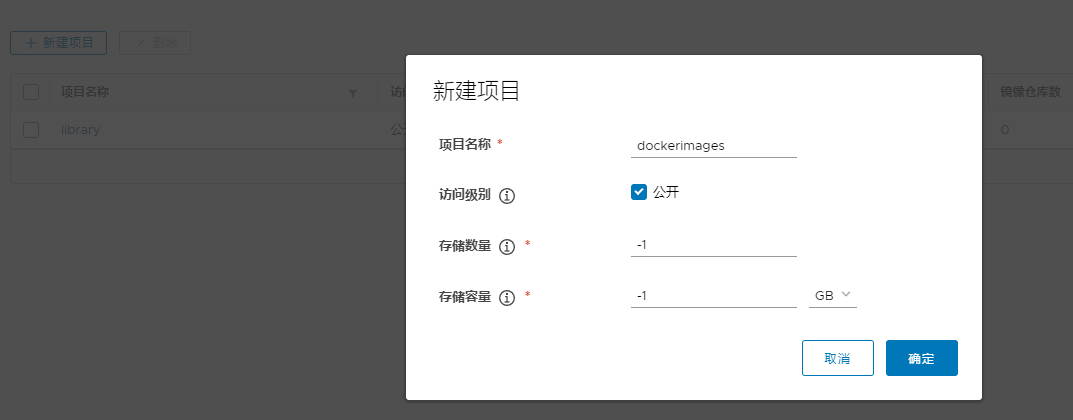
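The `listen k8s-apiserver-6443` section repeats one `server` line per master, so it may be convenient to generate it from the list of master IPs. This is only a sketch: `render_apiserver_backend` is a hypothetical helper, and the health-check parameters are copied from the configuration in this section.

```shell
#!/usr/bin/env bash
# render_apiserver_backend VIP PORT MASTER_IP...
# Prints an HAProxy "listen" section that forwards TCP traffic to each master.
render_apiserver_backend() {
    local vip="$1" port="$2" ip
    shift 2
    echo "listen k8s-apiserver-${port}"
    echo "    bind ${vip}:${port}"
    echo "    mode tcp"
    echo "    balance roundrobin"
    for ip in "$@"; do
        echo "    server ${ip} ${ip}:${port} check inter 3s fall 3 rise 5"
    done
}

# Example: the three masters used in this guide.
render_apiserver_backend 10.0.0.200 6443 10.0.0.201 10.0.0.202 10.0.0.203
```

After the generated section has been written into /etc/haproxy/haproxy.cfg, `haproxy -c -f /etc/haproxy/haproxy.cfg` can be used to check the file before restarting the service.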

4. Installing Harbor

Harbor web UI: http://10.0.0.206/harbor/sign-in

Installation script install_harbor_ubuntu.sh:

#!/bin/bash
COLOR="echo -e \E[1;31m"
END="\E[m"
DOCKER_VERSION="5:19.03.5~3-0~ubuntu-bionic"
HARBOR_VERSION=1.7.6
IPADDR=`hostname -I|awk '{print$1}'`
HARBOR_ADMIN_PASSWORD=123456

install_docker() {
    ${COLOR}"--------Installing docker--------"${END}
    sleep 1
    apt update
    apt -y install apt-transport-https ca-certificates curl software-properties-common
    curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    add-apt-repository "deb [arch=amd64] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    # add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    apt update
    ${COLOR}"Available Docker versions:"${END}
    apt-cache madison docker-ce
    ${COLOR}"Installing docker-"${DOCKER_VERSION}" in 5 seconds..."${END}
    ${COLOR}"To install a different Docker version, press Ctrl+C, change the version, and run the script again"${END}
    sleep 5
    apt -y install docker-ce=${DOCKER_VERSION} docker-ce-cli=${DOCKER_VERSION}
    mkdir -p /etc/docker
    # Write the registry mirror configuration (the redirection ">" is required)
    cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"]
}
EOF
    systemctl daemon-reload
    systemctl enable --now docker
    docker version && ${COLOR}"--------docker installed successfully--------"${END} || ${COLOR}"--------docker installation failed--------"${END}
}

install_docker_compose() {
    ${COLOR}"--------Installing docker_compose--------"${END}
    sleep 1
    curl -L https://github.com/docker/compose/releases/download/1.25.3/docker-compose-`uname -s`-`uname -m` -o /usr/bin/docker-compose
    chmod +x /usr/bin/docker-compose
    docker-compose --version && ${COLOR}"--------docker compose installed successfully--------"${END} || ${COLOR}"--------docker compose installation failed--------"${END}
}

install_harbor() {
    ${COLOR}"--------Installing harbor--------"${END}
    sleep 1
    if [ -e harbor-offline-installer-v${HARBOR_VERSION}.tgz ];then
        ${COLOR}"--------harbor archive already exists--------"${END}
    else
        wget https://storage.googleapis.com/harbor-releases/release-1.7.0/harbor-offline-installer-v${HARBOR_VERSION}.tgz
    fi
    tar xf harbor-offline-installer-v${HARBOR_VERSION}.tgz -C /etc
    # Set the hostname and admin password in harbor.cfg, keeping a .bak copy
    sed -i.bak -e 's/^hostname =.*/hostname = '''$IPADDR'''/' -e 's/^harbor_admin_password =.*/harbor_admin_password = '''$HARBOR_ADMIN_PASSWORD'''/' /etc/harbor/harbor.cfg
    apt -y install python3
    apt -y install python
    /etc/harbor/install.sh && ${COLOR}"--------harbor installed successfully--------"${END} || ${COLOR}"--------harbor installation failed--------"${END}
}

harbor_service() {
    cat > /lib/systemd/system/harbor.service <<EOF
[Unit]
Description=Harbor
After=docker.service systemd-networkd.service systemd-resolved.service
Requires=docker.service
Documentation=http://github.com/vmware/harbor

[Service]
Type=simple
Restart=on-failure
RestartSec=5
ExecStart=/usr/bin/docker-compose -f /etc/harbor/docker-compose.yml up
ExecStop=/usr/bin/docker-compose -f /etc/harbor/docker-compose.yml down

[Install]
WantedBy=multi-user.target
EOF
    systemctl daemon-reload
    systemctl enable harbor &> /dev/null && ${COLOR}"--------Harbor is enabled to start at boot--------"${END}
}

# Skip the steps whose components are already installed
dpkg -s docker-ce &> /dev/null && ${COLOR}"Docker is already installed, skipping"${END} || install_docker
docker-compose --version &> /dev/null && ${COLOR}"Docker compose is already installed, skipping"${END} || install_docker_compose
install_harbor
harbor_service

5. Installing kubeadm and Related Components

Install kubeadm, kubelet, kubectl, Docker, and related components on the master and worker nodes. The load balancers do not need them.

5.1 Choosing a Version

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md#v11711

Install a Docker version that has been validated for the chosen Kubernetes release.

5.2 Installing Docker

#!/bin/bash
COLOR="echo -e \E[1;31m"
END="\E[m"
DOCKER_VERSION="5:19.03.5~3-0~ubuntu-bionic"

install_docker() {
    ${COLOR}"--------Installing docker--------"${END}
    sleep 1
    apt-get remove docker docker-engine docker.io
    apt-get update
    apt-get -y install apt-transport-https ca-certificates curl software-properties-common
    curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    add-apt-repository "deb [arch=amd64] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    # add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    apt-get update
    ${COLOR}"Available Docker versions:"${END}
    apt-cache madison docker-ce
    ${COLOR}"Installing docker-"${DOCKER_VERSION}" in 5 seconds..."${END}
    ${COLOR}"To install a different Docker version, press Ctrl+C, change the version, and run the script again"${END}
    sleep 5
    apt-get -y install docker-ce=${DOCKER_VERSION} docker-ce-cli=${DOCKER_VERSION}
    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"]
}
EOF
    systemctl daemon-reload
    systemctl enable --now docker
    docker version && ${COLOR}"--------Docker installed successfully--------"${END} || ${COLOR}"--------Docker installation failed--------"${END}
}

# Skip the installation if Docker is already present
dpkg -s docker-ce &> /dev/null && ${COLOR}"Docker is already installed, skipping"${END} || install_docker

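5.3 Installing kubeadm, kubelet, and kubectl on All Nodes

Configure the Alibaba Cloud repository on all nodes and install the components. kubectl is optional on worker nodes.

Configure the Alibaba Cloud Kubernetes mirror as the package source (used to install kubelet, kubeadm, and kubectl):

https://developer.aliyun.com/mirror/kubernetes

Debian / Ubuntu

Alibaba Cloud mirror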

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl

Tsinghua University mirror

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl

Or install a specific version:

apt-cache madison kubeadm

apt-get install -y kubelet=1.20.5-00 kubeadm=1.20.5-00 kubectl=1.20.5-00
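When a version is pinned, all three packages should carry the same version string. The sketch below builds the package arguments from a single version number. `k8s_pkg_args` is a hypothetical helper, and the `-00` revision suffix is an assumption about how the kubernetes-xenial apt repository names its packages; confirm the exact string with `apt-cache madison kubeadm` first.

```shell
#!/usr/bin/env bash
# k8s_pkg_args VERSION
# Prints "kubelet=VERSION-00 kubeadm=VERSION-00 kubectl=VERSION-00".
k8s_pkg_args() {
    local version="$1" pkg args=()
    for pkg in kubelet kubeadm kubectl; do
        # "-00" is the assumed Debian revision suffix of the apt packages
        args+=("${pkg}=${version}-00")
    done
    echo "${args[*]}"
}

# Example: print the install command instead of running it.
echo "apt-get install -y $(k8s_pkg_args 1.23.6)"
```

After installation, `apt-mark hold kubelet kubeadm kubectl` keeps a routine `apt-get upgrade` from moving the cluster components to a newer release unintentionally.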

CentOS / RHEL / Fedora

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

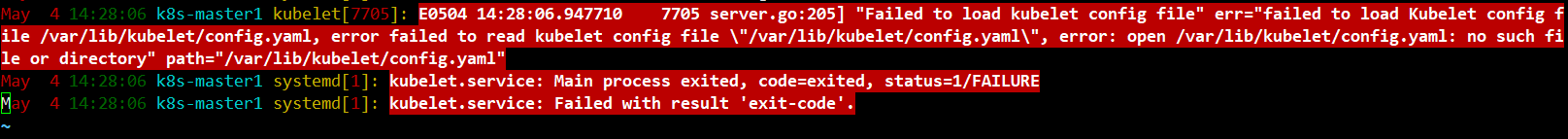

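5.4 Verifying the kubelet Service on the Master Nodes

At this stage the kubelet service reports errors on startup. This is expected, because the node has not yet been initialized with kubeadm.

6. Running kubeadm init on a Master Node

Initialize the cluster on any one of the three masters. Cluster initialization is performed only once.

6.1 kubeadm Commands

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/

* kubeadm init: initializes a Kubernetes control-plane node

* kubeadm join: joins a node to the cluster

* kubeadm upgrade: upgrades a Kubernetes cluster to a newer version

* kubeadm config: if the cluster was initialized with kubeadm v1.7.x or lower, configures the cluster for kubeadm upgrade

* kubeadm token: manages tokens

* kubeadm reset: reverts the changes that kubeadm init or kubeadm join made to the host

* kubeadm certs: manages Kubernetes certificates

* kubeadm kubeconfig: manages kubeconfig files

* kubeadm version: prints the kubeadm version

* kubeadm alpha: kubeadm commands that are still experimental

* kubeadm completion: bash command completion; requires bash-completion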

6.2 kubeadm init Usage

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/

Usage:
  kubeadm init [flags]
  kubeadm init [command]

Flags:

--apiserver-advertise-address string # local IP address the API server advertises and listens on

--apiserver-bind-port int32 # port the API server binds to, default 6443

--apiserver-cert-extra-sans stringSlice # optional extra Subject Alternative Names for the API server serving certificate; can be IP addresses or DNS names

--cert-dir string # path where certificates are stored, default /etc/kubernetes/pki

--certificate-key string # key used to encrypt the control-plane certificates in the kubeadm-certs Secret

--config string # path to a kubeadm configuration file

--control-plane-endpoint string # a stable IP address or DNS name for the control plane, that is, a long-lived and highly available VIP or domain name; multi-master high availability is built on this flag

--cri-socket string # path to the CRI (Container Runtime Interface) socket to connect to; if empty, kubeadm tries to detect it automatically. Use this option only if more than one CRI is installed or the CRI socket is non-standard

--dry-run # do not apply any changes, only print what would be done (a test run)

--experimental-kustomize string # path where the kustomize patches for static pod manifests are stored

--feature-gates string # a set of key=value pairs that describe feature gates; the options are:
    IPv6DualStack=true|false (ALPHA - default=false)
    PublicKeysECDSA=true|false (ALPHA - default=false)
    RootlessControlPlane=true|false (ALPHA - default=false)
    UnversionedKubeletConfigMap=true|false (ALPHA - default=false)

--ignore-preflight-errors strings # preflight checks whose errors may be ignored, for example swap; the value all ignores the errors of every check

--image-repository string # image registry to pull from, default k8s.gcr.io

--kubernetes-version string # Kubernetes version to install, default stable-1

--node-name string # name of the node

--patches string Path to a directory that contains files named "target[suffix][+patchtype].extension". For example, "kube-apiserver0+merge.yaml" or just "etcd.json". "target" can be one of "kube-apiserver", "kube-controller-manager", "kube-scheduler", "etcd". "patchtype" can be one of "strategic", "merge" or "json" and they match the patch formats supported by kubectl. The default "patchtype" is "strategic". "extension" must be either "json" or "yaml". "suffix" is an optional string that can be used to determine which patches are applied first alpha-numerically.

--pod-network-cidr string # IP address range for pods

--service-cidr string # IP address range for services

--service-dns-domain string # internal cluster domain, default cluster.local; the cluster DNS service (kube-dns/coredns) resolves the records generated under it

--skip-certificate-key-print # do not print the key used to encrypt the certificates

--skip-phases strings # phases to skip

--skip-token-print # do not print the token

--token string # token to use

--token-ttl duration # token lifetime, default 24 hours; 0 means the token never expires

--upload-certs # upload the control-plane certificates to the kubeadm-certs Secret

Global flags:

--add-dir-header # if true, adds the file directory to the header of log messages

--log-file string # if non-empty, use this log file

--log-file-max-size uint # maximum size of the log file in megabytes, default 1800; 0 means no limit

--one-output If true, only write logs to their native severity level (vs also writing to each lower severity level)

--rootfs string # path to the host root filesystem (an absolute path)

--skip-headers # if true, omit header prefixes in log messages

--skip-log-headers # if true, omit headers when opening log files

6.3 Verifying the kubeadm Version

kubeadm version

6.4 Preparing Images

root@k8s-master1:~# kubeadm config images list --kubernetes-version v1.20.5

could not convert cfg to an internal cfg: this version of kubeadm only supports deploying clusters with the control plane version >= 1.22.0. Current version: v1.20.5

To see the stack trace of this error execute with --v=5 or higher

root@k8s-master1:~# kubeadm config images list --kubernetes-version v1.22.0

k8s.gcr.io/kube-apiserver:v1.22.0

k8s.gcr.io/kube-controller-manager:v1.22.0

k8s.gcr.io/kube-scheduler:v1.22.0

k8s.gcr.io/kube-proxy:v1.22.0

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

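6.5 Downloading Images on the Master Nodes

It is advisable to download the images on the master nodes in advance to shorten the installation wait. The images come from Google's registry by default, which cannot be reached directly from mainland China, so pull them from the Alibaba Cloud mirror first. This avoids deployment failures caused by image download errors later. Use image tags that match the --kubernetes-version passed to kubeadm init.

images-download.sh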

#!/bin/bash

# images-download.sh

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.8.6

Download and verify:

root@k8s-master1:~# bash images-download.sh

root@k8s-master1:~# docker images

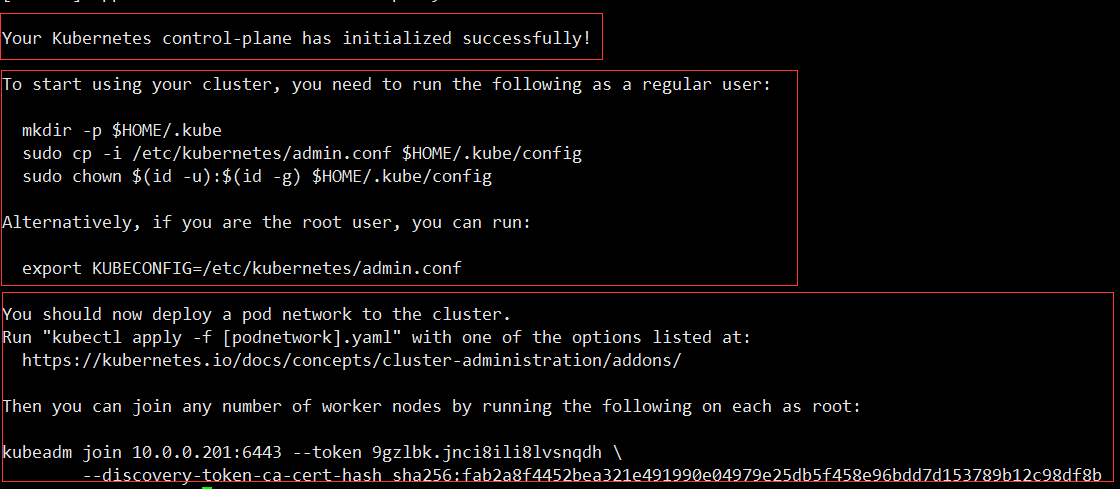
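Instead of maintaining the pull list by hand, the output of `kubeadm config images list` can be mapped onto the mirror. The following is a rough sketch: `mirror_ref` and `pull_plan` are hypothetical helpers, and the mapping assumes that the mirror publishes every image as `<mirror>/<name>:<tag>` with the upstream tag. That assumption may not hold for every image (the script above pulls `coredns:1.8.6` rather than the upstream `v1.8.6` tag), so review the printed commands before running them.

```shell
#!/usr/bin/env bash
# Mirror used throughout this guide.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# mirror_ref IMAGE: map an upstream reference to MIRROR/<name>:<tag>.
mirror_ref() {
    local image="$1"
    # Drop everything up to the last "/" (registry host and any sub-path).
    echo "${MIRROR}/${image##*/}"
}

# pull_plan: read upstream references on stdin, print one docker pull per image.
pull_plan() {
    local image
    while read -r image; do
        [ -n "$image" ] || continue
        echo "docker pull $(mirror_ref "$image")"
    done
}

# Example with two of the images listed in section 6.4.
printf '%s\n' k8s.gcr.io/kube-apiserver:v1.22.0 k8s.gcr.io/pause:3.6 | pull_plan
```

On a master node the input would come from `kubeadm config images list --kubernetes-version <version>`. The commands are only printed, which makes it easy to check them and then pipe them to `bash`. `kubeadm config images pull` with `--image-repository` is another way to pre-pull from a mirror.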

7. Single-Node Initialization (Test Environment)

Note: swap must be disabled and the cgroup driver type changed.

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=10.0.0.201 --apiserver-bind-port=6443 --kubernetes-version=v1.23.6 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=lhl.net --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

7.1 Allowing Pods on the Master Node

root@k8s-master1:~# kubectl taint nodes --all node-role.kubernetes.io/master-

The connection to the server localhost:8080 was refused - did you specify the right host or port?

root@k8s-master1:~# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

root@k8s-master1:~# source /etc/profile

root@k8s-master1:~# kubectl taint nodes --all node-role.kubernetes.io/master-

node/k8s-master1 untainted

7.2 Deploying the Network Add-on

Network add-ons supported by Kubernetes: https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/

flannel image download: https://quay.io/repository/coreos/flannel?tab=tags

flannel project on GitHub: https://github.com/flannel-io/flannel

root@k8s-master1:~# kubectl apply -f flannel-0.14.0-rc1.yml

root@k8s-master1:~# kubectl get pod -A

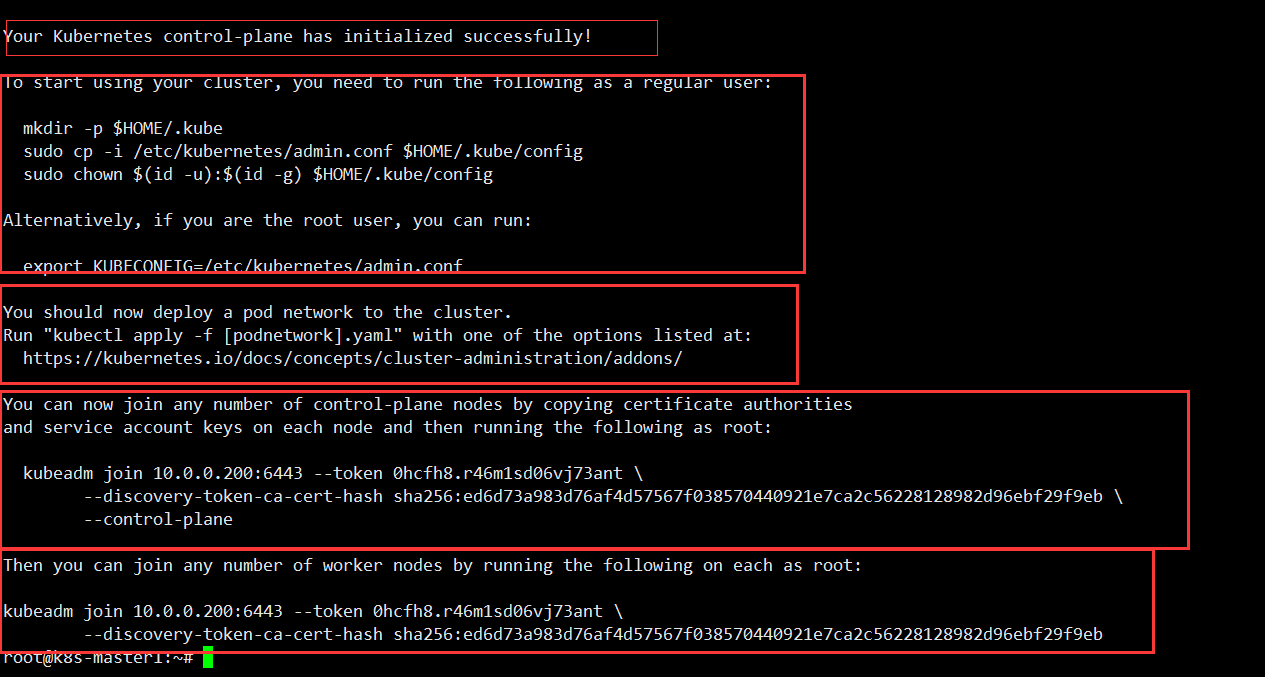

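8. Highly Available Master Initialization (Production Environment)

keepalived provides a highly available VIP and HAProxy acts as the reverse proxy for kube-apiserver. Management requests to kube-apiserver are forwarded to several Kubernetes masters, which makes the control plane highly available.

Add hosts entries on every machine:

vim /etc/hosts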

10.0.0.201 k8s-master1.test.com

10.0.0.202 k8s-master2.test.com

10.0.0.203 k8s-master3.test.com

10.0.0.207 k8s-node1.test.com

10.0.0.208 k8s-node2.test.com

10.0.0.209 k8s-node3.test.com

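8.1 Initializing a Highly Available Master from the Command Line

Note: swap must be disabled and the cgroup driver type changed.

Disable swap:

swapoff -a

To disable it permanently:

vim /etc/fstab

Comment out the swap entry (the last line).

Kubernetes uses the systemd cgroup driver, while Docker uses cgroupfs, so switch Docker to systemd in daemon.json.

docker info | grep -i cgroup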

[root@k8s-master ~]# cat /etc/docker/daemon.json

{"registry-mirrors": ["https://si7y70hh.mirror.aliyuncs.com"],"exec-opts": ["native.cgroupdriver=systemd"]

}
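Whether the change has taken effect can be read from the `docker info` output. The sketch below parses that output from standard input, so it can be tried without Docker installed. `cgroup_driver` and `driver_is_systemd` are hypothetical helper names, and the `Cgroup Driver:` label is assumed to match what the installed Docker version prints.

```shell
#!/usr/bin/env bash
# cgroup_driver: read "docker info" output on stdin and print the cgroup driver.
cgroup_driver() {
    awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# driver_is_systemd: succeeds if the output on stdin reports the systemd driver.
driver_is_systemd() {
    [ "$(cgroup_driver)" = "systemd" ]
}

# Example with sample output; on a real node use: docker info 2>/dev/null | cgroup_driver
printf ' Cgroup Driver: cgroupfs\n' | cgroup_driver
```

If the reported driver is still cgroupfs after daemon.json has been edited, Docker has most likely not been restarted yet.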

Restart Docker after editing daemon.json so that the new cgroup driver takes effect.

The VIP must be up before initialization.

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=10.0.0.201 --control-plane-endpoint=10.0.0.200 --apiserver-bind-port=6443 --kubernetes-version=v1.23.6 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=lhl.net --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

The value of --control-plane-endpoint is the VIP address.

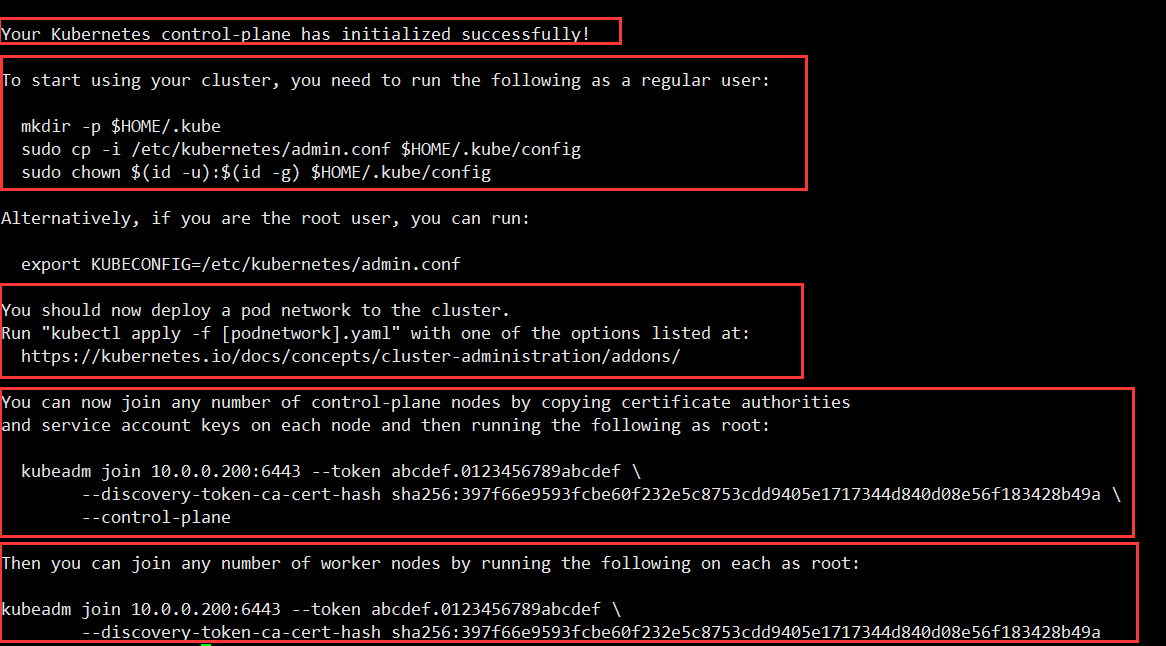

8.2 Initializing a Highly Available Master from a Configuration File

# kubeadm config print init-defaults # print the default initialization configuration

# kubeadm config print init-defaults > kubeadm-init.yaml # write the default configuration to a file

The initialization file after editing:

root@k8s-master1:~# cat kubeadm-init.yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.201
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1.test.com
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.0.0.200:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.23.6
networking:
  dnsDomain: lhl.net
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
scheduler: {}

Initialize the master from the file:

root@k8s-master1:~# kubeadm init --config kubeadm-init.yaml

9. Configuring the kubeconfig File and the Network Add-on

Whether the cluster was initialized from the command line or from a file, and whether it is a single node or a full cluster, the kubeconfig file and the network add-on must be configured.

9.1 The kubeconfig File

The kubeconfig file contains the kube-apiserver address and the related authentication information.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady control-plane,master 40h v1.23.6

Deploy the flannel network add-on:

https://github.com/flannel-io/flannel

root@k8s-master1:~# vim kube-flannel.yml

Change Network from the default 10.244.0.0/16 to the pod CIDR used at initialization:

  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

root@k8s-master1:~# kubectl apply -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1.test.com Ready control-plane,master 12m v1.23.6

9.2 Generating a Certificate Key on the Current Master for New Control-Plane Nodes

root@k8s-master1:~# kubeadm init phase upload-certs --upload-certs

I0507 15:25:56.845044 16527 version.go:255] remote version is much newer: v1.24.0; falling back to: stable-1.23

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

cf9bff6fbb96b1b0336e30e15a0efe5e936b93f3322d95b71a29db1d69a6edfa

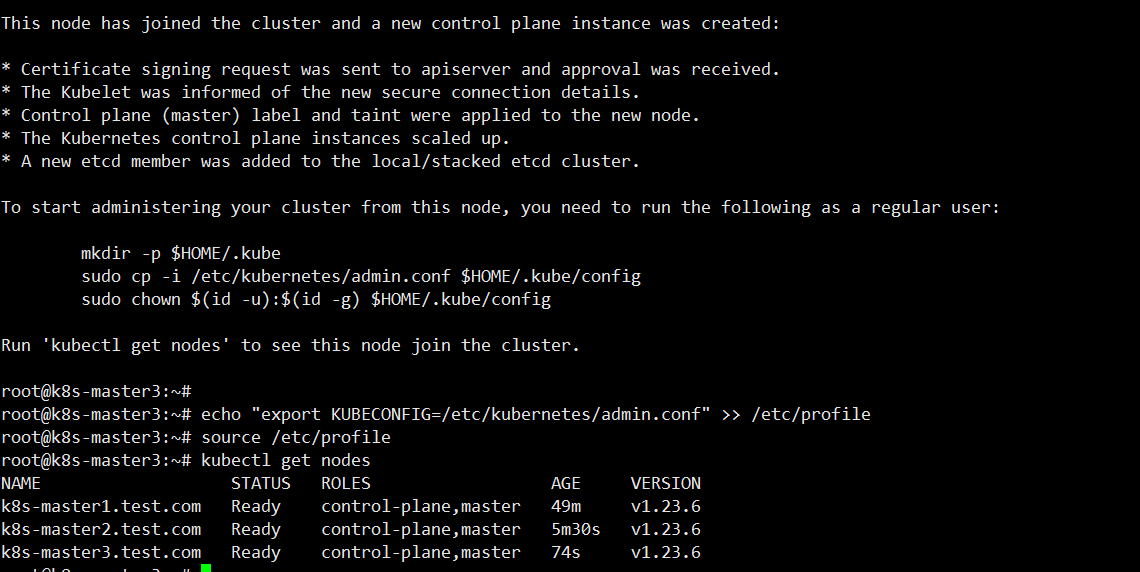

10. Adding Nodes to the Cluster

Add the remaining master nodes and the worker nodes to the cluster.

10.1 Master Nodes 2 and 3

Prerequisites: Docker, kubeadm, and kubelet are installed.

# Master node 2

root@k8s-master2:~# kubeadm join 10.0.0.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:397f66e9593fcbe60f232e5c8753cdd9405e1717344d840d08e56f183428b49a \
    --control-plane --certificate-key cf9bff6fbb96b1b0336e30e15a0efe5e936b93f3322d95b71a29db1d69a6edfa

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

kubectl get nodes

# Master node 3

root@k8s-master3:~# kubeadm join 10.0.0.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:397f66e9593fcbe60f232e5c8753cdd9405e1717344d840d08e56f183428b49a \
    --control-plane --certificate-key cf9bff6fbb96b1b0336e30e15a0efe5e936b93f3322d95b71a29db1d69a6edfa

# Make the setting permanent

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

kubectl get nodes

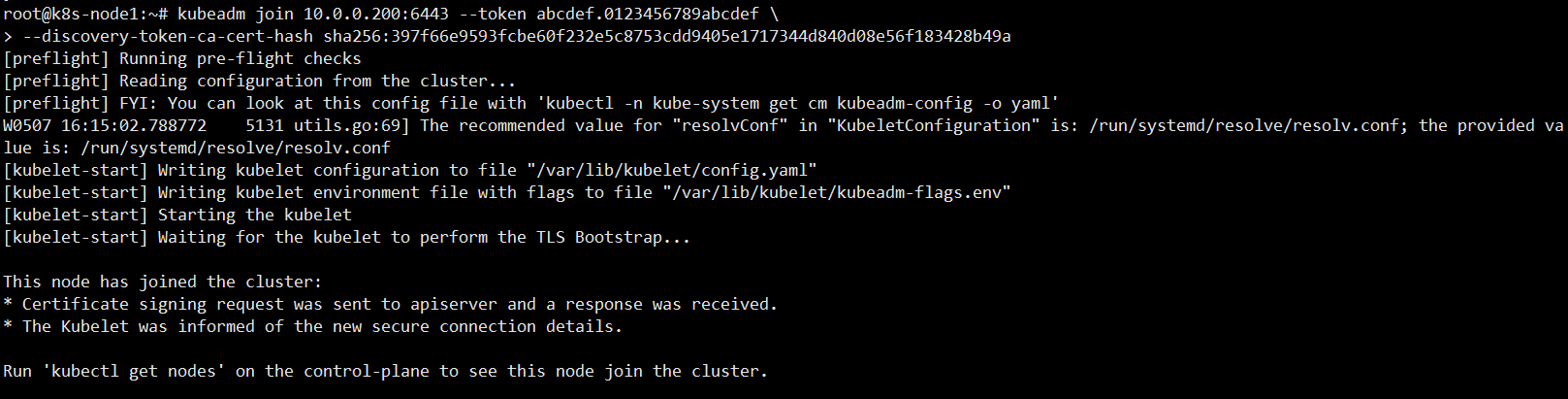
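The join commands for control-plane and worker nodes share the same first part and differ only in the `--control-plane --certificate-key` suffix. The sketch below assembles either form from its parts. `build_join_cmd` is a hypothetical helper that only prints the command, and the hash and key in the example are placeholders, not real values.

```shell
#!/usr/bin/env bash
# build_join_cmd ENDPOINT TOKEN CA_HASH [CERT_KEY]
# Prints a worker join command, or a control-plane join command if CERT_KEY is given.
build_join_cmd() {
    local endpoint="$1" token="$2" ca_hash="$3" cert_key="${4:-}"
    local cmd="kubeadm join ${endpoint} --token ${token}"
    cmd="${cmd} --discovery-token-ca-cert-hash sha256:${ca_hash}"
    if [ -n "$cert_key" ]; then
        # Only control-plane nodes need the key that decrypts the uploaded certificates.
        cmd="${cmd} --control-plane --certificate-key ${cert_key}"
    fi
    echo "$cmd"
}

# Example with placeholder values.
build_join_cmd 10.0.0.200:6443 abcdef.0123456789abcdef PLACEHOLDER_HASH PLACEHOLDER_KEY
```

Tokens expire (24 hours by default), so a join attempted later may need a fresh token. Running `kubeadm token create --print-join-command` on an existing master prints a current worker join command.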

10.2 Adding Worker Nodes

Prerequisites: Docker, kubeadm, and kubelet are installed.

The join command is the one printed on the master when kubeadm init completes.

root@k8s-node1:~# kubeadm join 10.0.0.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:397f66e9593fcbe60f232e5c8753cdd9405e1717344d840d08e56f183428b49a

root@k8s-node2:~# kubeadm join 10.0.0.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:397f66e9593fcbe60f232e5c8753cdd9405e1717344d840d08e56f183428b49a

root@k8s-node3:~# kubeadm join 10.0.0.200:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:397f66e9593fcbe60f232e5c8753cdd9405e1717344d840d08e56f183428b49a

# Copy the kubeconfig from the master to worker nodes 1, 2, and 3

scp 10.0.0.201:/etc/kubernetes/admin.conf /etc/kubernetes/

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

root@k8s-node1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.test.com Ready control-plane,master 94m v1.23.6

k8s-master2.test.com Ready control-plane,master 50m v1.23.6

k8s-master3.test.com Ready control-plane,master 46m v1.23.6

k8s-node1.test.com Ready <none> 26m v1.23.6

k8s-node2.test.com Ready <none> 2m29s v1.23.6

k8s-node3.test.com Ready <none> 2m25s v1.23.6

10.3 Verifying Certificate Status

root@k8s-node1:~# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-9s4lp 55m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-b926h 47m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-fnk54 3m50s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-gbb85 28m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-j9bkb 52m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-q8r7v 55m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-rk4v2 3m46s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-x8b2g 55m kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

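10.6 Creating Containers and Testing the Internal Network

Create test containers and check that they can communicate over the network.

Note 1: in a single-master setup, pods must be allowed to run on the master node.

Note 2: the Docker service on the master and worker nodes must be configured to trust the registry with --insecure-registry 10.0.0.206.

Note 3: log in with docker login 10.0.0.206 before pulling images.

kubectl taint nodes --all node-role.kubernetes.io/master-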

root@k8s-master1:~# kubectl run net-test1 --image=alpine sleep 360000

pod/net-test1 created

root@k8s-master1:~# kubectl run net-test2 --image=alpine sleep 360000

pod/net-test2 created

root@k8s-master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 3 (15h ago) 15h 10.100.5.8 k8s-node3.test.com <none> <none>

net-test2 1/1 Running 3 (15h ago) 15h 10.100.3.8 k8s-node1.test.com <none> <none>

root@k8s-master1:~# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
    link/ether 9a:ef:4d:c1:d3:a5 brd ff:ff:ff:ff:ff:ff
    inet 10.100.5.8/24 brd 10.100.5.255 scope global eth0
       valid_lft forever preferred_lft forever

/ # ping 10.100.3.8

PING 10.100.3.8 (10.100.3.8): 56 data bytes

64 bytes from 10.100.3.8: seq=0 ttl=62 time=1.171 ms

64 bytes from 10.100.3.8: seq=1 ttl=62 time=0.280 ms

64 bytes from 10.100.3.8: seq=2 ttl=62 time=0.307 ms

^C

--- 10.100.3.8 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.280/0.586/1.171 ms

/ # ping www.baidu.com

PING www.baidu.com (103.235.46.39): 56 data bytes

64 bytes from 103.235.46.39: seq=0 ttl=127 time=195.815 ms

64 bytes from 103.235.46.39: seq=1 ttl=127 time=195.567 ms

^C

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

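round-trip min/avg/max = 195.567/195.691/195.815 ms

If the pods cannot reach each other:

1. Check whether the address range in the net-conf.json section of kube-flannel.yml matches the --pod-network-cidr used at initialization. If it does not, edit the file and apply it again. Because some Docker settings depend on flannel, Docker must be restarted as well.

2. If the ranges match, check that net.ipv4.ip_forward = 1 is set and that the other kernel parameters have been tuned.

3. If swap was not disabled, or a network problem caused the certificate download to fail, run kubeadm reset and configure the node again.

4. If pings still fail after all of the above, reboot the servers. In most cases connectivity is restored after a reboot.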
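The first check in the list above can be scripted. The following is a minimal sketch: `flannel_network` and `check_pod_cidr` are hypothetical helpers, and the parsing assumes the manifest contains a `"Network": "<cidr>"` entry in the form shown in section 9.1.

```shell
#!/usr/bin/env bash
# flannel_network FILE: print the "Network" value from net-conf.json in a flannel manifest.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_pod_cidr FILE EXPECTED_CIDR: compare the manifest against the pod CIDR.
check_pod_cidr() {
    local manifest="$1" expected="$2" actual
    actual="$(flannel_network "$manifest")"
    if [ "$actual" = "$expected" ]; then
        echo "ok: flannel Network matches ${expected}"
    else
        echo "mismatch: flannel Network is '${actual}', expected ${expected}"
        return 1
    fi
}
```

A typical call would be `check_pod_cidr kube-flannel.yml 10.100.0.0/16`, where the second argument is the value that was passed to `--pod-network-cidr`. A non-zero exit status means the manifest has to be edited and applied again.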