High-Availability Deployment of a ZooKeeper Cluster on Kubernetes

Author: 程宏斌


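In distributed systems, ZooKeeper is a highly available coordination service. It is widely used for service registration and discovery, configuration management, and distributed locks, and many middleware systems (such as Kafka, HBase, and Hadoop) depend on it. Deploying ZooKeeper on Kubernetes (K8s) has become a common way to automate deployment and scale elastically. ZooKeeper 3.6.3 is a stable, feature-complete release that is suitable for production. This setup uses a Kubernetes StatefulSet to give the ensemble members stable ordinal identities (zk-0, zk-1, zk-2) and their own data directories, and a Headless Service to give each member a fixed network identity, so the members can reliably reach one another. The manifest also sets resource limits and volume mounts to improve stability and maintainability, providing a solid foundation for highly available distributed infrastructure.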
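With a StatefulSet plus a Headless Service, each pod is reachable at a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The sketch below builds the `ZOO_SERVERS` value used in zk.yaml from the replica count. It assumes the default cluster domain `cluster.local`, the service `zk-hs`, the namespace `kafka`, the `host:2888:3888::id` entry format with server ID = ordinal + 1, and a space-separated list (the flattened original does not show the separator); the function name `zk_servers` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the ZOO_SERVERS value for an N-replica StatefulSet.
# Pod zk-<i> resolves through the Headless Service as
#   zk-<i>.<service>.<namespace>.svc.<cluster-domain>
# and gets server ID i+1 (the "::id" suffix), as in zk.yaml.
zk_servers() {
  local replicas=${1:-3} svc=${2:-zk-hs} ns=${3:-kafka} domain=${4:-cluster.local}
  local out="" i=0 entry
  while [ "$i" -lt "$replicas" ]; do
    entry="zk-$i.$svc.$ns.svc.$domain:2888:3888::$((i + 1))"
    out="${out:+$out }$entry"   # append with a single space separator
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

For example, `zk_servers 3` reproduces the three entries in zk.yaml; if `replicas` is ever raised, regenerating the list this way keeps it in step with `ZK_REPLICAS`.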

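Deploy zk.yaml

Save the following manifest as zk.yaml. It defines the Headless Service `zk-hs` and the three-replica StatefulSet `zk`, both in the `kafka` namespace.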

```yaml
apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  namespace: kafka
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  - port: 2181
    name: client-1
  clusterIP: None          # Headless Service: stable per-pod DNS names
  selector:
    app: zk
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
  namespace: kafka
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          # Each zk pod must run on a different node, so at least 3 nodes are needed.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - zk
            topologyKey: "kubernetes.io/hostname"
      containers:
      - command:
        - sh
        - -c
        - bash zkGenConfig.sh && zkServer.sh start-foreground
        env:
        - name: ZK_REPLICAS
          value: "3"
        - name: BITNAMI_DEBUG
          value: "true"
        - name: ZOO_PORT_NUMBER
          value: "2181"
        - name: ZOO_TICK_TIME
          value: "2000"
        - name: ZOO_INIT_LIMIT
          value: "10"
        - name: ZOO_SYNC_LIMIT
          value: "5"
        - name: ZOO_PRE_ALLOC_SIZE
          value: "65536"
        - name: ZOO_SNAPCOUNT
          value: "100"
        - name: ZOO_MAX_CLIENT_CNXNS
          value: "60"
        - name: ZOO_4LW_COMMANDS_WHITELIST
          value: srvr, mntr, ruok
        - name: ZOO_LISTEN_ALLIPS_ENABLED
          value: "no"
        - name: ZOO_AUTOPURGE_INTERVAL
          value: "0"
        - name: ZOO_AUTOPURGE_RETAIN_COUNT
          value: "3"
        - name: ZOO_MAX_SESSION_TIMEOUT
          value: "40000"
        - name: ZOO_SERVERS
          value: zk-0.zk-hs.kafka.svc.cluster.local:2888:3888::1 zk-1.zk-hs.kafka.svc.cluster.local:2888:3888::2 zk-2.zk-hs.kafka.svc.cluster.local:2888:3888::3
        - name: ZOO_ENABLE_AUTH
          value: "no"
        # Note: if the image applies this value, a 1024 MB heap exceeds the 500Mi
        # memory limit below and the container may be OOM-killed.
        - name: ZOO_HEAP_SIZE
          value: "1024"
        - name: ZOO_LOG_LEVEL
          value: ERROR
        - name: ALLOW_ANONYMOUS_LOGIN
          value: "yes"
        - name: SERVER_JVMFLAGS
          value: -Djute.maxbuffer=6108864
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        image: zookeeper:3.6.3
        imagePullPolicy: Never   # the image must already be present on every node
        name: zk
        ports:
        - containerPort: 2181
          name: client-1
          protocol: TCP
        - containerPort: 2888
          name: server
          protocol: TCP
        - containerPort: 3888
          name: election
          protocol: TCP
        resources:
          limits:
            cpu: "0.3"
            memory: 500Mi
          requests:
            cpu: "0.3"
            memory: 500Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /data/zookeeper
          name: data
          subPathExpr: $(POD_NAMESPACE)/zk
      restartPolicy: Always
      securityContext:
        fsGroup: 1001
      serviceAccount: default
      serviceAccountName: default
      terminationGracePeriodSeconds: 30
      volumes:
      - name: data
        hostPath:                # data stays on the node; /tmp may be cleaned on reboot
          path: /tmp/zookeeper
          type: DirectoryOrCreate
```

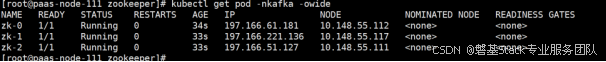
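`replicas: 3` combined with the required `podAntiAffinity` on `kubernetes.io/hostname` puts each member on a different node, so losing one node removes at most one member. A ZooKeeper ensemble keeps serving only while a majority of its voting members is up; the arithmetic below is a small sketch of that rule (the function names are hypothetical). It shows why 3 members tolerate one failure and why a 4th member adds no extra tolerance. Because the anti-affinity is required rather than preferred, any replica beyond the number of schedulable nodes stays Pending.

```shell
#!/bin/sh
# Majority needed by a ZooKeeper ensemble of n voting members: floor(n/2) + 1.
zk_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a majority remains: n - quorum = floor((n-1)/2).
zk_tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Print a small sizing table for common ensemble sizes.
for n in 1 3 4 5; do
  echo "members=$n quorum=$(zk_quorum "$n") tolerated=$(zk_tolerated_failures "$n")"
done
```

For the 3-member ensemble in zk.yaml this gives a quorum of 2 and one tolerated failure; 4 members still tolerate only one, which is why odd ensemble sizes are the usual choice.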

1. Start the ZooKeeper components

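```shell
# The manifest targets the kafka namespace; create it first if it does not exist yet.
kubectl create namespace kafka
kubectl apply -f zk.yaml
kubectl get pod -n kafka -o wide
```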

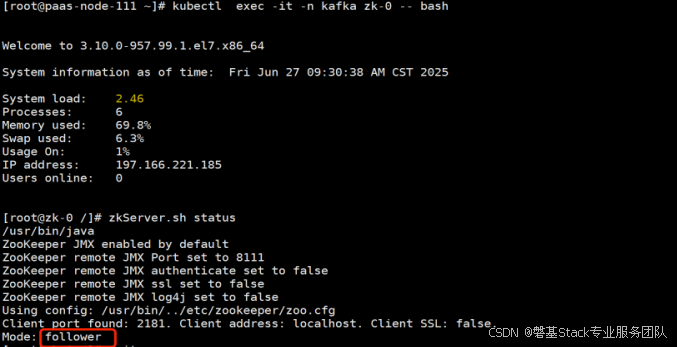

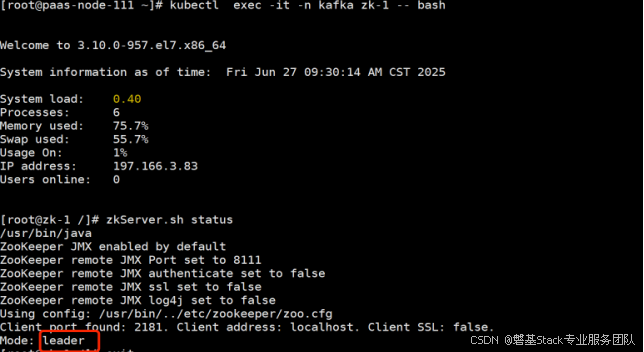

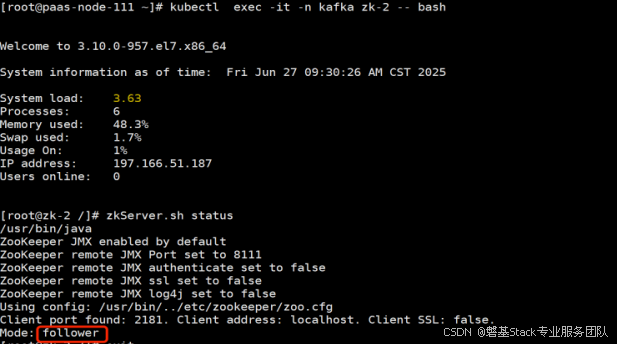
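Because `podManagementPolicy: Parallel` starts all three pods at once, it can help to wait until every `zk-N` pod is Running and Ready before moving on. The sketch below parses the first three columns (`NAME READY STATUS`) of `kubectl get pod`, which are the same with or without `-o wide`; the function names and the 5-second polling interval are assumptions. `kubectl wait --for=condition=Ready pod -l app=zk -n kafka --timeout=300s` is a built-in alternative. Since zk.yaml defines no readiness probe, Ready here only means the container is running, not that the ensemble has elected a leader.

```shell
#!/bin/sh
# Count zk-<n> pods in `kubectl get pod` output whose READY column is x/x
# (all containers ready) and whose STATUS is Running.
count_ready_zk() {
  awk '$1 ~ /^zk-[0-9]+$/ {
         split($2, r, "/")
         if (r[1] == r[2] && $3 == "Running") n++
       }
       END { print n + 0 }'
}

# Hypothetical helper: poll until the expected number of zk pods is ready.
wait_zk_ready() {
  local ns=${1:-kafka} want=${2:-3} tries=${3:-60}   # assumption: 60 x 5 s = 5 min
  local ready
  while [ "$tries" -gt 0 ]; do
    ready=$(kubectl get pod -n "$ns" | count_ready_zk)
    echo "ready zk pods: $ready/$want"
    [ "$ready" -ge "$want" ] && return 0
    tries=$((tries - 1))
    sleep 5
  done
  return 1
}
```

Typical use is `wait_zk_ready kafka 3`, which returns non-zero if the pods are not ready within the polling budget.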

2. Check the cluster roles

Exec into each of the three pods (zk-0, zk-1, zk-2) in turn and check the role each member reports:

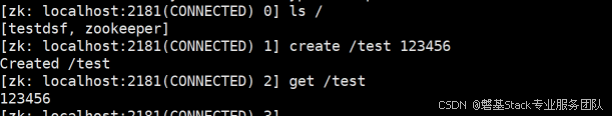
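The per-pod role check can also be scripted. `zkServer.sh status` prints a `Mode:` line (`leader`, `follower`, or `standalone`), and a healthy three-member ensemble should report exactly one leader. This sketch assumes the pod names zk-0 to zk-2 in namespace `kafka`, that `zkServer.sh` is on the container PATH (zk.yaml starts the server with it), and that `zkServer.sh status` finds the same configuration the server was started with; the function names are hypothetical.

```shell
#!/bin/sh
# Extract the role from `zkServer.sh status` output, e.g. "Mode: follower" -> "follower".
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Hypothetical helper: print each replica's role; succeed only if exactly one is leader.
check_zk_roles() {
  local ns=${1:-kafka} replicas=${2:-3} i=0 leaders=0 mode
  while [ "$i" -lt "$replicas" ]; do
    mode=$(kubectl exec -n "$ns" "zk-$i" -- zkServer.sh status 2>/dev/null | zk_mode)
    echo "zk-$i: ${mode:-unreachable}"
    [ "$mode" = leader ] && leaders=$((leaders + 1))
    i=$((i + 1))
  done
  [ "$leaders" -eq 1 ]
}
```

A `standalone` result on any pod would suggest that member did not pick up the three-server configuration.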

3. Test: create a znode named /test with the data 123456, then read the data back

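```shell
kubectl exec -it -n kafka zk-0 -- bash
zkCli.sh
```

At the zkCli.sh prompt, create the znode and read it back; `get /test` should print `123456`:

```
create /test 123456
get /test
```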
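The interactive session writes and reads through the same server. To confirm that the write reached the whole ensemble, the sketch below reads `/test` back through every replica non-interactively, relying on `zkCli.sh -server <host:port> <command>` running a single command and then exiting. The function name and its defaults are hypothetical. If `/test` already exists from the manual step, the `create` simply fails and the reads still run. Reads served by a follower can briefly lag the leader, so a mismatch immediately after the write is possible.

```shell
#!/bin/sh
# Hypothetical helper: create a znode through zk-0, then read it back through
# every replica to confirm the write was replicated.
verify_zk_replication() {
  local ns=${1:-kafka} replicas=${2:-3} path=${3:-/test} value=${4:-123456}
  local i=0 failed=0
  # Write once via zk-0 (fails harmlessly with "Node already exists" on a rerun).
  kubectl exec -n "$ns" zk-0 -- zkCli.sh -server localhost:2181 create "$path" "$value" >/dev/null 2>&1
  while [ "$i" -lt "$replicas" ]; do
    # `get` prints the znode data on its own line; match that line exactly.
    if kubectl exec -n "$ns" "zk-$i" -- zkCli.sh -server localhost:2181 get "$path" 2>/dev/null |
        grep -qxF "$value"; then
      echo "zk-$i: $path = $value"
    else
      echo "zk-$i: $path missing or different"
      failed=1
    fi
    i=$((i + 1))
  done
  [ "$failed" -eq 0 ]
}
```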