Embedded Learning - PyTorch (9) - Day 25

Nearing the end of the series: a complete model training run, the deep-learning equivalent of lighting up my first LED.

# Version with my own comments

import torch
import torchvision
from torch import nn
from torch.utils.tensorboard import SummaryWriter
import time
# from model import *
from torch.utils.data import DataLoader

# Select the training device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root='./data_CIF', train=True, transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10(root='./data_CIF', train=False, transform=torchvision.transforms.ToTensor(), download=True)

# Get the dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)
print(f"Training set size: {train_data_size}")
print(f"Test set size: {test_data_size}")

# Load the datasets with DataLoader
train_loader = DataLoader(dataset=train_data, batch_size=64)
test_loader = DataLoader(dataset=test_data, batch_size=64)

# Build the neural network

class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(in_features=64*4*4, out_features=64),
            nn.Linear(in_features=64, out_features=10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

# Create the network model

tudui = Tudui()
# GPU
tudui.to(device)

# Loss function
loss_fn = nn.CrossEntropyLoss()
# GPU
loss_fn.to(device)

# Optimizer
# learning_rate = 0.001
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Set up some training bookkeeping
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard
writer = SummaryWriter("./logs_train")
start_time = time.time()

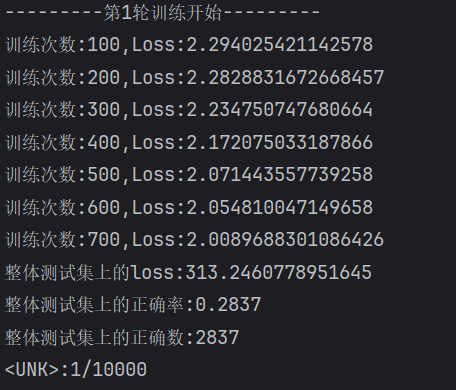

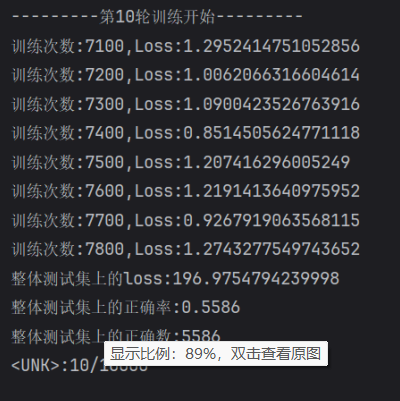

for i in range(epoch):
    print(f"--------- Epoch {i+1} training starts ---------")

    # Training phase
    tudui.train()  # only matters when the network has layers such as Dropout or BatchNorm
    for data in train_loader:
        imgs, targets = data
        # GPU: Tensor.to returns a new tensor, so the result must be assigned back
        imgs = imgs.to(device)
        targets = targets.to(device)
        output = tudui(imgs)
        loss = loss_fn(output, targets)  # compute the loss

        # Let the optimizer update the model
        # Zero the gradients
        optimizer.zero_grad()
        # Backpropagate
        loss.backward()
        # Update the parameters
        optimizer.step()

        # Report progress
        total_train_step += 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(f"Training step: {total_train_step}  elapsed time: {end_time - start_time}")
            print(f"Training step: {total_train_step}, Loss: {loss.item()}")
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test phase
    tudui.eval()  # only matters when the network has layers such as Dropout or BatchNorm
    total_test_loss = 0
    # Total number of correct predictions
    total_accuracy = 0
    # Disable gradient tracking
    with torch.no_grad():
        for data in test_loader:
            imgs, targets = data
            # GPU
            imgs = imgs.to(device)
            targets = targets.to(device)
            output = tudui(imgs)
            loss = loss_fn(output, targets)  # compute the loss
            # Build some metrics
            total_accuracy += (output.argmax(1) == targets).sum().item()  # count predictions in this batch that match the labels
            total_test_loss += loss.item()

    print(f"Loss on the whole test set: {total_test_loss}")
    print(f"Accuracy on the whole test set: {total_accuracy/test_data_size}")
    print(f"Correct predictions on the whole test set: {total_accuracy}")
    # Write to TensorBoard
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy/test_data_size, total_test_step)
    total_test_step += 1

    # Save the model
    torch.save(tudui.state_dict(), f"./tudui{i}.pth")
    print("Model saved")

writer.close()
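One detail in this loop is easy to get wrong: `Tensor.to` returns a new tensor and leaves the original untouched, while `nn.Module.to` converts the module's parameters in place. The sketch below illustrates the difference with a dtype conversion so that it also runs on a CPU-only machine. The variable names are made up for illustration, and the same rule applies to `.to(device)`.

```python
import torch
from torch import nn

x = torch.zeros(2, 3)             # float32 tensor
x.to(torch.float64)               # result is discarded, x itself is unchanged
dtype_after_bare_call = x.dtype   # still torch.float32

x = x.to(torch.float64)           # assigning the result keeps the converted tensor
dtype_after_assign = x.dtype      # torch.float64

layer = nn.Linear(3, 1)
layer.to(torch.float64)           # modules are converted in place
layer_dtype = layer.weight.dtype  # torch.float64

print(dtype_after_bare_call, dtype_after_assign, layer_dtype)
```

This is why `tudui.to(device)` works as a bare call, whereas the image and label tensors need `imgs = imgs.to(device)`.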

# AI-annotated version

import torch
import torchvision
from torch import nn
from torch.utils.tensorboard import SummaryWriter
import time
from torch.utils.data import DataLoader

# ----------------- 1. Device -----------------
# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# ----------------- 2. Prepare the data -----------------
# Download the CIFAR10 training set
train_data = torchvision.datasets.CIFAR10(root='./data_CIF', train=True, transform=torchvision.transforms.ToTensor(), download=True)
# Download the CIFAR10 test set
test_data = torchvision.datasets.CIFAR10(root='./data_CIF', train=False, transform=torchvision.transforms.ToTensor(), download=True)

# Print the sizes of the training and test sets
train_data_size = len(train_data)
test_data_size = len(test_data)
print(f"Training set size: {train_data_size}")
print(f"Test set size: {test_data_size}")

# Wrap the datasets in DataLoader for batched loading
train_loader = DataLoader(dataset=train_data, batch_size=64)
test_loader = DataLoader(dataset=test_data, batch_size=64)

# ----------------- 3. Build the neural network -----------------

class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        # A simple convolutional neural network
        self.model = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),  # [b,3,32,32] -> [b,32,32,32]
            nn.MaxPool2d(2),             # [b,32,32,32] -> [b,32,16,16]
            nn.Conv2d(32, 32, 5, 1, 2),  # -> [b,32,16,16]
            nn.MaxPool2d(2),             # -> [b,32,8,8]
            nn.Conv2d(32, 64, 5, 1, 2),  # -> [b,64,8,8]
            nn.MaxPool2d(2),             # -> [b,64,4,4]
            nn.Flatten(),                # flatten each sample to a vector: [b,64*4*4]
            nn.Linear(64*4*4, 64),
            nn.Linear(64, 10)            # CIFAR10 has 10 classes
        )

    def forward(self, x):
        return self.model(x)

# Create the model object

tudui = Tudui()
tudui.to(device)  # move to the GPU/CPU

# ----------------- 4. Define the loss function and optimizer -----------------
# Cross-entropy loss (the standard choice for multi-class classification)
loss_fn = nn.CrossEntropyLoss().to(device)

# SGD (stochastic gradient descent) optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# ----------------- 5. Training setup -----------------
total_train_step = 0  # total number of training steps
total_test_step = 0   # total number of test rounds
epoch = 10            # number of epochs

# TensorBoard logger
writer = SummaryWriter("./logs_train")
start_time = time.time()  # record the start time

# ----------------- 6. Start training -----------------

for i in range(epoch):
    print(f"--------- Epoch {i+1} training starts ---------")

    # Training mode (Dropout is active, BatchNorm uses batch statistics)
    tudui.train()
    for data in train_loader:
        imgs, targets = data
        imgs, targets = imgs.to(device), targets.to(device)

        # Forward pass
        output = tudui(imgs)
        # Compute the loss
        loss = loss_fn(output, targets)

        # Zero the gradients
        optimizer.zero_grad()
        # Backpropagate (autograd computes the gradients)
        loss.backward()
        # Update the parameters
        optimizer.step()

        total_train_step += 1
        # Print the training loss every 100 steps
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(f"Training step: {total_train_step}  elapsed time: {end_time - start_time}")
            print(f"Training step: {total_train_step}, Loss: {loss.item()}")
            # Write to TensorBoard
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # ----------------- 7. Test phase -----------------
    tudui.eval()  # evaluation mode (Dropout is disabled, BatchNorm uses running statistics)
    total_test_loss = 0
    total_accuracy = 0
    # No gradient tracking: saves memory and speeds up inference
    with torch.no_grad():
        for data in test_loader:
            imgs, targets = data
            imgs, targets = imgs.to(device), targets.to(device)
            output = tudui(imgs)
            loss = loss_fn(output, targets)
            total_test_loss += loss.item()
            # Count the correct predictions
            total_accuracy += (output.argmax(1) == targets).sum().item()

    print(f"Loss on the whole test set: {total_test_loss}")
    print(f"Accuracy on the whole test set: {total_accuracy / test_data_size}")
    print(f"Correct predictions on the whole test set: {total_accuracy}")
    # Write to TensorBoard (test loss and accuracy)
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step += 1

    # ----------------- 8. Save the model -----------------
    torch.save(tudui.state_dict(), f"./tudui{i}.pth")
    print("Model saved")

# ----------------- 9. Close TensorBoard -----------------
writer.close()
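The shape comments in the network definition can be checked without downloading any data. The following is a minimal sketch that feeds a dummy batch through the same architecture and inspects the shapes. The dummy batch and the slicing of the `nn.Sequential` are additions for illustration, not part of the training script.

```python
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.model(x)


model = Tudui()
dummy = torch.ones(64, 3, 32, 32)  # one fake batch of 64 CIFAR10-sized images

with torch.no_grad():
    features = model.model[:6](dummy)  # output of the third pooling layer
    out = model(dummy)                 # full forward pass

print(features.shape)  # torch.Size([64, 64, 4, 4])
print(out.shape)       # torch.Size([64, 10])
```

If the `in_features` of the first `nn.Linear` did not match `64*4*4`, the full forward pass would raise a shape mismatch error, which makes this a cheap check to run before a long training job.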

Results

I forgot to clear the old log data before this run.

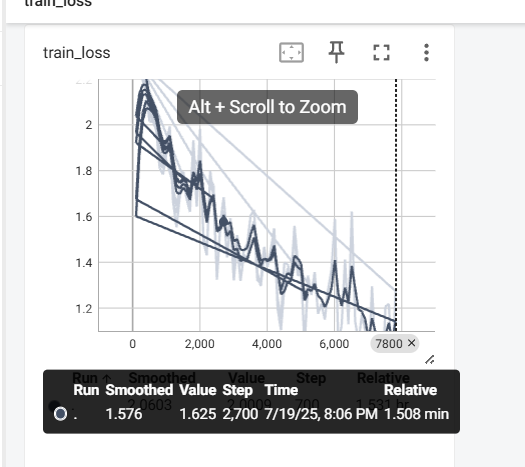
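To avoid mixing curves from earlier runs, the log directory can be emptied before the `SummaryWriter` is created. Below is a minimal sketch using only the standard library. The helper name `clear_log_dir` is hypothetical, and note that it deletes everything under the given directory.

```python
import shutil
from pathlib import Path


def clear_log_dir(log_dir):
    """Delete log_dir and everything in it, then recreate it empty."""
    path = Path(log_dir)
    if path.exists():
        shutil.rmtree(path)
    path.mkdir(parents=True)
    return path
```

It would be called once at the top of the training script, for example `clear_log_dir("./logs_train")`, before `writer = SummaryWriter("./logs_train")`. An alternative that keeps old runs is to give every run its own subdirectory.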

The complete model validation routine

import torch
import torchvision.transforms
from PIL import Image
from torch import nn

image_path = "./images/微信截图_20250719220956.png"
image = Image.open(image_path).convert('RGB')
print(type(image))

transform = torchvision.transforms.Compose([
    torchvision.transforms.Resize((32, 32)),
    torchvision.transforms.ToTensor(),
])
image = transform(image)
print(type(image))

# Build the neural network

class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(in_features=64*4*4, out_features=64),
            nn.Linear(in_features=64, out_features=10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

model = Tudui()

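# map_location lets weights that were saved from a GPU load on a CPU-only machine
model.load_state_dict(torch.load("tudui9.pth", map_location=torch.device("cpu")))
# Add the batch dimension the network expects: [3,32,32] -> [1,3,32,32]
image = torch.reshape(image, (1, 3, 32, 32))
model.eval()
with torch.no_grad():
    output = model(image)
print(output)
print(output.argmax(1))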

Class index 5 is indeed "dog" in CIFAR10, so the validation succeeded.
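Reading the class off a raw index is easy to get wrong, so it can help to map the output to a name directly. The sketch below turns the model's logits into a class name and a softmax confidence. The helper `describe_prediction` and the example logits are made up for illustration. The class list follows the standard CIFAR10 label order, which torchvision also exposes as the dataset's `classes` attribute.

```python
import torch

# Standard CIFAR10 label order (index 0 to 9)
CIFAR10_CLASSES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]


def describe_prediction(logits):
    """Return (class name, softmax confidence) for a [1, 10] logits tensor."""
    probs = torch.softmax(logits, dim=1)
    confidence, index = probs.max(dim=1)
    return CIFAR10_CLASSES[index.item()], confidence.item()


# Made-up logits standing in for the `output` tensor of the validation script
example_logits = torch.tensor(
    [[0.1, -1.0, 0.3, 1.2, 0.0, 3.5, 0.2, -0.4, -2.0, 0.5]]
)
name, confidence = describe_prediction(example_logits)
print(name, round(confidence, 3))
```

In the validation script, `describe_prediction(output)` would replace the manual lookup of index 5.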