
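Competition background: this builds on the CSDN post "Datawhale AI Summer Camp: User Insight Challenge Based on Comments on Product-Promotion Videos".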

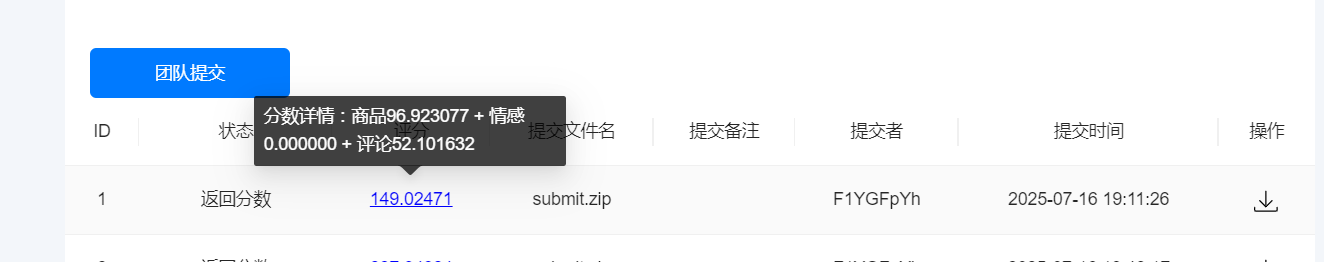

Improvements

Main improvement: replace the jieba word segmenter with the BERT (bert-base-chinese) tokenizer to improve product-name recognition.
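To make the difference concrete, here is a rough, stdlib-only approximation of the basic tokenization step that bert-base-chinese applies before WordPiece: each CJK character becomes its own token, runs of Latin letters and digits stay together, and punctuation is split off. `char_tokenize` is a hypothetical helper, not the transformers API, and it skips WordPiece, accent stripping and symbol handling. jieba, by contrast, segments text into multi-character words based on its dictionary.

```python
import unicodedata


def is_cjk(ch: str) -> bool:
    """True for characters in the CJK Unified Ideographs block (U+4E00 to U+9FFF)."""
    return 0x4E00 <= ord(ch) <= 0x9FFF


def char_tokenize(text: str) -> list[str]:
    """Rough approximation of bert-base-chinese's basic tokenization (no WordPiece):
    lowercase, one token per CJK character, punctuation split off,
    runs of other characters (Latin letters, digits) kept together."""
    tokens: list[str] = []
    buf = ""
    for ch in text.lower():
        if is_cjk(ch) or unicodedata.category(ch).startswith("P"):
            if buf:  # flush a pending Latin/digit run
                tokens.append(buf)
                buf = ""
            tokens.append(ch)
        elif ch.isspace():
            if buf:
                tokens.append(buf)
                buf = ""
        else:
            buf += ch
    if buf:
        tokens.append(buf)
    return tokens


print(char_tokenize("小米手机Pro，真好用"))
# → ['小', '米', '手', '机', 'pro', '，', '真', '好', '用']
```

With character tokens, every character of a brand or model name becomes its own TF-IDF feature, so the features no longer depend on whether a segmenter happens to keep the name as one word.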

The improved code:

```python
import os

import pandas as pd
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import SGDClassifier
from transformers import BertTokenizer

# 1. Load the data
video_data = pd.read_csv("origin_videos_data.csv")
comments_data = pd.read_csv("origin_comments_data.csv")

# 2. Show 10 sampled rows
print(video_data.sample(10))

# 3. Show the first rows of the comments table
print(comments_data.head())

# 4. Merge the two text fields of the video data
video_data["text"] = video_data["video_desc"].fillna("") + " " + video_data["video_tags"].fillna("")

# 5. Load the BERT tokenizer from the local bert-base-chinese copy
tokenizer = BertTokenizer.from_pretrained("/mnt/workspace/bert-base-chinese")


# 6. Product-name recognition: TF-IDF over BERT tokens + linear classifier
class BertProductNamePredictor:
    def __init__(self):
        # token_pattern=None: the BERT tokenizer replaces sklearn's default regex
        self.vectorizer = TfidfVectorizer(tokenizer=tokenizer.tokenize, token_pattern=None, max_features=100)
        self.classifier = SGDClassifier()

    def fit(self, texts, labels):
        X = self.vectorizer.fit_transform(texts)
        self.classifier.fit(X, labels)

    def predict(self, texts):
        X = self.vectorizer.transform(texts)
        return self.classifier.predict(X)


product_name_predictor = BertProductNamePredictor()
labeled_videos = video_data[video_data["product_name"].notnull()]
product_name_predictor.fit(labeled_videos["text"], labeled_videos["product_name"])
video_data["product_name"] = product_name_predictor.predict(video_data["text"])

# 7. Inspect the comment columns
print(comments_data.columns)


# 8. Comment classification: same model, without the vocabulary cap
class BertSentimentAnalyzer(BertProductNamePredictor):
    def __init__(self):
        super().__init__()
        self.vectorizer = TfidfVectorizer(tokenizer=tokenizer.tokenize, token_pattern=None)


# Train one classifier per label column and predict
for col in ["sentiment_category", "user_scenario", "user_question", "user_suggestion"]:
    sentiment_analyzer = BertSentimentAnalyzer()
    nonnull_data = comments_data[comments_data[col].notnull()]
    if not nonnull_data.empty:
        # Labels are read as floats; cast them to int for the classifier
        sentiment_analyzer.fit(nonnull_data["comment_text"], nonnull_data[col].astype(int))
        # Predict every comment, not only the labeled ones: the unlabeled rows are the ones submitted
        comments_data[col] = sentiment_analyzer.predict(comments_data["comment_text"])

# 9. Number of keywords per cluster theme
top_n_words = 20


def add_cluster_theme(mask, theme_col, n_clusters=8):
    """Cluster the comments selected by mask with KMeans and label each with its cluster's top words."""
    comments_data[theme_col] = None
    texts = comments_data.loc[mask, "comment_text"]
    if len(texts) < n_clusters:  # KMeans needs at least n_clusters samples
        return
    # Reuses the TF-IDF vectorizer of the last classifier trained in step 8
    X = sentiment_analyzer.vectorizer.transform(texts)
    kmeans = KMeans(n_clusters=n_clusters, random_state=42)
    labels = kmeans.fit_predict(X)
    feature_names = sentiment_analyzer.vectorizer.get_feature_names_out()
    themes = [" ".join(feature_names[idx] for idx in center.argsort()[::-1][:top_n_words])
              for center in kmeans.cluster_centers_]
    comments_data.loc[mask, theme_col] = [themes[label] for label in labels]


# 10-14. Positive, negative, scenario, question and suggestion clusters
add_cluster_theme(comments_data["sentiment_category"].isin([1, 3]), "positive_cluster_theme")
add_cluster_theme(comments_data["sentiment_category"].isin([2, 3]), "negative_cluster_theme")
add_cluster_theme(comments_data["user_scenario"] == 1, "scenario_cluster_theme")
add_cluster_theme(comments_data["user_question"] == 1, "question_cluster_theme")
add_cluster_theme(comments_data["user_suggestion"] == 1, "suggestion_cluster_theme")

# 15. Create the submission directory
os.makedirs("submit", exist_ok=True)

# 16. Save the results
video_data[["video_id", "product_name"]].to_csv("submit/submit_videos.csv", index=None)
comments_data[["video_id", "comment_id", "sentiment_category",
               "user_scenario", "user_question", "user_suggestion",
               "positive_cluster_theme", "negative_cluster_theme",
               "scenario_cluster_theme", "question_cluster_theme",
               "suggestion_cluster_theme"]].to_csv("submit/submit_comments.csv", index=None)
print("Results saved to the submit directory!")

# Package the results (IPython/Jupyter shell escape; run it in a notebook cell)
!zip -r submit.zip submit/
```
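Both versions plug a custom tokenizer into scikit-learn's `TfidfVectorizer` through `tokenizer=`. As a plain-Python sketch of what the vectorizer then computes under its default settings (raw term counts, smoothed idf `ln((1 + n) / (1 + df)) + 1`, L2-normalized rows, columns in sorted order), assuming lowercasing is left out; `tfidf` is a hypothetical helper name, not the sklearn API:

```python
import math
from collections import Counter
from typing import Callable


def tfidf(docs: list[str], tokenize: Callable[[str], list[str]]):
    """Smoothed TF-IDF with L2-normalized rows, approximating TfidfVectorizer defaults."""
    tokenized = [tokenize(d) for d in docs]
    vocab = sorted({t for toks in tokenized for t in toks})
    n = len(docs)
    # Document frequency: in how many documents each term appears
    df = Counter(t for toks in tokenized for t in set(toks))
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        row = [counts[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in row)) or 1.0  # avoid dividing by zero for empty docs
        rows.append([v / norm for v in row])
    return vocab, rows


# Passing `list` as the tokenizer yields one token per character
vocab, rows = tfidf(["手机好用", "手机太贵"], list)
print(vocab)  # ['太', '好', '手', '机', '用', '贵']
```

Characters shared by every document (here 手 and 机) get the minimum idf of 1, so the distinguishing characters dominate each row.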

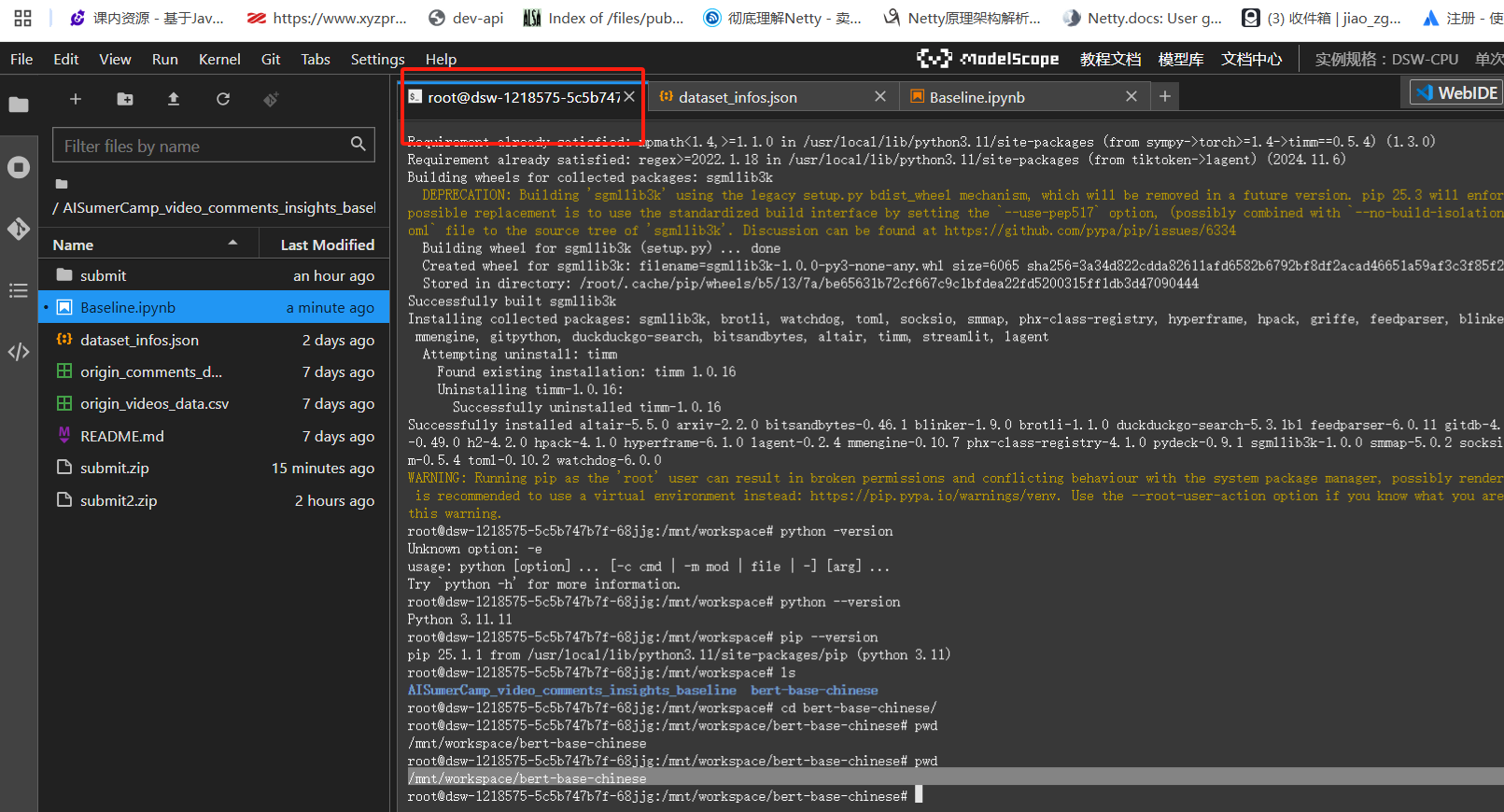

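Before running the improved code, download the BERT model in advance (clone it into `/mnt/workspace` so the path matches the one passed to `from_pretrained`):

```bash
git lfs install
git clone https://hf-mirror.com/google-bert/bert-base-chinese
```

Directory after the download (check it in a bash shell with `pwd`):

`/mnt/workspace/bert-base-chinese`

Result: product recognition improved, but the sentiment analysis scored 0, so I kept modifying the code.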
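When a label column scores 0, one thing worth checking is whether the unlabeled rows, which are the ones submitted, actually receive predictions. A loop that first resets the column to NaN and then writes predictions back only for rows that already had labels leaves every unlabeled row empty. The toy sketch below contrasts the two patterns in plain Python; `fill_predictions` and the keyword-based `toy_predict` are hypothetical stand-ins for the DataFrame code and the trained classifier.

```python
from typing import Callable, Optional


def fill_predictions(texts: list[str], labels: list[Optional[int]],
                     predict: Callable[[list[str]], list[int]],
                     only_labeled: bool) -> list[Optional[int]]:
    """Return a label column after prediction.

    only_labeled=True: clear the column, then predict only rows that already had labels.
    only_labeled=False: predict every row, including the unlabeled ones."""
    out: list[Optional[int]] = [None] * len(texts)
    if only_labeled:
        idx = [i for i, y in enumerate(labels) if y is not None]
    else:
        idx = list(range(len(texts)))
    preds = predict([texts[i] for i in idx])
    for i, p in zip(idx, preds):
        out[i] = p
    return out


def toy_predict(batch: list[str]) -> list[int]:
    """Hypothetical classifier: 2 (negative) if the text mentions 贵 (expensive), else 1."""
    return [2 if "贵" in t else 1 for t in batch]


texts = ["好用", "太贵了", "还行", "有点贵"]
labels = [1, 2, None, None]  # the last two rows are unlabeled, i.e. the rows to submit
print(fill_predictions(texts, labels, toy_predict, only_labeled=True))   # [1, 2, None, None]
print(fill_predictions(texts, labels, toy_predict, only_labeled=False))  # [1, 2, 1, 2]
```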

Further optimization
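This round keeps the cluster-theme idea but switches the clustering tokenizer back to jieba and cuts both the number of clusters and `top_n_words` to 5. Each theme is simply the `top_n` vocabulary terms with the largest weights in a cluster centroid, which the code obtains with `cluster_centers_[i].argsort()[::-1][:top_n_words]`. A stdlib-only sketch of that step, using a hypothetical `cluster_themes` helper and made-up centroid weights:

```python
def cluster_themes(centers: list[list[float]], feature_names: list[str], top_n: int) -> list[str]:
    """Join the top_n feature names with the largest centroid weights, one string per cluster.
    Mirrors center.argsort()[::-1][:top_n]; tied weights may come out in a different order."""
    themes = []
    for center in centers:
        order = sorted(range(len(center)), key=lambda j: center[j], reverse=True)
        themes.append(" ".join(feature_names[j] for j in order[:top_n]))
    return themes


# Toy vocabulary and centroid weights, made up for illustration
names = ["价格", "质量", "物流", "包装"]
centers = [[0.1, 0.7, 0.0, 0.2], [0.5, 0.0, 0.4, 0.1]]
print(cluster_themes(centers, names, top_n=2))  # ['质量 包装', '价格 物流']
```

A smaller `top_n` gives shorter theme strings made only of each cluster's strongest terms; whether that scores better depends on the competition's evaluation, which the source does not describe.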

```python
import os
import zipfile

import jieba
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import SGDClassifier
from sklearn.pipeline import make_pipeline
from transformers import BertTokenizer

# 1. Load the data
video_data = pd.read_csv("origin_videos_data.csv")
comments_data = pd.read_csv("origin_comments_data.csv")

# 2. Show 10 sampled rows
print(video_data.sample(10))

# 3. Show the first rows of the comments table
print(comments_data.head())

# 4. Merge the two text fields of the video data
video_data["text"] = video_data["video_desc"].fillna("") + " " + video_data["video_tags"].fillna("")

# 5. Load the BERT tokenizer from the local bert-base-chinese copy
tokenizer = BertTokenizer.from_pretrained("/mnt/workspace/bert-base-chinese")


# 6. Product-name recognition: TF-IDF over BERT tokens + linear classifier
class BertProductNamePredictor:
    def __init__(self):
        # token_pattern=None: the BERT tokenizer replaces sklearn's default regex
        self.vectorizer = TfidfVectorizer(tokenizer=tokenizer.tokenize, token_pattern=None, max_features=100)
        self.classifier = SGDClassifier()

    def fit(self, texts, labels):
        X = self.vectorizer.fit_transform(texts)
        self.classifier.fit(X, labels)

    def predict(self, texts):
        X = self.vectorizer.transform(texts)
        return self.classifier.predict(X)


product_name_predictor = BertProductNamePredictor()
labeled_videos = video_data[video_data["product_name"].notnull()]
product_name_predictor.fit(labeled_videos["text"], labeled_videos["product_name"])
video_data["product_name"] = product_name_predictor.predict(video_data["text"])

# 7. Inspect the comment columns
print(comments_data.columns)


# 8. Comment classification: same model, without the vocabulary cap
class BertSentimentAnalyzer(BertProductNamePredictor):
    def __init__(self):
        super().__init__()
        self.vectorizer = TfidfVectorizer(tokenizer=tokenizer.tokenize, token_pattern=None)


# Train one classifier per label column and predict
for col in ["sentiment_category", "user_scenario", "user_question", "user_suggestion"]:
    sentiment_analyzer = BertSentimentAnalyzer()
    nonnull_data = comments_data[comments_data[col].notnull()]
    if not nonnull_data.empty:
        # Labels are read as floats; cast them to int for the classifier
        sentiment_analyzer.fit(nonnull_data["comment_text"], nonnull_data[col].astype(int))
        # Predict every comment, not only the labeled ones: the unlabeled rows are the ones submitted
        comments_data[col] = sentiment_analyzer.predict(comments_data["comment_text"])

# 9. Number of keywords per cluster theme
top_n_words = 5  # changed to 5; adjust as needed


# 10-13. Cluster themes: jieba segmentation + TF-IDF + KMeans with 5 clusters
def add_cluster_theme(mask, theme_col, n_clusters=5):
    """Cluster the comments selected by mask and label each with its cluster's top words."""
    comments_data[theme_col] = None
    texts = comments_data.loc[mask, "comment_text"]
    if len(texts) < n_clusters:  # KMeans needs at least n_clusters samples
        return
    kmeans_predictor = make_pipeline(
        TfidfVectorizer(tokenizer=jieba.lcut, token_pattern=None),
        KMeans(n_clusters=n_clusters, random_state=42),
    )
    kmeans_predictor.fit(texts)
    kmeans_cluster_label = kmeans_predictor.predict(texts)
    feature_names = kmeans_predictor.named_steps["tfidfvectorizer"].get_feature_names_out()
    cluster_centers = kmeans_predictor.named_steps["kmeans"].cluster_centers_
    kmeans_top_word = [" ".join(feature_names[idx] for idx in center.argsort()[::-1][:top_n_words])
                       for center in cluster_centers]
    comments_data.loc[mask, theme_col] = [kmeans_top_word[x] for x in kmeans_cluster_label]


# positive_cluster_theme is one of the submitted columns, so positive comments are clustered too
add_cluster_theme(comments_data["sentiment_category"].isin([1, 3]), "positive_cluster_theme")
add_cluster_theme(comments_data["sentiment_category"].isin([2, 3]), "negative_cluster_theme")
add_cluster_theme(comments_data["user_scenario"] == 1, "scenario_cluster_theme")
add_cluster_theme(comments_data["user_question"] == 1, "question_cluster_theme")
add_cluster_theme(comments_data["user_suggestion"] == 1, "suggestion_cluster_theme")

# 14. Create the submission directory
os.makedirs("submit", exist_ok=True)

# 15. Save the results
video_data[["video_id", "product_name"]].to_csv("submit/submit_videos.csv", index=None)
comments_data[["video_id", "comment_id", "sentiment_category",
               "user_scenario", "user_question", "user_suggestion",
               "positive_cluster_theme", "negative_cluster_theme",
               "scenario_cluster_theme", "question_cluster_theme",
               "suggestion_cluster_theme"]].to_csv("submit/submit_comments.csv", index=None)
print("Results saved to the submit directory!")

# 16. Create the zip archive (both CSVs stored at the archive root)
with zipfile.ZipFile("submit.zip", "w") as zipf:
    zipf.write("submit/submit_videos.csv", arcname="submit_videos.csv")
    zipf.write("submit/submit_comments.csv", arcname="submit_comments.csv")
print("Results compressed into submit.zip!")
```
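The two versions package the results differently: `zip -r submit.zip submit/` stores the entries under a `submit/` folder inside the archive, while the `zipfile` version passes `arcname` so both CSVs sit at the archive root. The source does not say which layout the competition expects. A stdlib-only sketch of the flat layout, with a hypothetical `pack_flat` helper that also lists the archive contents for checking:

```python
import os
import tempfile
import zipfile


def pack_flat(src_dir: str, zip_path: str) -> list[str]:
    """Write every regular file in src_dir into zip_path at the archive root
    (arcname = bare file name) and return the names stored in the archive."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name in sorted(os.listdir(src_dir)):
            path = os.path.join(src_dir, name)
            if os.path.isfile(path):  # subdirectories are skipped
                zf.write(path, arcname=name)
    with zipfile.ZipFile(zip_path) as zf:
        return zf.namelist()


# Demo in a throwaway directory with two placeholder CSVs
with tempfile.TemporaryDirectory() as tmp:
    submit = os.path.join(tmp, "submit")
    os.makedirs(submit)
    for fname in ("submit_videos.csv", "submit_comments.csv"):
        with open(os.path.join(submit, fname), "w", encoding="utf-8") as f:
            f.write("video_id\n")
    print(pack_flat(submit, os.path.join(tmp, "submit.zip")))
    # → ['submit_comments.csv', 'submit_videos.csv']
```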

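Although I no longer had a chance to verify this last version, raising the product-recognition score above 96 still completes one stage of optimization.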