
xformers

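xformers is Meta's library for optimizing Transformer attention. It can noticeably speed up the attention computation and reduce GPU memory use. Most of the articles I found only cover installation and say nothing about how to actually use it.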
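For a sense of scale: standard attention materializes a score matrix of shape [B, H, N, N], so its size grows quadratically with the sequence length N. The sketch below only does that arithmetic. `attention_matrix_bytes` is a hypothetical helper (not part of xformers), and 4 bytes per element (fp32) is an assumption.

```python
def attention_matrix_bytes(batch: int, heads: int, seq_len: int, bytes_per_elem: int = 4) -> int:
    """Bytes needed for the [B, H, N, N] score matrix that standard attention stores."""
    return batch * heads * seq_len * seq_len * bytes_per_elem


if __name__ == "__main__":
    # 196 is the patch count used later in this post; 4096 is an illustrative longer sequence.
    for n in (196, 4096):
        size = attention_matrix_bytes(batch=3, heads=8, seq_len=n)
        print(f"N={n}: {size / 2**20:.1f} MiB")
```

With 3 samples and 8 heads this comes to about 3.5 MiB at 196 tokens and 1536 MiB at 4096 tokens, for a single attention layer and before counting gradients.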

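Installation

The xformers builds installed below require PyTorch 2.7.0 or later. Run the commands in a Linux shell or in the terminal of your Python environment.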

pip install torch==2.7.1+cu118 --index-url https://download.pytorch.org/whl/cu118

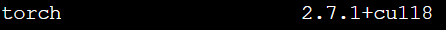

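This downloads the torch build for your CUDA version, so pick the index URL that matches your GPU setup. You must install the GPU build of torch. The installed version carries a +cu118 suffix; run pip list to confirm that the installation succeeded.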
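The same check can be scripted instead of reading the pip list output by eye. This is a minimal sketch using only the standard library; `cuda_tag` and `installed_torch_tag` are hypothetical helper names. It assumes the build tag is the part after the `+` in the version string, as in `2.7.1+cu118`. A missing tag does not prove the build is CPU-only, so calling `torch.cuda.is_available()` at runtime remains the more reliable test.

```python
from importlib import metadata
from typing import Optional


def cuda_tag(version: str) -> Optional[str]:
    """Return the local build tag of a version string, e.g. 'cu118' for '2.7.1+cu118'."""
    _, sep, local = version.partition("+")
    return local if sep else None


def installed_torch_tag() -> Optional[str]:
    """Build tag of the installed torch package, or None if torch is missing or untagged."""
    try:
        return cuda_tag(metadata.version("torch"))
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("torch build tag:", installed_torch_tag())
```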

Once torch is installed, install the xformers build for the same CUDA version:

# [linux only] cuda 11.8 version

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu118

# [linux & win] cuda 12.6 version

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu126

# [linux & win] cuda 12.8 version

pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu128

# [linux only] (EXPERIMENTAL) rocm 6.3 version

pip3 install -U xformers --index-url https://download.pytorch.org/whl/rocm6.3
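If you script your environment setup, the list above can be encoded as a small lookup. This is only a sketch: `XFORMERS_INDEXES` and `xformers_install_command` are hypothetical names, and the table simply mirrors the four commands and platform notes listed above.

```python
import platform

# Index URL and supported systems per build tag, copied from the commands above.
XFORMERS_INDEXES = {
    "cu118": ("https://download.pytorch.org/whl/cu118", {"Linux"}),
    "cu126": ("https://download.pytorch.org/whl/cu126", {"Linux", "Windows"}),
    "cu128": ("https://download.pytorch.org/whl/cu128", {"Linux", "Windows"}),
    "rocm6.3": ("https://download.pytorch.org/whl/rocm6.3", {"Linux"}),
}


def xformers_install_command(tag: str, system: str) -> str:
    """Build the pip command for a build tag, rejecting unlisted tag/system pairs."""
    if tag not in XFORMERS_INDEXES:
        raise ValueError(f"no xformers index listed for {tag!r}")
    url, systems = XFORMERS_INDEXES[tag]
    if system not in systems:
        raise ValueError(f"{tag} wheels are not listed for {system}")
    return f"pip3 install -U xformers --index-url {url}"


if __name__ == "__main__":
    try:
        # platform.system() returns e.g. 'Linux' or 'Windows'.
        print(xformers_install_command("cu126", platform.system()))
    except ValueError as err:
        print(err)
```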

Usage

We use the function xformers.ops.memory_efficient_attention, which replaces the usual attention computation. Its original source is shown below for reference; you do not need to study it in detail:

    def memory_efficient_attention(
        query: torch.Tensor,
        key: torch.Tensor,
        value: torch.Tensor,
        attn_bias: Optional[Union[torch.Tensor, AttentionBias]] = None,
        p: float = 0.0,
        scale: Optional[float] = None,
        *,
        op: Optional[AttentionOp] = None,
    ) -> torch.Tensor:
        """Implements the memory-efficient attention mechanism following
        `"Self-Attention Does Not Need O(n^2) Memory" <http://arxiv.org/abs/2112.05682>`_.

        :Inputs shape:

        - Input tensors must be in format ``[B, M, H, K]``, where B is the batch size, M \
            the sequence length, H the number of heads, and K the embeding size per head

        - If inputs have dimension 3, it is assumed that the dimensions are ``[B, M, K]`` and ``H=1``

        - Inputs can be non-contiguous - we only require the last dimension's stride to be 1

        :Equivalent pytorch code:

        .. code-block:: python

            scale = 1 / query.shape[-1] ** 0.5
            query = query * scale
            attn = query @ key.transpose(-2, -1)
            if attn_bias is not None:
                attn = attn + attn_bias
            attn = attn.softmax(-1)
            attn = F.dropout(attn, p)
            return attn @ value

        :Examples:

        .. code-block:: python

            import xformers.ops as xops

            # Compute regular attention
            y = xops.memory_efficient_attention(q, k, v)

            # With a dropout of 0.2
            y = xops.memory_efficient_attention(q, k, v, p=0.2)

            # Causal attention
            y = xops.memory_efficient_attention(
                q, k, v,
                attn_bias=xops.LowerTriangularMask()
            )

        :Supported hardware:

            NVIDIA GPUs with compute capability above 6.0 (P100+), datatype ``f16``, ``bf16`` and ``f32``.

        :Note:

            This operator may be nondeterministic.

        Raises:
            NotImplementedError: if there is no operator available to compute the MHA
            ValueError: if inputs are invalid

        :parameter query: Tensor of shape ``[B, Mq, H, K]``
        :parameter key: Tensor of shape ``[B, Mkv, H, K]``
        :parameter value: Tensor of shape ``[B, Mkv, H, Kv]``
        :parameter attn_bias: Bias to apply to the attention matrix - defaults to no masking. \
            For common biases implemented efficiently in xFormers, see :attr:`xformers.ops.fmha.attn_bias.AttentionBias`. \
            This can also be a :attr:`torch.Tensor` for an arbitrary mask (slower).
        :parameter p: Dropout probability. Disabled if set to ``0.0``
        :parameter scale: Scaling factor for ``Q @ K.transpose()``. If set to ``None``, the default \
            scale (q.shape[-1]**-0.5) will be used.
        :parameter op: The operators to use - see :attr:`xformers.ops.AttentionOpBase`. \
            If set to ``None`` (recommended), xFormers \
            will dispatch to the best available operator, depending on the inputs \
            and options.
        :return: multi-head attention Tensor with shape ``[B, Mq, H, Kv]``
        """
        return _memory_efficient_attention(
            Inputs(
                query=query, key=key, value=value, p=p, attn_bias=attn_bias, scale=scale
            ),
            op=op,
        )

That was the source of memory_efficient_attention. For comparison, here is a conventional attention module:

    class Attention(nn.Module):
        def __init__(
            self,
            dim: int,
            num_heads: int = 8,
            qkv_bias: bool = False,
            proj_bias: bool = True,
            attn_drop: float = 0.0,
            proj_drop: float = 0.0,
        ) -> None:
            super().__init__()
            self.num_heads = num_heads
            head_dim = dim // num_heads
            self.scale = head_dim**-0.5
            self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)
            self.attn_drop = nn.Dropout(attn_drop)
            self.proj = nn.Linear(dim, dim, bias=proj_bias)
            self.proj_drop = nn.Dropout(proj_drop)

        def forward(self, x: Tensor) -> Tensor:
            B, N, C = x.shape
            # [B, N, 3, H, D] -> [3, B, H, N, D], so q, k, v are each [B, H, N, D]
            qkv = self.qkv(x).reshape(B, N, 3, self.num_heads, C // self.num_heads).permute(2, 0, 3, 1, 4)
            q, k, v = qkv[0] * self.scale, qkv[1], qkv[2]
            attn = q @ k.transpose(-2, -1)
            attn = attn.softmax(dim=-1)
            attn = self.attn_drop(attn)
            x = (attn @ v).transpose(1, 2).reshape(B, N, C)
            x = self.proj(x)
            x = self.proj_drop(x)
            return x

As you can see, the core of the attention computation is this part:

    attn = q @ k.transpose(-2, -1)
    attn = attn.softmax(dim=-1)
    attn = self.attn_drop(attn)
    x = (attn @ v).transpose(1, 2).reshape(B, N, C)
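These lines build the full attn matrix (N×N per head) before multiplying by v. The paper cited in the memory_efficient_attention docstring avoids storing it by computing the softmax incrementally. Below is a minimal pure-Python sketch of that idea for a single query vector. It illustrates the trick only and is not how the xformers GPU kernels are written; `naive_attention` and `streaming_attention` are hypothetical names.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def naive_attention(q: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> List[float]:
    """Standard attention for one query: every score is held in memory at once."""
    scale = len(q) ** -0.5
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]


def streaming_attention(q: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> List[float]:
    """Same result, visiting one key/value pair at a time with constant extra state."""
    scale = len(q) ** -0.5
    running_max = -math.inf
    denom = 0.0
    acc = [0.0] * len(values[0])
    for k, v in zip(keys, values):
        score = scale * sum(a * b for a, b in zip(q, k))
        new_max = max(running_max, score)
        # Rescale everything accumulated under the old maximum.
        correction = math.exp(running_max - new_max)
        weight = math.exp(score - new_max)
        denom = denom * correction + weight
        acc = [a * correction + weight * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / denom for a in acc]


if __name__ == "__main__":
    q = [1.0, 0.5]
    keys = [[0.2, 0.1], [1.0, -1.0], [0.5, 0.5]]
    values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
    print(naive_attention(q, keys, values))
    print(streaming_attention(q, keys, values))
```

Both functions return the same vector up to floating-point rounding. The streaming version never stores more than one score at a time, which is the property that lets a real kernel process keys and values in blocks.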

We replace that part with memory_efficient_attention:

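    def forward(self, x: Tensor) -> Tensor:
        x = self.layer(x)
        B, N, C = x.shape
        # Reshape to [B, N, 3, H, D] and split along dim 2, so q, k, v are each
        # [batch, patches, heads, per-head dim]: each image's feature dimensions
        # are divided among the heads. This is the [B, M, H, K] layout that
        # memory_efficient_attention expects, so the permute is dropped.
        qkv = self.qkv(x).reshape(B, N, 3, self.num_heads, C // self.num_heads)
        q, k, v = qkv.unbind(2)
        # Replacement part. The scale is passed in rather than pre-multiplied
        # into q, so it is applied exactly once. Dropout is only active in training.
        p = self.attn_drop.p if self.training else 0.0
        x = memory_efficient_attention(q, k, v, attn_bias=None, p=p, scale=self.scale)
        x = x.reshape(B, N, C)
        # Output projection, as in the standard module
        x = self.proj(x)
        x = self.proj_drop(x)
        return x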
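The docstring quoted earlier asks for inputs in [B, M, H, K] layout, so it is worth checking which layout each reshape path produces. The shape-bookkeeping sketch below does this without torch. `permuted` is a hypothetical helper that mimics what `tensor.permute` does to a shape, and the sizes are the ones used in this post (3 samples, 196 patches, 8 heads, 768 / 8 = 64 dims per head).

```python
from typing import Sequence, Tuple


def permuted(shape: Sequence[int], order: Sequence[int]) -> Tuple[int, ...]:
    """Shape that tensor.permute(*order) would produce for a tensor of `shape`."""
    return tuple(shape[i] for i in order)


B, N, H, D = 3, 196, 8, 64  # batch, patches, heads, per-head dim

# Shape after self.qkv(x).reshape(B, N, 3, H, D)
reshaped = (B, N, 3, H, D)

# Standard module: permute(2, 0, 3, 1, 4), then index dim 0 to pick q.
q_permuted = permuted(reshaped, (2, 0, 3, 1, 4))[1:]

# Splitting along dim 2 without permuting (e.g. qkv.unbind(2)) just drops that axis.
q_unpermuted = tuple(reshaped[:2]) + tuple(reshaped[3:])

print("permute path:", q_permuted)    # (3, 8, 196, 64)  -> [B, H, N, D]
print("no permute:  ", q_unpermuted)  # (3, 196, 8, 64)  -> [B, N, H, D]
```

Only the second layout matches [B, M, H, K]. Passing the first would make xformers treat the 8 heads as the sequence axis and the 196 patches as heads.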

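Because xformers runs on the GPU, both the tensors and the model must be moved to a GPU device. The example below instantiates the attention module on its own rather than a full model. If your model uses this attention module internally, moving the whole model to the GPU is enough: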

    from torch import nn, Tensor
    import torch
    from xformers.ops import memory_efficient_attention


    class Attention(nn.Module):
        def __init__(
            self,
            dim: int,
            num_heads: int = 8,
            qkv_bias: bool = False,
            proj_bias: bool = True,
            attn_drop: float = 0.0,
            proj_drop: float = 0.0,
        ) -> None:
            super().__init__()
            self.num_heads = num_heads
            head_dim = dim // num_heads
            self.scale = head_dim**-0.5
            self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)
            self.attn_drop = nn.Dropout(attn_drop)
            self.proj = nn.Linear(dim, dim, bias=proj_bias)
            self.proj_drop = nn.Dropout(proj_drop)
            self.layer = nn.LayerNorm(normalized_shape=dim)

        def forward(self, x: Tensor) -> Tensor:
            x = self.layer(x)
            B, N, C = x.shape
            # q, k, v are each [B, N, H, D], the layout memory_efficient_attention expects
            qkv = self.qkv(x).reshape(B, N, 3, self.num_heads, C // self.num_heads)
            q, k, v = qkv.unbind(2)
            # Pass the scale instead of pre-multiplying q, so it is applied once;
            # dropout is only active in training mode
            p = self.attn_drop.p if self.training else 0.0
            x = memory_efficient_attention(q, k, v, attn_bias=None, p=p, scale=self.scale)
            x = x.reshape(B, N, C)
            x = self.proj(x)
            x = self.proj_drop(x)
            return x


    # Pick the GPU device. The xformers kernels need a GPU; with the 'cpu'
    # fallback the attention call is expected to fail.
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

    # Assume the samples are already embedded: 3 samples, 196 patches, dim 768.
    # Move the tensor to the GPU.
    tensor1 = torch.rand(3, 196, 768).to(device)

    # Instantiate the module and move it to the GPU
    att = Attention(dim=768).to(device)

    result = att(tensor1)
    print(result)

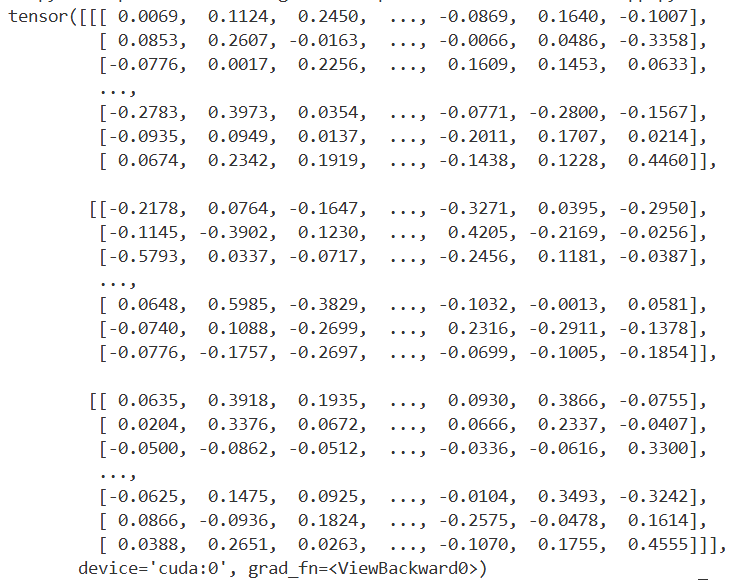

The screenshot above shows the output of the run. The code above can be copied and used as-is.