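Kubernetes Service Management

Part 5: Kubernetes Core Concepts: Service

What a Service does

Pods in a Kubernetes cluster are disposable: they are destroyed and recreated often, so their IP addresses keep changing and clients cannot rely on reaching a Pod directly. Kubernetes solves this with the Service, which sits in front of a group of Pods as a proxy. However the Pods change, as long as they carry the matching label the Service can find them: their IP addresses are added to the Service's endpoint list (Endpoints), which tracks the current Pod IPs, so clients reach the Pods through the Service.

In short, a Service selects Pods by label and records their IPs in its Endpoints list, which is how it keeps track of the Pods behind it.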

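- A Service gives clients a stable entry point for reaching Pods

- It uses labels to track changes in Pod IP addresses dynamically

- It keeps clients from losing contact with Pods

- It defines the policy for accessing Pods

- It is associated with Pods through a label selector

- It load-balances across Pods at layer 4 (TCP/UDP)

- Underneath, it is implemented by kube-proxy, which has three proxy modes: userspace, iptables, and ipvs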
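The points in the list above can be sketched as a single manifest: a Service that selects Pods by label and exposes both a TCP and a UDP port at layer 4. The name `demo-svc`, the label `app: demo`, and the port numbers are illustrative assumptions and do not come from this tutorial's cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: demo-svc          # hypothetical name
spec:
  selector:
    app: demo             # hypothetical label; Pods carrying it become endpoints
  ports:
  - name: dns-udp         # each port needs a name when a Service has several ports
    protocol: UDP
    port: 53              # port exposed by the Service
    targetPort: 53        # port on the selected Pods
  - name: dns-tcp
    protocol: TCP
    port: 53
    targetPort: 53
```

Only Pods whose labels match `spec.selector` are listed in the Service's Endpoints, so relabeling a Pod is enough to take it in or out of rotation.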

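kube-proxy's three proxy modes

- A Kubernetes cluster has three networks, which fall into two kinds:

- Real networks, such as the Node Network and the Pod Network, which provide real IP addresses

- A virtual network, the Cluster Network (also called the Service Network), which provides virtual IP addresses; these never appear on an interface, only in Service definitions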

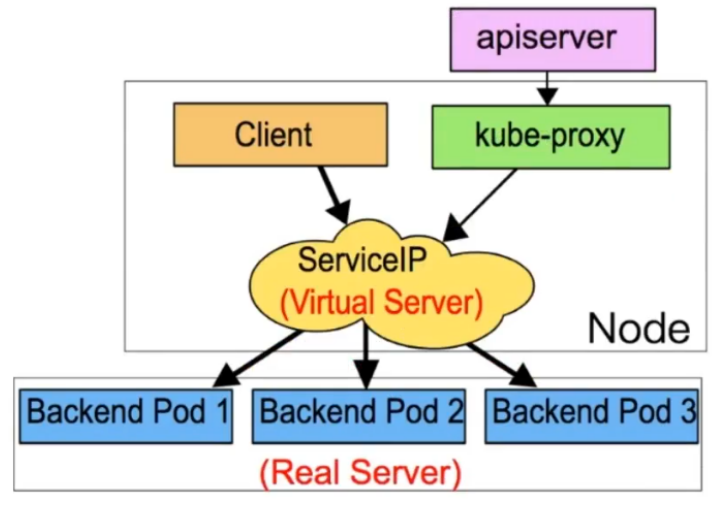

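- kube-proxy continuously watches kube-apiserver for changes to Service-related resources. Whenever something changes, kube-proxy translates it into rules on the local node that steer traffic for the Service to specific Pods, so that accessing the Service reaches the service the Pods provide

- kube-proxy's three proxy modes are userspace, iptables, and ipvs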

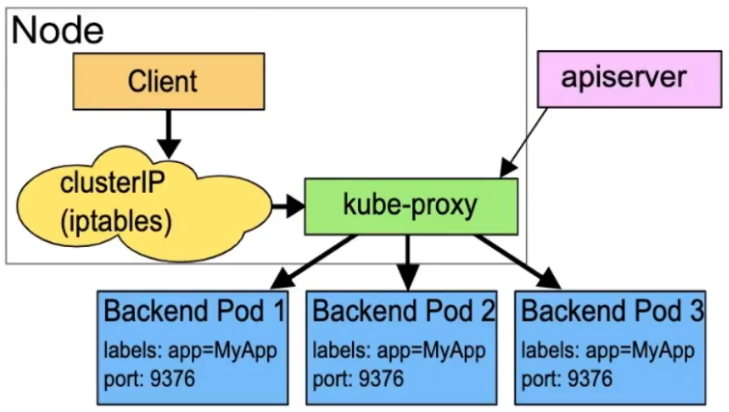

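userspace mode

userspace mode is kube-proxy's first-generation mode, supported since Kubernetes v1.0.

The figures below illustrate how userspace mode works:

kube-proxy listens on a randomly chosen port (the proxy port) for each Service and adds an iptables rule, so every packet sent to the Service at ClusterIP:Port is redirected to the proxy port. kube-proxy receives the packet on the proxy port and forwards it to a backend Pod, choosing the Pod by round robin (the default) or by session affinity (requests from the same client IP always go to the same Pod).

In userspace mode every packet passes through user space: a Service request enters the kernel's iptables, is handed back to user space, and kube-proxy then selects the backend endpoint and proxies the traffic. Crossing between kernel space and user space for every packet costs performance, so this mode is inefficient and is not recommended.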
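Session affinity is requested on the Service itself rather than in kube-proxy. The following is a minimal sketch, assuming Pods labeled `app: nginx` as in the later examples; the Service name `sticky-svc` is hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sticky-svc            # hypothetical name
spec:
  selector:
    app: nginx
  sessionAffinity: ClientIP   # default is None, i.e. no stickiness
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # how long a client IP stays pinned (10800 is the default)
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

With `sessionAffinity: ClientIP`, repeated requests from one client IP should land on the same Pod until the timeout expires, which is the behavior described above as "same client IP, same Pod".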

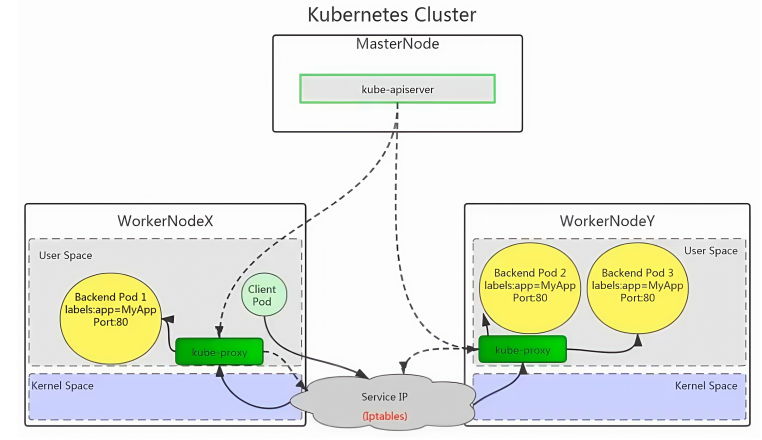

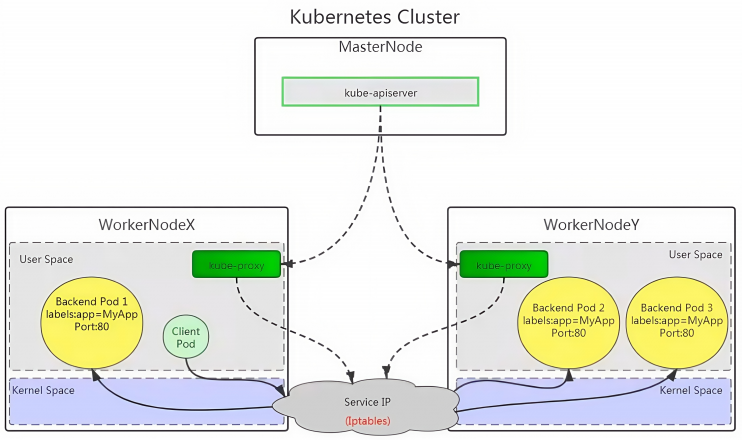

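iptables mode

iptables mode is kube-proxy's second-generation mode. It has been supported since Kubernetes v1.1 and became kube-proxy's default mode in v1.2.

Load balancing in iptables mode is done by netfilter/iptables rules in the kernel. kube-proxy follows Service and Endpoint change events in real time through the informer mechanism and the Watch interface, and synchronizes the iptables rules whenever something changes.

The figure below illustrates how iptables mode works:

In iptables mode kube-proxy acts only as a controller, not as a server. The actual forwarding is done by netfilter in the kernel, which is configured from user space through iptables, so overall efficiency is higher than in userspace mode.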

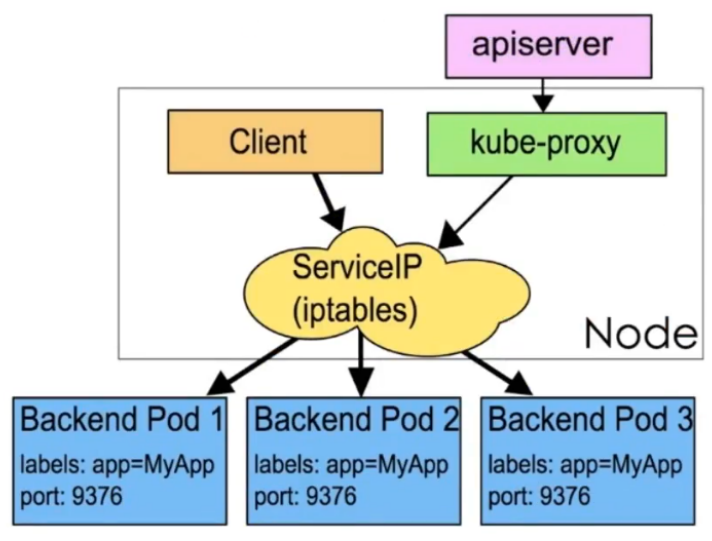

ipvs mode

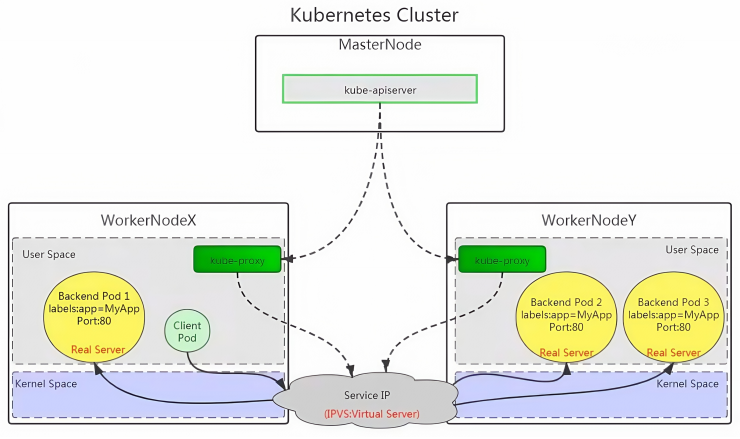

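ipvs mode is kube-proxy's third-generation mode. It was introduced in Kubernetes v1.8, reached beta in v1.9, and became generally available in v1.11.

ipvs (IP Virtual Server) implements transport-layer load balancing, that is, layer 4 switching, as part of the Linux kernel. ipvs runs on a host and acts as a load balancer in front of the real servers. It forwards TCP and UDP requests to the real servers and makes their services appear as a virtual service on a single IP address.

The figure below illustrates how ipvs mode works:

ipvs and iptables are both built on netfilter, so what does ipvs mode do better?

- ipvs gives large clusters better scalability and performance

- ipvs supports more sophisticated load-balancing algorithms than iptables (least load, least connections, weighted, and so on)

- ipvs supports server health checks, connection retries, and similar features

- ipset sets can be modified dynamically, even while iptables rules are using them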

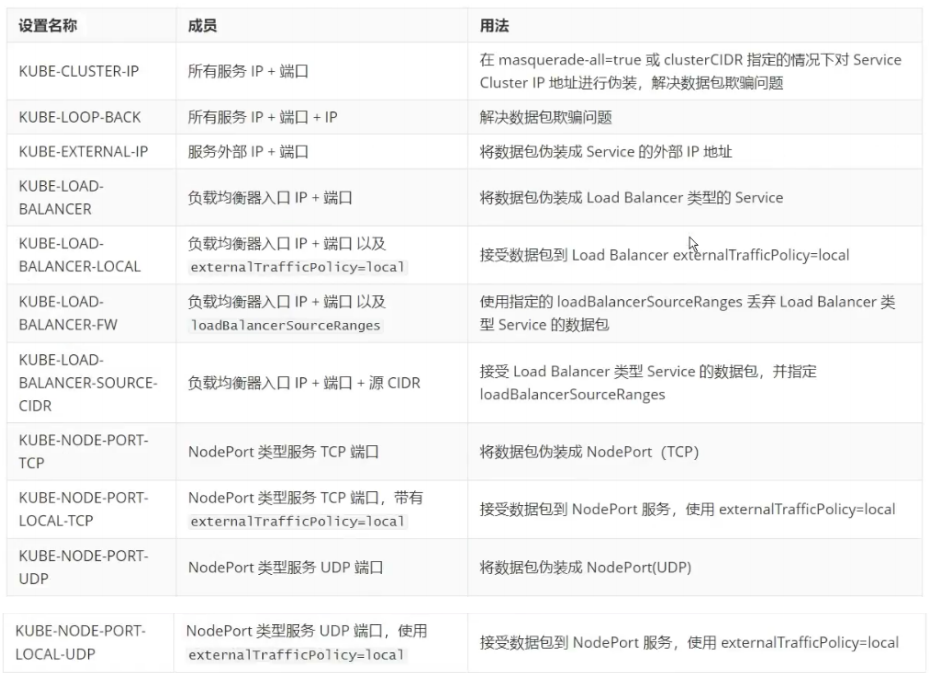

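ipvs still depends on iptables. ipvs mode uses iptables for packet filtering, hairpin-masquerade tricks (address masquerading), SNAT, and similar functions, but it does so through ipset, an iptables extension, rather than by generating rule chains directly. ipset stores the source or destination addresses of traffic that must be dropped or masqueraded, which keeps the number of iptables rules constant, so we no longer need to care how many Services or Pods exist.

What is the advantage of ipset over plain iptables? iptables rules form a linear data structure, whereas ipset uses an indexed data structure, so lookups and matches stay efficient even with many entries. An ipset can be thought of simply as a set of IPs: its members can be IP addresses, IP networks, ports, and so on. An iptables rule can act on this mutable set directly, which greatly reduces the number of iptables rules and therefore the performance cost.

For example, to block tens of thousands of IPs from reaching a server, plain iptables needs one rule per address, which produces a huge rule set. With ipset, the addresses (or networks) are added to a set, and a small number of iptables rules that reference the set achieve the same goal.

In ipvs mode kube-proxy maintains a number of ipset sets for this purpose.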

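Comparing iptables and ipvs

- iptables

  - Works in kernel space

  - Advantage: flexible and powerful (packets can be manipulated at different stages of processing)

  - Disadvantage: with many rules it slows down, because rule traversal, matching, and updates take time linear in the number of rules

- ipvs

  - Works in kernel space

  - Advantages: efficient forwarding and a rich set of scheduling algorithms: rr, wrr, lc, wlc, ip hash, and others

  - Disadvantage: incomplete kernel support; old kernels cannot use it, and the kernel needs to be upgraded to 4.0 or 5.0 and above

When to use iptables or ipvs

- Before v1.10, use iptables (before v1.1, forwarding was done in userspace mode)

- From v1.11 on, both iptables and ipvs are supported. iptables remains the default on Linux, so ipvs has to be selected explicitly in the kube-proxy configuration. If ipvs is selected but the ipvs kernel modules are not loaded, kube-proxy falls back to iptables

ipset sets: in ipvs mode whole networks can be added to a set, whereas plain iptables rules match individual IP addresses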
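The proxy mode is chosen in kube-proxy's configuration. Below is a minimal sketch of the relevant fragment, assuming a kubeadm-style cluster where this configuration lives in the `kube-proxy` ConfigMap in the `kube-system` namespace (the same ConfigMap edited later in this tutorial). The scheduler value is only an example.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"          # "iptables" or "ipvs"
ipvs:
  scheduler: "rr"     # ipvs scheduling algorithm, e.g. rr, wrr, lc, wlc
```

After changing the ConfigMap, the kube-proxy Pods have to be restarted to pick up the new mode, for example with `kubectl rollout restart daemonset kube-proxy -n kube-system` (assuming kube-proxy runs as a DaemonSet of that name, as it does under kubeadm).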

service类型

-

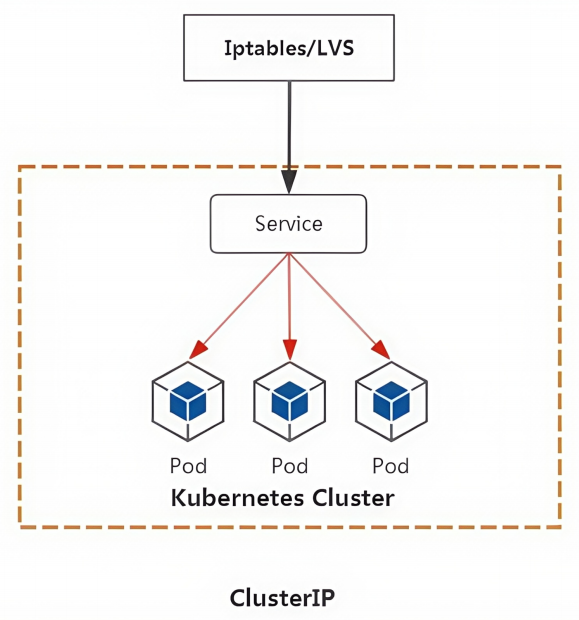

ClusterIP

- 默认,分配一个集群内部可以访问的虚拟IP

-

NodePort

- 在每个Node上分配一个端口作为外部访问入口

- nodePort端口范围为:30000-32767

-

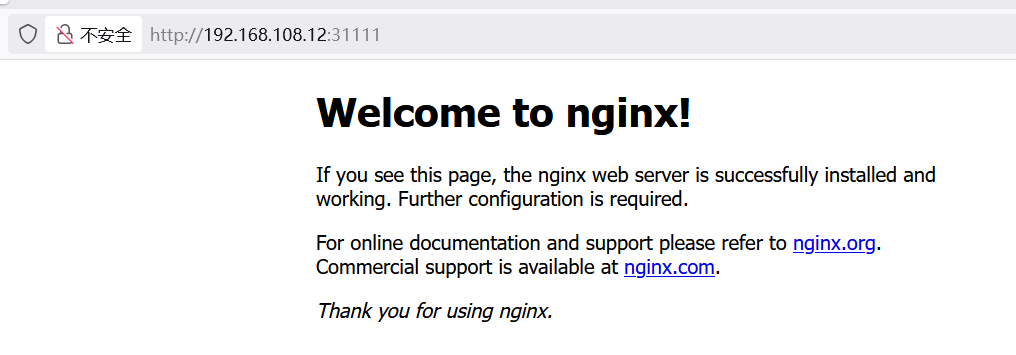

LoadBalancer

- 工作在特定的Cloud Provider上,例如Google Cloud,AWS,OpenStack

-

ExternalName

- 表示把集群外部的服务引入到集群内部中来,即实现了集群内部pod和集群外部的服务进行通信

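Creating a Service

In practice a Service is created in one of two ways: from the command line, or from a YAML resource manifest.

ClusterIP Service

The ClusterIP type

Depending on whether a cluster IP is allocated, ClusterIP Services divide into normal Services and headless Services.

The two kinds of Service:

- Normal Service

Allocates a fixed virtual IP (the Cluster IP) that is reachable inside the cluster, for in-cluster access.

- Headless Service

No Cluster IP is allocated, and kube-proxy does no reverse proxying or load balancing for it. Instead, DNS provides a stable network identity: the DNS name of a headless Service resolves directly to the list of backing Pod IPs.

Normal ClusterIP Service

Create a Deployment application

# Write the YAML file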

[root@master ~]# vim deploy-nginx.yml

[root@master ~]# cat deploy-nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

# Apply the YAML file

[root@master ~]# kubectl apply -f deploy-nginx.yml

deployment.apps/deploy-nginx created

# Verify

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

deploy-nginx-5b6d5cd699-dr4bs 0/1 ContainerCreating 0 4s

deploy-nginx-5b6d5cd699-gd8fw 0/1 ContainerCreating 0 4s

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

deploy-nginx-5b6d5cd699-dr4bs 1/1 Running 0 57s

deploy-nginx-5b6d5cd699-gd8fw 1/1 Running 0 57s

# Check the Pod IPs

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-nginx-5b6d5cd699-dr4bs 1/1 Running 0 63s 10.244.104.7 node2 <none> <none>

deploy-nginx-5b6d5cd699-gd8fw 1/1 Running 0 63s 10.244.166.133 node1 <none> <none>

# Check the Deployment controller

[root@master ~]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

deploy-nginx 2/2 2 2 69s

Create the Service from the command line

Create a ClusterIP Service and associate it with the Deployment

[root@master ~]# kubectl expose deployment deploy-nginx --type=ClusterIP --target-port=80 --port=80

service/deploy-nginx exposed

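Explanation

- expose: creates a Service

- deployment: the kind of resource being exposed

- deploy-nginx: the name of the Deployment, which also becomes the Service name

- --type=ClusterIP: the Service type

- --target-port=80: the container port in the Pod

- --port=80: the Service port

- nodePort: applies only to NodePort Services and is not used in this command; it lets users outside the cluster reach a Service through a Node port in the range 30000-32767

# Check the Service details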

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deploy-nginx ClusterIP 10.97.254.241 <none> 80/TCP 24s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

[root@master ~]# kubectl describe svc deploy-nginx

Name: deploy-nginx

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.97.254.241 #clusterIP

IPs: 10.97.254.241

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.7:80,10.244.166.133:80

Session Affinity: None

Events: <none>

# Accessing the cluster IP returns the web page

[root@master ~]# curl 10.97.254.241

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Verify load balancing

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

deploy-nginx-5b6d5cd699-dr4bs 1/1 Running 0 6m34s

deploy-nginx-5b6d5cd699-gd8fw 1/1 Running 0 6m34s

# Change the nginx home page in each of the two Pods

[root@master ~]# kubectl exec -it deploy-nginx-5b6d5cd699-dr4bs -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # ls

50x.html index.html

/usr/share/nginx/html # echo "web1" > index.html

/usr/share/nginx/html # exit

[root@master ~]# kubectl exec -it deploy-nginx-5b6d5cd699-gd8fw -- /bin/sh

/ # cd /usr/share/nginx/html/

/usr/share/nginx/html # echo "web2" > index.html

/usr/share/nginx/html # exit

# Access the Service to verify

[root@master ~]# curl 10.97.254.241

web1

[root@master ~]# curl 10.97.254.241

web2

[root@master ~]# curl 10.97.254.241

web1

[root@master ~]# curl 10.97.254.241

web2

Create the Service from a YAML file

Delete the old resources

[root@master ~]# kubectl delete -f deploy-nginx.yml

deployment.apps "deploy-nginx" deleted

[root@master ~]# kubectl delete svc deploy-nginx

service "deploy-nginx" deleted

Create the Service from a YAML file

[root@master ~]# vim deploy-svc-nginx.yml

[root@master ~]# cat deploy-svc-nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c2
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: nginx

# Apply the YAML file

[root@master ~]# kubectl apply -f deploy-svc-nginx.yml

deployment.apps/deploy-nginx created

service/nginx-svc created

# Verify

[root@master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/deploy-nginx-5dc988f864-9k42b 1/1 Running 0 2m34s

pod/deploy-nginx-5dc988f864-fs6pq 1/1 Running 0 2m34s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14d

service/nginx-svc ClusterIP 10.100.203.67 <none> 80/TCP 2m34s

[root@master ~]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.100.203.67

IPs: 10.100.203.67

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.8:80,10.244.166.134:80

Session Affinity: None

Events: <none>

[root@master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 192.168.108.10:6443 14d

nginx-svc 10.244.104.8:80,10.244.166.134:80 2m57s

# Access the home page

[root@master ~]# curl 10.100.203.67

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Headless ClusterIP Service

- With a normal ClusterIP Service, the Service name resolves to the cluster IP, which then maps to the Pod IPs behind it.

- With a headless Service, the Service name resolves directly to the Pod IPs behind it.

# Create the YAML file for the Deployment

[root@master ~]# vim nginx_deploy.yml

[root@master ~]# cat nginx_deploy.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-svc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c3
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

# Apply the YAML file

[root@master ~]# kubectl apply -f nginx_deploy.yml

deployment.apps/nginx-svc created

# Verify

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-svc-64dd6b5869-m52vv 1/1 Running 0 12s

nginx-svc-64dd6b5869-zc75r 1/1 Running 0 12s

# Create the YAML file for the headless Service

[root@master ~]# vim headless-svc.yml

[root@master ~]# cat headless-svc.yml

apiVersion: v1
kind: Service
metadata:
  name: headless-svc
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

# Apply the YAML file

[root@master ~]# kubectl apply -f headless-svc.yml

service/headless-svc created

# Check the Service

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

headless-svc ClusterIP None <none> 80/TCP 11s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15d

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-svc-64dd6b5869-m52vv 1/1 Running 0 4m19s 10.244.166.135 node1 <none> <none>

nginx-svc-64dd6b5869-zc75r 1/1 Running 0 4m19s 10.244.104.9 node2 <none> <none>

[root@master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

headless-svc 10.244.104.9:80,10.244.166.135:80 35s

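kubernetes 192.168.108.10:6443 15d

DNS

The DNS service watches the Kubernetes API and creates a DNS record for every Service, which is used for name resolution.

A headless Service relies on DNS to be reachable.

The DNS record format is:

svc_name.namespace_name.svc.cluster.local.

# Find the IP of the kube-dns Service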

[root@master ~]# kubectl get pod -o wide -n kube-system | grep dns

coredns-66f779496c-7pm8r 1/1 Running 0 15d 10.244.166.131 node1 <none> <none>

coredns-66f779496c-mzmc9 1/1 Running 0 15d 10.244.166.130 node1 <none> <none>

[root@master ~]# kubectl get svc -n kube-system | grep dns

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 15d

# From a cluster host, resolve the headless Service through the DNS service address

[root@master ~]# dig -t a headless-service.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> -t a headless-service.default.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 64627

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;headless-service.default.svc.cluster.local. IN A

;; AUTHORITY SECTION:

cluster.local. 30 IN SOA ns.dns.cluster.local. hostmaster.cluster.local. 1758019283 7200 1800 86400 30

;; Query time: 15 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Tue Sep 16 18:55:03 CST 2025

;; MSG SIZE rcvd: 164

Note that this query returns NXDOMAIN with an empty answer section, because it asks for headless-service while the Service created above is named headless-svc. Following the record format, the name to query is headless-svc.default.svc.cluster.local., which for a headless Service resolves to one A record per backing Pod.

NodePort

# Write the YAML file

[root@master ~]# vim nginx_nodeport.yml

[root@master ~]# cat nginx_nodeport.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
  labels:
    app: nginx-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-app
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
      - name: c2
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app
spec:
  type: NodePort
  ports:
  - port: 8060        # Service port
    targetPort: 80    # container port
    protocol: TCP     # port protocol
    nodePort: 31111   # node port
  selector:
    app: nginx-app

# Apply the YAML file to create the resources

[root@master ~]# kubectl apply -f nginx_nodeport.yml

deployment.apps/nginx-app created

service/nginx-app unchanged

# Verify: list the created resources

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-app-75f4fbdbcd-l4rr6 1/1 Running 0 61s

nginx-app-75f4fbdbcd-m6rzm 1/1 Running 0 61s

[root@master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-app-75f4fbdbcd-l4rr6 1/1 Running 0 69s

pod/nginx-app-75f4fbdbcd-m6rzm 1/1 Running 0 69s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15d

service/nginx-app NodePort 10.97.254.241 <none> 8060:31111/TCP 2m17s

[root@master ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

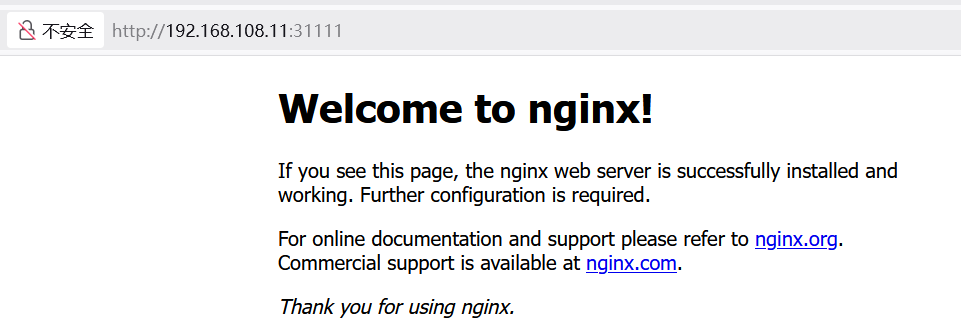

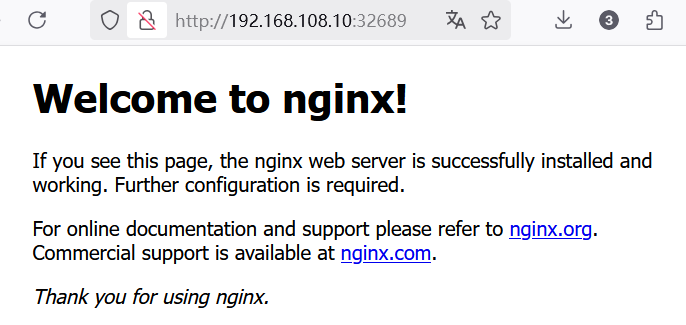

nginx-app 2/2 2 2 78s浏览器访问node1和node2两个节点的31111端口

LoadBalancer

Access path from outside the cluster

- User

- Domain name

- Load balancer service from the cloud provider

- NodeIP:Port (service IP)

- Pod IP:port

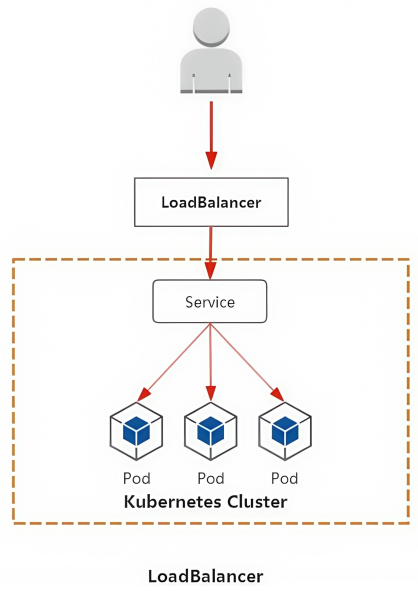

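Older MetalLB

A LoadBalancer solution for self-hosted Kubernetes clusters

MetalLB provides network load balancing for Services in a Kubernetes cluster.

MetalLB has two main functions:

- Address allocation, similar to DHCP (addresses are picked from an address pool)

- External announcement: once MetalLB has assigned an external IP to a Service, it has to make the network outside the cluster aware that the IP "lives" in the cluster, so that external clients can reach it (for example through a DNS name pointing at that IP)

MetalLB uses standard networking protocols for this: ARP, NDP, or BGP.

Reference: https://metallb.universe.tf/installation/

Download the manifests: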

[root@master ~]# mkdir Service

[root@master ~]# cd Service/

[root@master Service]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

[root@master Service]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

[root@master Service]# ls

metallb.yaml namespace.yaml

# Apply the YAML files

[root@master Service]# kubectl apply -f namespace.yaml

namespace/metallb-system created

[root@master Service]# kubectl apply -f metallb.yaml

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

role.rbac.authorization.k8s.io/controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

rolebinding.rbac.authorization.k8s.io/controller created

daemonset.apps/speaker created

deployment.apps/controller created

resource mapping not found for name: "controller" namespace: "" from "metallb.yaml": no matches for kind "PodSecurityPolicy" in version "policy/v1beta1"

ensure CRDs are installed first

resource mapping not found for name: "speaker" namespace: "" from "metallb.yaml": no matches for kind "PodSecurityPolicy" in version "policy/v1beta1"

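ensure CRDs are installed first

The two PodSecurityPolicy errors appear because the policy/v1beta1 PodSecurityPolicy API was removed in Kubernetes v1.25; the other MetalLB resources are still created.

# Verify: check the namespace and the Pods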

[root@master Service]# kubectl get ns

NAME STATUS AGE

default Active 15d

kube-node-lease Active 15d

kube-public Active 15d

kube-system Active 15d

kubernetes-dashboard Active 13d

metallb-system Active 71s

[root@master Service]# kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8d6664589-8c7qd 0/1 ContainerCreating 0 97s

speaker-fs6pq 0/1 CreateContainerConfigError 0 97s

speaker-v8znz 0/1 CreateContainerConfigError 0 97s

[root@master Service]# watch kubectl get pod -n metallb-system

[root@master Service]# kubectl get pod -n metallb-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

controller-8d6664589-8c7qd 1/1 Running 0 2m35s 10.244.166.134 node1 <none> <none>

speaker-fs6pq 1/1 Running 0 2m35s 192.168.108.11 node1 <none> <none>

speaker-v8znz 1/1 Running 0 2m35s 192.168.108.12 node2 <none> <none>

[root@master Service]# vim metallb-conf.yml

[root@master Service]# cat metallb-conf.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config
  namespace: metallb-system
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.108.20-192.168.108.40 # must be in the same subnet as the node IPs

[root@master Service]# ls

metallb-conf.yml metallb.yaml namespace.yaml

[root@master Service]# kubectl apply -f metallb-conf.yml

configmap/configmap created

[root@master Service]# kubectl get cm -n metallb-system

NAME DATA AGE

configmap 1 9s

kube-root-ca.crt 1 14m

[root@master Service]# kubectl describe cm configmap -n metallb-system

Name: configmap

Namespace: metallb-system

Labels: <none>

Annotations: <none>

Data
====
config:
----
address-pools:
- name: default
  protocol: layer2
  addresses:
  - 192.168.108.20-192.168.108.40

BinaryData
====

Events: <none>

[root@master Service]# vim nginx-metallb.yml

[root@master Service]# cat nginx-metallb.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-metallb
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master Service]# kubectl get pod,deployments.apps

NAME READY STATUS RESTARTS AGE

pod/nginx-metallb-5b6d5cd699-4q9pg 1/1 Running 0 7s

pod/nginx-metallb-5b6d5cd699-xxgd8 1/1 Running 0 7s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-metallb 2/2 2 2 7s

[root@master Service]# kubectl get pod,deployment

NAME READY STATUS RESTARTS AGE

pod/nginx-metallb-5b6d5cd699-4q9pg 1/1 Running 0 5m14s

pod/nginx-metallb-5b6d5cd699-xxgd8 1/1 Running 0 5m14s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-metallb 2/2 2 2 5m14s

[root@master Service]# cat lb-service.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx-lb
  namespace: default
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

[root@master Service]# kubectl apply -f lb-service.yml

service/nginx-lb created

[root@master Service]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15d

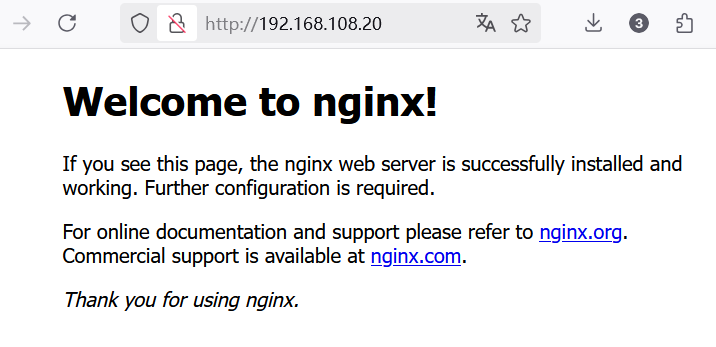

nginx-lb LoadBalancer 10.102.31.13 192.168.108.20 80:32689/TCP 6s浏览器访问192.168.108.20

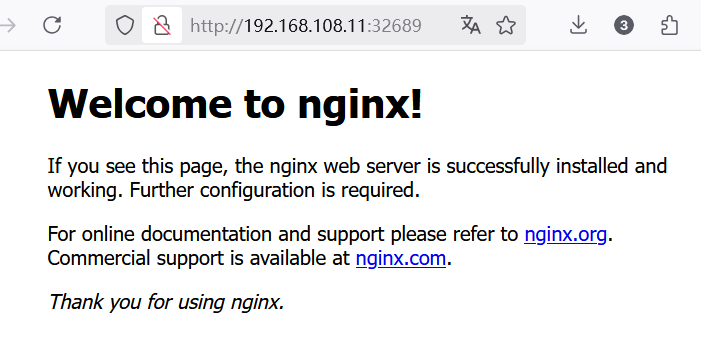

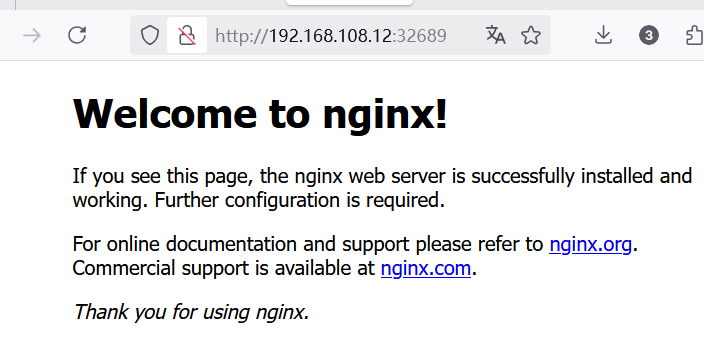

访问node节点的32689端口

包括API server主机的ip也可以访问:

删除资源,试验新版本

[root@master Service]# kubectl delete -f namespace.yaml

[root@master Service]# kubectl delete -f metallb.yaml

[root@master Service]# kubectl delete -f lb-service.yml

[root@master Service]# kubectl delete -f nginx-metallb.yml

[root@master Service]# kubectl delete -f metallb-conf.yml

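New MetalLB version

# When kube-proxy runs in ipvs mode, enable strict ARP by editing the kube-proxy ConfigMap (set strictARP to true under the ipvs section)

[root@master Service]# kubectl edit configmaps -n kube-system kube-proxy

strictARP: true

# Apply the manifest directly from its URL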

[root@master Service]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml

namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicebgpstatuses.metallb.io created

customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

configmap/metallb-excludel2 created

secret/metallb-webhook-cert created

service/metallb-webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

# Verify the resources

[root@master Service]# kubectl get all -n metallb-system

NAME READY STATUS RESTARTS AGE

pod/controller-8666ddd68b-mt84c 0/1 Running 0 96s

pod/speaker-gsfwv 0/1 ContainerCreating 0 96s

pod/speaker-vw57v 0/1 ContainerCreating 0 96s

pod/speaker-zwg59 0/1 ContainerCreating 0 96s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/metallb-webhook-service ClusterIP 10.102.116.16 <none> 443/TCP 96s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 3 3 0 3 0 kubernetes.io/os=linux 96s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 0/1 1 0 96s

NAME DESIRED CURRENT READY AGE

replicaset.apps/controller-8666ddd68b 1 1 0 96s

[root@master Service]# kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-8666ddd68b-mtddt 1/1 Running 0 4m19s

speaker-gtqcq 1/1 Running 0 4m19s

speaker-qmm6q 1/1 Running 0 4m19s

speaker-qs8xg 1/1 Running 0 4m19s

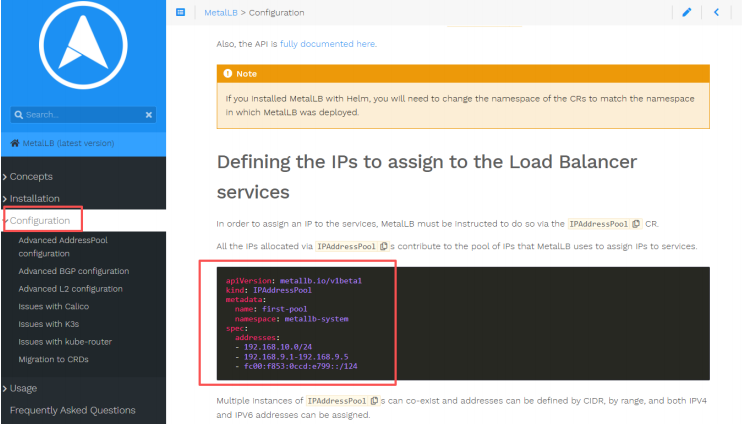

This is where the new version differs from the old one.

No ConfigMap is needed; the address pool is defined with an IPAddressPool resource instead.

[root@master Service]# cat ipaddresspoll.yml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.108.100-192.168.108.110

[root@master Service]# kubectl apply -f ipaddresspoll.yml

ipaddresspool.metallb.io/first-pool created

[root@master Service]# kubectl get ipaddresspool -n metallb-system

NAME AUTO ASSIGN AVOID BUGGY IPS ADDRESSES

first-pool true false ["192.168.108.100-192.168.108.110"]
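In MetalLB's CRD-based configuration, an IPAddressPool only defines which addresses may be assigned. For layer 2 mode the MetalLB documentation pairs it with an L2Advertisement that announces those addresses on the local network. The transcript here does not show one, so the following is a sketch under the assumption that layer 2 mode is intended, as it was in the older ConfigMap; the resource name `first-pool-l2` is hypothetical.

```yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: first-pool-l2          # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool                 # the IPAddressPool created above
```

If external IPs are assigned to Services but cannot be reached from other machines on the subnet, a missing advertisement is one of the first things worth checking.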

[root@master Service]# cat nginx-metallb.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-metallb
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master Service]# kubectl apply -f nginx-metallb.yml

deployment.apps/nginx-metallb created

[root@master Service]# kubectl get deployment,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-metallb 2/2 2 2 15s

NAME READY STATUS RESTARTS AGE

pod/nginx-metallb-5b6d5cd699-cxzjz 1/1 Running 0 15s

pod/nginx-metallb-5b6d5cd699-dznqv 1/1 Running 0 15s

[root@master Service]# cat lb-service.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx-lb
  namespace: default
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

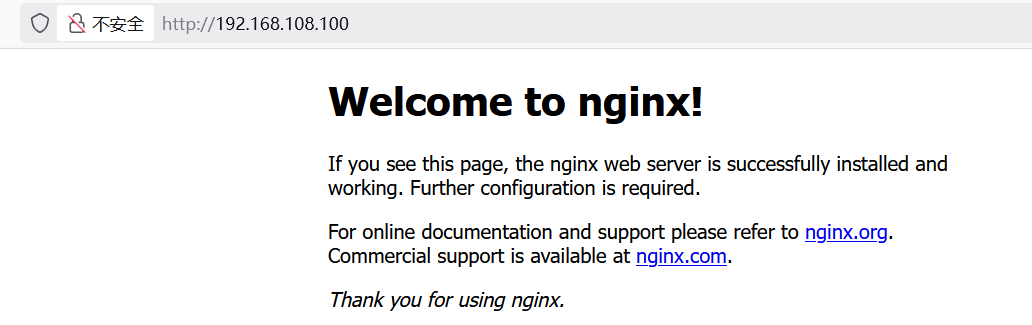

[root@master Service]# kubectl apply -f lb-service.yml

service/nginx-lb created

[root@master Service]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15d

nginx-lb LoadBalancer 10.105.173.66 192.168.108.100 80:30476/TCP 8s

Open the external IP assigned to the Service in a browser: 192.168.108.100

ExternalName

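Purpose:

ExternalName brings a service outside the cluster into the cluster, so Pods inside the cluster can communicate with it.

An ExternalName Service suits external services that are addressed by domain name. Its drawback is that it cannot specify a port.

One more point: Pods in the cluster inherit the DNS resolution rules of the Node. Any service the Node can reach can therefore also be reached from a Pod, which is how the inside of the cluster communicates with the outside.

[root@master LB]# cat lb-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-metallb
spec:
  type: LoadBalancer
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

[root@master LB]# kubectl apply -f lb-service.yaml

service/nginx-metallb created

[root@master metallb]# kubectl get svc

5s

Accessing services outside the cluster

Bringing in a public domain name

# Check the DNS resources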

[root@master Service]# kubectl get svc -n kube-system | grep dns

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 15d

# Resolve a public domain name

[root@master Service]# dig -t a www.baidu.com @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> -t a www.baidu.com @10.96.0.10

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 52014

;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.baidu.com. IN A

;; ANSWER SECTION:

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN A 180.101.49.44

www.a.shifen.com. 5 IN A 180.101.51.73

;; Query time: 6 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 17 16:08:00 CST 2025

;; MSG SIZE rcvd: 149

# Create the YAML

[root@master Service]# vim externalname.yml

[root@master Service]# cat externalname.yml

apiVersion: v1
kind: Service
metadata:
  name: my-externalname
  namespace: default
spec:
  type: ExternalName
  externalName: www.baidu.com

# Apply the YAML

[root@master Service]# kubectl apply -f externalname.yml

service/my-externalname created

[root@master Service]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15d

my-externalname ExternalName <none> www.baidu.com <none> 4s

nginx-lb LoadBalancer 10.98.32.136 192.168.108.100 80:30476/TCP 84m

[root@master Service]# dig -t a my-externalname.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> -t a my-externalname.default.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 63112

;; flags: qr aa rd; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;my-externalname.default.svc.cluster.local. IN A

;; ANSWER SECTION:

my-externalname.default.svc.cluster.local. 5 IN CNAME www.baidu.com.

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN A 180.101.51.73

www.a.shifen.com. 5 IN A 180.101.49.44

;; Query time: 38 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 17 16:01:04 CST 2025

;; MSG SIZE rcvd: 245

[root@master Service]# kubectl run -it expod --image=busybox:1.28

If you don't see a command prompt, try pressing enter.

/ # nslookup www.baidu.com

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: www.baidu.com

Address 1: 240e:e9:6002:1fd:0:ff:b0e1:fe69

Address 2: 240e:e9:6002:1ac:0:ff:b07e:36c5

Address 3: 180.101.51.73

Address 4: 180.101.49.44

/ # nslookup my-externalname.default.svc.cluster.local

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: my-externalname.default.svc.cluster.local

Address 1: 240e:e9:6002:1ac:0:ff:b07e:36c5

Address 2: 240e:e9:6002:1fd:0:ff:b0e1:fe69

Address 3: 180.101.49.44

Address 4: 180.101.51.73

/ # exit

Session ended, resume using 'kubectl attach expod -c expod -i -t' command when the pod is running

[root@master Service]# kubectl get pods

NAME READY STATUS RESTARTS AGE

expod 1/1 Running 1 (10s ago) 107s

nginx-metallb-5b6d5cd699-76sdw 1/1 Running 0 90m

nginx-metallb-5b6d5cd699-npgqr 1/1 Running 0 90m

Access across namespaces

Example: let services in the two namespaces ns1 and ns2 reach each other

# Create namespace ns1 with its Deployment, Pod, and Services

[root@master Service]# vim ns1-nginx.yml

[root@master Service]# cat ns1-nginx.yml

apiVersion: v1
kind: Namespace
metadata:
  name: ns1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx
  namespace: ns1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc1           # Service name
  namespace: ns1       # belongs to namespace ns1
spec:
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
  clusterIP: None      # headless Service
---
apiVersion: v1
kind: Service
metadata:
  name: external-svc1
  namespace: ns1       # belongs to namespace ns1
spec:
  type: ExternalName
  externalName: svc2.ns2.svc.cluster.local.   # brings svc2 from namespace ns2 into ns1

# Apply the YAML

[root@master Service]# kubectl apply -f ns1-nginx.yml

namespace/ns1 unchanged

deployment.apps/deploy-nginx created

service/svc1 unchanged

service/external-svc1 unchanged

# List the resources in namespace ns1

[root@master Service]# kubectl get all -n ns1

NAME READY STATUS RESTARTS AGE

pod/deploy-nginx-5b6d5cd699-x8tw6 1/1 Running 0 14s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/external-svc1 ExternalName <none> svc2.ns2.svc.cluster.local. <none> 45s

service/svc1 ClusterIP None <none> 80/TCP 45s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-nginx 1/1 1 1 14s

NAME DESIRED CURRENT READY AGE

replicaset.apps/deploy-nginx-5b6d5cd699 1 1 1 14s

# Resolve the name with the DNS server at 10.96.0.10; a headless Service resolves directly to the Pod IP

[root@master Service]# kubectl get pods -n ns1 -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-nginx-5b6d5cd699-x8tw6 1/1 Running 0 86s 10.244.166.141 node1 <none> <none>

[root@master Service]# dig -t a svc1.ns1.svc.default.cluster.local. @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> -t a svc1.ns1.svc.default.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 59098

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;svc1.ns1.svc.default.cluster.local. IN A

;; AUTHORITY SECTION:

cluster.local. 30 IN SOA ns.dns.cluster.local. hostmaster.cluster.local. 1758097325 7200 1800 86400 30

;; Query time: 10 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 17 16:23:54 CST 2025

;; MSG SIZE rcvd: 156

This query returns NXDOMAIN because the name contains an extra "default" label. Following the record format given earlier, the name for this Service is svc1.ns1.svc.cluster.local.

[root@master Service]# dig -t A my-externalname.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> -t A my-externalname.default.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 9554

;; flags: qr aa rd; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;my-externalname.default.svc.cluster.local. IN A

;; ANSWER SECTION:

my-externalname.default.svc.cluster.local. 5 IN CNAME www.baidu.com.

www.baidu.com. 5 IN CNAME www.a.shifen.com.

www.a.shifen.com. 5 IN A 180.101.51.73

www.a.shifen.com. 5 IN A 180.101.49.44

;; Query time: 37 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 17 16:24:55 CST 2025

;; MSG SIZE rcvd: 245

# Create the ns2 namespace and its Deployment, Pod, and Services

[root@master Service]# vim ns2-nginx.yml

[root@master Service]# kubectl apply -f ns2-nginx.yml

namespace/ns2 created

deployment.apps/deploy-nginx created

service/svc2 created

service/external-svc1 created
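The contents of ns2-nginx.yml are not shown at this point. The sketch below writes a manifest that would be consistent with the four resources created above: a namespace, a Deployment, a headless Service `svc2`, and an ExternalName Service pointing at `svc1` in `ns1`. The file name `ns2-nginx-sketch.yml`, the `app: nginx` label, the container name, the image, and the replica count are all assumptions, not the original file.

```shell
# Write a hypothetical reconstruction of the ns2 manifest to a separate file.
# Labels, container name, image, and replica count are assumed for illustration.
cat > ns2-nginx-sketch.yml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: ns2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx
  namespace: ns2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc2
  namespace: ns2
spec:
  clusterIP: None   # headless: DNS returns the Pod IPs directly
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: external-svc1
  namespace: ns2
spec:
  type: ExternalName
  externalName: svc1.ns1.svc.cluster.local.   # CNAME target in the other namespace
EOF

# With cluster access, the file could be applied with:
# kubectl apply -f ns2-nginx-sketch.yml
```

The ExternalName Service carries no selector and no cluster IP. It only publishes a CNAME, which is why its CLUSTER-IP and PORT(S) columns show `<none>`.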

[root@master Service]# kubectl get pdos,svc -n ns2

error: the server doesn't have a resource type "pdos"

[root@master Service]# kubectl get pods,svc -n ns2

NAME READY STATUS RESTARTS AGE

pod/deploy-nginx-5b6d5cd699-68mz9 1/1 Running 0 22s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/external-svc1 ExternalName <none> svc1.ns1.svc.cluster.local. <none> 22s

service/svc2 ClusterIP None <none> 80/TCP 22s

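# Note: the name queried here is malformed (it has an extra "default" label), so the server answers NXDOMAIN; the correct name is svc2.ns2.svc.cluster.local.

[root@master Service]# dig svc2.ns2.default.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> svc2.ns2.default.svc.cluster.local. @10.96.0.10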

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 60957

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;svc2.ns2.default.svc.cluster.local. IN A

;; AUTHORITY SECTION:

cluster.local. 30 IN SOA ns.dns.cluster.local. hostmaster.cluster.local. 1758097631 7200 1800 86400 30

;; Query time: 0 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Wed Sep 17 16:29:47 CST 2025

;; MSG SIZE rcvd: 156

[root@master Service]# kubectl get pods -n ns2 -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-nginx-5b6d5cd699-68mz9 1/1 Running 0 3m1s 10.244.104.14 node2 <none> <none>

[root@master Service]# kubectl exec -it deploy-nginx-5b6d5cd699-68mz9 -n ns2 -- /bin/sh

/ # nslookup svc1

Server: 10.96.0.10

Address: 10.96.0.10:53

** server can't find svc1.cluster.local: NXDOMAIN

** server can't find svc1.svc.cluster.local: NXDOMAIN

** server can't find svc1.ns2.svc.cluster.local: NXDOMAIN

** server can't find svc1.ns2.svc.cluster.local: NXDOMAIN

** server can't find svc1.svc.cluster.local: NXDOMAIN

** server can't find svc1.cluster.local: NXDOMAIN

/ # nslookup svc1.ns1..svc.cluster.local.

nslookup: write to '10.96.0.10': Message too large

;; connection timed out; no servers could be reached

/ # nslookup svc1.ns1.svc.cluster.local.

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: svc1.ns1.svc.cluster.local

Address: 10.244.166.141

/ # exit
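The short name `svc1` fails from inside the ns2 Pod because the resolver only appends its own search domains to it. The sketch below lists the candidate names, assuming the Pod's /etc/resolv.conf carries the usual search list `ns2.svc.cluster.local svc.cluster.local cluster.local`. That list is an assumption, though it matches the names in the NXDOMAIN messages above. The function name `expand_search` is hypothetical.

```shell
# Print the names a resolver would try for a short name, one per search domain.
# expand_search is a hypothetical helper; the search list passed in is assumed.
expand_search() {
  name=$1
  shift
  for domain in "$@"; do
    printf '%s.%s\n' "$name" "$domain"
  done
}

expand_search svc1 ns2.svc.cluster.local svc.cluster.local cluster.local
```

None of the generated candidates is `svc1.ns1.svc.cluster.local`, so a Service in another namespace has to be addressed with at least `<service>.<namespace>`, or with the full name as in the successful lookup.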

[root@master Service]# kubectl delete pod deploy-nginx-5b6d5cd699-68mz9 -n ns2

pod "deploy-nginx-5b6d5cd699-68mz9" deleted

[root@master Service]# kubectl get pdos -n ns2 -o wide

error: the server doesn't have a resource type "pdos"

[root@master Service]# kubectl get pods -n ns2 -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-nginx-5b6d5cd699-mkstl 1/1 Running 0 18s 10.244.166.142 node1 <none> <none>

[root@master Service]# kubectl exec -it deploy-nginx-5b6d5cd699-mkstl -n ns2 -- /bin/sh

/ # nslookup svc1.ns1.svc.cluster.local.

Server: 10.96.0.10

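Address: 10.96.0.10:53

Name: svc1.ns1.svc.cluster.local

Address: 10.244.166.141

/ # exit

SessionAffinity

Set sessionAffinity (session stickiness) to ClientIP. This is similar to nginx's ip_hash algorithm or the LVS sh algorithm.

# Create nginx resources and access them through a ClusterIP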

[root@master Service]# vim deployment-nginx-svc.yml

[root@master Service]# cat deployment-nginx-svc.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: c1
        image: nginx:1.26-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx

# Apply the YAML file

[root@master Service]# kubectl apply -f deployment-nginx-svc.yml

deployment.apps/deploy-nginx created

service/nginx-svc created

# Verify

[root@master Service]# kubectl get pods

NAME READY STATUS RESTARTS AGE

deploy-nginx-5b6d5cd699-kb9nw 1/1 Running 0 8s

deploy-nginx-5b6d5cd699-nchsm 1/1 Running 0 8s

expod 1/1 Running 1 (62m ago) 64m

# Check the ClusterIP

[root@master Service]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16d

my-externalname ExternalName <none> www.baidu.com <none> 68m

nginx-svc ClusterIP 10.96.75.120 <none> 80/TCP 15s

# Change the home page served by each of the two Pods

[root@master Service]# kubectl exec -it deploy-nginx-5b6d5cd699-kb9nw -- /bin/sh

/ # echo web1 > /usr/share/nginx/html/index.html

/ # exit

[root@master Service]# kubectl exec -it deploy-nginx-5b6d5cd699-nchsm -- /bin/sh

/ # echo web2 > /usr/share/nginx/html/index.html

/ # exit

# Access the site content

[root@master Service]# curl 10.96.75.120

web1

[root@master Service]# curl 10.96.75.120

web2

# Change the Session Affinity setting

[root@master Service]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.96.75.120

IPs: 10.96.75.120

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.15:80,10.244.166.143:80

Session Affinity: None

Events: <none>

[root@master Service]# kubectl patch svc nginx-svc -p '{"spec":{"sessionAffinity":"ClientIP"}}'

service/nginx-svc patched

[root@master Service]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.96.75.120

IPs: 10.96.75.120

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.104.15:80,10.244.166.143:80

Session Affinity: ClientIP

Events: <none>

# Access the Service again to verify load-balancing behavior

[root@master Service]# curl 10.96.75.120

web2

[root@master Service]# curl 10.96.75.120

web2

[root@master Service]# curl 10.96.75.120

web2

[root@master Service]# curl 10.96.75.120

web2

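The output confirms session stickiness: whichever Pod serves a client's first request keeps serving that client's later requests until the affinity timeout expires.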
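Checking stickiness by eye gets tedious past a few requests. The sketch below tallies the responses instead. The function name `tally_responses` is hypothetical, and the demo feeds it the four `web2` replies from the transcript rather than live traffic.

```shell
# Count how many times each backend answered, printing "<body>=<count>" per line.
# tally_responses is a hypothetical helper that reads response bodies from stdin.
tally_responses() {
  sort | uniq -c | awk '{print $2 "=" $1}'
}

# Demo with the four replies recorded above; prints: web2=4
printf 'web2\nweb2\nweb2\nweb2\n' | tally_responses

# With cluster access, live responses could be tallied like this:
# for i in $(seq 1 20); do curl -s 10.96.75.120; done | tally_responses
```

With Session Affinity set to None the tally would be expected to show both `web1` and `web2`. With ClientIP it should show a single backend for a given client.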

The default sessionAffinity timeout is 10800 seconds (3 hours).
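The timeout can be changed through the Service field `spec.sessionAffinityConfig.clientIP.timeoutSeconds`. The sketch below builds a patch document for it. The value of 600 seconds is a hypothetical choice for illustration, and the `kubectl patch` call is left commented out because it needs a live cluster.

```shell
# Build a JSON patch body that enables ClientIP affinity with a custom timeout.
# TIMEOUT=600 is a hypothetical value chosen only for illustration.
TIMEOUT=600
PATCH=$(printf '{"spec":{"sessionAffinity":"ClientIP","sessionAffinityConfig":{"clientIP":{"timeoutSeconds":%d}}}}' "$TIMEOUT")
echo "$PATCH"

# With cluster access, the patch could be applied to the Service used above:
# kubectl patch svc nginx-svc -p "$PATCH"
```

A shorter timeout lets a client move to another Pod sooner after going idle. A longer one keeps it pinned for longer.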