NEFTUNE

Sure! The paper “NEFTUNE: Noisy Embeddings Improve Instruction Finetuning” introduces a simple but surprisingly effective technique to improve the performance of instruction-finetuned large language models (LLMs). Let’s break it down:

Core Idea

NEFTune (Noise Injection in Embeddings for Instruction Tuning) proposes adding controlled random noise to the text embeddings (i.e., the numerical representations of input tokens) during training only—not during inference. This small modification leads to significant performance gains across a wide range of tasks, especially in instruction-following and reasoning benchmarks.

Why It Works (Intuition)

- Prevents Overfitting: Instruction tuning datasets are often limited in size. Adding noise acts as a regularizer, making the model less likely to memorize training examples and more likely to generalize.

- Encourages Robust Representations: By slightly perturbing the input embeddings, the model learns to rely less on exact token representations and more on semantic patterns, improving robustness.

- Better Exploration of Representation Space: Noise helps the optimizer escape sharp minima and find flatter, more generalizable solutions.

How It’s Done

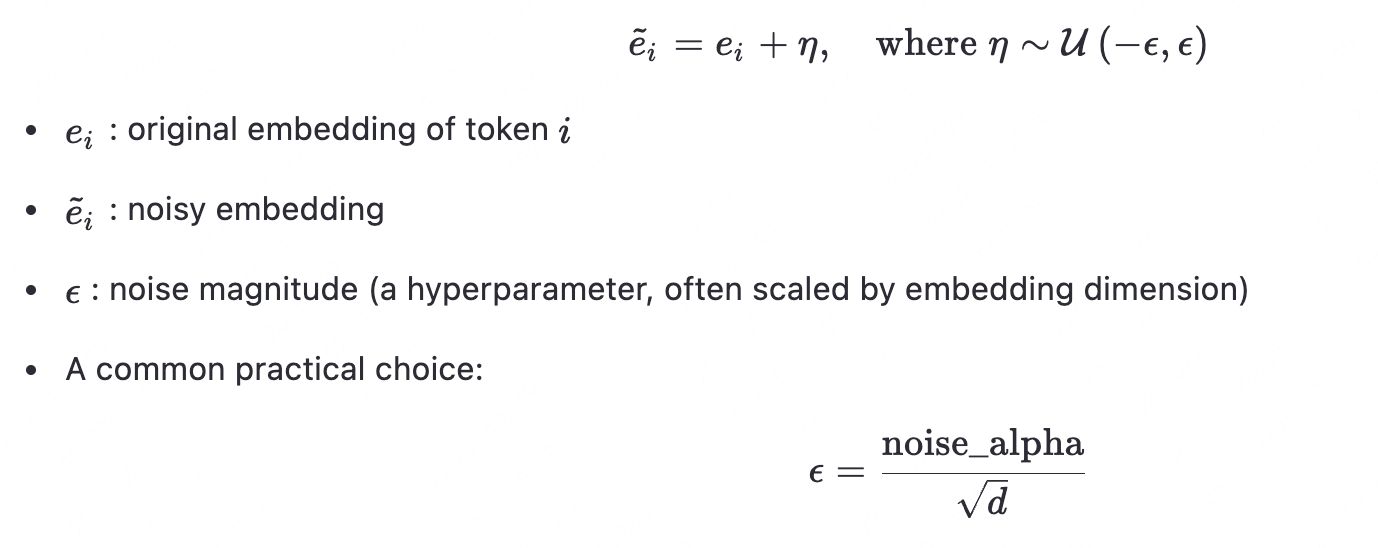

- During training, before feeding token embeddings into the transformer layers, NEFTune adds uniform random noise:

—

where (d) is the embedding dimension and noise_alpha is typically around 5–10.

- Crucially: No noise is added during inference—the model uses clean embeddings at test time.

Key Results

- NEFTune improves performance without changing model architecture, data, or training pipeline—just a one-line code change.

- Gains are consistent across models (e.g., LLaMA, Mistral) and tasks:

- Instruction-following: Better alignment with user intent.

- Reasoning: Improved performance on math (e.g., GSM8K) and logic tasks.

- Low-resource settings: Especially helpful when instruction data is limited.

- Often matches or exceeds gains from more complex methods (e.g., better data filtering, larger models).

Why It’s Significant

- Simplicity: Easy to implement—just add noise to embeddings during training.

- Zero inference cost: No slowdown or accuracy trade-off at test time.

- Broad applicability: Works across model sizes and architectures.

- Challenges assumptions: Shows that even minor perturbations in representation space can yield large gains, suggesting current instruction-tuning practices may be under-regularized.

Practical Takeaway

If you’re instruction-finetuning an LLM, try NEFTune—it’s a low-effort, high-reward tweak. Just inject uniform noise into input embeddings during training with a noise scale like noise_alpha = 10, and you’ll likely see better generalization.

Let me know if you’d like a deeper dive into the experiments, ablation studies, or how to implement it!