K8s Study Notes (6): Upgrading K8s and Managing Nodes

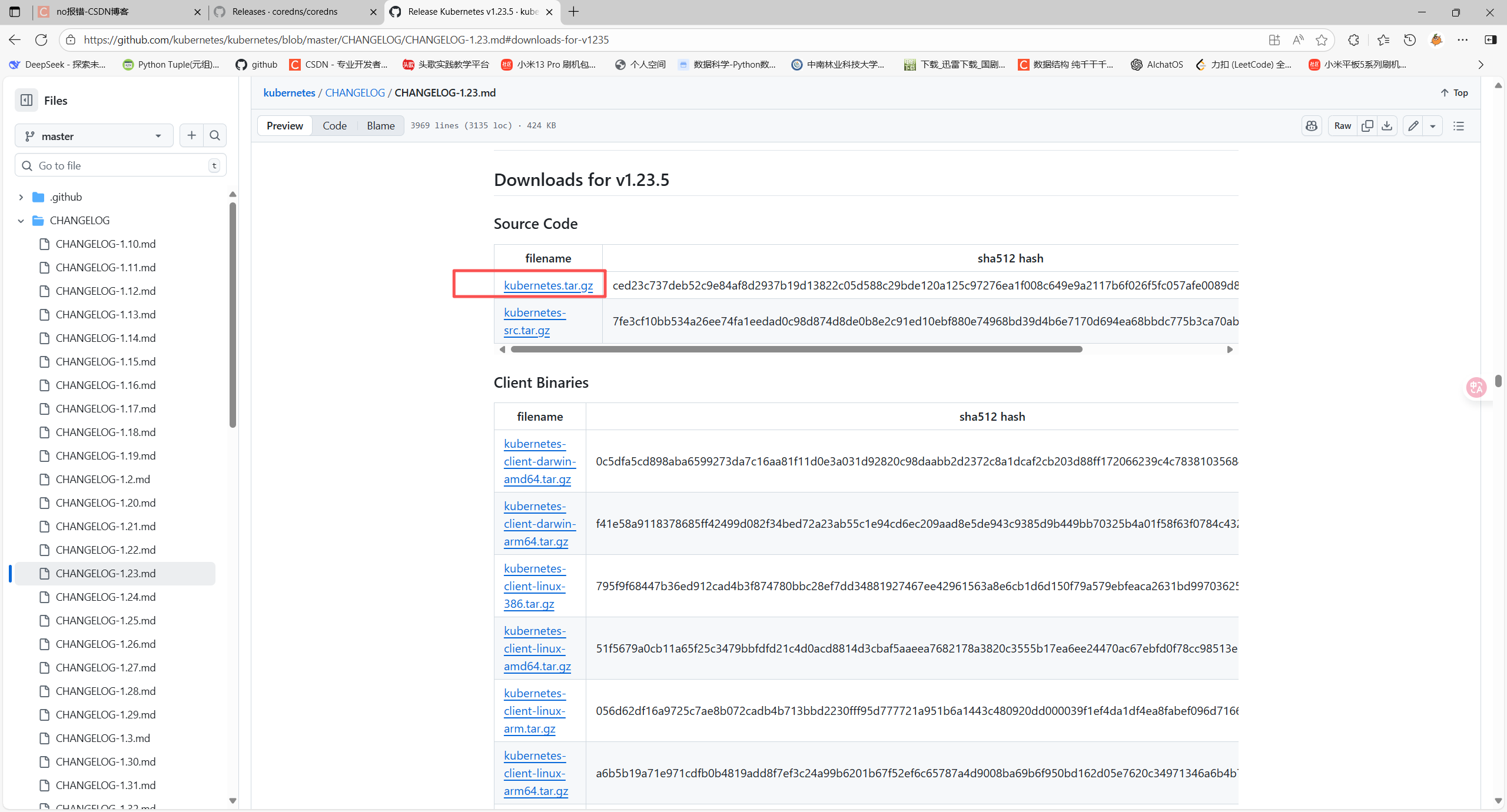

1 Download the k8s binary packages

Link: [kubernetes/CHANGELOG/CHANGELOG-1.23.md at master · kubernetes/kubernetes](https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md)

Download the following four packages for the target version (v1.23.5 in these notes):

Client binaries: kubernetes-client-linux-amd64.tar.gz

Source code: kubernetes.tar.gz

Server binaries: kubernetes-server-linux-amd64.tar.gz

Node binaries: kubernetes-node-linux-amd64.tar.gz

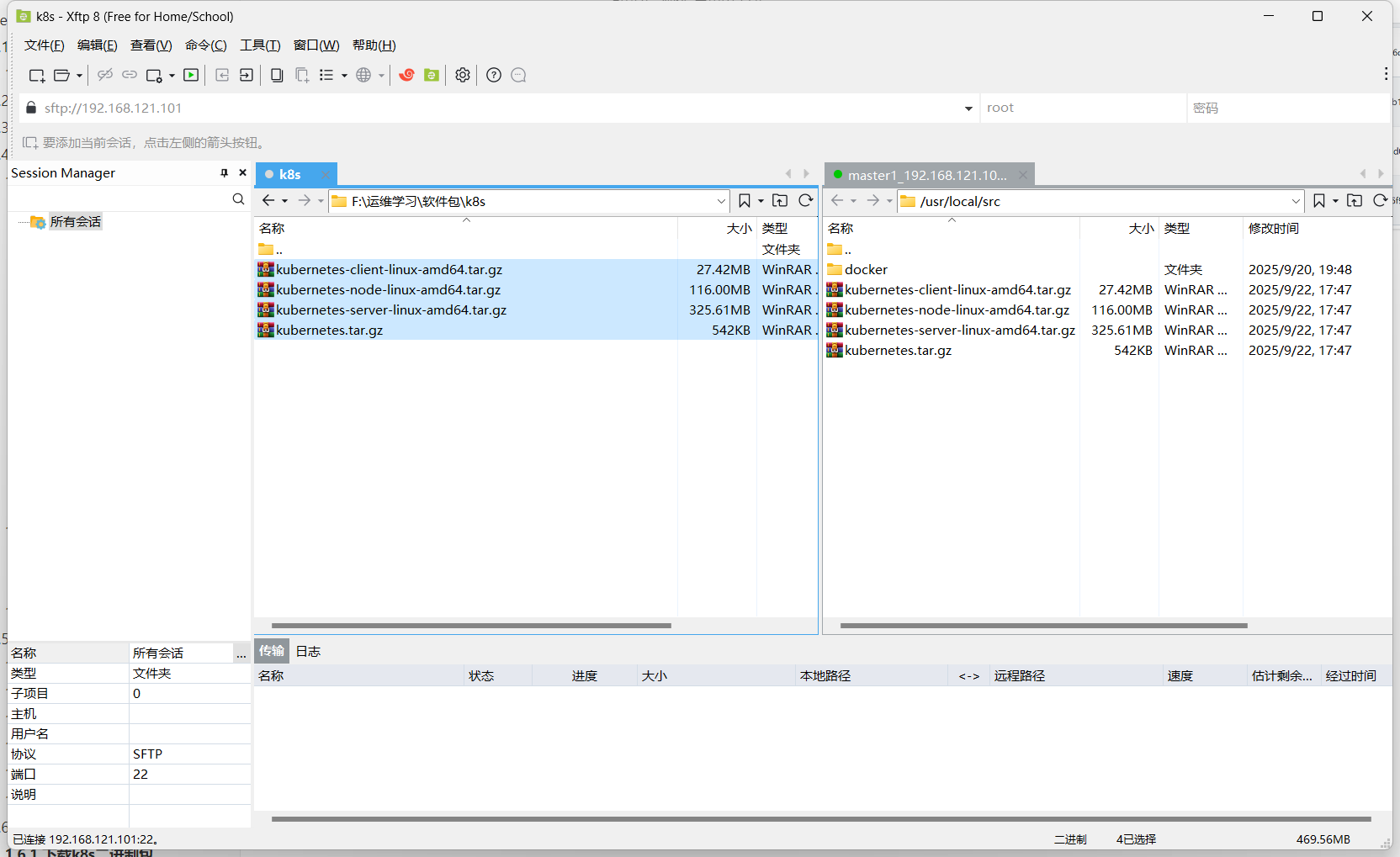

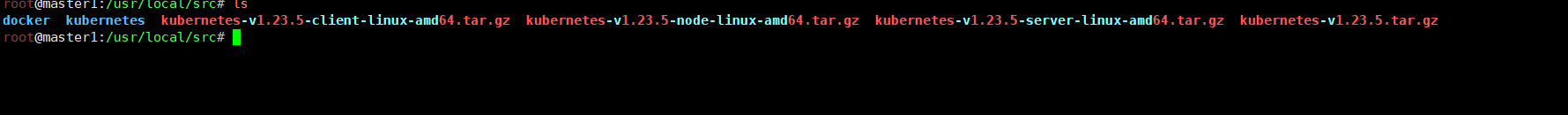

Once the downloads finish, upload the archives to /usr/local/src/ on the master1 server.

Extract them one by one:

root@master1:/usr/local/src# tar xf kubernetes-node-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-server-linux-amd64.tar.gz

root@master1:/usr/local/src# tar xf kubernetes.tar.gz

root@master1:/usr/local/src# tar xf kubernetes-client-linux-amd64.tar.gz
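The four tar commands above can also be written as a loop. This is only a minimal sketch, assuming all archives sit in one directory; `extract_all` is a hypothetical helper name, not something shipped with Kubernetes or kubeasz.

```shell
# extract_all DIR: unpack every kubernetes*.tar.gz found in DIR into DIR.
extract_all() {
    local dir="$1" archive
    for archive in "$dir"/kubernetes*.tar.gz; do
        # When nothing matches, the pattern stays literal; skip it.
        [ -e "$archive" ] || continue
        echo "extracting $archive"
        tar xf "$archive" -C "$dir" || return 1
    done
}

# Example call, using the directory from these notes:
# extract_all /usr/local/src
```

The function stops at the first archive that fails to unpack, so a corrupted download is noticed before the upgrade starts.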

Extraction produces a kubernetes directory.

Change into its server/bin directory:

root@master1:/usr/local/src# cd /usr/local/src/kubernetes/server/bin

root@master1:/usr/local/src/kubernetes/server/bin# ls

apiextensions-apiserver kube-apiserver kube-apiserver.tar kube-controller-manager.docker_tag kube-log-runner kube-proxy.docker_tag kube-scheduler kube-scheduler.tar kubectl kubelet

kube-aggregator kube-apiserver.docker_tag kube-controller-manager kube-controller-manager.tar kube-proxy kube-proxy.tar kube-scheduler.docker_tag kubeadm kubectl-convert mounter

2 Upgrade

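2.1 Upgrading the master nodes

Upgrade the master nodes one at a time (master1, then master2, and so on). The master being upgraded is taken out of service and upgraded offline, while the remaining masters keep running, so the cluster stays available. In this cluster only two masters exist at this stage, 192.168.121.101 (master1) and 192.168.121.102 (master2), so one master keeps serving while the other is upgraded.

The upgrade itself replaces the current binaries under /usr/local/bin/ with the new kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy and kubectl binaries from /usr/local/src/kubernetes/server/bin.

# First copy the new binaries into the kubeasz bin directory, so that nodes added through kubeasz later (section 3) are deployed with the new version

root@master1:/usr/local/src/kubernetes/server/bin# cp kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy kubectl /etc/kubeasz/bin/

# List the current nodes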

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 2d23h v1.23.1

192.168.121.102 Ready,SchedulingDisabled master 2d23h v1.23.1

192.168.121.111 Ready node 2d23h v1.23.1

192.168.121.112 Ready node 2d23h v1.23.1

# On node1, edit /etc/kube-lb/conf/kube-lb.conf and comment out the entry for master1 (192.168.121.101), the master about to be upgraded

root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf

user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;
events {
    worker_connections 3000;
}
stream {
    upstream backend {
        #server 192.168.121.101:6443 max_fails=2 fail_timeout=3s;
        server 192.168.121.102:6443 max_fails=2 fail_timeout=3s;
    }
    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}
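Editing the file by hand in vim works, but the same change can be scripted. The sketch below uses sed to comment a backend out and back in. `lb_disable` and `lb_enable` are hypothetical helper names, and the sketch assumes GNU sed and that each `server IP:6443 ...;` backend entry sits on its own line in kube-lb.conf.

```shell
# lb_disable CONF IP: comment out the "server IP:6443 ..." backend line.
lb_disable() {
    local conf="$1" ip_re
    # Escape the dots so they match literally in the regular expression.
    ip_re=$(printf '%s' "$2" | sed 's/\./\\./g')
    sed -i -E "s|^([[:space:]]*)(server[[:space:]]+${ip_re}:6443)|\1#\2|" "$conf"
}

# lb_enable CONF IP: remove the leading '#' from that backend line again.
lb_enable() {
    local conf="$1" ip_re
    ip_re=$(printf '%s' "$2" | sed 's/\./\\./g')
    sed -i -E "s|^([[:space:]]*)#[[:space:]]*(server[[:space:]]+${ip_re}:6443)|\1\2|" "$conf"
}

# Example (run on each worker node, then restart kube-lb as in these notes):
# lb_disable /etc/kube-lb/conf/kube-lb.conf 192.168.121.101
# systemctl restart kube-lb.service
```

Because the pattern requires the IP and port, the `server {` listener block is never touched, and running `lb_disable` twice does not add a second `#`.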

# Restart kube-lb so the change takes effect

root@node1:~# systemctl restart kube-lb.service

# On node2, make the same edit to /etc/kube-lb/conf/kube-lb.conf and comment out master1

root@node2:~# vim /etc/kube-lb/conf/kube-lb.conf

user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;
events {
    worker_connections 3000;
}
stream {
    upstream backend {
        #server 192.168.121.101:6443 max_fails=2 fail_timeout=3s;
        server 192.168.121.102:6443 max_fails=2 fail_timeout=3s;
    }
    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

# Restart kube-lb so the change takes effect

root@node2:~# systemctl restart kube-lb.service

# Stop the Kubernetes services on master1 (kube-lb.service is left running)

root@master1:/usr/local/src/kubernetes/server/bin# systemctl stop kube-apiserver.service kube-controller-manager.service kube-proxy.service kube-scheduler.service kubelet.service

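# Replace the binaries (the leading backslash in \cp bypasses any cp alias, such as cp -i, so existing files are overwritten without a prompt)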

root@master1:/usr/local/src/kubernetes/server/bin# \cp kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy kubectl /usr/local/bin/
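The \cp above overwrites the old binaries in place, so there is nothing left to roll back to if the new version misbehaves. A minimal sketch of a copy-with-backup helper follows. `replace_with_backup` and the backup directory are hypothetical and not part of the original procedure.

```shell
# replace_with_backup SRC_DIR DEST_DIR BACKUP_DIR NAME...
# Saves each currently installed binary to BACKUP_DIR, then overwrites it
# with the file of the same name from SRC_DIR.
replace_with_backup() {
    local src="$1" dest="$2" backup="$3" name
    shift 3
    mkdir -p "$backup" || return 1
    for name in "$@"; do
        # Keep the installed binary, if there is one, for a possible rollback.
        if [ -f "$dest/$name" ]; then
            cp -p "$dest/$name" "$backup/$name" || return 1
        fi
        # 'command cp' runs the real cp rather than a function or alias named cp.
        command cp "$src/$name" "$dest/$name" || return 1
    done
}

# Example (the backup path is an assumption):
# replace_with_backup /usr/local/src/kubernetes/server/bin /usr/local/bin \
#     /usr/local/bin.v1.23.1 kube-apiserver kube-controller-manager \
#     kube-scheduler kubelet kube-proxy kubectl
```

As in the notes, the services should be stopped before the binaries are replaced.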

# Check the versions of the new binaries

root@master1:/usr/local/src/kubernetes/server/bin# kube-apiserver --version

Kubernetes v1.23.5

root@master1:/usr/local/src/kubernetes/server/bin# kube-controller-manager --version

Kubernetes v1.23.5

root@master1:/usr/local/src/kubernetes/server/bin# kube-scheduler --version

Kubernetes v1.23.5
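The three manual version checks above can be folded into one loop. This is a rough sketch: `check_versions` is a hypothetical helper, and it assumes each binary prints `Kubernetes <version>` for `--version`, as the three components above do.

```shell
# check_versions EXPECTED BIN_DIR NAME...
# Succeeds only if every listed binary reports "Kubernetes EXPECTED".
check_versions() {
    local expected="$1" bin_dir="$2" name actual rc=0
    shift 2
    for name in "$@"; do
        actual=$("$bin_dir/$name" --version 2>/dev/null)
        if [ "$actual" = "Kubernetes $expected" ]; then
            echo "ok       $name: $actual"
        else
            echo "MISMATCH $name: got '$actual', want 'Kubernetes $expected'"
            rc=1
        fi
    done
    return "$rc"
}

# Example:
# check_versions v1.23.5 /usr/local/bin kube-apiserver kube-controller-manager kube-scheduler
```

A non-zero return value makes it easy to abort before the services are started with a mix of old and new binaries.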

# Start the services again

root@master1:/usr/local/src/kubernetes/server/bin# systemctl start kube-apiserver.service kube-controller-manager.service kube-proxy.service kube-scheduler.service kubelet.service

# Check the version reported by each node

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 2d23h v1.23.5 # master1 now reports the new version

192.168.121.102 Ready,SchedulingDisabled master 2d23h v1.23.1

192.168.121.111 Ready node 2d23h v1.23.1

192.168.121.112 Ready node 2d23h v1.23.1

# Upgrade master2

# On node1, change the load balancer backends

root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf

# Restore master1 and comment out master2

user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;
events {
    worker_connections 3000;
}
stream {
    upstream backend {
        server 192.168.121.101:6443 max_fails=2 fail_timeout=3s;
        #server 192.168.121.102:6443 max_fails=2 fail_timeout=3s;
    }
    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

# Restart the service

root@node1:~# systemctl restart kube-lb.service

# On node2, change the load balancer backends

root@node2:~# vim /etc/kube-lb/conf/kube-lb.conf

# Restore master1 and comment out master2

user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;
events {
    worker_connections 3000;
}
stream {
    upstream backend {
        server 192.168.121.101:6443 max_fails=2 fail_timeout=3s;
        #server 192.168.121.102:6443 max_fails=2 fail_timeout=3s;
    }
    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

# Restart the service

root@node2:~# systemctl restart kube-lb.service

# Stop the services on master2

root@master2:~# systemctl stop kube-apiserver.service kube-controller-manager.service kube-proxy.service kube-scheduler.service kubelet.service

# From master1, copy the new binaries to master2

root@master1:/usr/local/src/kubernetes/server/bin# scp kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy kubectl master2:/usr/local/bin/

# Start the services on master2

root@master2:~# systemctl start kube-apiserver.service kube-controller-manager.service kube-proxy.service kube-scheduler.service kubelet.service

# Verify the upgrade

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 2d23h v1.23.5

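192.168.121.102 Ready,SchedulingDisabled master 2d23h v1.23.5 # master2 is now on v1.23.5

192.168.121.111 Ready node 2d23h v1.23.1

192.168.121.112 Ready node 2d23h v1.23.1

# master2 is still commented out in kube-lb.conf on node1 and node2 at this point. The notes do not show it, but the entry needs to be uncommented and kube-lb restarted on both nodes so that the load balancer uses both masters again.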
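While the cluster runs mixed versions, it helps to list which nodes still need upgrading. The helper below is a small sketch that filters the output of `kubectl get node`. `outdated_nodes` is a hypothetical name, and it assumes the default five-column layout (NAME STATUS ROLES AGE VERSION) shown in these notes.

```shell
# outdated_nodes TARGET: read `kubectl get node` output on stdin and print
# the NAME of every node whose VERSION column differs from TARGET.
outdated_nodes() {
    awk -v target="$1" 'NR > 1 && NF >= 5 && $5 != target { print $1 }'
}

# Example:
# kubectl get node | outdated_nodes v1.23.5
```

Fed the listing above, it prints the two worker nodes, which are still on v1.23.1.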

2.2 Upgrading the worker nodes

# Drain node1 so that its pods are evicted and can be rescheduled onto node2

root@master1:/usr/local/src/kubernetes/server/bin# kubectl drain 192.168.121.111 --force --delete-emptydir-data --ignore-daemonsets

node/192.168.121.111 cordoned

WARNING: deleting Pods not managed by ReplicationController, ReplicaSet, Job, DaemonSet or StatefulSet: default/net-test2, default/net-test4; ignoring DaemonSet-managed Pods: kube-system/calico-node-4rnzt

evicting pod velero-system/velero-6755cb8697-b87p9

evicting pod default/net-test2

evicting pod default/net-test4

evicting pod kube-system/coredns-7db6b45f67-xpzmr

evicting pod kubernetes-dashboard/dashboard-metrics-scraper-69d947947b-94c4p

pod/dashboard-metrics-scraper-69d947947b-94c4p evicted

pod/velero-6755cb8697-b87p9 evicted

pod/coredns-7db6b45f67-xpzmr evicted

pod/net-test4 evicted

pod/net-test2 evicted

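node/192.168.121.111 drained

# List all pods: the evicted coredns and dashboard-metrics-scraper pods have been recreated on node2 (192.168.121.112), velero is still Pending, and the bare pods net-test2 and net-test4 are gone because no controller recreates them. The DaemonSet-managed calico-node pod stays on node1.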

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get -A pod -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test3 1/1 Running 3 (18h ago) 2d21h 10.200.104.10 192.168.121.112 <none> <none>

kube-system calico-kube-controllers-754966f84c-nb8mt 1/1 Running 8 (18h ago) 3d 192.168.121.112 192.168.121.112 <none> <none>

kube-system calico-node-29mld 1/1 Running 4 (18h ago) 3d 192.168.121.102 192.168.121.102 <none> <none>

kube-system calico-node-4rnzt 1/1 Running 6 (18h ago) 3d 192.168.121.111 192.168.121.111 <none> <none>

kube-system calico-node-p4ddl 1/1 Running 4 (18h ago) 3d 192.168.121.112 192.168.121.112 <none> <none>

kube-system calico-node-rn7fk 1/1 Running 10 (18h ago) 3d 192.168.121.101 192.168.121.101 <none> <none>

kube-system coredns-7db6b45f67-ht47r 1/1 Running 2 (18h ago) 45h 10.200.104.12 192.168.121.112 <none> <none>

kube-system coredns-7db6b45f67-nsrth 1/1 Running 0 83s 10.200.104.17 192.168.121.112 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-69d947947b-g74lh 1/1 Running 0 83s 10.200.104.18 192.168.121.112 <none> <none>

kubernetes-dashboard kubernetes-dashboard-744bdb9f9b-f2zns 1/1 Running 4 (18h ago) 43h 10.200.104.14 192.168.121.112 <none> <none>

myapp linux66-tomcat-app1-deployment-667c9cf879-hz98v 1/1 Running 0 117m 10.200.104.16 192.168.121.112 <none> <none>

velero-system velero-6755cb8697-8cv8b 0/1 Pending 0 83s <none> <none> <none> <none>

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d v1.23.5

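192.168.121.111 Ready,SchedulingDisabled node 3d v1.23.1 # node1 is now cordoned (marked unschedulable), so no new pods are scheduled onto it

192.168.121.112 Ready node 3d v1.23.1

# Stop the services on node1

root@node1:~# systemctl stop kubelet.service kube-proxy.service

# From master1, copy the binaries that a worker node needs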

root@master1:/usr/local/src/kubernetes/server/bin# scp kube-proxy kubelet node1:/usr/local/bin/

The authenticity of host 'node1 (192.168.121.111)' can't be established.

ECDSA key fingerprint is SHA256:e6246AoozwtEjYmPqU/mS4fWncpxKvoXtPgl9ZswNwQ.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'node1' (ECDSA) to the list of known hosts.

kube-proxy 100% 42MB 43.4MB/s 00:00

kubelet

# Start the services on node1

root@node1:/usr/local/bin# systemctl start kubelet.service kube-proxy.service

# On master1, check the node status and versions

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d v1.23.5

192.168.121.111 Ready,SchedulingDisabled node 3d v1.23.5 # node1 has been upgraded to v1.23.5

192.168.121.112 Ready node 3d v1.23.1

# Upgrade node2

# Stop the services on node2

root@node2:~# systemctl stop kubelet.service kube-proxy.service

# Copy the binaries to node2 to replace the old ones

root@master1:/usr/local/src/kubernetes/server/bin# scp kube-proxy kubelet node2:/usr/local/bin/

The authenticity of host 'node2 (192.168.121.112)' can't be established.

ECDSA key fingerprint is SHA256:e6246AoozwtEjYmPqU/mS4fWncpxKvoXtPgl9ZswNwQ.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'node2' (ECDSA) to the list of known hosts.

kube-proxy 100% 42MB 47.3MB/s 00:00

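kubelet

# Start the services on node2 again (this command is missing from the original transcript, but the node only reports the new version once kubelet is running again)

root@node2:~# systemctl start kubelet.service kube-proxy.service

# Check the nodes, then uncordon node1 so that pods can be scheduled onto it again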

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d v1.23.5

192.168.121.111 Ready,SchedulingDisabled node 3d v1.23.5

192.168.121.112 Ready node 3d v1.23.5

root@master1:/usr/local/src/kubernetes/server/bin# kubectl uncordon 192.168.121.111

node/192.168.121.111 uncordoned
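The per-node sequence used above (drain, stop, copy, start, uncordon) can be written down as a script. The sketch below only prints the commands unless `DRY_RUN=0` is set. `run` and `upgrade_worker` are hypothetical helpers, and running the systemctl commands over ssh from master1 is an assumption; the notes log in to each node directly.

```shell
# run CMD...: print the command; execute it only when DRY_RUN is set to 0.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-1}" = "1" ] || "$@"
}

# upgrade_worker NODE_IP SSH_HOST BIN_DIR
# Each step runs only if the previous one succeeded.
upgrade_worker() {
    local node_ip="$1" host="$2" bin_dir="$3"
    run kubectl drain "$node_ip" --force --delete-emptydir-data --ignore-daemonsets &&
    run ssh "$host" systemctl stop kubelet.service kube-proxy.service &&
    run scp "$bin_dir/kube-proxy" "$bin_dir/kubelet" "$host:/usr/local/bin/" &&
    run ssh "$host" systemctl start kubelet.service kube-proxy.service &&
    run kubectl uncordon "$node_ip"
}

# Example (dry run, prints the five commands):
# upgrade_worker 192.168.121.111 node1 /usr/local/src/kubernetes/server/bin
```

Defaulting to a dry run is deliberate: `--force` deletes pods that no controller manages, so it is worth reading the printed commands before executing them.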

root@master1:/usr/local/src/kubernetes/server/bin# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d1h v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d1h v1.23.5

192.168.121.111 Ready node 3d v1.23.5

192.168.121.112 Ready node 3d v1.23.5

3 Managing nodes

3.1 Adding a worker node

root@master1:/etc/kubeasz# ./ezctl --help

Usage: ezctl COMMAND [args]

-------------------------------------------------------------------------------------

Cluster setups:
    list                             to list all of the managed clusters
    checkout    <cluster>            to switch default kubeconfig of the cluster
    new         <cluster>            to start a new k8s deploy with name 'cluster'
    setup       <cluster> <step>     to setup a cluster, also supporting a step-by-step way
    start       <cluster>            to start all of the k8s services stopped by 'ezctl stop'
    stop        <cluster>            to stop all of the k8s services temporarily
    upgrade     <cluster>            to upgrade the k8s cluster
    destroy     <cluster>            to destroy the k8s cluster
    backup      <cluster>            to backup the cluster state (etcd snapshot)
    restore     <cluster>            to restore the cluster state from backups
    start-aio                        to quickly setup an all-in-one cluster with 'default' settings
Cluster ops:
    add-etcd    <cluster> <ip>       to add a etcd-node to the etcd cluster
    add-master  <cluster> <ip>       to add a master node to the k8s cluster
    add-node    <cluster> <ip>       to add a work node to the k8s cluster
    del-etcd    <cluster> <ip>       to delete a etcd-node from the etcd cluster
    del-master  <cluster> <ip>       to delete a master node from the k8s cluster
    del-node    <cluster> <ip>       to delete a work node from the k8s cluster
Extra operation:
    kcfg-adm    <cluster> <args>     to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.

# Add a worker node

root@master1:/etc/kubeasz# ./ezctl add-node k8s-01 192.168.121.113

# List the nodes

root@master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d1h v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d1h v1.23.5

192.168.121.111 Ready node 3d1h v1.23.5

192.168.121.112 Ready node 3d1h v1.23.5

192.168.121.113 Ready node 5m7s v1.23.5

3.2 Adding a master node

root@master1:/etc/kubeasz# ./ezctl add-master k8s-01 192.168.121.103

# Check the node status

root@master1:/etc/kubeasz# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.121.101 Ready,SchedulingDisabled master 3d2h v1.23.5

192.168.121.102 Ready,SchedulingDisabled master 3d2h v1.23.5

192.168.121.103 Ready,SchedulingDisabled master 36m v1.23.5 # the new master has been added successfully

192.168.121.111 Ready node 3d2h v1.23.5

192.168.121.112 Ready node 3d2h v1.23.5

192.168.121.113 Ready node 58m v1.23.5
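After `ezctl add-node` or `ezctl add-master` finishes, the check done by eye above can be scripted. The sketch below reads `kubectl get node` output and tests whether a given node is Ready. `node_is_ready` is a hypothetical helper name, and the default column layout shown in these notes is assumed.

```shell
# node_is_ready IP: read `kubectl get node` output on stdin; succeed only if
# a row for IP exists and its STATUS column is "Ready" or starts with "Ready,".
node_is_ready() {
    awk -v ip="$1" '
        $1 == ip { found = 1; ok = ($2 ~ /^Ready(,|$)/) }
        END { exit !(found && ok) }
    '
}

# Example:
# kubectl get node | node_is_ready 192.168.121.103 && echo "192.168.121.103 is Ready"
```

A status of `Ready,SchedulingDisabled`, as on the masters here, counts as ready, while `NotReady` or a missing row makes the function fail.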