LangChain: Models and Prompts

Getting the OpenAI API key

#!pip install python-dotenv

#!pip install openai

import os
import openai

from dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env file

openai.api_key = os.environ['OPENAI_API_KEY']

# account for deprecation of LLM model

import datetime

# Get the current date

current_date = datetime.datetime.now().date()

# Define the date after which the model should be set to "gpt-3.5-turbo"

target_date = datetime.date(2024, 6, 12)

# Set the model variable based on the current date

if current_date > target_date:
    llm_model = "gpt-3.5-turbo"
else:
    llm_model = "gpt-3.5-turbo-0301"

Chat API: OpenAI

Calling the OpenAI API

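def get_completion(prompt, model=llm_model):
    # Send a single user message and return the text of the reply.
    # openai.ChatCompletion is the pre-1.0 interface of the openai package.
    messages = [{"role": "user", "content": prompt}]
    response = openai.ChatCompletion.create(
        model=model,
        messages=messages,
        temperature=0,
    )
    return response.choices[0].message["content"]

get_completion("What is 1+1?")

customer_email = """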

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse,\

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!

"""

style = """American English \

in a calm and respectful tone
"""

prompt = f"""Translate the text \

that is delimited by triple backticks

into a style that is {style}.

text: ```{customer_email}```

"""

print(prompt)

response = get_completion(prompt)

response

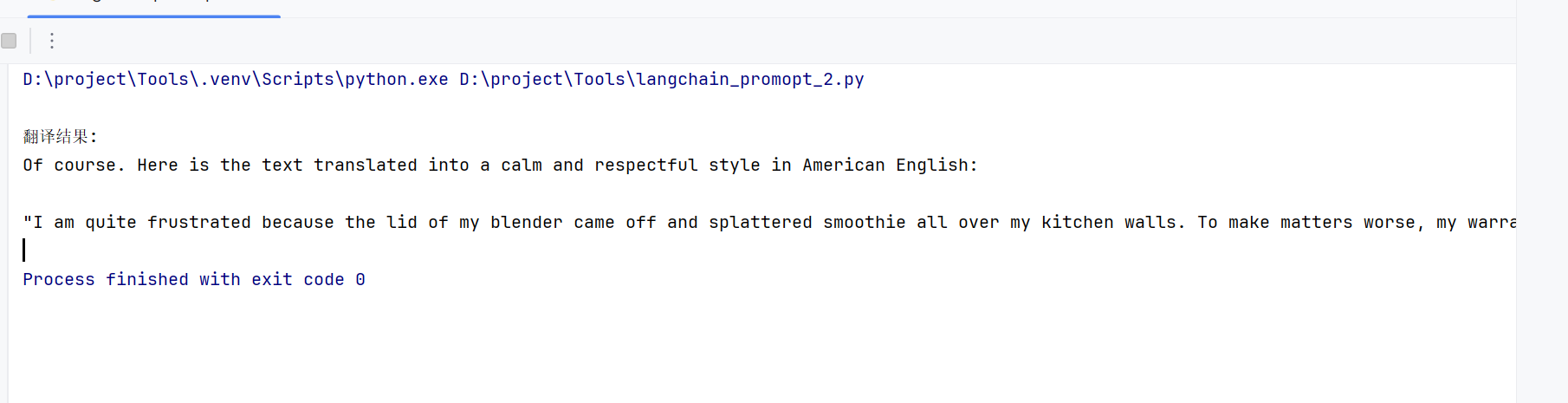
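The prompt above is assembled inline with an f-string. A minimal sketch of the same construction wrapped in a helper follows, so the text and the style can vary independently. `build_translation_prompt` and `FENCE` are hypothetical names that are not in the original notebook, and the triple-backtick delimiter is built with string multiplication only to keep the listing readable.

```python
# Hypothetical helper (not from the original notebook): wraps the
# f-string prompt construction so it can be reused with other inputs.
FENCE = "`" * 3  # the triple-backtick delimiter used in the prompt


def build_translation_prompt(text: str, style: str) -> str:
    """Return a prompt asking the model to restyle `text`."""
    return (
        "Translate the text that is delimited by triple backticks "
        f"into a style that is {style}.\n"
        f"text: {FENCE}{text}{FENCE}\n"
    )


prompt = build_translation_prompt(
    text="Arrr, I be fuming that me blender lid flew off!",
    style="American English in a calm and respectful tone",
)
print(prompt)
```

The resulting string can be passed to `get_completion` exactly like the inline version.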

DeepSeek

import requests

import json

# DeepSeek API configuration
DEEPSEEK_API_KEY = "replace with your actual API key"  # replace with your actual API key
DEEPSEEK_API_URL = "https://api.deepseek.com/v1/chat/completions"

def get_deepseek_completion(prompt, model="deepseek-chat"):
    """Get a reply from the DeepSeek API."""
    headers = {
        "Authorization": f"Bearer {DEEPSEEK_API_KEY}",
        "Content-Type": "application/json",
    }
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
    try:
        response = requests.post(DEEPSEEK_API_URL, headers=headers, json=payload)
        response.raise_for_status()  # raise on HTTP errors
        result = response.json()
        return result['choices'][0]['message']['content']
    except requests.exceptions.RequestException as e:
        print(f"API request error: {e}")
        return None
    except (KeyError, IndexError) as e:
        print(f"Error parsing response: {e}")
        return None

customer_email = """

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse,\

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!
"""

style = """American English \

in a calm and respectful tone
"""

prompt = f"""Translate the text \

that is delimited by triple backticks

into a style that is {style}.

text: ```{customer_email}```
"""

# Get and print the translation
translation = get_deepseek_completion(prompt)
if translation:
    print("\nTranslation result:")
    print(translation)
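The parsing step inside `get_deepseek_completion` can be tried without a network call. The sketch below runs the same `choices[0].message.content` lookup against a hand-written dictionary. `sample_result` is a hypothetical payload made up for illustration, not a captured API response, and `extract_reply` is a name introduced here.

```python
from typing import Optional

# Hypothetical sample payload, shaped like the response that
# get_deepseek_completion indexes: choices[0].message.content.
sample_result = {
    "choices": [
        {"message": {"role": "assistant", "content": "I am quite upset."}}
    ]
}


def extract_reply(result: dict) -> Optional[str]:
    """Return the first choice's text, or None if the shape is unexpected."""
    try:
        return result["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return None


print(extract_reply(sample_result))
print(extract_reply({"choices": []}))    # empty list: IndexError is caught
print(extract_reply({"error": "oops"}))  # missing key: KeyError is caught
```

Returning `None` on a malformed response mirrors the function above, so the caller can keep its simple `if translation:` check.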

| OpenAI approach | DeepSeek approach |
|---|---|
| Returns an object directly | Parses the JSON response |

LangChain + OpenAI

Let's see how we can do the same thing using LangChain.

#!pip install --upgrade langchain

from langchain.chat_models import ChatOpenAI

# To control the randomness and creativity of the generated
# text by an LLM, use temperature = 0.0
chat = ChatOpenAI(temperature=0.0, model=llm_model)

template_string = """Translate the text \

that is delimited by triple backticks \

into a style that is {style}. \

text: ```{text}```
"""

from langchain.prompts import ChatPromptTemplate

prompt_template = ChatPromptTemplate.from_template(template_string)

customer_style = """American English \

in a calm and respectful tone
"""

customer_email = """

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse, \

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!
"""

customer_messages = prompt_template.format_messages(
    style=customer_style,
    text=customer_email,
)

# Call the LLM to translate to the style of the customer message
customer_response = chat(customer_messages)
print(customer_response.content)

service_reply = """Hey there customer, \

the warranty does not cover \

cleaning expenses for your kitchen \

because it's your fault that \

you misused your blender \

by forgetting to put the lid on before \

starting the blender. \

Tough luck! See ya!

"""

service_style_pirate = """\

a polite tone \

that speaks in English Pirate\
"""

service_messages = prompt_template.format_messages(
    style=service_style_pirate,
    text=service_reply,
)

print(service_messages[0].content)

service_response = chat(service_messages)

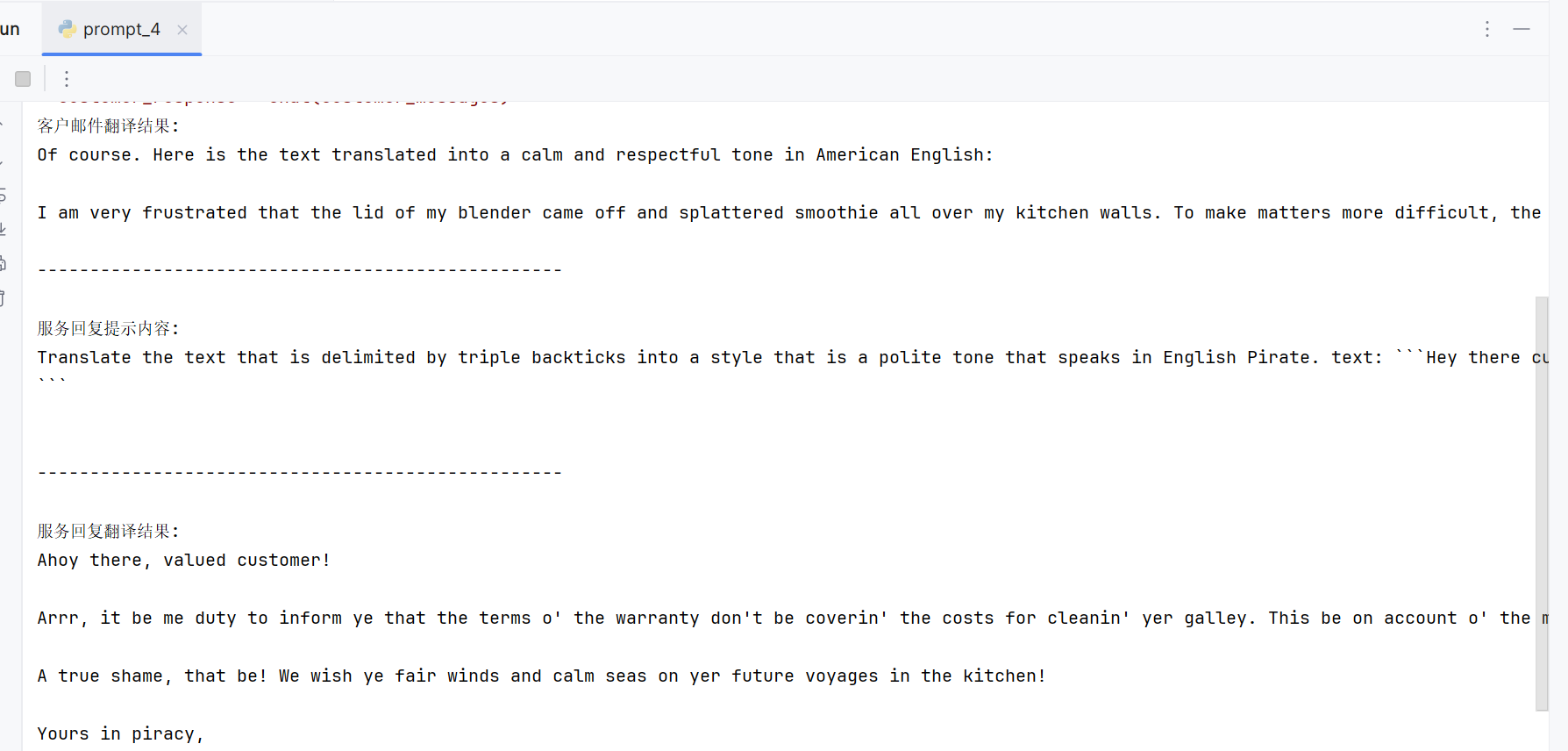

print(service_response.content)

LangChain + DeepSeek

from langchain.chat_models import ChatOpenAI

from langchain.prompts import ChatPromptTemplate

# Configure DeepSeek
DEEPSEEK_API_KEY = ""  # replace with your actual API key
DEEPSEEK_API_BASE = "https://api.deepseek.com/v1"

# Create the DeepSeek chat model
chat = ChatOpenAI(
    temperature=0.0,
    model="deepseek-chat",  # DeepSeek model name
    openai_api_key=DEEPSEEK_API_KEY,
    openai_api_base=DEEPSEEK_API_BASE,
)

# Translation template

template_string = """Translate the text \

that is delimited by triple backticks \

into a style that is {style}. \

text: ```{text}```
"""

# Create the prompt template
prompt_template = ChatPromptTemplate.from_template(template_string)

# Part 1: translate the customer email

customer_style = """American English in a calm and respectful tone"""

customer_email = """

Arrr, I be fuming that me blender lid \

flew off and splattered me kitchen walls \

with smoothie! And to make matters worse, \

the warranty don't cover the cost of \

cleaning up me kitchen. I need yer help \

right now, matey!
"""

# Format the messages
customer_messages = prompt_template.format_messages(
    style=customer_style,
    text=customer_email,
)

# Call DeepSeek to translate
customer_response = chat(customer_messages)
print("Customer email translation:")
print(customer_response.content)
print("\n" + "-"*50 + "\n")

# Part 2: translate the service reply

service_reply = """Hey there customer, \

the warranty does not cover \

cleaning expenses for your kitchen \

because it's your fault that \

you misused your blender \

by forgetting to put the lid on before \

starting the blender. \

Tough luck! See ya!
"""

service_style_pirate = """a polite tone that speaks in English Pirate"""

# Format the messages
service_messages = prompt_template.format_messages(
    style=service_style_pirate,
    text=service_reply,
)

# Print the formatted prompt
print("Service reply prompt:")
print(service_messages[0].content)
print("\n" + "-"*50 + "\n")

# Call DeepSeek to translate
service_response = chat(service_messages)
print("Service reply translation:")
print(service_response.content)
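Both LangChain examples above reuse one template for two different inputs: the customer email and the service reply. As a rough standard-library analogy, and not a description of how LangChain implements `ChatPromptTemplate`, the same reuse can be sketched with `str.format`. `format_prompt` and `FENCE` are hypothetical names introduced for this sketch.

```python
from string import Formatter

# Rough standard-library analogy for reusing one prompt template;
# this is not LangChain's implementation.
FENCE = "`" * 3
template_string = (
    "Translate the text that is delimited by triple backticks "
    "into a style that is {style}. "
    "text: " + FENCE + "{text}" + FENCE
)

# A template knows which variables it expects
placeholders = [name for _, name, _, _ in Formatter().parse(template_string) if name]
print(placeholders)  # ['style', 'text']


def format_prompt(style: str, text: str) -> str:
    """Fill the {style} and {text} placeholders of the shared template."""
    return template_string.format(style=style, text=text)


customer_prompt = format_prompt(
    style="American English in a calm and respectful tone",
    text="Arrr, I be fuming that me blender lid flew off!",
)
service_prompt = format_prompt(
    style="a polite tone that speaks in English Pirate",
    text="The warranty does not cover cleaning expenses.",
)
print(customer_prompt)
print(service_prompt)
```

Keeping the wording in one template and passing only the variables means a change to the instructions is made once and applies to every message built from it.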

Output Parsers

{
  "gift": False,
  "delivery_days": 5,
  "price_value": "pretty affordable!"
}

customer_review = """\

This leaf blower is pretty amazing. It has four settings:\

candle blower, gentle breeze, windy city, and tornado. \

It arrived in two days, just in time for my wife's \

anniversary present. \

I think my wife liked it so much she was speechless. \

So far I've been the only one using it, and I've been \

using it every other morning to clear the leaves on our lawn. \

It's slightly more expensive than the other leaf blowers \

out there, but I think it's worth it for the extra features.
"""

review_template = """\
For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \
Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \
to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price, \
and output them as a comma separated Python list.

Format the output as JSON with the following keys:
gift
delivery_days
price_value

text: {text}
"""

from langchain.prompts import ChatPromptTemplate

prompt_template = ChatPromptTemplate.from_template(review_template)

print(prompt_template)

messages = prompt_template.format_messages(text=customer_review)

chat = ChatOpenAI(temperature=0.0, model=llm_model)

response = chat(messages)

print(response.content)

type(response.content)

# You will get an error by running this line of code
# because response.content is a string, not a dictionary,
# so it has no .get method
response.content.get('gift')

Parse the LLM output string into a Python dictionary
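The failure is easy to reproduce without calling a model. In the sketch below, `content` is a hypothetical stand-in for `response.content`: it looks like a dictionary when printed but is plain JSON text, so `.get` raises `AttributeError` until the text is parsed.

```python
import json

# Hypothetical stand-in for response.content: JSON text, not a dict
content = '{"gift": true, "delivery_days": 2, "price_value": ["worth it"]}'

try:
    content.get("gift")
except AttributeError as err:
    print(f"AttributeError: {err}")

# After parsing, the usual dictionary methods are available
parsed = json.loads(content)
print(type(parsed).__name__)
print(parsed.get("gift"))
```

`json.loads` only works when the reply is bare JSON. Replies that carry extra text around the JSON are one reason to use a dedicated output parser.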

from langchain.output_parsers import ResponseSchema

from langchain.output_parsers import StructuredOutputParser

gift_schema = ResponseSchema(
    name="gift",
    description="Was the item purchased "
                "as a gift for someone else? "
                "Answer True if yes, "
                "False if not or unknown.",
)

delivery_days_schema = ResponseSchema(
    name="delivery_days",
    description="How many days "
                "did it take for the product "
                "to arrive? If this "
                "information is not found, "
                "output -1.",
)

price_value_schema = ResponseSchema(
    name="price_value",
    description="Extract any "
                "sentences about the value or "
                "price, and output them as a "
                "comma separated Python list.",
)

response_schemas = [gift_schema, delivery_days_schema, price_value_schema]

output_parser = StructuredOutputParser.from_response_schemas(response_schemas)

format_instructions = output_parser.get_format_instructions()

print(format_instructions)

review_template_2 = """\
For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \
Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \
to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price, \
and output them as a comma separated Python list.

text: {text}

{format_instructions}
"""

prompt = ChatPromptTemplate.from_template(template=review_template_2)

messages = prompt.format_messages(
    text=customer_review,
    format_instructions=format_instructions,
)

print(messages[0].content)

response = chat(messages)

print(response.content)

output_dict = output_parser.parse(response.content)
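The format instructions printed earlier typically ask the model to wrap its JSON in a fenced `json` snippet, and the parse step then has to pull that snippet out of the reply. The sketch below is a simplified stand-in for that idea using only the standard library. It is not `StructuredOutputParser`'s actual implementation, and `reply` and `parse_fenced_json` are hypothetical names.

```python
import json
import re

FENCE = "`" * 3  # triple backticks, built this way to keep the listing readable

# Hypothetical model reply: JSON wrapped in a fenced "json" snippet
reply = (
    "Here is the result:\n"
    + FENCE + "json\n"
    + '{"gift": true, "delivery_days": 2, "price_value": ["worth it"]}\n'
    + FENCE
)


def parse_fenced_json(text: str) -> dict:
    """Extract the first fenced json snippet from `text` and parse it."""
    pattern = re.escape(FENCE) + r"json\s*(.*?)" + re.escape(FENCE)
    match = re.search(pattern, text, re.DOTALL)
    if match is None:
        raise ValueError("no fenced json snippet found")
    return json.loads(match.group(1))


output = parse_fenced_json(reply)
print(type(output).__name__)
print(output.get("delivery_days"))
```

The result is a real dictionary, which is why the `.get` call that failed on the raw string works on `output_dict`.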

type(output_dict)

output_dict.get('delivery_days')

Reference: LangChain for LLM Application Development - DeepLearning.AI