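Part 4: Envoy Dynamic Configuration

Yuque and CSDN render Markdown differently, so this post may show formatting glitches after being imported into CSDN. If it does, see the original:

https://www.yuque.com/dycloud/pss8ys

1. Dynamic Configuration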

1.1 Dynamic discovery types

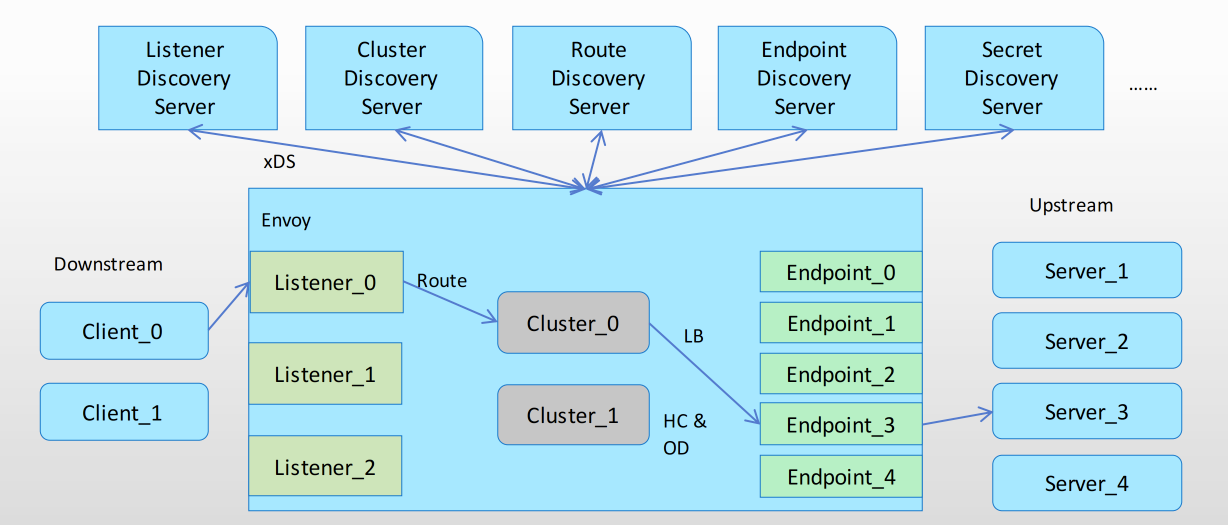

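The xDS API gives Envoy a mechanism for configuring resources dynamically; it is also known as the Data Plane API.

Envoy supports three mechanisms for dynamically discovering configuration. The discovery services and their corresponding APIs are collectively called the xDS API: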

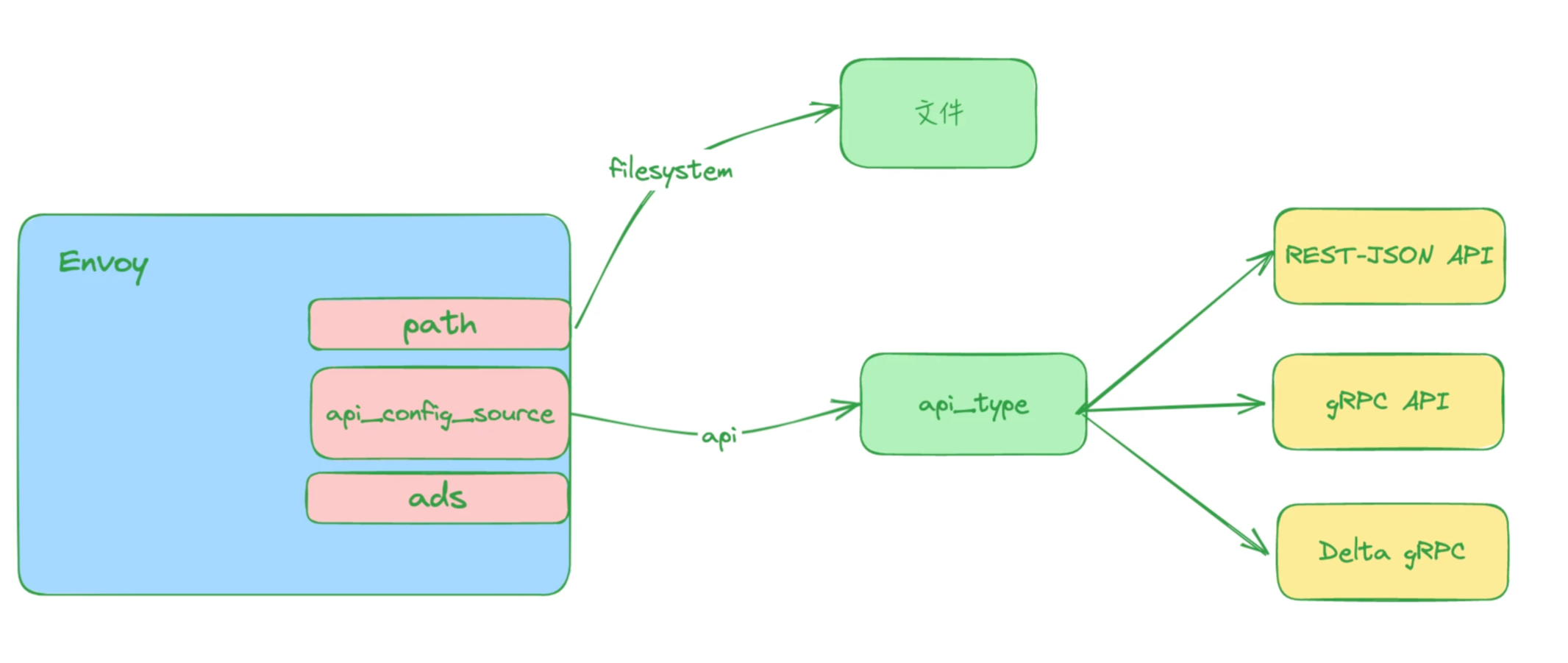

- Filesystem-based discovery: specify a filesystem path to watch.

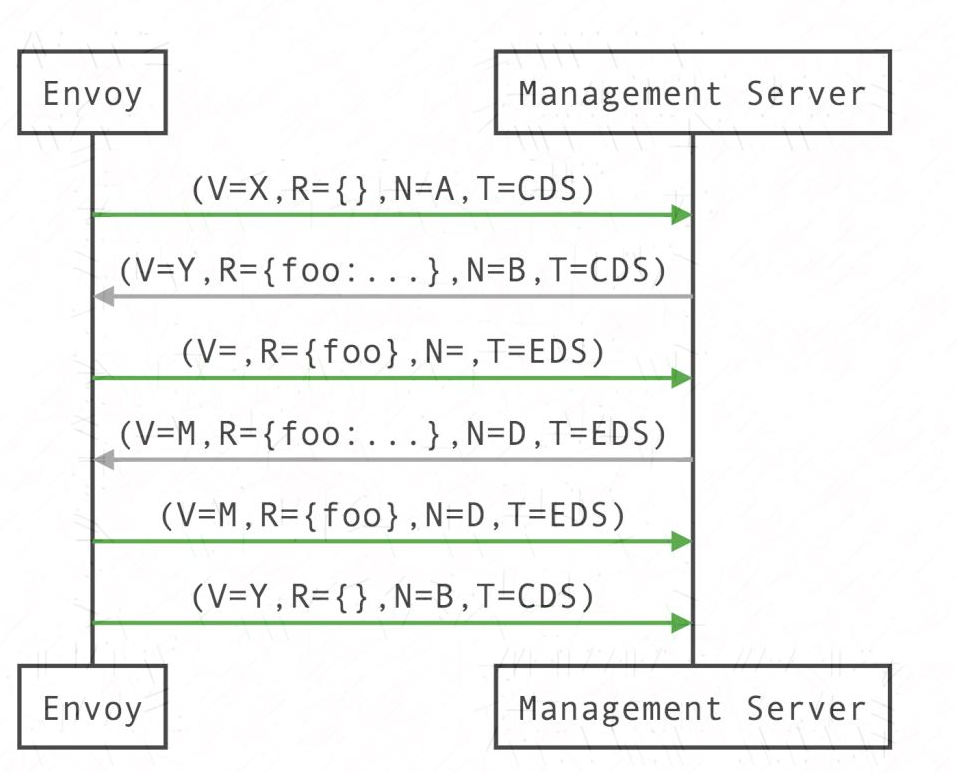

- Querying xDS API endpoints: Envoy sends a `DiscoveryRequest` message and expects the server to answer with a `DiscoveryResponse` message.
  - gRPC service: open a gRPC stream
  - REST service: poll a REST-JSON URL

xDS v3 supports the following resource types:

- envoy.config.listener.v3.Listener

- envoy.config.route.v3.RouteConfiguration

- envoy.config.route.v3.ScopedRouteConfiguration

- envoy.config.route.v3.VirtualHost

- envoy.config.cluster.v3.Cluster

- envoy.config.endpoint.v3.ClusterLoadAssignment

- envoy.extensions.transport_sockets.tls.v3.Secret

- envoy.service.runtime.v3.Runtime

1.2 xDS API overview

Envoy's xDS APIs are implemented by a back-end management server and include `LDS` (Listener), `CDS` (Cluster), `RDS` (Route), `SRDS` (Scoped Route), `VHDS` (Virtual Host), `EDS` (Endpoint), `SDS` (Secret), `RTDS` (Runtime), and others.

- Each of these APIs is eventually consistent, and they do not interact with one another.

- Some higher-level operations (such as an A/B deployment of a service) must be sequenced so that traffic is not dropped. When a single management server serves several of these APIs, the Aggregated Discovery Service (ADS) API is therefore needed as well.

- ADS marshals all the other APIs over a single bidirectional gRPC stream from a single management server, which allows operations to be ordered deterministically.

- The xDS APIs, ADS included, also support incremental (delta) transport.
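The ordering concern that motivates ADS can be sketched in a few lines. This is a minimal illustration, not part of any control plane: `order_updates` and `PUSH_ORDER` are hypothetical names, and the sequence (clusters, then endpoints, then listeners, then routes) is the "make before break" order suggested in the xDS protocol documentation. Only the type URLs are real v3 resource types.

```python
# Hypothetical sketch: sort a batch of pending xDS updates "make before break".
# The type URLs are real v3 resource types; the helper itself is illustrative.
CDS = "type.googleapis.com/envoy.config.cluster.v3.Cluster"
EDS = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"
LDS = "type.googleapis.com/envoy.config.listener.v3.Listener"
RDS = "type.googleapis.com/envoy.config.route.v3.RouteConfiguration"

# Lower number = pushed earlier, so that nothing references a missing dependency.
PUSH_ORDER = {CDS: 0, EDS: 1, LDS: 2, RDS: 3}


def order_updates(updates):
    """Return (type_url, resource_name) tuples sorted into push order."""
    return sorted(updates, key=lambda update: PUSH_ORDER[update[0]])


pending = [
    (RDS, "local_route"),
    (CDS, "webcluster"),
    (LDS, "listener_http"),
    (EDS, "webcluster"),
]
for type_url, name in order_updates(pending):
    # Print only the short message name, e.g. "Cluster webcluster".
    print(type_url.rsplit(".", 1)[-1], name)
```

With separate streams per API nothing enforces this order between types, which is why a single ADS stream is used when the order matters.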

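Envoy can configure different modules dynamically. The configurable APIs, collectively called `xDS`, are:

- `EDS`: the Endpoint Discovery Service lets Envoy discover the members of an upstream cluster automatically, so services that handle traffic can be added or removed dynamically.
- `CDS`: the Cluster Discovery Service lets Envoy discover the upstream clusters used during routing.
- `RDS`: the Route Discovery Service lets Envoy discover the entire route configuration of an HTTP connection manager filter at runtime, which enables things like shifting traffic dynamically or blue/green releases.
- `VHDS`: the Virtual Host Discovery Service lets the virtual hosts that belong to a route configuration be requested on demand, separately from the route configuration itself. It is typically used in deployments with a large number of virtual hosts in a route configuration.
- `SRDS`: the Scoped Route Discovery Service lets a route table be broken into multiple pieces. It is typically used in HTTP routing deployments with very large route tables.
- `LDS`: the Listener Discovery Service lets Envoy discover entire listeners at runtime.
- `SDS`: the Secret Discovery Service lets Envoy discover the cryptographic secrets of its listeners (certificates, private keys, and so on) as well as the certificate validation logic (trusted root certificates, revocations, and so on).
- `RTDS`: the Runtime Discovery Service API lets runtime layers be fetched through an xDS API. It can complement, or be complemented by, a filesystem layer.
- `ECDS`: the Extension Config Discovery Service API lets extension configuration (for example HTTP filter configuration) be served independently of the listener. This is useful when building systems that are better kept separate from the main control plane, such as a WAF or fault testing.
- `ADS`: EDS, CDS, and the rest are separate services with different REST/gRPC service names, for example `StreamListeners` and `StreamSecrets`. For users who want resources of different types to arrive at Envoy in an enforced order, there is aggregated xDS: a single gRPC service that carries all resource types in one gRPC stream. (ADS supports gRPC only.)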

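Dynamic resources are the mechanism by which Envoy discovers the configuration it needs over the `xDS` protocol. The configuration lives on a host called the Management Server and is exposed through the xDS API. Below is the basic skeleton of a purely dynamic configuration.

    {
      "lds_config": "{...}",
      "cds_config": "{...}",
      "ads_config": "{...}"
    }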


A single Management Server instance may have to respond to resource discovery requests from many different Envoy instances at the same time.

The configuration held on the Management Server therefore has to be tailored to each Envoy instance.

When an Envoy instance requests configuration, it reports information about itself in the request message, for example its `id`, `cluster`, `metadata`, and `locality`. This information is defined in the Bootstrap configuration file, in the dedicated top-level `node{...}` section:

    node:
      id: …                       # An opaque node identifier for the Envoy node.
      cluster: …                  # Defines the local service cluster name where Envoy is running.
      metadata: {…}               # Opaque metadata extending the node identifier. Envoy will pass this directly to the management server.
      locality:                   # Locality specifying where the Envoy instance is running.
        region: …
        zone: …
        sub_zone: …
      user_agent_name: …          # Free-form string that identifies the entity requesting config. E.g. "envoy" or "grpc"
      user_agent_version: …       # Free-form string that identifies the version of the entity requesting config. E.g. "1.12.2" or "abcd1234", or "SpecialEnvoyBuild"
      user_agent_build_version:   # Structured version of the entity requesting config.
        version: …
        metadata: {…}
      extensions: [ ]             # List of extensions and their versions supported by the node.
      client_features: [ ]
      listening_addresses: [ ]    # Known listening ports on the node as a generic hint to the management server for filtering listeners to be returned
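How a management server might use the reported node information can be sketched as a simple lookup. This is an assumption-laden illustration: `SNAPSHOTS`, `EMPTY_SNAPSHOT`, and `select_snapshot` are hypothetical names, the request is modelled as a plain dictionary rather than a real `DiscoveryRequest` message, and real control planes usually key on more than the node `id`.

```python
# Hypothetical sketch: pick a configuration snapshot by the node id in a request.
# The request is a plain dict standing in for a DiscoveryRequest message.
SNAPSHOTS = {
    "envoy_front_proxy": {
        "listeners": ["listener_http"],
        "clusters": ["webcluster"],
    },
}
EMPTY_SNAPSHOT = {"listeners": [], "clusters": []}


def select_snapshot(request):
    """Return the snapshot for the requesting node, or an empty one if unknown."""
    node_id = request.get("node", {}).get("id", "")
    return SNAPSHOTS.get(node_id, EMPTY_SNAPSHOT)


known = select_snapshot({"node": {"id": "envoy_front_proxy", "cluster": "webcluster"}})
unknown = select_snapshot({"node": {"id": "some_other_proxy"}})
print(known["clusters"], unknown["clusters"])
```

The point is only that the `node` section is what lets one server hand different Envoy instances different configuration.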

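2. File-Based Dynamic Configuration

Besides static configuration, Envoy supports dynamic configuration, which is one of its core features. This section shows how to convert a static Envoy configuration into a dynamic one so that Envoy picks up updates automatically.

We start by switching the configuration to `EDS`, so that Envoy adds endpoints dynamically based on the contents of a configuration file.

2.1 EDS dynamic configuration example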

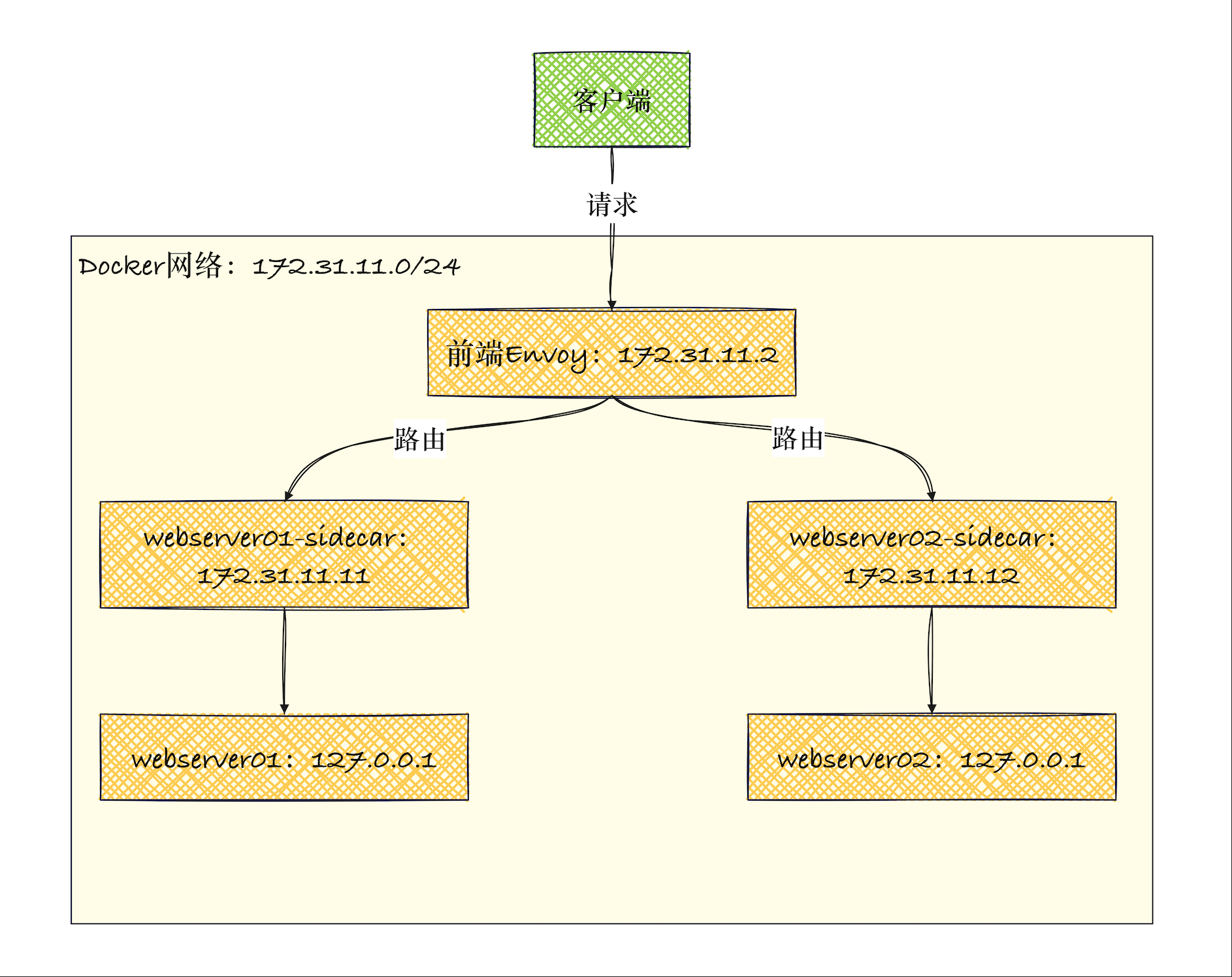

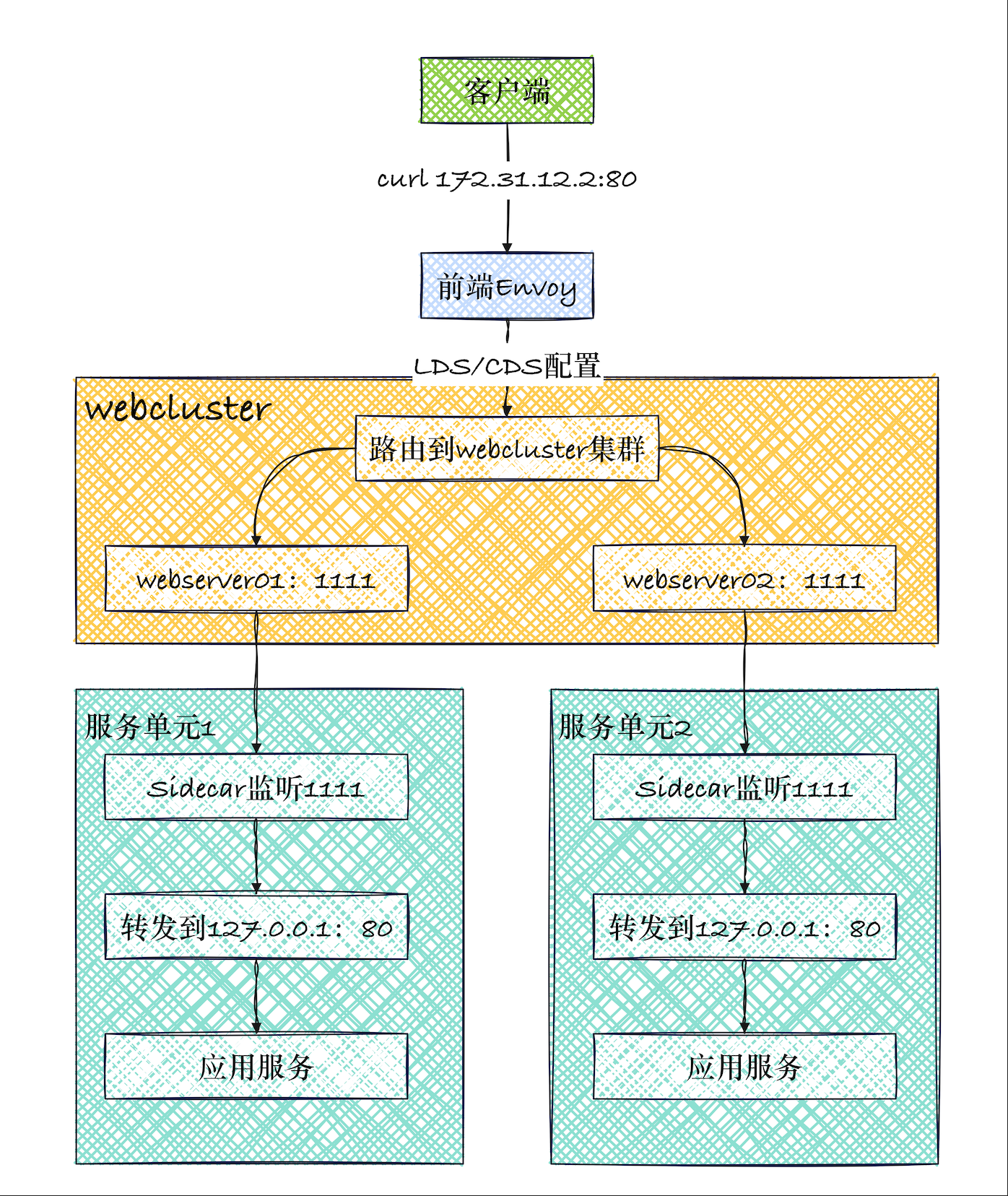

Here docker-compose is used to imitate the Kubernetes `sidecar` pattern. A front Envoy acts as the ingress gateway and accepts all external traffic, discovering the back-end web services dynamically from a file. Each web service has its own sidecar and shares the sidecar's network stack through `network_mode`.

2.1.1 ClusterID

First, a basic Envoy configuration file:

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: web_service_01
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: webcluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

The `clusters` section is not configured yet, because the cluster members will be discovered through `EDS`.

First we add a node section so that Envoy can be identified and given its own configuration; with dynamic configuration every Envoy instance needs a unique **`id`**. Put the following at the top of the configuration file:

    node:
      id: front_proxy
      cluster: dujie_Cluster

Besides `id` and `cluster`, location information such as `region`, `zone`, and `sub_zone` can also be declared.

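2.1.2 Preparing front-envoy

The Endpoint Discovery Service (EDS) is an `xDS` management server, based on a `gRPC` or `REST-JSON API` server, that Envoy uses to fetch cluster members. Cluster members are called "endpoints" in Envoy terminology. For each cluster, Envoy fetches the endpoints from the discovery service. EDS is the preferred service discovery mechanism:

- Envoy has explicit knowledge of each upstream host (as opposed to routing through a DNS-resolved load balancer) and can make more intelligent load balancing decisions.

- Extra attributes carried in the discovery API response for each host tell Envoy the host's load balancing weight, canary status, zone, and so on. These attributes are used globally by the Envoy mesh during load balancing, statistics gathering, and similar tasks.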

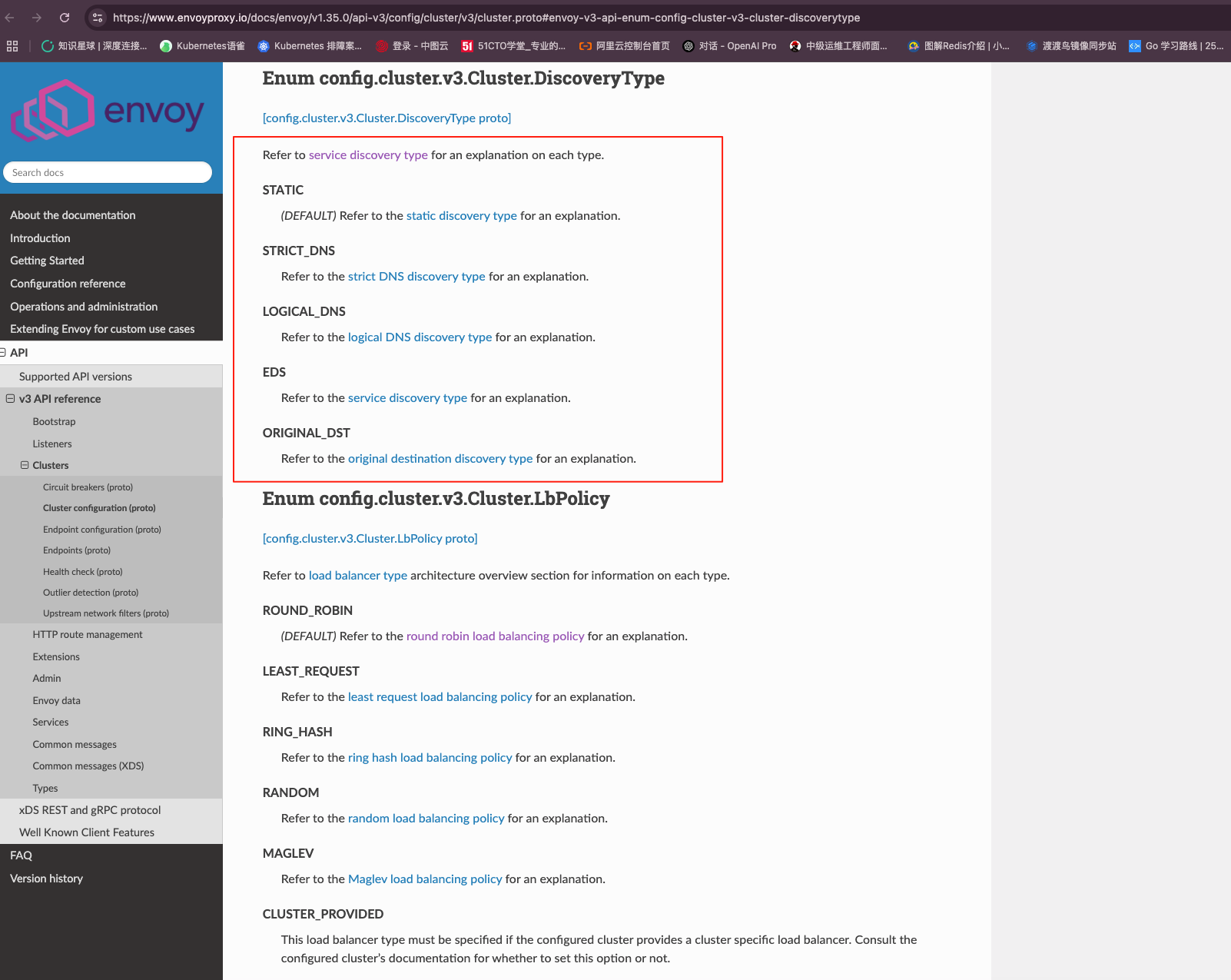

Next we define the `EDS` configuration, which lets us control the upstream cluster data dynamically. The earlier static configuration looked like this:

    clusters:
    - name: targetCluster
      connect_timeout: 0.25s
      type: STRICT_DNS
      dns_lookup_family: V4_ONLY
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: targetCluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: xxxx
                  port_value: 80
          - endpoint:
              address:
                socket_address:
                  address: xxxx
                  port_value: 80

To turn this static configuration into a dynamic one, switch to the `EDS`-based `eds_cluster_config` property and change the type to `EDS`. Add the following cluster configuration to the end of the Envoy configuration:

    clusters:
    - name: webcluster
      connect_timeout: 0.25s
      type: EDS
      lb_policy: ROUND_ROBIN
      eds_cluster_config:
        service_name: webcluster
        eds_config:
          path: '/etc/envoy/eds.conf.d/eds.yaml'

Setting `type: EDS` marks this as an `EDS`-based cluster.

The `eds_cluster_config` property then holds the `EDS` settings. `service_name` is optional; if it is not set, the cluster name is used, and the value is what gets presented to the `EDS` service. `eds_config` defines the source of `EDS` updates. Here the source is a local file, so the `path` field points at it (newer Envoy releases also provide `path_config_source`, which wraps the same path). The file is `/etc/envoy/eds.conf.d/eds.yaml`; Envoy watches it and updates the endpoints automatically when it is replaced.

A `watched_directory` can additionally be configured (inside `path_config_source`) to watch a directory for changes: when a file is moved into that directory, the path is reloaded. Some deployment scenarios require this. For example, when `xDS` resources are loaded from a `Kubernetes ConfigMap`, the following setup might be used:

- Store `xds.yaml` inside a ConfigMap.
- Mount the ConfigMap at `/config_map/xds`.
- Configure the path `/config_map/xds/xds.yaml`.
- Configure the watched directory `/config_map/xds`.

This makes Envoy watch for moves in the containing directory, which is necessary because of the way Kubernetes manages ConfigMap symlinks during atomic updates.

The upstream server IPs now come from `/etc/envoy/eds.conf.d/eds.yaml`. Create an eds.yaml file with the following content:

    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 1111

For now only one endpoint, **`172.31.11.11`**, is defined, on port **`1111`**. front-envoy therefore sends requests to port 1111 of that sidecar container, and the sidecar forwards them to the application on port 8080 in the shared local network namespace. The file supplies its resources in `DiscoveryResponse` format.
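If the endpoint list comes from a script rather than a hand-edited file, the same structure can be generated. The sketch below is illustrative: `build_eds_resources` is a hypothetical helper, and it emits JSON on the assumption that the YAML loader accepts JSON-style flow syntax, which keeps the example free of third-party libraries. The field names mirror the eds.yaml above.

```python
import json

# Real v3 type URL for endpoint assignments, as used in eds.yaml above.
CLA_TYPE = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"


def build_eds_resources(cluster_name, endpoints):
    """Build the DiscoveryResponse-style document for one cluster.

    `endpoints` is a list of (address, port) tuples.
    """
    lb_endpoints = [
        {"endpoint": {"address": {"socket_address": {"address": host, "port_value": port}}}}
        for host, port in endpoints
    ]
    return {
        "resources": [
            {
                "@type": CLA_TYPE,
                "cluster_name": cluster_name,
                "endpoints": [{"lb_endpoints": lb_endpoints}],
            }
        ]
    }


document = build_eds_resources("webcluster", [("172.31.11.11", 1111)])
print(json.dumps(document, indent=2))
```

Adding the second sidecar later is then a matter of passing `("172.31.11.12", 1111)` as a second tuple.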

The complete front-envoy.yaml file is therefore:

    node:
      id: envoy_front_proxy
      cluster: test_Cluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 80 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: web_service_01
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: webcluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

      clusters:
      - name: webcluster
        connect_timeout: 0.25s
        type: EDS
        lb_policy: ROUND_ROBIN
        eds_cluster_config:
          service_name: webcluster
          eds_config:
            path: '/etc/envoy/eds.conf.d/eds.yaml'

2.1.3 Preparing sidecar-envoy

The sidecar accepts the requests passed in by front-envoy and forwards them to the web endpoint that shares its network namespace (that is, port 8080 on the local host).

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 1111 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 8080 }

2.1.4 Preparing docker-compose

With the configuration in place, the Envoy proxies can be started for testing using the docker-compose file below.

The `network_mode: service:<name>` parameter shares the network namespace, so each web container has the same IP address as its sidecar container.

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy:v1.35.0
        environment:
        - ENVOY_UID=0
        - ENVOY_GID=0
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        - ./eds.conf.d/:/etc/envoy/eds.conf.d/
        networks:
          envoymesh:
            ipv4_address: 172.31.11.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01-sidecar
        - webserver02-sidecar

      webserver01-sidecar:
        image: envoyproxy/envoy:v1.35.0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.11.11
            aliases:
            - webserver01-sidecar

      webserver01:
        image: test/demoapp:v1.0
        environment:
        - PORT=8080
        - HOST=127.0.0.1
        network_mode: "service:webserver01-sidecar"
        depends_on:
        - webserver01-sidecar

      webserver02-sidecar:
        image: envoyproxy/envoy:v1.35.0
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.11.12
            aliases:
            - webserver02-sidecar

      webserver02:
        image: test/demoapp:v1.0
        environment:
        - PORT=8080
        - HOST=127.0.0.1
        network_mode: "service:webserver02-sidecar"
        depends_on:
        - webserver02-sidecar

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
          - subnet: 172.31.11.0/24

2.1.5 Start and test

    [root@VM_24_47_tlinux /usr/local/src]# docker-compose up
    [root@VM_24_47_tlinux /usr/local/src]# docker ps
    CONTAINER ID   IMAGE                      COMMAND                  CREATED         STATUS         PORTS       NAMES
    eabc69a51059   envoyproxy/envoy:v1.35.0   "/docker-entrypoint.…"   5 seconds ago   Up 3 seconds   10000/tcp   eds-filesystem-envoy-1
    c1db8e05655e   test/demoapp:v1.0          "/bin/sh -c 'python3…"   5 seconds ago   Up 4 seconds               eds-filesystem-webserver01-1
    c1fd8e26092d   test/demoapp:v1.0          "/bin/sh -c 'python3…"   5 seconds ago   Up 4 seconds               eds-filesystem-webserver02-1
    f75b32090d87   envoyproxy/envoy:v1.35.0   "/docker-entrypoint.…"   5 seconds ago   Up 4 seconds   10000/tcp   eds-filesystem-webserver01-sidecar-1
    93bf0e93e615   envoyproxy/envoy:v1.35.0   "/docker-entrypoint.…"   5 seconds ago   Up 4 seconds   10000/tcp   eds-filesystem-webserver02-sidecar-1

Send requests to the front proxy at 172.31.11.2. Every request is currently forwarded to 172.31.11.11, because that is the only address configured in eds.yaml.

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.11.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.11.11!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.11.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.11.11!!

The admin interface shows the same thing: at the moment 172.31.11.11 is the only endpoint.

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.11.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::false
    webcluster::eds_service_name::webcluster
    webcluster::172.31.11.11:1111::cx_active::1
    webcluster::172.31.11.11:1111::cx_connect_fail::0
    webcluster::172.31.11.11:1111::cx_total::1
    webcluster::172.31.11.11:1111::rq_active::0
    webcluster::172.31.11.11:1111::rq_error::0
    webcluster::172.31.11.11:1111::rq_success::2
    webcluster::172.31.11.11:1111::rq_timeout::0
    webcluster::172.31.11.11:1111::rq_total::2
    webcluster::172.31.11.11:1111::hostname::
    webcluster::172.31.11.11:1111::health_flags::healthy
    webcluster::172.31.11.11:1111::weight::1
    webcluster::172.31.11.11:1111::region::
    webcluster::172.31.11.11:1111::zone::
    webcluster::172.31.11.11:1111::sub_zone::
    webcluster::172.31.11.11:1111::canary::false
    webcluster::172.31.11.11:1111::priority::0
    webcluster::172.31.11.11:1111::success_rate::-1
    webcluster::172.31.11.11:1111::local_origin_success_rate::-1

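Prepare a second version of the file, eds.yaml.v2, in advance:

    resources:
    - "@type": type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment
      cluster_name: webcluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.11
                port_value: 1111
        - endpoint:
            address:
              socket_address:
                address: 172.31.11.12
                port_value: 1111

Then enter the front container and move the v2 file over eds.yaml. Envoy watches the file and reloads it when it is replaced by a move (an in-place edit is not picked up), which is how the new endpoint is discovered dynamically.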

    [root@VM_24_47_tlinux /usr/local/src]# docker exec -it eabc69a51059 bash
    root@eabc69a51059:/etc/envoy/eds.conf.d# cd /etc/envoy/eds.conf.d/
    root@eabc69a51059:/etc/envoy/eds.conf.d# mv eds.yaml.v2 eds.yaml
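The `mv` step can also be scripted. The sketch below is one way to do it under stated assumptions: `replace_atomically` is a hypothetical helper, the temporary file is created in the same directory as the target so that the final step is a rename rather than a copy, and the file mode is widened because `mkstemp` creates files readable only by their owner.

```python
import os
import tempfile


def replace_atomically(target_path, content):
    """Write `content` to a temp file next to `target_path`, then move it into place."""
    directory = os.path.dirname(os.path.abspath(target_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".eds-", suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as tmp_file:
            tmp_file.write(content)
        # mkstemp creates the file with mode 0600; make it readable by the proxy user.
        os.chmod(tmp_path, 0o644)
        # os.replace is a rename: the target is swapped in one step, never half-written.
        os.replace(tmp_path, target_path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise


if __name__ == "__main__":
    # Demonstration in a throwaway directory instead of /etc/envoy/eds.conf.d.
    with tempfile.TemporaryDirectory() as demo_dir:
        demo_target = os.path.join(demo_dir, "eds.yaml")
        replace_atomically(demo_target, "resources: []\n")
        with open(demo_target) as handle:
            print(handle.read(), end="")
```

Writing to a temporary name first means a reader never sees a partially written eds.yaml, and the final rename is the move event that the file watch reacts to.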

Verify the result. Both endpoints are now listed, which completes the file-based dynamic configuration.

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.11.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::false
    webcluster::eds_service_name::webcluster
    webcluster::172.31.11.11:1111::cx_active::1
    webcluster::172.31.11.11:1111::cx_connect_fail::0
    webcluster::172.31.11.11:1111::cx_total::1
    webcluster::172.31.11.11:1111::rq_active::0
    webcluster::172.31.11.11:1111::rq_error::0
    webcluster::172.31.11.11:1111::rq_success::2
    webcluster::172.31.11.11:1111::rq_timeout::0
    webcluster::172.31.11.11:1111::rq_total::2
    webcluster::172.31.11.11:1111::hostname::
    webcluster::172.31.11.11:1111::health_flags::healthy
    webcluster::172.31.11.11:1111::weight::1
    webcluster::172.31.11.11:1111::region::
    webcluster::172.31.11.11:1111::zone::
    webcluster::172.31.11.11:1111::sub_zone::
    webcluster::172.31.11.11:1111::canary::false
    webcluster::172.31.11.11:1111::priority::0
    webcluster::172.31.11.11:1111::success_rate::-1
    webcluster::172.31.11.11:1111::local_origin_success_rate::-1
    webcluster::172.31.11.12:1111::cx_active::1
    webcluster::172.31.11.12:1111::cx_connect_fail::0
    webcluster::172.31.11.12:1111::cx_total::1
    webcluster::172.31.11.12:1111::rq_active::0
    webcluster::172.31.11.12:1111::rq_error::0
    webcluster::172.31.11.12:1111::rq_success::2
    webcluster::172.31.11.12:1111::rq_timeout::0
    webcluster::172.31.11.12:1111::rq_total::2
    webcluster::172.31.11.12:1111::hostname::
    webcluster::172.31.11.12:1111::health_flags::healthy
    webcluster::172.31.11.12:1111::weight::1
    webcluster::172.31.11.12:1111::region::
    webcluster::172.31.11.12:1111::zone::
    webcluster::172.31.11.12:1111::sub_zone::
    webcluster::172.31.11.12:1111::canary::false
    webcluster::172.31.11.12:1111::priority::0
    webcluster::172.31.11.12:1111::success_rate::-1
    webcluster::172.31.11.12:1111::local_origin_success_rate::-1
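Reading the `/clusters` dump by eye gets tedious once there are more hosts. A small parser can pull out the per-host lines. This is a sketch under assumptions: `parse_clusters` is a hypothetical helper, it relies on the `cluster::host:port::stat::value` line shape visible in the output above, and it only handles IPv4-style `host:port` addresses.

```python
def parse_clusters(text):
    """Map cluster name -> {"host:port": {stat: value}} from /clusters text output."""
    clusters = {}
    for line in text.splitlines():
        parts = line.strip().split("::")
        # Host lines have four fields and a "host:port" in the second one;
        # cluster-level lines such as default_priority settings are skipped.
        if len(parts) == 4 and ":" in parts[1]:
            cluster, host, stat, value = parts
            clusters.setdefault(cluster, {}).setdefault(host, {})[stat] = value
    return clusters


sample = """webcluster::default_priority::max_connections::1024
webcluster::added_via_api::false
webcluster::172.31.11.11:1111::health_flags::healthy
webcluster::172.31.11.11:1111::hostname::
webcluster::172.31.11.12:1111::health_flags::healthy
"""
hosts = parse_clusters(sample)["webcluster"]
print(sorted(hosts))
```

In practice the `sample` string would be the body returned by the admin endpoint; comparing `sorted(hosts)` before and after the file move is a quick check that the new endpoint was picked up.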

2.2 LDS and CDS dynamic configuration example

LDS and CDS together make an almost fully dynamic Envoy configuration possible.

2.2.1 Define the front-envoy configuration file

- The Listener definitions are stored in one file, in the standard `DiscoveryResponse` format.
- The Cluster definitions are stored in another file, in the same `DiscoveryResponse` format.

The Envoy Bootstrap configuration below references both files:

    node:
      id: envoy_front_proxy
      cluster: test_Cluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:
        path: /etc/envoy/conf.d/lds.yaml   # dynamic listener configuration
      cds_config:
        path: /etc/envoy/conf.d/cds.yaml   # dynamic cluster configuration

- Purpose: load configuration dynamically through the filesystem

- Listeners (LDS): define how traffic is received and processed

- Clusters (CDS): define the back-end service clusters

2.2.2 Define the LDS and CDS configuration

LDS:

    resources:
    - "@type": type.googleapis.com/envoy.config.listener.v3.Listener
      name: listener_http
      address:
        socket_address: { address: 0.0.0.0, port_value: 80 }
      filter_chains:
      - filters:
        - name: envoy.filters.network.http_connection_manager
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
            stat_prefix: ingress_http
            route_config:
              name: local_route
              virtual_hosts:
              - name: local_service
                domains: ["*"]
                routes:
                - match:
                    prefix: "/"
                  route:
                    cluster: webcluster
            http_filters:
            - name: envoy.filters.http.router
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

This creates an HTTP listener that routes all traffic to webcluster.

CDS:

    resources:
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: webcluster
      connect_timeout: 1s
      type: STRICT_DNS
      load_assignment:
        cluster_name: webcluster
        endpoints:
        - lb_endpoints:
          - endpoint:
              address:
                socket_address:
                  address: webserver01
                  port_value: 1111
          - endpoint:
              address:
                socket_address:
                  address: webserver02
                  port_value: 1111

- Purpose: defines the back-end cluster named `webcluster`
- Service discovery: the container names are resolved to IP addresses through DNS

2.2.3 Preparing sidecar-envoy

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 1111 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 80 }

2.2.4 Preparing docker-compose

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy:v1.35.0
        environment:
        - ENVOY_UID=0
        - ENVOY_GID=0
        privileged: true
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        - ./conf.d/:/etc/envoy/conf.d/
        networks:
          envoymesh:
            ipv4_address: 172.31.12.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver01-app
        - webserver02
        - webserver02-app

      webserver01:
        image: envoyproxy/envoy:v1.35.0
        privileged: true
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.12.11
            aliases:
            - webserver01-sidecar

      webserver01-app:
        image: test/demoapp:v1.0
        environment:
        - PORT=80
        - HOST=127.0.0.1
        network_mode: "service:webserver01"
        depends_on:
        - webserver01

      webserver02:
        image: envoyproxy/envoy:v1.35.0
        privileged: true
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.12.12
            aliases:
            - webserver02-sidecar

      webserver02-app:
        image: test/demoapp:v1.0
        environment:
        - PORT=80
        - HOST=127.0.0.1
        network_mode: "service:webserver02"
        depends_on:
        - webserver02

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
          - subnet: 172.31.12.0/24

2.2.5 Access test

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver02, ServerIP: 172.31.12.12!
    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.12.2
    test demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: webserver01, ServerIP: 172.31.12.11!

    [root@VM_24_47_tlinux /usr/local/src/servicemesh_in_practise/Dynamic-Configuration/lds-cds-filesystem]# curl 172.31.12.2:9901/clusters
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.12.11:1111::cx_active::3
    webcluster::172.31.12.11:1111::cx_connect_fail::0
    webcluster::172.31.12.11:1111::cx_total::6
    webcluster::172.31.12.11:1111::rq_active::0
    webcluster::172.31.12.11:1111::rq_error::0
    webcluster::172.31.12.11:1111::rq_success::6
    webcluster::172.31.12.11:1111::rq_timeout::0
    webcluster::172.31.12.11:1111::rq_total::6
    webcluster::172.31.12.11:1111::hostname::webserver01
    webcluster::172.31.12.11:1111::health_flags::healthy
    webcluster::172.31.12.11:1111::weight::1
    webcluster::172.31.12.11:1111::region::
    webcluster::172.31.12.11:1111::zone::
    webcluster::172.31.12.11:1111::sub_zone::
    webcluster::172.31.12.11:1111::canary::false
    webcluster::172.31.12.11:1111::priority::0
    webcluster::172.31.12.11:1111::success_rate::-1
    webcluster::172.31.12.11:1111::local_origin_success_rate::-1
    webcluster::172.31.12.12:1111::cx_active::2
    webcluster::172.31.12.12:1111::cx_connect_fail::0
    webcluster::172.31.12.12:1111::cx_total::5
    webcluster::172.31.12.12:1111::rq_active::0
    webcluster::172.31.12.12:1111::rq_error::0
    webcluster::172.31.12.12:1111::rq_success::6
    webcluster::172.31.12.12:1111::rq_timeout::0
    webcluster::172.31.12.12:1111::rq_total::6
    webcluster::172.31.12.12:1111::hostname::webserver02
    webcluster::172.31.12.12:1111::health_flags::healthy
    webcluster::172.31.12.12:1111::weight::1
    webcluster::172.31.12.12:1111::region::
    webcluster::172.31.12.12:1111::zone::
    webcluster::172.31.12.12:1111::sub_zone::
    webcluster::172.31.12.12:1111::canary::false
    webcluster::172.31.12.12:1111::priority::0
    webcluster::172.31.12.12:1111::success_rate::-1
    webcluster::172.31.12.12:1111::local_origin_success_rate::-1

3. API-Based Dynamic Configuration

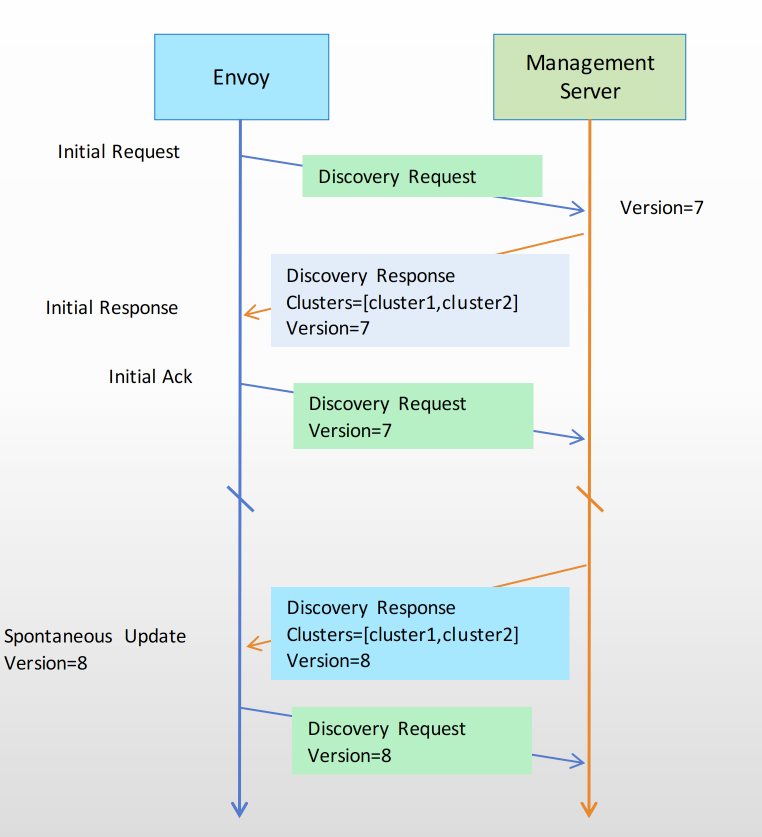

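Envoy supports specifying a separate `gRPC ApiConfigSource` for each `xDS API`, pointing at an upstream cluster that corresponds to the management server.

- This starts an independent bidirectional gRPC stream for each xDS resource type, possibly to different management servers.

- Each stream maintains its own resource version; there is no version shared across resource types.

- Without ADS, each resource type may be at a different version, because the Envoy API allows EDS/RDS resource configurations to point at different `ConfigSources`.

- The APIs are delivered with eventual consistency.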
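The per-stream versioning can be illustrated with a small model of the client side of the protocol. The field names (`version_info`, `response_nonce`, `type_url`, `error_detail`) follow `DiscoveryRequest` and `DiscoveryResponse`; everything else, including the `StreamState` class and the dictionary-shaped messages, is a hypothetical simplification rather than Envoy's implementation.

```python
LDS = "type.googleapis.com/envoy.config.listener.v3.Listener"
CDS = "type.googleapis.com/envoy.config.cluster.v3.Cluster"


class StreamState:
    """Tracks the last accepted version for one resource type (one stream)."""

    def __init__(self, type_url):
        self.type_url = type_url
        self.version_info = ""  # nothing accepted yet

    def next_request(self, response, accepted):
        """Build the ACK (accepted=True) or NACK (accepted=False) for a response."""
        request = {"type_url": self.type_url, "response_nonce": response["nonce"]}
        if accepted:
            # ACK: adopt the new version and echo it back.
            self.version_info = response["version_info"]
        else:
            # NACK: keep the previous version and explain the rejection.
            request["error_detail"] = {
                "message": "rejected version " + response["version_info"]
            }
        request["version_info"] = self.version_info
        return request


lds, cds = StreamState(LDS), StreamState(CDS)
ack = lds.next_request({"version_info": "7", "nonce": "a"}, accepted=True)
nack = cds.next_request({"version_info": "3", "nonce": "b"}, accepted=False)
print(ack["version_info"], repr(nack["version_info"]))
```

Each `StreamState` advances on its own, so after these two exchanges the listener stream is at version "7" while the cluster stream has still accepted nothing.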

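Taking LDS as an example: it lets Listeners be discovered and loaded dynamically, while the routes inside them can either be supplied directly by the discovered Listener or be discovered in turn through RDS.

The LDS configuration format is shown below; CDS and the others follow the same pattern.

    dynamic_resources:
      lds_config:
        resource_api_version: ...      # API version of the xDS resources; use V3 with Envoy 1.19 and later
        api_config_source:
          api_type: ...                # the API can be fetched over REST or gRPC; supported types include REST, GRPC, and DELTA_GRPC
          transport_api_version: ...   # API version of the xDS transport protocol; use V3 with Envoy 1.19 and later
          rate_limit_settings: {...}   # rate limiting
          grpc_services:               # one or more sources that provide the gRPC service
          - envoy_grpc:                # Envoy's built-in gRPC client; only one of envoy_grpc and google_grpc may be used
              cluster_name: ...        # name of the gRPC cluster
            google_grpc:               # Google's C++ gRPC client
            timeout: ...               # gRPC timeout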

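Note: the Management Server (control plane) that provides the gRPC API must itself be defined as a cluster on Envoy, and the Envoy instance sends its xDS API requests to that cluster.

- These management servers normally have to be provided as static resources.

- This is similar to DHCP, where the address of the DHCP server must be configured statically and cannot be obtained through DHCP itself.

3.1 Subscribing through a gRPC management server (LDS and CDS)

A gRPC-based subscription requests its configuration from a dedicated Management Server.

The example configuration below uses LDS and CDS to fetch the Listener and Cluster configuration dynamically.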

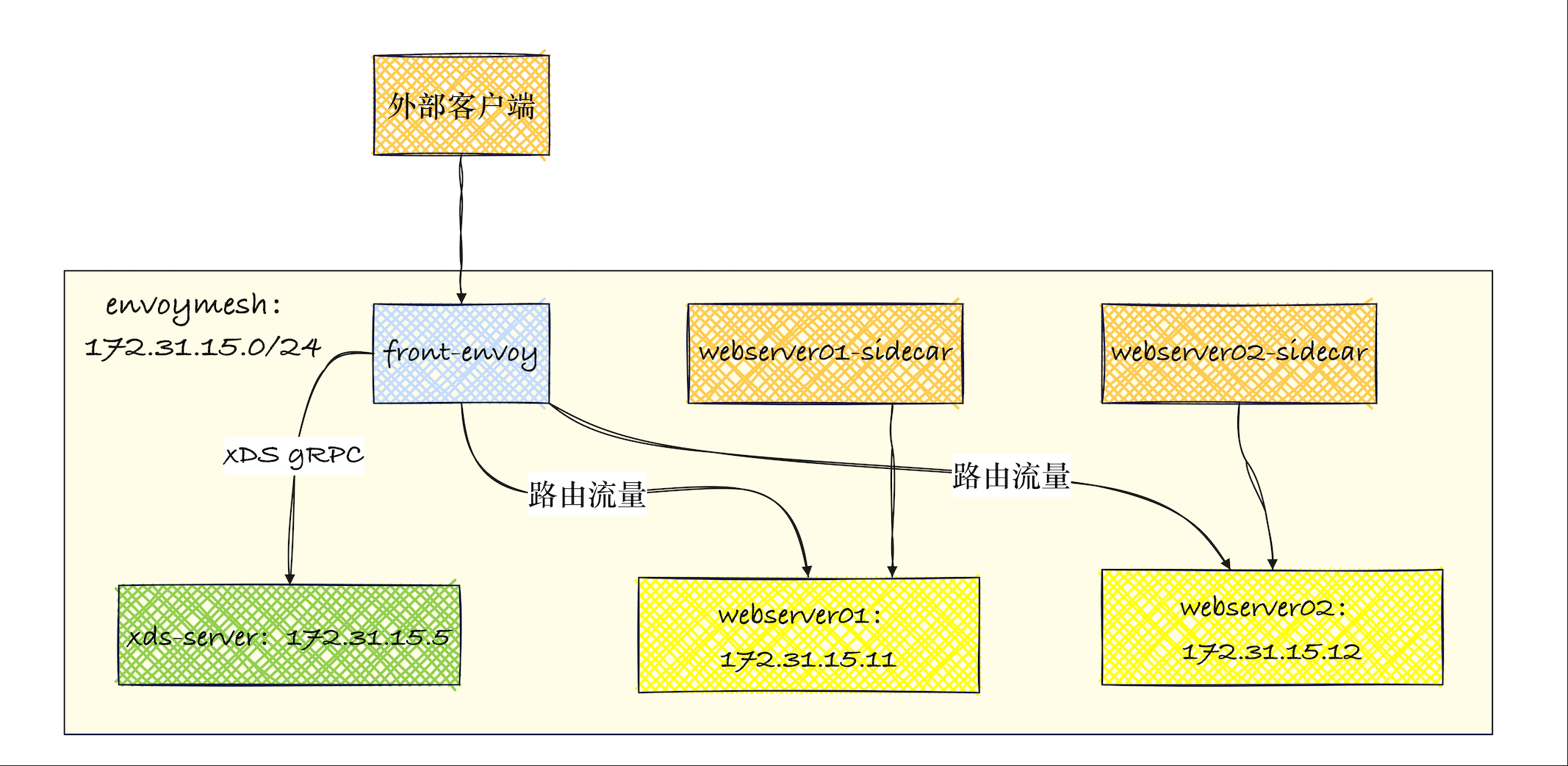

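- **front-envoy**: the edge proxy. It receives external traffic and obtains its routing configuration dynamically through xDS.
- **xds-server**: the control plane. It serves the LDS (listener) and CDS (cluster) configuration.
- **webserver01/02**: two back-end services (running `demoapp:v1.0`).
- **webserverXX-sidecar**: the Envoy sidecar of each back-end service, configured statically to proxy to the local service (127.0.0.1:80).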

3.1.1 front-envoy configuration file

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      lds_config:
        resource_api_version: V3
        api_config_source:
          api_type: GRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster
      cds_config:
        resource_api_version: V3
        api_config_source:
          api_type: GRPC
          transport_api_version: V3
          grpc_services:
          - envoy_grpc:
              cluster_name: xds_cluster

    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver   # xDS server address
                    port_value: 18000

3.1.2 sidecar-envoy file

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 1111 }   # the sidecar listens on port 1111
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 80 }

3.1.3 docker-compose file

- envoy (front proxy):
  - uses the `front-envoy.yaml` configuration
  - depends on xdsserver for its dynamic configuration
- webserver01/02:
  - runs the application (listening on port 80)
- webserverXX-sidecar:
  - uses the `envoy-sidecar-proxy.yaml` configuration
  - `network_mode: service:webserverXX` shares the network namespace (and therefore the IP) with the back end
- xdsserver:
  - provides the xDS service (port 18000)
  - loads the static configuration `resources/config.yaml`

The docker-compose file:

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy:v1.29.2
        environment:
        - ENVOY_UID=0
        - ENVOY_GID=0
        privileged: true
        volumes:
        - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        networks:
          envoymesh:
            ipv4_address: 172.31.15.2
            aliases:
            - front-proxy
        depends_on:
        - webserver01
        - webserver02
        - xdsserver

      webserver01:
        image: test/demoapp:v1.0
        environment:
        - PORT=80
        - HOST=127.0.0.1
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.15.11

      webserver01-sidecar:
        image: envoyproxy/envoy:v1.29.2
        privileged: true
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver01"
        depends_on:
        - webserver01

      webserver02:
        image: test/demoapp:v1.0
        environment:
        - PORT=80
        - HOST=127.0.0.1
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.15.12

      webserver02-sidecar:
        image: envoyproxy/envoy:v1.29.2
        privileged: true
        volumes:
        - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver02"
        depends_on:
        - webserver02

      xdsserver:
        image: test/envoy-xds-server:v0.2
        environment:
        - SERVER_PORT=18000
        - NODE_ID=envoy_front_proxy
        - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
        volumes:
        - ./resources:/etc/envoy-xds-server/config/
        networks:
          envoymesh:
            ipv4_address: 172.31.15.5
            aliases:
            - xdsserver
            - xds-service
        expose:
        - "18000"

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
          - subnet: 172.31.15.0/24

3.1.4 Preparing the xDS server configuration

In the docker-compose file, the xDS server mounts the local resources directory.

xDS server (`xdsserver`)

- Role: the core component of the control plane
- Image: `test/envoy-xds-server:v0.2`
- Function: centralized configuration management
- Configuration source: `resources/config.yaml`
- Service port: 18000 (gRPC)

Two versions of the configuration are prepared:

    [root@VM_24_47_tlinux /usr/local/src]# cat resources/config.yaml
    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /              # match all paths
          clusters:
          - webcluster           # target cluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.15.11  # webserver01 IP
          port: 1111             # sidecar listening port

    [root@VM_24_47_tlinux /usr/local/src]# cat resources/config.yaml-v2
    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.15.11
          port: 1111
        - address: 172.31.15.12
          port: 1111

3.3.5 测试验证

运行后查看 clusters 端点,可以看到当前只有 15.11,还有 xds server 的端点

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.15.2:9901/clusters
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.15.5:18000::cx_active::1
    xds_cluster::172.31.15.5:18000::cx_connect_fail::0
    xds_cluster::172.31.15.5:18000::cx_total::1
    xds_cluster::172.31.15.5:18000::rq_active::4
    xds_cluster::172.31.15.5:18000::rq_error::0
    xds_cluster::172.31.15.5:18000::rq_success::0
    xds_cluster::172.31.15.5:18000::rq_timeout::0
    xds_cluster::172.31.15.5:18000::rq_total::4
    xds_cluster::172.31.15.5:18000::hostname::xdsserver
    xds_cluster::172.31.15.5:18000::health_flags::healthy
    xds_cluster::172.31.15.5:18000::weight::1
    xds_cluster::172.31.15.5:18000::region::
    xds_cluster::172.31.15.5:18000::zone::
    xds_cluster::172.31.15.5:18000::sub_zone::
    xds_cluster::172.31.15.5:18000::canary::false
    xds_cluster::172.31.15.5:18000::priority::0
    xds_cluster::172.31.15.5:18000::success_rate::-1
    xds_cluster::172.31.15.5:18000::local_origin_success_rate::-1
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.15.11:1111::cx_active::4
    webcluster::172.31.15.11:1111::cx_connect_fail::0
    webcluster::172.31.15.11:1111::cx_total::4
    webcluster::172.31.15.11:1111::rq_active::0
    webcluster::172.31.15.11:1111::rq_error::0
    webcluster::172.31.15.11:1111::rq_success::6
    webcluster::172.31.15.11:1111::rq_timeout::0
    webcluster::172.31.15.11:1111::rq_total::6
    webcluster::172.31.15.11:1111::hostname::
    webcluster::172.31.15.11:1111::health_flags::healthy
    webcluster::172.31.15.11:1111::weight::1
    webcluster::172.31.15.11:1111::region::
    webcluster::172.31.15.11:1111::zone::
    webcluster::172.31.15.11:1111::sub_zone::
    webcluster::172.31.15.11:1111::canary::false
    webcluster::172.31.15.11:1111::priority::0
    webcluster::172.31.15.11:1111::success_rate::-1
    webcluster::172.31.15.11:1111::local_origin_success_rate::-1

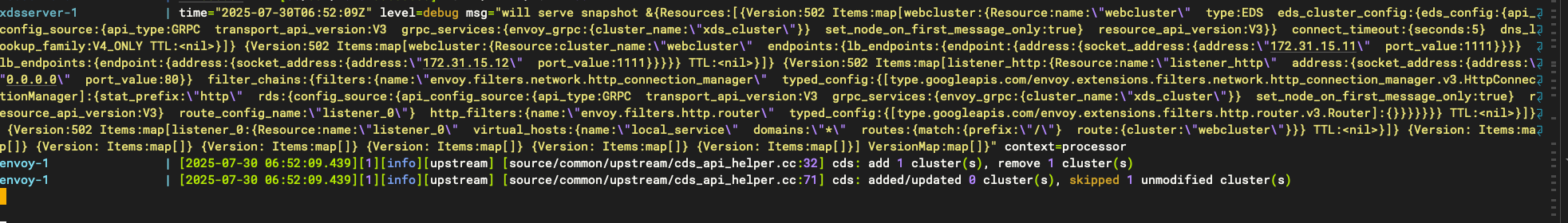
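The `/clusters` output is long, so it can help to reduce it to just the hosts of each cluster. The following is a minimal Python sketch under the assumption that host lines have the form `cluster::ip:port::stat::value` as in the output above (IPv4 only). The helper name `parse_cluster_hosts` and the shortened sample text are illustrative, not part of Envoy.

```python
from collections import defaultdict

def parse_cluster_hosts(text: str) -> dict:
    """Return {cluster: {host: {stat: value}}} from /clusters text output."""
    hosts = defaultdict(lambda: defaultdict(dict))
    for line in text.splitlines():
        parts = line.strip().split("::")
        # Host lines have four fields and an ip:port in the second one;
        # cluster-level lines (e.g. added_via_api, default_priority) do not.
        if len(parts) == 4 and ":" in parts[1]:
            cluster, host, stat, value = parts
            hosts[cluster][host][stat] = value
    return hosts

# A shortened excerpt of the output shown above.
sample = """\
xds_cluster::added_via_api::false
xds_cluster::172.31.15.5:18000::health_flags::healthy
webcluster::default_priority::max_connections::1024
webcluster::added_via_api::true
webcluster::172.31.15.11:1111::rq_total::6
webcluster::172.31.15.11:1111::health_flags::healthy
"""

parsed = parse_cluster_hosts(sample)
for cluster, members in parsed.items():
    for host, stats in members.items():
        print(cluster, host, stats.get("health_flags"))
```

In practice the text would come from `curl 172.31.15.2:9901/clusters` rather than from an inline string.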

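Now add 172.31.15.12 to the config and check whether Envoy discovers it automatically.

    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.15.11
          port: 1111
        - address: 172.31.15.12
          port: 1111

After the change, the logs show that the configuration was updated automatically.

Query the endpoints again: 172.31.15.12 is now listed as well.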

    [root@VM_24_47_tlinux /usr/local/src]# curl 172.31.15.2:9901/clusters
    xds_cluster::observability_name::xds_cluster
    xds_cluster::default_priority::max_connections::1024
    xds_cluster::default_priority::max_pending_requests::1024
    xds_cluster::default_priority::max_requests::1024
    xds_cluster::default_priority::max_retries::3
    xds_cluster::high_priority::max_connections::1024
    xds_cluster::high_priority::max_pending_requests::1024
    xds_cluster::high_priority::max_requests::1024
    xds_cluster::high_priority::max_retries::3
    xds_cluster::added_via_api::false
    xds_cluster::172.31.15.5:18000::cx_active::1
    xds_cluster::172.31.15.5:18000::cx_connect_fail::0
    xds_cluster::172.31.15.5:18000::cx_total::1
    xds_cluster::172.31.15.5:18000::rq_active::4
    xds_cluster::172.31.15.5:18000::rq_error::0
    xds_cluster::172.31.15.5:18000::rq_success::0
    xds_cluster::172.31.15.5:18000::rq_timeout::0
    xds_cluster::172.31.15.5:18000::rq_total::4
    xds_cluster::172.31.15.5:18000::hostname::xdsserver
    xds_cluster::172.31.15.5:18000::health_flags::healthy
    xds_cluster::172.31.15.5:18000::weight::1
    xds_cluster::172.31.15.5:18000::region::
    xds_cluster::172.31.15.5:18000::zone::
    xds_cluster::172.31.15.5:18000::sub_zone::
    xds_cluster::172.31.15.5:18000::canary::false
    xds_cluster::172.31.15.5:18000::priority::0
    xds_cluster::172.31.15.5:18000::success_rate::-1
    xds_cluster::172.31.15.5:18000::local_origin_success_rate::-1
    webcluster::observability_name::webcluster
    webcluster::default_priority::max_connections::1024
    webcluster::default_priority::max_pending_requests::1024
    webcluster::default_priority::max_requests::1024
    webcluster::default_priority::max_retries::3
    webcluster::high_priority::max_connections::1024
    webcluster::high_priority::max_pending_requests::1024
    webcluster::high_priority::max_requests::1024
    webcluster::high_priority::max_retries::3
    webcluster::added_via_api::true
    webcluster::172.31.15.11:1111::cx_active::4
    webcluster::172.31.15.11:1111::cx_connect_fail::0
    webcluster::172.31.15.11:1111::cx_total::4
    webcluster::172.31.15.11:1111::rq_active::0
    webcluster::172.31.15.11:1111::rq_error::0
    webcluster::172.31.15.11:1111::rq_success::6
    webcluster::172.31.15.11:1111::rq_timeout::0
    webcluster::172.31.15.11:1111::rq_total::6
    webcluster::172.31.15.11:1111::hostname::
    webcluster::172.31.15.11:1111::health_flags::healthy
    webcluster::172.31.15.11:1111::weight::1
    webcluster::172.31.15.11:1111::region::
    webcluster::172.31.15.11:1111::zone::
    webcluster::172.31.15.11:1111::sub_zone::
    webcluster::172.31.15.11:1111::canary::false
    webcluster::172.31.15.11:1111::priority::0
    webcluster::172.31.15.11:1111::success_rate::-1
    webcluster::172.31.15.11:1111::local_origin_success_rate::-1
    webcluster::172.31.15.12:1111::cx_active::0
    webcluster::172.31.15.12:1111::cx_connect_fail::0
    webcluster::172.31.15.12:1111::cx_total::0
    webcluster::172.31.15.12:1111::rq_active::0
    webcluster::172.31.15.12:1111::rq_error::0
    webcluster::172.31.15.12:1111::rq_success::0
    webcluster::172.31.15.12:1111::rq_timeout::0
    webcluster::172.31.15.12:1111::rq_total::0
    webcluster::172.31.15.12:1111::hostname::
    webcluster::172.31.15.12:1111::health_flags::healthy
    webcluster::172.31.15.12:1111::weight::1
    webcluster::172.31.15.12:1111::region::
    webcluster::172.31.15.12:1111::zone::
    webcluster::172.31.15.12:1111::sub_zone::
    webcluster::172.31.15.12:1111::canary::false
    webcluster::172.31.15.12:1111::priority::0
    webcluster::172.31.15.12:1111::success_rate::-1
    webcluster::172.31.15.12:1111::local_origin_success_rate::-1
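Comparing the two outputs by eye is tedious. As a rough aid, the Python sketch below computes which endpoints of a cluster were added or removed between two `/clusters` snapshots. The helper `endpoints_of` and the one-line-per-host sample strings are assumptions made for illustration; real snapshots would be the full command output.

```python
def endpoints_of(text: str, cluster: str) -> set:
    """Collect the ip:port members of one cluster from /clusters text output."""
    members = set()
    for line in text.splitlines():
        parts = line.strip().split("::")
        # Host lines look like: cluster::ip:port::stat::value
        if len(parts) == 4 and parts[0] == cluster and ":" in parts[1]:
            members.add(parts[1])
    return members

# Shortened stand-ins for the outputs before and after editing config.yaml.
before = "webcluster::172.31.15.11:1111::health_flags::healthy\n"
after = (
    "webcluster::172.31.15.11:1111::health_flags::healthy\n"
    "webcluster::172.31.15.12:1111::health_flags::healthy\n"
)

added = endpoints_of(after, "webcluster") - endpoints_of(before, "webcluster")
removed = endpoints_of(before, "webcluster") - endpoints_of(after, "webcluster")
print("added:", sorted(added))
print("removed:", sorted(removed))
```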

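3.2 Understanding ADS

ADS (Aggregated Discovery Service) lets Envoy receive all xDS resource types over a single gRPC stream from one management server. Because every resource type travels on the same stream, the management server can control the order in which updates are delivered, which resolves the ordering dependencies that arise when the types are served independently. Production service meshes such as Istio build on it.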

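1. Solving the update-ordering problem

- Typical scenario: updating a cluster has to happen in order:

  - First update the cluster definition (CDS)

  - Then update the endpoint information (EDS)

  - Finally update the routes (RDS)

- ADS approach: push the configuration in dependency order over a single gRPC stream, so that updates do not arrive out of order.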
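The ordering idea can be sketched in a few lines of Python. This is only an illustration of sorting pending updates by resource type before pushing them on one stream; `order_updates` and the `pending` list are hypothetical names, and a real management server would also wait for Envoy's ACK between steps. LDS is included between EDS and RDS because Envoy's xDS documentation recommends that listeners follow clusters and endpoints, and routes follow listeners.

```python
# v3 type URLs in the push order recommended for make-before-break updates.
CDS = "type.googleapis.com/envoy.config.cluster.v3.Cluster"
EDS = "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment"
LDS = "type.googleapis.com/envoy.config.listener.v3.Listener"
RDS = "type.googleapis.com/envoy.config.route.v3.RouteConfiguration"

PUSH_ORDER = [CDS, EDS, LDS, RDS]
RANK = {type_url: position for position, type_url in enumerate(PUSH_ORDER)}

def order_updates(pending: list) -> list:
    """Sort (type_url, resource_name) pairs into dependency order."""
    return sorted(pending, key=lambda update: RANK[update[0]])

# Updates arrive in arbitrary order from whatever produced them.
pending = [
    (RDS, "local_route"),
    (EDS, "webcluster"),
    (LDS, "listener_http"),
    (CDS, "webcluster"),
]

ordered = order_updates(pending)
for type_url, name in ordered:
    print(type_url.rsplit(".", 1)[-1], name)
```

Without ADS, each type is served on its own stream, so nothing guarantees that the cluster arrives before the route that refers to it.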

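2. Reducing the number of connections

- **Non-ADS mode**: each xDS type (CDS/EDS/LDS/RDS) opens its own gRPC stream, and its own connection when the types are served by different management servers.

- **ADS mode**: all resource types share one gRPC stream to one management server, so the four streams in this example collapse into one (a 75% reduction).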

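3. Keeping related configuration consistent

Related resources (for example listener + route + cluster) are delivered over the same stream, so the management server can sequence them and avoid inconsistent intermediate states. xDS itself remains eventually consistent: ADS makes deterministic ordering possible rather than providing a true atomic transaction.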

3.3 Implementing a gRPC management service based on ADS

3.3.1 front-envoy configuration

    node:
      id: envoy_front_proxy
      cluster: webcluster

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    dynamic_resources:
      ads_config:
        api_type: GRPC
        transport_api_version: V3
        grpc_services:
        - envoy_grpc:
            cluster_name: xds_cluster
        set_node_on_first_message_only: true
      cds_config:
        resource_api_version: V3
        ads: {}
      lds_config:
        resource_api_version: V3
        ads: {}

    static_resources:
      clusters:
      - name: xds_cluster
        connect_timeout: 0.25s
        type: STRICT_DNS
        # The extension_protocol_options field is used to provide extension-specific protocol options for upstream connections.
        typed_extension_protocol_options:
          envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
            "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
            explicit_http_config:
              http2_protocol_options: {}
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: xds_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: xdsserver
                    port_value: 18000
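One detail of this bootstrap is easy to get wrong: the cluster named in `ads_config` must itself be defined statically, because Envoy needs it before any dynamic resource can be fetched. The Python sketch below checks that relationship on a dict holding only the relevant keys of the bootstrap. The function `missing_ads_clusters` is a hypothetical helper, and loading the YAML file into a dict is assumed to happen elsewhere.

```python
def missing_ads_clusters(bootstrap: dict) -> list:
    """Return ADS cluster names that are not defined under static_resources."""
    static = bootstrap.get("static_resources", {})
    defined = {cluster["name"] for cluster in static.get("clusters", [])}
    ads = bootstrap.get("dynamic_resources", {}).get("ads_config", {})
    referenced = [
        service["envoy_grpc"]["cluster_name"]
        for service in ads.get("grpc_services", [])
        if "envoy_grpc" in service
    ]
    return [name for name in referenced if name not in defined]

# Only the keys needed for the check, copied from the configuration above.
bootstrap = {
    "dynamic_resources": {
        "ads_config": {
            "api_type": "GRPC",
            "grpc_services": [{"envoy_grpc": {"cluster_name": "xds_cluster"}}],
        },
        "cds_config": {"ads": {}},
        "lds_config": {"ads": {}},
    },
    "static_resources": {"clusters": [{"name": "xds_cluster"}]},
}

print(missing_ads_clusters(bootstrap))
```

An empty list means every cluster referenced by `ads_config` exists in `static_resources`.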

3.3.2 sidecar-envoy file

    admin:
      profile_path: /tmp/envoy.prof
      access_log_path: /tmp/admin_access.log
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 9901

    static_resources:
      listeners:
      - name: listener_0
        address:
          socket_address: { address: 0.0.0.0, port_value: 1111 }
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              codec_type: AUTO
              route_config:
                name: local_route
                virtual_hosts:
                - name: local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: { cluster: local_cluster }
              http_filters:
              - name: envoy.filters.http.router
                typed_config:
                  "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

      clusters:
      - name: local_cluster
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: local_cluster
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address: { address: 127.0.0.1, port_value: 80 }

3.3.3 docker-compose file

    version: '3.3'

    services:
      envoy:
        image: envoyproxy/envoy:v1.29.2
        environment:
          - ENVOY_UID=0
          - ENVOY_GID=0
        volumes:
          - ./front-envoy.yaml:/etc/envoy/envoy.yaml
        networks:
          envoymesh:
            ipv4_address: 172.31.16.2
            aliases:
              - front-proxy
        depends_on:
          - webserver01
          - webserver02
          - xdsserver

      webserver01:
        image: test/demoapp:v1.0
        environment:
          - PORT=80
          - HOST=127.0.0.1
        hostname: webserver01
        networks:
          envoymesh:
            ipv4_address: 172.31.16.11

      webserver01-sidecar:
        image: envoyproxy/envoy:v1.29.2
        volumes:
          - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver01"
        depends_on:
          - webserver01

      webserver02:
        image: test/demoapp:v1.0
        environment:
          - PORT=80
          - HOST=127.0.0.1
        hostname: webserver02
        networks:
          envoymesh:
            ipv4_address: 172.31.16.12

      webserver02-sidecar:
        image: envoyproxy/envoy:v1.29.2
        volumes:
          - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
        network_mode: "service:webserver02"
        depends_on:
          - webserver02

      xdsserver:
        image: test/envoy-xds-server:v0.2
        environment:
          - SERVER_PORT=18000
          - NODE_ID=envoy_front_proxy
          - RESOURCES_FILE=/etc/envoy-xds-server/config/config.yaml
        volumes:
          - ./resources:/etc/envoy-xds-server/config/
        networks:
          envoymesh:
            ipv4_address: 172.31.16.5
            aliases:
              - xdsserver
              - xds-service
        expose:
          - "18000"

    networks:
      envoymesh:
        driver: bridge
        ipam:
          config:
            - subnet: 172.31.16.0/24
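This compose file uses the 172.31.16.0/24 subnet, whereas the earlier example used 172.31.15.0/24, so every static `ipv4_address` (and every endpoint in the resource files) has to move with it. A small Python sketch using the standard `ipaddress` module can catch a leftover address; the helper `outside_subnet` and the `static_ips` dict are illustrative names, with the values copied by hand from the file above.

```python
import ipaddress

def outside_subnet(subnet: str, addresses: dict) -> list:
    """Return the names whose IP address does not belong to the subnet."""
    network = ipaddress.ip_network(subnet)
    return [
        name
        for name, ip in addresses.items()
        if ipaddress.ip_address(ip) not in network
    ]

# Static addresses assigned in the compose file above.
static_ips = {
    "envoy": "172.31.16.2",
    "webserver01": "172.31.16.11",
    "webserver02": "172.31.16.12",
    "xdsserver": "172.31.16.5",
}

misplaced = outside_subnet("172.31.16.0/24", static_ips)
print(misplaced)
```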

Resource configuration files

    [root@VM_24_47_tlinux /usr/local/src]# cat resources/config.yaml
    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.16.11
          port: 1111

    [root@VM_24_47_tlinux /usr/local/src]# cat resources/config.yaml-v2
    name: myconfig
    spec:
      listeners:
      - name: listener_http
        address: 0.0.0.0
        port: 80
        routes:
        - name: local_route
          prefix: /
          clusters:
          - webcluster
      clusters:
      - name: webcluster
        endpoints:
        - address: 172.31.16.11
          port: 1111
        - address: 172.31.16.12
          port: 1111