LangGraph Official Documentation Translation - Quickstart and Example Tutorials (Chat, Tools, Memory, Human-in-the-Loop, Custom State, Time Travel)

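Table of contents

- LangGraph Quickstart
- Prerequisites
- 1. Install dependencies
- 2. Create an agent
- 3. Configure an LLM
- 4. Add a custom prompt
- 5. Add memory
- 6. Configure structured output
- Next steps
- Overview
- Learn LangGraph basics
- Build a basic chatbot
- Prerequisites
- 1. Install packages
- 2. Create a `StateGraph`
- 3. Add a node
- Configure the model
- Integrate the model into a node
- 4. Add an `entry` point
- 5. Compile the graph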

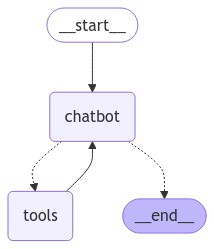

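- 6. Visualize the graph (optional)
- 7. Run the chatbot
- Next steps
- Add tools
- Prerequisites
- 1. Install the search engine
- 2. Configure your environment
- 3. Define the tool
- 4. Define the graph
- 5. Create a function to run the tools
- 6. Define the `conditional_edges`
- 7. Visualize the graph (optional)
- 8. Ask the bot questions
- 9. Use prebuilt components
- Next steps
- Add memory
- 1. Create a `MemorySaver` checkpointer
- 2. Compile the graph
- 3. Interact with your chatbot
- 4. Ask a follow-up question
- 5. Inspect the state
- Next steps
- Add human-in-the-loop controls
- 1. Add the `human_assistance` tool
- 2. Compile the graph
- 3. Visualize the graph (optional)
- 4. Prompt the chatbot
- 5. Resume execution
- Next steps
- Customize state
- 1. Add keys to the state
- 2. Update the state inside the tool
- 3. Prompt the chatbot
- 4. Add human assistance
- 5. Manually update the state
- 6. View the new value
- Next steps
- Time travel
- 1. Rewind the graph state
- 2. Add steps
- 3. Replay the full state history
- Resume from a checkpoint
- 4. Load a state from a specific point in time
- Learn more
- Deployment guide 🚀
- Other deployment options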

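LangGraph Quickstart

This article is translated from: https://langchain-ai.github.io/langgraph/agents/agents/

This guide shows you how to set up and use LangGraph's prebuilt, reusable components, which are designed to help you build agentic systems quickly and reliably.

Prerequisites

Before you start this tutorial, make sure you have the following:

- A valid Anthropic API key

1. Install dependencies

If you haven't already, install LangGraph and LangChain:

    pip install -U langgraph "langchain[anthropic]"

Info: LangChain is installed so that the agent can call the model.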

2. Create an agent

To create an agent, use create_react_agent:

API reference: create_react_agent

    from langgraph.prebuilt import create_react_agent

    def get_weather(city: str) -> str:  # (1)
        """Get weather for a given city."""
        return f"It's always sunny in {city}!"

    agent = create_react_agent(
        model="anthropic:claude-3-7-sonnet-latest",  # (2)
        tools=[get_weather],  # (3)
        prompt="You are a helpful assistant"  # (4)
    )

    # Run the agent
    agent.invoke(
        {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
    )

1. Define a tool for the agent to use. Tools can be defined as plain Python functions. For more advanced tool usage and customization, see the Tools page.

2. Provide a language model for the agent to use. To learn how to configure language models for agents, see the Models page.

3. Provide a list of tools for the model to use.

4. Provide a system prompt (instructions) to the language model used by the agent.

3. Configure an LLM

To configure an LLM with specific parameters, such as temperature, use init_chat_model:

API reference: init_chat_model | create_react_agent

    from langchain.chat_models import init_chat_model
    from langgraph.prebuilt import create_react_agent

    model = init_chat_model(
        "anthropic:claude-3-7-sonnet-latest",
        temperature=0
    )

    agent = create_react_agent(
        model=model,
        tools=[get_weather],
    )

For more information on how to configure LLMs, see the Models page.

4. Add a custom prompt

Prompts instruct the LLM how to behave. You can add either of the following types of prompt:

- Static prompt: a string, which is interpreted as a system message
- Dynamic prompt: a list of messages generated at runtime from the input or configuration

Static prompt

Define a fixed prompt string or list of messages:

    from langgraph.prebuilt import create_react_agent

    agent = create_react_agent(
        model="anthropic:claude-3-7-sonnet-latest",
        tools=[get_weather],
        # A static prompt that never changes
        prompt="Never answer questions about the weather."
    )

    agent.invoke(
        {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
    )

Dynamic prompt

Define a function that returns a list of messages based on the agent's state and configuration:

    from langchain_core.messages import AnyMessage
    from langchain_core.runnables import RunnableConfig
    from langgraph.prebuilt.chat_agent_executor import AgentState
    from langgraph.prebuilt import create_react_agent

    def prompt(state: AgentState, config: RunnableConfig) -> list[AnyMessage]:  # (1)
        user_name = config["configurable"].get("user_name")
        system_msg = f"You are a helpful assistant. Address the user as {user_name}."
        return [{"role": "system", "content": system_msg}] + state["messages"]

    agent = create_react_agent(
        model="anthropic:claude-3-7-sonnet-latest",
        tools=[get_weather],
        prompt=prompt
    )

    agent.invoke(
        {"messages": [{"role": "user", "content": "what is the weather in sf"}]},
        config={"configurable": {"user_name": "John Smith"}}
    )

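1. Dynamic prompts let you include non-message context when building the input to the LLM, such as:

- Information passed at runtime, like a user_id or API credentials (using config).
- Internal agent state that is updated during a multi-step reasoning process (using state).

A dynamic prompt can be defined as a function that takes state and config and returns the list of messages to send to the LLM.

For more information, see the Context page.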
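The data flow of a dynamic prompt can be checked without an agent or an LLM. The sketch below is a minimal illustration that uses plain dictionaries in place of AgentState and RunnableConfig; the helper name `build_prompt` and the fallback name "there" are assumptions made for this example, not part of LangGraph.

```python
from typing import Any


def build_prompt(state: dict[str, Any], config: dict[str, Any]) -> list[dict[str, str]]:
    """Return the message list to send to the LLM (hypothetical helper)."""
    # Runtime-only information comes from the config, not from the messages.
    user_name = config.get("configurable", {}).get("user_name", "there")
    system_msg = f"You are a helpful assistant. Address the user as {user_name}."
    # Prepend the system message; the conversation history itself is unchanged.
    return [{"role": "system", "content": system_msg}] + list(state["messages"])


state = {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
config = {"configurable": {"user_name": "John Smith"}}
messages = build_prompt(state, config)
```

Because the function is pure, it can be unit-tested on its own before it is passed to create_react_agent as the prompt argument.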

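5. Add memory

To allow multi-turn conversations with an agent, you need to enable persistence by providing a checkpointer when creating the agent.

At runtime, you need to provide a config containing a thread_id, a unique identifier for the conversation (session):

API reference: create_react_agent | InMemorySaver

    from langgraph.prebuilt import create_react_agent
    from langgraph.checkpoint.memory import InMemorySaver

    checkpointer = InMemorySaver()

    agent = create_react_agent(
        model="anthropic:claude-3-7-sonnet-latest",
        tools=[get_weather],
        checkpointer=checkpointer  # (1)
    )

    # Run the agent
    config = {"configurable": {"thread_id": "1"}}
    sf_response = agent.invoke(
        {"messages": [{"role": "user", "content": "what is the weather in sf"}]},
        config  # (2)
    )
    ny_response = agent.invoke(
        {"messages": [{"role": "user", "content": "what about new york?"}]},
        config
    )

1. The checkpointer allows the agent to store its state at every step of the tool-calling loop. This enables short-term memory and human-in-the-loop capabilities.

2. Passing a config with a thread_id makes it possible to resume the same conversation in future agent invocations.

When a checkpointer is enabled, it stores the agent's state at every step in the provided checkpointer database (or in memory, when using InMemorySaver).

Note that in the example above, when the agent is invoked a second time with the same thread_id, the original message history from the first conversation is automatically included together with the new user input.

For more information, see the Memory page.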
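The effect of thread_id can be illustrated with a toy model. The sketch below is not LangGraph's checkpointer; `ToyCheckpointer` and `toy_invoke` are hypothetical stand-ins that keep one message history per thread in a dictionary and echo the input instead of calling an LLM.

```python
from collections import defaultdict


class ToyCheckpointer:
    """Keeps one message history per thread_id (illustrative stand-in only)."""

    def __init__(self) -> None:
        self._threads: dict[str, list[dict]] = defaultdict(list)

    def load(self, thread_id: str) -> list[dict]:
        return list(self._threads[thread_id])

    def save(self, thread_id: str, messages: list[dict]) -> None:
        self._threads[thread_id] = list(messages)


def toy_invoke(checkpointer: ToyCheckpointer, new_messages: list[dict], config: dict) -> dict:
    """Prepend the saved history for this thread, then persist the result."""
    thread_id = config["configurable"]["thread_id"]
    history = checkpointer.load(thread_id) + new_messages
    # A real agent would call the LLM here; this sketch just echoes the input.
    history.append({"role": "assistant", "content": f"echo: {new_messages[-1]['content']}"})
    checkpointer.save(thread_id, history)
    return {"messages": history}


saver = ToyCheckpointer()
config = {"configurable": {"thread_id": "1"}}
first = toy_invoke(saver, [{"role": "user", "content": "what is the weather in sf"}], config)
second = toy_invoke(saver, [{"role": "user", "content": "what about new york?"}], config)
other = toy_invoke(saver, [{"role": "user", "content": "hello"}], {"configurable": {"thread_id": "2"}})
```

The second call on thread "1" sees the first exchange, while thread "2" starts from an empty history.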

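6. Configure structured output

To produce structured responses that conform to a schema, use the response_format parameter. The schema can be defined with a Pydantic model or a TypedDict. The result is accessible via the structured_response field.

API reference: create_react_agent

    from pydantic import BaseModel
    from langgraph.prebuilt import create_react_agent

    class WeatherResponse(BaseModel):
        conditions: str

    agent = create_react_agent(
        model="anthropic:claude-3-7-sonnet-latest",
        tools=[get_weather],
        response_format=WeatherResponse  # (1)
    )

    response = agent.invoke(
        {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
    )

    response["structured_response"]

1. When response_format is provided, a separate step is added at the end of the agent loop: the agent's message history is passed to an LLM with structured output capability to generate a structured response.

To provide a system prompt to this LLM, use a tuple (prompt, schema), for example response_format=(prompt, WeatherResponse).

Note on LLM post-processing

Structured output requires an additional call to the LLM to format the response according to the specified schema.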

Next steps

- Deploy your agent locally

- Learn more about prebuilt agents

- LangGraph Platform quickstart

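Overview

Why LangGraph: https://langchain-ai.github.io/langgraph/concepts/why-langgraph/

LangGraph is built for developers who want to build powerful, adaptable AI agents. Developers choose LangGraph for:

- Reliability and controllability. Steer agent actions with moderation checks and human-in-the-loop approvals. LangGraph persists context for long-running workflows, which helps keep your agents on course.

- Low-level and extensible architecture. Build custom agents with fully descriptive, low-level primitives, free from rigid abstractions that limit customization. Design scalable multi-agent systems in which each agent serves a specific role tailored to your use case.

- First-class streaming support. With token-by-token streaming and streaming of intermediate steps, LangGraph gives users clear, real-time visibility into the agent's reasoning and actions.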


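Learn LangGraph basics

To get acquainted with LangGraph's key concepts and features, complete the following LangGraph basics tutorial series:

1. Build a basic chatbot

2. Add tools

3. Add memory

4. Add human-in-the-loop controls

5. Customize state

6. Time travel

By the end of this series, you will have built a customer-support chatbot in LangGraph that can:

- ✅ Answer common questions by searching the web

- ✅ Maintain conversation state across calls

- ✅ Route complex queries to a human for review

- ✅ Use custom state to control its behavior

- ✅ Rewind and explore alternative conversation paths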

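Build a basic chatbot

https://langchain-ai.github.io/langgraph/tutorials/get-started/1-build-basic-chatbot/

In this tutorial, you will build a basic chatbot. This chatbot is the basis for the rest of the series, in which you progressively add more sophisticated capabilities and learn key LangGraph concepts along the way. Let's dive in! 🌟

Prerequisites

Before you start this tutorial, make sure you have access to an LLM that supports tool calling, such as:

- OpenAI

- Anthropic

- Google Gemini

1. Install packages

Install the required packages:

    pip install -U langgraph langsmith

Tip:

Sign up for LangSmith to quickly spot issues and improve the performance of your LangGraph projects.

LangSmith lets you use trace data to debug, test, and monitor LLM apps built with LangGraph. For more information on how to get started, see the LangSmith docs.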

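2. Create a StateGraph

Now you can create a basic chatbot using LangGraph. This chatbot will respond directly to user messages.

Start by creating a StateGraph. A StateGraph object defines the structure of our chatbot as a "state machine".

We'll add nodes to represent the LLM and the functions the chatbot can call, and edges to specify how the bot should transition between these functions.

API reference: StateGraph | START | add_messages

    from typing import Annotated

    from typing_extensions import TypedDict

    from langgraph.graph import StateGraph, START
    from langgraph.graph.message import add_messages

    class State(TypedDict):
        # Messages have the type "list". The `add_messages` function
        # in the annotation defines how this state key should be updated
        # (in this case, it appends messages to the list, rather than overwriting them)
        messages: Annotated[list, add_messages]

    graph_builder = StateGraph(State)

Our graph can now handle two key tasks:

1. Each node can receive the current State as input and output an update to the state.

2. Updates to messages will be appended to the existing list rather than overwriting it, thanks to the prebuilt add_messages function used with the Annotated syntax.

Key concept

When defining a graph, the first step is to define its State.

The State includes the graph's schema and the reducer functions that handle state updates. In our example, State is a TypedDict with a single key: messages.

The add_messages reducer function appends new messages to the list instead of overwriting it. Keys without a reducer annotation overwrite previous values. To learn more about state, reducers, and related concepts, see the LangGraph reference docs.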
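The difference between a key with a reducer and a key without one can be shown with a small pure-Python model. This is only a sketch of the idea: `append_reducer` and `apply_update` are hypothetical names, and the real add_messages does more than plain list concatenation.

```python
from typing import Any, Callable


def append_reducer(existing: list, update: list) -> list:
    """Simplified stand-in for add_messages: append instead of overwrite."""
    return existing + update


def apply_update(
    state: dict[str, Any],
    update: dict[str, Any],
    reducers: dict[str, Callable[[Any, Any], Any]],
) -> dict[str, Any]:
    """Merge a node's partial update into the state, key by key."""
    merged = dict(state)
    for key, value in update.items():
        if key in reducers and key in merged:
            merged[key] = reducers[key](merged[key], value)
        else:
            merged[key] = value  # no reducer: the new value replaces the old one
    return merged


state = {"messages": ["hi"], "status": "new"}
update = {"messages": ["hello!"], "status": "answered"}
result = apply_update(state, update, reducers={"messages": append_reducer})
```

Here `messages` grows to two entries because it has a reducer, while `status` is simply replaced.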

3. Add a node

Next, add a "chatbot" node. Nodes represent units of work and are typically regular Python functions.

Let's first select a chat model:

Configure the model

OpenAI

    pip install -U "langchain[openai]"

    import os
    from langchain.chat_models import init_chat_model

    os.environ["OPENAI_API_KEY"] = "sk-..."

    llm = init_chat_model("openai:gpt-4.1")

Anthropic

    pip install -U "langchain[anthropic]"

    import os
    from langchain.chat_models import init_chat_model

    os.environ["ANTHROPIC_API_KEY"] = "sk-..."

    llm = init_chat_model("anthropic:claude-3-5-sonnet-latest")

Azure

    pip install -U "langchain[openai]"

    import os
    from langchain.chat_models import init_chat_model

    os.environ["AZURE_OPENAI_API_KEY"] = "..."
    os.environ["AZURE_OPENAI_ENDPOINT"] = "..."
    os.environ["OPENAI_API_VERSION"] = "2025-03-01-preview"

    llm = init_chat_model(
        "azure_openai:gpt-4.1",
        azure_deployment=os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"],
    )

Google Gemini

    pip install -U "langchain[google-genai]"

    import os
    from langchain.chat_models import init_chat_model

    os.environ["GOOGLE_API_KEY"] = "..."

    llm = init_chat_model("google_genai:gemini-2.0-flash")

AWS Bedrock

    pip install -U "langchain[aws]"

    from langchain.chat_models import init_chat_model

    # Follow the steps here to configure your credentials:
    # https://docs.aws.amazon.com/bedrock/latest/userguide/getting-started.html

    llm = init_chat_model(
        "anthropic.claude-3-5-sonnet-20240620-v1:0",
        model_provider="bedrock_converse",
    )

Integrate the model into a node

We can now incorporate the chat model into a simple node:

    def chatbot(state: State):
        return {"messages": [llm.invoke(state["messages"])]}

    # The first argument is the unique node name
    # The second argument is the function or object that will be called whenever
    # the node is used.
    graph_builder.add_node("chatbot", chatbot)

Notice how the chatbot node function takes the current State as input and returns a dictionary containing an updated messages list under the key "messages". This is the basic pattern for all LangGraph node functions.

The add_messages function in our State appends the LLM's response message to whatever messages are already in the state.

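4. Add an entry point

Add an entry point to tell the graph where to start its work each time it is run:

    graph_builder.add_edge(START, "chatbot")

5. Compile the graph

Before running the graph, we need to compile it.

We can do so by calling compile() on the graph builder. This creates a CompiledGraph that we can invoke on our state.

    graph = graph_builder.compile()

6. Visualize the graph (optional)

You can visualize the graph using the get_graph method and one of the "draw" methods, such as draw_ascii or draw_png. Each draw method requires additional dependencies.

    from IPython.display import Image, display

    try:
        display(Image(graph.get_graph().draw_mermaid_png()))
    except Exception:
        # This requires some extra dependencies and is optional
        pass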

7. Run the chatbot

Now run the chatbot!

Tip: you can exit the chat loop at any time by typing quit, exit, or q.

    def stream_graph_updates(user_input: str):
        for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
            for value in event.values():
                print("Assistant:", value["messages"][-1].content)

    while True:
        try:
            user_input = input("User: ")
            if user_input.lower() in ["quit", "exit", "q"]:
                print("Goodbye!")
                break
            stream_graph_updates(user_input)
        except:
            # fallback if input() is not available
            user_input = "What do you know about LangGraph?"
            print("User: " + user_input)
            stream_graph_updates(user_input)
            break

Assistant: LangGraph is a library designed to help build stateful multi-agent applications using language models. It provides tools for creating workflows and state machines to coordinate multiple AI agents or language model interactions. LangGraph is built on top of LangChain, leveraging its components while adding graph-based coordination capabilities. It's particularly useful for developing more complex, stateful AI applications that go beyond simple query-response interactions.

Goodbye!

Congratulations! You've built your first chatbot using LangGraph. This bot can hold a basic conversation by taking user input and generating responses with an LLM. You can inspect the call above in a LangSmith trace.

Below is the full code for this tutorial:

API reference: init_chat_model | StateGraph | START | add_messages

    from typing import Annotated

    from langchain.chat_models import init_chat_model
    from typing_extensions import TypedDict

    from langgraph.graph import StateGraph, START
    from langgraph.graph.message import add_messages

    class State(TypedDict):
        messages: Annotated[list, add_messages]

    graph_builder = StateGraph(State)

    llm = init_chat_model("anthropic:claude-3-5-sonnet-latest")

    def chatbot(state: State):
        return {"messages": [llm.invoke(state["messages"])]}

    # The first argument is the unique node name
    # The second argument is the function or object that will be called whenever
    # the node is used.
    graph_builder.add_node("chatbot", chatbot)
    graph_builder.add_edge(START, "chatbot")
    graph = graph_builder.compile()

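Next steps

You may have noticed that the bot's knowledge is limited to what is in its training data. In the next part, we'll add a web search tool to expand the bot's knowledge and make it more capable.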

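Add tools

To handle queries that your chatbot can't answer "from memory", you can integrate a web search tool. The chatbot can use this tool to find relevant information and provide better responses.

Note: this tutorial builds on Build a basic chatbot.

Prerequisites

Before you start this tutorial, make sure you have the following:

- An API key for the Tavily search engine.

1. Install the search engine

Install the requirements for using the Tavily search engine:

    pip install -U langchain-tavily

2. Configure your environment

Configure your environment with your search engine API key. The _set_env helper is not defined in this article; judging by the prompt shown below, it asks for the key and stores it in the named environment variable.

    _set_env("TAVILY_API_KEY")

    TAVILY_API_KEY: ········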

3. Define the tool

Define the web search tool:

API reference: TavilySearch

    from langchain_tavily import TavilySearch

    tool = TavilySearch(max_results=2)
    tools = [tool]
    tool.invoke("What's a 'node' in LangGraph?")

The results are page summaries that our chatbot can use to answer questions:

{'query': "What's a 'node' in LangGraph?",

'follow_up_questions': None,

'answer': None,

'images': [],

'results': [{'title': "Introduction to LangGraph: A Beginner's Guide - Medium",

'url': 'https://medium.com/@cplog/introduction-to-langgraph-a-beginners-guide-14f9be027141',

'content': 'Stateful Graph: LangGraph revolves around the concept of a stateful graph, where each node in the graph represents a step in your computation, and the graph maintains a state that is passed around and updated as the computation progresses. LangGraph supports conditional edges, allowing you to dynamically determine the next node to execute based on the current state of the graph. We define nodes for classifying the input, handling greetings, and handling search queries. def classify_input_node(state): LangGraph is a versatile tool for building complex, stateful applications with LLMs. By understanding its core concepts and working through simple examples, beginners can start to leverage its power for their projects. Remember to pay attention to state management, conditional edges, and ensuring there are no dead-end nodes in your graph.',

'score': 0.7065353,

'raw_content': None},

{'title': 'LangGraph Tutorial: What Is LangGraph and How to Use It?',

'url': 'https://www.datacamp.com/tutorial/langgraph-tutorial',

'content': 'LangGraph is a library within the LangChain ecosystem that provides a framework for defining, coordinating, and executing multiple LLM agents (or chains) in a structured and efficient manner. By managing the flow of data and the sequence of operations, LangGraph allows developers to focus on the high-level logic of their applications rather than the intricacies of agent coordination. Whether you need a chatbot that can handle various types of user requests or a multi-agent system that performs complex tasks, LangGraph provides the tools to build exactly what you need. LangGraph significantly simplifies the development of complex LLM applications by providing a structured framework for managing state and coordinating agent interactions.',

'score': 0.5008063,

'raw_content': None}],

'response_time': 1.38}

4. Define the graph

For the StateGraph you created in the first tutorial, add bind_tools on the LLM. This lets the LLM know the correct JSON format to use if it wants to call the search engine.

Select an LLM: see the installation and configuration steps for OpenAI, Anthropic, Azure, and the other models in the previous section.

We can now incorporate it into a StateGraph:

    from typing import Annotated

    from typing_extensions import TypedDict

    from langgraph.graph import StateGraph, START, END
    from langgraph.graph.message import add_messages

    class State(TypedDict):
        messages: Annotated[list, add_messages]

    graph_builder = StateGraph(State)

    # Modification: tell the LLM which tools it can call
    llm_with_tools = llm.bind_tools(tools)

    def chatbot(state: State):
        return {"messages": [llm_with_tools.invoke(state["messages"])]}

    graph_builder.add_node("chatbot", chatbot)

5. Create a function to run the tools

Now, create a function that runs the tools when they are called.

Do this by adding the tools to a new node called BasicToolNode, which checks the most recent message in the state and calls the tools if the message contains tool_calls.

This relies on the LLM's tool-calling support, which is available from Anthropic, OpenAI, Google Gemini, and a number of other LLM providers.

API reference: ToolMessage

    import json

    from langchain_core.messages import ToolMessage

    class BasicToolNode:
        """A node that runs the tools requested in the last AIMessage."""

        def __init__(self, tools: list) -> None:
            self.tools_by_name = {tool.name: tool for tool in tools}

        def __call__(self, inputs: dict):
            if messages := inputs.get("messages", []):
                message = messages[-1]
            else:
                raise ValueError("No message found in input")
            outputs = []
            for tool_call in message.tool_calls:
                tool_result = self.tools_by_name[tool_call["name"]].invoke(
                    tool_call["args"]
                )
                outputs.append(
                    ToolMessage(
                        content=json.dumps(tool_result),
                        name=tool_call["name"],
                        tool_call_id=tool_call["id"],
                    )
                )
            return {"messages": outputs}

    tool_node = BasicToolNode(tools=[tool])
    graph_builder.add_node("tools", tool_node)

Note: if you do not want to build this yourself in the future, you can use LangGraph's prebuilt ToolNode.
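The dispatch-by-name step of such a tool node can be tried in isolation. The sketch below is a simplified illustration that uses plain dictionaries instead of ToolMessage objects; `fake_search` and `run_tool_calls` are hypothetical names, and no real search is performed.

```python
import json


def fake_search(args: dict) -> dict:
    """Hypothetical stand-in for a search tool; returns an empty result set."""
    return {"query": args["query"], "results": []}


# Index the available tools by name, as the tool node does in its constructor.
tools_by_name = {"fake_search": fake_search}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Run each requested tool and wrap the result in a tool message dict."""
    outputs = []
    for call in tool_calls:
        result = tools_by_name[call["name"]](call["args"])
        outputs.append(
            {
                "role": "tool",
                "name": call["name"],
                "tool_call_id": call["id"],  # ties the result back to the request
                "content": json.dumps(result),
            }
        )
    return outputs


calls = [{"name": "fake_search", "args": {"query": "LangGraph"}, "id": "call_1"}]
tool_messages = run_tool_calls(calls)
```

Each result carries the id of the call that produced it, which is how the model can match answers to its requests.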

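6. Define the conditional_edges

With the tool node added, you can now define the conditional edges.

Edges route the control flow from one node to the next.

Conditional edges start from a single node and usually contain "if" statements that route to different nodes depending on the current graph state.

These functions receive the current graph state and return a string or a list of strings indicating which node or nodes to call next.

Next, define a router function called route_tools that checks for tool_calls in the chatbot's output.

Provide this function to the graph by calling add_conditional_edges, which tells the graph that whenever the chatbot node completes, it should check this function to see where to go next.

The condition routes to the tools node if tool calls are present and to END if not. Because the condition can return END, you do not need to explicitly set a finish_point this time.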

    def route_tools(
        state: State,
    ):
        """
        Use in the conditional_edge to route to the ToolNode if the last message
        has tool calls. Otherwise, route to the end.
        """
        if isinstance(state, list):
            ai_message = state[-1]
        elif messages := state.get("messages", []):
            ai_message = messages[-1]
        else:
            raise ValueError(f"No messages found in input state to tool_edge: {state}")
        if hasattr(ai_message, "tool_calls") and len(ai_message.tool_calls) > 0:
            return "tools"
        return END

    # The `tools_condition` function returns "tools" if the chatbot asks to use a tool,
    # and "END" if it is fine to respond directly. This conditional routing defines
    # the main agent loop.
    graph_builder.add_conditional_edges(
        "chatbot",
        route_tools,
        # The following dictionary lets you tell the graph to interpret the condition's
        # outputs as a specific node. It defaults to the identity function, but if you
        # want to use a node named something else apart from "tools", you can update
        # the value of the dictionary to something else, e.g., "tools": "my_tools"
        {"tools": "tools", END: END},
    )
    # Any time a tool is called, we return to the chatbot to decide the next step
    graph_builder.add_edge("tools", "chatbot")
    graph_builder.add_edge(START, "chatbot")
    graph = graph_builder.compile()

Note: you can replace this with the prebuilt tools_condition to make the code more concise.
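The routing decision itself can be exercised without a graph or an LLM. The sketch below is a minimal illustration using a stub message class; `FakeAIMessage` and `route` are hypothetical names, and the `END` string is a placeholder sentinel defined only for this example.

```python
from dataclasses import dataclass, field

END = "__end__"  # placeholder sentinel for this sketch only


@dataclass
class FakeAIMessage:
    """Stub with the two attributes the router cares about."""
    content: str
    tool_calls: list = field(default_factory=list)


def route(state: dict) -> str:
    """Return "tools" if the last message requests a tool, otherwise END."""
    last = state["messages"][-1]
    if getattr(last, "tool_calls", None):
        return "tools"
    return END


needs_tool = {
    "messages": [FakeAIMessage("searching", [{"name": "search", "args": {}, "id": "1"}])]
}
final_answer = {"messages": [FakeAIMessage("done")]}
decisions = [route(needs_tool), route(final_answer)]
```

A message that carries tool calls is sent to the tools node; a message without them ends the run.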

7. Visualize the graph (optional)

You can visualize the graph using the get_graph method and one of the "draw" methods, such as draw_ascii or draw_png. Each draw method requires additional dependencies.

    from IPython.display import Image, display

    try:
        display(Image(graph.get_graph().draw_mermaid_png()))
    except Exception:
        # This requires some extra dependencies and is optional
        pass

8. Ask the bot questions

Now you can ask the chatbot questions outside its training data:

    def stream_graph_updates(user_input: str):
        for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
            for value in event.values():
                print("Assistant:", value["messages"][-1].content)

    while True:
        try:
            user_input = input("User: ")
            if user_input.lower() in ["quit", "exit", "q"]:
                print("Goodbye!")
                break
            stream_graph_updates(user_input)
        except:
            # fallback if input() is not available
            user_input = "What do you know about LangGraph?"
            print("User: " + user_input)
            stream_graph_updates(user_input)
            break

Assistant: [{'text': "To provide you with accurate and up-to-date information about LangGraph, I'll need to search for the latest details. Let me do that for you.", 'type': 'text'}, {'id': 'toolu_01Q588CszHaSvvP2MxRq9zRD', 'input': {'query': 'LangGraph AI tool information'}, 'name': 'tavily_search_results_json', 'type': 'tool_use'}]Assistant: [{"url": "https://www.langchain.com/langgraph", "content": "LangGraph sets the foundation for how we can build and scale AI workloads \u2014 from conversational agents, complex task automation, to custom LLM-backed experiences that 'just work'. The next chapter in building complex production-ready features with LLMs is agentic, and with LangGraph and LangSmith, LangChain delivers an out-of-the-box solution ..."}, {"url": "https://github.com/langchain-ai/langgraph", "content": "Overview. LangGraph is a library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows. Compared to other LLM frameworks, it offers these core benefits: cycles, controllability, and persistence. LangGraph allows you to define flows that involve cycles, essential for most agentic architectures ..."}]

Assistant: Based on the search results, I can provide you with information about LangGraph:1、Purpose:LangGraph is a library designed for building stateful, multi-actor applications with Large Language Models (LLMs). It's particularly useful for creating agent and multi-agent workflows.2、Developer:LangGraph is developed by LangChain, a company known for its tools and frameworks in the AI and LLM space.3、Key Features:- Cycles: LangGraph allows the definition of flows that involve cycles, which is essential for most agentic architectures.- Controllability: It offers enhanced control over the application flow.- Persistence: The library provides ways to maintain state and persistence in LLM-based applications.4、Use Cases:LangGraph can be used for various applications, including:- Conversational agents- Complex task automation- Custom LLM-backed experiences5、Integration:LangGraph works in conjunction with LangSmith, another tool by LangChain, to provide an out-of-the-box solution for building complex, production-ready features with LLMs.6、Significance:

...LangGraph is noted to offer unique benefits compared to other LLM frameworks, particularly in its ability to handle cycles, provide controllability, and maintain persistence.LangGraph appears to be a significant tool in the evolving landscape of LLM-based application development, offering developers new ways to create more complex, stateful, and interactive AI systems.

Goodbye!


9. Use prebuilt components

For ease of use, adjust your code to replace the following with LangGraph's prebuilt components. These have built-in functionality such as parallel API execution.

- BasicToolNode is replaced with the prebuilt ToolNode
- route_tools is replaced with the prebuilt tools_condition

    from typing import Annotated

    from langchain_tavily import TavilySearch
    from langchain_core.messages import BaseMessage
    from typing_extensions import TypedDict

    from langgraph.graph import StateGraph, START, END
    from langgraph.graph.message import add_messages
    from langgraph.prebuilt import ToolNode, tools_condition

    class State(TypedDict):
        messages: Annotated[list, add_messages]

    graph_builder = StateGraph(State)

    tool = TavilySearch(max_results=2)
    tools = [tool]
    # `llm` is the chat model configured in the previous tutorial
    llm_with_tools = llm.bind_tools(tools)

    def chatbot(state: State):
        return {"messages": [llm_with_tools.invoke(state["messages"])]}

    graph_builder.add_node("chatbot", chatbot)

    tool_node = ToolNode(tools=[tool])
    graph_builder.add_node("tools", tool_node)

    graph_builder.add_conditional_edges(
        "chatbot",
        tools_condition,
    )
    # Any time a tool is called, we return to the chatbot to decide the next step
    graph_builder.add_edge("tools", "chatbot")
    graph_builder.add_edge(START, "chatbot")
    graph = graph_builder.compile()

Congratulations! You've created a conversational agent in LangGraph that can use a search engine to retrieve up-to-date information when needed. It can now handle a wider range of user queries. To inspect all the steps your agent just took, check out the LangSmith trace.

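Next steps

The chatbot cannot remember past interactions on its own, which limits its ability to hold coherent, multi-turn conversations. In the next part, you will add memory to address this.

Add memory

https://langchain-ai.github.io/langgraph/tutorials/get-started/3-add-memory/

The chatbot can now use tools to answer user questions, but it does not remember the context of previous interactions. This limits its ability to hold coherent, multi-turn conversations.

LangGraph solves this problem through persistent checkpointing. If you provide a checkpointer when compiling the graph and a thread_id when calling it, LangGraph automatically saves the state after each step.

When you invoke the graph again with the same thread_id, it loads the saved state, allowing the chatbot to pick up where it left off.

As we'll see later, checkpointing is much more powerful than simple chat memory: it lets you save and resume complex state at any time for error recovery, human-in-the-loop workflows, time-travel interactions, and more. But first, let's add checkpointing to enable multi-turn conversations.

Note: this tutorial builds on Add tools.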

1. Create a MemorySaver checkpointer

Create a MemorySaver checkpointer:

    from langgraph.checkpoint.memory import MemorySaver

    memory = MemorySaver()

This is an in-memory checkpointer, which is convenient for the tutorial.

In a production application, however, you would likely switch to SqliteSaver or PostgresSaver and connect a database.

2. Compile the graph

Compile the graph with the provided checkpointer, which checkpoints the State as the graph works through each node:

    graph = graph_builder.compile(checkpointer=memory)

    from IPython.display import Image, display

    try:
        display(Image(graph.get_graph().draw_mermaid_png()))
    except Exception:
        # This requires some extra dependencies and is optional
        pass

3. Interact with your chatbot

Now you can interact with your bot!

1. Pick a thread to use as the key for this conversation.

    config = {"configurable": {"thread_id": "1"}}

2. Call your chatbot:

    user_input = "Hi there! My name is Will."

    # The config is the **second positional argument** to stream() or invoke()!
    events = graph.stream(
        {"messages": [{"role": "user", "content": user_input}]},
        config,
        stream_mode="values",
    )
    for event in events:
        event["messages"][-1].pretty_print()

    ================================ Human Message =================================

    Hi there! My name is Will.
    ================================== Ai Message ==================================

    Hello Will! It's nice to meet you. How can I assist you today? Is there anything specific you'd like to know or discuss?

Note: the config was provided as the second positional argument when calling the graph. Importantly, it is not nested within the graph inputs ({'messages': []}).

4. Ask a follow-up question

Ask a follow-up question:

    user_input = "Remember my name?"

    # The config is the **second positional argument** to stream() or invoke()!
    events = graph.stream(
        {"messages": [{"role": "user", "content": user_input}]},
        config,
        stream_mode="values",
    )
    for event in events:
        event["messages"][-1].pretty_print()

    ================================ Human Message =================================

    Remember my name?
    ================================== Ai Message ==================================

    Of course, I remember your name, Will. I always try to pay attention to important details that users share with me. Is there anything else you'd like to talk about or any questions you have? I'm here to help with a wide range of topics or tasks.

Notice that we aren't using an external list for memory: it is all handled by the checkpointer! You can inspect the full execution in the LangSmith trace to see what is going on.

Don't believe it? Try this with a different config.

    # The only difference is we change the `thread_id` here to "2" instead of "1"
    events = graph.stream(
        {"messages": [{"role": "user", "content": user_input}]},
        {"configurable": {"thread_id": "2"}},
        stream_mode="values",
    )
    for event in events:
        event["messages"][-1].pretty_print()

    ================================ Human Message =================================

    Remember my name?
    ================================== Ai Message ==================================

    I apologize, but I don't have any previous context or memory of your name. As an AI assistant, I don't retain information from past conversations. Each interaction starts fresh. Could you please tell me your name so I can address you properly in this conversation?

Notice that the only change we made was to the thread_id in the config. See the LangSmith trace of this call for comparison.

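5. Inspect the state

By now, we have made a few checkpoints across two different threads. But what goes into a checkpoint? To inspect a graph's state for a given config at any time, call get_state(config).

    snapshot = graph.get_state(config)
    snapshot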

StateSnapshot(values = {'messages': [HumanMessage(content = 'Hi there! My name is Will.', additional_kwargs = {}, response_metadata = {}, id = '8c1ca919-c553-4ebf-95d4-b59a2d61e078'), AIMessage(content = "Hello Will! It's nice to meet you. How can I assist you today? Is there anything specific you'd like to know or discuss?", additional_kwargs = {}, response_metadata = {'id': 'msg_01WTQebPhNwmMrmmWojJ9KXJ','model': 'claude-3-5-sonnet-20240620','stop_reason': 'end_turn','stop_sequence': None,'usage': {'input_tokens': 405,'output_tokens': 32}}, id = 'run-58587b77-8c82-41e6-8a90-d62c444a261d-0', usage_metadata = {'input_tokens': 405,'output_tokens': 32,'total_tokens': 437}), HumanMessage(content = 'Remember my name?', additional_kwargs = {}, response_metadata = {}, id = 'daba7df6-ad75-4d6b-8057-745881cea1ca'), AIMessage(content = "Of course, I remember your name, Will. I always try to pay attention to important details that users share with me. Is there anything else you'd like to talk about or any questions you have? I'm here to help with a wide range of topics or tasks.", additional_kwargs = {}, response_metadata = {'id': 'msg_01E41KitY74HpENRgXx94vag','model': 'claude-3-5-sonnet-20240620','stop_reason': 'end_turn','stop_sequence': None,'usage': {'input_tokens': 444,'output_tokens': 58}}, id = 'run-ffeaae5c-4d2d-4ddb-bd59-5d5cbf2a5af8-0', usage_metadata = {'input_tokens': 444,'output_tokens': 58,'total_tokens': 502})]}, next = (), config = {'configurable': {'thread_id': '1','checkpoint_ns': '','checkpoint_id': '1ef7d06e-93e0-6acc-8004-f2ac846575d2'}}, metadata = {'source': 'loop','writes': {'chatbot': {'messages': [AIMessage(content = "Of course, I remember your name, Will. I always try to pay attention to important details that users share with me. Is there anything else you'd like to talk about or any questions you have? 
I'm here to help with a wide range of topics or tasks.", additional_kwargs = {}, response_metadata = {'id': 'msg_01E41KitY74HpENRgXx94vag','model': 'claude-3-5-sonnet-20240620','stop_reason': 'end_turn','stop_sequence': None,'usage': {'input_tokens': 444,'output_tokens': 58}}, id = 'run-ffeaae5c-4d2d-4ddb-bd59-5d5cbf2a5af8-0', usage_metadata = {'input_tokens': 444,'output_tokens': 58,'total_tokens': 502})]}},'step': 4,'parents': {}}, created_at = '2024-09-27T19:30:10.820758+00:00', parent_config = {'configurable': {'thread_id': '1','checkpoint_ns': '','checkpoint_id': '1ef7d06e-859f-6206-8003-e1bd3c264b8f'}}, tasks = ()

)

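    snapshot.next  # (since the graph ended this turn, `next` is empty. If you fetch a state from within a graph invocation, next tells which node will execute next)

The snapshot above contains the current state values, the corresponding config, and the next node to process. In our case, the graph has reached an END state, so next is empty.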

Congratulations! Your chatbot can now maintain conversation state across sessions thanks to LangGraph's checkpointing system. This opens up possibilities for more natural, context-aware interactions. LangGraph's checkpointing can also handle arbitrarily complex graph states, which makes it far more expressive and powerful than simple chat memory.

Check out the code snippet below to review the graph from this tutorial.

API reference: init_chat_model | TavilySearch | BaseMessage | MemorySaver | StateGraph | add_messages | ToolNode | tools_condition

    from typing import Annotated

    from langchain.chat_models import init_chat_model
    from langchain_tavily import TavilySearch
    from langchain_core.messages import BaseMessage
    from typing_extensions import TypedDict

    from langgraph.checkpoint.memory import MemorySaver
    from langgraph.graph import StateGraph
    from langgraph.graph.message import add_messages
    from langgraph.prebuilt import ToolNode, tools_condition

    class State(TypedDict):
        messages: Annotated[list, add_messages]

    graph_builder = StateGraph(State)

    # Model choice follows the earlier tutorial; substitute the model you configured
    llm = init_chat_model("anthropic:claude-3-5-sonnet-latest")

    tool = TavilySearch(max_results=2)
    tools = [tool]
    llm_with_tools = llm.bind_tools(tools)

    def chatbot(state: State):
        return {"messages": [llm_with_tools.invoke(state["messages"])]}

    graph_builder.add_node("chatbot", chatbot)

    tool_node = ToolNode(tools=[tool])
    graph_builder.add_node("tools", tool_node)

    graph_builder.add_conditional_edges(
        "chatbot",
        tools_condition,
    )
    graph_builder.add_edge("tools", "chatbot")
    graph_builder.set_entry_point("chatbot")
    memory = MemorySaver()
    graph = graph_builder.compile(checkpointer=memory)

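Next steps

In the next tutorial, you will add human-in-the-loop support to the chatbot to handle situations where it needs guidance or verification before proceeding.

Add human-in-the-loop controls

https://langchain-ai.github.io/langgraph/tutorials/get-started/4-human-in-the-loop/

Agents can be unreliable and may need human input to accomplish their tasks successfully.

Similarly, for some actions you may want to require human approval before running, to make sure everything works as intended.

LangGraph's persistence layer supports human-in-the-loop workflows, allowing execution to pause and resume based on user feedback.

The primary interface to this functionality is the interrupt function.

Calling interrupt inside a node pauses execution.

Execution can be resumed, together with new input from a human, by passing in a Command.

interrupt is ergonomically similar to Python's built-in input(), with some caveats.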
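The pause-and-resume idea can be pictured with a plain Python generator. This is only an analogy under stated assumptions: it is not how LangGraph implements interrupt, which relies on the persistence layer described above, and `human_assistance_steps` is a hypothetical name.

```python
def human_assistance_steps(query: str):
    """Generator that pauses at `yield` until a human reply is sent in."""
    human_response = yield {"query": query}  # pause: hand the question to the caller
    return human_response["data"]  # resume: continue with the reply


run = human_assistance_steps("How should I build an AI agent?")
pending = next(run)  # runs until the pause and returns the payload

try:
    run.send({"data": "Start with a small graph."})  # resume with human input
except StopIteration as finished:
    answer = finished.value  # the value returned after resuming
```

The caller receives the question while the function is paused, and the function's return value depends on what the human sends back.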

Note: this tutorial builds on Add memory.

1、添加 human_assistance 工具

基于为聊天机器人添加记忆教程中的现有代码,将human_assistance 工具 添加到聊天机器人中。

该工具通过interrupt机制从人类获取信息。

现在我们可以将它整合到我们的 StateGraph 中,并添加一个额外的工具:

from typing import Annotated

from langchain_tavily import TavilySearch
from langchain_core.tools import tool
from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.types import Command, interrupt


class State(TypedDict):
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)


@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human."""
    human_response = interrupt({"query": query})
    return human_response["data"]


tool = TavilySearch(max_results=2)
tools = [tool, human_assistance]
# `llm` is the chat model configured in the earlier tutorials.
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    message = llm_with_tools.invoke(state["messages"])
    # Because we will be interrupting during tool execution,
    # we disable parallel tool calling to avoid repeating any
    # tool invocations when we resume.
    assert len(message.tool_calls) <= 1
    return {"messages": [message]}


graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=tools)
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
graph_builder.add_edge("tools", "chatbot")
graph_builder.add_edge(START, "chatbot")

Tip: For more information and examples of human-in-the-loop workflows, see Human-in-the-loop. This includes how to review and edit tool calls before they are executed.
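
The conditional edge above relies on tools_condition to decide where the graph goes after the chatbot node: to the tools node when the latest AI message requests a tool, otherwise to the end of the graph. A minimal sketch of that decision in plain Python follows, assuming messages are simple dicts; `route_after_chatbot` is a hypothetical name, and the real prebuilt function works on LangChain message objects.

```python
END = "__end__"  # stand-in for langgraph.graph.END


def route_after_chatbot(state: dict) -> str:
    """Return the name of the next node, mimicking the routing decision."""
    last_message = state["messages"][-1]
    tool_calls = last_message.get("tool_calls", [])
    # Any pending tool call sends the run to the "tools" node.
    return "tools" if tool_calls else END


wants_help = {
    "messages": [
        {"role": "user", "content": "Could you request assistance for me?"},
        {
            "role": "assistant",
            "content": "",
            "tool_calls": [{"name": "human_assistance", "args": {"query": "..."}}],
        },
    ]
}
plain_answer = {"messages": [{"role": "assistant", "content": "Hello!"}]}

print(route_after_chatbot(wants_help))
print(route_after_chatbot(plain_answer))
```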

2. Compile the graph

As before, compile the graph with a checkpointer:

memory = MemorySaver()

graph = graph_builder.compile(checkpointer=memory)

3. Visualize the graph (optional)

Visualizing the graph shows the same layout as before, just with the added tool!

from IPython.display import Image, display

try:
    display(Image(graph.get_graph().draw_mermaid_png()))
except Exception:
    # This requires some extra dependencies and is optional
    pass

4. Prompt the chatbot

Now, prompt the chatbot with a question that will engage the new human_assistance tool:

user_input = "I need some expert guidance for building an AI agent. Could you request assistance for me?"
config = {"configurable": {"thread_id": "1"}}

events = graph.stream(
    {"messages": [{"role": "user", "content": user_input}]},
    config,
    stream_mode="values",
)
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================ Human Message =================================

I need some expert guidance for building an AI agent. Could you request assistance for me?

================================== Ai Message ==================================

[{'text': "Certainly! I'd be happy to request expert assistance for you regarding building an AI agent. To do this, I'll use the human_assistance function to relay your request. Let me do that for you now.", 'type': 'text'}, {'id': 'toolu_01ABUqneqnuHNuo1vhfDFQCW', 'input': {'query': 'A user is requesting expert guidance for building an AI agent. Could you please provide some expert advice or resources on this topic?'}, 'name': 'human_assistance', 'type': 'tool_use'}]
Tool Calls:
  human_assistance (toolu_01ABUqneqnuHNuo1vhfDFQCW)
 Call ID: toolu_01ABUqneqnuHNuo1vhfDFQCW
  Args:
    query: A user is requesting expert guidance for building an AI agent. Could you please provide some expert advice or resources on this topic?

The chatbot generated a tool call, but then execution was interrupted. If you inspect the graph state, you can see that it stopped at the tools node:

snapshot = graph.get_state(config)
snapshot.next

('tools',)

Info: Take a closer look at the human_assistance tool:

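@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human."""
    human_response = interrupt({"query": query})
    return human_response["data"]

Similar to Python's built-in input() function, calling interrupt inside the tool pauses execution.

Progress is persisted by the checkpointer, so if you persist with Postgres, execution can resume at any time as long as the database is alive.

In this example the in-memory checkpointer is used, so execution can resume at any time as long as the Python kernel is still running.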

5. Resume execution

To resume execution, pass a Command object containing the data expected by the tool.

The format of this data can be customized to your needs. This example uses a dict with a "data" key.

human_response = (
    "We, the experts are here to help! We'd recommend you check out LangGraph to build your agent."
    " It's much more reliable and extensible than simple autonomous agents."
)

human_command = Command(resume={"data": human_response})

events = graph.stream(human_command, config, stream_mode="values")
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================== Ai Message ==================================

[{'text': "Certainly! I'd be happy to request expert assistance for you regarding building an AI agent. To do this, I'll use the human_assistance function to relay your request. Let me do that for you now.", 'type': 'text'}, {'id': 'toolu_01ABUqneqnuHNuo1vhfDFQCW', 'input': {'query': 'A user is requesting expert guidance for building an AI agent. Could you please provide some expert advice or resources on this topic?'}, 'name': 'human_assistance', 'type': 'tool_use'}]
Tool Calls:
  human_assistance (toolu_01ABUqneqnuHNuo1vhfDFQCW)
 Call ID: toolu_01ABUqneqnuHNuo1vhfDFQCW
  Args:
    query: A user is requesting expert guidance for building an AI agent. Could you please provide some expert advice or resources on this topic?

================================= Tool Message =================================
Name: human_assistance

We, the experts are here to help! We'd recommend you check out LangGraph to build your agent. It's much more reliable and extensible than simple autonomous agents.

================================== Ai Message ==================================

Thank you for your patience. I've received some expert advice regarding your request for guidance on building an AI agent. Here's what the experts have suggested:

The experts recommend that you look into LangGraph for building your AI agent. They mention that LangGraph is a more reliable and extensible option compared to simple autonomous agents.

LangGraph is likely a framework or library designed specifically for creating AI agents with advanced capabilities. Here are a few points to consider based on this recommendation:

1. Reliability: The experts emphasize that LangGraph is more reliable than simpler autonomous agent approaches. This could mean it has better stability, error handling, or consistent performance.
2. Extensibility: LangGraph is described as more extensible, which suggests that it probably offers a flexible architecture that allows you to easily add new features or modify existing ones as your agent's requirements evolve.
3. Advanced capabilities: Given that it's recommended over "simple autonomous agents," LangGraph likely provides more sophisticated tools and techniques for building complex AI agents.

...

2. Look for tutorials or guides specifically focused on building AI agents with LangGraph.
3. Check if there are any community forums or discussion groups where you can ask questions and get support from other developers using LangGraph.

If you'd like more specific information about LangGraph or have any questions about this recommendation, please feel free to ask, and I can request further assistance from the experts.

The input has been received and processed as a tool message. Review this call's LangSmith trace to see the exact work that was done in the call above.

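Notice that the state is loaded in the first step, so the chatbot can continue the conversation from where it left off.

Congratulations! You have used interrupt to add human-in-the-loop execution to your chatbot, allowing for human oversight and intervention when needed.

This opens up more possibilities for the user interfaces you build on top of your AI systems. Because you have already added a checkpointer, the graph can be paused indefinitely and resumed at any time, as if nothing had happened, as long as the underlying persistence layer is running.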

API reference:

TavilySearch | tool | MemorySaver | StateGraph | START | END | add_messages | ToolNode | tools_condition | Command | interrupt

from typing import Annotated

from langchain_tavily import TavilySearch
from langchain_core.tools import tool
from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.types import Command, interrupt


class State(TypedDict):
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)


@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human."""
    human_response = interrupt({"query": query})
    return human_response["data"]


tool = TavilySearch(max_results=2)
tools = [tool, human_assistance]
# `llm` is the chat model configured in the earlier tutorials.
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    message = llm_with_tools.invoke(state["messages"])
    # At most one tool call per turn, so resuming never repeats a call.
    assert len(message.tool_calls) <= 1
    return {"messages": [message]}


graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=tools)
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
graph_builder.add_edge("tools", "chatbot")
graph_builder.add_edge(START, "chatbot")

memory = MemorySaver()
graph = graph_builder.compile(checkpointer=memory)

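Next steps

So far, the tutorial examples have relied on a simple state with a single entry: a list of messages. You can go far with this simple state, but if you want to define more complex behavior without relying on the message list, you can add additional fields to the state.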

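Customize state

In this tutorial, you will add additional fields to the state to define complex behavior without relying on the message list. The chatbot will use its search tool to find specific information and forward it to a human for review.

Note: This tutorial builds on Add human-in-the-loop controls.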

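1. Add keys to the state

Update the chatbot to research the birthday of an entity by adding name and birthday keys to the state:

API reference: add_messages

from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph.message import add_messages


class State(TypedDict):
    messages: Annotated[list, add_messages]
    name: str
    birthday: str

Adding this information to the state makes it easily accessible to other graph nodes (such as a downstream node that stores or processes the information) as well as to the graph's persistence layer.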
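
To make the difference between the annotated messages key and the plain name and birthday keys concrete, here is a minimal sketch of how an update could be merged into such a state: keys that declare a reducer combine old and new values, while the others are simply overwritten. This is an illustration under stated assumptions, not LangGraph's code; `append_messages` is a simplified stand-in for add_messages (which also handles message IDs), and `apply_update` is a hypothetical helper.

```python
from typing import Annotated, TypedDict, get_type_hints


def append_messages(existing: list, new: list) -> list:
    """Simplified stand-in for add_messages: append instead of overwrite."""
    return existing + new


class State(TypedDict):
    messages: Annotated[list, append_messages]
    name: str
    birthday: str


def apply_update(state: dict, update: dict) -> dict:
    """Merge `update` into `state`, using a key's reducer when it declares one."""
    hints = get_type_hints(State, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        # Annotated[...] types carry their extra arguments in __metadata__.
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and key in merged:
            merged[key] = metadata[0](merged[key], value)
        else:
            merged[key] = value
    return merged


state = {"messages": ["hi"], "name": "", "birthday": ""}
state = apply_update(state, {"messages": ["hello"], "name": "LangGraph"})
print(state)
```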

2. Update the state inside the tool

Now, populate the state keys inside the human_assistance tool. This allows a human to review the information before it is stored in the state.

Use Command to issue a state update from inside the tool.

from langchain_core.messages import ToolMessage
from langchain_core.tools import InjectedToolCallId, tool

from langgraph.types import Command, interrupt


@tool
# Note that because we are generating a ToolMessage for a state update, we
# generally require the ID of the corresponding tool call. We can use
# LangChain's InjectedToolCallId to signal that this argument should not
# be revealed to the model in the tool's schema.
def human_assistance(
    name: str, birthday: str, tool_call_id: Annotated[str, InjectedToolCallId]
) -> str:
    """Request assistance from a human."""
    human_response = interrupt(
        {
            "question": "Is this correct?",
            "name": name,
            "birthday": birthday,
        },
    )
    # If the information is correct, update the state as-is.
    if human_response.get("correct", "").lower().startswith("y"):
        verified_name = name
        verified_birthday = birthday
        response = "Correct"
    # Otherwise, receive information from the human reviewer.
    else:
        verified_name = human_response.get("name", name)
        verified_birthday = human_response.get("birthday", birthday)
        response = f"Made a correction: {human_response}"

    # This time we explicitly update the state with a ToolMessage inside
    # the tool.
    state_update = {
        "name": verified_name,
        "birthday": verified_birthday,
        "messages": [ToolMessage(response, tool_call_id=tool_call_id)],
    }
    # We return a Command object in the tool to update our state.
    return Command(update=state_update)

The rest of the graph stays the same.
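
The review branch inside the tool can be exercised on its own, without a model or a graph. The sketch below lifts that logic into a plain function so both outcomes are easy to see; `resolve_review` is a hypothetical helper written for this illustration and is not part of the tutorial's code.

```python
def resolve_review(name: str, birthday: str, human_response: dict) -> dict:
    """Return the verified values and the text for the tool message."""
    # An answer starting with "y" (any case) accepts the model's proposal.
    if human_response.get("correct", "").lower().startswith("y"):
        return {"name": name, "birthday": birthday, "response": "Correct"}
    # Otherwise take the reviewer's values, falling back to the proposal.
    return {
        "name": human_response.get("name", name),
        "birthday": human_response.get("birthday", birthday),
        "response": f"Made a correction: {human_response}",
    }


approved = resolve_review("LangGraph", "2023-01-01", {"correct": "Yes"})
corrected = resolve_review(
    "Assistant",
    "2023-01-01",
    {"name": "LangGraph", "birthday": "Jan 17, 2024"},
)
print(approved)
print(corrected)
```

Note that a reviewer who supplies only one field keeps the model's proposal for the other, because each lookup falls back to the original argument.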

3. Prompt the chatbot

Prompt the chatbot to look up the "birthday" of the LangGraph library, and direct it to call the human_assistance tool once it has the required information.

Setting name and birthday in the tool's arguments forces the chatbot to generate proposals for these fields.

user_input = (
    "Can you look up when LangGraph was released? "
    "When you have the answer, use the human_assistance tool for review."
)
config = {"configurable": {"thread_id": "1"}}

events = graph.stream(
    {"messages": [{"role": "user", "content": user_input}]},
    config,
    stream_mode="values",
)
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================ Human Message =================================

Can you look up when LangGraph was released? When you have the answer, use the human_assistance tool for review.

================================== Ai Message ==================================

[{'text': "Certainly! I'll start by searching for information about LangGraph's release date using the Tavily search function. Then, I'll use the human_assistance tool for review.", 'type': 'text'}, {'id': 'toolu_01JoXQPgTVJXiuma8xMVwqAi', 'input': {'query': 'LangGraph release date'}, 'name': 'tavily_search_results_json', 'type': 'tool_use'}]
Tool Calls:
  tavily_search_results_json (toolu_01JoXQPgTVJXiuma8xMVwqAi)
 Call ID: toolu_01JoXQPgTVJXiuma8xMVwqAi
  Args:
    query: LangGraph release date

================================= Tool Message =================================

Name: tavily_search_results_json[{"url": "https://blog.langchain.dev/langgraph-cloud/", "content": "We also have a new stable release of LangGraph. By LangChain 6 min read Jun 27, 2024 (Oct '24) Edit: Since the launch of LangGraph Platform, we now have multiple deployment options alongside LangGraph Studio - which now fall under LangGraph Platform. LangGraph Platform is synonymous with our Cloud SaaS deployment option."}, {"url": "https://changelog.langchain.com/announcements/langgraph-cloud-deploy-at-scale-monitor-carefully-iterate-boldly", "content": "LangChain - Changelog | ☁ 🚀 LangGraph Platform: Deploy at scale, monitor LangChain LangSmith LangGraph LangChain LangSmith LangGraph LangChain LangSmith LangGraph LangChain Changelog Sign up for our newsletter to stay up to date DATE: The LangChain Team LangGraph LangGraph Platform ☁ 🚀 LangGraph Platform: Deploy at scale, monitor carefully, iterate boldly DATE: June 27, 2024 AUTHOR: The LangChain Team LangGraph Platform is now in closed beta, offering scalable, fault-tolerant deployment for LangGraph agents. LangGraph Platform also includes a new playground-like studio for debugging agent failure modes and quick iteration: Join the waitlist today for LangGraph Platform. And to learn more, read our blog post announcement or check out our docs. Subscribe By clicking subscribe, you accept our privacy policy and terms and conditions."}]

================================== Ai Message ==================================

[{'text': "Based on the search results, it appears that LangGraph was already in existence before June 27, 2024, when LangGraph Platform was announced. However, the search results don't provide a specific release date for the original LangGraph. \n\nGiven this information, I'll use the human_assistance tool to review and potentially provide more accurate information about LangGraph's initial release date.", 'type': 'text'}, {'id': 'toolu_01JDQAV7nPqMkHHhNs3j3XoN', 'input': {'name': 'Assistant', 'birthday': '2023-01-01'}, 'name': 'human_assistance', 'type': 'tool_use'}]
Tool Calls:
  human_assistance (toolu_01JDQAV7nPqMkHHhNs3j3XoN)
 Call ID: toolu_01JDQAV7nPqMkHHhNs3j3XoN
  Args:
    name: Assistant
    birthday: 2023-01-01

Once again, execution has hit the interrupt in the human_assistance tool.

4. Add human assistance

The chatbot failed to identify the correct date, so supply it with the information:

human_command = Command(
    resume={
        "name": "LangGraph",
        "birthday": "Jan 17, 2024",
    },
)

events = graph.stream(human_command, config, stream_mode="values")
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================== Ai Message ==================================

[{'text': "Based on the search results, it appears that LangGraph was already in existence before June 27, 2024, when LangGraph Platform was announced. However, the search results don't provide a specific release date for the original LangGraph. \n\nGiven this information, I'll use the human_assistance tool to review and potentially provide more accurate information about LangGraph's initial release date.", 'type': 'text'}, {'id': 'toolu_01JDQAV7nPqMkHHhNs3j3XoN', 'input': {'name': 'Assistant', 'birthday': '2023-01-01'}, 'name': 'human_assistance', 'type': 'tool_use'}]
Tool Calls:
  human_assistance (toolu_01JDQAV7nPqMkHHhNs3j3XoN)
 Call ID: toolu_01JDQAV7nPqMkHHhNs3j3XoN
  Args:
    name: Assistant
    birthday: 2023-01-01

================================= Tool Message =================================
Name: human_assistance

Made a correction: {'name': 'LangGraph', 'birthday': 'Jan 17, 2024'}

================================== Ai Message ==================================

Thank you for the human assistance. I can now provide you with the correct information about LangGraph's release date.

LangGraph was initially released on January 17, 2024. This information comes from the human assistance correction, which is more accurate than the search results I initially found.

To summarize:

1. LangGraph's original release date: January 17, 2024
2. LangGraph Platform announcement: June 27, 2024

It's worth noting that LangGraph had been in development and use for some time before the LangGraph Platform announcement, but the official initial release of LangGraph itself was on January 17, 2024.

Note that these fields are now reflected in the state:

snapshot = graph.get_state(config)

{k: v for k, v in snapshot.values.items() if k in ("name", "birthday")}

{'name': 'LangGraph', 'birthday': 'Jan 17, 2024'}

This makes them easily accessible to downstream nodes (for example, a node that further processes or stores the information).

5. Manually update the state

LangGraph gives you a high degree of control over the application state. For instance, at any point (including when interrupted), you can manually override a key using graph.update_state:

graph.update_state(config, {"name": "LangGraph (library)"})

{'configurable': {'thread_id': '1',
  'checkpoint_ns': '',
  'checkpoint_id': '1efd4ec5-cf69-6352-8006-9278f1730162'}}
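
The returned config carries a fresh checkpoint_id, which suggests that a manual update is recorded as a new checkpoint rather than rewriting an old one. The following toy model in plain Python illustrates that idea under this assumption; `history`, `save_checkpoint`, and `update_state` here are hypothetical stand-ins and do not reproduce LangGraph's storage format.

```python
import uuid

history = []  # toy checkpoint log, oldest first


def save_checkpoint(values: dict) -> dict:
    """Append a snapshot and return a config that points at it."""
    checkpoint_id = str(uuid.uuid4())
    history.append({"checkpoint_id": checkpoint_id, "values": dict(values)})
    return {"configurable": {"thread_id": "1", "checkpoint_id": checkpoint_id}}


def update_state(update: dict) -> dict:
    """Overlay `update` on the latest values and save the result as a new checkpoint."""
    latest = history[-1]["values"]
    return save_checkpoint({**latest, **update})


before = save_checkpoint({"name": "LangGraph", "birthday": "Jan 17, 2024"})
after = update_state({"name": "LangGraph (library)"})

print(history[0]["values"])  # the earlier snapshot is untouched
print(history[1]["values"])  # the new snapshot carries the override
```

Keeping the earlier snapshot intact is what makes it possible to rewind to the state before a manual edit.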

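6. View the new value

If you call graph.get_state, you can see that the new value is reflected:

snapshot = graph.get_state(config)

{k: v for k, v in snapshot.values.items() if k in ("name", "birthday")}

{'name': 'LangGraph (library)', 'birthday': 'Jan 17, 2024'}

Manual state updates generate a trace in LangSmith. If desired, they can also be used to control human-in-the-loop workflows. Using the interrupt function is generally recommended instead, because it allows data to be transmitted in a human-in-the-loop interaction independently of state updates.

Congratulations! You have added custom keys to the state to support a more complex workflow, and learned how to generate state updates from inside tools.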

API reference: TavilySearch | ToolMessage | InjectedToolCallId | tool | MemorySaver | StateGraph | START | END | add_messages | ToolNode | tools_condition | Command | interrupt

from typing import Annotated

from langchain_tavily import TavilySearch
from langchain_core.messages import ToolMessage
from langchain_core.tools import InjectedToolCallId, tool
from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition
from langgraph.types import Command, interrupt


class State(TypedDict):
    messages: Annotated[list, add_messages]
    name: str
    birthday: str


@tool
def human_assistance(
    name: str, birthday: str, tool_call_id: Annotated[str, InjectedToolCallId]
) -> str:
    """Request assistance from a human."""
    human_response = interrupt(
        {
            "question": "Is this correct?",
            "name": name,
            "birthday": birthday,
        },
    )
    if human_response.get("correct", "").lower().startswith("y"):
        verified_name = name
        verified_birthday = birthday
        response = "Correct"
    else:
        verified_name = human_response.get("name", name)
        verified_birthday = human_response.get("birthday", birthday)
        response = f"Made a correction: {human_response}"

    state_update = {
        "name": verified_name,
        "birthday": verified_birthday,
        "messages": [ToolMessage(response, tool_call_id=tool_call_id)],
    }
    return Command(update=state_update)


tool = TavilySearch(max_results=2)
tools = [tool, human_assistance]
# `llm` is the chat model configured in the earlier tutorials.
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    message = llm_with_tools.invoke(state["messages"])
    # At most one tool call per turn, so resuming never repeats a call.
    assert len(message.tool_calls) <= 1
    return {"messages": [message]}


graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=tools)
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
graph_builder.add_edge("tools", "chatbot")
graph_builder.add_edge(START, "chatbot")

memory = MemorySaver()
graph = graph_builder.compile(checkpointer=memory)

Next steps

There is one more concept to review before finishing the LangGraph basics tutorials: connecting checkpointing and state updates to time travel.

Time travel

https://langchain-ai.github.io/langgraph/tutorials/get-started/6-time-travel/

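In a typical chatbot workflow, the user interacts with the bot one or more times to accomplish a task. Memory and human-in-the-loop create checkpoints in the graph state and control future responses.

What if you want a user to be able to start from a previous response and explore a different outcome? Or what if you want users to be able to rewind the chatbot's work to fix a mistake or try a different strategy, something that is common in applications such as autonomous software engineers?

You can build these kinds of experiences with LangGraph's built-in time travel functionality.

Note: This tutorial builds on Customize state.

1. Rewind your graph

Rewind your graph by fetching a checkpoint with the graph's get_state_history method. You can then resume execution from that earlier point in time.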

API reference: TavilySearch | BaseMessage | MemorySaver | StateGraph | START | END | add_messages | ToolNode | tools_condition

from typing import Annotated

from langchain_tavily import TavilySearch
from langchain_core.messages import BaseMessage
from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition


class State(TypedDict):
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)

tool = TavilySearch(max_results=2)
tools = [tool]
# `llm` is the chat model configured in the earlier tutorials.
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=[tool])
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
graph_builder.add_edge("tools", "chatbot")
graph_builder.add_edge(START, "chatbot")

memory = MemorySaver()
graph = graph_builder.compile(checkpointer=memory)

2. Add steps

Add steps to your graph. Every step is checkpointed in the state history.

config = {"configurable": {"thread_id": "1"}}
events = graph.stream(
    {
        "messages": [
            {
                "role": "user",
                "content": (
                    "I'm learning LangGraph. "
                    "Could you do some research on it for me?"
                ),
            },
        ],
    },
    config,
    stream_mode="values",
)
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================ Human Message =================================

I'm learning LangGraph. Could you do some research on it for me?

================================== Ai Message ==================================

[{'text': "Certainly! I'd be happy to research LangGraph for you. To get the most up-to-date and accurate information, I'll use the Tavily search engine to look this up. Let me do that for you now.", 'type': 'text'}, {'id': 'toolu_01BscbfJJB9EWJFqGrN6E54e', 'input': {'query': 'LangGraph latest information and features'}, 'name': 'tavily_search_results_json', 'type': 'tool_use'}]
Tool Calls:
  tavily_search_results_json (toolu_01BscbfJJB9EWJFqGrN6E54e)
 Call ID: toolu_01BscbfJJB9EWJFqGrN6E54e
  Args:
    query: LangGraph latest information and features

================================= Tool Message =================================

Name: tavily_search_results_json[{"url": "https://blockchain.news/news/langchain-new-features-upcoming-events-update", "content": "LangChain, a leading platform in the AI development space, has released its latest updates, showcasing new use cases and enhancements across its ecosystem. According to the LangChain Blog, the updates cover advancements in LangGraph Platform, LangSmith's self-improving evaluators, and revamped documentation for LangGraph."}, {"url": "https://blog.langchain.dev/langgraph-platform-announce/", "content": "With these learnings under our belt, we decided to couple some of our latest offerings under LangGraph Platform. LangGraph Platform today includes LangGraph Server, LangGraph Studio, plus the CLI and SDK. ... we added features in LangGraph Server to deliver on a few key value areas. Below, we'll focus on these aspects of LangGraph Platform."}]

================================== Ai Message ==================================

Thank you for your patience. I've found some recent information about LangGraph for you. Let me summarize the key points:

1. LangGraph is part of the LangChain ecosystem, which is a leading platform in AI development.
2. Recent updates and features of LangGraph include:
   a. LangGraph Platform: This seems to be a cloud-based version of LangGraph, though specific details weren't provided in the search results.

...

3. Keep an eye on LangGraph Platform developments, as cloud-based solutions often provide an easier starting point for learners.
4. Consider how LangGraph fits into the broader LangChain ecosystem, especially its interaction with tools like LangSmith.

Is there any specific aspect of LangGraph you'd like to know more about? I'd be happy to do a more focused search on particular features or use cases.

events = graph.stream(
    {
        "messages": [
            {
                "role": "user",
                "content": (
                    "Ya that's helpful. Maybe I'll "
                    "build an autonomous agent with it!"
                ),
            },
        ],
    },
    config,
    stream_mode="values",
)
for event in events:
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================ Human Message =================================

Ya that's helpful. Maybe I'll build an autonomous agent with it!

================================== Ai Message ==================================

[{'text': "That's an exciting idea! Building an autonomous agent with LangGraph is indeed a great application of this technology. LangGraph is particularly well-suited for creating complex, multi-step AI workflows, which is perfect for autonomous agents. Let me gather some more specific information about using LangGraph for building autonomous agents.", 'type': 'text'}, {'id': 'toolu_01QWNHhUaeeWcGXvA4eHT7Zo', 'input': {'query': 'Building autonomous agents with LangGraph examples and tutorials'}, 'name': 'tavily_search_results_json', 'type': 'tool_use'}]
Tool Calls:
  tavily_search_results_json (toolu_01QWNHhUaeeWcGXvA4eHT7Zo)
 Call ID: toolu_01QWNHhUaeeWcGXvA4eHT7Zo
  Args:
    query: Building autonomous agents with LangGraph examples and tutorials

================================= Tool Message =================================

Name: tavily_search_results_json[{"url": "https://towardsdatascience.com/building-autonomous-multi-tool-agents-with-gemini-2-0-and-langgraph-ad3d7bd5e79d", "content": "Building Autonomous Multi-Tool Agents with Gemini 2.0 and LangGraph | by Youness Mansar | Jan, 2025 | Towards Data Science Building Autonomous Multi-Tool Agents with Gemini 2.0 and LangGraph A practical tutorial with full code examples for building and running multi-tool agents Towards Data Science LLMs are remarkable — they can memorize vast amounts of information, answer general knowledge questions, write code, generate stories, and even fix your grammar. In this tutorial, we are going to build a simple LLM agent that is equipped with four tools that it can use to answer a user’s question. This Agent will have the following specifications: Follow Published in Towards Data Science --------------------------------- Your home for data science and AI. Follow Follow Follow"}, {"url": "https://github.com/anmolaman20/Tools_and_Agents", "content": "GitHub - anmolaman20/Tools_and_Agents: This repository provides resources for building AI agents using Langchain and Langgraph. This repository provides resources for building AI agents using Langchain and Langgraph. This repository provides resources for building AI agents using Langchain and Langgraph. This repository serves as a comprehensive guide for building AI-powered agents using Langchain and Langgraph. It provides hands-on examples, practical tutorials, and resources for developers and AI enthusiasts to master building intelligent systems and workflows. AI Agent Development: Gain insights into creating intelligent systems that think, reason, and adapt in real time. This repository is ideal for AI practitioners, developers exploring language models, or anyone interested in building intelligent systems. This repository provides resources for building AI agents using Langchain and Langgraph."}]

================================== Ai Message ==================================

Great idea! Building an autonomous agent with LangGraph is definitely an exciting project. Based on the latest information I've found, here are some insights and tips for building autonomous agents with LangGraph:

1. Multi-Tool Agents: LangGraph is particularly well-suited for creating autonomous agents that can use multiple tools. This allows your agent to have a diverse set of capabilities and choose the right tool for each task.
2. Integration with Large Language Models (LLMs): You can combine LangGraph with powerful LLMs like Gemini 2.0 to create more intelligent and capable agents. The LLM can serve as the "brain" of your agent, making decisions and generating responses.
3. Workflow Management: LangGraph excels at managing complex, multi-step AI workflows. This is crucial for autonomous agents that need to break down tasks into smaller steps and execute them in the right order.

...

6. Pay attention to how you structure the agent's decision-making process and workflow.
7. Don't forget to implement proper error handling and safety measures, especially if your agent will be interacting with external systems or making important decisions.

Building an autonomous agent is an iterative process, so be prepared to refine and improve your agent over time. Good luck with your project! If you need any more specific information as you progress, feel free to ask.

3. Replay the full state history

Now that you have added steps to the chatbot, you can replay the full state history to see everything that occurred.

to_replay = None
for state in graph.get_state_history(config):
    print("Num Messages: ", len(state.values["messages"]), "Next: ", state.next)
    print("-" * 80)
    if len(state.values["messages"]) == 6:
        # We are somewhat arbitrarily selecting a specific state based on the number of chat messages in the state.
        to_replay = state

Num Messages: 8 Next: ()

--------------------------------------------------------------------------------

Num Messages: 7 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 6 Next: ('tools',)

--------------------------------------------------------------------------------

Num Messages: 5 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 4 Next: ('__start__',)

--------------------------------------------------------------------------------

Num Messages: 4 Next: ()

--------------------------------------------------------------------------------

Num Messages: 3 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 2 Next: ('tools',)

--------------------------------------------------------------------------------

Num Messages: 1 Next: ('chatbot',)

--------------------------------------------------------------------------------

Num Messages: 0 Next: ('__start__',)

--------------------------------------------------------------------------------

Checkpoints are saved for every step of the graph. This spans invocations, so you can rewind across the full history of a thread.
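
The listing above contains two checkpoints with four messages, so a message count alone does not always identify a single state. A minimal sketch of a stricter selection follows, filtering on both the message count and the next node; `Snapshot` is a toy stand-in that mirrors only the values, next, and config fields used in this tutorial, and `pick_checkpoint` is a hypothetical helper.

```python
from typing import NamedTuple, Optional


class Snapshot(NamedTuple):
    values: dict
    next: tuple
    config: dict


def pick_checkpoint(history, message_count: int, next_node: str) -> Optional[Snapshot]:
    """Return the first snapshot with that many messages about to run `next_node`."""
    for snapshot in history:
        if len(snapshot.values["messages"]) == message_count and next_node in snapshot.next:
            return snapshot
    return None


# Toy history shaped like the printed listing, newest first.
shape = [
    (8, ()), (7, ("chatbot",)), (6, ("tools",)), (5, ("chatbot",)),
    (4, ("__start__",)), (4, ()), (3, ("chatbot",)), (2, ("tools",)),
    (1, ("chatbot",)), (0, ("__start__",)),
]
history = [
    Snapshot(
        values={"messages": ["m"] * count},
        next=next_nodes,
        config={"configurable": {"thread_id": "1", "checkpoint_id": f"toy-{index}"}},
    )
    for index, (count, next_nodes) in enumerate(shape)
]

to_replay = pick_checkpoint(history, 6, "tools")
second_turn_start = pick_checkpoint(history, 4, "__start__")
print(to_replay.next, to_replay.config)
print(second_turn_start.config)
```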

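Resume from a checkpoint

Resume from the to_replay state, which comes right after the chatbot node in the second graph invocation. Resuming from this point calls the tools node next, as to_replay.next shows.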

print(to_replay.next)

print(to_replay.config)

('tools',)

{'configurable': {'thread_id': '1', 'checkpoint_ns': '', 'checkpoint_id': '1efd43e3-0c1f-6c4e-8006-891877d65740'}}

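4. Load a state from a moment in time

The checkpoint's to_replay.config contains a checkpoint_id timestamp. Providing this checkpoint_id value tells LangGraph's checkpointer to load the state from that moment in time.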
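
The sample checkpoint_id printed earlier has the layout of a version-6 (time-ordered) UUID, which would explain why it can act as a timestamp. Assuming that layout holds, the sketch below decodes the embedded time with the standard library only; `uuid6_timestamp` is a hypothetical helper, and nothing here is required to use time travel.

```python
import uuid
from datetime import datetime, timedelta, timezone

# Sample value copied from the to_replay.config output in this tutorial.
checkpoint_id = "1efd43e3-0c1f-6c4e-8006-891877d65740"
parsed = uuid.UUID(checkpoint_id)


def uuid6_timestamp(value: uuid.UUID) -> datetime:
    """Decode the 60-bit timestamp of a version-6 UUID (100 ns ticks since 1582-10-15)."""
    time_high = value.int >> 96            # top 32 bits
    time_mid = (value.int >> 80) & 0xFFFF  # next 16 bits
    time_low = (value.int >> 64) & 0x0FFF  # 12 bits after the version nibble
    ticks = (time_high << 28) | (time_mid << 12) | time_low
    gregorian_epoch = datetime(1582, 10, 15, tzinfo=timezone.utc)
    return gregorian_epoch + timedelta(microseconds=ticks // 10)


print(parsed.version)
print(uuid6_timestamp(parsed).isoformat())
```

Because the most significant bits hold the time, sorting such IDs as strings also sorts them chronologically.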

# The `checkpoint_id` in the `to_replay.config` corresponds to a state we've persisted to our checkpointer.
for event in graph.stream(None, to_replay.config, stream_mode="values"):
    if "messages" in event:
        event["messages"][-1].pretty_print()

================================== Ai Message ==================================

[{'text': "That's an exciting idea! Building an autonomous agent with LangGraph is indeed a great application of this technology. LangGraph is particularly well-suited for creating complex, multi-step AI workflows, which is perfect for autonomous agents. Let me gather some more specific information about using LangGraph for building autonomous agents.", 'type': 'text'}, {'id': 'toolu_01QWNHhUaeeWcGXvA4eHT7Zo', 'input': {'query': 'Building autonomous agents with LangGraph examples and tutorials'}, 'name': 'tavily_search_results_json', 'type': 'tool_use'}]
Tool Calls:
  tavily_search_results_json (toolu_01QWNHhUaeeWcGXvA4eHT7Zo)
 Call ID: toolu_01QWNHhUaeeWcGXvA4eHT7Zo
  Args:
    query: Building autonomous agents with LangGraph examples and tutorials

================================= Tool Message =================================

Name: tavily_search_results_json[{"url": "https://towardsdatascience.com/building-autonomous-multi-tool-agents-with-gemini-2-0-and-langgraph-ad3d7bd5e79d", "content": "Building Autonomous Multi-Tool Agents with Gemini 2.0 and LangGraph | by Youness Mansar | Jan, 2025 | Towards Data Science Building Autonomous Multi-Tool Agents with Gemini 2.0 and LangGraph A practical tutorial with full code examples for building and running multi-tool agents Towards Data Science LLMs are remarkable — they can memorize vast amounts of information, answer general knowledge questions, write code, generate stories, and even fix your grammar. In this tutorial, we are going to build a simple LLM agent that is equipped with four tools that it can use to answer a user’s question. This Agent will have the following specifications: Follow Published in Towards Data Science --------------------------------- Your home for data science and AI. Follow Follow Follow"}, {"url": "https://github.com/anmolaman20/Tools_and_Agents", "content": "GitHub - anmolaman20/Tools_and_Agents: This repository provides resources for building AI agents using Langchain and Langgraph. This repository provides resources for building AI agents using Langchain and Langgraph. This repository provides resources for building AI agents using Langchain and Langgraph. This repository serves as a comprehensive guide for building AI-powered agents using Langchain and Langgraph. It provides hands-on examples, practical tutorials, and resources for developers and AI enthusiasts to master building intelligent systems and workflows. AI Agent Development: Gain insights into creating intelligent systems that think, reason, and adapt in real time. This repository is ideal for AI practitioners, developers exploring language models, or anyone interested in building intelligent systems. This repository provides resources for building AI agents using Langchain and Langgraph."}]

================================== Ai Message ==================================

Great idea! Building an autonomous agent with LangGraph is indeed an excellent way to apply and deepen your understanding of the technology. Based on the search results, I can provide you with some insights and resources to help you get started:

1. Multi-Tool Agents: LangGraph is well-suited for building autonomous agents that can use multiple tools. This allows your agent to have a variety of capabilities and choose the appropriate tool based on the task at hand.
2. Integration with Large Language Models (LLMs): There's a tutorial that specifically mentions using Gemini 2.0 (Google's LLM) with LangGraph to build autonomous agents. This suggests that LangGraph can be integrated with various LLMs, giving you flexibility in choosing the language model that best fits your needs.
3. Practical Tutorials: There are tutorials available that provide full code examples for building and running multi-tool agents. These can be invaluable as you start your project, giving you a concrete starting point and demonstrating best practices.

...

Remember, building an autonomous agent is an iterative process. Start simple and gradually increase complexity as you become more comfortable with LangGraph and its capabilities.

Would you like more information on any specific aspect of building your autonomous agent with LangGraph?

The graph resumed execution from the tools node. You can tell because the first new message printed above is the response from the search engine tool.

Congratulations! You have now used time-travel checkpoint traversal in LangGraph. Being able to rewind and explore alternative paths is useful for debugging, experimentation, and interactive applications.

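Learn more

Take your LangGraph journey further by exploring deployment and advanced features:

- LangGraph Server quickstart: Launch a LangGraph server locally and interact with it using the REST API and the LangGraph Studio web UI.

- LangGraph Platform quickstart: Deploy your LangGraph app using LangGraph Platform.

- LangGraph Platform concepts: Understand the foundational concepts of LangGraph Platform.

Deployment guide 🚀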

https://langchain-ai.github.io/langgraph/tutorials/deployment/

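There are two free options for deploying LangGraph applications via the LangGraph Server:

- Local: For local testing and development.

- Standalone Container (Lite): Suitable for workloads of up to 1 million node executions per year that do not need cron jobs or other enterprise features. This version is free but requires a LangSmith API key.

Other deployment options

You can also deploy to production with LangGraph Platform:

- Cloud SaaS: Connect your GitHub repositories and deploy LangGraph Servers within LangChain's cloud. We take care of all operations.

- Self-Hosted Data Plane (Beta): Create deployments from the Control Plane UI and deploy LangGraph Servers to your cloud. We manage the control plane; you manage the deployments.

- Self-Hosted Control Plane (Beta): Create deployments from a self-hosted Control Plane UI and deploy LangGraph Servers to your cloud. You take care of all operations.

- Standalone Container: Deploy LangGraph Server Docker images however you like.

For more information, see Deployment options.

2025-05-16 (Fri)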