Ingress and Kubernetes Data Storage: Concepts and Hands-on Lab Examples

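Ingress is the entry point for accessing web services in k8s. It sits in front of Services and can manage access to multiple Services.

Ingress handles Layer 7 load balancing, routing external HTTP/HTTPS requests to services inside the cluster. Its rules can match on host name and path, so external requests can be sent to different services.

At its core, Ingress is a reverse proxy server; a common implementation is Ingress-Nginx.

It forwards traffic to different Services based on the host name or the path.

Ingress works at Layer 7 (the application layer), while a Service works at Layer 4 (the transport layer, TCP/UDP). For reference, the OSI layers from top to bottom are: application, presentation, session, transport, network, data link, physical.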
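The host-or-path routing described above can be sketched with a path-based "fanout" Ingress. In this example, one host sends two URL prefixes to two Services. It is a minimal sketch with the following assumptions: the ingress-nginx controller is installed, and the `nginx-service` and `tomcat-service` Services created later in this walkthrough exist in namespace `dev`. The host `demo.test.com` and the Ingress name are hypothetical. The `use-regex` and `rewrite-target` annotations are specific to ingress-nginx. They strip the `/nginx` or `/tomcat` prefix, so each backend receives the path it expects.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-fanout            # hypothetical name
  namespace: dev
  annotations:
    # ingress-nginx specific: treat paths as regexes and forward capture group 2
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
  - host: demo.test.com           # hypothetical host name
    http:
      paths:
      # demo.test.com/nginx/...  -> nginx-service:80 (prefix stripped)
      - path: /nginx(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: nginx-service
            port:
              number: 80
      # demo.test.com/tomcat/... -> tomcat-service:8080 (prefix stripped)
      - path: /tomcat(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: tomcat-service
            port:
              number: 8080
```

Without the rewrite, the backends would receive `/nginx/...` and `/tomcat/...` unchanged. The default Nginx site would likely answer those paths with 404.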

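Setting up and using Ingress

Ingress: an object in Kubernetes that defines the rules for how requests are forwarded to Services.

Ingress controller: the program that actually performs reverse proxying and load balancing. It parses the rules defined in Ingress objects and forwards requests according to them. There are many implementations, such as Nginx, Contour, and HAProxy, and the controller must be installed before it can be used.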
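An Ingress is tied to a controller through an IngressClass. The manifest below mirrors the `nginx` IngressClass that ships with ingress-nginx (controller `k8s.io/ingress-nginx`, as in the manifests that follow). The `is-default-class` annotation is an addition not present in this document's manifests. It makes new Ingresses that omit `ingressClassName` fall back to this class. This is a sketch that assumes a single controller in the cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                     # referenced by spec.ingressClassName in an Ingress
  annotations:
    # Optional: Ingresses created without ingressClassName are assigned this class
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  # Must match the controller's --controller-class flag
  controller: k8s.io/ingress-nginx
```

If an Ingress names a class that no installed controller handles, no controller serves its rules. This matches the later warning that a wrong `ingressClassName` leads to 404 responses.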

Installing the Ingress controller

Method 1: download the matching YAML file from the project site

# Deploy Ingress-Nginx

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml

# Change the image addresses to a mirror reachable from mainland China; otherwise the image pulls will fail

vim deploy.yaml

# At line 439

change image to registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.6.3

# On the classroom LAN, use: 192.168.57.200:8099/ingress-nginx/controller:v1.6.3

# At lines 536 and 585

replace registry.k8s.io/ingress-nginx/ with registry.aliyuncs.com/google_containers/

# On the classroom LAN, you can use: 192.168.57.200:8099/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343

Method 2: write the YAML file yourself

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-nginx-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  externalTrafficPolicy: Local
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      containers:
      - args:
        - /nginx-ingress-controller
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
        - --election-id=ingress-nginx-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: 192.168.57.200:8099/ingress-nginx/controller:v1.6.3
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.6.3
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: 192.168.57.200:8099/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.6.3
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: 192.168.57.200:8099/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.6.3
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None
```

# Apply the YAML file

kubectl apply -f deploy.yaml

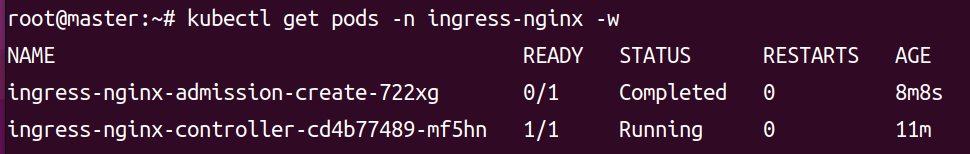

kubectl get pod -n ingress-nginx

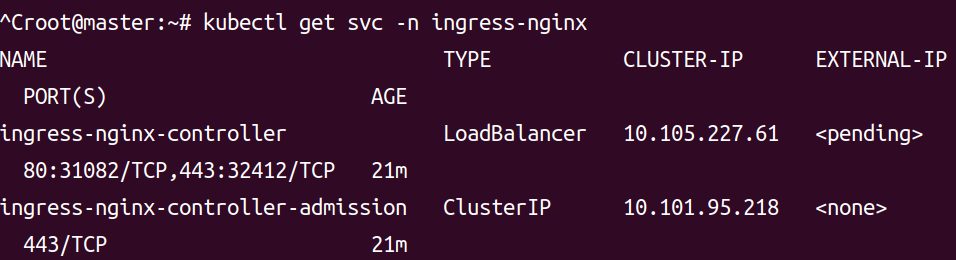

kubectl get svc -n ingress-nginx

# Create the Nginx and Tomcat Deployments and their Services in a single file

vim tomcat-nginx.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: 192.168.57.200:8099/library/nginx:1.21
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-pod
  template:
    metadata:
      labels:
        app: tomcat-pod
    spec:
      containers:
      - name: tomcat
        image: 192.168.57.200:8099/library/tomcat:8
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
spec:
  selector:
    app: tomcat-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 8080
    targetPort: 8080
```

查看创建结果

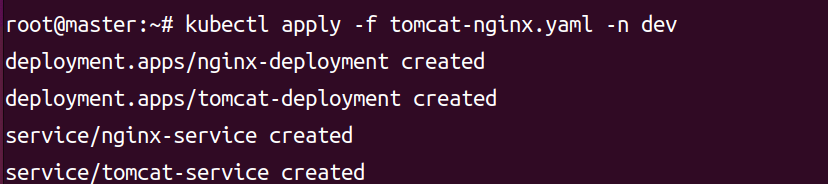

# 1.创建nginx-deployment和tomcat-deployment并创建命名空间

kubectl apply -f tomcat-nginx.yaml -n dev

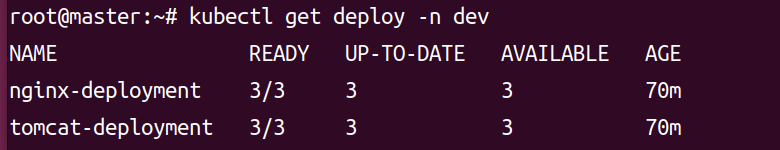

# 2.查看 deploy

kubectl get deploy -n dev

# 3.查看pod

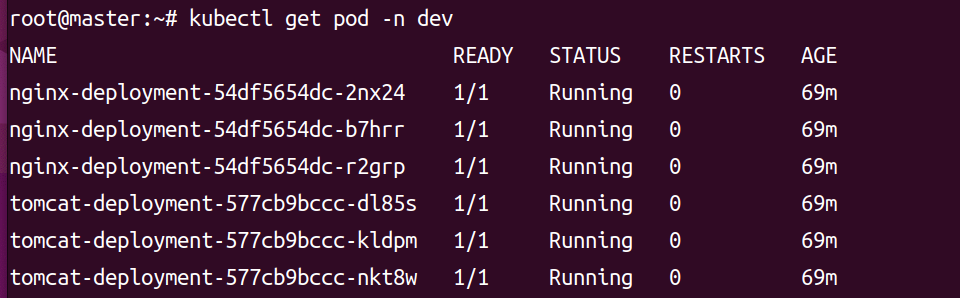

kubectl get pod -n dev

# 4.查看svc

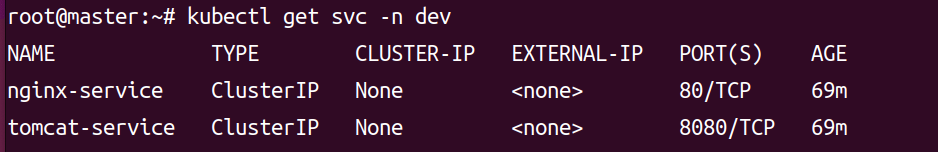

kubectl get svc -n dev

# HTTP proxy

vim ingress-http.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-http

spec:

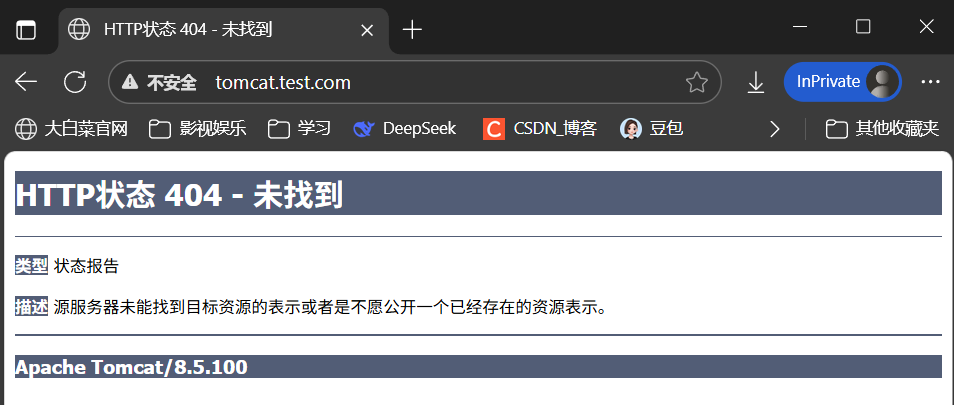

# 这个很关键,如果写错会导致访问404

ingressClassName: nginx

rules:

- host: nginx.test.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx-service

port:

number: 80

- host: tomcat.test.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: tomcat-service

port:

number: 8080

# 应用Ingress资源

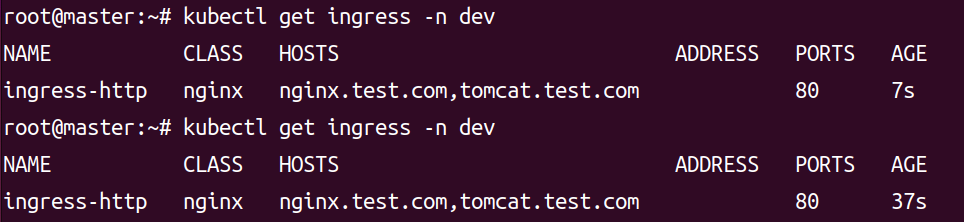

kubectl apply -f ingress-http.yaml -n dev

# 检查 Ingress 状态

kubectl get ingress -n dev

# 获取 Ingress Controller 的访问地址

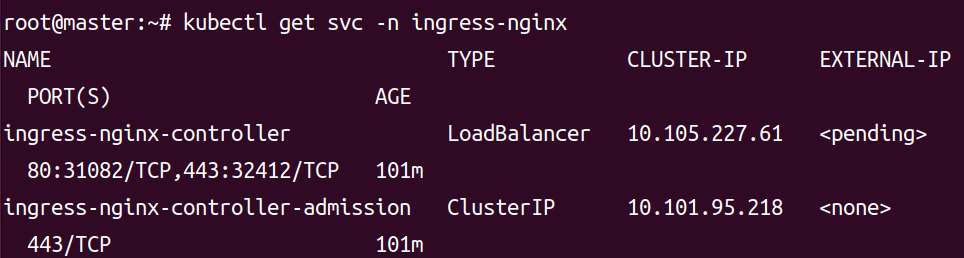

kubectl get svc -n ingress-nginx

On your local machine, add the two host names to C:\Windows\System32\drivers\etc\hosts (replace the IP with your own):

192.168.221.11 nginx.test.com

192.168.221.11 tomcat.test.com

# Open the web page via nginx.test.com


# Open the web page via tomcat.test.com
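Ingress also terminates HTTPS, as mentioned at the start. Below is a sketch of the same `ingress-http` with a `tls` section added for `nginx.test.com`. It assumes you already have a certificate and key (`tls.crt`, `tls.key`) and store them in a hypothetical Secret named `nginx-test-tls` in namespace `dev`. Reusing the name `ingress-http` means `kubectl apply -f ... -n dev` updates the existing object instead of creating a second Ingress for the same host and path, which the ingress-nginx admission webhook may reject. With a self-signed certificate, browsers will show a warning.

```yaml
# Create the TLS Secret first (certificate files assumed to exist locally):
#   kubectl create secret tls nginx-test-tls --cert=tls.crt --key=tls.key -n dev
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-http              # same name: apply updates the existing Ingress
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - nginx.test.com              # HTTPS only for this host
    secretName: nginx-test-tls    # hypothetical Secret holding tls.crt / tls.key
  rules:
  - host: nginx.test.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-service
            port:
              number: 80
  - host: tomcat.test.com         # unchanged, still plain HTTP
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat-service
            port:
              number: 8080
```

By default, ingress-nginx then redirects plain HTTP requests for a TLS-enabled host to HTTPS.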

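Kubernetes Data Storage

Ephemeral storage

emptyDir: shares data between the containers in the same Pod. It has the same lifecycle as the Pod: it is created when the Pod is created and destroyed when the Pod is deleted;

configMap (configuration map): stores configuration files, e.g. my.cnf, redis.conf, nginx.conf;

secret: stores sensitive data, e.g. passwords, private keys, certificates;

downwardAPI: exposes Pod and container metadata to the container, e.g. name, ID, ...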
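To make the downwardAPI entry concrete, here is a minimal sketch of a Pod that projects its own name and labels into files. The Pod name `downward-api-demo`, the label `app: demo`, and the mount path `/etc/podinfo` are hypothetical. `fieldRef` with `metadata.name` and `metadata.labels` is the standard downwardAPI volume syntax.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: downward-api-demo         # hypothetical name
  labels:
    app: demo                     # hypothetical label, shows up in /etc/podinfo/labels
spec:
  containers:
  - name: app
    image: busybox
    # Print the projected files, then stay alive so you can exec in
    command: ["sh", "-c", "cat /etc/podinfo/name; echo; cat /etc/podinfo/labels; sleep 3600"]
    volumeMounts:
    - name: podinfo
      mountPath: /etc/podinfo
  volumes:
  - name: podinfo
    downwardAPI:
      items:
      - path: "name"              # file /etc/podinfo/name
        fieldRef:
          fieldPath: metadata.name
      - path: "labels"            # file /etc/podinfo/labels
        fieldRef:
          fieldPath: metadata.labels
```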

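Persistent storage: stores data on disk;

hostPath: lets a container in a Pod mount a directory on the Node it is running on directly (the data stays on that Node, so a Pod rescheduled to another Node will not see it);

PV (PersistentVolume): a persistent volume that maps to a storage medium (backend storage), roughly equivalent to a "hard disk"; each PV has access modes and a capacity;

PVC (PersistentVolumeClaim): the persistent storage request used by a Pod. Kubernetes binds the PVC to a PV that satisfies the request (capacity, access mode, StorageClass), and the Pod then mounts that storage at a directory in the container;

StorageClass: creates PVs automatically according to PVC requests (dynamic provisioning), which makes storage more convenient to use;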
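The StorageClass entry can be sketched as dynamic provisioning. A PVC names a class, and the class's provisioner creates a matching PV on demand. This assumes the nfs-subdir-external-provisioner is already installed (its default provisioner name is used below). The class name `nfs-client` and the claim name `data-pvc` are hypothetical.

```yaml
# Assumes nfs-subdir-external-provisioner is already running in the cluster
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client                # hypothetical class name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
reclaimPolicy: Delete             # delete the provisioned PV when the PVC is deleted
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-pvc                  # hypothetical claim name
spec:
  storageClassName: nfs-client    # ask this class to create a matching PV
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

After `kubectl apply`, `kubectl get pvc data-pvc` should eventually show `Bound`, with a PV created automatically for it.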

Storage backends:

NFS

Ceph

MinIO

...
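With NFS as the backend, static provisioning looks roughly like this. An administrator creates a PV pointing at an NFS export, a PVC claims it, and a Pod mounts the PVC. The NFS server address `192.168.221.100`, the export path `/data/nfs`, and all object names are hypothetical. Every node also needs an NFS client installed.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv                    # hypothetical name
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the data when the claim is deleted
  storageClassName: ""            # static PV, no StorageClass
  nfs:
    server: 192.168.221.100       # hypothetical NFS server address
    path: /data/nfs               # hypothetical exported directory
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc                   # hypothetical name
spec:
  storageClassName: ""            # bind only to PVs without a class
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 2Gi                # any RWX PV with at least 2Gi can satisfy this
---
apiVersion: v1
kind: Pod
metadata:
  name: nfs-pod                   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.21             # any image works; classroom registry image can be used
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    persistentVolumeClaim:
      claimName: nfs-pvc
```

A PVC binds a whole PV, so this 2Gi claim occupies the entire 5Gi PV.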

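Separation of storage and compute:

Separate storage from compute and keep data in centralized storage, which makes it easier to manage;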

emptyDir

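Case 1: sharing temporary data between containers

Suppose we have a web application Pod with two containers: one generates a temporary report, and the other needs to read and process that report. An emptyDir volume lets the two containers share the data.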

```yaml
# vim 01-emptyDir.yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-data-pod
spec:
  containers:
  - name: report-generator
    image: busybox
    # Writes the string '临时报告内容' ("temporary report content") to the shared volume
    command: ["sh", "-c", "echo '临时报告内容' > /data/report.txt; sleep 3600"]
    volumeMounts:
    - name: shared-volume
      mountPath: /data
  - name: report-processor
    image: busybox
    # Waits until report.txt exists, prints it once, then stays alive
    command: ["sh", "-c", "while true; do if [ -f /data/report.txt ]; then cat /data/report.txt; break; fi; sleep 1; done; sleep 3600"]
    volumeMounts:
    - name: shared-volume
      mountPath: /data
  volumes:
  - name: shared-volume
    emptyDir: {}
```

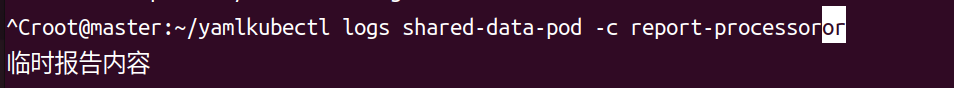

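After deployment, the report-generator container writes report.txt into the emptyDir, and the report-processor container reads and prints the file, which demonstrates data sharing between containers.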

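After creating a Pod that uses an emptyDir volume, you can verify and use it as follows:

1. Deploy the Pod and verify data sharing (using the "sharing temporary data between containers" case)

Create the Pod:

kubectl apply -f 01-emptyDir.yaml

Then check the logs of the report-processor container to confirm that it read the shared data:

kubectl logs shared-data-pod -c report-processor

The expected output is 临时报告内容 ("temporary report content"), which confirms that the containers share data.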

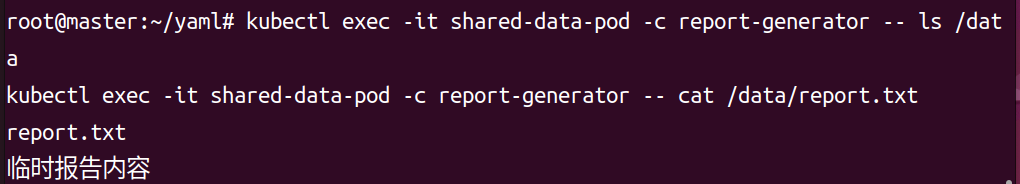

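2. Exec into the Pod to inspect the emptyDir volume

You can exec into either container in the Pod and view the contents of the emptyDir mount directory:

kubectl exec -it shared-data-pod -c report-generator -- ls /data

kubectl exec -it shared-data-pod -c report-generator -- cat /data/report.txt

You will see report.txt and its contents, which confirms that the emptyDir volume stores data.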

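3. Test the emptyDir lifecycle (optional)

When the Pod is deleted, the data in its emptyDir is permanently deleted:

kubectl delete pod shared-data-pod

When you re-create the Pod, it gets a brand-new, empty emptyDir. Any report.txt you then see was written again by the new report-generator container, and changes made to the old file are gone. A container restart alone does not clear an emptyDir; only removing the Pod does.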

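4. Extended scenarios (caching, log collection)

For caching or log-collection scenarios, verify that data is produced, read, or forwarded according to your application's logic. For example, in the caching case you can check whether the application writes temporary files to the /app/cache directory; in the log-collection case you can check whether Fluentd successfully forwards logs to the target storage.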
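A minimal sketch of the log-collection pattern with an emptyDir follows. The `app` container appends to a log file, and a sidecar streams that file to its own stdout. The sidecar is a plain busybox `tail -F` standing in for Fluentd, which is a substitution for illustration. All names and paths are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: log-sidecar-pod           # hypothetical name
spec:
  containers:
  - name: app                     # stands in for a business container that writes logs
    image: busybox
    command: ["sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
    volumeMounts:
    - name: app-logs
      mountPath: /var/log/app
  - name: log-shipper             # stands in for Fluentd: streams the file to stdout
    image: busybox
    # -F keeps retrying until app.log exists, then follows it
    command: ["sh", "-c", "tail -F /var/log/app/app.log"]
    volumeMounts:
    - name: app-logs
      mountPath: /var/log/app
      readOnly: true              # the shipper only needs to read
  volumes:
  - name: app-logs
    emptyDir:
      sizeLimit: 100Mi            # cap disk usage of the shared log directory
```

`kubectl logs log-sidecar-pod -c log-shipper` should then show the timestamps written by the `app` container.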

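Case 2: memory medium (tmpfs) + read-only access

Key point: an emptyDir can be backed by tmpfs (memory) by setting medium: Memory, and setting readOnly: true on specific containers gives them read-only access. This suits the "one container writes, several containers read" pattern (for example, distributing configuration or giving read-only access to logs).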

I. Complete configuration example (memory medium + read-only access)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-readonly-pod # Pod names must be all lowercase
spec:
  containers:
  - name: data-writer
    image: busybox
    command: ["sh", "-c", "echo 'read-only shared data in memory' > /data/shared.txt; sleep 3600"]
    volumeMounts:
    - name: memory-volume
      mountPath: /data
  - name: data-reader
    image: busybox
    command: ["sh", "-c", "while true; do cat /data/shared.txt; sleep 5; done"]
    volumeMounts:
    - name: memory-volume
      mountPath: /data
      readOnly: true
  volumes:
  - name: memory-volume
    emptyDir:
      medium: Memory
      sizeLimit: 64Mi
```

II. Key configuration notes

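1. Memory medium (tmpfs) configuration

- medium: Memory: stores the emptyDir in a tmpfs (RAM-backed) filesystem on the node, which is much faster than disk.
- sizeLimit: 64Mi: caps how much memory the volume may use; exceeding it causes the Pod to be evicted (optional, but recommended so the volume cannot exhaust node memory).
- Characteristic: once the Pod is deleted or evicted, the data is lost for good (it lives only in memory), so use it only for temporary data.

2. Read-only configuration

- Add readOnly: true to the volumeMounts entry of each container that should only read the volume.
- Effect: that container can read the volume's data, but write operations such as echo, rm, and mkdir fail with a permission error.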

III. Verification steps

1. Deploy the Pod and check its status

kubectl apply -f emptyDir-memory-readonly.yaml

kubectl get pods -w # wait until the status becomes 2/2 Running

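2. Verify read-only access (key step)

# Exec into the read-only container and try to modify the file (this should fail)

kubectl exec -it emptydir-memory-readonly-pod -c data-reader -- sh

echo "trying to modify" > /data/shared.txt # error: read-only file system

rm /data/shared.txt # error: read-only file system

exit

# Exec into the writable container and modify the file (this succeeds)

kubectl exec -it emptydir-memory-readonly-pod -c data-writer -- sh

echo "appended content" >> /data/shared.txt

cat /data/shared.txt # shows the updated content

exit

# The read-only container sees the updated data (sharing works)

kubectl logs emptydir-memory-readonly-pod -c data-reader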

3. Verify the in-memory storage

# Find the node the Pod runs on

kubectl describe pod emptydir-memory-readonly-pod | grep Node:

# On that node, look for the tmpfs mount (confirms memory-backed storage)

ssh <node-IP>

df -h | grep tmpfs | grep kubelet

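IV. Use cases

- Temporary cache: temporary data that needs high-speed reads and writes (such as intermediate results of computing tasks).

- Configuration distribution: one container generates a configuration file and several containers read it (preventing accidental changes).

- Read-only logs: the business container writes logs and a log-collection container reads and forwards them (preventing tampering).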
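For the configuration-distribution case, here is a sketch that swaps the long-running writer container for an init container. The init container runs to completion before the app containers start, so the readers never see a missing file. All names and the `mode=fast` content are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: config-distribution-pod   # hypothetical name
spec:
  initContainers:
  - name: config-generator        # finishes before the containers below start
    image: busybox
    command: ["sh", "-c", "echo 'mode=fast' > /config/app.conf"]
    volumeMounts:
    - name: shared-config
      mountPath: /config
  containers:
  - name: reader-a
    image: busybox
    command: ["sh", "-c", "cat /config/app.conf; sleep 3600"]
    volumeMounts:
    - name: shared-config
      mountPath: /config
      readOnly: true              # cannot modify the generated file
  - name: reader-b
    image: busybox
    command: ["sh", "-c", "cat /config/app.conf; sleep 3600"]
    volumeMounts:
    - name: shared-config
      mountPath: /config
      readOnly: true
  volumes:
  - name: shared-config
    emptyDir:
      medium: Memory              # tmpfs, as in Case 2
      sizeLimit: 1Mi              # a config file needs very little space
```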

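V. Notes

- Memory-backed data is volatile: if the Pod is deleted or evicted, or the node reboots, the data is lost immediately.

- Memory limit: always set sizeLimit; otherwise the volume can keep consuming node memory until the node becomes unhealthy.

- Read-only access is enforced per container: the volume itself has no read/write restriction, so access is controlled through each container's readOnly setting.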

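ConfigMap hands-on examples

Let's walk through some classic ConfigMap examples. A ConfigMap stores non-sensitive configuration, such as application configuration files and environment variables. It separates configuration from application code, which makes configuration easier to manage and update.

In practice, there are two common ways to use it:

- Inject values as environment variables

- Mount configuration files through a Volume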

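1. Scenario: isolating configuration across environments

Suppose an application must use a different database address and log level in the development, test, and production environments.

Step 1: Create the ConfigMap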

```yaml
# configmap-demo.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  # Keys intended for environment variables
  DB_HOST: "mysql.default.svc.cluster.local"
  DB_PORT: "3306"
  LOG_LEVEL: "info"
  # Configuration file content
  app.conf: |
    [database]
    host = mysql.default.svc.cluster.local
    port = 3306
    [logging]
    level = info
    format = json
```

Run:

kubectl apply -f configmap-demo.yaml
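The ConfigMap above holds only one environment's values. One common way to isolate environments is to create a ConfigMap with the same name in each environment's namespace. A Pod can only reference ConfigMaps in its own namespace, so the same Pod spec picks up that environment's values unchanged. The namespaces `dev`/`prod` and the hosts below are hypothetical.

```yaml
# Same ConfigMap name in different namespaces; Pods reference "app-config"
# unchanged and receive the values of the namespace they run in.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: dev                  # hypothetical dev namespace
data:
  DB_HOST: "mysql.dev.svc.cluster.local"     # hypothetical dev database
  DB_PORT: "3306"
  LOG_LEVEL: "debug"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: prod                 # hypothetical prod namespace
data:
  DB_HOST: "mysql.prod.svc.cluster.local"    # hypothetical prod database
  DB_PORT: "3306"
  LOG_LEVEL: "warn"
```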

步骤 2: 在 Pod 中使用 ConfigMap

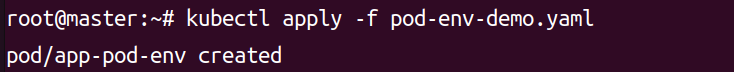

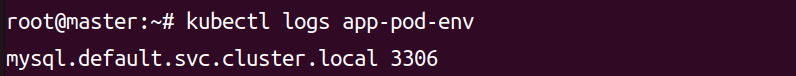

方式 A:通过环境变量注入

```yaml
# pod-env-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-env
spec:
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "echo $DB_HOST $DB_PORT && sleep 3600"]
    env:
    - name: DB_HOST
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: DB_HOST
    - name: DB_PORT
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: DB_PORT
```
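When a Pod needs many keys, listing each one with `configMapKeyRef` gets verbose. `envFrom` imports every key of the ConfigMap at once, and the optional `prefix` field namespaces the resulting variable names. A sketch follows, with the Pod name and the `CFG_` prefix as hypothetical choices. Keep in mind that this also imports keys like `app.conf` that were meant as files, which is one reason to prefer individual keys.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-envfrom           # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    # List only the variables that came from app-config (they all start with CFG_)
    command: ["sh", "-c", "env | grep '^CFG_'; sleep 3600"]
    envFrom:
    - configMapRef:
        name: app-config
      prefix: CFG_                # DB_HOST becomes CFG_DB_HOST, and so on
```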

Option B: mount the configuration file through a Volume

```yaml
# pod-volume-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-volume
spec:
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "cat /etc/config/app.conf && sleep 3600"]
    volumeMounts:
    - name: config-volume
      mountPath: /etc/config
  volumes:
  - name: config-volume
    configMap:
      name: app-config
```
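Mounting the whole ConfigMap turns every key (DB_HOST, DB_PORT, LOG_LEVEL, app.conf) into a file under /etc/config. To project only the configuration file, list it under `items`. Here is a sketch with a hypothetical Pod name.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-items             # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    # ls should list only app.conf
    command: ["sh", "-c", "ls /etc/config && cat /etc/config/app.conf && sleep 3600"]
    volumeMounts:
    - name: config-volume
      mountPath: /etc/config
      readOnly: true
  volumes:
  - name: config-volume
    configMap:
      name: app-config
      # Project only the app.conf key, as the file /etc/config/app.conf
      items:
      - key: app.conf
        path: app.conf
```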

Run:

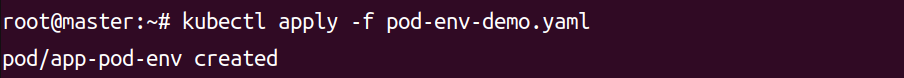

kubectl apply -f pod-env-demo.yaml

kubectl apply -f pod-volume-demo.yaml

Step 3: Verify that the configuration takes effect

# Output of the environment-variable approach

kubectl logs app-pod-env

# Output of the configuration-file approach

kubectl logs app-pod-volume

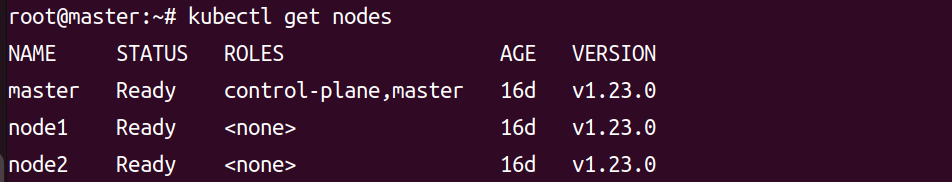

All three nodes must be in the Running state:

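2. Scenario: dynamically updating configuration

ConfigMaps support dynamic updates: files mounted through a volume are refreshed without restarting the Pod, although the application only picks up the change if it supports hot reloading.

Step 1: Update the ConfigMap

kubectl edit configmap app-config

Change LOG_LEVEL from info to debug. The app.conf key is separate, so to see the change inside app.conf as well, also change level = info under [logging] to level = debug.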

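Step 2: Verify the configuration update

- Environment-variable approach: the Pod must be restarted before the change takes effect.

- Volume-mount approach: the mounted files are updated automatically (usually within a minute or so, after the kubelet's periodic sync), but the application must support hot reloading.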
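One exception to automatic volume updates is worth showing. A ConfigMap key mounted as a single file with `subPath` does not receive updates. The sketch below mounts the same volume both ways in one container so you can compare after `kubectl edit`. The Pod name and the `/opt/app` path are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-subpath           # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    # Whole-directory mount: picks up ConfigMap edits after the kubelet sync delay
    - name: config-volume
      mountPath: /etc/config
    # Single-file subPath mount: keeps the content it had when the container started
    - name: config-volume
      mountPath: /opt/app/app.conf
      subPath: app.conf
  volumes:
  - name: config-volume
    configMap:
      name: app-config
```

After editing app.conf in the ConfigMap, `/etc/config/app.conf` should eventually change while `/opt/app/app.conf` does not, until the container is restarted.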

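Example (verifying the update with the Volume approach):

# Every key in app-config, including LOG_LEVEL, is mounted as a file under /etc/config; check them again

kubectl exec app-pod-volume -- cat /etc/config/LOG_LEVEL

kubectl exec app-pod-volume -- cat /etc/config/app.conf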

3. Scenario: mounting multiple configuration files from one ConfigMap

A ConfigMap can hold several key-value pairs, and each key can correspond to one configuration file.

```yaml
# configmap-multi-files.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: multi-file-config
data:
  app.conf: |
    log_level = info
  database.conf: |
    host = mysql
    port = 3306
```

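Mount it in a Pod:

```yaml
spec:
  containers:
  - name: app
    image: busybox
    # busybox exits immediately without a command, so list the files and stay alive
    command: ["sh", "-c", "ls /etc/config && sleep 3600"]
    volumeMounts:
    - name: config-volume
      mountPath: /etc/config
  volumes:
  - name: config-volume
    configMap:
      name: multi-file-config
```

Inside the Pod you will see:

/etc/config/app.conf

/etc/config/database.conf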

4. Scenario: using a ConfigMap with a Deployment

Referencing a ConfigMap from a Deployment lets you roll out configuration changes with a rolling update.

```yaml
# deployment-demo.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: app
        image: busybox
        command: ["sh", "-c", "echo $LOG_LEVEL && sleep 3600"]
        env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: LOG_LEVEL
```

After updating the ConfigMap, trigger a rolling update:

kubectl rollout restart deployment app-deployment
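An alternative to `rollout restart` is a versioned, immutable ConfigMap. Each change gets a new name, and pointing the Deployment at the new name changes its Pod template, so Kubernetes performs an ordinary rolling update that can also be rolled back. `immutable: true` is a real ConfigMap field in recent Kubernetes versions. The name `app-config-v2` is hypothetical.

```yaml
# Each configuration change gets a new name; the old version stays untouched.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config-v2             # hypothetical versioned name
data:
  LOG_LEVEL: "debug"
immutable: true                   # data cannot be edited; create app-config-v3 for the next change
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: app
        image: busybox
        command: ["sh", "-c", "echo $LOG_LEVEL && sleep 3600"]
        env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: app-config-v2   # changing this reference triggers the rollout
              key: LOG_LEVEL
```

Immutable ConfigMaps also cannot be changed by accident.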

5. Scenario: access control for ConfigMaps

You can use RBAC to control who can access a ConfigMap.

```yaml
# configmap-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: configmap-reader
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "list", "watch"]
  resourceNames: ["app-config"]
```
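A Role grants nothing until a RoleBinding attaches it to a subject. Here is a sketch that binds `configmap-reader` to a hypothetical ServiceAccount `app-sa`. It assumes the Role was applied in the `default` namespace, because a RoleBinding must live in the same namespace as the Role it references.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: configmap-reader-binding  # hypothetical name
  namespace: default              # same namespace as the Role
subjects:
- kind: ServiceAccount
  name: app-sa                    # hypothetical ServiceAccount
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: configmap-reader
```

You can check the result with `kubectl auth can-i get configmap/app-config --as=system:serviceaccount:default:app-sa -n default`. Because the Role uses `resourceNames`, `get` on app-config is allowed. A plain list of all ConfigMaps is generally still denied.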

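Summary

The core value of ConfigMap lies in:

- Separating configuration from code

- Isolating configuration across environments

- Updating configuration dynamically

- Managing configuration centrally

In production, store sensitive configuration (such as passwords and tokens) in a Secret and non-sensitive configuration in a ConfigMap.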
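The recommended split can be sketched with a Pod that reads the non-sensitive DB_HOST from the `app-config` ConfigMap and a password from a Secret. The Secret name `app-secret` and its placeholder value are hypothetical. `stringData` accepts plain text, and Kubernetes stores it base64-encoded under `data`. Base64 is an encoding, not encryption, so RBAC on Secrets still matters.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: app-secret                # hypothetical name
type: Opaque
stringData:                       # plain text here; stored base64-encoded under data
  DB_PASSWORD: "change-me"        # placeholder value
---
apiVersion: v1
kind: Pod
metadata:
  name: app-pod-secret            # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    # Avoid printing the secret itself; only confirm that the variable is set
    command: ["sh", "-c", "[ -n \"$DB_PASSWORD\" ] && echo password is set; echo $DB_HOST; sleep 3600"]
    env:
    - name: DB_HOST               # non-sensitive value from the ConfigMap
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: DB_HOST
    - name: DB_PASSWORD           # sensitive value from the Secret
      valueFrom:
        secretKeyRef:
          name: app-secret
          key: DB_PASSWORD
```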