LLMs之HPT:《Towards a Unified View of Large Language Model Post-Training》翻译与解读

LLMs之HPT:《Towards a Unified View of Large Language Model Post-Training》翻译与解读

导读:该论文通过提出统一策略梯度估计器(UPGE),从理论上深刻地揭示了监督微调(SFT)与强化学习(RL)在大型语言模型后训练中的内在联系,证明了它们本质上是优化同一目标的、具有不同bias-variance特性的互补方法。基于这一理论洞见,论文设计了混合后训练(HPT)算法,该算法能根据模型的实时表现动态地在SFT(利用)和RL(探索)之间切换,从而高效地结合了二者的优点。大量的实验证明,HPT不仅在性能上全面超越了现有的主流方法,而且显著增强了模型的探索能力和泛化性,为构建更强大、更高效的后训练流程提供了新的理论视角和实用的解决方案。

>> 背景痛点

● 强化学习(RL)的局限性: 强化学习虽然能通过探索提升模型(LLM)的推理能力,但直接应用于基础能力较弱的模型(即“Zero RL”)时,模型可能因无法探索到有意义的奖励信号而失败。

● 监督微调(SFT)的局限性: SFT能高效地从高质量的人工标注数据中学习,但它限制了模型的探索能力,容易导致在演示数据上过拟合,损害模型在分布外(out-of-distribution)数据上的泛化性能。

● 传统流程的低效性: 当前业界标准的“先SFT后RL”(SFT-then-RL)流程,虽然有效,但这是一个多阶段过程,不仅资源消耗巨大,而且通常需要精细的调优来保证效果。

● 混合方法的理论缺失: 近期一些工作尝试将SFT和RL的目标函数结合起来进行训练,但大多将它们视为两个独立的目标,缺乏一个统一的理论基础来解释为什么这两种看似不同的学习信号可以被有效地结合并共同优化。

>> 具体的解决方案

● 理论框架:统一策略梯度估计器(UPGE): 论文提出了一个名为“统一策略梯度估计器”(Unified Policy Gradient Estimator, UPGE)的理论框架。该框架从数学上证明了,包括SFT和多种RL算法(如PPO, GRPO等)在内的各种后训练(Post-Training)方法的梯度计算,都可以被归纳为一个统一的、通用的表达式。

● 实用算法:混合后训练(HPT): 基于UPGE的理论洞见,论文提出了一种名为“混合后训练”(Hybrid Post-Training, HPT)的算法。HPT算法并非采用固定的比例或顺序混合SFT和RL,而是根据模型在解决问题时的实时表现(rollout accuracy),动态地、自适应地在这两种训练模式之间进行切换。

>> 核心思路步骤

● 核心思路:将不同算法视为同一目标的梯度估计: 论文的核心思路是,SFT和RL并非相互矛盾,而是优化同一个通用目标(最大化期望奖励)的不同梯度估计器。它们之间的差异主要体现在各自的偏差-方差权衡(bias-variance tradeoff)上。

● 步骤1:构建统一梯度表达式(UPGE): 论文证明,所有后训练算法的梯度都可以分解为四个可互换的部分,并统一成以下形式:

grad_Uni = 1_stable * (1 / π_ref) * Â * ∇π_θ

稳定性掩码 (Stabilization Mask, 1_stable): 用于控制训练稳定性,例如PPO中的裁剪(clipping)操作。

参考策略分母 (Reference Policy, π_ref): 一个重加权系数,在不同算法中形式不同(如SFT中使用当前策略π_θ,PPO中使用旧的策略π_θ_old)。

优势估计 (Advantage Estimate, Â): 用于衡量一个生成序列的“好坏程度”,可以是简单的固定值(如SFT中为1),也可以是经过归一化的相对得分(如GRPO)。

似然梯度 (Likelihood Gradient, ∇π_θ): 将目标信号反向传播至模型参数的标准策略梯度项。

● 步骤2:设计HPT算法流程:

性能评估: 对于每个训练问题,首先让模型生成n个候选答案(rollouts)。

动态切换: 使用一个验证器(verifier)评估这n个答案的正确率P。根据P与一个预设阈值γ的比较结果,决定本次更新采用SFT还是RL。

如果 P ≤ γ(模型表现不佳),则切换到SFT模式,利用高质量的专家数据进行学习(利用)。

如果 P > γ(模型表现尚可),则切换到RL模式,通过自我探索来进一步提升(探索)。

损失计算与更新: 根据选择的模式,计算对应的SFT损失或RL损失,并用其梯度来更新模型参数。

>> 优势

● 理论上的统一性: UPGE为SFT和RL的结合提供了坚实的理论基础,解释了为什么混合训练是有效且合理的,填补了该领域的理论空白。

● 卓越的性能表现: 在多个数学推理基准测试中,HPT在不同规模和家族的模型(Qwen, LLaMA)上均显著超越了SFT、GRPO、SFT→GRPO以及LUFFY等强基线方法。

● 增强的探索与泛化能力: 实验表明,HPT不仅提升了模型的单次通过率(Pass@1),还在高采样次数的通过率(Pass@k, k值高达1024)上取得了最佳表现,证明它能有效扩展模型的能力边界和探索能力。

● 自适应与高效率: HPT的动态切换机制能够自动适应不同能力水平的模型和不同难度的数据,避免了“SFT→RL”流程的高昂资源消耗,并在切换到SFT时因无需生成rollouts而节省了计算成本。

● 有效解决难题并缓解遗忘: HPT能够让模型学会解决其他方法难以攻克的难题,同时保留在简单问题上的性能,有效缓解了灾难性遗忘的风险。

>> 论文结论与观点

● 核心观点—SFT与RL是互补而非冲突的: 它们是实现同一个优化目标的不同路径,各自具有不同的偏差-方差特性。将它们视为不同但有效的“噪声测量”,可以结合起来得到更准确的梯度估计。

● 经验建议—动态适应是关键: 一个固定的混合比例或僵化的训练顺序是次优的。一个能够根据模型实时性能动态调整的自适应机制,对于构建高效且强大的后训练算法至关重要。

● HPT的机制:有效平衡探索与利用: HPT成功地利用SFT进行“利用”(当模型能力不足时,从专家数据中学习),并利用RL进行“探索”(当模型有一定能力时,通过自我生成进行改进)。

● 学习模式的保留: 实验发现,模型通过HPT从SFT数据中学到的长链条推理模式,在后续切换到RL阶段后不会被“遗忘”,而是被保留并进一步优化,这表明模型真正内化了知识,而不仅仅是模仿。

● 离线数据的使用策略: 论文通过实验对比发现,在HPT框架下,使用SFT来学习离线数据比使用更复杂的离线强化学习(Off-policy RL)效果更好。这表明,对于混合训练场景,SFT作为一种直接的知识注入方式已经足够有效。

● 切换阈值的选择: 切换阈值γ是一个重要的超参数。实验表明,并非引入越多的SFT就越好。一个更严格的阈值(例如γ=0,即仅在所有尝试都失败时才求助于SFT)可能效果更佳,但最优值需要根据模型和数据特性进行调整。

目录

《Towards a Unified View of Large Language Model Post-Training》翻译与解读

Abstract

Figure 1: Illustration of the Unified Policy Gradient Estimator. The “∇” in the background of the Likelihood Gradient part refers to the calculation of the gradient with respect to the πθ .图 1:统一策略梯度估计器的图示。似然梯度部分背景中的“∇”指的是关于 πθ 的梯度计算

1、Introduction

2 Related Works相关工作

2.1 LLM Post-Training: SFT and RL大语言模型的后期训练:监督微调与强化学习

2.2 A Combination of Online and Offline Data in LLM Post-Training大语言模型后期训练中线上与线下数据的结合

3 A Unified View on Post-Training Algorithms后训练算法的统一视角

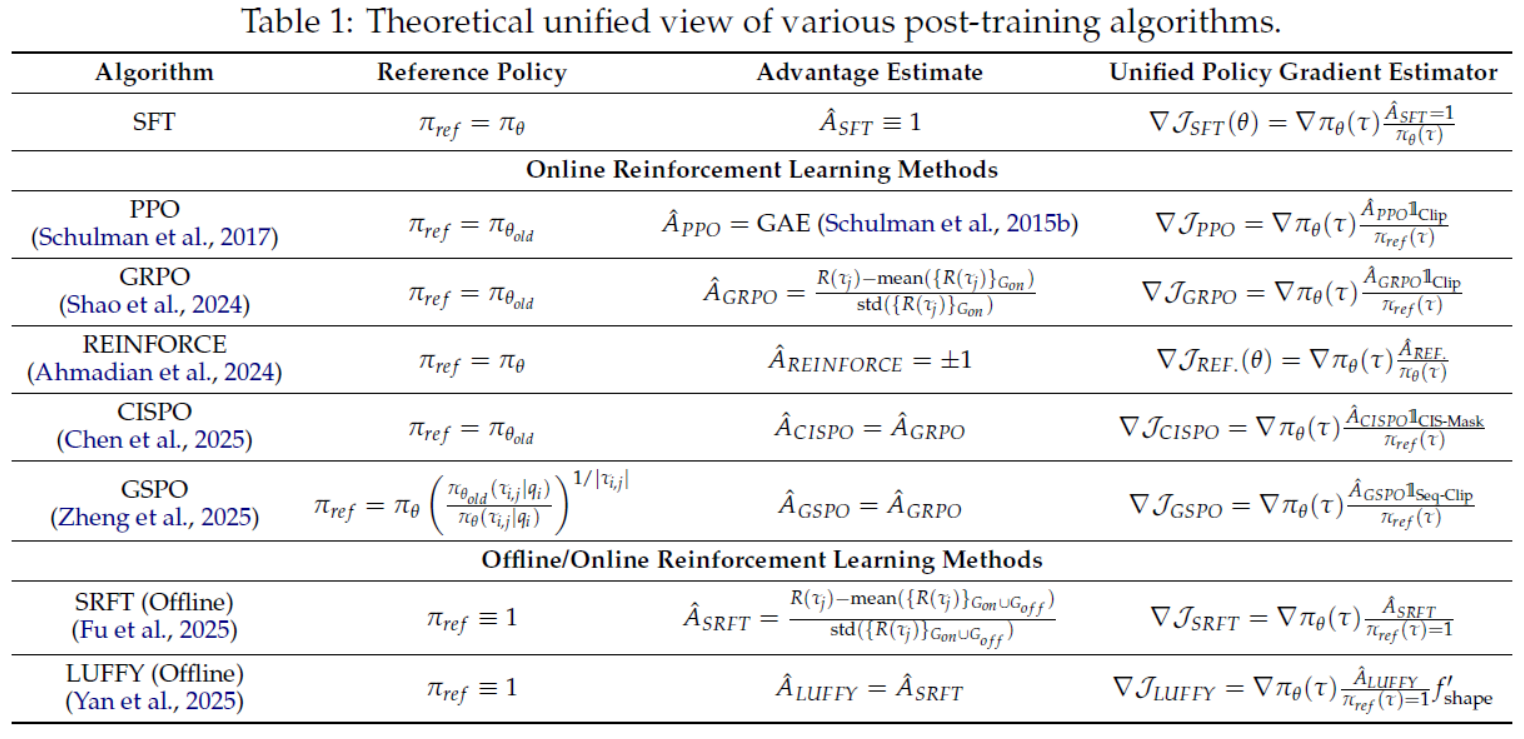

Table 1: Theoretical unified view of various post-training algorithms.表 1:各种后训练算法的理论统一视图。

3.1 Components of the Unified Policy Gradient Estimator统一策略梯度估计器的组成部分

6 Conclusion

《Towards a Unified View of Large Language Model Post-Training》翻译与解读

| 地址 | https://arxiv.org/abs/2509.04419 |

| 时间 | 2025年9月4日 |

| 作者 | 清华大学 上海AI实验室 WeChat AI |

Abstract

| Two major sources of training data exist for post-training modern language models: online (model-generated rollouts) data, and offline (human or other-model demonstrations) data. These two types of data are typically used by approaches like Reinforcement Learning (RL) and Supervised Fine-Tuning (SFT), respectively. In this paper, we show that these approaches are not in contradiction, but are instances of a single optimization process. We derive a Unified Policy Gradient Estimator, and present the calculations of a wide spectrum of post-training approaches as the gradient of a common objective under different data distribution assumptions and various bias-variance tradeoffs. The gradient estimator is constructed with four interchangeable parts: stabilization mask, reference policy denominator, advantage estimate, and likelihood gradient. Motivated by our theoretical findings, we propose Hybrid Post-Training (HPT), an algorithm that dynamically selects different training signals. HPT is designed to yield both effective exploitation of demonstration and stable exploration without sacrificing learned reasoning patterns. We provide extensive experiments and ablation studies to verify the effectiveness of our unified theoretical framework and HPT. Across six mathematical reasoning benchmarks and two out-of-distribution suites, HPT consistently surpasses strong baselines across models of varying scales and families. | 对于现代语言模型的后期训练,存在两种主要的训练数据来源:在线(模型生成的展开)数据和离线(人类或其他模型的演示)数据。这两种类型的数据通常分别被强化学习(RL)和监督微调(SFT)等方法所采用。在本文中,我们表明这些方法并非相互矛盾,而是单一优化过程的不同实例。我们推导出了一个统一的策略梯度估计器,并展示了在不同数据分布假设和各种偏差-方差权衡下,一系列后期训练方法的计算结果,都是一个共同目标的梯度。该梯度估计器由四个可互换的部分构成:稳定掩码、参考策略分母、优势估计和似然梯度。受我们的理论发现启发,我们提出了混合后期训练(HPT)算法,该算法能够动态选择不同的训练信号。HPT旨在既有效利用演示数据,又实现稳定的探索,同时不牺牲已学习到的推理模式。我们进行了大量实验和消融研究,以验证我们统一的理论框架和 HPT 的有效性。在六个数学推理基准和两个分布外测试集上,HPT 在不同规模和类型的模型中始终优于强大的基线。 |

Figure 1: Illustration of the Unified Policy Gradient Estimator. The “∇” in the background of the Likelihood Gradient part refers to the calculation of the gradient with respect to the πθ .图 1:统一策略梯度估计器的图示。似然梯度部分背景中的“∇”指的是关于 πθ 的梯度计算

1、Introduction

| Reinforcement Learning has played an integral role in enhancing the reasoning capabilities of large language models (LLMs) (Jaech et al., 2024; Team et al., 2025; Guo et al., 2025). RL allows the model to freely explore the reasoning space in the post-training process and improve its performance based on the feedback provided in the environment. However, applying Reinforcement Learning directly to a base model (i.e., “Zero RL”) (Zeng et al., 2025a) presupposes a certain level of inherent capability. This method often falters when applied to weaker models or tasks of high complexity, as the exploration process may fail to explore and discover meaningful reward signals. Conversely, the classical Supervised Fine-Tuning (SFT) (Wei et al., 2021) offers a direct and efficient method to distill knowl-edge from high-quality, human-annotated data, enabling models to rapidly and accurately fit the target distribution. Yet this approach often curtails the model’s exploratory capa-bilities, potentially leading to overfitting on the demonstration data and compromising its generalization performance on out-of-distribution inputs. Consequently, a sequential “SFT-then-RL” pipeline (Yoshihara et al., 2025) has emerged as the standard, adopted by numerous state-of-the-art open-source models. While effective, this multi-stage process, which first elevates the model’s capabilities through SFT before refining them with RL, is notoriously resource-intensive and usually requires careful tuning to ensure effectiveness. To circumvent these challenges, recent works have focused on integrating SFT or SFT-style imitation learning losses directly with RL objectives (Yan et al., 2025; Fu et al., 2025; Zhang et al., 2025a). In these approaches, the model is updated using a composite loss function. The balance between the imitation and exploration components is governed by various strategies, including a fixed coefficient, a predefined schedule, a dynamic adjustment based on entropy, or a learnable parameter. These works predominantly treat the SFT and RL losses as two distinct objectives. And a detailed analysis of why these two learning signals can be effectively combined within a unified optimization process remains largely unexplored. Despite their distinct mathematical formulations, we find that the gradient calculations from these approaches can be viewed as a single, unified form. Inspired by Generalized Advantage Estimator (Schulman et al., 2015b), we introduce Unified Policy Gradient Esti-mator (UPGE), a framework that formally subsumes the gradients of various post-training objectives into one generalized expression. We provide analysis to show that the various forms of gradients are, in fact, not conflicting. Instead, they act as complementary learning signals that can jointly guide the optimization process. However, these gradient estimators possess different characteristics, and there exists a bias-variance tradeoff in their respective gradient components. Building upon this unified perspective, we propose Hybrid Post-Training (HPT), a hybrid algorithm to dynamically choose more desirable training signals by adapting a mixing ratio between the SFT and RL losses. This mechanism allows HPT to be intrinsically adaptive to models of varying capabilities and data of differing complexities. We implement a simple instance of HPT, which adaptively switches between SFT and RL based on rollout accuracy, and empirically demonstrate that it achieves strong results. Our empirical evaluations demonstrate that HPT surpasses strong baselines such as SFT→GRPO and LUFFY with Qwen2.5-Math-7B, achieving a 7-point gain over our strongest baseline on AIME 2024. Moreover, HPT also yields substantial improvements even on relatively smaller and weaker models, including Qwen2.5-Math-1.5B and Llama3.1-8B. Through detailed training dynamics and illustrative training visualizations, we clearly reveal the features and underlying mechanisms of HPT. The following are several key takeaways: 1、UPGE provides a theoretical unification of a wide spectrum of post-training algo-rithms, covering both SFT and RL losses within a single formulation (§ 3). 2、HPT is capable of outperforming previous post-training and mixed-policy algo-rithms across a diverse range of models (§ 4). 3、Dynamic integration of SFT and RL in HPT achieves the highest Pass@1024, facili-tating enhanced exploration and generalization of the model (§ 5.1). | 强化学习在提升大型语言模型(LLMs)的推理能力方面发挥了不可或缺的作用(Jaech 等人,2024 年;Team 等人,2025 年;Guo 等人,2025 年)。强化学习使模型能够在训练后的过程中自由探索推理空间,并根据环境中提供的反馈来提升其性能。然而,直接将强化学习应用于基础模型(即“零强化学习”)(Zeng 等人,2025a)需要模型具备一定的内在能力。这种方法在应用于较弱的模型或复杂度较高的任务时往往会失败,因为探索过程可能无法探索并发现有意义的奖励信号。相反,传统的监督微调(SFT)(Wei 等人,2021 年)提供了一种直接且高效的方法,能够从高质量的人工标注数据中提炼知识,使模型能够快速准确地适应目标分布。但这种方法往往限制了模型的探索能力,可能导致模型过度拟合演示数据,从而损害其在分布外输入上的泛化性能。因此,一种顺序的“先 SFT 再 RL”的流水线(Yoshihara 等人,2025 年)已成为标准,被众多最先进的开源模型所采用。尽管有效,但这种多阶段流程,即先通过 SFT 提升模型能力,再用 RL 进行优化,却因资源消耗巨大而声名狼藉,通常还需要精心调参以确保效果。 为克服这些挑战,近期的研究工作集中于将 SFT 或 SFT 风格的模仿学习损失直接与 RL 目标相结合(Yan 等人,2025 年;Fu 等人,2025 年;Zhang 等人,2025a)。在这些方法中,模型通过一个复合损失函数进行更新。模仿和探索成分之间的平衡由多种策略控制,包括固定系数、预定义的时间表、基于熵的动态调整或可学习参数。这些工作主要将 SFT 和 RL 损失视为两个独立的目标。而对于为何这两种学习信号能够在统一的优化过程中有效结合,其详细分析仍鲜有人问津。 尽管这些方法有着不同的数学公式,但我们发现这些方法的梯度计算可以被视为一种统一的形式。受广义优势估计器(Schulman 等人,2015b)的启发,我们引入了统一策略梯度估计器(UPGE),这是一个框架,它将各种后训练目标的梯度正式归结为一个通用表达式。我们进行了分析,表明这些梯度形式实际上并不冲突。相反,它们作为互补的学习信号,可以共同引导优化过程。然而,这些梯度估计器具有不同的特性,并且它们各自的梯度分量之间存在偏差 - 方差权衡。基于这种统一的观点,我们提出了混合后训练(HPT),这是一种混合算法,通过调整 SFT 和 RL 损失之间的混合比例来动态选择更理想的训练信号。这种机制使 HPT 能够内在地适应不同能力的模型和不同复杂度的数据。我们实现了一个简单的 HPT 实例,它根据滚动预测的准确性在 SFT 和 RL 之间自适应切换,并通过实证证明其取得了出色的结果。我们的实证评估表明,HPT 超过了诸如 SFT→GRPO 和 LUFFY 等强大的基线,在 Qwen2.5-Math-7B 上比我们最强的基线在 AIME 2024 上高出 7 分。此外,HPT 即使在相对较小和较弱的模型上也能带来显著的改进,包括 Qwen2.5-Math-1.5B 和 Llama3.1-8B。通过详细的训练动态和说明性的训练可视化,我们清晰地揭示了 HPT 的特征和潜在机制。以下是几个关键要点: 1、UPGE 为广泛的后训练算法提供了理论统一,将 SFT 和 RL 损失纳入单一公式(§ 3)。 2、HPT 能够在各种模型上超越先前的后训练和混合策略算法(§ 4)。 3、HPT 中 SFT 和 RL 的动态整合实现了最高的 Pass@1024,有助于模型的增强探索和泛化(§ 5.1)。 |

2 Related Works相关工作

2.1 LLM Post-Training: SFT and RL大语言模型的后期训练:监督微调与强化学习

| Current post-training methodologies for LLMs are largely centered around two primary paradigms: Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) (Wei et al., 2021; Ouyang et al., 2022). In the SFT paradigm, models are adapted for specific applica-tions through training on curated input-output pairs, a process which has been shown to effectively align their behavior with human demonstrations (Chung et al., 2022; Longpre et al., 2023; Touvron et al., 2023a;b). In parallel, numerous works have highlighted RL as an effective approach for refining LLM behavior in ways that are difficult to capture with SFT’s static datasets (Glaese et al., 2022; Bai et al., 2022; Nakano et al., 2021). Within this domain, a popular framework is Reinforcement Learning from Human Feedback (RLHF), which optimizes the LLM policy against a reward model trained on human preferences (Chris-tiano et al., 2017; Stiennon et al., 2020). Multiple works have established Proximal Policy Optimization (PPO) as a cornerstone algorithm for this phase (Schulman et al., 2017; Ziegler et al., 2019). To further improve reasoning capabilities in reward-driven optimization, recent advancements like Group Relative Policy Optimization (GRPO) have also been developed and widely adopted (Shao et al., 2024; Zheng et al., 2025; Chen et al., 2025). | 当前针对大语言模型的后期训练方法主要集中在两个主要范式上:监督微调(SFT)和强化学习(RL)(Wei 等人,2021;Ouyang 等人,2022)。在监督微调范式中,模型通过在精心挑选的输入输出对上进行训练来适应特定应用,这一过程已被证明能有效地使其行为与人类示范保持一致(Chung 等人,2022;Longpre 等人,2023;Touvron 等人,2023a;b)。与此同时,大量研究工作表明,强化学习是一种有效的方法,能够以监督微调的静态数据集难以捕捉的方式改进大语言模型的行为(Glaese 等人,2022;Bai 等人,2022;Nakano 等人,2021)。在这一领域,一种流行的框架是基于人类反馈的强化学习(RLHF),它通过与基于人类偏好的奖励模型进行对抗来优化大语言模型的策略(Cristiano 等人,2017;Stiennon 等人,2020)。多项研究已将近端策略优化(PPO)确立为这一阶段的核心算法(Schulman 等人,2017;Ziegler 等人,2019)。为了进一步提升奖励驱动优化中的推理能力,诸如组相对策略优化(GRPO)之类的最新进展也已得到开发并被广泛采用(Shao 等人,2024 年;Zheng 等人,2025 年;Chen 等人,2025 年)。 |

2.2 A Combination of Online and Offline Data in LLM Post-Training大语言模型后期训练中线上与线下数据的结合

| Beyond applying SFT or RL in isolation, further explorations have sought to synergize their respective strengths by combining signals from pre-existing offline data and dynamically generated online data (Fu et al., 2025; Yan et al., 2025). This motivation stems from the distinct characteristics of each approach: SFT is noted for its efficiency in distilling knowledge from offline sources, whereas RL is valued for fostering exploration through online rollouts, a process frequently linked to improved generalization (Rajani et al., 2025; Chu et al., 2025). The strategies for this integration are diverse; some techniques use offline data as a prefix to guide online generation (Zhou et al., 2023; Touvron et al., 2024; Li et al., 2025; Wang et al., 2025), while others enhance offline data by incorporating reward signals in a process known as reward-augmented fine-tuning (Liu et al., 2024; Zhao et al., 2023; Park et al., 2025; Sun et al., 2025). The broader landscape also includes various purely offline preference optimization methods, though they follow a different paradigm (Rafailov et al., 2023; Mitchell et al., 2024; Liu et al., 2025c; Ethayarajh et al., 2024; Ahmadian et al., 2024). However, the most direct approach to synergy involves the concurrent use of both data types for training updates. | 除了单独应用有监督微调(SFT)或强化学习(RL)之外,进一步的研究还试图通过结合预先存在的线下数据和动态生成的线上数据来协同发挥各自的优势(Fu 等人,2025 年;Yan 等人,2025 年)。这种动机源于这两种方法各自的特点:SFT 因能高效地从线下来源提炼知识而著称,而 RL 则因通过线上回放促进探索而受到重视,这一过程通常与更好的泛化能力相关联(Rajani 等人,2025 年;Chu 等人,2025 年)。这种整合的策略多种多样;一些技术将线下数据作为前缀来引导线上生成(Zhou 等人,2023 年;Touvron 等人,2024 年;Li 等人,2025 年;Wang 等人,2025 年),而另一些则通过在奖励增强微调过程中加入奖励信号来增强线下数据(Liu 等人,2024 年;Zhao 等人,2023 年;Park 等人,2025 年;Sun 等人,2025 年)。更广泛的领域还包括各种纯线下偏好优化方法,尽管它们遵循不同的范式(Rafailov 等人,2023 年;Mitchell 等人,2024 年;Liu 等人,2025 年 c;Ethayarajh 等人,2024 年;Ahmadian 等人,2024 年)。然而,实现协同作用最直接的方法是同时使用这两种数据类型进行训练更新。 |

| This direct approach, often termed mix-policy learning, is particularly relevant to our work and typically involves updating the model with a composite objective that combines an SFT loss from offline data and an RL loss from online data (Dong et al., 2023; Gulcehre et al., 2023; Singh et al., 2023; Liu et al., 2023). For instance, LUFFY (Yan et al., 2025) explores this paradigm by combining a fixed ratio of offline demonstration data with online rollouts in each training batch. Subsequently, SRFT (Fu et al., 2025) proposed a monolithic training phase that dynamically adjusts the weights of SFT and RL losses based on the model’s policy entropy, further demonstrating the viability of unifying these signals over a sequential pipeline. The principle of creating such a composite loss is shared by a variety of other recent frameworks (Wu et al., 2024; Zhang et al., 2025a; Kim et al., 2025; Yu et al., 2025; Liu et al., 2025a), and AMFT (He et al., 2025) begins to explore meta-gradient-based controllers. While these methods highlight a clear trend towards unifying training signals, a foundational theoretical analysis explaining why these different learning signals can be effectively combined is still lacking. This motivates our work to establish a unified theoretical framework that in turn inspires a more principled algorithm design. | 这种直接的方法,通常被称为混合策略学习,与我们的工作特别相关,通常涉及使用结合了来自离线数据的监督微调(SFT)损失和来自在线数据的强化学习(RL)损失的复合目标来更新模型(Dong 等人,2023 年;Gulcehre 等人,2023 年;Singh 等人,2023 年;Liu 等人,2023 年)。例如,LUFFY(Yan 等人,2025 年)通过在每个训练批次中将固定比例的离线演示数据与在线回放相结合来探索这一范式。随后,SRFT(Fu 等人,2025 年)提出了一种单一的训练阶段,该阶段根据模型的策略熵动态调整 SFT 和 RL 损失的权重,进一步证明了在连续流程中统一这些信号的可行性。创建这种复合损失的原则被许多其他近期框架所采用(Wu 等人,2024 年;Zhang 等人,2025a;Kim 等人,2025 年;Yu 等人,2025 年;Liu 等人,2025a),而 AMFT(He 等人,2025 年)开始探索基于元梯度的控制器。尽管这些方法突显了将训练信号统一的明显趋势,但对为何这些不同的学习信号能够有效结合的基础理论分析仍显不足。这促使我们开展工作,建立一个统一的理论框架,进而启发更具原则性的算法设计。 |

3 A Unified View on Post-Training Algorithms后训练算法的统一视角

| In this section, we adopt a unified perspective to understand both Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) as post-training objectives. We present the gradient calculations of various post-training approaches in Table 1, with exact derivations of classical approaches presented in the Appendix A. From the table, it can be seen that policy gradient calculations for LLM post-training can be written in a unified policy gradient form. In the following sections, we further show that the differences between different gradient calculations can be broken down into four distinct components. We theoretically derive the Unified Policy Gradient Estimator from a common objective and provide a detailed analysis of its gradient components. Based on this unified perspective, we then propose the Hybrid Post-Training (HPT) algorithm. | 在本节中,我们将监督微调(SFT)和强化学习(RL)作为后训练目标采用统一视角进行理解。我们在表 1 中展示了各种后训练方法的梯度计算,经典方法的精确推导见附录 A。从表中可以看出,LLM 后训练的策略梯度计算可以写成统一的策略梯度形式。 在接下来的部分中,我们将进一步表明不同梯度计算之间的差异可以分解为四个不同的组成部分。我们从一个共同的目标出发,理论推导出统一策略梯度估计器,并对其梯度成分进行详细分析。基于这种统一视角,我们随后提出了混合后训练(HPT)算法。 |

| We propose a unified framework for the gradient calculation of LLM post-training, named the Unified Policy Gradient Estimator: All gradient calculations can be written in the unified form. | 我们提出了一个用于 LLM 后训练梯度计算的统一框架,称为统一策略梯度估计器: 所有梯度计算都可以写成统一的形式。 |

Table 1: Theoretical unified view of various post-training algorithms.表 1:各种后训练算法的理论统一视图。

3.1 Components of the Unified Policy Gradient Estimator统一策略梯度估计器的组成部分

| We present the Unified Policy Gradient Estimator, our unified framework for gradient calculations. In Table 1, we list a series of fundamental and well-studied post-training methods, divided into SFT and two types of RL processes. Apart from providing the closed-form policy gradients of these methods, we also present the decomposition of these methods with detailed components. It can be seen that these seemingly different methods in fact share common components and that all gradients follow our proposed unified framework. In this paper, we divide the unified gradient into four terms: stabilization mask, reference policy, advantage estimate, and likelihood gradient. We address each of the terms below. | 我们提出了统一策略梯度估计器,这是我们的统一梯度计算框架。在表 1 中,我们列出了系列基础且研究充分的后训练方法,分为策略迁移(SFT)和两种类型的强化学习过程。除了提供这些方法的闭式策略梯度外,我们还展示了这些方法的分解及其详细组成部分。可以看出,这些看似不同的方法实际上具有共同的组成部分,并且所有梯度都遵循我们提出的统一框架。 在本文中,我们将统一梯度分为四个项:稳定掩码、参考策略、优势估计和似然梯度。下面我们将逐一介绍这些项。 |

| Stabilization Mask 1stable Starting from PPO (Schulman et al., 2017), the stabilization mask was first derived as an approximation of the TRPO Algorithm (Schulman et al., 2015a). In practice, the PPO clipping addresses the instability issue during RL training by turning off the current gradient when the current iterate is considered unsafe. In consequent works in Table 1, many have provided their modifications on the stability mask, usually motivated by empirical evaluations. Reference Policy Denominator πre f The second term in our unified estimator is the reference policy on the denominator. We note that our notion of reference policy differs from the commonly used rollout policy πθold , for which we provide a discussion in Section 3.3. This denominator denotes a token-level reweight coefficient, usually in the form of an inverse probability. There are multiple choices for this coefficient. For the case of SFT, the policy denominator uses the current policy πθ(τ). This is a result of L = −log(πθ(τ)) as the objective function. For the case of PPO-style online RL algorithms, generally, the policy denominator uses the rollout policy πθold (τ). Due to the unavailability of πre f (τ) in the offline demonstration dataset, most offline RL algorithms simply assume πre f (τ) = 1 for the denominator. | 稳定掩码 1stable 从 PPO(Schulman 等人,2017)开始,稳定掩码最初是作为 TRPO 算法(Schulman 等人,2015a)的一种近似而推导出来的。在实践中,PPO 剪裁通过在当前迭代被认为不安全时关闭当前梯度来解决强化学习训练期间的不稳定性问题。在表 1 中的后续工作中,许多研究者对稳定性掩码进行了修改,通常这是基于经验评估的动机。 参考策略分母 πref 我们统一估计器中的第二项是分母上的参考策略。我们注意到,我们的参考策略概念不同于常用的滚动策略 πθold ,对此我们在第 3.3 节进行了讨论。这个分母表示一个标记级别的重新加权系数,通常以逆概率的形式出现。对于这个系数有多种选择。对于 SFT 情况,策略分母使用当前策略 πθ(τ)。这是由于 L = -log(πθ(τ)) 作为目标函数的结果。对于 PPO 风格的在线强化学习算法,通常策略分母使用滚动策略 πθold (τ)。由于离线演示数据集中无法获取 πref (τ),大多数离线强化学习算法简单地假设分母 πref (τ) = 1。 |

| Advantage Estimate Aˆ In traditional RL, the advantage evaluates the additional benefit of taking the current action given the current state. For the context of LLMs, most of the advantage estimation is sequence-level rather than token-level, and measures the quality of the current response sequence. Similar to traditional RL literature, the post-training process seeks to maximize the likelihood of generating positive sequences with high advantage and minimize negative sequences. Likelihood Gradient ∇πθ (τ) The policy gradient term is a general term which maps gradient information from the actions to the model parameters θ. It is crucial for back-propagating the objective signals to the network weights, and is kept the same across all gradient calculations. | 优势估计 Aˆ 在传统的强化学习中,优势评估的是在当前状态下采取当前行动所带来的额外收益。对于大型语言模型而言,大多数优势估计是在序列层面而非标记层面进行的,衡量的是当前响应序列的质量。与传统强化学习文献类似,训练后的过程旨在最大化生成具有高优势的积极序列的可能性,并最小化消极序列的可能性。 似然梯度 ∇πθ (τ) 策略梯度项是一个通用术语,它将来自行动的梯度信息映射到模型参数 θ 上。这对于将目标信号反向传播到网络权重至关重要,并且在所有梯度计算中保持不变。 |

6 Conclusion

| In this paper, we introduce the Unified Policy Gradient Estimator to provide a theoretical framework for LLM post-training. We demonstrate that SFT and RL optimize a common objective, with their respective gradients representing different bias-variance tradeoffs. Mo-tivated by this unified perspective, we propose Hybrid Post-Training (HPT), an algorithm that dynamically adapts between SFT for exploitation and RL for exploration based on real-time performance feedback. Extensive empirical validation shows that HPT consis-tently outperforms strong baselines, including sequential and static mixed-policy methods, across various models and benchmarks. Our work contributes both a unifying theoretical perspective on post-training and a practical algorithm that effectively balances exploitation and exploration to enhance model capabilities. | 在本文中,我们引入了统一策略梯度估计器,为大语言模型的后期训练提供了一个理论框架。我们证明了有监督微调(SFT)和强化学习(RL)优化的是一个共同的目标,它们各自的梯度代表了不同的偏差-方差权衡。受这种统一视角的启发,我们提出了混合后期训练(HPT)算法,该算法能够根据实时性能反馈在 SFT 的利用和 RL 的探索之间动态切换。大量的实证验证表明,HPT 在各种模型和基准测试中始终优于强大的基线方法,包括顺序和静态混合策略方法。我们的工作既贡献了一个关于后期训练的统一理论视角,又提供了一种实用的算法,能够有效地平衡利用和探索,从而提升模型的能力。 |